Architectural Constructs for Time-Critical Networking in the Smart City

by

Manishika Agaskar

S.B., Massachusetts Institute of Technology (2012)

M.Eng., Massachusetts Institute of Technology (2013)

Submitted to the Department of Electrical Engineering and Computer Science

in partial fulfillment of the requirements for the degree of

Doctor of Philosophy

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

September 2018

© Massachusetts Institute of Technology 2018. All rights reserved.

Author . . . .

Department of Electrical Engineering and Computer Science

August 8, 2018

Certified by . . . .

Vincent W.S. Chan

Joan and Irwin Jacobs Professor of Electrical Engineering

Thesis Supervisor

Accepted by . . . .

Leslie A. Kolodziejski

Professor of Electrical Engineering and Computer Science

Chair, Department Committee on Graduate Students

Architectural Constructs for Time-Critical Networking in the Smart City

by

Manishika Agaskar

Submitted to the Department of Electrical Engineering and Computer Science on August 8, 2018, in partial fulfillment of the requirements for the degree of Doctor of Philosophy

Abstract

The rapid maturation of the Internet of Things and the advent of the Smart City present an opportunity to revolutionize emergency services as both reactive and preventative. A well-designed Smart City will synthesize data from multiple heterogeneous sensors, relay information to and between emergency responders, and potentially predict and even preempt crises autonomously. In this thesis, we identify distinctive characteristics and networking requirements of the Smart City and then identify and evaluate the architectural constructs necessary to enable time-critical Smart City operations.

Two major goals inform our architectural analysis throughout this work. First, critical traffic must be served within a specified delay without excessive throttling of non-critical traffic. Second, surges in critical traffic from a geographically-concentrated region must be handled gracefully. To achieve these goals, we motivate, evaluate, and finally recommend:

1.) connecting local area Smart City networks to the existing metropolitan area networking infrastructure,

2.) standing up a dedicated municipal data center inside the metropolitan area served by the Smart City,

3.) deploying a contention-based priority reservation MAC protocol that guarantees latency and throughput for some specified maximum number of critical users per wireless access point,

4.) configuring MAN routers to provide non-preemptive priority service at output queues to critical Smart City traffic,

5.) offering dedicated optical paths from edge routers to the municipal hub in order to effectively form a virtual star topology atop a MAN mesh and significantly reduce switching delays in the network,

6.) processing select applications at dedicated fog servers adjacent to edge routers in order to reduce upstream congestion in the MAN, and

7.) setting up a network orchestration engine that monitors traffic into and out of the Smart City data center in order to preemptively detect critical traffic surges and to direct network reconfiguration (access point reassignment, load-balancing, and/or temporary resource augmentation) in anticipation.

Thesis Supervisor: Vincent W.S. Chan

Acknowledgments

I must first and foremost thank my research supervisor, Professor Vincent Chan, for his dedication to my academic and professional development from my undergraduate days through the present. His advice and guidance have been invaluable in shaping my research and helping me (hopefully!) achieve the distinction of “thoughtful engineer.” I would also like to thank Professor Anantha Chandrakasan and Professor Ed Crawley for carving out time to sit on my thesis committee and for their assistance in polishing this work.

To my fellow groupmates in the Chan Clan – Matt, Henna, Esther, John, Shane, Anny, Arman, and Junior – thank you for making the office environment so enjoyable, and only mildly distracting.

I am so grateful for the friendship and support of friends both near and far. I especially want to thank my roommates, Paula and Harriet; my ice hockey teammates, in particular my “accountabilibuddy” Jane, “personal cheerleader” Sherry, and “token teenage friend” Gabby; and of course my fellow Loopians who cheerfully followed my daily progress updates at the end of this journey.

Finally, to my family, I must express my deepest appreciation for their steadfast love and encouragement. To Ameya and Cheri: thank you for the good food and better company, and for my amazing niece and nephews. And to my mother, Rohini: what can I say? This is as much your accomplishment as mine.

Contents

1 Introduction: The Internet of Things 17

1.1 IoT Applications . . . 17

1.1.1 Vehicular Telematics and Autonomous Vehicles . . . 19

1.1.2 Urban IoT and the Smart City . . . 21

1.1.3 Disaster Response and Emergency Networks . . . 22

1.2 IoT Architecture . . . 25

1.2.1 Intersection with 5G . . . 27

1.2.2 Cognitive Networking . . . 33

1.2.3 Security of the IoT . . . 40

1.3 Time-Critical IoT . . . 42

1.4 Thesis Outline . . . 45

1.5 Major Architectural Recommendations . . . 47

2 Smart City Network Model 49

2.1 Example Application . . . 49

2.2 Markov Model of Devices . . . 50

2.3 Network Traffic Model . . . 54

2.3.1 Small Surges . . . 55

2.3.2 Medium Surges . . . 57

2.3.3 Large Surges . . . 57

2.4 Potential Data and Latency Requirements . . . 58

2.4.1 Vehicle Tracking . . . 59

2.4.3 Video Monitoring . . . 61

2.5 Distinctive Network Characteristics . . . 61

2.6 Network Infrastructure . . . 62

3 Priority Access at the Network Edge 65

3.1 Related Work . . . 66

3.1.1 Packet Reservation Multiple Access . . . 66

3.1.2 Industrial Wireless Sensor Networks . . . 67

3.2 Determining User Criticality . . . 69

3.3 TDMA Critical Reservation Scheme . . . 71

3.3.1 Contention Period . . . 73

3.4 Cognition and Protocol Gain . . . 86

3.4.1 No Cognition: Stabilized Slotted ALOHA . . . 86

3.4.2 No Cognition: Class-Agnostic Reservation Scheme . . . 89

3.4.3 Cognition: Priority Reservation Scheme . . . 89

3.5 Why not FDMA? . . . 97

3.6 Servicing Regular Data . . . 98

3.6.1 Omniscient Access Point . . . 99

3.6.2 Limited Access Point Cognition . . . 101

4 Priority Service in the Metropolitan Area Network 105

4.1 Network Topology . . . 105

4.2 MAN Router Model . . . 107

4.2.1 Packet Service Time . . . 109

4.2.2 Packet Interarrival Time . . . 109

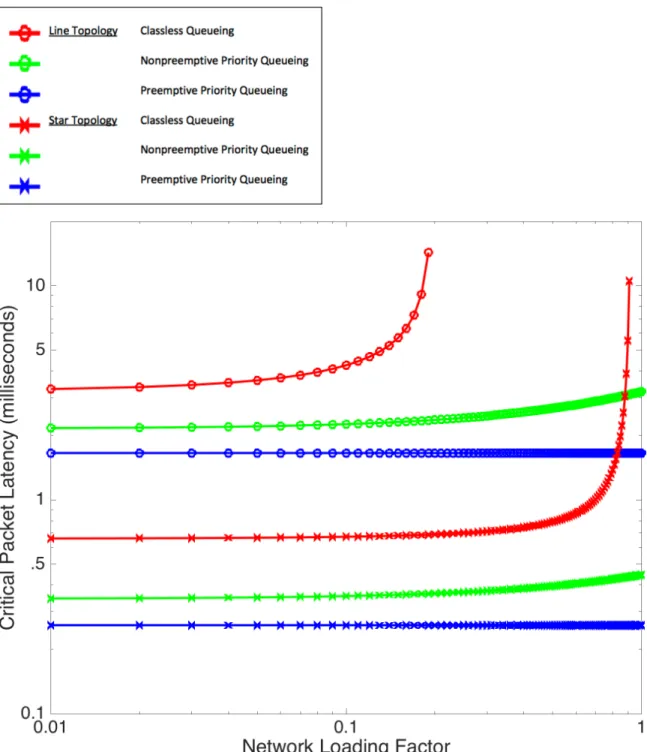

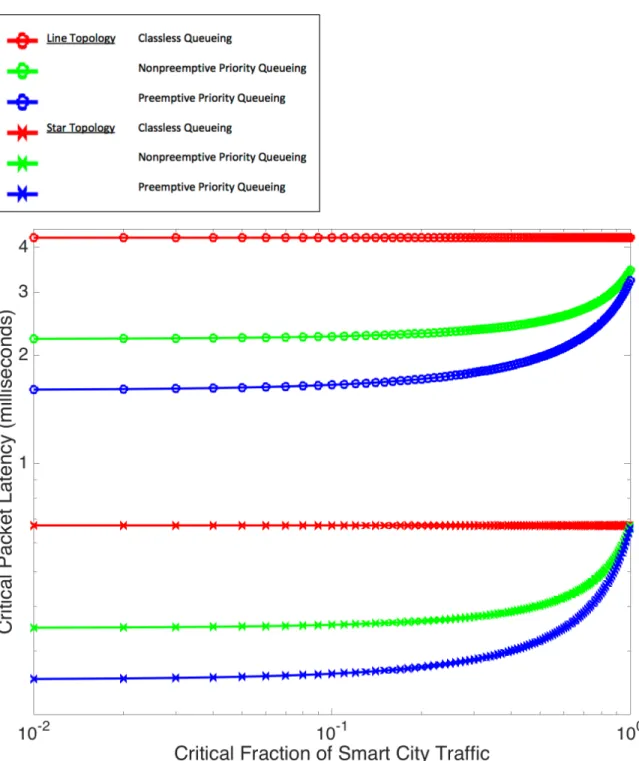

4.3 Queueing Disciplines . . . 110

4.4 Expected Delay . . . 112

4.4.1 Line Topology . . . 113

4.4.2 Star Topology . . . 115

4.4.3 Example System . . . 117

4.5 Discussion . . . 127

5 Smart City Network Orchestration 131

5.1 Related Work . . . 133

5.2 Smart City Application Workflow . . . 140

5.3 Networking Delay with Fog . . . 143

5.3.1 Star Topology . . . 145

5.3.2 Ring Topology . . . 148

5.3.3 Analysis of Example System . . . 152

5.4 Optical Bypass versus Fog Processing . . . 156

5.4.1 Optimal Fog Traffic Volume . . . 160

5.4.2 Size of Network . . . 161

5.4.3 Network Loading . . . 163

5.4.4 Fog Processing Speed . . . 165

6 Dynamic Network Reconfiguration 167

6.1 Access Requirements Unique to the Smart City . . . 167

6.2 Reallocation of Resources . . . 169

6.2.1 Access Point Reassignment in the LAN . . . 169

6.2.2 Load-Balancing in the MAN . . . 173

6.3 Surge Detection and Response . . . 178

6.4 Orchestrating Reconfiguration . . . 191

7 Conclusion 193

7.1 Major Architectural Recommendations (Reprise) . . . 193

7.2 Future Work . . . 198

7.2.1 Mechanics of Device Handoff . . . 198

7.2.2 Temporary Resource Augmentation . . . 199

List of Figures

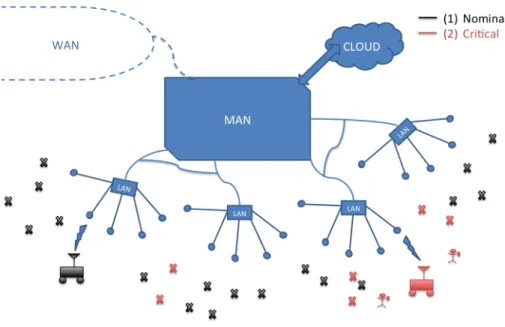

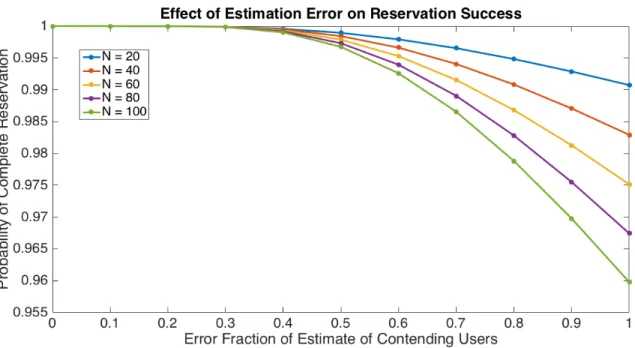

1-1 An example IoT network. “Critical” users are shown in red. . . 43

2-1 A two-state Markov model represents the criticality of a node. . . 50

2-2 Example of a critical traffic surge. . . 56

3-1 Diagram of the reservation protocol. A priority contention period is followed by a scheduled priority transmission period. The best effort period is unspecified. . . 72

3-2 A fixed-length contention period with $N$ slots, one for each user. Note that this requires the access point to know the total number and identities of users in the cell. If too many regular users have data to transmit, the access point must somehow select which users may transmit. . . 74

3-3 Markov absorption chain where each state is the number of as-yet unscheduled contending users. Each contention slot corresponds to a state transition. . . 75

3-4 The number of time slots to complete reservation is roughly linear with the number of contending users. . . 77

3-5 The probability of complete reservation increases with the number of time slots. . . 78

3-6 The probability of complete reservation $P_{n_c \to 0}(T)$ in $5\hat{n}_c$ time slots versus the estimation error $(\hat{n}_c - n_c)/n_c$. . . 81

3-7 The expected number of unscheduled users $\mathrm{E}[n_c(T+1)]$ after $5\hat{n}_c$ time slots versus the estimation error $(\hat{n}_c - n_c)/n_c$.

3-8 Effect of the initial error on the number of slots required for complete reservation, across 10000 simulations. . . 84

3-9 A linear relationship exists between the number of contending users and the average number of contention time slots required for complete reservation. . . 84

3-10 $\tilde{C}_{aloha}$ (blue) is close to $C_{aloha}$ (red) especially for $D \geq 0.05$ s. . . 90

3-11 The critical fraction of traffic is plotted against $G_1$, the gain of the priority reservation scheme over stabilized slotted ALOHA for various delay bounds $D_{max}$. . . 93

3-12 The critical fraction of traffic is plotted against $G_1$, the gain of the priority reservation scheme over stabilized slotted ALOHA for various data packet lengths $L$. . . 94

3-13 The critical fraction of traffic is plotted against $G_1$, the gain of the priority reservation scheme over stabilized slotted ALOHA for various packet arrival rates $q$. . . 95

3-14 The ratio of the data packet size $L$ to the reservation slot size $l$ is plotted against $G_2$, the gain of the priority reservation scheme over a regular reservation scheme. . . 96

3-15 Diagram of an FDMA reservation protocol. Priority traffic is red; regular traffic is gray. The channel is split such that one packet is transmitted through one frequency band in time $D_{max}$. Best effort users are neither guaranteed a transmission slot, nor to finish their transmission once it starts. . . 98

3-16 $C_\gamma$ is plotted against $\gamma$ for various values of $D_{reg} = mD$. . . 104

4-1 Example MAN topologies. . . 106

4-2 Access points are connected to Smart City aggregation gateways, which connect the Smart City to the MAN. The dashed lines indicate redundant connections to a second aggregation gateway, for extra system resilience. . . 107

4-3 Input and output ports of an example MAN router. . . 107

4-4 MAN router with shared memory switch architecture. $k$ equally-loaded parallel output-queued channels comprise the link to an adjacent router. . . 109

4-5 On the left, an example MAN topology (a Petersen graph) with the minimum spanning tree highlighted in blue. On the right, dedicated lightpaths shown in red form a virtual star topology. . . 116

4-6 Expected delay of critical packets versus normal link congestion. . . 119

4-7 Reduction in delay via priority queueing versus normal link congestion. . . 120

4-8 Expected delay of critical packets versus Smart City traffic load. . . 121

4-9 Reduction in delay via priority queueing versus Smart City traffic load. . . 122

4-10 Expected delay of critical packets versus critical fraction of Smart City traffic. . . 123

4-11 Reduction in delay via priority queueing versus critical fraction of Smart City traffic. . . 124

4-12 Expected delay of critical packets versus size of the network. . . 125

4-13 Reduction in delay via priority queueing versus size of the network. . . 126

5-1 One MAN router is connected to the municipal hub. The others are connected to small fog servers (in addition to Smart City gateways and other LANs, not shown). . . 132

5-2 Internal resource allocation of municipal hub and fog nodes. . . 141

5-3 Best and worst case topologies for MAN routers with fog servers. . . 144

5-4 Fog networking decreases the switching delay of the longest Smart City path in the network. . . 154

5-5 Fog networking decreases the average switching delay of Smart City packets in the network. . . 154

5-6 The latency of critical packets in the star topology is lower than that in the ring topology – even with fog networking. . . 155

5-7 Fog networking accommodates surges in critical traffic beyond the

5-8 Two configurations for a ring network. . . 157

5-9 Gain (in terms of networking latency) of using fog servers in a star topology versus the fraction of fog-diverted traffic. . . 158

5-10 The dominant delay contributions in $\mathrm{E}[D_{m \to \mathrm{fog}}]$ and $\mathrm{E}[D_{m \to 0}]$. . . 160

5-11 Effect of network size on the best network configuration. . . 162

5-12 Effect of network loading on the best network configuration. . . 164

5-13 The traffic threshold where fog networking reduces latency more than optical bypass is plotted against fog processing speed. . . 165

5-14 Effect of fog processing speed on the best network configuration. . . . 166

6-1 The maximum fraction of critical devices that can be accommodated depending on the number of access points in a given region, when the surge area is 10% of the total region. Solid line indicates devices have directionally tunable antennas. . . 172

6-2 Gain in terms of surge capacity achievable by increasing the number of access points in given region, when the surge area is 10% of the total region. Solid line indicates devices have tunable directional antennas. . . 173

6-3 The maximum fraction of critical devices that can be accommodated depending on the number of access points in a given region, when the surge area is 20% of the total region. Solid line indicates devices have tunable directional antennas. . . 174

6-4 A surge in a Smart City LAN associated with Router 2 could be rerouted through Router 2 to Router 3 before being forwarded to the hub. . . 175

6-5 Reduction in delay possible via rerouting traffic before optical bypass. . . 177

6-6 Reduction in delay from rerouting versus size of critical traffic surge. . . 178

6-7 Re-routing order of operations. . . 180

6-8 Evolution of $N_{pA}(t)$ (blue) and $N_{pB}(t)$ (red) after a priority surge and user reassignment. $\lambda_{nom} = 1$ week$^{-1}$, $\lambda_{surge} = 1$ hour$^{-1}$, and $\kappa = 0.2$ minutes$^{-1}$. . . 187

6-9 Evolution of $F_A$, $F_B$, $N_{pA}(t)$ and $N_{pB}(t)$ after a priority surge and user reassignment. $\lambda_{nom} = 1$ week$^{-1}$, $\lambda_{surge} = 1$ hour$^{-1}$, and $\kappa = 0.2$ minutes$^{-1}$. . . 190

7-1 Diagram of Smart City network architecture. Key architectural constructs are labeled “A” through “J,” and are explained on the following pages. . . 194

7-2 Summary of Smart City architectural constructs. . . 201

Chapter 1

Introduction: The Internet of Things

We begin this thesis with an overview of the Internet of Things (IoT) and the network research we believe is required to bring the IoT vision to fruition. Because the IoT is so broadly defined, this research naturally encompasses the 5G cellular architecture as well as new cognitive networking paradigms for heterogeneous networks. The vast amount of potential data from sensors and mobile devices, the increased traffic demand from end-users, and the ever-increasing number of end-users will require a smarter approach to network management and control.

This chapter touches on all of these areas, summarizing current research directions and identifying critical open questions. Obviously, it is not our intent to answer nor even address all of these questions within this thesis; this chapter serves to introduce the research landscape to which we hope to contribute.

Before diving into the complex questions of a new network architecture, we first look at some visions of IoT applications. These applications are ultimately what will drive the development of the future network architecture.

1.1 IoT Applications

Researchers and technologists have envisioned a wide range of Internet of Things applications; the most prominent of these fall into the general areas of public safety, healthcare, infrastructure (e.g. power and water), vehicular telematics and autonomous vehicles,¹ manufacturing and logistics, and, of course, entertainment. A significant portion of IoT research is application-focused. The two major research flavors are:

∙ Identifying new use cases for the integration of sensor networks and mobile devices with the Internet and Internet-connected computing and storage elements,

∙ Determining the infrastructure and protocols required for the more complex of these use cases.

Additionally, research in big data analytics continues with an eye towards processing the vast expected quantities of generated IoT data.

We consider premature the consensus that machine learning will be the basis of IoT data processing. One issue to be addressed is the ability of algorithms trained on historic data to handle extreme “black swan” events, especially for time-critical applications. Black swan events by definition have not occurred before and would not be in any repository of historic data. Thus, pure learning algorithms are unlikely to be adequate; a class of different techniques must be brought into the solution space for this problem. The system must be designed to distinguish true substantive changes in the environment from outliers that randomly occur during normal (noisy) operation. For example, suppose temperature sensors are deployed in a building for fire detection. If one sensor has a sudden large spike in temperature, is that sufficient to trigger the building sprinkler system? What if multiple sensors see a temperature spike, but only for a single millisecond? Application-specific models will be necessary to understand and validate incoming data.
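The fire-detection questions above can be made concrete with a small application-specific validation rule. The following is only a minimal sketch, not a method from this thesis: the `Reading` type, the `fire_alarm` function, and every threshold (70 °C, two sensors, five seconds) are hypothetical values chosen for illustration. The rule raises an alarm only when several sensors independently report a sustained temperature excursion, so a single-sensor spike or a millisecond-long blip is treated as an outlier.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    sensor_id: str   # hypothetical sensor identifier
    time_s: float    # timestamp in seconds
    temp_c: float    # temperature in degrees Celsius


def fire_alarm(readings, threshold_c=70.0, min_sensors=2, min_duration_s=5.0):
    """Return True only if at least `min_sensors` sensors each stay at or
    above `threshold_c` continuously for at least `min_duration_s`.

    All default values are illustrative assumptions.
    """
    # Group readings per sensor in time order.
    by_sensor = {}
    for r in sorted(readings, key=lambda r: r.time_s):
        by_sensor.setdefault(r.sensor_id, []).append(r)

    confirmed = 0
    for series in by_sensor.values():
        run_start = None      # start time of the current above-threshold run
        sustained = False
        for r in series:
            if r.temp_c >= threshold_c:
                if run_start is None:
                    run_start = r.time_s
                if r.time_s - run_start >= min_duration_s:
                    sustained = True
                    break
            else:
                run_start = None  # the excursion ended; reset the run
        if sustained:
            confirmed += 1
    return confirmed >= min_sensors
```

Under these assumed parameters, one hot sensor is not enough to trigger the sprinklers, and neither is a simultaneous one-millisecond spike on several sensors. A real deployment would need a model tuned to the building and the sensor noise characteristics.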

On the software side, a considerable amount of research has been done to develop IoT “middleware” solutions to enable easy integration of heterogeneous devices that have different purposes, data formats, and even manufacturers. This is especially relevant for what is sometimes known as the “Web of Things”: the interconnection, perhaps via IPv6, of web-enabled devices. A common software platform would enable rapid deployment of “composable applications,” applications that utilize available IoT nodes in new ways. As a trivial example, the temperature sensors being used for fire detection could also be used for indoor climate control – but with very different networking (in this case latency) requirements.

¹ Autonomous vehicles are not always considered under the Internet of Things umbrella. We include them in this discussion because any telecommunications infrastructure that emerges to support the Internet of Things will also need to support the critical communications requirements of self-driving cars – which will presumably fall under the umbrella of the “Internet of Vehicles.”

The utility of composable IoT could be vast – and indeed the benefit of composability is that we need not know today what we may need tomorrow – but the political issues will be as complex as, if not more than, the technical issues. Privacy and human rights are clear examples where the technical and political interact (there would need to be some method of defining “available” information so as to take privacy and user permissions into account), but there are others as well. Different standards bodies will have competing priorities; where the U.S. Government will prioritize security, the IEEE may prioritize fairness, while commercial entities will seek to maximize profit. Regardless, the global marketplace may reject the options put forth. The adoption of middleware could have unintended consequences, e.g. an artificial monopoly and the resultant stifling of innovation. Thus even the technical aspects of the IoT architecture are married to the resolution of political questions.

We now briefly detail a few complex applications that will require collaboration and large-scale infrastructure coordination.

1.1.1 Vehicular Telematics and Autonomous Vehicles

The field of vehicular telematics is getting a lot of attention, especially as the goal of communicating with vehicles expands from routing and safety to include entertainment and general Internet connectivity. The concept of vehicle-to-vehicle communications took form at first as “VANETs,” or vehicle MANETs – small-scale, homogeneous mobile ad hoc networks. The “Internet of Vehicles,” in contrast, may be more service-oriented and contain humans and road-side units in addition to vehicles [20].

This new model of a vehicular network requires consideration of transmission strategies that address road topology, connectivity, and heterogeneous communications capabilities. For instance, unicast transmission from a single source to destination can be accomplished via a greedy multi-hop algorithm, perhaps with a store-and-forward component. A geocast strategy can improve routing efficiency by taking advantage of vehicle trajectory and GPS information as well as information from local traffic lights [20]. Geolocation- and prediction-assisted routing algorithms, especially in conjunction with directive transmission via beamforming, will be much more efficient and have less delay than algorithms that don’t use this information [31], [13], [36]. For applications such as advertising traffic or weather updates, a simple broadcast strategy could be employed, though re-broadcasting should be done carefully so as not to congest the network [20].
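As an illustration of the greedy multi-hop idea with a store-and-forward fallback, the sketch below forwards a packet to whichever neighbor is geographically closest to the destination and holds the packet when no neighbor makes progress. It is a simplified, hypothetical model rather than any specific protocol from [20]: the function names, the static `neighbors` map, and the 2-D `positions` are assumptions, and a real vehicular network would update both as vehicles move.

```python
import math


def greedy_next_hop(current, destination, neighbors, positions):
    """Return the neighbor of `current` that is strictly closer to
    `destination` than `current` is, or None if no neighbor makes progress."""
    def dist(a, b):
        (xa, ya), (xb, yb) = positions[a], positions[b]
        return math.hypot(xa - xb, ya - yb)

    best, best_d = None, dist(current, destination)
    for n in neighbors.get(current, ()):
        d = dist(n, destination)
        if d < best_d:
            best, best_d = n, d
    return best


def route(source, destination, neighbors, positions, max_hops=50):
    """Greedy geographic unicast. Returns (path, delivered).

    When no neighbor is closer to the destination, the packet is held at the
    last node on the path (the store-and-forward case) until the topology
    changes; here that is reported as delivered=False.
    """
    path = [source]
    node = source
    while node != destination and len(path) <= max_hops:
        nxt = greedy_next_hop(node, destination, neighbors, positions)
        if nxt is None:
            return path, False  # store the packet and wait for a better neighbor
        path.append(nxt)
        node = nxt
    return path, node == destination
```

Because each hop must strictly reduce the distance to the destination, the path cannot loop; `max_hops` is only a safety guard. Geocast and prediction-assisted schemes would replace the distance metric with one that also uses trajectory information.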

Per [20], routing algorithms could be topology-based, position-based, map-based, path-based, or some combination thereof. Mobility will need to be considered; the network map may be 1-D, 2-D, or 3-D, depending on latency and capacity requirements. Because the devices will be heterogeneous, gateways will likely be needed to bridge edge devices and the core network. These gateways could be IP compatible “cluster heads” (as in wireless sensor networks) with some sort of vertical handoff, or could be more akin to cellular base stations or WiFi access points. Cognitive radio could improve spectral and spatial efficiency for highly dense vehicular networks.

A discussion of vehicular telematics would not be complete without the mention of the growing dependence of cars on network-connected electronics, and of course the emergence of autonomous vehicles (self-driving cars). Whereas HTTPS may be sufficient for an “infotainment” VANET, any network over which vehicle control functions are transmitted will have specific, stringent requirements. Driverless cars obviously fall into this latter category, but so do human-operated vehicles with networked components vulnerable to remote attacks. Thus despite their common infrastructure, the Internet of Vehicles and self-driving cars will have widely diverging requirements. We anticipate that vehicular network requirements – such as latency and security constraints – will be the subject of government safety regulations. As an example, safeguards against GPS failure will likely be required, in addition to regular software patches against discovered vulnerabilities.

1.1.2 Urban IoT and the Smart City

An urban IoT architecture will consist of dense, heterogeneous devices and will likely require centralized control, data storage, and processing. Unconstrained nodes with complex capabilities must interact with constrained nodes with low energy consumption and low data rates. In the link layer, unconstrained nodes can currently communicate via Ethernet, Wi-Fi, UMTS, or LTE, while constrained nodes need low-power solutions such as Bluetooth Low Energy, ZigBee, near-field communications, or even RFID [99]. These protocols and those at higher layers will need to interact vertically and horizontally across the whole network.

The “Smart City” concept has demonstrable worldwide appeal. City and national governments look to manage and optimize public services via communications infrastructure and IoT data. A proof-of-concept urban IoT system was deployed in Italy by researchers at the University of Padova [99], but this test bed is tiny compared to the size of a fully realized Smart City infrastructure.

The Singapore government is in the process of deploying a large-scale urban IoT network via its Smart Nation program [93]. Singapore’s plan to utilize IoT devices and data includes, for example:

∙ Instituting smart parking systems to monitor and adjust for space demand.

∙ Automating indoor climate and lighting control to reduce energy consumption in unoccupied buildings.

∙ Optimizing waste collection.

∙ Preempting building maintenance issues [53].

The city of Barcelona also has a major Smart City effort with numerous deployed and proposed smart applications [29]. A smart traffic light system allows blind pedestrians to use a remote control to trigger audible traffic signals, and also responds to fire emergencies by keeping lights green along the fire engine’s projected route. A smart irrigation system for the city’s green spaces prevents water waste by using sensor data about plants, soil, and recent rainfall to control the input and output of water. The city has also started an effort, called CityOS, for large-scale analysis of city data from sensors, social media communications, municipal databases, and other sources. Analyzing the data may enable the city government to simulate potential scenarios, identify event behavior patterns, and generally improve city services.

The most effective Smart City programs will consider the long-term needs of their populations and invest accordingly. In the aggregate, IoT sensors can have non-obvious applications; for example traffic congestion could be estimated using air quality and acoustic measurements from road-side units [99]. The power of IoT in part comes from the sheer number of sensors that can contribute to composable applications. A complete realization of the urban IoT will therefore require an R & D investment in the infrastructure to enable large-scale data aggregation and analysis. In this thesis, we will look at the architectural requirements for enabling time-critical emergency services via the Smart City.

1.1.3 Disaster Response and Emergency Networks

The potential for ubiquitous communications devices to assist in disaster relief scenarios is a promising area of current IoT research. It is an area that the commercial sector has little incentive – or worse, a competitive disincentive – to pursue, but it is a valuable target for academic and government research.

Battery-powered² smart devices that survive a critical infrastructure failure could (via multiple short-range device-to-device hops) replace an unusable long-range communications channel. IoT devices could not only provide important situational data to both remote operations centers and first responders on the scene, but could also supplement the short-range radios first responders carry for low data rate voice communications. The energy limitations on such a network are obvious – but the goal would be to enable temporary network capabilities (especially for emergencies) while more permanent solutions were developed. The challenge is that vendors of IoT products have no incentive to cooperate (i.e. share their designs and allow access to their devices) to create an integrated crisis response network. Furthermore, end-users have little incentive to allow their devices to be co-opted for such a purpose, particularly given privacy concerns. Emergency networking presents a natural setting for government investment in the IoT. For example, the government could arrange for access to architectures from individual vendors in order to practice deploying an interconnected architecture that made use of all possible assets in the field.

² Energy-harvesting or solar-chargeable devices would be even better in such a situation – and might even be necessary for IoT in general given the difficulty of replacing billions of device batteries regularly.

Congress created the First Responder Network Authority (FirstNet) under the U.S. Department of Commerce to develop and deploy a nationwide public safety broadband network that would enable first responders to communicate across departments and municipalities. FirstNet is an attempt to address the repeated instances of communication failure during crises: the incompatibility of police, fire, and Port Authority radio systems during the 9/11 attacks, the infrastructure collapse in the aftermath of Hurricane Katrina, and more recently, communications breakdowns during Hurricane Sandy, the Boston Marathon bombing, and even the Ferguson, MO protests [78], [6]. Across the U.S., radio systems used by different units are often incompatible, and the sheer volume of traffic during events and catastrophes can incapacitate public networks.

At the 2012 Optical Fiber Communication Conference, the R & D director of the Japanese Ministry of Internal Affairs and Communication described the communications outage in the aftermath of the March 2011 Fukushima earthquake. The lack of communication hindered the disaster relief efforts of first responders during the critical first few days. The disabled communication infrastructure took 30 days to restore [85]. The Japanese Government instituted a program to address this problem. The U.S. President, in reaction to both domestic and foreign disasters, proposed an R & D program in 2012 to find solutions for this “instant infrastructure” problem, but the proposal did not find sufficient support in Congress.

FirstNet released its RFP in January 2016, so a fully deployed solution is likely years away. The growth of the IoT over that timeframe could enable an enhanced network for first responders, affording them Internet connectivity, low-latency communications, and up-to-date situational awareness from fielded sensors. We envision that incompatible radio systems could be bridged by digitizing communications in the cloud or even via edge-based voice-to-text conversion and ad-hoc networking. In this thesis, we narrow our focus to the strict latency requirements of such applications.

We now briefly describe a few emergency network designs in the literature.

∙ The emergency communications solution presented in [57] contains three major parts: rapidly deployable low-altitude aerial platforms, portable terrestrial stations, and satellites to fill coverage gaps. Low-altitude platforms and portable terrestrial base stations could temporarily replace local infrastructure and re-establish basic Internet connectivity to smart devices. Because the coverage requirements of a disaster area would be nonuniform, the optimal placement of these base stations and aerial platforms involves a tradeoff between covering as much area as possible and supplying enough capacity to critical points.

∙ A cluster-based hierarchical structure is proposed in [92] for wireless data delivery in an emergency scenario. Since the IoT will consist in large part of wireless sensor networks, a self-organized post-disaster wireless network could provide important situational data. Mobile nodes (such as cell phones carried by first responders) could act as “information ferries” to exchange data between isolated sensor subnets – albeit with no guaranteed delay bounds. A proactive routing scheme with location awareness and trajectory prediction would reduce latency. The deployment of aerial platforms may be necessary to fill coverage gaps and reach remote sensors. A similar “bootstrapped” wireless mesh network is proposed in [4] as a means of reaching critical sensors and actuators during a power outage by using self-organized smart devices operating on locally-generated energy.

∙ If a disaster impacts terrestrial network infrastructure on a large scale, satellite links may be necessary to connect a remote crisis center to the sensors, actuators, and first responders at the disaster site. First responders could carry portable multifunctional radios to communicate with nearby IoT devices (together these radios and sensors would form an ad-hoc “incident area network”), and relay nodes (e.g. UAVs) would provide a satellite connection to the Internet and to the central control center [75]. Alternatively, there is the possibility of using software defined radio at IoT access points to communicate with existing first responder devices without having to replace them with multi-functional radios.

All of these scenarios involve aerial vehicles, highlighting the importance of UAVs to disaster relief applications. We will cover both self-organization and satellite communications with respect to IoT in our discussion of IoT architecture in the subsequent section.

1.2 IoT Architecture

To fully realize the IoT as it is often imagined, a major change in the current network architecture is required. There are several reasons for this architecture overhaul:

∙ Energy efficiency will become much more relevant due to resource-constrained IoT devices.

∙ Interference mitigation techniques will be needed to accommodate large numbers of devices with diverse spectrum requirements.

∙ Cross-layer protocols will be required to account for differences in latency and reliability requirements among different network nodes and applications.

∙ Device mobility in concert with higher data rates and larger traffic demands will require new paradigms of routing and congestion control.

∙ The “coherence time” of network dynamics is likely to decrease due to highly variable traffic loads and service requirements. The network coherence time is the length of time during which traffic requirements across a network or subnetwork are roughly constant. It is thus dependent on the frequency of major events that produce many and/or large data transactions, and also on the frequency and prevalence of node movement. We discuss this further in Chapter 2.
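As a rough illustration of the coherence-time notion in the last item above, the following sketch estimates it from a sampled traffic-load series as the mean length of the intervals over which the load stays within a relative tolerance of its value at the start of the interval. The function name, the tolerance parameter, and this run-length definition are assumptions made for illustration only; the formal treatment is deferred to Chapter 2.

```python
from typing import Sequence


def estimate_coherence_time(load: Sequence[float],
                            sample_period_s: float,
                            rel_tolerance: float = 0.2) -> float:
    """Mean duration (seconds) of runs in which the load stays within
    rel_tolerance of the value observed at the start of the run.

    This is an illustrative definition, not the one used in Chapter 2.
    """
    if not load:
        raise ValueError("load series must be non-empty")
    run_lengths = []
    start_value = load[0]
    run = 1
    for sample in load[1:]:
        if abs(sample - start_value) <= rel_tolerance * abs(start_value):
            run += 1                      # still "roughly constant"
        else:
            run_lengths.append(run)       # close the run, start a new one
            start_value = sample
            run = 1
    run_lengths.append(run)
    return sample_period_s * sum(run_lengths) / len(run_lengths)
```

Under this definition, frequent surges or heavy node movement break the series into many short runs, so the estimate shrinks as traffic becomes more variable.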

As noted in [88], network monitoring in the future IoT will need to be smarter to handle large volumes of data traffic. Similarly, network routing and transport protocols will need to be smart about what data is necessary to make decisions. While massive data analytics could still occur in the cloud, collected data will need to be pared near the network edge to avoid overloading the backhaul. Additionally, applications that make rapid data-based decisions may not tolerate the transmission delay of sending data to the cloud for processing. (We note that transmission delay is mostly determined not by propagation time but by software delay in, e.g. electro-optic packet conversion and routing.) The network management structure must navigate the tradeoff between straining limited resources at the edge and congesting the core network. We will discuss this further in Chapter 5.

As the number of devices and connections grows, this sort of smart networking will be imperative to keep up with network demands. Cooperative relay and reception techniques for dynamic network topologies should be investigated to supplement spectrum sharing and spatial reuse techniques for wireless sensor networks [15]. Additionally, the question of how to dynamically track network states without storing and transmitting the full link state must be considered [14]. Is there a subset of network parameters that is sufficient to achieve some adequate performance metric? How current does the sampled state information need to be? How dense? How do we differentiate between a state change due to expected random fluctuations versus a black swan-type network event? Learning on past data alone will not suffice to flag black swan events without additional modeling and more sophisticated decision techniques. This question may be underemphasized by the commercial sector, where infrequent abnormal circumstances will not impact the bottom line.

1.2.1 Intersection with 5G

It is impossible to consider the architecture of the IoT separately from the 5G cellular mobile network. Because mobile devices are integral to many IoT applications, the 5G architecture will be directly informed by IoT requirements, and vice versa. The “pico,” “femto,” and “atto” cells proposed for 5G spectrum-sharing (and the associated handoff and cell selection algorithms), as well as new communication media like mmWave and free-space optics, all fall within the IoT research space.

The IEEE Communications Society held its first 5G Summit in May 2015, and the Internet of Things was referenced in a number of the featured talks [38]. Topics discussed included:

∙ 5G network service requirements like user mobility, disruption tolerance, multi-homing, content addressability (e.g. in network caches), and context-aware delivery [73], in addition to time-deadline support for delay-sensitive applications [54].

∙ Self-governing networks, i.e. the use of control theory, predictive analytics, and context-aware computing to automate network management functions [62].

∙ “Fog Networking,” or the use of end-users and edge devices to store, communicate, and process data. IoT devices could be used for caching popular data, crowd-sensing information, processing local data (that is both generated and consumed locally), and spectrum-sensing for bandwidth management [23].

The second item was contributed by Ericsson Network Management Labs. Other industry contributors at the summit were Cisco Systems (“Internet of Everything” and information-centric networks), Bell Labs (“Always Connected, Always Untethered” – the dominance of wireless communications at the edge), and Intel (IoT and mmWave communications). Several companies, including Intel, Qualcomm, and National Instruments, are working on mmWave communications in the context of 5G networks to increase capacity and possibly improve physical layer security [38].

Many 5G network management proposals fall under the heading of cognitive networking, which we discuss in a later section. First, we discuss some structural aspects of 5G network architecture.

Small Cells

The increasing capacity requirement of wireless networks is likely to result in the microization and densification of cellular networks [17]. “Femtocells” will increase the received signal strength by decreasing the signal propagation distance. This will allow transmitters to use less power, resulting in less interference and improved spatial spectral efficiency. Indoor femtocells can use high data rate visible light or infrared communications to further reduce interference (and possibly improve security).

Networks of femtocells will improve coverage, reliability, and quality of service – assuming throughput is base-station limited and not backhaul limited. This assumption is reasonable in the foreseeable future due to the high-capacity optical fiber backhaul, but it may be challenged if IoT devices start demanding large, bursty, so-called “elephant” transactions. In either case, device mobility and increased network demand will require optimization of device discovery, cell selection, and handoff algorithms. Maintaining a high data rate for vehicular communications will be particularly challenging. Cooperation between base stations of very dense small cells may help to improve performance via beamforming.

The 5G network architecture design presented by Agyapong et al. in the IEEE Communications Magazine consists of “ultra dense small cells” and posits the intelligent use of network data to support billions of connected devices with potentially high mobility and strict latency requirements [1].3 While not all IoT applications require low latency, many potential applications require fast feedback and rapid resource allocation. Agyapong’s vision of IoT contains two categories of sensors: those with limited resources and intermittent connectivity, and those with continuous monitoring/tracking capabilities. The network architecture must scale with increasing numbers of both kinds of sensors. Intelligent clustering, relaying, and signaling – including new waveforms to suppress interference – will be required to optimize both energy efficiency and data transmission. We now highlight some features of the 5G design proposed in [1].

3 It is unlikely that the deployment of small cells will be the only required physical layer modification. Additional physical layer techniques such as MIMO, beamforming and antenna-nulling may be required to improve performance and capacity. We discuss one such physical layer technique – directionally tunable device antennas – in Chapter 6.

∙ To increase capacity and data rate in dense IoT networks:

1. Higher frequency bands will be used within small cells. New and improved receiver hardware will be needed to support the higher frequency communications.

2. Local traffic will be offloaded via network-controlled device-to-device communications – similar to how mobile traffic is currently offloaded to Wi-Fi when available.

∙ To decrease latency:

1. Network-aware admission and congestion control algorithms will be developed to replace TCP.

2. Ultra-dense small cells will bring endpoints closer.

3. Cognitive processes at edge devices will enable caching and pre-fetching.

∙ To decrease cost:

1. Higher-layer functions will be moved to the cloud via network function virtualization (NFV).4

2. The number of base station functions can be reduced via software-defined networking (SDN). Intelligent energy management of base stations will be important for ultra-dense networks.5

4 It is unclear whether NFV will live up to the surrounding hype. The focus of recent NFV research has been on software rather than network performance [50]. However, at least one experiment on end-to-end network performance has shown that virtualization can cause latency and throughput instability even when the network is underutilized [91]. The major challenge in NFV research is improving upon “purpose-built” hardware implementations [50]. What may be sufficient for low data-rate traffic may not suffice as the number of nodes and amount of traffic increases.

5 We envision that very constrained base stations could simply digitize signals and send bits directly to the cloud for processing.

The architecture envisioned in [1] consists of two layers: the radio network layer and a network cloud layer. Via SDN and NFV, resources can be borrowed from remote data centers when local infrastructure is overwhelmed – a sort of “infrastructure-as-a-service” business model. Network intelligence will enable data-driven decisions for independently provisioning coverage and capacity. For example, energy can be saved by dynamically switching cells on and off, and group mobility (e.g. cars on a highway) can be supported via nomadic hotspots.

Gupta and Jha’s 5G architecture survey raises some unanswered questions, one of which is how base-station/user associations are formed [49]. This is especially challenging when high mobility is considered. Does the base station have full or partial control over link formation? How is interference management conducted?

Researchers at NTT have patented a system for nodes to discover geographically adjacent access routers [41]. This can be used instead of mobile IP for local communication and handoff. Nodes can locate nearby access routers and resolve their network layer address. A localized network map can be generated using mobile nodes as sensors. This could be very helpful for routing data flows from mobile devices.

Algorithms for non-centralized resource allocation need to be studied and validated. Local servers known as “cloudlets” could be used in a hierarchical scheme, perhaps using context information to manage connectivity [49]. With respect to the security of device-to-device communications, should the ad-hoc networks formed in congested areas at cell edges be open or closed access? Should authentication be performed via the base station? If so, how is that authentication managed?

Satellite Communications for IoT

Satellite communications will likely support the IoT as part of the 5G infrastructure [34], [75]. Satellite links may be used to:

∙ Extend network coverage to rural areas – e.g. to connect isolated small cells to the rest of the network.

∙ Add extra capacity to urban networks in times of high demand and to maintain coverage for mobile nodes quickly traversing small cells.

∙ Serve as the thin-line infrastructure6 for emergency disaster relief.

∙ Support content delivery networks that cache popular data at the network edges – e.g. by using multicast capabilities to distribute content.

∙ Offload control plane traffic while large data flows are transmitted along terrestrial paths [34].

∙ Reconstitute and rekey networks after infrastructure failure by identifying functional nodes and connecting terrestrial partitions.

6 “Thin-line infrastructure” is the bare minimum network infrastructure required to satisfy critical service requirements. The determination of a thin-line infrastructure for the IoT naturally rests on the determination of the absolute minimum “critical” requirements.

Global deployment of monitoring systems is expected to be part of the IoT. In order to enable remote connectivity and resilience in the face of terrestrial link failure, at least some IoT devices should be able to connect to both terrestrial and satellite networks. IoT subnetworks that connect to the terrestrial long-haul under normal operating conditions can use these devices as gateway nodes if a switch to satellite communication is necessary. Minimizing the power consumption of such an operational handover would be a key consideration [34].

The role of satellite communications in the context of remote or widely dispersed IoT devices is examined in [75]. Some IoT scenarios that could be served by satellite communications are listed below:

∙ Smart meters, automated power monitoring systems, and power management systems in rural areas.

∙ Outdoor environmental monitoring (e.g. air quality, water pollution, wildlife population) and natural disaster detection/warning (e.g. earthquakes, landslides, avalanches, volcanic eruptions).

∙ Emergency communication with first responders.

For low data rate sensor applications, the high latency of satellite communications may be acceptable. For critical applications such as smart grid monitoring, a satellite backup to vulnerable terrestrial infrastructure may be desirable despite latency limitations.

De Sanctis et al. list some design considerations in [75] for implementing IoT applications over satellite. Network functions to be considered include:

∙ MAC protocols for sensors to access satellites. MAC protocols must be specialized for IoT scenarios; for example, large sensor networks with low overall data volume would probably perform better with a contention scheme than with TDMA [63].

∙ IPv6 over satellite. Assuming IPv6 is required to address the vast numbers of IoT nodes, enabling IoT over satellite would require enabling IPv6 over satellite.

∙ Interoperability of satellite and terrestrial networks. In order to seamlessly switch between terrestrial and satellite operations in the case of disaster, the two networks must be interoperable from the perspective of the IoT sensors.

∙ Optimized resource allocation and transmission management for group-based communications. Group-based data delivery from logically or geographically associated nodes can limit signaling overhead and congestion. The broadcast, multicast, and geocast capabilities of satellites can support such group-based IoT communications.
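The MAC remark in the first item of the list above can be made concrete with a textbook light-load approximation. The sketch below compares the mean access delay, in slots, of a fixed TDMA frame with one slot per node against slotted ALOHA with Poisson offered load. The function names, the backoff parameter, and the neglect of satellite propagation delay and queueing are simplifying assumptions of this sketch; the model is not taken from [63] or [75].

```python
import math


def tdma_mean_delay_slots(num_nodes: int) -> float:
    """Light-load TDMA: a packet waits on average half a frame
    (num_nodes / 2 slots) for its dedicated slot, then transmits (1 slot)."""
    return num_nodes / 2 + 1


def slotted_aloha_mean_delay_slots(offered_load: float,
                                   mean_backoff_slots: float) -> float:
    """Slotted ALOHA with Poisson offered load G (attempts per slot).

    An attempt succeeds with probability exp(-G), so the expected number
    of failed attempts is exp(G) - 1. Each failure costs the wasted slot
    plus a random backoff (hypothetical parameter mean_backoff_slots).
    """
    failed_attempts = math.exp(offered_load) - 1
    slot_alignment = 0.5                  # mean wait for the next slot edge
    return slot_alignment + 1 + failed_attempts * (1 + mean_backoff_slots)


if __name__ == "__main__":
    # 1000 sensors sharing a channel, with low aggregate traffic.
    print(tdma_mean_delay_slots(1000))
    print(slotted_aloha_mean_delay_slots(offered_load=0.05,
                                         mean_backoff_slots=10))
```

With many nodes and little traffic, the TDMA delay grows linearly with the number of nodes while the contention delay stays within a few slots. Over a satellite link each retransmission would also incur a round-trip propagation delay, which this sketch omits.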

An open question concerns the potential deployment of nanosatellite constellations for IoT applications. In [75], De Sanctis claims that such constellations may form a viable solution for delay-tolerant applications but that the difficulty of planning the coverage area is an impediment to their use for time-critical applications.

Because the focus of this thesis is the network architecture to support time-critical applications, we do not devote much attention to satellite communications beyond this section. We will note here that if satellite integration with time-critical IoT applications is desired, the typical circuit-switched satellite communication will have to be replaced with a packet-switched architecture [63].

We now turn our attention towards cognitive networking, which we introduced earlier in our discussion of 5G architecture. The use of cognitive techniques will be essential in optimizing 5G for IoT [38].

1.2.2 Cognitive Networking

Cognitive networking began as the natural extension of cognitive (or software-defined) radio to complex heterogeneous wireless networks and has since grown into a new field of communications with significant applicability to the Internet of Things and 5G architecture.

A key architectural question is how many devices have cognitive functionalities, and the relevant follow-up is how the network scales with the number of cognitive nodes. This latter question is discussed in [2], where a network protocol stack for cognitive machine-to-machine (M2M) communications is presented. At the physical layer, IoT devices may use existing cognitive radio techniques for energy-efficient spectrum utilization and interference management. Low-complexity cognitive transceivers will be necessary for constrained devices. The MAC and network layer protocols proposed in [2] leverage the cognitive capabilities of devices to improve higher layer efficiency.

Increasingly the idea of cognitive networks is growing beyond the use of cognitive radio to improve network performance. As defined in [87], a cognitive network senses current network conditions (including but not limited to spectrum use) and then uses this information to satisfy end-to-end goals. Per [60], a cognitive network uses processed network state data – such as traffic and flow patterns – to improve resource management. Cognitive TCP, or CogTCP, is a proposed cognitive network protocol wherein wireless clients optimize transport layer behavior based on the experience of other nodes [60]. This is referred to in [60] as “inter-node learning,” and could, for example, allow nodes to circumvent a slow start if nearby nodes are not experiencing congestion.
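To give a flavor of inter-node learning, the following sketch chooses an initial congestion window from congestion summaries shared by nearby nodes. It is not the CogTCP algorithm of [60]: the report fields, the loss threshold, and the default window size are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class NeighborReport:
    """Hypothetical summary a nearby node shares about its recent flows."""
    loss_rate: float      # fraction of segments recently lost
    cwnd_segments: int    # congestion window the neighbor has reached


def initial_cwnd(reports: Iterable[NeighborReport],
                 default_cwnd: int = 10,
                 loss_threshold: float = 0.01) -> int:
    """Pick a starting congestion window (in segments).

    With no reports, or if any neighbor sees loss above the threshold,
    fall back to the conservative default and ramp up as usual.
    Otherwise start from the smallest window any neighbor sustains,
    skipping most of the slow-start ramp.
    """
    reports = list(reports)
    if not reports or any(r.loss_rate > loss_threshold for r in reports):
        return default_cwnd
    return max(default_cwnd, min(r.cwnd_segments for r in reports))
```

Taking the minimum over neighbors is a deliberately cautious design choice: a single optimistic report cannot push the new flow beyond what every nearby node is currently sustaining.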

The design of a cognitive network should consider the following questions:

∙ Should cognitive processes be centralized, fully distributed, or partially distributed?

∙ How do you filter network state info as the amount of global information grows?

∙ How do you implement network changes synchronously among several subnets and controllers?

∙ Can the network function well with undersampled and stale link state data?

We now summarize some areas of active cognitive networking research that look to address these and other questions.

Software-Defined Networking

Software-defined networking (SDN) offers many tools to support the collection, storage, forwarding, and processing of IoT data in cognitive networks [82]. Via SDN, automated network operators could track network resources and traffic patterns, and IoT traffic could be intelligently routed through underutilized network resources. Specific to wireless IoT subnets, SDN controllers could associate clients with access points and perform load-aware handoff scheduling, perhaps even between different wireless technologies. (In [82] this is called “media independent handover.”) The controllers would need to track node mobility and perhaps facilitate data aggregation of grouped sensors, particularly since association and handoff protocols would need to scale with traffic volume and number of devices.

The optimum logical placement of SDN controllers is still a research question, but more critical is the scalability of SDN network management and control. There is no favored proposal thus far; none have provably scaled to large numbers of end-nodes with highly dynamic reconfiguration rates.

Software-defined networking today is predominantly a layer 3 (network layer) paradigm. OpenFlow was originally based on an Ethernet switch for experimentation with routing protocols [61]. Future networks will require SDN at the physical and link layers as well, with very different tradeoffs and complexities. For example, a controller cannot re-route an optical flow due to a congested link without addressing the wavelength assignment problem. SDN controllers that span the protocol stack could schedule flows, reserve routes, assign wavelengths, negotiate data rates, and perhaps even perform the data aggregation for the composable IoT applications that we discussed earlier. In this last case, note that the end-users for the SDN controller are not just routers and switches, but also edge devices. There is mounting evidence that most current SDN techniques do not scale in this fashion. The future may be dominated by the company that provides a disruptive, innovative solution in this emerging research area of “network orchestration.”

Network Estimation

As the number of nodes in a cognitive network increases, the scalability of the cognition processes will depend on the ability to cope with growing global state information. Meanwhile, resource constraints will limit the ability of the network to collect and disseminate the full state information [64]. Network estimation will enable cognitive networks to make effective decisions with only a subset of network state data. However, nonuniform traffic and dynamic traffic will be major impediments to estimating network parameters in the future IoT.

Proactive and reactive routing schemes for heterogeneous wireless networks are compared in [5]. Proactive schemes, where nodes stay up-to-date on full network state information, involve significant signaling overhead (in the form of link state advertisements) to prevent route information from becoming stale. Reactive schemes, where nodes search for a new route after the current path fails (or becomes congested), are preferred to proactive schemes only for all-to-all uniform traffic [5]. This will not be the case in the IoT, which will have generally asymmetric traffic.

In [64], the estimation problem is treated for networks with “sparse dynamics,” i.e. networks where very few link states change in any given time period. In such a network, adaptive compressed sensing schemes could be employed whereby each cognitive node selectively senses the channel state based on its uncertainty. A central controller could combine information from all nodes into a sparse measurement matrix that would slowly change in time. Such a scheme is not expected to succeed in a highly dynamic IoT.
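The idea that a node senses selectively according to its uncertainty can be sketched as follows. This is not the compressed sensing scheme of [64]; it only illustrates the selection step under an assumed model in which each link is a symmetric two-state (up/down) Markov chain with a small per-step flip probability, which is one simple way to express "sparse dynamics." All names and parameters are hypothetical.

```python
from typing import Dict


def stale_belief(p_up: float, flip_prob: float, steps: int) -> float:
    """Belief that a link is up after `steps` unobserved steps.

    For a symmetric two-state Markov chain with per-step flip probability
    flip_prob: p_t - 0.5 = (p_0 - 0.5) * (1 - 2 * flip_prob) ** t.
    """
    return 0.5 + (p_up - 0.5) * (1 - 2 * flip_prob) ** steps


def pick_link_to_sense(last_state: Dict[str, bool],
                       age: Dict[str, int],
                       flip_prob: float) -> str:
    """Return the link whose current state is most uncertain, i.e. whose
    stale belief is closest to 0.5."""
    def distance_from_half(link: str) -> float:
        p0 = 1.0 if last_state[link] else 0.0
        return abs(stale_belief(p0, flip_prob, age[link]) - 0.5)

    return min(last_state, key=distance_from_half)
```

When flip_prob is small, beliefs decay slowly and a node can go many steps between measurements of the same link. As flip_prob grows, every belief collapses toward 0.5 within a few steps and selective sensing saves little, which is consistent with the remark that such schemes are not expected to succeed in a highly dynamic IoT.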

If cognitive nodes can become powerful enough to perform complex statistical inference, the solution may lie in machine learning. Machine learning could be used to analyze network dynamics via minimal information (such as energy readings) and to implement an adaptive transmission strategy [12]. Cognitive terminals using statistical learning theory could estimate network transmission trajectories and map transmission decisions to predicted network states. Then each node would decide whether or not to transmit in the next time step. We again note that learning techniques will be ineffective against black swan events.

It is suggested in [12] that information theoretic analysis is necessary to fully understand what constitutes the “minimal information” required for estimation. For instance, in extreme operating regimes – e.g. rare traffic arrivals with infrequent collisions or frequent traffic arrivals and nearly full buffers – observed energy readings will have low information capacity. Additional state variables may be needed to capture time-varying network parameters, but again we note the importance of estimating fewer but more relevant parameters in order to minimize overhead and computation [12].

For example, in [100], a scalable active probing algorithm to schedule end-to-end all-optical flows is proposed. The scalability comes from minimizing the number of probed optical paths and then reducing the computational complexity of path selection by collapsing the data into entropy and mutual information scalars. The algorithm minimizes the amount of data collected, disseminated, and processed by the schedulers.
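The sketch below illustrates how a single entropy scalar can summarize what a scheduler knows about a path and guide which paths are worth probing. It is not the algorithm of [100]; the per-path availability probabilities, the function names, and the probing budget are assumptions made for illustration.

```python
import math
from typing import Dict, List


def binary_entropy(p: float) -> float:
    """Entropy in bits of a binary variable that is 1 with probability p."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def paths_to_probe(p_available: Dict[str, float], budget: int) -> List[str]:
    """Rank paths by the entropy of their (assumed) availability estimate
    and return the `budget` most uncertain ones."""
    ranked = sorted(p_available,
                    key=lambda path: binary_entropy(p_available[path]),
                    reverse=True)
    return ranked[:budget]


if __name__ == "__main__":
    estimates = {"A": 0.99, "B": 0.5, "C": 0.8}
    print(paths_to_probe(estimates, budget=2))
```

A path that is almost certainly available (or almost certainly blocked) has entropy near zero, so probing it yields little new information. The same function shows why observations in extreme operating regimes carry little information: an event with probability 0.01 has an entropy of only about 0.08 bits.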

An open problem: where should the aggregation and processing of sensed network data optimally occur?

Fog Networking

Fog networking (also called fog computing or edge computing) refers to the “descent” of data aggregation and processing from the cloud to the network edge [22]. The OpenFog Consortium is a nonprofit founded by ARM, Cisco, Dell, Intel, Microsoft, and Princeton University to explore the potential for fog computing to address network challenges such as high latency, end-point mobility, and unpredictable bandwidth bottlenecks [28]. Cisco Systems, the first to formalize the idea of fog networking, envisions a network in which controllers, switches, routers, and local servers comprise so-called “fog nodes” that would run various IoT applications and then direct ingested IoT data appropriately. Simple processing could occur at any of these nodes; the data from multiple fog nodes could be passed to a more capable “fog aggregation node” for joint processing; or the data could be transmitted all the way to the cloud [39]. Processing data locally instead of transmitting potentially exabytes of IoT data to the cloud would save upstream bandwidth, and additionally decrease the amount of IoT data transmitted over IP [39].

OpenFog board member and Princeton University Professor Mung Chiang cites four goals of fog networking [22]:

∙ To satisfy strict time requirements for applications such as the Tactile Internet. In Chapter 5 we consider the potential contribution of fog networking to time-critical IoT applications in the Smart City.

∙ To improve privacy and reliability by shortening the path distance of data communications based on client requirements.

∙ To improve network efficiency by pooling local resources such as storage, sensing capabilities, and processing power.

∙ To enable innovation via easier experimentation.

Network management, i.e. network measurement, control, and configuration, could also be pushed to the network edge [22]. As discussed in our treatment of network estimation, the key question remains: how much information must edge devices synthesize about the network as a whole in order to make effective network management decisions? Some examples of fog networking currently under investigation include localized content caching, crowd-sourced network inference, real-time data mining, and bandwidth sharing between neighbors [22]. Fog networking has some overlap with information-centric networks, which we turn to next.

Information-Centric Networking

Named-data networking is an abstraction for the world wide web intended to replace the IP host-to-host abstraction [66], [30]. It is similar to (or perhaps derivative of) content-centric networking which aims to address content instead of hosts. Both of these concepts are part of the broader field of “information-centric networking,” which treats data independently of location [67]. Popular data that is addressed by name can be cached near the edge (e.g. in the fog) for faster delivery.
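As a minimal sketch of the caching idea, the class below stores content under its name rather than under the address of the host that serves it, and evicts the least recently used name when full. The class name, the LRU policy, and the fetch_from_origin callback are assumptions of this sketch; none of them comes from [66], [30], or [67].

```python
from collections import OrderedDict
from typing import Callable


class EdgeContentCache:
    """Name-addressed LRU cache that could sit on a hypothetical fog node."""

    def __init__(self, capacity: int,
                 fetch_from_origin: Callable[[str], bytes]) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self._capacity = capacity
        self._fetch = fetch_from_origin   # stand-in for the upstream path
        self._store: "OrderedDict[str, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, name: str) -> bytes:
        """Return the content for `name`, serving from the edge if cached."""
        if name in self._store:
            self._store.move_to_end(name)          # mark as recently used
            self.hits += 1
            return self._store[name]
        self.misses += 1
        data = self._fetch(name)                   # go upstream once
        self._store[name] = data
        if len(self._store) > self._capacity:
            self._store.popitem(last=False)        # evict least recently used
        return data
```

Because requests carry the content name, any node holding a copy can answer them, so popular items are served from the edge after the first upstream fetch.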

In [70], the concept of “context-aware computing” is described. Context information is linked to sensor data to aid the interpretation of that data. The central idea is that context enables raw data to become knowledge. Context can determine how data should be presented to a user; it can be used to detect events or trigger actions; or it can be used to fuse relevant data from multiple sensors, including those collecting network state data to inform network management decisions. We note that context is key to processing data in the fog.

Information-centric networking, together with fog networking and software-defined networking, could form a new cognitive network architecture for future 5G and IoT networks.

Network Control

We envision that control of cognitive networks will become an important research area. With exponentially growing numbers of nodes and connections, we anticipate growth of the control plane with respect to the data plane. The protocols that today maintain a stable network – media access control and congestion control, endpoint admission control and flow control – may not be sufficient to handle highly dynamic external traffic inputs. New control theory will be required to make optimal decisions for systems where the internal state and feedback are intimately affected by system inputs, and where feedback delay is nondeterministic and dependent on exogenous input.

Self-organizing IoT Networks

The concept of a self-organizing IoT network is a good illustration of the cognitive networking paradigm. We briefly touched upon this concept in the section about emergency networks, where we discussed how ad-hoc device-to-device connectivity could prevent an IoT data blackout during a power outage or other critical infrastructure failure [92]. Through real-time network intelligence and low-power cognitive functionality, a self-organizing IoT network could manage neighbor discovery, medium access control, path establishment, service recovery, and energy conservation [3]. Such a network could then:

∙ Preserve functionality of IoT devices when provisioned communications fail.

∙ Allow command and control of sensors and actuators during an emergency.

∙ Prevent cascading failures [3].

From a systems perspective, the IoT is a heterogeneous network of multiple interconnected subnetworks. Cooperative communication between these subnetworks requires distributed situational awareness such that nodes are cognizant of their neighborhood operations and operations of adjacent neighborhoods [3]. In order for nodes to monitor the status of their peers, some sort of load-balancing will be necessary to handle the energy and spectrum constraints of transmitting that information. One possibility is to adopt the hierarchical clustering protocols of wireless sensor networks, whereby alternating cluster heads flatten noisy data, locally repair nodes after node failure, and communicate with other cluster heads [52]. Another possibility is the cognitive energy management strategy proposed and analyzed in the context of infrastructureless wireless networks in [31]. If self-organization is to be possible, the initial deployment of IoT devices should be optimized with respect to the higher functioning (i.e. multi-radio, multi-channel) devices that can interconnect subnetworks [3].
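The load-balancing effect of alternating cluster heads can be sketched with a simple energy-aware election. This is not the protocol of [52]: the election rule (highest residual energy, ties broken by node name) and the per-round energy costs are hypothetical.

```python
from typing import Dict, List


def run_rounds(initial_energy: Dict[str, float],
               rounds: int,
               head_cost: float = 5.0,
               member_cost: float = 1.0) -> List[str]:
    """Simulate cluster-head election within one cluster.

    Each round, the live node with the most residual energy becomes
    cluster head and pays head_cost (aggregation plus inter-cluster
    traffic); every other live node pays member_cost.
    Returns the sequence of elected heads.
    """
    energy = dict(initial_energy)          # do not mutate the caller's dict
    heads: List[str] = []
    for _ in range(rounds):
        alive = [node for node, e in energy.items() if e > 0]
        if not alive:
            break
        head = max(alive, key=lambda node: (energy[node], node))
        heads.append(head)
        for node in alive:
            energy[node] -= head_cost if node == head else member_cost
    return heads


if __name__ == "__main__":
    print(run_rounds({"a": 10.0, "b": 10.0, "c": 10.0}, rounds=3))
```

Because serving as head drains a node faster than serving as a member, the role moves to a different node each round, which spreads the cost of status reporting across the cluster.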

For emergency applications, a self-organizing ad-hoc network requires individual nodes to act selflessly [84]. A node with information to transmit may use the link state information of its neighbors to compute its optimum path, but it must take into account which nodes are currently supporting mission critical communications and adjust its transmissions accordingly. Emergency networks might prove to require centralized control in order to fairly satisfy emergency priorities [84].

We know from copious research about mobile ad-hoc networks that maintaining the link state of a mobile network is very difficult. Prior attempts have been shown not to scale beyond a dozen or so mobile nodes [31]. Self-organizing IoT networks may therefore need to rely heavily on immobile edge devices.

1.2.3 Security of the IoT

To conclude our survey of IoT research, we briefly address privacy and security vulnerabilities of the Internet of Things. The following three paragraphs comprise the only place in this thesis where security issues of the IoT will be addressed.

Inherent tradeoffs between privacy and performance, and between security and cost, limit the financial incentive for commercial entities to devote attention to the issue. As a result, there is need for centralized development of industry-wide security standards. Research in this area is largely focused on device/user authentication and resource-constrained encryption, along with some research on physical layer device security. However, having a massive number of “Things” in a network increases the likelihood of compromised nodes, and substantially increases the risk of insider attacks. A new security paradigm that allows normal operations in the presence of compromised nodes and frequent insider attacks is a major shift from the assumptions of previous models.

Several different types of security must be considered. Vehicle safety is an extreme example; communication between vehicles and road-side units about local road information must be accurate and timely. For driverless cars, the threat of denial-of-service or jamming attacks is of particular concern. For large data networks, the integrity of link state data will be important for network control and management functions. With the growth of SDN and perhaps NFV, control plane security becomes critical. If learning algorithms are used in support of network operations, then data contamination will be especially dangerous, impacting not only immediate actions, but also future decisions.

Security must be addressed in the context of the huge and hugely dynamic future network. Network protocols, including security protocols, must scale to support billions of nodes and to respond rapidly to changes in traffic and link states. Because of the large number of resource-constrained devices entering and leaving the network, the assumption that all nodes are nonmalicious is untenable. Instead, the network should be designed under the assumption that some fraction of nodes are compromised, and there should be graceful performance degradation as the compromised fraction grows. There should be a ubiquitous sensing function to assess the integrity of nodes, and active query techniques should further vet node integrity. The network should maintain up-to-date node profiles and monitor content relevance to mitigate denial-of-service attacks by compromised nodes. Regardless of chosen methods, securing the IoT is an obvious open problem that needs to be addressed by all parties involved in developing and deploying new IoT applications.

We now turn our attention to another open problem in the IoT: enabling low-latency networking for time-critical applications.

1.3

Time-Critical IoT

In the remainder of this thesis, we narrow our focus to a challenging requirement of the future IoT: time-critical networking in the Smart City.

The rapid proliferation of smart devices that has characterized the emergence of the IoT has revealed new research opportunities in the area of priority networking, in particular for emergency applications, both preventative and responsive. The IoT offers urban planners and city officials the opportunity to synthesize data from multiple sensors, in order to relay information to emergency responders and operational partners and potentially to avert the crisis itself. These sensors could be of the same or different type, and have similar or different service requirements. For example, a traffic sensor network might include multiple cameras, radars, and RFID tags, all of which could be used to obtain a full understanding of traffic conditions. As the number of connected devices grows, computational networking tasks may need to be conducted closer and closer to edge devices (e.g., fog computing/networking, discussed previously), and the need for cross-layer protocols that optimize network resource allocation will grow. An example network is depicted in Figure 1-1.

The existing body of IoT research illustrates several examples of low-latency requirements for various applications. In [51] and [83], time-critical eHealthcare applications are discussed. Body sensors collect information, potentially using smart phones or other access points as fog nodes to do preliminary data processing before forwarding to the cloud. Different sensors will have different quality of service requirements, and abnormal readings may require a rapid response.
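To make the fog-node preprocessing step concrete, the sketch below shows one way a fog node might separate abnormal readings, which would be forwarded immediately as critical traffic, from normal readings, which are aggregated into a per-sensor average before being sent to the cloud. It is not taken from [51] or [83]; the function `triage_readings`, the sensor names, and the threshold values in `NORMAL_RANGES` are hypothetical placeholders chosen for illustration only.

```python
from statistics import mean

# Hypothetical per-sensor normal ranges (illustrative values only,
# not clinical guidance and not drawn from the cited works).
NORMAL_RANGES = {
    "heart_rate_bpm": (50.0, 120.0),
    "spo2_percent": (92.0, 100.0),
}


def triage_readings(readings, normal_ranges=NORMAL_RANGES):
    """Split (sensor, value) readings into urgent alerts and a summary.

    Returns (urgent, summary): `urgent` lists out-of-range readings in
    arrival order; `summary` maps each sensor to the mean of its
    in-range readings.
    """
    urgent = []
    normal = {}
    for sensor, value in readings:
        low, high = normal_ranges[sensor]
        if value < low or value > high:
            # Abnormal reading: forward right away as critical traffic.
            urgent.append((sensor, value))
        else:
            # Normal reading: hold for aggregation to reduce load.
            normal.setdefault(sensor, []).append(value)
    summary = {sensor: mean(values) for sensor, values in normal.items()}
    return urgent, summary
```

In such a design only the urgent list would need low-latency service, while the summary could tolerate delay; this mirrors the distinction between critical and non-critical traffic drawn in this section.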

The industrial IoT, discussed in [94], [8], and [102], consists of networks of physical sensors and actuators in office buildings, laboratories, factories, power plants, etc. Per [8], priority is required for packets relating to “flow control, process monitoring, and fault detection.” Consequences of excessive packet delay could range from pipe overflows due to failed valve closures to chip malfunctions due to faulty wafer placement on a fabrication line [94].

Figure 1-1: An example IoT network. “Critical” users are shown in red.

intelligent transportation systems, and augmented reality, any of which could have latency requirements measured in single-digit milliseconds [86].

The authors in [94] posit that the main cause of latency in the industrial IoT is contention-based MAC; the authors in [8] concur but additionally identify factors such as node density, data rate, energy per node, and processing power. Many MAC protocols have been developed for various latency-sensitive applications (e.g., [8], [94], [37]). We will summarize these in Chapter 3 and propose a new MAC protocol for time-critical IoT applications.

In [86], the authors note that massive numbers of small packets would constitute a routing burden, and thus some sort of flow management (they propose a resource reservation scheme) would be necessary. We consider this in our treatment of network orchestration in Chapter 5.

In [44], the challenge of analyzing large volumes of high-frequency data is discussed; proposed solutions include built-in event-detection via user-defined filters and “analytic operators.” Data aggregation to eliminate redundancy and minimize communications load in the IoT is proposed in [33] and [8], and specifically for industrial