Digitized by the Internet Archive in 2011 with funding from Boston Library Consortium Member Libraries

Massachusetts Institute of Technology
Department of Economics
Working Paper Series

BIAS CORRECTED INSTRUMENTAL VARIABLES ESTIMATION FOR DYNAMIC PANEL MODELS WITH FIXED EFFECTS

Jinyong Hahn
Jerry Hausman
Guido Kuersteiner

Working Paper 01-24
June 2001

Room E52-251
50 Memorial Drive
Cambridge, MA 02142

This paper can be downloaded without charge from the Social Science Research Network Paper Collection.


Bias Corrected Instrumental Variables Estimation for Dynamic Panel Models with Fixed Effects

Jinyong Hahn (Brown University)
Jerry Hausman (MIT)
Guido Kuersteiner (MIT)

June, 2001

Acknowledgment: We are grateful to Yu Hu for exceptional research assistance. Helpful comments by Guido Imbens, Whitney Newey, James Powell, and participants of workshops at Arizona State University, Berkeley, Harvard/MIT, Maryland, Triangle, UCL, UCLA, UCSD are appreciated.

Abstract

This paper analyzes the second order bias of instrumental variables estimators for a dynamic panel model with fixed effects. Three different methods of second order bias correction are considered. Simulation experiments show that these methods perform well if the model does not have a root near unity but break down near the unit circle. To remedy the problem near the unit root a weak instrument approximation is used. We show that an estimator based on long differencing the model is approximately achieving the minimal bias in a certain class of instrumental variables (IV) estimators. Simulation experiments document the performance of the proposed procedure in finite samples.

Keywords: dynamic panel, bias correction, second order, unit root, weak

instrument

1 Introduction

We are concerned with estimation of the dynamic panel model with fixed effects. Under large n, fixed T asymptotics it is well known from Nickell (1981) that the standard maximum likelihood estimator suffers from an incidental parameter problem leading to inconsistency. In order to avoid this problem the literature has focused on instrumental variables estimation (GMM) applied to first differences. Examples include Anderson and Hsiao (1982), Holtz-Eakin, Newey, and Rosen (1988), and Arellano and Bond (1991). Ahn and Schmidt (1995), Hahn (1997), and Blundell and Bond (1998) considered further moment restrictions. Comparisons of information contents of varieties of moment restrictions made by Ahn and Schmidt (1995) and Hahn (1999) suggest that, unless stationarity of the initial level $y_{i0}$ is somehow exploited as in Blundell and Bond (1998), the orthogonality of lagged levels with first differences provides the largest source of information.

Unfortunately, the standard GMM estimator obtained after first differencing has been found to suffer from substantial finite sample biases. See Alonso-Borrego and Arellano (1996). Motivated by this problem, modifications of likelihood based estimators emerged in the literature. See Kiviet (1995), Lancaster (1997), Hahn and Kuersteiner (2000). The likelihood based estimators do reduce finite sample bias compared to the standard maximum likelihood estimator, but the remaining bias is still substantial for T relatively small.

In this paper, we attempt to eliminate the finite sample bias of the standard GMM estimator obtained after first differencing. We view the standard GMM estimator as a minimum distance estimator that combines T - 1 instrumental variable estimators (2SLS) applied to first differences. This view has been adopted by Chamberlain (1984) and Griliches and Hausman (1986). It has been noted for quite a while that IV estimators can be quite biased in finite sample. See Nagar (1959), Mariano and Sawa (1972), Rothenberg (1983), Bekker (1994), Donald and Newey (1998) and Kuersteiner (2000). If the ingredients of the minimum distance estimator are all biased, it is natural to expect such bias in the resultant minimum distance estimator, or equivalently, GMM. We propose to eliminate the bias of the GMM estimator by replacing all the ingredients with Nagar type bias corrected instrumental variable estimators. To our knowledge, the idea of applying a minimum distance estimator to bias corrected instrumental variables estimators is new in the literature.

We consider a second order approach to the bias of the GMM estimator using the formula contained in Hahn and Hausman (2000). We find that the standard GMM estimator suffers from significant bias. The bias arises from two primary sources: the correlation of the structural equation error with the reduced form error and the low explanatory power of the instruments. We attempt to solve these problems by using the "long difference technique" of Griliches and Hausman (1986). Griliches and Hausman noted that bias is reduced when long differences are used in the errors in variable problem, and a similar result works here with the second order bias. Long differences also increase the explanatory power of the instruments, which reduces the finite sample bias and also decreases the MSE of the estimator. To increase further the explanatory power of the instruments, we use the technique of using estimated residuals as additional instruments, a technique introduced in the simultaneous equations model by Hausman, Newey, and Taylor (1987) and used in the dynamic panel data context by Ahn and Schmidt (1995). Monte Carlo results demonstrate that the long difference estimator performs quite well, even for high positive values of the lagged variable coefficient where previous estimators are badly biased.

However, the second order bias calculations do not predict well the performance of the estimator for these high values of the coefficient. Simulation evidence shows that our approximations do not work well near the unit circle where the model suffers from a near non-identification problem. In order to analyze the bias of standard GMM procedures under these circumstances we consider a local to non-identification asymptotic approximation.

The alternative asymptotic approximation of Staiger and Stock (1997) and Stock and Wright (2000) is based on letting the correlation between instruments and regressors decrease at a prescribed rate of the sample size. In their work, it is assumed that the number of instruments is held fixed as the sample size increases. Their limit distribution is nonstandard and in special cases corresponds to exact small sample distributions such as the one obtained by Richardson (1968) for the bivariate simultaneous equations model. This approach is related to the work by Phillips (1989) and Choi and Phillips (1992) on the asymptotics of 2SLS in the partially identified case. Dufour (1997), Wang and Zivot (1998) and Nelson, Startz and Zivot (1998) analyze valid inference and tests in the presence of weak instruments. The associated bias and mean squared error of 2SLS under weak instrument assumptions was obtained by Chao and Swanson (2000).

In this paper we use the weak instrument asymptotic approximations to analyze 2SLS for the dynamic panel model. We analyze the impact of stationarity assumptions on the nonstandard limit distribution. Here we let the autoregressive parameter tend to unity in a similar way as in the near unit root literature. Nevertheless we are not considering time series cases since in our approximation the number of time periods T is held constant while the number of cross-sectional observations n tends to infinity.

Our limiting distribution for the GMM estimator shows that only moment conditions involving initial conditions are asymptotically relevant. We define a class of estimators based on linear combinations of asymptotically relevant moment conditions and show that a bias minimal estimator within this class can approximately be based on taking long differences of the dynamic panel model. In general, it turns out that under near non-identification asymptotics the optimal procedures of Alvarez and Arellano (1998), Arellano and Bond (1991), Ahn and Schmidt (1995, 1997) are suboptimal from a bias point of view and inference optimally should be based on a smaller than the full set of moment conditions. We show that a bias minimal estimator can be obtained by using a particular linear combination of the original moment conditions. We further derive the form of the optimal linear combination.

2 Review of the Bias of GMM Estimator

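Consider the usual dynamic panel model with fixed effects:

$$y_{it} = \alpha_i + \beta y_{i,t-1} + \varepsilon_{it}, \qquad i = 1,\ldots,n;\ t = 1,\ldots,T \tag{1}$$

It has been common in the literature to consider the case where n is large and T is small. The usual GMM estimator is based on the first difference form of the model

$$y_{it} - y_{i,t-1} = \beta\,(y_{i,t-1} - y_{i,t-2}) + (\varepsilon_{it} - \varepsilon_{i,t-1})$$

where the instruments are based on the orthogonality

$$E\left[y_{i,s}\,(\varepsilon_{it} - \varepsilon_{i,t-1})\right] = 0, \qquad s = 0,\ldots,t-2.$$

Instead, we consider a version of the GMM estimator developed by Arellano and Bover (1995), which simplifies the characterization of the "weight matrix" in GMM estimation. We define the innovation $u_{it} = \alpha_i + \varepsilon_{it}$. Arellano and Bover (1995) eliminate the fixed effect $\alpha_i$ in (1) by applying Helmert's transformation

$$u^*_{it} = \sqrt{\frac{T-t}{T-t+1}}\left[u_{it} - \frac{1}{T-t}\left(u_{i,t+1} + \cdots + u_{iT}\right)\right], \qquad t = 1,\ldots,T-1$$

instead of first differencing.

[Footnote: Arellano and Bover (1995) note that the efficiency of the resultant GMM estimator is not affected whether]

The transformation produces

$$y^*_{it} = \beta x^*_{it} + \varepsilon^*_{it}, \qquad t = 1,\ldots,T-1$$

where $x^*_{it} = y^*_{i,t-1}$. Let $z_{it} = (y_{i0},\ldots,y_{i,t-1})'$. Our moment restriction is summarized by

$$E\left[z_{it}\,\varepsilon^*_{it}\right] = 0, \qquad t = 1,\ldots,T-1.$$

It can be shown that, with the homoscedasticity assumption on $\varepsilon_{it}$, the optimal "weight matrix" is proportional to a block-diagonal matrix, with typical diagonal block equal to $E[z_{it}z_{it}']$. Therefore, the optimal GMM estimator is equal to

$$b_{GMM} = \frac{\sum_{t=1}^{T-1} x_t^{*\prime} P_t\, y_t^*}{\sum_{t=1}^{T-1} x_t^{*\prime} P_t\, x_t^*} \tag{2}$$

where $x_t^* = (x^*_{1t},\ldots,x^*_{nt})'$, $y_t^* = (y^*_{1t},\ldots,y^*_{nt})'$, $Z_t = (z_{1t},\ldots,z_{nt})'$, and $P_t = Z_t(Z_t'Z_t)^{-1}Z_t'$. Now, let $b_{2SLS,t}$ denote the 2SLS of $y_t^*$ on $x_t^*$:

$$b_{2SLS,t} = \frac{x_t^{*\prime} P_t\, y_t^*}{x_t^{*\prime} P_t\, x_t^*}, \qquad t = 1,\ldots,T-1.$$

If $\varepsilon_{it}$ are i.i.d. across t, then under the standard (first order) asymptotics where T is fixed and n grows to infinity, it can be shown that

$$\sqrt{n}\left(b_{2SLS,1} - \beta,\ \ldots,\ b_{2SLS,T-1} - \beta\right)' \to N(0, \Psi),$$

where $\Psi$ is a diagonal matrix with the t-th diagonal element equal to $\mathrm{Var}(\varepsilon_{it}) / \left(\mathrm{plim}\ n^{-1} x_t^{*\prime} P_t x_t^*\right)$. Therefore, we may consider a minimum distance estimator, which solves

$$\min_b \begin{pmatrix} b_{2SLS,1} - b \\ \vdots \\ b_{2SLS,T-1} - b \end{pmatrix}' \begin{pmatrix} (x_1^{*\prime} P_1 x_1^*)^{-1} & & \\ & \ddots & \\ & & (x_{T-1}^{*\prime} P_{T-1} x_{T-1}^*)^{-1} \end{pmatrix}^{-1} \begin{pmatrix} b_{2SLS,1} - b \\ \vdots \\ b_{2SLS,T-1} - b \end{pmatrix}$$

The resultant minimum distance estimator is numerically identical to the GMM estimator in (2):

$$b_{GMM} = \frac{\sum_{t=1}^{T-1} x_t^{*\prime} P_t\, x_t^* \cdot b_{2SLS,t}}{\sum_{t=1}^{T-1} x_t^{*\prime} P_t\, x_t^*} \tag{3}$$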
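The numerical identity between (2) and (3) can be checked on simulated data. The following is a minimal sketch, not the authors' code: the data generator `simulate_panel`, the function names, and the use of a least-squares solve in place of an explicit projection matrix are all assumptions made for illustration.

```python
import numpy as np

def forward_orthogonal_deviations(u):
    """Helmert transformation of an (n, T) array; returns an (n, T-1) array."""
    n, T = u.shape
    out = np.empty((n, T - 1))
    for t in range(T - 1):                      # column t holds period t+1
        remaining = T - (t + 1)                 # number of later periods
        scale = np.sqrt(remaining / (remaining + 1.0))
        out[:, t] = scale * (u[:, t] - u[:, t + 1:].mean(axis=1))
    return out

def simulate_panel(n, T, beta, rng):
    """Hypothetical generator: stationary AR(1) panel with fixed effects."""
    alpha = rng.normal(size=n)
    y = np.empty((n, T + 1))                    # columns hold y_i0, ..., y_iT
    y[:, 0] = alpha / (1.0 - beta) + rng.normal(size=n) / np.sqrt(1.0 - beta**2)
    for t in range(1, T + 1):
        y[:, t] = alpha + beta * y[:, t - 1] + rng.normal(size=n)
    return y

def gmm_via_2sls(y):
    """Return (b_GMM as in (2), b_GMM as in (3), per-period 2SLS estimates)."""
    T = y.shape[1] - 1
    y_star = forward_orthogonal_deviations(y[:, 1:])    # y*_t, t = 1..T-1
    x_star = forward_orthogonal_deviations(y[:, :-1])   # x*_t = y*_{t-1}
    xPy, xPx = [], []
    for t in range(1, T):
        Z = y[:, :t]                                    # z_it = (y_i0, ..., y_i,t-1)
        xs, ys = x_star[:, t - 1], y_star[:, t - 1]
        Px = Z @ np.linalg.lstsq(Z, xs, rcond=None)[0]  # P_t x*_t
        xPy.append(Px @ ys)                             # x*' P_t y*
        xPx.append(Px @ xs)                             # x*' P_t x*
    xPy, xPx = np.array(xPy), np.array(xPx)
    b_2sls = xPy / xPx
    b_eq2 = xPy.sum() / xPx.sum()                       # equation (2)
    b_eq3 = (xPx * b_2sls).sum() / xPx.sum()            # equation (3)
    return b_eq2, b_eq3, b_2sls
```

Calling `gmm_via_2sls(simulate_panel(200, 5, 0.5, np.random.default_rng(0)))` returns two pooled estimates that agree up to floating point error, together with the T - 1 period-specific 2SLS estimates being averaged.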

Therefore, the GMM estimator $b_{GMM}$ may be understood as a linear combination of the 2SLS estimators $b_{2SLS,1},\ldots,b_{2SLS,T-1}$. It has long been known that the 2SLS may be subject to substantial finite sample bias. See Nagar (1959), Rothenberg (1983), Bekker (1994), and Donald and Newey (1998) for related discussion. It is therefore natural to conjecture that a linear combination of the 2SLS may be subject to quite substantial finite sample bias.

3 Bias Correction using Alternative Asymptotics

In this section, we consider the usual dynamic panel model with fixed effects (1) using the alternative asymptotics where n and T grow to infinity at the same rate. Such approximation was originally developed by Bekker (1994), and was adopted by Alvarez and Arellano (1998) and Hahn and Kuersteiner (2000) in the dynamic panel context. We assume

Condition 1 $\varepsilon_{it} \sim$ i.i.d. $N(0, \sigma^2)$ over $i$ and $t$.

We also assume stationarity on $y_{i0}$ and normality on $\alpha_i$:

Condition 2 $y_{i0} \mid \alpha_i \sim N\left(\frac{\alpha_i}{1-\beta},\ \frac{\sigma^2}{1-\beta^2}\right)$ and $\alpha_i \sim N(0, \sigma_\alpha^2)$.

[Footnote to Condition 2: This condition allows us to use lots of intermediate results in Alvarez and Arellano (1998). Our results are expected to be robust to violation of this condition.]

In order to guarantee that $Z_t'Z_t$ is nonsingular, we will assume that

Condition 3 $T/n \to \rho$, where $0 < \rho < 1$.

Alvarez and Arellano (1998) show that, under Conditions 1-3,

$$\sqrt{nT}\left(b_{GMM} - \left(\beta - \frac{1}{n}(1+\beta)\right)\right) \to N(0,\ 1-\beta^2), \tag{4}$$

where $b_{GMM}$ is defined in (2) and (3). By examining the asymptotic distribution (4) under such alternative asymptotic approximation where n and T grow to infinity at the same rate, we can develop a bias-corrected estimator. This bias-corrected estimator is given by

$$\tilde b_{GMM} = \frac{n}{n-1}\left(b_{GMM} + \frac{1}{n}\right). \tag{5}$$

Combining (4) and (5), we can easily obtain:

Theorem 1 Suppose that Conditions 1-3 are satisfied. Then, $\sqrt{nT}\,(\tilde b_{GMM} - \beta) \to N(0,\ 1-\beta^2)$.
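A short numerical illustration of why a correction of this kind removes the centering term in (4): if the GMM estimator is centered at $\beta - (1+\beta)/n$, then solving that expression for $\beta$ gives a correction that maps the center back to $\beta$ exactly. The sketch below assumes this particular algebraic form of the correction; the function name is hypothetical.

```python
def bias_corrected_gmm(b_gmm, n):
    """Invert b = beta - (1 + beta)/n for beta (assumed form of the correction)."""
    return (n * b_gmm + 1.0) / (n - 1.0)

# The asymptotic center of b_GMM implied by (4), for illustrative values.
beta, n = 0.9, 100
center = beta - (1.0 + beta) / n
corrected = bias_corrected_gmm(center, n)
```

Here `corrected` equals `beta` up to rounding, because $n\left(\beta - \frac{1+\beta}{n}\right) + 1 = (n-1)\beta$.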

Hahn and Kuersteiner (2000) establish by a Hajek-type convolution theorem that $N(0,\ 1-\beta^2)$ is the minimal asymptotic distribution. As such, the bias corrected GMM is efficient. Although the bias corrected GMM estimator $\tilde b_{GMM}$ does have a desirable property under the alternative asymptotics, it would not be easy to generalize the development leading to (5) to the model involving other strictly exogenous variables. Such a generalization would require the characterization of the asymptotic distribution of the standard GMM estimator under the alternative asymptotics, which may not be trivial. We therefore consider eliminating biases in $b_{2SLS,t}$ instead. An estimator that removes the higher order bias of $b_{2SLS,t}$ is the Nagar type estimator

$$b_{Nagar,t} = \frac{x_t^{*\prime} P_t\, y_t^* - \lambda_t\, x_t^{*\prime} M_t\, y_t^*}{x_t^{*\prime} P_t\, x_t^* - \lambda_t\, x_t^{*\prime} M_t\, x_t^*},$$

where $M_t = I - P_t$, $\lambda_t = \frac{K_t}{n - K_t}$, and $K_t$ denotes the number of instruments for the t-th equation. For example, we may use $\lambda_t = \frac{K_t - 2}{n - K_t + 2}$ as in Donald and Newey (1998). We may also use LIML for the t-th equation, in which case $\lambda_t$ would be estimated by the usual minimum eigenvalue search.
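The Nagar type estimator for a single period can be sketched as follows. This is an illustrative implementation under the notation above, not the authors' code; the function name and the use of `numpy.linalg.lstsq` to form $P_t x_t^*$ are assumptions. Setting `lam = 0` recovers ordinary 2SLS.

```python
import numpy as np

def nagar_type_iv(x, y, Z, lam):
    """Nagar type estimator for one equation.

    x, y : (n,) arrays (transformed regressor and outcome)
    Z    : (n, K) instrument matrix
    lam  : scalar, e.g. K / (n - K); lam = 0 gives 2SLS
    """
    Px = Z @ np.linalg.lstsq(Z, x, rcond=None)[0]   # P x
    Mx = x - Px                                     # M x, with M = I - P
    # P and M are symmetric, so x'Py = (Px)'y and x'My = (Mx)'y.
    numerator = Px @ y - lam * (Mx @ y)
    denominator = Px @ x - lam * (Mx @ x)
    return numerator / denominator
```

A period-by-period loop over `nagar_type_iv`, with `Z` growing from one to T - 1 columns, yields the ingredients that the minimum distance step then combines.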

We

now

examine

properties ofthe correspondingminimum

distance estimator.One

possibleweight

matrix

forthisproblem

is givenby

{xl'Prxl-Xix\'Mrxl)

-1

{^T-l^T-l^T-l

~

^T-l^T-l-^^T-i^T-l)

'.Alvarezand Arellano (1998) onlyrequire

<

p<

oo.We

requirep<

1 toguarantee that Z'lZtissingularforWith

this weight matrix, it canbe

shown

that theminimum

distance estimator is givenby

One

possibleway

toexamine

the finitesample

property of thenew

estimator is to use thealternative asymptotics:

Theorem

2Suppose

that Conditions 1-3 are satisfied. Also suppose thatn

and

T

grow

to infinity at thesame

rate. Then,\/nT

{bj^agar—

P) —*N

(0,1—

/3 ).

Proof.

Lemmas

10,and

11 inAppendix

A

alongwith

Lemma

2 ofAlvarezand

Arellano(1998) establish that 7

<Pte*t

'-^^t'Mts: -> AT 0,VnT^K

'''

n-Kt

' ^V

V

' 1-

/3^and

a^

-^

y

(x'Ptxt

-

-^i-x/Mtx;

, i2'from which

the conclusion follows.In

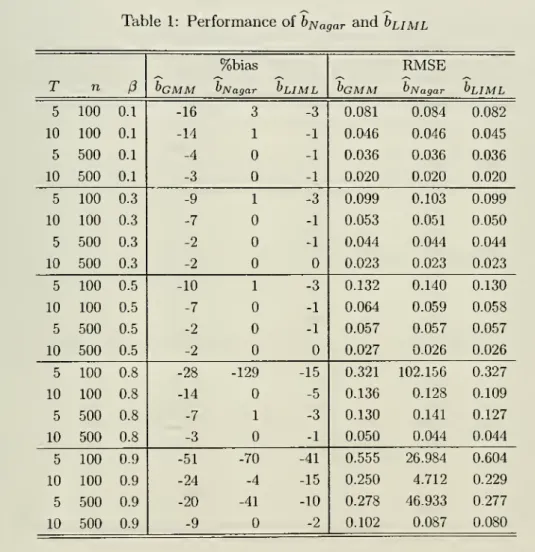

Table

1,we summarized

finitesample

properties of h^agarand

buML

approximated

by

10000

Monte

Carlo runs.^ Here,buML

is the estimatorwhere

As in (6) are replacedby

the corresponding "eigenvalues". In general,we

find that bj^agarand buj^i

successfullyremove

bias unless /? is close to

one

and

thesample

size is small.4

Bias Correction

using

Higher

Order

Expansions

In the previous section, we explained the bias of the GMM estimator as a result of the biases of the 2SLS estimators. In this section, we consider elimination of the bias by adopting the second order Taylor type approximation. This perspective has been adopted by Nagar (1959) and Rothenberg (1983).

For this purpose we first analyze the second order bias of a general minimization estimator $b$ of a single parameter $\beta \in \mathbb{R}$ defined by

$$b = \arg\min_{c \in C} Q_n(c) \tag{7}$$

for some $C \subset \mathbb{R}$. The score for the minimization problem is denoted by $S_n(c) = \partial Q_n(c)/\partial c$. The criterion function is assumed to be of the form $Q_n(c) = g(c)'\,G(c)^{-1} g(c)$ where $g(c)$ and $G(c)$ are defined in Condition 7. The criterion function depends on primitive functions $\delta(w_i, c)$ and $\psi(w_i, c)$ mapping $\mathbb{R}^k \times \mathbb{R}$ into $\mathbb{R}^d$ for $d \ge 1$, where $w_i$ are i.i.d. observations. We assume that $E[\delta(w_i, \beta)] = 0$. We impose the following additional conditions on $w_i$, $\delta$, and $\psi$.

Condition 4 The random variables $w_i$ are i.i.d.

Condition 5 The functions $\delta(w, c)$ and $\psi(w, c)$ are three times differentiable in $c$ for $c \in C$ where $C \subset \mathbb{R}$ is a compact set such that $\beta \in \mathrm{int}\, C$. Assume that $\delta(w_i, c)$ and $\psi(w_i, c)$ satisfy a Lipschitz condition $\|\delta(w_i, c_1) - \delta(w_i, c_2)\| \le M_\delta(w_i)\,|c_1 - c_2|$ for some function $M_\delta(\cdot): \mathbb{R}^k \to \mathbb{R}$ and $c_1, c_2 \in C$, with the same statement holding for $\psi$. The functions $M_\delta(\cdot)$ and $M_\psi(\cdot)$ satisfy $E[M_\delta(w_i)] < \infty$ and $E|M_\psi(w_i)|^2 < \infty$.

Condition 6 Let $\delta_j(w_i, c) = \partial^j \delta(w_i, c)/\partial c^j$, $\Psi(w_i, c) = \psi(w_i, c)\,\psi(w_i, c)'$ and $\Psi_j(w_i, c) = \partial^j \Psi(w_i, c)/\partial c^j$. Then, $\lambda_j(c) = E[\delta_j(w_i, c)]$ and $\Lambda_j(c) = E[\Psi_j(w_i, c)]$ all exist and are finite for $j = 0,\ldots,3$. For simplicity, we use the notation $\lambda_j = \lambda_j(\beta)$, $\Lambda_j = \Lambda_j(\beta)$, $\lambda(c) = \lambda_0(c)$ and $\Lambda(c) = \Lambda_0(c)$.

Condition 7 Let $g(c) = \frac{1}{n}\sum_{i=1}^n \delta(w_i, c)$, $g_j(c) = \frac{1}{n}\sum_{i=1}^n \delta_j(w_i, c)$, $G(c) = \frac{1}{n}\sum_{i=1}^n \psi(w_i, c)\,\psi(w_i, c)'$ and $G_j(c) = \frac{1}{n}\sum_{i=1}^n \Psi_j(w_i, c)$. Then $g(c) \to E[\delta(w_i, c)]$, $g_j(c) \to E[\delta_j(w_i, c)]$, $G(c) \to E[\Psi(w_i, c)]$, and $G_j(c) \to E[\Psi_j(w_i, c)]$ in probability for all $c \in C$.

Our asymptotic approximation of the second order bias of $b$ is based on an approximate estimator $\tilde b$ such that $b - \tilde b = o_p(n^{-1})$. The approximate bias of $b$ is then defined as $E[\tilde b] - \beta$ while the original estimator $b$ need not necessarily possess moments of any order. In order to justify our approximation we need to establish that $b$ is $\sqrt{n}$-consistent and that $S_n(b) = 0$ with probability tending to one. For this purpose we introduce the following additional conditions.

Condition 8 (i) There exists some finite $0 < M < \infty$ such that the eigenvalues of $E[\Psi(w_i, c)]$ are contained in the compact interval $[M^{-1}, M]$ for all $c \in C$; (ii) the vector $E[\delta(w_i, c)] = 0$ if and only if $c = \beta$; (iii) $\lambda_1 \ne 0$.

Condition 9

There existssome

r/>

such thatE

Ms

{w^) ^suPcecll^K'C)

|2+T7<

00,and

E

suPcGcllV'(^«i,c)f+''<

00,E

M^

(u'i)^2+7,<

00,<

$\infty$.

Condition 8 is an identification condition that guarantees the existence of a unique interior minimum of the limiting criterion function. Condition 9 corresponds to Assumption B of Andrews (1994) and is used to establish a stochastic equicontinuity property of the criterion function.

Lemma 1 Under Conditions 4-9, $b$ defined in (7) satisfies $\sqrt{n}\,(b - \beta) = O_p(1)$ and $S_n(b) = 0$ with probability tending to 1.

Proof. See Appendix B.

Based on Lemma 1 the first order condition for (7) can be characterized by

$$0 = 2\,g_1(b)'\,G(b)^{-1} g(b) - g(b)'\,G(b)^{-1} G_1(b)\, G(b)^{-1} g(b). \tag{8}$$

A second order Taylor expansion of (8) around $\beta$ leads to a representation of $b - \beta$ up to terms of order $o_p(n^{-1})$. In Appendix B, it is shown

thatVS(6 -

ffl=

-i*

+

-L (-ir

+

i*=

-

i;*')

+

Op(-1=)

(9)

(See Definition 2 in Appendix B for the definition of $\Psi$, $\Upsilon$, $\Phi$, $\Xi$, and $\Gamma$.) Ignoring the $o_p(1/\sqrt{n})$ term in (9), and taking expectations, we obtain the "approximate mean" of $\sqrt{n}\,(b - \beta)$. We present the second order bias of $b$ in the next Theorem.

Theorem 3 Under Conditions 4-9, the second order bias of $b$ is equal to

(10)

where

E[T]

=

2tTace{A''E

and

E[^=]

=

SX[A-^E

E[^]

=

0, f\cf"1\6i^

j-

2X[A-^E

[^p^i>',A-H^]-

trace(A-^AjA-^i?

[6i6'^) ,A-^Ai

-

4XjA'^E

[6^X\A-'^^P,^'^A^^Ai

and

-SX'^A-^E

[6i6'^A-^AiA-^Ai

+

AX'^A'^E

[<5,<5',]A^^Aa,

E[<l>^]=iX[A-^E[6^6'^A-'X^,

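Proof. See Appendix B.

Remark 1 For the particular case where $\psi_i = \delta_i$, i.e. when $b$ is a CUE, the bias formula (10) exactly coincides with Newey and Smith's (2000).

We now apply these general results to the GMM estimator of the dynamic panel model. The GMM estimator $b_{GMM}$ can be understood to be a solution to the minimization problem

$$\min_c \left(\frac{1}{n}\sum_{i=1}^n m_i(c)\right)' \left(\frac{1}{n}\sum_{i=1}^n \begin{bmatrix} z_{i1}z_{i1}' & & \\ & \ddots & \\ & & z_{i,T-1}z_{i,T-1}' \end{bmatrix}\right)^{-1} \left(\frac{1}{n}\sum_{i=1}^n m_i(c)\right)$$

for $c \in C$ where $C$ is some closed interval on the real line containing the true parameter value and

$$m_i(c) = \begin{bmatrix} z_{i1}\,(y^*_{i1} - c\, x^*_{i1}) \\ \vdots \\ z_{i,T-1}\,(y^*_{i,T-1} - c\, x^*_{i,T-1}) \end{bmatrix}.$$

We now characterize the finite sample bias of the GMM estimator $b_{GMM}$ of the dynamic panel model.

Theorem 4 Under Conditions 1-3 the second order bias of $b_{GMM}$ is equal to

$$\frac{B_1 + B_2 + B_3}{n} + o\left(\frac{1}{n}\right) \tag{11}$$

where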

zx sBi

=

Tr^

ELY

trace((rr)''

T-")

B2

=

-2Tf

zti

EL7

rr' (rr)-'

r--

(rf

)-ir

^3

=

Tr^

^^j-/ ^fr^i

rr' (rr)-'

-B3.1 (t,s)(rf

)-irr

.andTi

=

Er=i'rr'(rfr^rr.

Proof. See Appendix C.

In Table 2, we compare the actual performance of $b_{GMM}$ and the prediction of its bias based on Theorem 4. Table 2 tabulates the actual bias of the estimator approximated by 10000 Monte Carlo runs, and compares it with the second order bias based on the formula (11). It is clear that the second order theory does a reasonably good job except when $\beta$ is close to the unit circle and n is small.

Theorem 4 suggests a natural way of eliminating the bias. Suppose that $\hat B_1$, $\hat B_2$, $\hat B_3$ are $\sqrt{n}$-consistent estimators of $B_1$, $B_2$, $B_3$. Then it is easy to see that

$$b_{BC1} = b_{GMM} - \frac{1}{n}\left(\hat B_1 + \hat B_2 + \hat B_3\right) \tag{12}$$

is first order equivalent to $b_{GMM}$, and has second order bias equal to zero. Define

n-'

HU

^rt^tM""

=

ri-^EIli

^rtKv

^\T

=

«"'

ELi

<t^l^u^is and

n

i=l

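where $\hat\varepsilon^*_{it} = y^*_{it} - x^*_{it}\, b_{GMM}$. Let $\hat B_1$, $\hat B_2$ and $\hat B_3$ be defined by replacing the population quantities $\Gamma$ and $B_{3,1}(t,s)$ by their sample counterparts $\hat\Gamma$ and $\hat B_{3,1}(t,s)$ in $B_1$, $B_2$ and $B_3$. Then the $\hat B$s will satisfy the $\sqrt{n}$-consistency requirement, and hence, the estimator (12) will be first order equivalent to $b_{GMM}$ and will have zero second order bias. Because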

thesummand

E

[z^tx*i]'E

\z,t,z\^" 6*iZu\'^K^^Zisz[^E[z,sz[,]~E

[zisX*,]in the

numerator

ofi?3 is equal to zero for s<

i,we

may

instead considerbBC2

=

bcMM

[Bi

+

B2

+

B3

where

lis

=

tr^

eL"/

eI='

f

r' (f

f

) "' ^3,1(*,s)(f

r

) ''f

Second order asymptotic theory predicts approximately that $b_{BC2}$ would be relatively free of bias. We examined whether such prediction is reasonably accurate in finite sample by 5000 Monte Carlo runs. Table 3 summarizes the properties of $b_{BC2}$. We have seen in Table 2 that the second order theory is reasonably accurate unless $\beta$ is close to one. It is therefore sensible to conjecture that $b_{BC2}$ would have a reasonable finite sample bias property as long as $\beta$ is not too close to one. Such a conjecture is verified in Table 3.

5 Long Difference Specification: Finite Iteration

In previous sections, we noted that even the second order asymptotics "fails" to be a good approximation around $\beta \approx 1$. This phenomenon can be explained by the "weak instrument" problem. See Staiger and Stock (1997). Blundell and Bond (1998) argued that the weak instrument problem can be alleviated by assuming stationarity on the initial observation $y_{i0}$. Such stationarity condition may or may not be appropriate for particular applications. Further, stationarity assumption turns out to be a predominant source of information around $\beta \approx 1$ as noted by Hahn (1999). We therefore turn to some other method to overcome the weak instrument problem around the unit circle avoiding the stationarity assumption. We argue that some of the difficulties of inference around the unit circle would be alleviated by taking a long difference. To be specific, we focus on a single equation based on the long difference

$$y_{iT} - y_{i1} = \beta\,(y_{i,T-1} - y_{i0}) + (\varepsilon_{iT} - \varepsilon_{i1}) \tag{14}$$

It is easy to see that the initial observation $y_{i0}$ would serve as a valid instrument. Using similar intuition as in Hausman and Taylor (1983) or Ahn and Schmidt (1995), we can see that $y_{i,T-1} - \beta y_{i,T-2},\ \ldots,\ y_{i2} - \beta y_{i1}$ would be valid instruments as well.

5.1 Intuition

In Hahn-Hausman (HH) (1999) we found that the bias of 2SLS (GMM) depends on 4 factors: "Explained" variance of the first stage reduced form equation, covariance between the stochastic disturbance of the structural equation and the reduced form equation, the number of instruments, and sample size:

$$\text{bias} \approx \frac{1}{n}\cdot\frac{(\text{number of instruments}) \times (\text{"covariance"})}{\text{"Explained" variance of the first stage reduced form equation}}$$

[Footnote: The difference of Monte Carlo runs here induced some minor numerical difference (in properties of $b_{GMM}$)]

Similarly, the Donald-Newey (DN) (1999) MSE formula depends on the same 4 factors. We now consider first differences (FD) and long differences (LD) to see why LD does so much better in our Monte Carlo experiments.

Assume that $T = 4$. The first difference setup is:

$$y_4 - y_3 = \beta\,(y_3 - y_2) + \varepsilon_4 - \varepsilon_3 \tag{15}$$

For the RHS variables it uses the instrument equation:

$$y_3 - y_2 = (\beta - 1)\,y_2 + \alpha + \varepsilon_3$$

Now calculate the $R^2$ for equation (15) using the Ahn-Schmidt (AS) moments under "ideal conditions" where you know $\beta$ in the sense that the nonlinear restrictions become linear restrictions: We would then use $(y_2,\ y_1,\ y_0,\ \alpha + \varepsilon_1,\ \alpha + \varepsilon_2)$ as instruments. Assuming stationarity for symbols, but not using it as additional moment information, we can write

$$y_0 = \frac{\alpha}{1-\beta} + \xi_0,$$

where $\xi_0 \sim \left(0,\ \frac{\sigma^2}{1-\beta^2}\right)$. It can be shown that the covariance between the structure error and the first stage error is $-\sigma^2$, and the "explained variance" in the first stage is equal to $\sigma^2\,\frac{1-\beta}{1+\beta}$. Therefore, the ratio that determines the bias of 2SLS is equal to

$$\frac{-\sigma^2}{\sigma^2\,\frac{1-\beta}{1+\beta}} = -\frac{1+\beta}{1-\beta},$$

which is equal to $-19$ for $\beta = .9$. For $n = 100$, this implies the percentage bias of

$$\frac{\text{Number of Instruments}}{\text{Sample Size}} \cdot \frac{-19}{\beta} \times 100 = \frac{5}{100}\cdot\frac{-19}{0.9} \times 100 = -105.56$$

We now turn to the LD setup:

$$y_4 - y_1 = \beta\,(y_3 - y_0) + \varepsilon_4 - \varepsilon_1$$

It can be shown that the covariance between the first stage and second stage errors is $-\beta^2\sigma^2$, and the "explained variance" in the first stage is given by

$$-\sigma^2\,\frac{(2\beta^6 - 4\beta^4 - 2\beta^5 + 4\beta^2 + 4\beta - 2\beta^3 + 6)\,\sigma_\alpha^2 + \beta^6 - \beta^4 + 2 - 2\beta^3}{(-2\beta - 3 + \beta^2)\,\sigma_\alpha^2 - 1 + \beta^2},$$

where $\sigma_\alpha^2$ is measured relative to $\sigma^2$, that is, as the ratio $\sigma_\alpha^2/\sigma^2$. Therefore, the ratio that determines the bias is equal to

$$\beta^2\,\frac{(-2\beta - 3 + \beta^2)\,\sigma_\alpha^2 - 1 + \beta^2}{(2\beta^6 - 4\beta^4 - 2\beta^5 + 4\beta^2 + 4\beta - 2\beta^3 + 6)\,\sigma_\alpha^2 + \beta^6 - \beta^4 + 2 - 2\beta^3},$$

which is equal to

$$-.37408 + \frac{2.5703 \times 10^{-4}}{\sigma_\alpha^2 + 4.8306 \times 10^{-2}}$$

for $\beta = .9$. Note that the maximum value that this ratio can take in absolute terms is $-.37408$, which is much smaller than $-19$. We therefore conclude that the long difference increases $R^2$ but decreases the covariance. Further, the number of instruments is smaller in the long difference specification so we should expect even smaller bias. Thus, all of the factors in the HH equation, except sample size, cause the LD estimator to have smaller bias.
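The arithmetic in the first difference and long difference comparison can be reproduced in a few lines. This is a sketch under stated assumptions: the polynomial exponents in `ld_ratio` are a reading of the extracted formula that was chosen because it reproduces the reported constants (-.37408 and 4.8306 x 10^-2) at $\beta = .9$; the function names are hypothetical, and `s2a` stands for the ratio $\sigma_\alpha^2/\sigma^2$.

```python
def fd_ratio(beta):
    """Covariance / explained variance for first differences: -(1+beta)/(1-beta)."""
    return -(1.0 + beta) / (1.0 - beta)

def ld_ratio(beta, s2a):
    """Covariance / explained variance for long differences (T = 4).

    The exponents below are an assumed reconstruction of the garbled source.
    """
    num = beta**2 * ((-2 * beta - 3 + beta**2) * s2a - 1 + beta**2)
    slope = (2 * beta**6 - 4 * beta**4 - 2 * beta**5
             + 4 * beta**2 + 4 * beta - 2 * beta**3 + 6)
    const = beta**6 - beta**4 + 2 - 2 * beta**3
    return num / (slope * s2a + const)

beta, n, n_instruments = 0.9, 100, 5
# Percentage bias of the first-difference 2SLS estimator implied by the ratio.
fd_percent_bias = n_instruments / n * fd_ratio(beta) / beta * 100
# Limit of the long-difference ratio as the variance ratio grows large.
ld_limit = ld_ratio(beta, 1e12)
```

With these inputs `fd_ratio(0.9)` is -19, `fd_percent_bias` rounds to -105.56, and `ld_ratio` stays between roughly -0.369 (at `s2a = 0`) and -0.374 (as `s2a` grows), which is the sense in which the long difference ratio is far smaller in magnitude than the first difference one.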

Monte

Carlo

For the longdifferencespecification,

we

can

usey^o aswell asthe "residuals" yir-i—

l3yiT-2-, -• -,yi2—f3yii as validinstruments.^

We

may

estimatep

by

applying2SLS

tothelongdifferenceequa-tion(14) usingyioasinstrument.

Wemaythenuse

(yio,yiT-i-b2SLsyiT-2,

,yi2-hsLsyn)^

instrument to the long difference equation (14) to estimate p. Call the estimator b2SLS,i-By

iterating this procedure,

we

can define 62SL5,2, i>2SLS,Si Similarly,we

may

first estimate /?by

the Arellanoand Bover

approach,and

use (yio,yiT-i-bGMMyiT-2,

--

,yi2-bcMMynj

asinstrument to the long difference equation (14) to estimate p. Call the estimator 6251,5,1•

By

iterating this procedure,

we

can

definebGMM,2, ^GMM.s,

-- Likewise,we

may

first estimateP

by

huML,

and

use [yio,yiT-i-

buMLyiT-2,

,yi2-

buMLynj

as instrument to the longdifference equation (14) to estimate p. Call the estimator

bijML^-

By

iterating this procedure,we

can

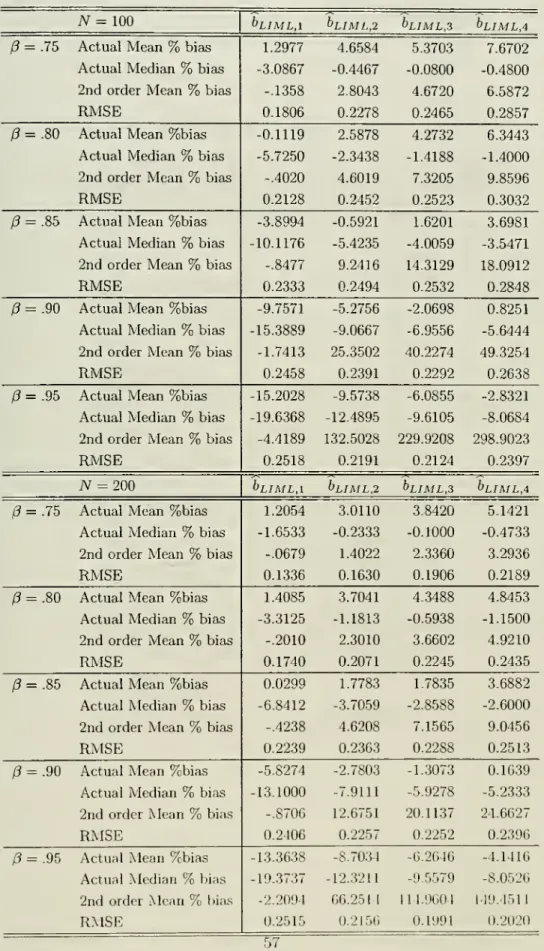

define bLiML,2, bLiML,3, --We

found

that such iteration of the long differenceesti-mator

works

quite well.We

implemented

these procedures forT

=

5,n

=

100,p

=

0.9and

(j^

=

o"^=

1.Our

finding with5000

monte

carlo runs issummarized

in Table 4. In general,we

found

that the iteration ofthe long difference estimatorworks

quite well.^We
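The iteration described above, starting from 2SLS with $y_{i0}$ as the only instrument, can be sketched as follows. This is an illustrative implementation, not the authors' code: the function names, the data generator, and the fixed number of iterations are assumptions, and the variants that start from the Arellano and Bover GMM estimate or from LIML are not shown.

```python
import numpy as np

def tsls(x, y, Z):
    """2SLS of y on a single regressor x with instrument matrix Z (no intercept)."""
    Px = Z @ np.linalg.lstsq(Z, x, rcond=None)[0]
    return (Px @ y) / (Px @ x)

def long_difference_iterated(y, n_iter=3):
    """Iterated long-difference 2SLS; y is (n, T+1) holding y_i0, ..., y_iT."""
    T = y.shape[1] - 1
    dy = y[:, T] - y[:, 1]               # y_iT - y_i1
    dx = y[:, T - 1] - y[:, 0]           # y_i,T-1 - y_i0
    b = tsls(dx, dy, y[:, [0]])          # initial step: y_i0 as the only instrument
    path = [b]
    for _ in range(n_iter):
        # "Residual" instruments y_is - b * y_i,s-1 for s = 2, ..., T-1.
        resid = y[:, 2:T] - b * y[:, 1:T - 1]
        Z = np.column_stack([y[:, 0], resid])
        b = tsls(dx, dy, Z)
        path.append(b)
    return path

def simulate_panel(n, T, beta, rng):
    """Hypothetical generator: stationary AR(1) panel with fixed effects."""
    alpha = rng.normal(size=n)
    y = np.empty((n, T + 1))
    y[:, 0] = alpha / (1.0 - beta) + rng.normal(size=n) / np.sqrt(1.0 - beta**2)
    for t in range(1, T + 1):
        y[:, t] = alpha + beta * y[:, t - 1] + rng.normal(size=n)
    return y
```

For example, `long_difference_iterated(simulate_panel(100, 5, 0.9, np.random.default_rng(0)))` returns the initial estimate followed by three iterates; repeating this over many simulated panels would give a rough analogue of the Monte Carlo design described in the text.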

We compared the performance of our estimator with Blundell and Bond's (1998) estimator, which uses additional information, i.e., stationarity. We compared four versions of their estimators $b_{BB1}, \ldots, b_{BB4}$ with the long difference estimators $b_{LIML,1}, b_{LIML,2}, b_{LIML,3}$. For the exact definition of $b_{BB1}, \ldots, b_{BB4}$, see Appendix E. Of the four versions, $b_{BB3}$ and $b_{BB4}$ are the ones reported in their Monte Carlo section. In our Monte Carlo exercise, we set $\beta = 0.9$, $\sigma^2 = 1$, $\alpha_i \sim N(0,1)$. Our finding based on 5000 Monte Carlo runs is contained in Table 5. In terms of bias, we find that Blundell and Bond's estimators $b_{BB3}$ and $b_{BB4}$ have similar properties as the long difference estimator(s), although the former dominates the latter in terms of variability. (We note, however, that $b_{BB1}$ and $b_{BB2}$ are seriously biased. This indicates that the choice of weight matrix matters in implementing Blundell and Bond's procedure.) This result is not surprising because the long difference estimator does not use the information contained in the initial condition. See Hahn (1999) for a related discussion.

[Footnote: We acknowledge that the residual instruments are irrelevant under the near unity asymptotics.]

[Footnote: Second order theory does not seem to explain the behavior of the long difference estimator. In Table 7, we compare the actual performance of the long difference based estimators with the second order theory developed]

We also wanted to examine the sensitivity of Blundell and Bond's estimator to misspecification, i.e., nonstationary distribution of $y_{i0}$. For this situation the estimator will be inconsistent. In order to assess the finite sample sensitivity, we considered the cases where $y_{i0} \sim \left(\frac{\alpha_i}{1-\beta_F},\ \frac{\sigma^2}{1-\beta_F^2}\right)$. Our Monte Carlo results based on 5000 runs are contained in Table 6, which contains results for $\beta_F = .5$ and $\beta_F = 0$. We find that the long difference estimator is quite robust, whereas $b_{BB3}$ and $b_{BB4}$ become quite biased as predicted by the first order theory. (We note that $b_{BB1}$ and $b_{BB2}$ are less sensitive to misspecification. Such robustness consideration suggests that choice of weight matrix is not straightforward in implementing Blundell and Bond's procedure.) We conclude that the long difference estimator works quite well even compared to Blundell and Bond's (1998) estimator.

6 Near Unit Root Approximation

Our Monte Carlo simulation results summarized in Tables 1, 2, and 3 indicate that the previously discussed approximations and the bias corrections that are based on them do not work well near the unit circle. This is because the identification of the model becomes "weak" near the unit circle. See Blundell and Bond (1998), who related the problem to the analysis by Staiger and Stock (1997). In this Section, we formally adopt approximations local to the points in the parameter space that are not identified. To be specific, we consider model (1) for T fixed and $n \to \infty$ when also $\beta_n$ tends to unity. We analyze the bias of the associated weak instrument limit distribution. We analyze the class of GMM estimators that exploit Ahn and Schmidt's (1997) moment conditions and show that a strict subset of the full set of moment restrictions should be used in estimation in order to minimize bias. We argue that this subset of moment restrictions leads to the inference based on the "long difference" specification.

Following Ahn and Schmidt we exploit the moment conditions

$$E[u_i u_i'] = (\sigma_\varepsilon^2 + \sigma_\alpha^2)\,I + \sigma_\alpha^2\,\mathbf{1}\mathbf{1}'$$

$$E[u_i\, y_{i0}] = \sigma_{\alpha y_0}\,\mathbf{1}$$

with $\mathbf{1} = [1,\ldots,1]'$ a vector of dimension T and $u_i = [u_{i1},\ldots,u_{iT}]'$. The moment conditions can be written more compactly as

$$b = \begin{bmatrix} \mathrm{vech}\,E[u_i u_i'] \\ E[u_i\, y_{i0}] \end{bmatrix} = \sigma_\varepsilon^2 \begin{bmatrix} \mathrm{vech}\, I \\ 0 \end{bmatrix} + \sigma_\alpha^2 \begin{bmatrix} \mathrm{vech}\,(I + \mathbf{1}\mathbf{1}') \\ 0 \end{bmatrix} + \sigma_{\alpha y_0} \begin{bmatrix} 0 \\ \mathbf{1} \end{bmatrix} \tag{16}$$

^We

did try tocompare thesensitivity ofthe twomoment

restrictionsby implementingCUE.

but we experi-enced some numericalproblem. Numerical problem seemstobe an issuewithCUE

in general. Windmeijer (2000) reports similar problemswithCUE.

where the redundant moment conditions have been eliminated by use of the vech operator which extracts the upper diagonal elements from a symmetric matrix. Representation (16) makes it clear that the vector $b \in R^{T(T+1)/2+T}$ is contained in a 3 dimensional subspace which is another way of stating that there are $G = T(T+1)/2 + T - 3$ restrictions imposed on $b$. This statement is equivalent to Ahn and Schmidt's (1997) analysis of the number of moment conditions. GMM estimators are obtained from the moment conditions by eliminating the unknown parameters $\sigma_\varepsilon^2$, $\sigma_\alpha^2$, and $\sigma_{\alpha y_0}$. The set of all GMM estimators leading to consistent estimates of $\beta$ can therefore be described by a $(T(T+1)/2 + T) \times G$ matrix $A$ which contains all the vectors spanning the orthogonal complement of $b$. This matrix $A$ satisfies $b'A = 0$. For our purposes it will be convenient to choose $A$ such that

$$b'A = \left[ E u_{it}\Delta u_{is},\; E(u_{iT}\Delta u_{ij}),\; E\bar{u}_i \Delta u_{ik},\; E\Delta u_i' y_{i0} \right],$$

$s = 2,\dots,T$; $t = 1,\dots,s-2$; $j = 2,\dots,T-1$; $k = 2,\dots,T$, where $\Delta u_i = [u_{i2} - u_{i1}, \dots, u_{iT} - u_{iT-1}]'$. It becomes transparent that any other representation of the moment conditions can be obtained by applying a corresponding nonsingular linear operator $C$ to the matrix $A$. It can be checked that there exists a nonsingular matrix $C$ such that $b'AC = 0$ is identical to the moment conditions (4a)-(4c) in Ahn and Schmidt

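(1997). We investigate the properties of (infeasible) GMM estimators based on

$$E[u_{it}\Delta u_{is}(\beta)] = 0, \quad E[u_{iT}\Delta u_{ij}(\beta)] = 0, \quad E[\bar{u}_i \Delta u_{ik}(\beta)] = 0, \quad E[y_{i0}\Delta u_{it}(\beta)] = 0$$

obtained by setting $\Delta u_{it}(\beta) = \Delta y_{it} - \beta \Delta y_{it-1}$. Here, we assume that the instruments $u_{it}$ are observable. Let $g_{i1}(\beta)$ denote a column vector consisting of $u_{it}\Delta u_{is}(\beta)$, $u_{iT}\Delta u_{ij}(\beta)$, $\bar{u}_i\Delta u_{ik}(\beta)$. Also let $g_{i2}(\beta) = [y_{i0}\Delta u_i(\beta)]$. Finally, let $g_n(\beta) = n^{-3/2}\sum_{i=1}^n [g_{i1}(\beta)', g_{i2}(\beta)']'$ with the optimal weight matrix $\Omega_n = E[g_i(\beta_n) g_i(\beta_n)']$. The infeasible GMM estimator of a possibly transformed set of moment conditions $C'g_n(\beta)$ then solves

$$\hat\beta_{2SLS} = \arg\min_\beta\; g_n(\beta)' C (C'\Omega_n C)^{+} C' g_n(\beta) \qquad (17)$$

where $C$ is a $G \times r$ matrix for $1 \le r \le G$ such that $C'C = I_r$ and $\operatorname{rank}\left(C (C'\Omega C)^{+} C'\right) \ge 1$. We use $(C'\Omega C)^{+}$ to denote the Moore-Penrose inverse. We thus allow the use of a singular weight matrix.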

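Choosing $r$ less than $G$ allows one to exclude certain moment conditions. Let $f_{i1} = -\partial g_{i1}(\beta)/\partial\beta$, $f_{i2} = -\partial g_{i2}(\beta)/\partial\beta$, and $f_n = n^{-3/2}\sum_{i=1}^n [f_{i1}', f_{i2}']'$. The infeasible 2SLS estimator can be written as

$$\hat\beta_{2SLS} - \beta_n = \left( f_n' C (C'\Omega_n C)^{+} C' f_n \right)^{-1} f_n' C (C'\Omega_n C)^{+} C' g_n(\beta_n) \qquad (18)$$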

We now analyze the behavior of $\hat\beta_{2SLS} - \beta_n$ under local to unity asymptotics. We make the following additional assumptions.

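Condition 10 Let $y_{it} = \alpha_i + \beta_n y_{it-1} + \varepsilon_{it}$ with $\varepsilon_{it} \sim N(0, \sigma_\varepsilon^2)$, $\alpha_i \sim N(0, \sigma_\alpha^2)$ and $y_{i0} \sim N\left( \frac{\alpha_i}{1-\beta_n}, \frac{\sigma_\varepsilon^2}{1-\beta_n^2} \right)$, where $\beta_n = \exp(-c/n)$ for some $c > 0$. Also note that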

Ayu

=

Pl^^Vio+

^it+

^

El=i

Pn'^^u-s

+

Op(n-^)where

r?,o~iv(0,(^„-l)y

(1-/32)).
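The data generating process in Condition 10 can be sketched numerically. The following is a minimal illustration, not the authors' code: the function names, the default variances, and the seed are assumptions made here. It simulates the local-to-unity panel and checks an exact counterpart of the first-difference decomposition, in which the coefficient on lagged shocks is $\beta_n - 1$ (which equals $-c/n$ up to terms of order $n^{-2}$) and $\eta_{i0} = (\beta_n - 1)\left(y_{i0} - \alpha_i/(1-\beta_n)\right)$.

```python
import numpy as np


def simulate_local_to_unity(n, T, c, sigma_alpha=1.0, sigma_eps=1.0, seed=0):
    """Simulate y_it = alpha_i + beta_n * y_it-1 + eps_it with beta_n = exp(-c/n).

    The initial condition is drawn from the stationary distribution
    N(alpha_i / (1 - beta_n), sigma_eps^2 / (1 - beta_n^2)).
    All parameter defaults are illustrative assumptions.
    """
    rng = np.random.default_rng(seed)
    beta_n = np.exp(-c / n)
    alpha = rng.normal(0.0, sigma_alpha, n)
    # Deviation of y_i0 from its conditional mean alpha_i / (1 - beta_n).
    xi0 = rng.normal(0.0, sigma_eps / np.sqrt(1.0 - beta_n**2), n)
    eps = rng.normal(0.0, sigma_eps, (n, T + 1))  # column 0 is not used
    y = np.empty((n, T + 1))
    y[:, 0] = alpha / (1.0 - beta_n) + xi0
    for t in range(1, T + 1):
        y[:, t] = alpha + beta_n * y[:, t - 1] + eps[:, t]
    return y, eps, xi0, beta_n


def delta_y_decomposition(eps, xi0, beta_n, t):
    """Exact decomposition of y_it - y_it-1 into initial-condition and shock terms."""
    eta0 = (beta_n - 1.0) * xi0
    lagged = sum(beta_n ** (s - 1) * eps[:, t - s] for s in range(1, t))
    return beta_n ** (t - 1) * eta0 + eps[:, t] + (beta_n - 1.0) * lagged
```

For example, `simulate_local_to_unity(200, 5, c=1.0)` gives $\beta_n \approx 0.995$, and the variance of $\eta_{i0}$ is $(\beta_n - 1)^2 \sigma_\varepsilon^2 / (1 - \beta_n^2)$, which shrinks at rate $1/n$.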

Under the generating mechanism described in the previous definition the following Lemma can be established.

Lemma 2 Assume $\beta_n = \exp(-c/n)$ for some $c > 0$. For $T$ fixed and as $n$

-^ ooi=l i=l

and

where[!i'^,i'y]

~

iV(0,E) withE

Ell Ei2 E21 E22

and

Ell=

^I, E12=

SMi

E22=

SM2,

where2 2

Ml

=

-1 1

and

S12=

E21.We

also have1

-1

M2

2-1

•--1

-1 2 1^

\ ^ E22+

$o(1)$

Proof. See Appendix D.

Using Lemma 2 the limiting distribution of $\hat\beta_{2SLS} - \beta_n$ is stated in the next corollary. For this purpose we define the augmented vectors $\xi_x^{\#} = [0,\dots,0,\xi_x']'$ and $\xi_y^{\#} = [0,\dots,0,\xi_y']'$ and partition $C = [C_0', C_1']'$ such that $C'\xi_x^{\#} = C_1'\xi_x$. Let $r_1$ denote the rank of $C_1$.

Corollary 1 Let $\hat\beta_{2SLS} - \beta_n$ be given by (18). If Condition 10 is satisfied then

$$\hat\beta_{2SLS} - 1 \xrightarrow{d} \frac{\xi_x' C_1 (C_1'\Sigma_{22}C_1)^{+} C_1' \xi_y}{\xi_x' C_1 (C_1'\Sigma_{22}C_1)^{+} C_1' \xi_x} = X(C, \Sigma_{22}) \qquad (19)$$

Unlike the limiting distribution for the standard weak instrument problem, $X(C, \Sigma_{22})$, as defined in (19), is based on normal vectors that have zero mean. This degeneracy is generated by the presence of the fixed effect in the initial condition, scaled up appropriately to satisfy the stationarity requirement for the process $y_{it}$. Inspection of the proof shows that the usual concentration parameter appearing in the limit distribution is dominated by a stochastic component related to the fixed effect. This situation seems to be similar to time series models where deterministic trends can dominate the asymptotic distribution.

Based on Corollary 1, we define the following class of 2SLS estimators for the dynamic panel model. The class contains estimators that only use a nonredundant set of moment conditions involving the initial conditions $y_{i0}$.

Definition 1 Let $\hat\beta^*_{2SLS}$ be defined as

$$\hat\beta^*_{2SLS} = \arg\min_\beta\; g_{2,n}(\beta)' C_1 (C_1'\Omega C_1)^{-1} C_1' g_{2,n}(\beta),$$

where $g_{2,n}(\beta) = n^{-3/2}\sum_{i=1}^n g_{i2}(\beta)$, $\Omega$ is a symmetric positive definite $(T-1) \times (T-1)$ matrix of constants and $C_1$ is a $(T-1) \times r_1$ matrix of full column rank $r_1 \le T-1$ such that $C_1'C_1 = I$.

Next we turn to the analysis of the asymptotic bias for the estimator $\hat\beta^*_{2SLS}$ of the dynamic panel model. Since the limit only depends on zero mean normal random vectors we can apply the results of Smith (1993).

Theorem

5 Let $X^*(C_1, \Omega)$ be the limiting distribution of $\hat\beta^*_{2SLS} - 1$ in Definition 1 under Condition 10. Let $\bar{D} = (D + D')/2$, where $D = (C_1'\Omega C_1)^{-1} C_1' M_1' C_1$. Then

[x

(c„n)] =

A-

f;

<MWc»

(fl,/n

-

A- {c[acX')

fc=0 \2)\+k'^- V

^ ^

/

E

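Footnote: An alternative way to parametrize the stationarity condition is to set $y_{it} = (1-\beta_n)\alpha_i + \beta_n y_{it-1} + \varepsilon_{it}$, $\varepsilon_{it} \sim N(0, \sigma_\varepsilon^2)$, $\alpha_i \sim N(0, \sigma_\alpha^2)$ and $y_{i0} \sim N\left( \alpha_i, \frac{\sigma_\varepsilon^2}{1-\beta_n^2} \right)$ with $\beta_n = \exp(-c/n)$. It can be shown that an estimator solely based on the moment condition $E[\bar{u}_i \Delta u_{ik}(\beta_0)] = 0$ is consistent. Restricting attention to estimators that are based on all moment conditions except the condition $E[\bar{u}_i \Delta u_{ik}(\beta_0)] =$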

0, onecan show thatwherefi

=

It-icry/2.Estimatorsintheclass /Jjs^s definedinDefinition1 canbeanalyzed bythesame methodsasunderthestationarityassumptionsdiscussedin thepaper. Theirlimiting distributions aredenotedby

X'

(Ci, ).

Moments

oiX*

areintractably complicated unlessQ,=

It-\- Then the mean of^*

(Ci,/t-i) is givenby^i'D^J

„,r-l

r-l

^l'n n'D^i_,_^,r-l

r-1

r+1

'^'(^-•^

+

i'^)-7TT^"^^^^~^'^-

+

'>~-with

D

=

[D+

D

)/2,D

=

C[MiC-i and /i=

$C_1'\mu$. While finding an exact analytical minimum of the above bias expression as a function of the weight matrix is probably infeasible due to the complexity of the formula, one sees relatively easily that for large $T$ the minimum must approximately be achieved for $C_1 = \mathbf{1}_{T-1}$, thus leading approximately to the long difference estimator.

where $r_1 = \operatorname{rank}(C_1)$, $(a)_b$ is the Pochhammer symbol $\Gamma(a+b)/\Gamma(a)$, $C^{\cdot,\cdot}(\cdot,\cdot)$ is a top order invariant polynomial defined by Davis (1980) and $\lambda$ is the largest eigenvalue of $(C_1'\Omega C_1)^{-1}$. The mean $E[X(C_1, \Omega)]$ exists for $r_1 \ge 1$.

Proof. See Appendix D.

The Theorem shows that the bias of $\hat\beta^*_{2SLS}$ depends both on the choice of $C_1$ and on the weight matrix $\Omega$. Note for example that $E[X(C_1, I_{T-1})] = \operatorname{tr}\bar{D}/r_1$. The problem of minimizing the bias by choosing optimal matrices $C_1$ and $\Omega$ does not seem to lead to an analytical solution but could in principle be carried out numerically for a given number of time periods $T$. For our purpose we are not interested in such an exact minimum. We show however that for two particular choices of $\Omega$, where $\Omega = I_{T-1}$ or $\Omega = \Sigma_{22}$, and subsequent minimization over $C_1$ an analytical solution for the bias minimal estimator can be found. It turns out that the optimum is the same for both weight matrices. The theorem also shows that the optimum can be reasonably well approximated by a procedure that is very easy to implement.
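The eigenvector characterization stated in Theorem 6 can be explored numerically. The sketch below is an illustration under stated assumptions rather than the authors' procedure: it assumes that $M_1$ is the $(T-1) \times (T-1)$ matrix with $-1$ on the main diagonal and $1$ on the first subdiagonal (the symmetrized matrix $(M_1 + M_1')/2$ is the same if the $1$s sit on the superdiagonal instead), and it reads "smallest eigenvalue" as smallest in absolute value. The function names are hypothetical.

```python
import numpy as np


def m1_matrix(T):
    """Assumed form of M_1: -1 on the diagonal, +1 on the first subdiagonal."""
    m = T - 1
    return -np.eye(m) + np.eye(m, k=-1)


def bias_minimal_weights(T):
    """Eigenvalue of (M_1 + M_1')/2 closest to zero and its unit-norm eigenvector."""
    M1 = m1_matrix(T)
    S = (M1 + M1.T) / 2.0
    eigvals, eigvecs = np.linalg.eigh(S)  # symmetric input, real eigenvalues
    j = int(np.argmin(np.abs(eigvals)))
    return float(eigvals[j]), eigvecs[:, j]


def equal_weight_value(T):
    """Value of c' (M_1 + M_1')/2 c for equal weights c = 1 / sqrt(1'1)."""
    M1 = m1_matrix(T)
    S = (M1 + M1.T) / 2.0
    c = np.ones(T - 1) / np.sqrt(T - 1)
    return float(c @ S @ c)


if __name__ == "__main__":
    for T in (5, 10, 50):
        lam, _ = bias_minimal_weights(T)
        print(T, lam, equal_weight_value(T))
```

Under the assumed $M_1$, the symmetrized matrix is tridiagonal with $-1$ on the diagonal and $1/2$ off the diagonal, so its eigenvalues are $-1 + \cos(k\pi/T)$ for $k = 1,\dots,T-1$, and the equal-weight value is $-1/(T-1)$. Both shrink toward zero as $T$ grows, which is the sense in which equal weighting approximates the optimum.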

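Theorem 6 Let $X(C_1, \Omega)$ be as defined in Definition 1. Let $\bar{D} = (D + D')/2$ where $D = (C_1'\Omega C_1)^{-1} C_1' M_1' C_1$. Then

$$\min_{\substack{C_1 \text{ s.t. } C_1'C_1 = I_{r_1} \\ r_1 = 1,\dots,T-1}} \left| E[X(C_1, I_{T-1})] \right| = \min_{\substack{C_1 \text{ s.t. } C_1'C_1 = I_{r_1} \\ r_1 = 1,\dots,T-1}} \left| E[X(C_1, \Sigma_{22})] \right|.$$

Moreover, $E[X(C_1, I_{T-1})] = \operatorname{tr}\bar{D}/r_1$. Let $C_1^* = \arg\min_{C_1} \left| E[X(C_1, I_{T-1})] \right|$ subject to $C_1'C_1 = I_{r_1}$, $r_1 = 1,\dots,T-1$. Then $C_1^* = \rho_i$ where $\rho_i$ is the eigenvector corresponding to the smallest eigenvalue $l_i$ of $\bar{D}$. Thus, $\min_{C_1} \operatorname{tr}\bar{D}/r_1 = \min l_i/2$. As $T \to \infty$ the smallest eigenvalue of $\bar{D}$, $\min l_i \to 0$. Let $\mathbf{1} = [1,\dots,1]'$ be a $T-1$ vector. Then for $C_1 = \mathbf{1}/(\mathbf{1}'\mathbf{1})^{1/2}$ it follows that $\operatorname{tr}\bar{D}$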

$\to 0$ as $T \to \infty$.

Theorem 6 shows that the estimator that minimizes the bias is based only on a single moment condition which is a linear combination of the moment conditions involving $y_{i0}$ as instrument, where the weights are the elements of the eigenvector $\rho_i$ corresponding to the smallest eigenvalue of $(M_1 + M_1')/2$. This eigenvalue can be easily computed for any given $T$. The Theorem also shows that at least for large $T$ a heuristic method which puts equal weight on all moment conditions leads to essentially the same bias reduction as the optimal procedure. The heuristic procedure turns out to be equal to the moment condition $E[(u_{iT} - u_{i1}) y_{i0}]$, which can be motivated by taking "long differences" of the model equation $y_{it} = \alpha_i + \beta_n y_{it-1} + \varepsilon_{it}$, i.e. by considering

$$y_{iT} - y_{i1} = \beta_n (y_{iT-1} - y_{i0}) + \varepsilon_{iT} - \varepsilon_{i1}.$$

It can also be shown that a 2SLS estimator that uses all moment conditions involving $y_{i0}$ remains biased even as $T \to \infty$.

7 Long Difference Specification: Infinite Iteration

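We found that the iteration of the long difference estimator works quite well. In the $(\ell+1)$-th iteration, our iterated estimator estimates the model

$$y_{iT} - y_{i1} = \beta (y_{iT-1} - y_{i0}) + \varepsilon_{iT} - \varepsilon_{i1}$$

based on 2SLS using instruments $z_i(\hat\beta_{(\ell)}) = \left( y_{i0},\; y_{i2} - \hat\beta_{(\ell)} y_{i1},\; \dots,\; y_{iT-1} - \hat\beta_{(\ell)} y_{iT-2} \right)$, where $\hat\beta_{(\ell)}$ is the estimator obtained in the previous iteration.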
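The iteration just described can be sketched in a few lines. This is a minimal illustration under assumptions made here, not the authors' implementation: the starting value uses $y_{i0}$ as the only instrument, no intercept is included (the simulated data have mean zero), and the function names, default variances, and seed are hypothetical.

```python
import numpy as np


def simulate_panel(n, T, beta, sigma_alpha=1.0, sigma_eps=1.0, seed=0):
    """Stationary dynamic panel y_it = alpha_i + beta * y_it-1 + eps_it, t = 0..T."""
    rng = np.random.default_rng(seed)
    alpha = rng.normal(0.0, sigma_alpha, n)
    y = np.empty((n, T + 1))
    y[:, 0] = alpha / (1.0 - beta) + rng.normal(
        0.0, sigma_eps / np.sqrt(1.0 - beta**2), n
    )
    for t in range(1, T + 1):
        y[:, t] = alpha + beta * y[:, t - 1] + rng.normal(0.0, sigma_eps, n)
    return y


def tsls_single_regressor(dep, reg, Z):
    """2SLS with one regressor: project reg on Z, then use the fitted values."""
    fitted = Z @ np.linalg.lstsq(Z, reg, rcond=None)[0]
    return float(fitted @ dep / (fitted @ reg))


def iterated_long_difference(y, n_iter=3):
    """Iterated long difference 2SLS; requires T >= 3 (columns 0..T of y)."""
    T = y.shape[1] - 1
    dep = y[:, T] - y[:, 1]        # y_iT - y_i1
    reg = y[:, T - 1] - y[:, 0]    # y_iT-1 - y_i0
    # Assumed starting value: instrument the long difference with y_i0 only.
    b = tsls_single_regressor(dep, reg, y[:, [0]])
    for _ in range(n_iter):
        # Residual instruments y_i2 - b*y_i1, ..., y_iT-1 - b*y_iT-2.
        resid = y[:, 2:T] - b * y[:, 1:T - 1]
        Z = np.column_stack([y[:, 0], resid])
        b = tsls_single_regressor(dep, reg, Z)
    return b
```

A usage example would be `iterated_long_difference(simulate_panel(5000, 5, 0.5))`. Increasing `n_iter` moves the estimate toward the fixed point of the iteration when the iteration converges, which is not guaranteed.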

We might want to examine properties of an estimator based on an infinite iteration, and see if it improves the bias property. If we continue the iteration and it converges, the estimator is a fixed point to the minimization problem

where $\xi_i(b) = z_i(b)\left( (y_{iT} - y_{i1}) - b (y_{iT-1} - y_{i0}) \right)$. Call the minimizer the infinitely iterated 2SLS and denote it $\hat\beta_{I2SLS}$. Another estimator which resembles $\hat\beta_{I2SLS}$ is CUE, which

solves(N

\'

/ '^\~^

/

^

{b)

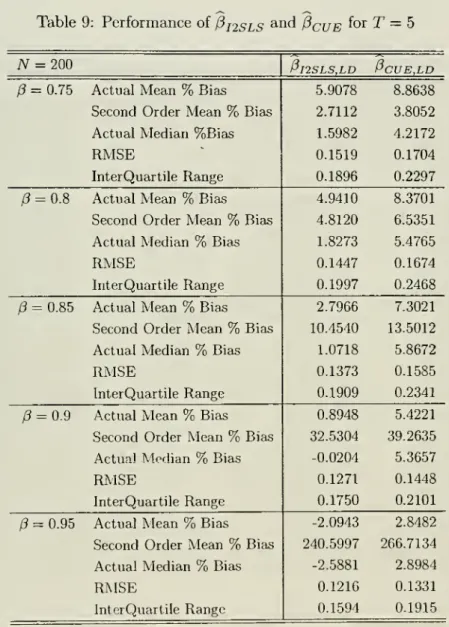

Their actual

performance

approximated

by 5000

Monte

Carlo runs along with the biasespre-dicted

by

second order theory inTheorem

4 aresummarized

in Tables 8and

9.We

find thatthe long difference

based

estimatorshave

quite reasonable finitesample

properties evenwhen

/?is close to 1. Similar tothefiniteiteration in the previoussection, thesecond order theory

seem

to

be

next to irrelevant for /3close to 1.We

compared

performances

ofour estimatorswith

Ahn

and

Schmidt's (1995) estimator aswell as Blundell

and Bond's

(1998) estimator.Both

estimators are defined in two-stepGMM

procedures. In order to

make

a accuratecomparison with

our longdifference strategy, forwhich

thereis

no

ambiguity

ofweight matrix,we

decided toapply the continuousupdating

estimatortotheir

moment

restrictions.We

had

difficulty of finding globalminimum

forAhn

and

Schmidt's(1995)

moment

restrictions.We

therefore used aRothenberg

typetwo

step iteration,which

would have

thesame

second order propertyastheCUE

itself. (SeeAppendix

I.) Again, in orderto

make

a accurate comparison,we

applied thetwo

step iteration idea toour long difi"erenceand

Blundelland

Bond

(1998) aswell.We

call theseestimatorsPcue2

aSjPcuE2

ld^^^^

0cuE2

BB-We

setn

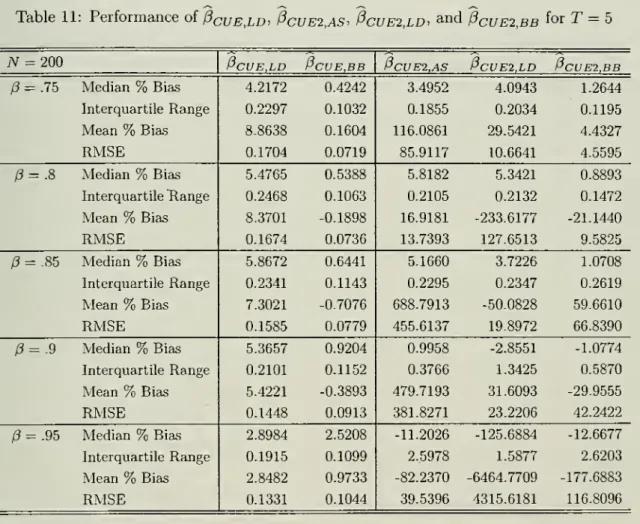

=

100and

T

=

5.Again

thenumber

ofmonte

carlo runswas

set equal to 5000.Our

results are reported in Tables 10

and

11.We

can

see that the long difference estimator has acomparable

property toAhn

and

Schmidt's estimator.We

do

notknow

why

Pcue2LD

^^^ suchalarge

median

bias at /3=

.95whereas

P^y^

iq

does not have such problem.

Footnote: There is no a priori reason to believe that the iterations converge to the fixed point. To show that, one would

8 Conclusion

We have investigated the bias of the dynamic panel effects estimators using second order approximations and Monte Carlo simulations. The second order approximations confirm the presence of significant bias as the parameter becomes large, as has previously been found in Monte Carlo investigations. Use of the second order asymptotics to define a second order unbiased estimator using the Nagar approach improves matters, but unfortunately does not solve the problem. Thus, we propose and investigate a new estimator, the long difference estimator of Griliches and Hausman (1986). We find in Monte Carlo experiments that this estimator works quite well, removing most of the bias even for quite high values of the parameter. Indeed, the long differences estimator does considerably better than "standard" second order asymptotics would predict. Thus, we consider alternative asymptotics with a near unit circle approximation. These asymptotics indicate that the previously proposed estimators for the dynamic fixed effects problem suffer from larger biases. The calculations also demonstrate that the long difference estimator should work in eliminating the finite sample bias previously found. Thus, the alternative asymptotics explain our Monte Carlo finding of the excellent performance of the long differences estimator.

Technical Appendix

A Technical Details for Section 3

Lemma

3E

E{<^'-:-^/.'«":)

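Proof. We have

$$E\left[ x_t^{*\prime} P_t \varepsilon_t^* - \frac{K_t}{n-K_t} x_t^{*\prime} M_t \varepsilon_t^* \right] = E\left[ \operatorname{trace}\left( P_t E_t[\varepsilon_t^* x_t^{*\prime}] \right) \right] - \frac{K_t}{n-K_t} E\left[ \operatorname{trace}\left( M_t E_t[\varepsilon_t^* x_t^{*\prime}] \right) \right],$$

where $E_t[\cdot]$ denotes the conditional expectation given $Z_t$. Because $E_t[\varepsilon_t^*] = 0$, $E_t[\varepsilon_t^* x_t^{*\prime}]$ is the conditional covariance between $\varepsilon_t^*$ and $y_{t-1}^*$, which does not depend on $Z_t$ due to joint normality. Moreover, by cross-sectional independence, we have

$$E_t[\varepsilon_t^* x_t^{*\prime}] = E_t[\varepsilon_{it}^* x_{it}^*]\, I_n.$$

Hence, using the fact that $\operatorname{trace}(P_t) = K_t$ and $\operatorname{trace}(M_t) = n - K_t$, we have

$$E\left[ x_t^{*\prime} P_t \varepsilon_t^* - \frac{K_t}{n-K_t} x_t^{*\prime} M_t \varepsilon_t^* \right] = E_t[\varepsilon_{it}^* x_{it}^*] \left( K_t - \frac{K_t}{n-K_t}(n - K_t) \right) = 0,$$

from which the conclusion follows.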

Lemma

4Var{xl'Mte;)

=

{n-

t)a^E

[v*^]+

[n-

t){E

[<,e*J)',

Cov

[xl'h'Itel,x'/Msel)=

{n-

s)E

[vie*,]E

[<,£*,],

s<t

where $v_{it}^* = x_{it}^* - E[x_{it}^* \mid z_{it}]$.

Proof. Follows by modifying the developments from (A23) to (A30) and from (A31) to (A34) in Alvarez and Arellano (1998).

Lemma

5 Suppose that s<

t.We

haveE

\v^]=

T

-t

/ 10-0

T-t+l ^2T-t

1 "T-t

+

l(T-tf{l-pf

X\{T-t)

+

r-1

E[vy*,]=

-o^+

fT^ /T

-0"-)\JT-

i+

1 (T -i t){\ 1~0)

T~tT-i

+

l{T-tf{l-0)

[T-t]

0-0

1-E[vle*^]

=

-a

+

a'.^/

'

-s

i}-P^-

J 'Vr-

-s+

1 (T --s -5)(1 1-P)

\It-s

+ \{T-i

)(r-1 t){\--P)

1 T--tE

Kel]

^

Y

T

-

f+

1(T

-

5)(r-

t) (1-

/?)(T-t)

T-t

\-p

T-t1-/?

;'

1-/3

Proof.

We

first characterize v*j.We

haveXi,t

=

J/i.t-iXi,t+i

=

Vi.t=

Qi+

Pyi,t-\+

£i,ti,r

=

yi,r-i=

—

i^-Qi

+

P'^ *yi,t-i+

ffi,T-i+

Pei,T-2+

+

P"^ ' ^e^.t)1

—

p

\ / and henceT-f

+

l , 1 ,P-P

T-f+l yi,t-i /?-/?T-t+l ^(T-i)(l-/3)y^"'-^

\l-/3

(r-i)(l-/3)V

(1

-

P)£i,T-i+

(1-

/3^)ei,T-2+

+

(l-

Z?"^-*)ei,t(T-i)(l-/?)

It followsthat

1 /?-/?T-t+l

T-t

from which

we

obtainT-t

{T-t){l-p)

J

''•'-'yi-P

(T-t)

(1-/3)2^ E[ai\zit], 1 (ai -£'[Qi|2,i])T-t

+

lyi-p

{T-t){l-pf

JT-t

(1-

/3)^..T-i+

(1-

/?')£i,r-2+

•••+(!-

P'^-'){T-t){\-P)

T-t

+

l (20)We

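now compute $E\left[ (\alpha_i - E[\alpha_i \mid z_{it}])^2 \right] = \operatorname{Var}[\alpha_i \mid z_{it}]$. It can be shown that

$$\operatorname{Cov}\left( \alpha_i, (y_{i0},\dots,y_{it-1})' \right) = \frac{\sigma_\alpha^2}{1-\beta}\,\ell \quad \text{and} \quad \operatorname{Var}\left( (y_{i0},\dots,y_{it-1})' \right) = \frac{\sigma_\alpha^2}{(1-\beta)^2}\,\ell\ell' + Q$$

where $\ell$ is a $t$-dimensional column vector of ones, and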