The Actuated Workbench:

2D Actuation in Tabletop Tangible Interfaces

Gian Antonio Pangaro

B.A. Sociology 1999 Harvard University

Submitted to the Department of Media Arts and Sciences, School of Architecture and Planning, in partial fulfillment of the requirements for the degree of Master of Science in Media Arts and Sciences at the Massachusetts Institute of Technology

September 2003

© Massachusetts Institute of Technology, 2003. All Rights Reserved.

Author: Gian Pangaro, Program in Media Arts and Sciences, 09 May 2003

Certified by: Hiroshi Ishii, Associate Professor of Media Arts and Sciences, Thesis Supervisor

Accepted by: Andy Lippman, Chair, Departmental Committee on Graduate Studies, Program in Media Arts and Sciences



Abstract

The Actuated Workbench is a new actuation mechanism that uses magnetic forces to control the two-dimensional movement of physical objects on flat surfaces. This mechanism is intended for use with existing tabletop Tangible User Interfaces, providing computer-controlled movement of the physical objects on the table, and creating an additional feedback layer for Human Computer Interaction (HCI). Use of this actuation technique makes possible new kinds of physical interactions with tabletop interfaces, and allows the computer to maintain consistency between the physical and digital states of data objects in the interface. This thesis focuses on the design and implementation of the actuation mechanism as an enabling technology, introduces new techniques for motion control, and discusses practical and theoretical implications of computer-controlled movement of physical objects in tabletop tangible interfaces.

Thesis Supervisor: Hiroshi Ishii


Thesis Committee:

Thesis Supervisor

Hiroshi Ishii

Associate Professor of Media Arts and Sciences, MIT Media Lab

Thesis Reader

Robert Jacob

Associate Professor of Electrical Engineering and Computer Science, Tufts University

Thesis Reader

Joe Paradiso

Associate Professor of Media Arts and Sciences, MIT Media Lab

Acknowledgements

I am forever grateful to the following people for being in my life during the past few years. Without them, this work might not have been possible.

For their love and support:

Stacy Abder Anthony Pangaro Geraldine Pangaro

For their intellectual guidance, enthusiasm, and inspiration:

Jason Alonso Dan Chak Chris Csikszentmihalyi Rich Fletcher Hiroshi Ishii Rob Jacob John Maeda Dan Maynes-Aminzade Joe Paradiso Amanda Parkes James Patten Hayes Raffle Nancy Sun Brygg Ullmer Bill Verplank

For their friendship, music, and good advice:

John Capello Andy Eggers Scott Roy Altay Guvench Mike Annany danah boyd Daneil Giffin Shane Hamilton Saoirse Higgins Ryan McKinley Cliff Murphy Mary Oey Phua Mei Pin Ali Rahimi Lily Shirvanee Doug Swett Jessica Tardy Noam Weinstein Pierce Woodward

Table Of Contents

Prefatory Material: Cover, Abstract, Thesis Committee, Acknowledgements

1. Introduction

1.1 Tabletop Tangible Interfaces

1.2 Thesis Goal

2. Theory: Space, Movement, and Haptics for Input Devices

3. Related Technologies 1: Tabletop Tracking Technologies

4. TUI Inconsistency and Input Device Design

4.1 TUI Interactions that can lead to Inconsistencies

4.2 Haptic Input Devices as Physical Output for Digital Media

5. Related Technologies 2: Tabletop Actuation Technologies

6. Design and Development

6.1 Design Criteria

6.2 Final Design Scheme

7. Technical Details

7.1 System Description

7.2 Motion Control and Interpolation

8. Evaluation

8.1 Original Design Criteria

8.2 Design Limitations

8.3 User Testing

9. Applications for the Actuated Workbench

9.1 Basic GUI Functions

9.2 High-Level Tabletop Interactions

9.3 Haptic applications

10. Future Development

10.1 Tiling of Arrays for Larger Surfaces

10.2 New Types of Motion

11. Conclusion

Appendix A. Explanation of Electromagnetic Array

Appendix B. Limitations of SaJe microcontroller

Appendix C. Other Actuation Technologies

Appendix D. Schematics

Appendix E. Microcontroller Code

1. Introduction

At the inception of the Tangible Interface realm of Human Computer Interaction (HCI), a research vision called "Tangible Bits" [19] sought to leverage people's existing skills for interacting with the physical world toward improving their interactions with computers. The stated research goal was to extend computation beyond traditional Graphical User Interfaces (GUIs) to interfaces that use physical objects and the physical world as tangible embodiments of digital information. Such interfaces allow users direct control of computation through the manipulation of physical objects. These stand in contrast to the traditional Graphical User Interface, which usually consists of a computer monitor (graphical display), keyboard, and pointing device, such as a mouse or trackball. Instead, Tangible User Interfaces (TUIs) aim to provide users with means to directly manipulate electronic media by manipulating physical objects that represent the data itself, rather than pointers to it. The physical objects in tangible interfaces can take on many forms. Some are simple knobs and levers, while more advanced interfaces include physical models of buildings [52] or lumps of clay [36]. As electronic sensing technologies continue to advance, the possibilities continue to grow for designing physical input devices for computers.

1.1 Tabletop Tangible Interfaces

Recent tangible interface research has given rise to a class of systems based on physical interaction with interactive tabletop surfaces. These systems, which I will refer to as 'tabletop tangible interfaces' (or 'tabletop TUIs' for short), track the position and movement of objects on a flat surface and respond to users' physical manipulation of these objects with graphical output. In most tabletop tangible interfaces, as the user physically interacts with the system, graphical feedback is projected on and around the objects on the table. The objects are treated as physical instantiations of digital data: users make adjustments to the digital state of a data item by 1) moving (translating, rotating, etc.) or 2) modifying the physical object (pressing a button on it, placing another object on top of it, etc.). Both of these actions change the object's state not only relative to its absolute X and Y position on the table, but also in relation to other objects on the table. The distance between physical objects is often mapped to some aspect of data in the digital realm, and therefore the user can easily tell relationships in the digital part of the interface from the physical relationship of the objects.

Figure 1.1 An abacus, longtime icon of the Tangible Bits concept. The abacus is an interface containing a physical instantiation of every bit, and the physical state of the system represents a logical state that can be

Advantages of Tabletop Tangible Interfaces

Tabletop tangible interfaces offer many advantages over interaction with purely graphical computer interfaces. Users can organize objects spatially in the physical area around them, providing a kinesthetic sense of the location of each data item [34]. Tabletop interfaces allow users to perform two-handed interactions with data, making it possible to adjust several different parameters of a simulation or application simultaneously. These interfaces also foster ease of collaboration between multiple collocated users, since the work area is expanded from a small computer monitor to a large tabletop area. Since there is no need for an intermediary tool or pointing device, such as a mouse, any user around the table can reach out and adjust parameters in the application simply by moving associated physical objects.

Limitations of Current Tabletop Tangible Interfaces

Current tabletop tangible interface systems share a common weakness. While users provide input by manipulating physical objects, computer output occurs only through sound or graphical projection. This discrepancy between input and output can make the objects feel more like pointers to digital data and less like physical manifestations of the data itself. Links between physical objects and digital data can also break down when changes occur in the underlying computation that are not reflected in physical changes of associated objects on the table. Since the computer system cannot physically move objects on the table surface, the computer cannot correct physical inconsistencies in the layouts of the objects, and such corrections are left to the user. In addition, tabletop tangible interfaces cannot provide users with physical versions of functionality commonly found in graphical user interfaces. Only graphically can the computer system express common interactive functions such as 1) undoing physical input, 2) saving and restoring physical positions of objects in a simulation, or 3) guiding users in manipulation of objects. Most tabletop tangible interfaces respond to users' physical input with output that is not physical, such as sound, light, or graphical displays. For these tangible interfaces, physical interaction between human and computer remains one-directional.

1.2 Thesis Goal

This thesis describes the design and development of the Actuated Workbench, a system that provides a hardware and software infrastructure for a computer to move objects horizontally on a table surface. The mechanism for moving objects on the table is a technological innovation using electromagnetic forces to move objects in the manner of a linear induction motor (LIM) expanded to two dimensions. As a Research Assistant in the Tangible Media Group at the MIT Media Lab, I developed this actuation system for use with pre-existing tabletop tangible interfaces, providing a general actuation platform for moving objects in two dimensions.

Actuation can be defined as:

1. A bringing into action; movement [Webster's Revised Unabridged Dictionary, © 1996, 1998 MICRA, Inc]

2. the act of propelling [WordNet ® 1.6, © 1997 Princeton University]

In this thesis, I use the term 'actuation' to describe the two-dimensional movement of objects on a flat surface. The attraction and repulsion of an electromagnetic array propels magnetic objects on top of a tabletop tangible interface, allowing both the user and computer to move the objects for physical interaction.

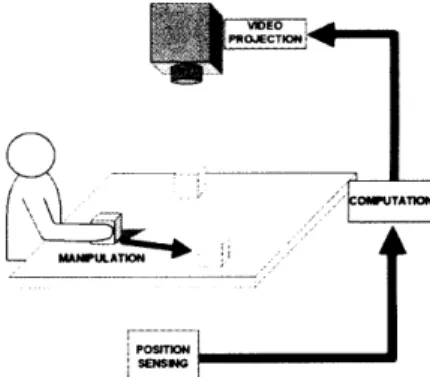

The Actuated Workbench represents one attempt to "close the loop" in the interactive tabletop realm of tangible interfaces, matching physical input with physical output. It is one of only a few projects in tangible interfaces research that attempt to match physical input with physical output [7, 16, 45]. In this thesis, I will situate the system in the context of past related work and supporting theory, discuss some theoretical implications of actuation in tangible interface design, discuss the variety of hardware and software design decisions involved in its construction, describe the underlying technology of the Actuated Workbench and evaluate its performance in light of my design criteria, and finally suggest preliminary applications of this actuation technology. Several of these applications were implemented in collaboration with Dan Maynes-Aminzade, another research assistant in the Tangible Media Group. The Actuated Workbench was developed under the guidance of my advisor, Professor Hiroshi Ishii. The term "we" in this thesis refers to myself, Dan Maynes-Aminzade, and Professor Hiroshi Ishii, as this was truly a collaborative project throughout most of its design and development.

Figure 1.2 Traditional Tabletop Tangible Interfaces track multiple objects on a tabletop and respond to users' physical input with graphical output from projection overhead.

Figure 1.3 The Actuated Workbench uses magnetic movement of objects on the table as an additional layer of feedback between the computer and the user.

2. Theory: Space, Movement, and Haptics for Input Devices

Tangible User Interface design finds support in early HCI research and psychological studies of kinetics and spatial reasoning. This section discusses work that has been used in the past as supporting arguments for advantages of both graphical interfaces and tangible interfaces. Much of this work has been discussed at length in other publications related to Tangible User Interfaces (TUIs) as well as tabletop TUIs. Since this thesis is primarily about actuation in tabletop TUIs, its agenda already assumes the benefits of tabletop TUIs as input devices. Therefore, I will not go into great detail discussing this work. Extensive discussion of these and other supporting works can be found in: Fitzmaurice's Ph.D. Thesis [13], Ishii and Ullmer's Tangible Bits paper [19], and Patten's MS thesis [34]. However, since much of this work also has implications supporting actuated tabletop TUI, I will touch on some key supporting concepts of this work and discuss how they can be used to argue the benefits of actuated TUI.

Manipulation: GUI to Graspable UI to TUI

Since 1986, the concept of Direct Manipulation [17] has been at the core of both Graphical and Tangible User Interface research. Much published research has demonstrated how tabletop tangible interface design furthers the cause of direct manipulation, providing 1) "a continuous representation of the object of interest," 2) commands effected through physical action rather than complex syntax, and 3) "rapid incremental reversible operations whose impact on the object of interest is immediately visible" [43,44]. In his 1996 thesis on graspable user interfaces, Fitzmaurice argues that "improving the 'directness' and the 'manipulability' of the interface can be achieved by improving the input mechanisms for graphical user interfaces" [13]. He goes on to show how the Bricks interface furthers the cause of direct manipulation by providing users a more physical means of interacting with computer graphics through graspable handles.

Figure 2.1 A subject performing a LEGO sorting task in a study on people's use of spatial arrangement and two-handed manipulation.

Since that publication, tabletop tangible interfaces have further improved the "directness" of manipulation by integrating physical input in the same space as graphical output, often projecting directly onto the objects themselves. This implies to users that they are manipulating physical manifestations of digital data itself, not just physical handles to digital graphics.

Kirsh: The Intelligent Use of Space

Kirsh's research on people's use of physical space shows that the use of physical objects and spatial organization can reduce cognitive load in task performance. Kirsh's experiments [24, 25] have shown that subjects who were provided with a means of physically offloading a computational task onto physical objects in front of them were able to perform tasks (such as counting coins) with improved completion times and reduced error rates. Experiments conducted elsewhere involve similar tasks such as sorting small LEGO parts by color [13] (Figure 2.1). What is interesting is the number of these experiments that note improved performance results in conditions involving the use of multiple hand-sized objects resting on tabletops. These results suggest that a computer interface using objects on a tabletop would provide similar reductions in cognitive load and increased performance.

A prime example of such results is an experiment conducted by James Patten during his preliminary work on the Sensetable project [35]. The experiment measured subjects' performance in a memory task, comparing the use of graphical objects, displayed on a screen and manipulated by a mouse, with the use of electronically tagged physical objects. He found that subjects who adopted specific strategies involving the spatial layout of objects performed better on the memory recall task. Patten attributes some of these results to subjects' ability to use their bodies as references to the positions of individual objects. From this and other observations, he speculates that the objects people use for such tasks must be large enough to prompt a visceral association with users, yet small enough that many objects can be laid out within arm's reach for easy manipulation by a single user.

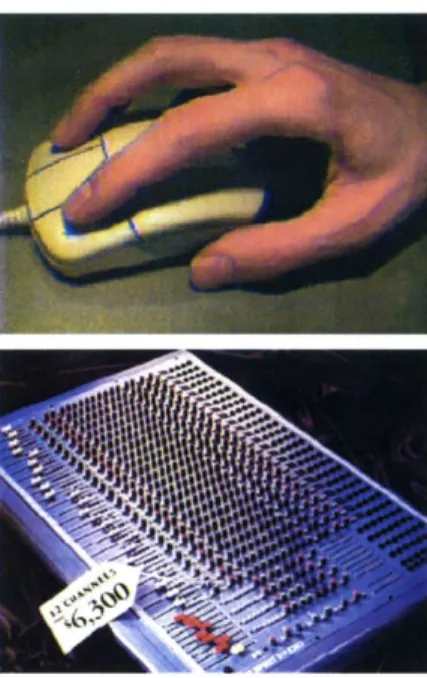

Input Devices: Time Multiplexing vs. Space Multiplexing

Fitzmaurice and Buxton have created a taxonomy of input devices for computer functions. Since there are often many different logical functions that can be performed in a computer application, user input must be multiplexed between these functions. Buxton and Fitzmaurice describe input devices as either "time-multiplexing" or "space-multiplexing" [14]. With a traditional mouse and keyboard GUI, the mouse is time-multiplexed between logical functions, meaning that the user repeatedly attaches and detaches the mouse to on-screen tools in order to perform different functions. For example, to switch between a pencil tool and an eraser tool in a GUI drawing program, the user clicks with the mouse on either tool to attach the logical function to the mouse pointer, and then proceeds to use that new function. The keyboard, on the other hand, is space-multiplexed for the input of characters to the computer, providing a discrete physical location for each letter of the alphabet as well as other commands. This layout makes touch-typing possible, as the user can remember the persistent location of each letter and can learn to access each letter quite quickly using muscle memory. TUI allows several physical input devices to be simultaneously attached to logical functions, allowing users to access different tools simply by grabbing different physical objects in front of them. TUI input devices are said to be space-multiplexed because they are persistently bound to logical functions and the user's input is swapped between physical devices. Fitzmaurice and Buxton argue that space-multiplexed input devices have fewer stages of acquisition before interaction, allowing faster computer input from the user (Figure 2.3).
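The difference in acquisition stages can be made concrete with a small count. The following is a minimal sketch, not part of the original study: the stage names are taken from Figure 2.3, while the function names, the example task list, and the simplifying assumption that every task incurs every stage (with no credit for a hand already resting on the mouse) are illustrative only.

```python
# Stage lists transcribed from Figure 2.3; everything else here is an
# illustrative assumption, not Fitzmaurice and Buxton's own model.
GUI_STAGES = (
    "acquire physical device",
    "acquire logical device",
    "manipulate logical device",
)
TUI_STAGES = (
    "acquire physical device",
    "manipulate logical device",
)


def steps_for(tasks, stages):
    """Return the flat list of (task, stage) steps a user walks through."""
    return [(task, stage) for task in tasks for stage in stages]


def preparatory_steps(tasks, stages):
    """Count the steps that precede manipulation, summed over all tasks."""
    return sum(
        1
        for _, stage in steps_for(tasks, stages)
        if not stage.startswith("manipulate")
    )


if __name__ == "__main__":
    # Hypothetical drawing session: pencil, then eraser, then pencil again.
    session = ["pencil", "eraser", "pencil"]
    print("time-multiplexed :", preparatory_steps(session, GUI_STAGES))
    print("space-multiplexed:", preparatory_steps(session, TUI_STAGES))
```

Under these assumptions the time-multiplexed condition always needs twice as many preparatory steps as the space-multiplexed one, which is the structural point of the taxonomy rather than a measured result.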

Snibbe et al. also argue that systems having multiple "modes" for input devices (time-multiplexed input) require that the user keep track of the mode in which the input device was last used, and whether that device's mode must be switched to a new mode before it can be used for another task [47]. Such mode switching increases workload and user error, since the user is charged with the task of monitoring both the physical and digital state of the input device. Snibbe et al. argue for removing as much mode-switching from input devices as possible, saying that modeless interaction provides the user with a "consistent and trustworthy physical behavior, like that of a car steering wheel." In modeless interaction, the behavior of a particular input device is predictable, and the user can take advantage of this predictability by anticipating how the device will react given a certain input. Space-multiplexed input devices exhibit modeless interaction through persistent mappings to logical functions and through their consistent physical behavior.

Figure 2.2 According to Buxton and Fitzmaurice's taxonomy, the mouse (top) is a time-multiplexed input device, while the mixing board (bottom) is a space-multiplexed input device.

GUI (Time-Multiplexed)

1. Acquire physical device

2. Acquire logical device

3. Manipulate logical device

TUI (Space-Multiplexed)

1. Acquire physical device

2. Manipulate logical device

Figure 2.3 Fitzmaurice and Buxton's acquisition stages for interaction with logical devices. The space-multiplexed condition contains one fewer phase of preparatory action for manipulating logical devices.

Fitzmaurice and Buxton conducted an experiment comparing space-multiplexed input devices with time-multiplexed input devices in an on-screen target tracking task [14]. They found that subjects performed the task with less error (i.e. more accurate and constant tracking) in the space-multiplexed condition than in the time-multiplexed condition. They also noted that in the time-multiplexed condition, subjects spent almost 50% of device manipulation time acquiring new logical functions for the input device, indicating the large cost of switching the function of the input device. Their results suggest that computer interfaces consisting of multiple, space-multiplexed input devices on a tabletop offer significant time and error improvements over time-multiplexed input devices such as a mouse.

Their experiment also contained separate conditions for space-multiplexed devices with specific physical form (such as a ruler) vs. generic physical form (puck-like objects). The condition with specific physical form yielded better performance results than the condition of generic physical form, which they attribute to the physical form reminding users of the function of each input device. However, both of the space-multiplexed conditions outperformed the time-multiplexed input condition, suggesting that even TUIs with generic physical form provide a clear advantage to users. These results support a subsequent step in tabletop TUI design toward generic form factors and the use of both space and time-multiplexing interaction techniques. In a complex application running on a tabletop tangible interface, problems of space, organization, and cost make it difficult or impractical to have a devoted physical input device for every logical function available in a computer application. Tabletop TUIs involving objects with generic physical form, such as Sensetable, can offer a combination of both space-multiplexing and time-multiplexing input techniques. The Sensetable system tracks multiple, space-multiplexed physical input devices, built with a generic shape that indicates little about a device's logical function. Devices can be dynamically attached and detached to logical functions, and the functions or parameters to which devices are attached are indicated by projection on and around the objects. Users can use these devices in a space-multiplexed-only scenario, or if they desire fewer objects on the table (to reduce clutter, for example), they can use the objects as time-multiplexed input devices.
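The dynamic attachment described here amounts to a small binding table between generic devices and logical functions. The sketch below is one hypothetical way to model it; the class name, method names, puck identifiers, and the "volume" and "tempo" functions are all assumptions made for illustration and do not describe Sensetable's actual software.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class PuckBindings:
    """Hypothetical table mapping generic pucks to logical functions."""

    bound: Dict[str, str] = field(default_factory=dict)  # puck id -> function

    def attach(self, puck: str, function: str) -> None:
        """Bind a puck to a logical function, replacing any earlier binding."""
        self.bound[puck] = function

    def detach(self, puck: str) -> Optional[str]:
        """Unbind a puck and return the function it was bound to, if any."""
        return self.bound.pop(puck, None)

    def label_for(self, puck: str) -> str:
        """Text that a projector could draw beside the puck."""
        return self.bound.get(puck, "(unbound)")


if __name__ == "__main__":
    table = PuckBindings()

    # Space-multiplexed use: one puck per function, both on the table at once.
    table.attach("puck_a", "volume")
    table.attach("puck_b", "tempo")
    print(table.label_for("puck_a"), table.label_for("puck_b"))

    # Time-multiplexed use: remove puck_b to reduce clutter, then rebind
    # puck_a whenever the other function is needed.
    table.detach("puck_b")
    table.attach("puck_a", "tempo")
    print(table.label_for("puck_a"), table.label_for("puck_b"))
```

Because the pucks carry no function-specific shape, the projected label is the only cue to the current binding, which is why the sketch exposes it as a separate query.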

These and other arguments have been made supporting the development of tabletop TUIs and their progression toward the generic form factors commonly used today. The next section describes past work in tabletop tangible interfaces, and the development of interaction techniques using physical objects.

3. Related Technologies 1: Tabletop Tracking Technologies

One cannot discuss the evolution of interactive tabletop interfaces without charting the evolution of technologies associated with them. In this chapter I describe various techniques used in tabletop systems to track objects on flat surfaces, the types of interactions afforded by such technologies at the time, and the limitations inherent in the tracking technologies. Many of the interaction techniques developed in these systems inspired as many questions as they did answers, and I will mention some of these questions as they arise.

The Digital Desk (1993): Computer Vision.

One of the pioneering works in interactive physical/digital desktops is Wellner's Digital Desk [53] (Figure 3.1). This system turned a desk surface into a virtual 'touchscreen', allowing users to manipulate digital graphics projected on a tabletop by touching the tabletop and dragging the graphics around. The system captured these gestures with an overhead camera and picked up commands such as pointing and tapping on the table with a microphone attached to the desk. Example applications included a virtual calculator where the buttons were projected onto the tabletop, and a document scanner that created projected digital versions of papers on the table and allowed the user to manipulate the digital documents by pointing and dragging with a fingertip. Wellner's dream for the system also included the use of physical objects, such as paper and pens, so that users could add content to the system through handwriting or through optical character recognition of typed papers. Computer vision could theoretically provide the system with the ability to identify and track any object on the table without the need for tagging or pre-programming the system. However, at the time of the Digital Desk's publication, computer vision technology had not matured sufficiently to provide such functionality.

Figure 3.1 Wellner's Digital Desk combines graphical projection with a computer vision system that recognizes users' gestures as well as paper documents on the table.

Bricks (1995): Flock of Birds Magnetic Tracker.

Fitzmaurice, Buxton, and Ishii's "Bricks" [12] are perhaps the first published example of tabletop tangible interfaces, then called 'graspable' interfaces, providing physical objects as 'handles' for manipulating digital graphics projected on a tabletop. Here, a Polhemus "flock of birds" 6D magnetic tracking system [37] was used to track users' movements of two graspable objects on a table. These objects were used as handles to virtual objects which were rear-projected onto the surface of the table. The handles allowed the user to move the projection by repositioning the objects on the table. This system suffered limitations because it included only two tracked physical objects (though capable of accommodating more objects, the Flock of Birds system was then prohibitively expensive), and because the objects were tethered (connected with wires to the computer), making it somewhat awkward to manipulate them, especially if more objects were added.

metaDESK (1997): IR Vision

The metaDESK [51] system extended ideas introduced by the Bricks system, moving from generic physical handles for information to specific physical instantiations -- "phicons" or "physical icons" -- of data in tabletop interfaces. In this case, two or three physical models of buildings were tracked on a tabletop by an infrared camera under the table. These models were associated with specific buildings on a map rear-projected on the table. Users could manipulate these models to pan, rotate, or zoom the map, and the system would then update the graphical projection on the table, as well as the graphical output on a movable 'magic lens' display.

Urp (1999): Advanced Vision Tagging

The Urp [52] system used advanced computer vision tagging techniques, based on creating unique patterns of reflective colored dots, to simultaneously track the position and orientation of multiple physical objects on a table. Though the technique of tagging objects optically for computer vision tracking was highly advanced for the time, the system still suffered from classic limitations inherent in computer vision systems, such as slow tracking speed, jitter, large size of objects (limited by the size of the reflective dots) and occlusion by users' hands.

Figure 3.2 Bricks uses a Flock-Of-Birds 6DoF tracker and a rear-projected desktop to allow users to manipulate graphics with the tracked objects. A "paintbucket" at the top of the desk allows the user to change modes by "dipping" a brick into one of the wells.

Figure 3.4 The metaDESK system, including two phicons and several lenses to provide additional views of objects on the map.

Figure 3.5 The Urp system uses vision tracking and special reflectors to locate models of buildings on the table. A digital shadow of the building is then projected around the model.

Figure 3.6 Sensetable 1.0. Wacom Intuos Wireless Mice were used inside the pucks, with dials on top that could be used to manipulate variables associated with each parameter in the application.

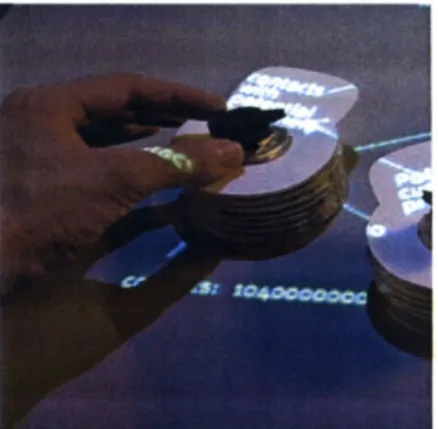

Sensetable (2001): Electromagnetic Tagging

Sensetable 1.0: Wacom Tablets

The advent of commercial electromagnetic tracking technologies provided new levels of functionality and robustness for tabletop interfaces. James Patten's MS thesis, Sensetable, was one of the first systems to take advantage of these technologies and bring new levels of flexibility to tabletop tangible interfaces. The first Sensetable system used Wacom Intuos™ tablets and hacked versions of wireless Wacom computer mice as the technology built into "pucks" on the tabletop. The Wacom system, then state of the art, provided high-precision, extremely fast tracking of two objects on one tablet. Sensetable's designers (at the time, Patten and I) added circuitry to the mice that allowed the system to track twice as many objects with only slightly increased latency. Since the system used radio frequency signals transmitted inductively between the pucks and antennas in the tablet, it suffered none of the stability problems present in computer vision systems. This fast and robust tracking provided the ability to introduce interactions based on users' real-time gestures with pucks on the table. In addition to speed, accuracy, and robustness improvements, the Wacom system provided sensing for rotation of the puck, three separate button presses and the manipulation of a potentiometer (a dial on top of each puck). Sensetable's designers used this additional functionality to develop new interaction techniques, such as the use of physical modifiers (tokens) that could be added to the pucks to change their state, and the inclusion of dials on top of the pucks that allowed users to manipulate variables associated with the pucks.

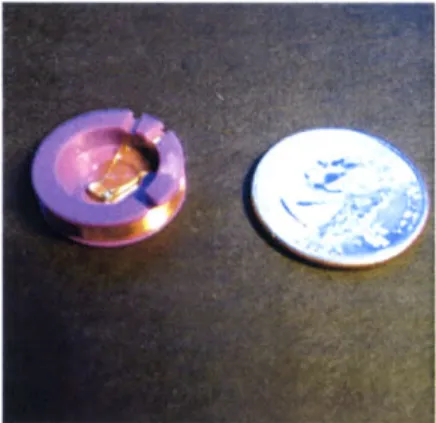

Sensetable 1.5: Zowie

In 2000, Zowie Intertainment™, which later became part of the LEGO Group, developed a new multi-object tracking technology licensed from a patent held by the Scientific Generics corporation [15]. This technology was developed and cost-reduced enough that it could be built into an affordable toy playset for use with a PC to play interactive games. The playset consisted of movable figurines on a flat plastic surface, which children could move while viewing interactive graphics on a computer monitor. The tracking technology included passive tags made of LC "tank" circuits, and a flexible antenna array built into the flat surface of the playset. The system measures the amplitude of the tag resonances with several specially shaped antennas. The amplitude of the tag's resonance with each antenna varies as a function of its position on top of the antenna array. This method gives very stable 2D position data, accurate to within 2 millimeters, for up to nine different tags, with a refresh interval as short as 10 milliseconds. Since each tag on the table resonates at a different frequency, their positions can be determined independently.
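Because this method reports a position for each tag and nothing else, a puck's orientation has to be derived from positions, for example from two tags mounted at opposite ends of a puck, which is the arrangement Sensetable 1.5 used. The following is a minimal sketch of that geometry, not the Sensetable implementation: the type and function names are hypothetical, the convention that heading points from the back tag to the front tag is an assumption, and the 40 mm tag separation in the usage example is an illustrative value. Only the 2 mm position accuracy comes from the text.

```python
import math
from typing import NamedTuple, Tuple


class TagReading(NamedTuple):
    """One tag's reported position on the table, in millimeters."""

    x_mm: float
    y_mm: float


def puck_pose(front: TagReading, back: TagReading) -> Tuple[float, float, float]:
    """Return (center_x, center_y, heading_degrees) for a two-tag puck.

    Heading is the direction from the back tag to the front tag, measured
    counterclockwise from the +x axis and wrapped into [0, 360).
    """
    center_x = (front.x_mm + back.x_mm) / 2.0
    center_y = (front.y_mm + back.y_mm) / 2.0
    heading = math.degrees(
        math.atan2(front.y_mm - back.y_mm, front.x_mm - back.x_mm)
    )
    return center_x, center_y, heading % 360.0


def worst_case_heading_error_deg(separation_mm: float,
                                 position_error_mm: float = 2.0) -> float:
    """Bound the heading error when both tags are off by position_error_mm.

    The worst case has the two tags displaced in opposite directions,
    perpendicular to the line joining them.
    """
    return math.degrees(math.atan2(2.0 * position_error_mm, separation_mm))


if __name__ == "__main__":
    pose = puck_pose(TagReading(120.0, 80.0), TagReading(120.0, 40.0))
    print("center and heading:", pose)
    # Hypothetical 40 mm separation between the two tags on one puck.
    print("heading error bound (deg):", worst_case_heading_error_deg(40.0))
```

The error bound shows why the tags sit at opposite ends of the puck: for a fixed position accuracy, the heading estimate becomes more precise as the separation between the two tags grows.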

Unlike the Wacom tablet system, Zowie technology is not capable of sensing rotation of these tags. This tracking technology was later modified and used by Patten in final prototypes of the Sensetable 1.5 system. Puck rotation was sensed using two LC tags at opposite ends of a puck.

Sensetable 2: Capacitive Sensing

Also included in development plans for the Sensetable system was the design of a new custom tracking platform based on capacitive transfer of radio frequency signals between pucks and the table. This system would provide a hardware infrastructure for tracking as many objects as could fit on the table, and for sensing up to five modifiers and three analog input devices, such as dials, as well as capacitively sensing users' touch. A prototype of this system was designed by Media Lab graduate Matt Reynolds in the fall of 2000, but was never fully implemented by Patten and myself due to the superior flexibility of the Zowie-based tracking technology.

Figure 3.7 The Ellie's Enchanted Garden playset, which contains the Zowie tracking technology used in the Sensetable 1.5 system.

Figure 3.8 A Zowie LC radio frequency tag. US quarter shown for size.

Figure 3.9 Sensetable 2.0 capacitive sensing board, designed by Matt Reynolds.

4. TUI Inconsistency and Input Device Design

This chapter describes common problems of consistency among tangible interfaces, especially among the tabletop TUIs described above. As a user interacts with a tabletop tangible interface, inconsistencies between physical and digital states can arise in many ways. Previously, designers have had to design interfaces so that as few of these inconsistencies arise as possible. The long-term goals that inspired this thesis project include a vision for seamless bi-directional interaction with physical objects, which might involve the creation of completely new interaction techniques. However, the problem of consistency alone has long called for an actuation technology to address it in existing tabletop interfaces. In this chapter, I discuss specific examples of such design techniques and introduce new approaches to resolving inconsistencies through actuation.

4.1 TUI Interactions that can lead to Inconsistencies

Remote Collaboration

Tangible interfaces have been used in research on remote collaboration and Computer-Supported Cooperative Work (CSCW) for years [52, 26], but the technical challenge of synchronizing remote physical objects has limited the usefulness of such interfaces in spatially distributed applications. For remote collaboration applications of tabletop TUIs, when there are multiple instantiations of a work table, the physical states of objects on the tables can become inconsistent whenever a user moves an object on one table, but the remote user does not move the corresponding object on the other table. Though the graphical projections on top of the tables can be synchronized, discrepancies between the positions of the multiple sets of physical objects on the tables make it difficult to determine which is the "correct" version to be projected on all workbenches. Even if the system could determine a correct version and project it on the table, users would still have to manually move the objects to the correct positions. Furthermore, the fact that objects can be even temporarily in positions that disagree with corresponding graphical projections tends to break the metaphor that they are in fact two instantiations of the same data item, and the user must mentally accommodate this inconsistency.

Parametric Simulation

A software simulation running in real-time may compute that the digital value associated with a puck on the table has changed. The puck's position or orientation may be inconsistent with the new value of its corresponding software parameter. This can happen if the puck represents a variable dependent on another variable that is adjusted by the user. Without actuation, the computer can only attempt to maintain consistency through graphical projection, either changing the projection to display the change, or perhaps prompting the user to correct this problem by changing the physical state of the object until it is consistent with the digital state.

Constraints

An application may contain pre-programmed constraints on the spatial arrangement of objects on the table, such as zoning laws that apply to the placement of buildings in an urban planning application. Constraints in the simulation can improve functionality in an application, helping to make sure the simulated layout is actually possible in the real world. If the user moves an object to the wrong part of the table, some of these constraints may be violated. Existing systems can provide graphical feedback to let the user know a constraint has been violated, but cannot fix the problem for the user in both the digital and physical representations. Giving the computer the ability to physically move the objects on the table can correct the problem once it has occurred, moving the physical object to a new position that does not violate the constraint. In addition, the use of force feedback can help guide the user away from violating constraints in the first place. If an actuation mechanism has enough force, it could physically inhibit users from moving objects to the wrong place on the table, but even if the system is not strong enough to physically fight a user's movement of an object, it could still draw users' attention to the violated constraint by lightly tugging on the object, or by making it vibrate in the user's hand. In the case of tabletop TUIs, haptic feedback can be more effective than graphical feedback because the user may be looking elsewhere on the table and not at the object in hand, or because the graphical projection may be occluded by the user's hand grasping the object.
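The two responses described above, correcting a violation after it has occurred and lightly tugging on the object while the user holds it, can be sketched as follows. This is a minimal illustration under stated assumptions rather than the control code of any system discussed here: the rectangular keep-out zone, the function names, the centimeter units, and the stiffness constant are all hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Zone:
    """Axis-aligned forbidden rectangle on the table (hypothetical units: cm)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        # Points exactly on the boundary count as valid (outside the zone).
        return self.x_min < x < self.x_max and self.y_min < y < self.y_max


def nearest_valid_position(zone: Zone, x: float, y: float) -> Tuple[float, float]:
    """Return the closest point outside the zone (unchanged if already valid)."""
    if not zone.contains(x, y):
        return (x, y)
    # Distance to each edge, paired with the point reached by exiting there.
    candidates = [
        (x - zone.x_min, (zone.x_min, y)),
        (zone.x_max - x, (zone.x_max, y)),
        (y - zone.y_min, (x, zone.y_min)),
        (zone.y_max - y, (x, zone.y_max)),
    ]
    return min(candidates, key=lambda c: c[0])[1]


def corrective_force(zone: Zone, x: float, y: float,
                     stiffness: float = 0.5) -> Tuple[float, float]:
    """Light 'tug' toward the nearest valid position; zero if no violation."""
    tx, ty = nearest_valid_position(zone, x, y)
    return (stiffness * (tx - x), stiffness * (ty - y))
```

A strong actuator could drive the object all the way to `nearest_valid_position`, while a weaker one could apply only `corrective_force` as a haptic cue.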

Navigation

On tabletop tangible interfaces, applications with graphical navigation features, such as those using a map [51], have introduced fluid interaction techniques based on the rotation, translation, or scaling of the map by moving physical objects on top of it. For example, physical objects on the table, such as models of buildings, can be permanently associated with specific positions on the map. A user can move one of the models, and the map displayed on the table changes to follow the user's rotation or translation of the model.

In the design of the metaDESK system, such map manipulation techniques introduced the question of what happens when there are multiple models of buildings on the same map and a user moves only one model to change the map view. With several models associated with positions on the map, and the displayed map changing to follow the movement of only one model, the physical positions of the other models on the table will no longer be correct on the new map. In the case of only two models, metaDESK's designers chose to "ignore the offending rotation," but did not provide a scheme for determining which was the correct or intended rotation. They speculated as to whether the map should be warped to accommodate all rotations of the models, but felt that such a display might not make sense or be useful to the user. Moreover, it becomes even more difficult to resort to such a solution when more than two physical objects are used in the interface. Other solutions suggested by metaDESK's designers include: 1) accommodating as many of the physical positions of objects on the table as possible while ignoring and highlighting outliers, 2) graphically displaying a suggested "best fit" position for all objects on the table and leaving it to the user to move the objects to their appropriate positions, and 3) providing discrete views of the map in the area immediately surrounding each object (Figure 4.1). As a solution to the case of rotation and zooming by manipulating two objects on the table, the metaDESK designers used a "rotation constraint instrument," consisting of two physical pucks that slide along an acrylic rod (Figure 4.2). The rod constrains the transformations expressible through the two physical objects by ensuring that their rotations always match each other.

Such a solution maintains consistency in the interaction, but becomes increasingly cumbersome as more physical objects are added to the interface. In the original metaDESK system, perhaps the only way of avoiding many large inconsistencies in this type of interaction is to limit the number of physical objects on the table. A computer-controlled system that can simultaneously move multiple objects on the table could easily prevent the rotational consistency dilemma by constantly updating the positions of physical objects on the table, moving them to the correct place as the user moves one or more objects.
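One way to compute where the remaining objects belong is to treat the map view as a 2D similarity transform (rotation, uniform scale, and translation) fixed by two objects the user is holding, and to apply that transform to the map anchor of every other object. The sketch below does this with complex numbers. The function names and the choice of a similarity transform are assumptions made for illustration; they do not describe the metaDESK or Actuated Workbench software.

```python
def similarity_from_two_points(m1, m2, p1, p2):
    """Solve p = a*m + b for complex a and b.

    m1, m2: map anchors of the two held objects (complex x + yj).
    p1, p2: current table positions of those objects (complex x + yj).
    """
    if m1 == m2:
        raise ValueError("the two map anchors must be distinct")
    a = (p1 - p2) / (m1 - m2)  # encodes rotation and uniform scale
    b = p1 - a * m1            # translation
    return a, b


def target_positions(anchors, a, b):
    """Table positions to which the other objects should be moved."""
    return {name: a * m + b for name, m in anchors.items()}


# Two held models define the view; a third model must follow.
a, b = similarity_from_two_points(0 + 0j, 1 + 0j, 2 + 2j, 2 + 4j)
targets = target_positions({"library": 0 + 1j}, a, b)
```

In this example the held objects imply a 90-degree rotation with a scale factor of 2, so an actuation system would move the third model to the table position stored in `targets["library"]`.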

The Nulling Problem

In 1986, Bill Buxton coined this phrase to describe inconsistencies that arise with input devices that are time-multiplexed between multiple parameters in an application [8]. Here, a limited number of input devices ('transducers') are used to control multiple digital parameters, such that there are more parameters than transducers and the transducers must be swapped between parameters. Often these transducers are 'absolute', not 'relative' input devices. That is, they put out a discrete value based on their position, not based on relative changes to their position. Examples of such transducers are physically directional dials and linear sliders (Figure 4.3). The nulling problem occurs when absolute transducers are swapped (multiplexed) between digital parameters, and the physical state of the transducer must be reset by the user to match each parameter's preset digital state before further adjustments can be made. Buxton says of the nulling problem: "It is common, takes time to carry out, time to learn, and is a common source of error. Most importantly, it can be totally eliminated if we simply choose a different transducer."

Figure 4.1 metaDESK alternatives for accommodating multiple rotations of phicons: A) warp to fit; B) flag and ignore outlier; C) best fit with error display; D) discrete views for each phicon.

Figure 4.2 metaDESK's designers created a rotation constraint instrument to prevent inconsistent rotations of multiple phicons.

Figure 4.3 This audio mixing board has both physically directional dials and linear sliders, both examples of absolute transducers.

Figure 4.4 Physical, directional dials on top of pucks in the Sensetable 1.0 system.

Figure 4.5 Perfectly round pucks with projected graphical arrows and slider bars in the Sensetable 2.0 system.

In his master's thesis, Patten encountered a nulling problem in the first prototype of the Sensetable system [34]. Here, as pucks in the system were dynamically bound to different parameters, dials on the pucks (Figure 4.4) would be in positions that did not correspond to the preset digital state of the new parameters. Patten resolved this inconsistency by automatically setting the digital value of the new parameter to the current physical position of the dial on the puck. In his user studies, Patten found that this practice confused and frustrated users, since the puck was "supposed to be a physically manipulable representation of the data, rather than solely a tool for changing simulation parameters." Users wanted the positions of the dials on the pucks to be automatically controlled by the computer. In later versions of the Sensetable system, Patten designed around this problem by using a perfectly round puck as the transducer and mapping relative rotation of the puck to relative changes in the value of its associated parameter. The user received feedback about the current value of the parameter through projection of an arrow or a slider bar next to the puck (Figure 4.5).

Buxton recommends avoiding the nulling problem in exactly this way: by choosing input devices whose physical positions do not correspond absolutely to specific numerical values, and whose relative motion (rotation, etc.) instead corresponds to relative changes in the parameter's digital state. However, Buxton's argument omits a discussion of the benefits of absolute transducers. The fact that a slider has a limited amount of travel, that a dial has a physical pointer on it, and that there are limits to the range and speed of movement of an input device can be of great benefit to interaction. Absolute positioning in input devices provides users with a kinesthetic sense of the current and last input states of the device, as its digital state is a physically persistent quality of the device. Designers such as Snibbe et al. have argued that absolute input transducers allow users to rely on their muscle memory when returning to a particular input device without the need for another sensory input, such as vision, which may be busily engaged with another task, "as we do when operating a radio dial without looking: specific destinations are stationary relative to the device's base" [47].

If the input device is also actuated, the computer can automatically reset the position of a multiplexed input device to the preset digital state of the new parameter to which it is attached. Moreover, in many interactive workbench applications, the absolute position of objects on the table corresponds to a specific value of a parameter, making it difficult to design "relative" controllers such as those recommended by Buxton. Actuation as a solution to the nulling problem allows workbench application designers to retain the functionality of absolute position controllers where they may be more appropriate to the application than relative controllers. Actuated absolute transducers have already become common in audio mixing boards, in which motorized sliders automatically move to preset positions to set the volume on audio tracks which are mixed together. Automating slider movement allows computer control of the audio mix, while also allowing human override or alteration of the audio mix with the same input transducer.

In addition to his use of pucks as relative transducers in the Sensetable platform, Patten has suggested use of actuation in motorized sliders to control variables in the simulation [34]. Here, sliders (motorized linear slide potentiometers) are bound dynamically to parameters in a simulation. As a user binds sliders to new parameters, the computer moves each slider to the appropriate position corresponding with the preset digital state of its new associated variable. Patten's sliders were tethered devices, due to the power requirements of the motors in the sliders. The Actuated Workbench makes it possible for such techniques to be applied to controlling the positions of untethered, unmotorized pucks on a table surface, allowing more flexible design of tabletop interfaces, and providing actuated rotation as well as translation of objects.
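The three strategies discussed in this section (an absolute transducer whose position overwrites the parameter on rebinding, a relative transducer, and an actuated absolute transducer) can be contrasted in a few lines. This is a toy model with hypothetical class and function names, assuming that parameter values and dial positions are both normalized to the range 0 to 1; it is not code from Sensetable or the Actuated Workbench.

```python
class Dial:
    """A physical dial whose pointer position lies in [0, 1]."""

    def __init__(self, position=0.0):
        self.position = position


def bind_absolute(dial, params, name):
    """Rebinding as described for the first Sensetable prototype: the stored
    value is overwritten by whatever position the dial happens to be in."""
    params[name] = dial.position


def bind_actuated(dial, params, name):
    """Actuated rebinding: the computer moves the dial to the stored value,
    so physical and digital states agree and no value is lost."""
    dial.position = params[name]


def turn_relative(params, name, delta):
    """Relative transducer: only changes in rotation affect the value."""
    params[name] = min(1.0, max(0.0, params[name] + delta))


# A dial left at 0.8 by its previous parameter is rebound to "pan" (0.2).
lossy = {"volume": 0.8, "pan": 0.2}
bind_absolute(Dial(0.8), lossy, "pan")      # "pan" is silently changed

kept = {"volume": 0.8, "pan": 0.2}
dial = Dial(0.8)
bind_actuated(dial, kept, "pan")            # the dial moves instead
```

The relative strategy avoids the problem without actuation, but the dial's pointer then no longer indicates the parameter's value, which is the trade-off discussed above.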

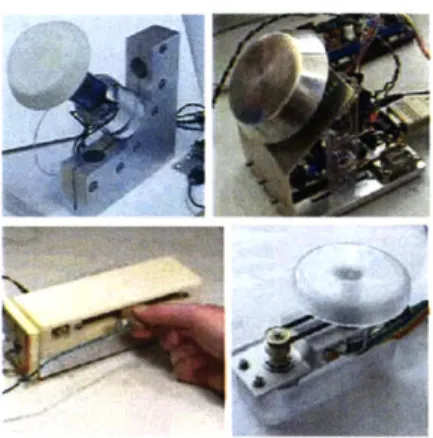

Figure 4.6 Some of Snibbe et al.'s haptic media control devices.

4.2 Haptic Input Devices as Physical Output for Digital Media

The tactile feedback a user receives from the physical shape and motion of a transducer can be very effective for off-loading the cognitive load of monitoring the state of a changing parameter through some other sensory input, such as vision (watching the projection) or audio (monitoring volume). As multiple aspects of visual interfaces compete for a user's visual attention, and aspects of audio interfaces compete for aural attention, maximizing information delivery through a media-independent channel such as touch can reduce sensory noise, increasing accuracy and ease of control. Snibbe et al. have made such arguments in the design of haptic physical devices for navigating digital media [47]. For example, devices such as scroll wheels or even mice have been augmented with actuation to indicate a user's proximity to graphical targets. Snibbe et al. use similar principles to build specialized devices with relative or absolute transducers, designed specifically for certain types of media (Figure 4.6). In addition to the careful selection of input device form-factor and physical behavior, they use "haptic landmarks such as bumps or textures [to] further 'anchor' locations" of digital media on the input device itself. One of Snibbe et al.'s devices maps the absolute position of a slider to the current position in a digital media stream, such as a movie. As the movie advances, the slider slowly moves from one end point to the other, and the user can reposition the current display by grabbing and manipulating the slider, feeling a springy resistance trying to return the slider to its proper position.
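The springy resistance of such a slider can be modeled as a proportional, spring-like force that pulls the slider toward the position implied by the current playback time. The following is a sketch only: the linear time-to-position mapping, the stiffness value, and all names are assumptions, not Snibbe et al.'s implementation.

```python
def slider_target(playback_time, duration):
    """Map the current playback time to a slider position in [0, 1]."""
    if duration <= 0:
        raise ValueError("duration must be positive")
    return min(1.0, max(0.0, playback_time / duration))


def restoring_force(slider_pos, playback_time, duration, stiffness=2.0):
    """Spring-like force on the slider; its sign points from the slider's
    current position toward the playback position."""
    return stiffness * (slider_target(playback_time, duration) - slider_pos)
```

With these assumed values, a user holding the slider at 0.75 while playback is a quarter of the way through would feel a force of -1.0, pulling back toward 0.25.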

The Immersion company has worked with Logitech, a leading producer of computer mice, to develop products that use haptic feedback to enhance interaction with digital media in the traditional GUI environment. The iFeel™ mouse uses a simple partial-rotation motor built into the mouse to provide the user with 'clicks' and other small sensations while navigating over icons in a windows environment. The Wingman Force-Feedback Mouse™ is a mouse attached to two mechanical arms which are attached to linear motors built into a special base underneath the mouse. The Wingman gives strong haptic feedback, as it is capable of physically moving the mouse while it is in the user's hand. However, it has only about a three-inch range of motion and has a rather bulky base underneath the mouse which holds the mechanical arm (Figure 4.7).

Building on research that demonstrated the effectiveness of haptic displays in aiding object selection in 3D virtual environments, researchers involved in a project called the Haptic Workbench [31] used a force feedback device called the PHANToM™ [29] (Figure 4.8) to experiment with mapping abstract data to haptic modalities. They mapped a quantitative attribute of the data to the motion of a PHANToM device in 3D space, attempting to create a "multi-sensory" interface that would allow users to "assimilate information more effectively by mapping different attributes of data to the different human senses" [31]. However, their results did not support the hypothesis that such mappings would reduce cognitive load. They found that instead of decreasing cognitive load by lowering visual sensory overload, haptic displays of abstract information actually increased cognitive load and frustrated users in situations where there was no clear metaphor for mapping the behavior of mathematical data onto the movement of the PHANToM. This amounts to what Buxton has called "cross-modality mapping" [8], in this case meaning that changes in an abstract form of data (temperature) were mapped to the linear motion of the PHANToM pointer in 3D space. Buxton criticizes cross-modality mappings, saying they "require learning and impede achieving optimal human performance". This is especially true in the case of this experiment, since there was no clear metaphorical link between the changing data and the moving physical object.

Actuation Brings Haptic Techniques to Tabletop TUI

The use of actuation in a tabletop tangible interface allows designers to bring many of the benefits of absolute input transducers to the free-form spatial organization inherent in tabletop interfaces. The objects in a tabletop TUI are untethered and usually have no physical boundaries limiting their movement, but such physical boundaries can be simulated with magnetic fields that inhibit or enhance a puck's movement on the table. In addition to recreating the properties of absolute transducers, actuation could introduce haptic response to the behavior of pucks on the table. Such haptic behaviors could allow users to off-load much of the cognitive work associated with visually monitoring graphical feedback about the state of many objects on the table. In the following chapter on applications for the Actuated Workbench I discuss several techniques for introducing these behaviors to tabletop TUIs.

Figure 4.7 The Logitech Wingman™ mouse is a fully haptic device for GUI interaction.

Figure 4.8 The PHANToM™ is a haptic device that uses servo motors and encoders attached to the device's arm to provide six degrees of freedom for haptic input and output.

5. Related Technologies 2: Tabletop Actuation Technologies

Thus far I have tried to present an argument for introducing actuation to tabletop tangible interfaces. For tabletop TUI, actuation constitutes computer-controlled movement of objects on flat surfaces, a subject that has been studied for many years in both the HCI domain and in the realm of industrial mechanics. This chapter charts some of the evolution of 2D actuation from pre-HCI eras through past attempts at providing an actuation solution for interactive computer systems. The next chapter outlines the design criteria I used in developing my own tabletop actuation system for tabletop tangible interfaces.

Mechanical Systems: Robotic Arms

Seek (1970)

A project by Media Lab founder Nicholas Negroponte, Seek [30] used a robotic arm to arrange objects on a table. In the case of Seek, a hamster was placed inside a maze on the table and left to navigate the maze in search of a piece of cheese. A robotic arm would then rearrange the walls of the maze and the hamster would be made to run the maze again. It is said that the robotic arm tended to pick up and crush the hamster about as often as it managed to pick up one of the walls of the maze, but this was probably due to technological limitations at the time.

Talking Robotic Chess (2003)

Excalibur Electronics is scheduled to introduce a new robotic chess set to the consumer market in September 2003. This chess set retails for $499, and contains a large robotic arm that mechanically manipulates pieces on the chess board and a 500-word speaking vocabulary for interaction while playing or teaching a human opponent. The system has been developed "after years of research and a partnership with the University of Hong Kong" [11]. Excalibur's advertisements for the product suggest that the physicality of the robotic arm, combined with the speech interaction, will emulate a human opponent. As a general actuation system, the robotic arm is of course limited to moving one object at a time, but it is encouraging to see the use of sophisticated mechanical manipulation of physical parts in the entertainment industry.

Though an effective and dexterous method for computer control, the use of robotic arms would likely be distracting for interactive workbench systems. Moreover, it would be complicated and expensive to implement the multiple arms that would be required to move multiple objects simultaneously.

Figure 5.1 Talking Robotic Chess set from Excalibur Electronics, available in September 2003.

Figure 5.3 The PMD at NYU is a tabletop tangible interface that maintains constraints on the placement of objects through the computer-controlled movement of motors in the objects.

Mechanical Systems: Motorized Objects

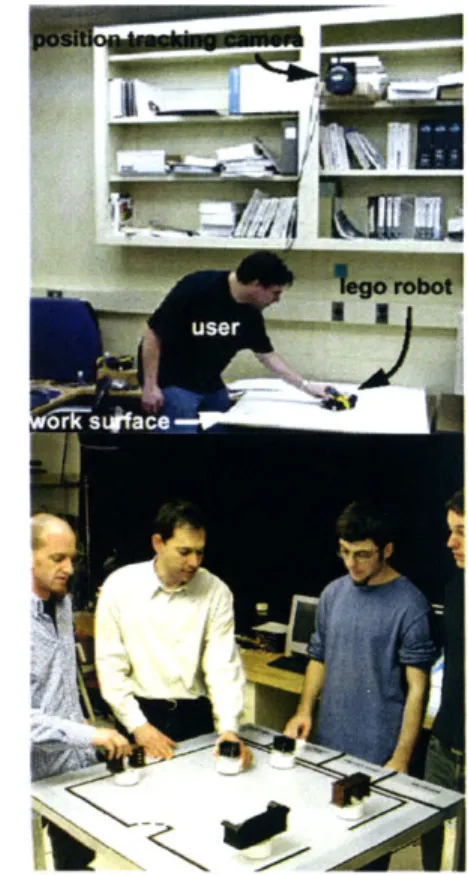

LEGO robots (2001)

Researchers at the National Institute of Standards and Technology addressed the lack of actuation in tabletop tangible interfaces with a project that used motorized LEGO™ robots in a computer-vision tracking system [39]. Their work departs from tabletop tangible interfaces in two ways: 1) The robots they built were rather large compared to the manipulable objects found in most tabletop tangible interfaces. This limits the number of objects that could fit on one tabletop and be easily manipulated by a user's hand. 2) The form factor of the robots did not lend itself to the projection commonly used in tabletop tangible interfaces. Graphical feedback was instead displayed on a computer screen behind the table surface.

Planar Manipulator Display (2003)

A project at NYU, the Planar Manipulator Display [42] uses motorized objects on a table to maintain constraints in a tabletop tangible interface. These objects contain two wheels, motors, batteries, inexpensive microprocessors, and infrared LEDs which they use to send signals between the table and each object. The objects are tracked through the time-multiplexed illumination of the infrared LEDs on each object, which are captured with a lateral-effect photodiode, giving accurate 2D position tracking at rates of about 160 Hz. This system is designed as a scalable interface that maintains computer-controlled constraints on the locations of physical objects on the table. Though the PMD's designers do not use projection on the objects, instead using specific physical representations (their example application moves models of furniture around a room), the system is compatible with projection from above to achieve a generic tabletop tangible interface. The use of motorized objects departs from the design criteria of the Actuated Workbench, listed in Chapter 6, but it is interesting to note the similarity of the PMD interface and its applications to those of existing tabletop tangible interfaces.

Mechanical Systems: Vibration, Belts, and Wheels

Outside the realm of HCI, recent robotics research has focused on the problem of actuation in two dimensions, targeting applications such as part feeding in factories, parcel sorting in distribution warehouses, and luggage sorting in airports.

Universal Planar Manipulator (1999)

The Universal Planar Manipulator (UPM) [40,41] at UC Berkeley uses the horizontal vibration of a rigid flat plate to move multiple objects simultaneously. The UPM system presents an effective way to manipulate many small parts without the need for motors or magnets, and its designers successfully use it in a closed-loop vision-tracking system. Four voice coil actuators simultaneously vibrate a rigid flat plate horizontally. The friction forces, averaged over a complete horizontal vibration of the plate, work together to move specific objects in a particular direction. At each point on the surface of the table there is only one specific integral of vibration, creating one specific coefficient of friction and therefore one type of motion for each object on the table. The UPM offers an advantage over magnetic actuation systems in that the friction forces on the surface of the table can move any type of object placed on the table, without the need to attach magnets to objects or limit moveable objects to ferromagnetic materials. This allows the simultaneous, independent movement of multiple objects on the table (published experiments show the independent movement of about 10 objects at a time).

Several aspects of the UPM's design detract from its usefulness in interactive workbench interfaces. First, the original prototype of the system was only capable of object translations and rotations too slow for HCI: its feed rates were on the order of millimeters per second, although the system was later updated to tens of centimeters per second, on par with the Actuated Workbench. Second, the mechanism for vibrating the surface occupies space around the edges, preventing the easy tiling of multiple surfaces. The UPM's creator, Dan Reznick, has suggested to me that this limitation could be overcome with a different linkage between the driving coils and the surface of the table. Third, the system is noisy due to the mechanism needed to vibrate the flat surface and the sound of the vibrating objects. While not a problem in a factory assembly-line setting, this noise might be distracting for typical HCI applications in office or academic environments. Reznick has told me that in later versions of the UPM, vibration noise has been sufficiently reduced to make the system usable for HCI.

Figure 5.4 The Universal Planar Manipulator at UC Berkeley. Horizontal vibrations of the plate combine to move multiple objects on the surface.

Figure 5.5 The Modular Distributed Manipulator uses orthogonally oriented rollers to rotate and translate objects.

Figure 5.6 Using a variety of wheel movements to achieve part translation and rotation in the MDMS.

Modular Distributed Manipulator System (1997)

Another system, the Modular Distributed Manipulator System (MDMS) [28] consists of an array of orthogonally oriented wheels that support and move objects through combined vector forces created by the rotating wheels. This actuation method presents a clever solution to the problem of friction between objects and the table. Instead of dragging or sliding objects on a continuous flat surface, the system moves objects by rolling them along the tops of the wheels, doing away with the friction between two flat surfaces. Because it is an array-based actuation mechanism, the MDMS is scalable to larger areas, requiring only that more actuators be set up next to the existing array. The MDMS is intended for manipulating large parcels, factory materials, or pieces of luggage in a conveyor belt situation. Creating and controlling similar actuators on the small scale required for the small pucks used in HCI would present many challenges for mechanical design. More significantly, the surface upon which objects rest is neither flat nor continuous (because it is made up of many small wheels), making it unsuitable for the projection often used in tabletop tangible interfaces.

Magnetism: X-Y Plotters and Electromagnets

Claude Shannon's Theseus (1952)

One of the first actuation systems that used magnetism to move an object on a table was Claude Shannon's robotic mouse, Theseus, developed in the 1950s [46]. Here, a robotic mouse was equipped with sensors on its whiskers, enabling it to sense contact with one of the walls of the maze, at which point it would rotate ninety degrees and attempt to move forward again. In this manner the mouse constructed a map of the maze and could then run the maze a second time without hitting a wall. This was perhaps one of the first "learning circuits" of its type, and this type of artificial intelligence was the main thrust of Shannon's research with the project. The actuation mechanism was also a novel introduction: electromagnets were mounted on an XY plotter under the surface of the (aluminum) table, and magnetic forces grabbed the metal mouse in order to move it on the table. Multiple electromagnets were used, allowing the system to rotate the mouse on the table. Theseus seems to be the first documented instance of this XY-plotter and electromagnet actuation system, which was used extensively in later HCI work as well as in commercial toy chess sets.

Fitzmaurice's "Self-Propelled Bricks" (1996)

In his Ph.D. thesis, Fitzmaurice describes a vision for Bricks acting as both physical input and output devices, providing not only "visual or tactile feedback but also position and motion feedback" [13]. He suggests preliminary applications for such technology, such as a desktop "cleanup" function akin to the cleanup command then popular on the Macintosh GUI operating system.

Figure 5.7 Claude Shannon and the Theseus project, a robotic mouse controlled by an electromagnet mounted on an XY plotter under the table.