Experiential Learning under Ambiguity

Thorsten Wahle

Institute of Management and Organization Faculty of Economics

Università della Svizzera italiana

A dissertation submitted to the

Faculty of Economics of the Università della Svizzera italiana in partial fulfillment of the requirements for the degree of

Doctor of Philosophy

under the direction of Prof. Dirk Martignoni

ACKNOWLEDGMENT

I want to thank the Institute of Management and Organization at USI for the supportive and scholarly environment. I am indebted to everyone there for their feedback and friendship. I thank my advisor, Professor Dirk Martignoni, for his guidance. I learned a lot from him in the past five years - especially in being more concise in my writing and efficient in approaching new tasks. I also thank Nikolaus Beck, Claus Rerup and Zur Shapira for agreeing to serve on my dissertation committee.

Further, I am very grateful for the opportunity to visit NYU – my time there has given me countless insights on the research process and truly made me enthusiastic about the prospect of academia. I’m particularly thankful to Professor Filippo Wezel for supporting my application, to Professor Zur Shapira for hosting me and working with me at NYU, and to Professor J.P. Eggers for becoming my co-author. I continue to learn from them and am inspired by their insights and way of working. Through the many interactions with Professor Shapira in particular, I gained much clarity on my research identity, the state of the field and the people involved in it.

Lastly, I want to thank my wife, Sarah Edris, and my parents for their unconditional love, support and advice during these last years!

ACKNOWLEDGMENT ... 2

LIST OF TABLES ... 4

LIST OF FIGURES ... 5

1. INTRODUCTION ... 7

2. THE SOCIAL COMPARISON TRAP ... 10

INTRODUCTION ... 10

LITERATURE REVIEW ... 14

METHOD ... 17

ANALYSIS AND RESULTS ... 22

DISCUSSION ... 31

3. LEARNING FROM OMISSION ERRORS ... 40

INTRODUCTION ... 40

THEORY AND HYPOTHESES ... 44

METHOD AND DATA ... 49

ANALYSIS ... 54

RESULTS ... 55

DISCUSSION ... 61

4. THE BOILED FROG IN ORGANIZATIONAL LEARNING ... 65

INTRODUCTION ... 65

THEORY ... 68

GENTRIFICATION AS ENVIRONMENTAL CHANGE ... 73

METHOD ... 75

RESULTS ... 79

SURVIVAL ANALYSIS ... 85

SIMULATION EXPERIMENT ... 89

DISCUSSION ... 101

5. DISCUSSION ... 105

DISSERTATION SUMMARY ... 105

CONTRIBUTION TO THEORY ... 106

LIMITATIONS AND FUTURE RESEARCH ... 108

6. REFERENCES ... 110

7. APPENDICES ... 121

APPENDIX CHAPTER 2 A ... 121

APPENDIX CHAPTER 2 B - EXPERIMENT ... 125

APPENDIX CHAPTER 2 C ... 141

APPENDIX CHAPTER 3 A ... 144

List of Tables

Table 2-1: Treatment conditions chapter 2 ... 20

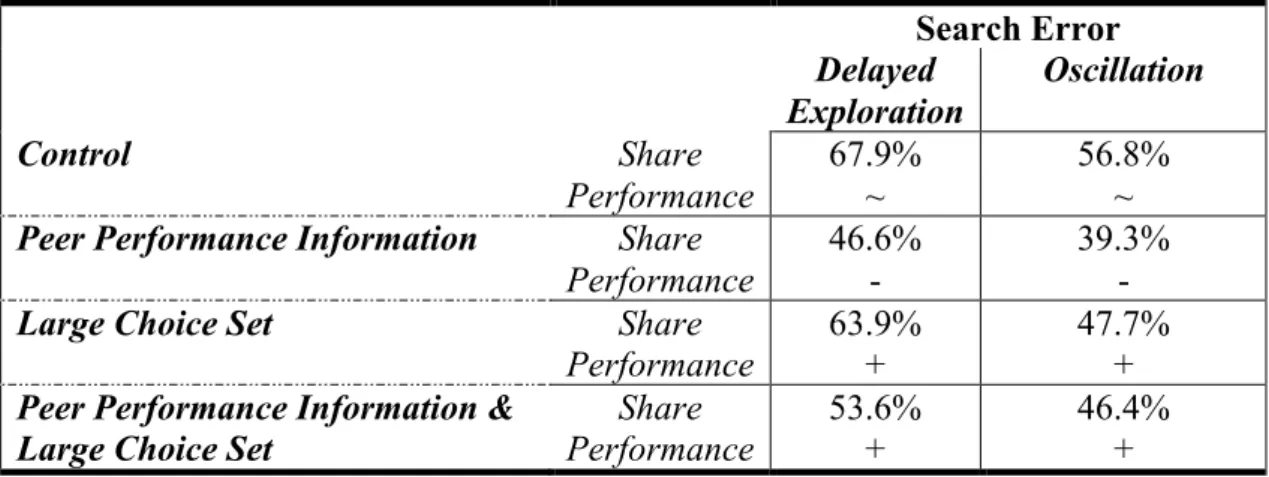

Table 2-2: Performance implications of search errors and percentage of participants committing them ... 28

Table 3-1: Main results (full complexity) ... 55

Table 3-2: Reduced complexity results ... 57

Table 3-3: Split samples based on social aspirations ... 58

Table 3-4: Attention to competitor ... 60

Table 4-1: Gentrification and restaurant lifespan ... 80

Table 4-1a: Gentrification and restaurant lifespan ... 83

Table 4-2: Mediation analysis (lifespan and adaptation) ... 85

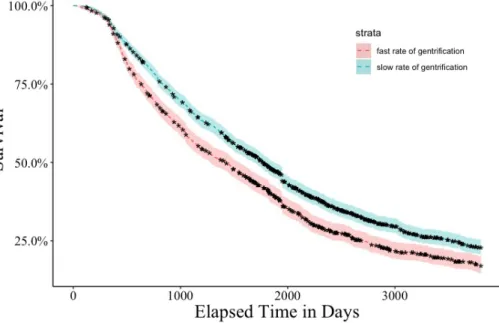

Table 4-3: Significance test between survival rates in Figure 4-7 ... 87

Table 4-4: Mediation analysis ... 89

List of Figures

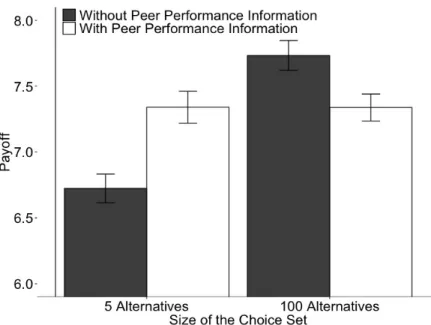

Figure 2-1: Performance effect of social comparisons ... 22

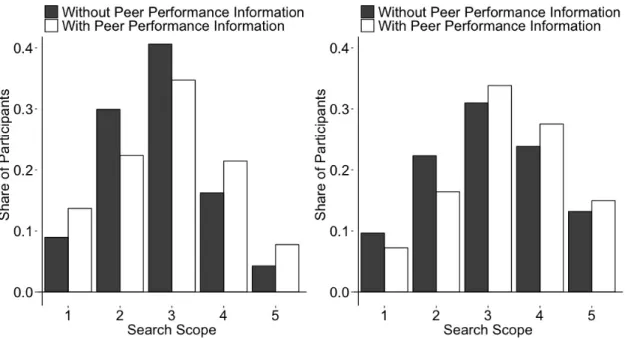

Figure 2-2: Social comparison effects on search behavior ... 23

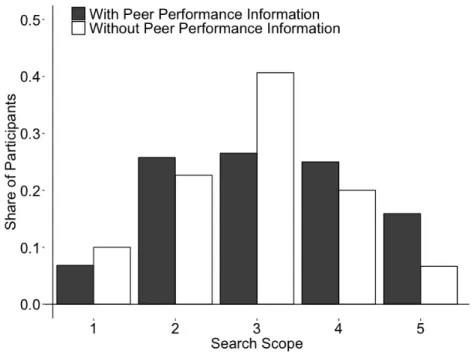

Figure 2-3: Level of exploration for 5 alternatives (left panel) and 100 alternatives (right panel) ... 25

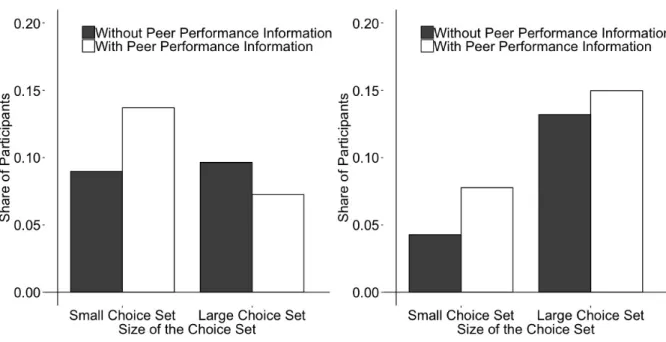

Figure 2-4: Share of participants never searching (left panel) and never stopping to search (right panel) ... 26

Figure 2-5: Share of participants searching beyond the best decision alternative ... 27

Figure 2-6: Level of exploration with a fixed sequence of alternatives ... 30

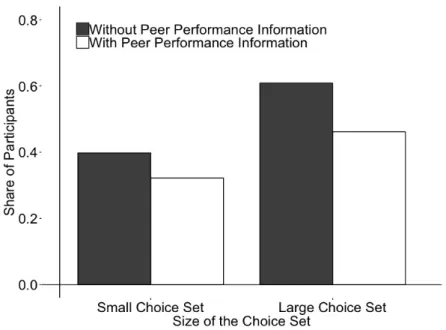

Figure 2-7: ANOVA of performance differences across the four conditions ... 31

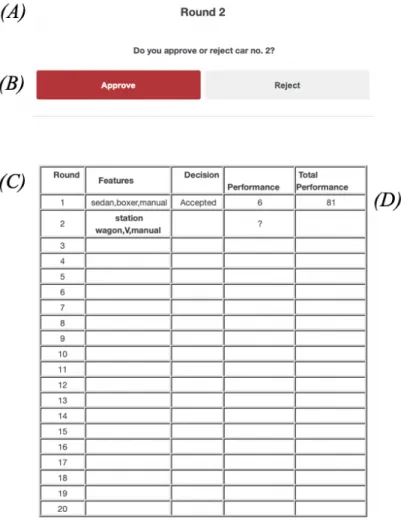

Figure 3-1: Example decision screen ... 52

Figure 4-1: Number of active restaurants over the years ... 76

Figure 4-2: Number of active restaurants across boroughs ... 76

Figure 4-3: Distribution of menu change rates ... 78

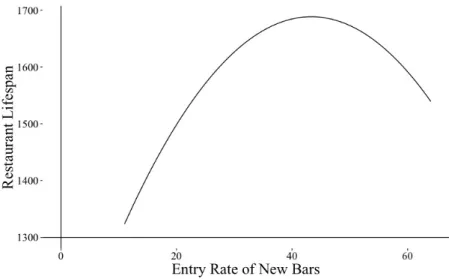

Figure 4-4: Inverted U-shape of the effect of entry rate of bars on restaurant lifespan ... 81

Figure 4-5: Inverted U-shape of the effect of entry rate of cafés on restaurant lifespan ... 81

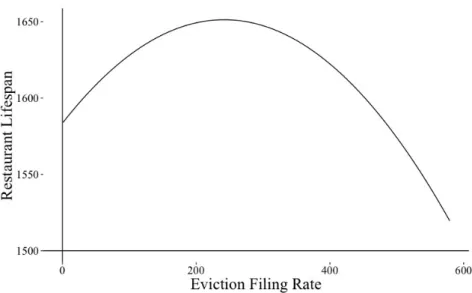

Figure 4-6: Inverted U-shape of the effect of the rate of eviction filings on restaurant lifespan ... 82

Figure 4-7: Differences in survival rates between restaurants exposed to gentrification at fast (strata=0) versus slow rates (strata=1) ... 87

Figure 4-8: Differences in survival rates between restaurants in relatively declining neighborhoods ... 88

Figure 4-9: Sensing accuracy as function of degree of continuous change ... 93

Figure 4-10: Sensing accuracy moderated by sensing threshold ... 94

Figure 4-11: Sensing accuracy moderated by learning rate ... 95

Figure 4-12: Performance implications of different degrees of continuous change ... 96

Figure 4-13: Performance deviation from discontinuity with increasing level of continuous change ... 96

Figure 4-14: Performance comparison with averaging heuristic ... 98

Figure 4-15: Performance comparison with regression heuristic ... 98

Figure 4-16: Performance comparison with minimum heuristic ... 99

Figure 4-17: Performance comparison with averaging-threshold ... 99

Figure 4-18: Performance comparison with minimum-threshold heuristic ... 100

Figure 4-19: Performance comparison with peak-end-threshold heuristic ... 101

Figure 4-20: Performance comparison with peak-end heuristic ... 101

Figure 7-1: Optimal task behavior ... 122

Figure 7-2: Optimal behavior using a 2/3 target heuristic ... 124

Figure 7-3: Instruction screen 1 ... 125

Figure 7-4: Instruction screen 2 ... 126

Figure 7-5: Instruction screen 3 ... 127

Figure 7-6: Decision screen first period ... 128

Figure 7-7: Decision screen mid-task ... 129

Figure 7-8: Attention check ... 130

Figure 7-9: Final task screen ... 131

Figure 7-10: Comprehension questions 1 ... 132

Figure 7-11: Comprehension questions 2 ... 133

Figure 7-12: Additional questions 1 ... 134

Figure 7-13: Additional questions 2 ... 135

Figure 7-15: Changed decision screen treatment 1 ... 137

Figure 7-16: Manipulation check treatment 1 ... 138

Figure 7-17: Changed decision screen treatment 2 ... 139

Figure 7-18: Changed decision screen treatment 3 ... 140

Figure 7-19: Performance comparison between managers and non-managers ... 141

Figure 7-20: Performance comparison between age groups ... 142

Figure 7-21: Performance comparison between levels of education ... 143

Figure 7-22: Performance comparison between levels of income ... 143

Figure 7-23: Instructions omissions experiment ... 145

Figure 7-24: Instructions omissions experiment (cont'd) ... 146

Figure 7-25: Omissions post-experiment screens ... 147

Figure 7-26: Omissions post-experiment questionnaire ... 149

Figure 7-27: Omissions treatment information – competitor treatment ... 150

1. INTRODUCTION

Learning from experience is crucial for adaptation and for improving performance. Experiential learning takes place by making choices, observing the outcomes, and altering future choices accordingly (Levitt and March 1988, March and Olsen 1975). Over time, such learning is expected to improve choices and outcomes (Lant and Mezias 1992), but only when decision makers can classify outcomes as either successes or failures. This dichotomous outcome has clear behavioral implications - change after failure or persist after success (Greve 2002).

Such classification may be complicated when experience is ambiguous, i.e. a choice may not always produce an outcome that can be readily classified as success or failure (Rerup 2006). In particular, outcomes are ambiguous when they allow for classification into more than one category (Repenning and Sterman 2002). Prior literature has examined how learning from ambiguous outcomes may occur through attention and mindfulness (Levinthal and Rerup 2006). However, different choice contexts are likely to differ in the type of ambiguous experience they create (Weick and Sutcliffe 2006). It is therefore important to understand when learning under ambiguity is possible. Such choice contexts include situations when (1) decision makers do not have enough prior experience to determine a sensible threshold between success and failure (i.e. they do not have enough data to formulate historical aspirations), (2) choices do not produce observable outcomes, or (3) it is unclear if the decision maker’s choice or external factors are causing the observed outcome. In this dissertation, I aim to study these impediments to experiential learning, particularly when decision makers perceive outcomes as ambiguous.

I explore three choice contexts of learning under ambiguity using experiments and a large dataset on restaurants in New York affected by gentrification as well as short simulation experiments.

In chapter 2 (study 1, co-authored with João Duarte and Dirk Martignoni) we aim to identify when social comparison improves or reduces search efficacy in contexts of insufficient prior experience. Social comparison is common in organizations and takes the form of pay transparency, ratings and rankings, among others.

In our study, we seek to enhance our understanding of the implications of this kind of information for search and, in turn, performance. Using a series of online experiments, we identify conditions under which peer performance information may have a positive or negative effect on performance. We identify the number of potential choice alternatives as a novel moderator affecting the performance implications of peer performance information. Our study also sheds new light on the mechanism through which peer performance information may affect (search) performance: positive performance effects only arise if peer performance information suppresses rather than induces search.

In chapter 3 (study 2, co-authored with J.P. Eggers) we turn to examining choices which do not produce observable outcomes. Specifically, we are interested in learning from omission errors. Within organizations, the ability to learn from mistakes in general is central to performance improvement and adaptation. But different types of errors importantly produce different levels of observable feedback – commission errors generally produce direct feedback, but omission errors often do not. Thus, in order for managers to learn from omissions they must have the ability to know the outcomes of choices not pursued. One key source of such information comes from observing competitors, but attending to competitors may in itself affect learning and adaptation. In a series of experiments, we study whether decision makers learn from feedback provided by observing competitors. We argue and find that decision makers’ ability to learn from their decision errors depends on their position relative to the competitor. Specifically, leaders tend to learn from their omission errors because they actually pay attention to competitor decisions, but laggards tend to learn only from commission errors. This has implications for our understanding of learning from failure and organizations’ attempts to learn from competitors.

In chapter 4 (study 3, co-authored with Zur Shapira) we explore how learning is inhibited by noisy feedback on outcomes and, specifically, how the pace at which the environment is changing impacts organizational adaptation and survival. Such change is often viewed as discontinuous. We introduce the concept of continuous radical change, by which old beliefs are rendered fallacious at an incremental pace. We theorize that incremental change can be as harmful as discontinuous change because it can remain undetected for too long by incumbent organizations. Specifically, we argue for a curvilinear relationship between the pace of environmental change and organizational lifespan. We test our predictions using data from some 31,000 restaurants in the New York City metropolitan area between 2007 and 2018. We use a fixed effects regression model to test for differences in the lifespan of restaurants located in areas of slow but radical gentrification versus those located in areas with accelerated and radical gentrification. We find support for an inverted U-shape between the pace of gentrification and restaurant lifespan. We explore whether this relationship is driven by differences in restaurants’ adaptation behavior.

Overall, my dissertation makes two main contributions that distinguish it from prior research. Theoretically, I identify choice contexts that produce ambiguous outcomes, and discuss under which conditions decision makers should attempt to learn from such outcomes. Empirically, I develop a novel experimental design to measure search and learning. I complement the experiments with a unique dataset of gentrification and restaurants in New York that allows for the study of exogenous environmental change.

2. THE SOCIAL COMPARISON TRAP

Introduction

Social comparison among employees is common across all kinds of organizations (Adams 1963, Festinger 1954, Nickerson and Zenger 2008). Social comparisons often arise naturally whenever employees can observe their peers’ performance (Kacperczyk et al. 2015, Nickerson and Zenger 2008). At the same time, organizations may also seek to promote social comparisons through, for example, providing their employees with performance rankings and ratings (Greve 2003b, Greve and Gaba 2017). Manipulating the availability of this peer performance information is an important aspect in designing the “social architecture” of organizations (Lee and Puranam 2017, Nickerson and Zenger 2008). In so doing, organizations hope to improve the efficacy of their employees’ search processes (Baumann et al. 2018, Greve and Gaba 2017). Most importantly, providing information about better performing peers may trigger search, thereby helping to overcome problems of inertia and overexploitation (Chen and Miller 2007, Greve 2003a, Greve and Gaba 2017).

Though intuitively appealing, empirical research has produced mixed findings on the performance implications of social comparisons. Some studies point to positive performance effects (Blanes i Vidal and Nossol 2011, Stark and Hyll 2011) while others find negative performance effects of social comparisons (Nickerson and Zenger 2008, Obloj and Zenger 2017, Pfeffer and Langton 1993). Together, these findings suggest that the link between social comparison and performance outcomes is under-theorized, in particular that potential moderating effects are neglected.

In our study, we seek to refine our understanding of how and when social (performance) comparisons may affect the efficacy of search processes and, in turn, performance.¹ Specifically, we conducted a series of repeated-choice experiments in which we manipulated the availability of information about how peers performed in these experiments. We find that social comparison may improve or hamper the efficacy of search, depending on the type of search problem decision makers face. Interestingly, and in contradiction to previous arguments (Chatterjee et al. 2003, Greve 2003b), positive effects of social comparison arise if the availability of peer performance information suppresses (rather than spurs) search and exploration. Negative effects, in contrast, arise if peer performance information induces exploration, specifically in contexts where overexploitation seems to be the key problem, i.e. when decision makers face a multitude of decision alternatives. In sum, in refining and extending the scope of existing search-based theories, we seek to explain both the bright and the dark sides of social comparison. We identify boundary conditions for the positive and negative effects of social comparison (induced through peer performance information) in terms of the type of problem searched and, in particular, the number of decision alternatives.

Our study contributes to the existing literature in several ways. First, we refine existing search-based theories to explain the emergence of both positive and negative effects of social comparison and identify boundary conditions for these opposing findings. While we are not the first to point to both positive and negative effects of social comparison, our theory explains both positive and negative effects within one theoretical framework, without resorting to additional mechanisms such as envy (Mui 1995, Nickerson and Zenger 2008), effort (Blanes i Vidal and Nossol 2011, Levine 1993, Stark and Hyll 2011), or motivation (Gubler et al. 2006). For example, while it is often argued that social comparisons may have positive effects on search, they may also lead to envy among employees (Nickerson and Zenger 2008, Van de Ven 2017) and other asocial behavior (Charness et al. 2013, Chan et al. 2014) as well as a reduced willingness to cooperate (Colella et al. 2007, Dunn et al. 2012, Nickerson and Zenger 2008). As a result, the overall effect of social comparisons may be negative. In our study, we point to the fact that even if we focus on the implications for search, social comparisons may have positive or negative implications, depending on the size of the choice set decision makers face. When facing a large choice set of many alternatives, decision makers are tempted to search for too long in their attempts to explore at least some share of the set of available alternatives. Such attempts require searching more choices compared to smaller choice sets (i.e. fewer decision alternatives). As a result, they may search for too long, as the optimal stopping point for search is only a function of the time horizon rather than the size of the choice set. Thus, when facing a large choice set and provided with peer performance information, decision makers are lured into searching for too long.

¹ In our study, any differential effects of social comparison on performance are driven entirely by differences in

These findings also contribute to social aspiration theory (Cyert and March 1963, Greve 1998, Greve 2003b, March 1994) and stretch goal theories (Hamel and Prahalad 1993, Latham 2004, Kerr and Landauer 2004, Sitkin et al. 2011), intellectual offspring of social comparison theory. According to these theories, the benefits of social comparisons stem from the fact that observing better performing peers induces change (Audia et al. 2000, Lant 1992, Miller and Chen 1994), exploration (Baum and Dahlin 2007), and risk taking (Bromiley 1991). After having observed better-performing peers, the status quo appears no longer satisfactory (Simon 1956) and (problemistic) search processes are triggered. Our study points to a novel mechanism of how social comparison may affect search processes. Peer performance information may serve as a substitute for exploration and may help organizations put into perspective the performance of alternatives they have already tried and know. Thus, social comparison may both trigger exploration when performance can improve and suppress exploration when performance is satisfactory. It can only do so, however, when decision alternatives are few. When decision alternatives are many, observing high peer performance may spur exploration when performance can improve but fail to curb exploration when performance is satisfactory.

Second, we discuss a novel mechanism by which social comparison affects performance outcomes. Prior research in the tradition of the behavioral theory of the firm highlights the role of social comparison as a means to overcome problems of inertia and under-exploration (Audia et al. 2000, Rhee and Kim 2014). Accordingly, social comparisons are thought to mitigate these issues by inducing search (Chen and Miller 2007, Greve 2003a, Greve and Gaba 2017). Our study points to a novel mechanism through which social comparisons may improve the efficacy of search processes - not by inducing but by suppressing search. If peer performance information is not available, decision makers tend to search for some time to determine what might constitute a satisfactory performance outcome. Imagine a situation in which a decision maker runs into the best alternative on her first try. Without any peer performance information (or information about the distribution of the other alternatives’ values), she has to continue searching other alternatives in order to establish the superiority of this first alternative. Now, imagine a situation in which peer performance information is available. In that case, it is much easier to recognize her first choice as satisfactory, and thus she can avoid the (opportunity) costs of further exploration. In more abstract terms, in searching among a set of alternatives, decision makers seek to acquire two different kinds of information: first, information about what may constitute a better alternative; second, information about the distribution of performance and what may constitute a satisfactory level of performance. Peer performance information is particularly helpful in overcoming the second information problem.
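The thought experiment above can be made concrete with a small sketch. It is an illustration only, not the procedure used in the experiments: the payoff values (apart from the $121 million benchmark quoted later in the Method section), the function name `total_payoff`, and the assumed default of three exploration periods without a benchmark are all hypothetical.

```python
def total_payoff(draws, periods=5, benchmark=None, default_explore=3):
    """Total payoff over `periods` for one decision maker.

    draws: hidden values in the order they would be revealed by exploration.
    benchmark: peer performance information (None if unavailable).
    default_explore: ASSUMED number of exploration periods a decision maker
        uses when she has no benchmark to judge outcomes against.
    """
    best = None
    total = 0
    exploring = True
    for period in range(periods):
        if exploring and period < len(draws):
            value = draws[period]            # explore: reveal a new alternative
            best = value if best is None else max(best, value)
            total += value
            if benchmark is not None and best >= benchmark:
                exploring = False            # benchmark reached: stop searching
            elif period + 1 >= default_explore:
                exploring = False            # assumed search budget exhausted
        else:
            total += best                    # exploit the best known alternative
    return total


# Hypothetical values; the best alternative (121) is found on the first try.
draws = [121, 81, 41, 101, 61]
without_info = total_payoff(draws)                # keeps exploring: 485
with_info = total_payoff(draws, benchmark=121)    # stops immediately: 605
```

In this sketch the difference of 120 is the opportunity cost of the two exploration periods that the benchmark makes unnecessary, which corresponds to the second information problem described above.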

Lastly, we contribute to the organization design literature (Puranam et al. 2012, Nickerson and Zenger 2008). In recent years, new forms of organizations have emerged, in particular meta-organizations that are characterized by open boundaries (Gulati et al. 2012). Likewise, organizations face pressure to become more open, granting employees greater participation and transparency, for example through pay transparency (Gartenberg and Wulf 2017). For employees, this new openness means both more opportunities to engage in social comparison (because outcomes of peers are now more available) and more decision alternatives to choose from (because employees are involved at a higher level of problem solving). Our study considers the co-existence of social comparison and a large number of decision alternatives. Our findings suggest that while social comparison may in theory be beneficial to decision makers, in open organizations with many decision alternatives, it may lead to excessive search for new solutions, thus ultimately hurting performance.

The remainder of our study is structured as follows. We first review the theoretical building blocks of our core argument. We then discuss the design of our experiment, followed by the presentation of our results. We conclude by discussing the implications of our results for existing research.

Literature Review

Social comparison in organizations

Social comparison is a ubiquitous phenomenon in social life (Festinger 1954). Social comparison is commonly understood as comparing one’s own performance to the performance of a peer (Obloj and Zenger 2017). In organizations, employees often compare their performance to that of their colleagues (Nickerson and Zenger 2008).² Organizations may seek to encourage social comparison by making peer performance information available (Nickerson and Zenger 2008, Kacperczyk et al. 2015, Gartenberg and Wulf 2017). For example, within (and across) banks, there is often complete transparency about how fellow fund managers and traders performed (Castellaneta et al. 2017, Kacperczyk et al. 2015, Uribe 2017); call center agents are made very well aware of their performance compared to their peers (Neckermann et al. 2009); in many firms such as GE, rankings and ratings have become an important part of their culture (Short and Palmer 2003). Finally, many firms have adopted policies of pay transparency (with pay as a proxy for performance) (Gartenberg and Wulf 2017).

² However, social comparison is not limited to outcome comparisons among individuals (Festinger 1954, Nickerson and Zenger 2008). Business units compare themselves to other business units (Baumann et al. 2018, Birkinshaw and Lingblad 2005, Galunic and Eisenhardt 1996) and organizations compare their performance against other organizations (Greve 1998, Greve 2003b, Joseph and Gaba 2015).

While all these means provide more or less accurate information about how peers performed, they often provide far less information about how they achieved this performance, i.e., their peers’ behavior and actions. This makes social comparison different from imitation and isomorphism, which require observing not only peers’ performance but also their behavior (Mizruchi and Fein 1999).

Performance Effects of Social Comparisons

Organizations often encourage social comparisons, hoping that such comparisons improve performance (Lee and Puranam 2017, Nickerson and Zenger 2008). As a consequence of social comparison, lower performing employees will realize that they could improve their performance (Festinger 1954) and thus, start searching for new solutions (Jordan and Audia 2012, Simon 1947). This follows the logic of problemistic search (Cyert and March 1963): Decision makers first engage in social comparison and take their peer’s performance as a performance target. They then observe the outcome of their current solution and evaluate it against this target. When they fall short of reaching the performance target, they search for new solutions to improve performance. Exceeding the target, on the other hand, leads to reduced search efforts as performance is deemed satisfactory and opportunity costs would arise from continued search (Levinthal and March 1993, March 1991). Since organizations are often thought to be inert, i.e., their members search too little (Audia et al. 2000, Rhee and Kim 2014), social comparison is assumed to help overcome inertia by prompting individuals in organizations to search more.

While this argument is intuitively appealing, several empirical studies point to negative performance implications of social comparison. Some find that social comparisons (encouraged by pay transparency or tournaments) may have negative performance implications (Pfeffer and Langton 1993, Obloj and Zenger 2017). This finding is confirmed by both experimental (Gino and Pierce 2009, 2010, Dunn et al. 2012) and anecdotal evidence (Nickerson and Zenger 2008).

In sum, the mixed theoretical and empirical evidence on the implications of social comparisons suggests that the link between social comparison and performance may still be under-theorized and that important moderating effects may be neglected. Below, we discuss one important moderator variable – the number of decision alternatives a decision maker faces.

The number of decision alternatives

The number of alternatives is an important characteristic of any decision problem (Simon 1955). Decision-makers face problems with varying numbers of decision alternatives (Payne 1976). Some decision problems are characterized by few decision alternatives. Take, for example, the procurement decision of an airline. When it comes to the decision from whom to buy a new wide-body airplane, there are often only two choices – either Airbus or Boeing. For other procurement decisions, there are many more decision alternatives. For example, for its choice of supplier for aircraft seats, an airline can choose from more than 100 different manufacturers.

Organizations may further seek to manipulate the size of their employees’ choice set as one element of designing an organization’s “social architecture” (Nickerson and Zenger 2004). For example, organizations may seek to increase their set of choices by engaging in open innovation (Terwiesch and Yi 2008) and crowdsourcing (Dahlander et al. 2018). Organizational rules and practices, in contrast, often reduce the size of the choice set (Cohen 1991). As a result, organizational structures “consider only limited decision alternatives” (Cyert and March 1963, p. 35).

Recently, the number of decision alternatives a decision maker within an organization faces has increased as a consequence of greater organizational openness (Alexy et al. 2017, West 2003). This is apparent in new forms of organizations like meta-organizations characterized by open boundaries (Gulati et al. 2012). In such organizations, openness means a greater involvement of employees at a higher level of problem solving in the organization (Mack and Szulanski 2017). An organization solves big problems with many alternative solutions by dividing problems into smaller sub-problems with fewer alternative solutions that can be solved at a lower hierarchy level (Nickerson and Zenger 2004). In more open organizations, where employees are involved in the problem-solving process at a higher level, this means that employees search through a greater number of decision alternatives. It is thus the same force that drives social comparison (because open organizations are inherently more transparent) that increases the number of decision alternatives.

Method

Experimental framing

In our experiments, participants faced a simple repeated (five periods) choice problem with a set of five alternatives with different values. These values were not known to the participants. Only by choosing a particular alternative could they learn about its value: in each period, after they had chosen a particular alternative, its value was revealed. The value of the alternative was drawn at random from a set of five values. In the first period, participants had to explore one alternative at random since they had no prior information on the value of any alternative ahead of the task. From period two to period five, participants could either choose an alternative whose value was known to them because they had explored it in a past period or explore an alternative of unknown value. Feedback on the values was given without delay or any deception, i.e., the value revealed corresponded to the alternative’s true value.³ We framed the experiment as a managerial choice task in which the participants (in the role of the manager) had to maximize their payoffs in US dollars ($).
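The structure of this task can be sketched as a short simulation. The sketch rests on several assumptions that are not taken from the experiment itself: the payoff values in `VALUES` are hypothetical (only the $121 million maximum is mentioned in the treatment text), values are assumed to be drawn without replacement, and the simulated participant follows a simple "explore for the first k periods, then exploit the best alternative found" rule.

```python
import random

# HYPOTHETICAL payoffs in $ million; only the maximum of 121 appears in the text.
VALUES = [41, 61, 81, 101, 121]
PERIODS = 5


def run_task(n_explore, rng, values=VALUES, periods=PERIODS):
    """Play one task and return the list of per-period payoffs.

    The participant explores a new alternative in each of the first
    `n_explore` periods and exploits the best known alternative afterwards.
    Period 1 is always exploration, since nothing is known at the start.
    """
    n_explore = max(1, min(n_explore, periods, len(values)))
    hidden = list(values)
    rng.shuffle(hidden)              # ASSUMPTION: draws without replacement
    best_known = None
    payoffs = []
    for period in range(1, periods + 1):
        if period <= n_explore:
            value = hidden.pop()     # explore: the value is revealed at once
            best_known = value if best_known is None else max(best_known, value)
            payoffs.append(value)
        else:
            payoffs.append(best_known)   # exploit the best alternative so far
    return payoffs


def mean_total_payoff(n_explore, trials=20000, seed=0):
    """Average total payoff of the 'explore n_explore periods' rule."""
    rng = random.Random(seed)
    return sum(sum(run_task(n_explore, rng)) for _ in range(trials)) / trials
```

Calling `mean_total_payoff(k)` for k from 1 to 5 gives a rough sense of how the number of exploration periods trades off against the periods left to exploit, under the assumptions stated above.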

Treatment 1 (“Peer Performance Information”). First, to allow for social comparison, we provided participants with information about how a peer performed in the very same task (“Treatment 1: Peer Performance Information”): “A consulting study concludes that, with the right strategy, your company’s profits could be as high as $121 million a year”. We deliberately chose to frame it not as peer performance information (“Your best performing competitor generated profits of $121 million in his best year”) for several reasons. Our pre-tests indicated that participants often continued searching for even better alternatives even after they had discovered what we told them to be the highest value achievable in this task (here, $121 million). Likely reasons for this type of behavior are, first, that participants assumed that their peer’s performance was a function of both their choices and effort, i.e. they would have continued searching because they thought they could do even better than their peer. Second, participants may have assumed that if several people simultaneously chose the very same alternative, its value or payoff would have to be split between them. To avoid these confounding effects and isolate the informational effect of peer performance information, we presented the treatment information in a neutral way that would not invoke any feelings of rivalry (Kilduff et al. 2010).

³ In each period (after the first period), participants had to decide whether to explore a new alternative of unknown value or exploit an alternative of known value. To avoid confounding effects of, for example, memory constraints, we narrowed down the actual choices participants had to make to a choice between “exploiting the best alternative explored so far” and “exploring an alternative never explored so far”. With such a setup, we avoid that participants exploit inefficiently (i.e. they do not exploit the best-explored alternative) or re-explore alternatives that they had already explored in the past. In pre-tests of this experiment, we also tested a setup in which the choice was between 5 alternatives in each period, i.e. 5 alternatives were shown in a line and one of them would have to be picked. This led to more random choices of the participants, but had no effect on our findings.

Treatment 2 (“Large Choice Set”).

In this treatment group, participants faced a much larger choice set, i.e., 100 rather than just five alternatives. We adapted the instructions accordingly (“You will have to […] choose among 100 different strategies”). This information was reinforced in every period, as participants were informed that “You have 100 [99, 98, 97, 96] alternatives left to explore”. Every time a new alternative was searched, its payoff was drawn at random from a set of 100 alternatives. Since our participants only ever had five periods to search within their choice set, they could never search exhaustively. Thus, in our experiment, we only indicate that they face a larger choice set without changing the experimental task they face.

Treatment 3 (“Peer Performance Information & Large Choice Set”).

Finally, our last treatment combines treatments one and two. Participants had access to peer performance information and their choice set was large. That is, participants were shown the peer performance information (“A consulting study concludes that, with the right strategy, your company’s profits could be as high as $121 million a year”) and were also informed that they face a choice set of 100 alternatives (“You will have to […] choose among 100 different strategies”).

The study was conducted in a between-subjects design, i.e., each participant was randomly assigned to one of the four conditions: control, peer performance information, large choice set, or peer performance information and large choice set. In Table 2-1, we show an overview of all four conditions.


                               Size of Choice Set
Peer Performance Information   Small          Large
Absent                         Control        Treatment 2
Available                      Treatment 1    Treatment 3

Table 2-1: Overview of treatment conditions (chapter 2)

Experimental setup

Incentives. We relied on the induced-value approach (Smith 1976), i.e., we provided monetary incentives to the participants such that better choices translated into higher payoffs to participants. In keeping with the tradition of economic experiments, all information provided to the participants was truthful (Ortmann and Hertwig 2002). Before starting the experiment, participants were told that the experiment would take them around 5 minutes to complete and that their payment would be based on the payoffs they accumulated in this task. Each choice was associated with a particular payoff (e.g., “Period 1: The chosen alternative generated $55m”). We chose payoffs in this experimental currency to provide higher incentives to participants. In particular, prior studies have found that participants consider the nominal payoff (the value given in experimental currency) rather than the real payoff value (Davis and Holt 1993) and thus behave more like they would in a real organizational setting. After each choice, the choice’s payoff was revealed. We also always showed them the profits they had accumulated so far.

We also informed our participants that upon completion, there would be a certain payment of $0.10 and up to $0.20 depending on their performance in the task, i.e., the cumulative profits associated with their choices. One might be tempted to conclude that such low stakes may result in confounding effects such as excessive risk taking (Lefebvre et al. 2010, Weber and Chapman 2005), excessive search, or lack of effort (Smith and Walker 1993). We do not observe such confounding effects in our experiments. First, if risk taking was higher due to small stakes, we would expect to see all treatment conditions affected equally. Second, we would expect to see very high levels of search across all treatments. We do not observe such effects: there are no differences in search between control and treatments, and we also do not observe excessive search behavior. Third, prior research does not indicate a significant change in behavior if stakes are higher (Camerer and Hogarth 1999, Post et al. 2008). For instance, even if stakes are very high, participants commit decision errors to a similar extent as in low-stakes contexts (Post et al. 2008). Fourth, low payments are often thought to result in lower effort. In our context, however, effort does not matter. It is a pure choice task, and all aspects that require some effort are designed to minimize the cognitive effort required of participants. For example, we always provide participants with a complete history of their past choices and their payoffs; we also always highlight the best alternative they have identified so far and make sure that inefficient exploitation is impossible. Generally, if participants exhibited a lack of effort, we would expect to see extremely monotone behavior, i.e., many participants only exploring or only exploiting throughout the task. We find no such effect. Further, we included a manipulation check to control for a lack of (cognitive) effort. After participants finished the task, we asked them to report back the treatment information. Our findings are robust to the exclusion of those participants who did not accurately recall the peer performance information. In any case, the treatment effects estimated when including those who did not accurately recall the peer performance information provide a more conservative estimate of the effect.4

Respondents. Participants were recruited on Amazon MTurk. Across all treatments, 857 subjects completed the experiment. 61.8 percent of the participants were women, the average age was 37 years, and 27.8 percent claimed to hold a managerial or supervisory position at their workplace. Participation was restricted to the United States to avoid language difficulties. On average (median duration), participants completed the experiment in 4 minutes and 38 seconds and spent 10 seconds on each of the four individual choices. Those who did not spend any time on the instruction pages or failed our attention check were excluded from the sample. All participants were randomly assigned to one of the four treatment groups (control, peer performance; each for a small and large choice set).

Analysis and Results

The performance implications of social comparison

In our first experiment, we are interested in how the availability of peer performance information may affect our participants’ search behavior and, in turn, performance. In Figure 2-1, we report the average accumulated (over all five periods) payoffs of our control and treatment groups.

Figure 2-1: Performance effect of social comparisons

If the choice set is small, the availability of peer performance information has a significantly positive performance effect (MD = 0.62, p < 0.001). In terms of effect size, participants earned a 9.16 percent higher payoff in the treatment condition compared with control. If, however, the choice set is large, we observe the opposite effect, i.e., peer performance information becomes costly (MD = -0.40, p = 0.010). If peer performance information was available, participants earned a 5.12 percent lower payoff in the treatment condition when compared with the control condition. In other words, depending on the size of the choice set, peer performance information may have a positive or negative performance effect. It is important to note that these differential effects are not driven by differences in the order in which alternatives were searched. The order in which participants could search alternatives is random and each order is equally likely.

In search problems, underperformance is often attributed to insufficient levels of exploration (Baum and Dahlin 2007, March 1991) and social comparisons are considered a remedy, i.e., they induce search (Cyert and March 1963, Greve 1998). Thus, we would expect that the positive performance effects of peer performance information for small choice sets are driven by higher levels of exploration, while the negative effects for larger choice sets may be driven by lower levels of exploration. In Figure 2-2, we compare the effect of peer performance information on how many alternatives participants explored in small and large choice sets.

Note. Search scope is measured by periods of exploration.


With both small and large choice sets, peer performance information induces search. When facing a small choice set, the availability of peer performance information increases search by 3.7%, but this increase is not significant (MD = 0.10, p = 0.302). For large choice sets, the increase (by 5.8%) is more pronounced but still not statistically significant at conventional levels (MD = 0.18, p = 0.118). Thus, inducing search through social comparison cannot explain both the positive and negative performance effects of peer performance information.

It is sometimes overlooked that peer performance information may not only induce search but may also suppress further search. Imagine that, by chance, a participant discovers the best alternative with her first choice. Without peer performance information (and thus, without knowing that it is already the best alternative), the optimal behavior is to continue searching. With peer performance information, however, the participant knows that her current choice is already the best alternative and there is no point in searching for better alternatives. She can abort her search.

Using peer performance information to abort search once the best solution is found is always performance enhancing. Yet, in larger choice sets, it is much less likely that any benefits from aborting search can be reaped because it is much less likely that the best alternative is encountered within the first 1-2 periods. With a smaller choice set, in contrast, the probability is high that within the first 1-2 periods, the best alternative is encountered and search can be aborted.
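The chance argument above can be made concrete with a short calculation. This is only an illustrative sketch, not part of the original analysis: it assumes that exactly one alternative is the best, that alternatives are explored without repetition, and that every search order is equally likely. The function name `prob_best_found` is hypothetical.

```python
from fractions import Fraction


def prob_best_found(n_alternatives: int, n_explored: int) -> Fraction:
    """Probability that the single best alternative is among the first
    n_explored alternatives, assuming exploration without repetition and
    that every search order is equally likely (illustrative assumptions)."""
    if not 0 <= n_explored <= n_alternatives:
        raise ValueError("n_explored must lie between 0 and n_alternatives")
    # Under a uniformly random order, the best alternative is equally likely
    # to sit at any position, so the probability is simply k / N.
    return Fraction(n_explored, n_alternatives)


# Compare the small (5) and large (100) choice sets after 1 and 2 periods.
for n in (5, 100):
    for k in (1, 2):
        p = prob_best_found(n, k)
        print(f"{n} alternatives, {k} explored: {float(p):.0%}")
```

Under these assumptions, the probability of having already encountered the best alternative after two periods is twenty times higher in the small choice set than in the large one, which is why the option to abort search is rarely available with 100 alternatives.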

In Figure 2-3, we seek to provide some evidence for the different ways (inducing or suppressing) in which peer performance information affects our participants’ search behavior. Specifically, we disentangle the average search scope and report the share of participants exploring 1, 2, 3, 4, or 5 alternatives. In the left panel, we show the results for small choice sets; in the right panel, we show the results for large choice sets.


Note. The measure is the share of participants stopping exploration after trying out X alternatives in percent.

Figure 2-3: Level of exploration for 5 alternatives (left panel) and 100 alternatives (right panel)

With small choice sets, peer performance information both suppresses and induces search: the share of participants aborting search after the first period (presumably because, by chance (20%), they ran into the best alternative in t=1) increases from 8.9% to 13.7%. At the same time, the share of participants who continue searching for all 5 periods (presumably because they did not run into the best alternative until period 5) increases from 4.3% to 7.8% (see Figure 2-4).


Figure 2-4: Share of participants never searching (left panel) and never stopping to search (right panel)

With larger choice sets, the probability of running into the best alternative in the first two periods is much smaller (only 2% compared with 40% in small choice sets). As a result, peer performance information does not help much to suppress exploration. In fact, the share of participants aborting search after the first period decreases from 9.6% without peer performance information to 7.2% with peer performance information. At the same time, the share of participants never aborting search still increases with the availability of peer performance information (from 13.2% to 14.9%). Instead, there is a shift towards exploring more alternatives, even to the extent that it becomes very costly (search scope = 5), as can be seen in Figure 2-4.


Figure 2-5: Share of participants searching beyond the best decision alternative

Inefficient search behavior and peer performance information

In our analyses above, we focused on the question of how our treatments affect how many alternatives our participants search (i.e., their search scope). These treatments, however, may also affect when participants search. This, in turn, may affect their performance in our task. In our experiments, the optimal search behavior is to first explore different alternatives (for 2-3 periods) and then switch to exploitation (i.e., play the best alternative found in the exploration phase). Our participants deviate from this optimal search behavior in two ways. First, in a behavior we call oscillation, they switch repeatedly between exploration and exploitation. For example, they may first explore for two periods, then exploit for one period, only to explore for the remaining two periods. Such search behavior is suboptimal and costly, even compared to participants who search the same number of alternatives: a participant who explores for the first four periods can then exploit a much better alternative in the last period. Second, in what we call delayed exploration, participants may get the order wrong, i.e., they start with exploitation and then switch to exploration. Again, this is costly compared to the optimal search behavior because superior alternatives found later in the task cannot be exploited for as long as would have been possible had they been found early in the task. Take, for example, a taxi driver. She may begin her tenure by randomly choosing an area in which she believes many potential customers are located and then service this area before finally, towards the end of her tenure, exploring other areas. This is clearly inferior to a strategy in which she tries out different areas at the beginning of her tenure before settling to service the area that turned out to have the most customers.
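To illustrate why an exploration phase of 2-3 periods can be optimal in a five-period task, the following sketch computes the expected cumulative payoff of a simple "explore for k periods, then exploit the best alternative found" strategy. It rests on assumptions that are not taken from the experiment: payoffs are modeled as independent draws from a Uniform(0, 1) distribution, the exploration length k is fixed in advance, and the finite size of the choice set is ignored. The name `expected_total` is hypothetical.

```python
from fractions import Fraction

PERIODS = 5  # length of the task, as in the experiment


def expected_total(k: int, periods: int = PERIODS) -> Fraction:
    """Expected cumulative payoff when exploring in the first k periods and
    exploiting the best alternative found in the remaining periods.

    Illustrative assumption: payoffs are i.i.d. Uniform(0, 1) draws.
    """
    if not 1 <= k <= periods:
        raise ValueError("k must lie between 1 and the number of periods")
    explore_payoff = k * Fraction(1, 2)   # each new draw has mean 1/2
    best_found = Fraction(k, k + 1)       # E[max of k Uniform(0, 1) draws]
    return explore_payoff + (periods - k) * best_found


# Expected cumulative payoff for every possible exploration length.
for k in range(1, PERIODS + 1):
    print(f"explore {k} period(s): expected total = {float(expected_total(k)):.2f}")
```

Under these stylized assumptions, the expected total peaks at exploration lengths of two and three periods and declines when exploration is either shorter or longer, in line with the 2-3 periods named in the text. The same logic explains the cost of delayed exploration: a good alternative found late leaves fewer periods in which it can be exploited.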

In Table 2-2, we report the frequency and performance implications of these two types of deviations from optimal search behavior.

Search Error                                       Delayed Exploration       Oscillation
Condition                                          Share     Performance     Share     Performance
Control                                            67.9%     ~               56.8%     ~
Peer Performance Information                       46.6%     -               39.3%     -
Large Choice Set                                   63.9%     +               47.7%     +
Peer Performance Information & Large Choice Set    53.6%     +               46.4%     +

Note. Delayed exploration (searching late) indicates that participants first exploit a known alternative before searching for new ones. Oscillation (alternating between search and exploitation) indicates that participants switch more than once between exploiting a known alternative and searching for new ones.

Table 2-2: Performance implications of search errors and percentage of participants committing them
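The two search errors can be expressed as simple rules over a participant's sequence of choices. The sketch below is one possible operationalization, not the coding scheme used in the study: it assumes that the four choices in periods two to five are encoded as a string of "E" (explore) and "X" (exploit), that oscillation means more than one switch within this sequence, and that delayed exploration means any exploration that follows an exploitation period. All function names are hypothetical.

```python
def count_switches(choices: str) -> int:
    """Number of changes between exploring ('E') and exploiting ('X')."""
    if set(choices) - {"E", "X"}:
        raise ValueError("choices may only contain 'E' and 'X'")
    return sum(a != b for a, b in zip(choices, choices[1:]))


def is_oscillation(choices: str) -> bool:
    """Assumed rule: the participant switches more than once."""
    return count_switches(choices) > 1


def is_delayed_exploration(choices: str) -> bool:
    """Assumed rule: some exploration happens after an exploitation period."""
    count_switches(choices)  # reuse the input validation
    first_exploit = choices.find("X")
    return first_exploit != -1 and "E" in choices[first_exploit + 1:]


# Hypothetical choice sequences for periods two to five.
for seq in ("EEXX", "XEEE", "EXEE", "XXXX"):
    print(seq, is_oscillation(seq), is_delayed_exploration(seq))
```

In this encoding, a sequence such as "EEXX" (explore first, then exploit) commits neither error, "XEEE" is delayed exploration without oscillation, and "EXEE" counts as both.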

A large share of participants switched repeatedly (rather than just once) between exploration and exploitation. In the control condition, 56.8% of participants exhibited this oscillation behavior. One participant remarked: "I made my decision based on what I would do in this actual event. I would play it safe then go for a goal. Once that is met I would then use that method again. Rather than playing it safe the entire time or just going all out. There needs to be an even ground somewhere." This would suggest that participants preferred to oscillate between exploration and exploitation out of a desire to pursue a performance goal while at the same time minimizing risk. When peer performance information was available, the share of participants following an oscillation approach dropped to 39.3%. Interestingly, with larger choice sets, we do not observe such a drop if peer performance information is available (47.7% versus 46.4%). This makes sense in light of the statement above: there is no need to risk moving away from the fallback option for further exploration once the best option has already been identified, which is more likely to happen with peer performance information on small choice sets.

In the experiment, we observe that many participants engage in delayed exploration, i.e., they only start searching after first exploiting. In the control condition, 67.9% of participants fell back on known alternatives before searching for new alternatives again. When endowed with peer performance information, this proportion fell to 46.6%. Likewise, on large choice sets, 63.9% of participants exploited known alternatives before searching. When peer performance information was available, only 53.6% of participants searching on large choice sets showed this behavior. The universal drop in delayed exploration when peer performance information is available may be explained by the naïve assumption of some participants that whatever alternative they first come across is sufficiently good. For example, one participant remarked: "Take the easy way or try something new. But, hey if the company already pulls in 76 million in profit why change." When, however, peer performance information was available, participants seemed to question their initial assumption about the quality of the first alternative and started the task by exploring. Thus, overall, our results suggest that peer performance information helps to reduce decision errors for both small and large choice sets.

Robustness Checks

As the sequence of alternatives was random in the experiments, a critic may argue that some of the performance effects are due to chance. We therefore ran the peer performance treatment again for the case of large choice sets, but this time used a fixed sequence of alternatives. This new round of experiments featured 415 participants across the US, 47 percent of whom were women. We again find a significant performance difference between control and peer performance treatment (MD = -0.05; p = 0.030). We also find that these differences were driven by differences in search behavior and that those in the peer performance treatment often over-explored (see also Figure 2-6).

Note. The numbers on the X-axis reflect the stopping point, i.e., the number of alternatives explored.

Figure 2-6: Level of exploration with a fixed sequence of alternatives

Secondly, to address criticisms about the sample on the Mechanical Turk platform, we collected information on the participants’ demographic characteristics. We then split the sample population by age (above or below 30 years of age), level of education (college educated versus not college educated) and income (an hourly salary of more versus less than $20). Our results are robust across these different demographic groups. They also hold when we divide the sample of participants into those holding a managerial position versus those who do not (a detailed report is provided in Appendix 2 C).


Critics may also argue that over-exploration is not driven by the prospect of finding the best alternative but rather by handling downside risk: assuming a uniform distribution, it is more likely to receive a lower payoff in the next draw when choice sets are large. To avoid ending up with a payoff lower than the initial one, participants may just explore more. If that were the case, however, the number of searched alternatives below the initial draw would be higher in the treatment conditions with large choice sets. We find no difference in the number of draws with a lower value than the initial draw between conditions with large choice sets and small choice sets (MD = -0.013, p = 0.86).

Our main effect also holds if we use an ANOVA to conduct the analysis. We report the results in Figure 2-7. In particular, the analysis confirms that there is a significant effect of peer performance information on performance for large choice sets (p < 0.001).

Figure 2-7: ANOVA of performance differences across the four conditions

Discussion

In our study, we investigated the performance implications of social comparisons in a simple search task. Practitioners and management scholars frequently assume that social comparison improves search efficacy (and as a consequence, performance). However, this assumption has remained untested empirically. In an effort to fill this gap, we ran an experiment and found that while social comparison may improve performance when there are few decision alternatives, it may turn into a liability when there is a large number of decision alternatives. It is then that social comparison may lead to over-exploration and, consequently, cause a performance penalty.

Contributions

Common assumptions in the extant literature are that (1) decision-makers generally search too little and (2) upward social comparison induces search. Thus, social comparison may increase performance by increasing search and overcoming inertia (Audia et al. 2000, Greve 1998, 2003a, Greve and Gaba 2017). However, this argument does not account for the reported negative performance effects of social comparison (Nickerson and Zenger 2008, Obloj and Zenger 2017). We extend the search-based line of reasoning by setting forth conditions under which upward social comparison may have negative performance consequences by altering search behavior. Concretely, we offer a model of search and learning which produces positive or negative performance effects depending on the number of decision alternatives. We contend that social comparison informs the decision-maker on how to improve the efficacy of search but may also lead to costly over-exploration when many decision alternatives are available (Baumann and Siggelkow 2013, Billinger et al. 2014, Clement and Puranam 2017).

Previous research has focused on either positive (Blanes i Vidal and Nossol 2011, Stark and Hyll 2011) or negative performance effects (Colella et al. 2007, Dunn et al. 2012, Gino and Pierce 2009, 2010, Nickerson and Zenger 2008, Obloj and Zenger 2017, Pfeffer and Langton 1993, Van de Ven 2017, Zenger 2016). In framing these conflicting findings, scholars have used distinct theories to explain such effects. Positive effects have often been explained by economic theories of incentives while negative effects have been explained by theories rooted in the psychology literature such as theories of envy (Nickerson and Zenger 2008).

Our search model reconciles the conflicting findings in one theory. In so doing, we show that beyond traits and competitive dynamics, performance effects of social comparison can be thought of as properties of individual search and learning. We thus expand the scope of the theory of search and learning (Denrell and March 2001). What is more, we identify boundary conditions and key contingency factors of a search-based theory of social comparisons. We show that the key contingency of the benefits of social comparison is the size of the choice set, i.e., the number of decision alternatives. When upward social comparison is taken as information on a global optimum, it may prevent costly over-exploration once decision-makers find an already optimal solution (Levinthal 1997). Here, social comparison may regulate search efforts. At the same time, social comparison may mislead search efforts such that facing many decision alternatives can in fact cause over-exploration rather than regulation of search. Lastly, our findings add to social aspiration (Cyert and March 1963, Greve 1998, 2003b, March 1994) and stretch goal theory (Hamel and Prahalad 1993, Latham 2004, Kerr and Landauer 2004, Sitkin et al. 2011), which are in part derived from social comparison theory. A common notion of social aspirations is that they are beneficial because they induce change (Audia et al. 2000, Lant 1992, Miller and Chen 1994), exploration (Baum and Dahlin 2007), and risk taking (Bromiley 1991). Observing better performing others leads to reevaluating current choices and thus triggers (problemistic) search (Cyert and March 1963). The challenge in this predominant view is to fight over-exploitation.

Since March (1991) introduced the exploration-exploitation dilemma into the organizational learning literature, scholars have focused on the cost of too little exploration (Audia et al. 2000, Rhee and Kim 2014). This has been attributed to environmental factors such as delays in feedback (Repenning and Sterman 2002) as well as to individual factors such as overconfidence (Audia et al. 2000). We show that there can also be a problem of over-exploration. In particular, we observe that decision-makers search too much in the absence of social comparison. When social comparison is then possible, participants often search less than they otherwise would. Thus, our results indicate that social comparison often decreases rather than increases the level of search. This is so because information on one’s relative standing to a top performer enables decision-makers to put their own performance in perspective. This way, next to learning about the performance of an alternative their peer is pursuing, decision-makers learn about the performance of their currently pursued alternative. In this way, social comparison is not a trigger of but a substitute for search. This is particularly true for the case of few decision alternatives, when a decision-maker has a high probability of coming across an alternative that is as good as their peer’s. This finding adds an important contingency factor to Greve’s (2003b) model of search. The direction of the effect of an attainment discrepancy on the level of search may be true for large choice sets but it does not hold for small choice sets. This contingency may explain why very hierarchical organizations occasionally manage innovation more successfully than organizations with flatter hierarchies (think Amazon): the hierarchy may be an attempt to limit the number of decision alternatives available to decision-makers, thus regulating search within the organization.

Our findings also add to the organization design literature (Joseph and Ocasio 2012, Nickerson and Zenger 2008). Managers promote or demote social comparison as one pillar of the social architecture of an organization (Nickerson and Zenger 2008, Zenger 1992). Tools to encourage social comparison are pay transparency (Gartenberg and Wulf 2017), rankings and ratings (Greve and Gaba 2017), or internal benchmarking (Gaba and Joseph 2013). Tools to suppress social comparison include putting restrictions on information sharing and placing offices farther apart (Kulik and Ambrose 1992, Nickerson and Zenger 2008). We point to the number of decision alternatives as a contingency factor of when social comparison should be promoted or discouraged. This applies in a particular way to managing innovation: while systems of open innovation do not present the decision-maker with pre-structured problems, systems of closed innovation pre-structure the problem at the organizational level before passing parts of the problem on to employees in the organization (Felin and Zenger 2014). As a consequence, decision-makers in systems of closed innovation face fewer decision alternatives than decision-makers in systems of open innovation. In this paper, we suggest that systems of open innovation in particular may be harmed by encouraging social comparison, while systems of closed innovation may benefit from more social comparison.

Lastly, we contribute to the behavioral strategy literature by introducing a new contingency variable: the number of decision alternatives (i.e., the size of the choice set). The number of decision alternatives is a central dimension of task complexity (March and Simon 1958, Payne 1976, Simon 1962). Decision-makers often face problems of different degrees of complexity and, by extension, varying numbers of decision alternatives. For instance, hierarchies filter information and suggest limited courses of action, thereby reducing the number of alternatives (Nickerson and Zenger 2004). Likewise, at the organizational level, the number of decision alternatives depends on the market structure in markets for sales and supply. For example, decision-makers may sometimes only have few suppliers available to choose from (think airlines buying either Airbus or Boeing planes) and other times have the choice between many alternative suppliers (think airlines choosing a company to supply the plane seats). The size of the choice set has in the past also been identified as an important decision-making factor in consumer choice (Iyengar and Lepper 2000, Schwartz 2004, Shin and Ariely 2004) in what has been dubbed the “paradox of choice” (Schwartz 2004). The paradox of choice is that while more options should theoretically lead to better decision-making outcomes, they in fact often lead to worse outcomes. More options should lead to better outcomes because, as rational choice theory predicts, they imply a greater chance of optimally matching an alternative with one’s preferences (Arrow 1963, Rieskamp et al. 2006). At odds with this prediction, studies of consumer choice find negative outcome effects of increased choice (Iyengar and Lepper 2000, Schwartz 2004). This is partly because consumers are deterred from making a decision at all because they anticipate regret over what is likely not an optimal choice (Iyengar and Lepper 2000, Schwartz 2004). Alternatively, scholars believe that consumers do not even consider a larger number of decision alternatives because their evaluation may be too costly (Hauser and Wernerfelt 1990) or not cognitively possible (Malhotra 1982, O’Reilly 1980, Reutskaja and Hogarth 2009).

We point to a different hazard of large choice sets that stems from making worse choices rather than not choosing: larger choice sets may prompt greater levels of search, while the optimal level of search is first and foremost determined by the time horizon and less by the size of the choice set. To be clear, we borrow the concept of the number of decision alternatives from consumer choice to introduce it to strategic decision-making. However, while the consumer choice literature examines whether or not consumers choose to begin with, we examine search efficacy, which is more relevant for decision-makers who must choose between engaging in exploitation and exploration, e.g., deciding on R&D projects (Mudambi and Swift 2014), managing alliances (Yang et al. 2014) or coordinating the internal organization of the firm (Stettner and Lavie 2014).

Giving greater importance to the number of decision alternatives challenges our view on how upward social comparison affects adaptation (Levinthal 1997, Rivkin 2000). A common assumption is that knowing what is possible provides decision-makers with an incentive to persist in the face of adverse situations (Nelson and Winter 1982). Consistent with the view of “routines as targets”, social comparison with a successful competitor might trigger innovation and global search, even when it is impossible to imitate the competitor’s routines (Nelson and Winter 1982, p. 112). Meanwhile, information on a global optimum may prevent over-exploration once decision-makers find an already optimal solution (Levinthal 1997). Hence, social comparison may regulate search efforts. At the same time, attempting to imitate similar others may trigger exploration even after a global optimum has been found (Csaszar and Siggelkow 2010). Our findings point to another downside of social comparison: social comparison may mislead search efforts. We show that the benefits of upward social comparison depend on the size of the choice set and that facing many decision alternatives can in fact cause over-exploration rather than regulation of search.

Limitations

Critics of our experimental approach will argue that our findings might not hold in actual organizational contexts. While we believe that there is some external validity to our findings, the approach is certainly more geared towards establishing the mechanisms through which social comparisons hurt or benefit performance. However, our setting has been shown to be a valid abstraction of many real organizational decision-making scenarios (Aggarwal et al. 2011, Cappelli and Hamori 2013, Puranam and Swamy 2016). Precisely because our setting is abstract enough to capture central features of a multitude of organizational settings, it is likely to yield externally valid results (Cook et al. 2002).

While the decision scenario in our experimental task is a simplified version of reality, the way in which managers perceive the decisions they face can be depicted in a dramatically simplified mental model as well (Gavetti and Levinthal 2000, Halford et al. 1994, Kelley 1973). In reality, there may also be situations where search is a coordinated team effort rather than conducted by an individual (Knudsen and Srikanth 2014). Teams in which decisions are based on such coordination tend to exhibit lower levels of search (Davis and Eisenhardt 2011) and, as a consequence, the problem of over-exploration through social comparison may be less pronounced. This is because information sharing and coordination are costly and so teams are more likely to settle on strategies with more predictable outcomes, i.e. exploitation rather than exploration (Lavie et al. 2010). The present research would suggest that social comparison may be capable of breaking up patterns of over-exploitation in leadership teams, but more empirical research is needed to scrutinize the role of social comparisons in coordinated search.

We also do not claim to present a task that is a valid abstraction for all search tasks in real organizational life. Our experiment does not feature intercorrelated payoffs between alternatives, a situation that arises, for instance, when a new region is explored as a sales market and neighboring regions’ profitabilities are highly correlated. For these cases, a landscape model would be the better abstraction (e.g. Billinger et al. 2014, Levinthal 1997). Likewise, we do not model situations where payoffs are dynamically changing over time, which could be better accounted for with a restless bandit model (Loch and Kavadias 2002).

What is more, we deliberately excluded an effort component from our experimental task to focus on the choice problem. However, increased effort might partially offset the negative effect of social comparison with a highly successful peer that we found when decision-makers face many decision alternatives. Increased effort might help decision-makers find solutions faster and thus partly balance the decreased probabilities of success.

Lastly, we cannot fully explain why decision-makers over-explore when there are many decision alternatives. The finding is, however, consistent with prior experimental studies (Billinger et al. 2014, MacLeod and Pingle 2005). One reasonable explanation for that behavior is that decision-makers are insensitive to changes in probabilities and more focused on the magnitude of outcomes (March and Shapira 1987).

Conclusion

Upward social comparison is often encouraged in organizational contexts to increase managers’ effort in a given task. It may also yield valuable information in search tasks that allows managers to balance exploration and exploitation efficiently. However, this study suggests that it may constitute a previously understudied liability in organizational learning by causing over-exploration when decision-makers face an abundance of decision alternatives.

3. LEARNING FROM OMISSION ERRORS

Introduction

Adaptive learning is the core of the behavioral theory of the firm (Cyert and March 1963, March and Olsen 1976). Decision makers choose a solution to a specific problem, and then use performance feedback on that solution to guide the search for future solutions: if the performance feedback is positive, they are expected to choose the successful solution again; if the performance feedback is negative, they are expected to avoid the solution in the future (Glynn et al. 1991). While decision makers have been found to learn from their successful choices, it is much less clear that they learn from their errors (Bennett and Snyder 2017, Kc et al. 2013, Haunschild and Sullivan 2002). Behavioral changes spurred by errors in previous choices can lead to improved performance in some circumstances, but not in others (Eggers and Suh 2019), suggesting that learning from mistakes is not a simple process.
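
The adaptive rule described above (repeat a solution after positive feedback, avoid it after negative feedback) can be illustrated with a minimal sketch. It is not a model used in this dissertation. The function name `adaptive_choice`, the fixed `aspiration` threshold that separates positive from negative feedback, the deterministic payoffs, and the random choice among not-yet-avoided solutions are all assumptions made for illustration.

```python
import random


def adaptive_choice(payoffs, aspiration, periods, seed=0):
    """Hypothetical win-stay, lose-shift learner (illustrative only).

    payoffs: fixed payoff of each solution (assumed deterministic here).
    aspiration: assumed threshold; outcomes at or above it count as
        positive feedback, outcomes below it as negative feedback.
    Returns the list of (chosen solution, observed outcome) per period.
    """
    rng = random.Random(seed)
    options = list(range(len(payoffs)))
    avoided = set()               # solutions that produced negative feedback
    current = rng.choice(options)
    history = []
    for _ in range(periods):
        outcome = payoffs[current]
        history.append((current, outcome))
        if outcome >= aspiration:
            continue              # positive feedback: choose the solution again
        avoided.add(current)      # negative feedback: avoid it in the future
        candidates = [o for o in options if o not in avoided]
        if not candidates:
            break                 # every solution has been rejected
        current = rng.choice(candidates)
    return history


history = adaptive_choice(payoffs=[1, 2, 5, 3], aspiration=4, periods=10)
```

In this sketch the learner only ever observes the outcome of the solution it actually chose, so feedback is tied to the chosen solution, as in the description above.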

One complication in learning from errors is that judgment errors take two different forms - errors of commission, where the decision maker does something that produces a negative result, and errors of omission, where the decision maker declines to pursue an action that would have improved performance (Green and Swets 1966, Sah and Stiglitz 1986). Commission errors lead to specific penalties for behavior, while omission errors lead to foregone rewards. For example, a manager of an automaker may decide to launch a car that turns out to sell poorly or she may decide against the launch of a car that would have been a success with customers. Such errors in decision-making occur regularly, making learning from prior errors feasible and necessary (Csaszar 2012). While inferring the correct action to take in response to an error is always a challenge, the opportunity to learn from commission errors is always present – experiential learning processes provide feedback that directly affects future choices (Eggers 2012, Maslach 2016). Learning from omission errors, meanwhile, is more complex and challenging. The primary difficulty is that information on