HAL Id: hal-01285852

https://hal.inria.fr/hal-01285852

Submitted on 9 Mar 2016


Trajectoires: a Mobile Application for Controlling Sound Spatialization

Jérémie Garcia, Xavier Favory, Jean Bresson

To cite this version:

Jérémie Garcia, Xavier Favory, Jean Bresson. Trajectoires: a Mobile Application for Controlling Sound Spatialization. CHI EA ’16: ACM Extended Abstracts on Human Factors in Computing Systems, May 2016, San Jose, United States. pp. 4, ⟨10.1145/2851581.2890246⟩. ⟨hal-01285852⟩


Abstract

Trajectoires is a mobile application that lets composers draw trajectories of sound sources to remotely control any spatial audio renderer using the Open Sound Control protocol. Interviews and collaborations with contemporary music composers helped inform the design of the tool, assess it in real music production contexts, and add several features for manipulating existing trajectories.

Author Keywords

Mobile devices; sound spatialization; music composition; trajectories.

ACM Classification Keywords

H.5.2 [Information Interfaces and Presentation]: User Interfaces - Interaction Styles; H.5.5 [Sound and Music Computing]: Methodologies and Techniques.

Introduction

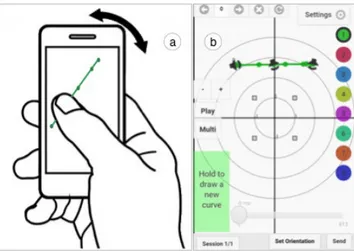

This paper presents Trajectoires [4], a web application running on desktop computers, smartphones or tablets that lets composers draw and edit trajectories to control sound spatialization with finger-based interactions. Figure 1 shows a user replaying three trajectories that control the motions of sound sources.

Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for third-party components of this work must be honored. For all other uses, contact the Owner/Author. Copyright is held by the owner/author(s).

CHI'16 Extended Abstracts, May 07-12, 2016, San Jose, CA, USA ACM 978-1-4503-4082-3/16/05.

http://dx.doi.org/10.1145/2851581.2890246

Jérémie Garcia

Department of Computing, Goldsmiths, University of London, London, UK SE14 6NW

j.garcia@gold.ac.uk

Xavier Favory

UMR 9912 STMS IRCAM-CNRS-UPMC, 75004 Paris, France

xavierfavory@gmail.com

Jean Bresson

UMR 9912 STMS IRCAM-CNRS-UPMC, 75004 Paris, France

jean.bresson@ircam.fr

Figure 1: Playing three trajectories controlling three sound sources.

Sound spatialization techniques create the illusion that sound sources come from various directions in space and let users organize them in three-dimensional scenes. Composers frequently use this process to separate sound sources, create motions or simulate acoustic spaces [1]. Despite the development of advanced spatial audio renderers, controlling sound spatialization remains a challenging task for composers [6]. Spatialization processes require users to define the location of sound sources, as well as many other spatial features such as the sources’ directivity patterns or the characteristics of the virtual projection space. As these parameters often vary to produce sound motions or other dynamic effects, composers also need to specify their temporal evolution. Our goal is to design interactive tools that support professional composers’ work with sound spatialization. We focus on mobile devices, which feature touch and gesture input modalities and allow users to move within the rendering space [3], to support the quick definition and assessment of spatial sound scenes that evolve over time.

Trajectoires: design and implementation

Trajectoires lets composers draw, play and edit sound source trajectories on smartphones or tablets. While the user is drawing or replaying a trajectory, the application sends Open Sound Control (OSC¹) messages to remotely control any sound spatialization engine, such as the Spat [2]. The user can move freely in the performance space while using the application to assess the musical result. The application also lets users define the orientation of sound sources by physically manipulating the mobile device.

¹ http://www.opensoundcontrol.org

Figure 2: Trajectoires interface. The central canvas displays the existing trajectories, color-coded according to the source they control.

Technical details

Trajectoires is implemented in JavaScript and HTML and runs on most devices with a web browser. We used the Interface.js library [7] to create a client/server architecture that converts WebSocket messages into OSC messages. The server, running on a computer, hosts the application, which can be accessed over the network. Several clients can be connected simultaneously. A graphical user interface created with NW.js² displays the address of the web page and a corresponding QR code to open Trajectoires.
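The actual relay is built on Interface.js [7] in JavaScript. As a language-neutral illustration of what the conversion step involves, the Python sketch below turns one WebSocket text message into an OSC 1.0 packet (an address string and a type-tag string, each null-padded to a multiple of four bytes, followed by big-endian arguments) and sends it over UDP. The JSON message format and the `/source/1/xy` address are hypothetical: the paper does not document the messages Trajectoires actually sends, and the WebSocket server itself is omitted.

```python
import json
import socket
import struct


def _osc_string(text: str) -> bytes:
    # OSC 1.0 strings are ASCII, null-terminated, then null-padded to a multiple of 4 bytes.
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc(address: str, args: list) -> bytes:
    """Encode one OSC 1.0 message with int32 ('i') and float32 ('f') arguments."""
    tags, payload = ",", b""
    for value in args:
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise TypeError(f"unsupported argument: {value!r}")
        if isinstance(value, int):
            tags += "i"
            payload += struct.pack(">i", value)  # big-endian 32-bit integer
        else:
            tags += "f"
            payload += struct.pack(">f", value)  # big-endian 32-bit float
    return _osc_string(address) + _osc_string(tags) + payload


def relay(message: str, sock: socket.socket, target: tuple) -> bytes:
    """Convert one WebSocket text message into an OSC packet and send it over UDP.

    HYPOTHETICAL message format: {"address": "/source/1/xy", "args": [0.5, 0.25]}
    """
    data = json.loads(message)
    packet = encode_osc(data["address"], data["args"])
    sock.sendto(packet, target)
    return packet
```

UDP is the usual transport for OSC, so each packet goes out as a single datagram; `target` would be the host and port on which the spatialization engine listens for OSC.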

Inputting and playing trajectories

Users can draw a new trajectory directly on the canvas of the application while touching a specific area with their non-dominant hand. This is meant to make the input of a new trajectory explicit and to disambiguate between input and editing modes. Following the recommendations of Wagner et al. [8], the area is located at the bottom left for right-handed users, as shown in Figure 2. While the user is drawing, OSC messages are sent to provide immediate audiovisual rendering in the spatialization engine. The motion of the gesture is recorded and defines the temporal dimension of the trajectory when it is replayed.

² http://nwjs.io/

Design goals

We conducted interviews with four composers to understand their work with sound spatialization and to inform the design of Trajectoires. Based on the results, we defined the following design goals:

• Supporting quick input and comparison of trajectories.
• Facilitating integration with several existing sound spatialization engines.
• Providing real-time audiovisual feedback.
• Enabling the editing of existing trajectories.
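Recording the drawing gesture so that replay reproduces its timing can be sketched as follows. This is a minimal illustration under stated assumptions, not the application's code: each point is stamped with the time (in seconds) at which it was drawn, and playback at time t linearly interpolates between the two surrounding samples. The `Trajectory` class and its methods are hypothetical names; the temporal slider described in the next paragraph could call the same `position_at` method.

```python
from bisect import bisect_right
from dataclasses import dataclass, field


@dataclass
class Trajectory:
    """HYPOTHETICAL model: (x, y) points stamped with the time (s) at which they were drawn."""
    times: list = field(default_factory=list)
    points: list = field(default_factory=list)

    def add_point(self, t: float, x: float, y: float) -> None:
        # Called for each touch-move event while drawing; timestamps must not decrease.
        if self.times and t < self.times[-1]:
            raise ValueError("timestamps must be non-decreasing")
        self.times.append(t)
        self.points.append((x, y))

    @property
    def duration(self) -> float:
        return self.times[-1] - self.times[0] if self.times else 0.0

    def position_at(self, t: float) -> tuple:
        """Position at playback time t (seconds from the start), linearly interpolated."""
        if not self.times:
            raise ValueError("empty trajectory")
        # Clamp t to the drawn duration, then shift it onto the recorded time axis.
        t = self.times[0] + min(max(t, 0.0), self.duration)
        i = bisect_right(self.times, t)
        if i == len(self.times):  # at (or past) the last sample
            return self.points[-1]
        t0, t1 = self.times[i - 1], self.times[i]  # t0 <= t < t1, so t1 > t0
        (x0, y0), (x1, y1) = self.points[i - 1], self.points[i]
        a = (t - t0) / (t1 - t0)
        return (x0 + a * (x1 - x0), y0 + a * (y1 - y0))
```

Because positions are looked up by time rather than by point index, a slow stretch of the original gesture stays slow on replay.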

At the end of the drawing, if the last point is close to the first one, a contextual menu appears and offers the user the possibility to close the path with an extra point. Similarly, if the first point is close to the last point of another trajectory, the contextual menu allows the user to merge the two curves. This feature emerged from our collaborations with composers who wanted to continue drawing an existing trajectory.

A “Play” button plays or pauses the selected trajectory, and the data are streamed via OSC during playback. A temporal slider displays the current time position and can be manipulated directly to send the position data at a specific time. The “Multi” button plays the last used trajectory of each sound source simultaneously. For example, if the user draws two trajectories for the second source and one for the first source, it plays the last curve drawn or selected for the second source along with the curve associated with the first source. This lets composers quickly test more complex sound scenes.
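The close/merge proposals and the “Multi” selection rule can be sketched as below. The paper does not say how "close" is measured, so the Euclidean distance and the `CLOSE_THRESHOLD` value are hypothetical, as are all function names; curves are plain lists of (x, y) points here, without timestamps.

```python
import math

# HYPOTHETICAL threshold in canvas units; the paper does not specify one.
CLOSE_THRESHOLD = 20.0


def is_near(p, q, threshold=CLOSE_THRESHOLD):
    return math.dist(p, q) <= threshold


def menu_options(new_curve, existing):
    """Options offered when a drawing ends: close the new curve, and/or merge it with
    an existing curve whose last point is near the new curve's first point."""
    options = []
    if len(new_curve) > 2 and is_near(new_curve[-1], new_curve[0]):
        options.append(("close", None))
    for index, curve in enumerate(existing):
        if curve and is_near(new_curve[0], curve[-1]):
            options.append(("merge", index))
    return options


def close_path(curve):
    # Close the path with one extra point equal to the first point.
    return curve + [curve[0]]


def merge(previous, new_curve):
    # Continue `previous` with the new points (timestamps, if kept, would need re-offsetting).
    return previous + new_curve


def multi_selection(history):
    """Multi button: given (source, trajectory) pairs in the order curves were drawn or
    selected, return the last used trajectory of each source, to be played together."""
    last = {}
    for source, trajectory in history:
        last[source] = trajectory
    return last
```

With the example above, a history of two curves for source 2 and one for source 1 yields the most recent source-2 curve together with the source-1 curve.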

Editing existing trajectories

Existing trajectories can be selected and translated with one finger: tapping on a curve selects it, and dragging moves it to a new location. Users can scale or rotate a selected trajectory with two fingers, and add a third finger to scale it along the axis defined by the positions of the first two fingers.
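The paper describes these gestures but not the underlying geometry. One common approach, sketched here as an assumption, treats 2-D points as complex numbers x + yj: a single complex factor then captures the rotation and uniform scale that carry the two fingers' start positions onto their current positions. For the three-finger case, the sketch scales along the axis through the first two fingers; how the third finger's movement becomes `factor` is not specified in the paper and is left to the caller. Both function names are hypothetical.

```python
def two_finger_transform(p1: complex, p2: complex, q1: complex, q2: complex):
    """Return a map sending the fingers' start points p1, p2 onto their current points q1, q2.

    Multiplying by a complex number rotates and scales, so one factor `a`
    encodes the whole two-finger rotate-and-scale gesture.
    """
    a = (q2 - q1) / (p2 - p1)  # rotation and uniform scale
    b = q1 - a * p1            # translation keeping finger 1 anchored on the curve
    return lambda z: a * z + b


def scale_along_axis(points, f1: complex, f2: complex, factor: float):
    """Scale points by `factor` along the axis through f1 and f2 (about their midpoint),
    leaving the perpendicular component unchanged."""
    axis = (f2 - f1) / abs(f2 - f1)  # unit vector along the axis
    center = (f1 + f2) / 2
    result = []
    for z in points:
        local = (z - center) / axis  # rotate so the axis lies along the real line
        local = complex(local.real * factor, local.imag)
        result.append(center + local * axis)
    return result
```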

Trajectories can be simplified to reduce their number of points, or partly redrawn, through a contextual menu that appears when the user holds a curve. If the user selects the redraw option, a temporal range slider appears on the screen to select the portion of the curve that the new drawing will replace.
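The paper does not say which simplification algorithm Trajectoires uses. The sketch below substitutes the classic Ramer-Douglas-Peucker algorithm, which keeps a curve's end points and recursively keeps the point farthest from the chord whenever it deviates by more than a tolerance `epsilon` (a hypothetical parameter). This variant measures distance to the chord segment rather than to the infinite line, so closed paths whose end points coincide are also handled. For timed trajectories, the timestamps of the retained points would be kept alongside them.

```python
import math


def _distance_to_segment(p, a, b):
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        return math.hypot(px - ax, py - ay)
    # Project p onto segment ab and clamp the projection to the segment's end points.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / (dx * dx + dy * dy)))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def simplify(points, epsilon):
    """Ramer-Douglas-Peucker simplification of a polyline given as (x, y) tuples."""
    if len(points) < 3:
        return list(points)
    index, dmax = 0, -1.0
    for i in range(1, len(points) - 1):
        d = _distance_to_segment(points[i], points[0], points[-1])
        if d > dmax:
            index, dmax = i, d
    if dmax <= epsilon:
        return [points[0], points[-1]]
    left = simplify(points[: index + 1], epsilon)
    right = simplify(points[index:], epsilon)
    return left[:-1] + right  # the split point appears once
```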

Specifying the orientation

Users can define the orientation of the sound source at specific positions of an existing trajectory by physically manipulating the mobile device. Figure 4 illustrates how the orientation is set using the motion sensors embedded in the device.

Figure 4: a) Specifying the orientation by holding a point with the thumb while orienting the device. b) Visual feedback showing the orientations defined along the trajectory.

Bi-directional communication

Trajectoires can also receive OSC messages, either to import existing trajectories or to build more complex processes in which the data are processed in other software [5].
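Figure 3 shows such a process in OpenMusic, where a received trajectory is turned into ten rotated variants. The Python sketch below is only an illustration of that kind of transformation, not the OpenMusic program: it rotates a trajectory about its centroid. The angular spacing is hypothetical, since the figure does not state the angles; here copy k of n is rotated by k · 360/(n + 1) degrees so that no copy duplicates the original.

```python
import math


def rotated_copies(points, n=10):
    """Return n copies of a trajectory rotated about its centroid.

    HYPOTHETICAL spacing: copy k (k = 1..n) is rotated by k * 360 / (n + 1) degrees.
    """
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    copies = []
    for k in range(1, n + 1):
        theta = 2 * math.pi * k / (n + 1)
        c, s = math.cos(theta), math.sin(theta)
        copies.append([(cx + c * (x - cx) - s * (y - cy),
                        cy + s * (x - cx) + c * (y - cy)) for x, y in points])
    return copies
```

Each copy could then be sent back to the application as OSC messages, one trajectory per message or per sequence of points.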

Figure 3: OpenMusic visual program receiving a trajectory from the application, generating ten new trajectories by rotating the original one, and transmitting them back to the application.

The orientation is interpolated between the defined values and is also streamed via OSC messages when the curve is played.
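The paper states that orientations are interpolated between the user-defined values but not how. A minimal sketch, assuming angles in degrees and linear interpolation along the shorter arc (so that going from 350° to 10° passes through 0° rather than sweeping back through 180°), is shown below; the key-frame format and the function name are hypothetical.

```python
from bisect import bisect_right


def interpolate_orientation(keys, t):
    """keys: list of (time, angle_in_degrees) sorted by time, as set by the user.
    Returns the orientation at time t, interpolating along the shorter arc."""
    if not keys:
        raise ValueError("no orientation defined")
    times = [k[0] for k in keys]
    i = bisect_right(times, t)
    if i == 0:                      # before the first key: hold its value
        return keys[0][1] % 360.0
    if i == len(keys):              # after the last key: hold its value
        return keys[-1][1] % 360.0
    (t0, a0), (t1, a1) = keys[i - 1], keys[i]  # t0 <= t < t1
    delta = (a1 - a0 + 180.0) % 360.0 - 180.0  # signed difference in [-180, 180)
    return (a0 + delta * (t - t0) / (t1 - t0)) % 360.0
```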

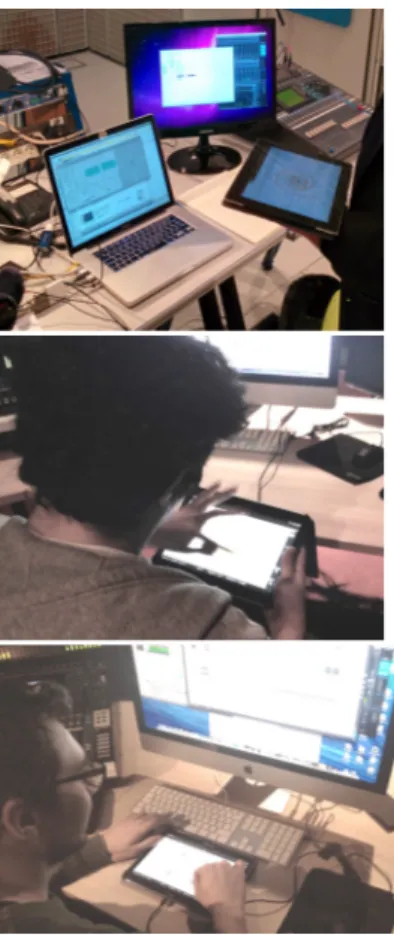

Collaborations with composers

We collaborated with three professional composers while they were composing pieces involving sound spatialization, in order to explore how Trajectoires could be integrated into their workflow and how it could be improved. All composers successfully integrated the tool into their working environments to control sound spatialization. Figure 5 shows the three composers using the application to test and assess motions of sound sources.

Their feedback led us to improve the user interface and the editing tools presented above. Composers also insisted on the need to store and reuse trajectories, and to export them in a text-based format. We integrated these suggestions into the current version of Trajectoires, which lets users store their work in sessions, navigate through these sessions, and export the data.
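The paper only states that the export is text-based. As one hypothetical possibility, the sketch below serializes each source's timed samples as CSV rows and reads them back; the column names and the (t, x, y) sample layout are assumptions, and orientation data are left out for brevity.

```python
import csv
import io


def export_session(trajectories):
    """Serialize {source_id: [(t, x, y), ...]} as CSV text (HYPOTHETICAL format)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["source", "time", "x", "y"])
    for source, samples in sorted(trajectories.items()):
        for t, x, y in samples:
            writer.writerow([source, t, x, y])
    return buffer.getvalue()


def import_session(text):
    """Parse CSV produced by export_session back into {source_id: [(t, x, y), ...]}."""
    result = {}
    for row in csv.DictReader(io.StringIO(text)):
        result.setdefault(int(row["source"]), []).append(
            (float(row["time"]), float(row["x"]), float(row["y"])))
    return result
```

A plain tabular format like this can be opened in a spreadsheet or parsed by other composition tools, which is one reason a text export is convenient.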

Conclusion

Trajectoires allows composers to draw and edit the motions of sound sources to compose sound spatialization. Interviews and collaborations with composers informed its design and allowed us to assess its use in real-world contexts. Future work will focus on observing and understanding how composers use it, and on how it can be articulated with other tools to move from such interactive exploration to more formal compositional processes.

Acknowledgements

We thank all the composers who participated in the design and evaluation of Trajectoires. We are also grateful to Eric Daubresse and Thibaut Carpentier for their help and suggestions. This work is partly supported by the French National Research Agency through project EFFICACe (ANR-13-JS02-0004) and Labex SMART (ANR-11-LABX-65).

References

1. Baalman, M.A.J. Spatial composition techniques and sound spatialisation technologies. Organised Sound 15, 3, 2010, 209–218.

2. Carpentier, T. Récents développements du Spatialisateur. In Proc. of JIM 2015.

3. Delerue, O. and Warusfel, O. Mixage mobile. In Proc. of ACM/IHM 2006, 75–82.

4. Favory, X., Garcia, J., and Bresson, J. Trajectoires: A Mobile Application for Controlling and Composing Sound Spatialization. In Proc. of ACM/IHM 2015, 5:1–5:8.

5. Garcia, J., Bresson, J., Schumacher, M., Carpentier, T., and Favory, X. Tools and Applications for Interactive-Algorithmic Control of Sound Spatialization in OpenMusic. In Proc. of InSonic 2015.

6. Peters, N., Marentakis, G., and McAdams, S. Current technologies and compositional practices for spatialization: A qualitative and quantitative analysis. Computer Music Journal 35, 1, 2011, 10–27.

7. Roberts, C., Wakefield, G., and Wright, M. The Web Browser As Synthesizer And Interface. In Proc. of NIME 2013, 313–318.

8. Wagner, J., Huot, S., and Mackay, W. BiTouch and BiPad: Designing Bimanual Interaction for Hand-held Tablets. In Proc. of ACM/CHI 2012, 2317–2326.

Figure 5: Composers using Trajectoires while working on