HAL Id: tel-02417362

https://hal.inria.fr/tel-02417362

Submitted on 18 Dec 2019

Unified isolation architectures and mechanisms against side channel attacks for decentralized cloud infrastructures

Mohammad-Mahdi Bazm

To cite this version:

Mohammad-Mahdi Bazm. Unified isolation architectures and mechanisms against side channel attacks for decentralized cloud infrastructures. Software Engineering [cs.SE]. Université de Nantes (UNAM), 2019. English. ⟨tel-02417362⟩

DOCTORAL THESIS OF THE UNIVERSITÉ DE NANTES

COMUE UNIVERSITÉ BRETAGNE LOIRE

DOCTORAL SCHOOL No. 601: Mathematics and Information and Communication Sciences and Technologies

Specialty: Computer Security

Unified isolation architecture and mechanisms against side channels for decentralized cloud infrastructures

Thesis presented and defended in Nantes on 8 July 2019

Research unit: Laboratoire des Sciences du Numérique de Nantes (LS2N)

By Mohammad Mahdi BAZM

Reviewers before the defense:

Yves Roudier, Professor, Université Nice Sophia Antipolis

Daniel Hagimont, Professor, IRIT/ENSEEIHT

Jury composition:

President:

Examiners: Pascal Molli, Professor, Université de Nantes; Louis Rilling, Cybersecurity Engineer, DGA

Thesis director: Mario Südholt, Professor, IMT Atlantique

Thesis co-director: Jean-Marc Menaud, Professor, IMT Atlantique

Thesis supervisor: Marc Lacoste, Research Engineer, Orange Labs

Christian Perez, Research Director, Inria

Abstract

Since the work of Ristenpart et al. [Ristenpart et al., 2009], side-channel attacks have become a growing security concern in virtualized environments such as cloud computing infrastructures, driven by rapid improvements in attack techniques. The mitigation and detection of such attacks have therefore received increasing attention in these environments and have been the subject of intense research.

These attacks exploit the sharing of hardware resources, such as the processor, in virtualized environments. These resources are often shared between different users at a very low level through the virtualization layer, and such leaky sharing allows attackers to bypass the security mechanisms implemented in the virtualization layer. In particular, the processor cache levels are shared between instances and act as an information disclosure channel, which side-channel attacks use to obtain sensitive information such as cryptographic keys.

Several research works already address the detection and mitigation of these attacks in information systems. Mitigation techniques for cache-based side-channel attacks fall mainly into three classes, according to the layer of the cloud infrastructure at which they are applied: application, system, and hardware. Detection is essentially performed at the OS/hypervisor layer, since both layers make it possible to analyze the behavior of virtualized instances.

In this thesis, we first provide a survey of the isolation challenge and of cache-based side-channel attacks in cloud computing infrastructures. We then present different approaches to detect and mitigate cross-VM and cross-container cache-based side-channel attacks. For detection, we combine Hardware Performance Counters (HPCs) and Intel Cache Monitoring Technology (CMT) with anomaly detection approaches to identify a malicious virtual machine or Linux container. Our experimental results show a high detection rate.

We then leverage an approach based on Moving Target Defense (MTD) theory to interrupt a cache-based side-channel attack between two Linux containers. MTD makes the system configuration more dynamic, and consequently harder for an adversary to attack, by applying shuffling at different levels of the system and the cloud. Our approach requires no modification to either the guest OS or the hypervisor. Experimental results show that it imposes a very low performance overhead.

We also discuss the challenge of isolated execution on remote hosts, different scenarios for securing the execution of Linux containers on remote hosts, and different trusted execution technologies for cloud computing environments. Finally, we propose a secure model for distributed computing that uses Linux containers secured by Intel SGX to perform trusted execution on untrusted Fog computing infrastructures.

Résumé

Since the work of Ristenpart [Ristenpart et al., 2009], side-channel attacks have become a major security issue for virtualized environments, with rapid improvements in attack techniques and a large body of research to detect and prevent them. These attacks exploit the sharing of hardware resources, such as the different levels of processor cache, among multiple tenants through the virtualization layer. It then becomes possible to bypass the security mechanisms between different virtualized instances, for example to obtain sensitive information such as cryptographic keys.

The analysis of isolation challenges and of the forms of cache-based side-channel attacks in virtualized infrastructures highlights different approaches to detect or counter them, between virtual machines or between Linux containers. These approaches differ according to the software layer where the countermeasures are applied: application, system, or hardware. Detection is still mainly performed at the system or virtualization layer, since this level makes it easy to analyze the behavior of virtualized instances. New distributed forms of attacks have also been identified.

For detection, we explore an approach combining hardware performance counters (HPCs) and Intel CMT (Cache Monitoring Technology), also relying on anomaly detection to identify malicious virtual machines or containers. The results obtained show a high attack detection rate.

For reaction, we propose a Moving Target Defense (MTD) approach to interrupt an attack between two Linux containers, which makes the system configuration more dynamic and harder to attack, at different levels of the system and the cloud. This approach requires no modification of the guest OS or the hypervisor and, moreover, incurs a very low performance overhead.

Finally, we explore the use of enclave-based hardware execution techniques such as Intel SGX (Software Guard Extensions) to ensure trusted distributed execution of Linux containers on software layers that are not necessarily trusted. This resulted in the proposal of a distributed execution model for Linux containers on Fog infrastructures. It is a first step toward a secure distributed Fog infrastructure and illustrates the potential of such technologies.

Acknowledgements

First, I would like to thank my PhD advisors, Professors Mario Südholt and Jean-Marc Menaud at IMT Atlantique, and my co-supervisor Dr. Marc Lacoste at Orange Labs for supporting me during these past three years. I appreciate all their contributions of time and ideas, which made my PhD experience very interesting and productive. I thank Marc for his contribution to the editing of our scientific articles and for his interesting ideas, Mario for helping and supporting me through the different steps of the PhD, and Jean-Marc for his useful ideas on the evaluation of results. Thank you.

I would also like to thank my team manager at Orange, Mr. Sebastien Allard. I will never forget his kindness and humanity; he was more like a close friend than a manager to me. The members of the device security team (SDS) contributed to making my personal and professional time at Orange enjoyable, and I had a good time with them at various social events. I would like to thank them all, especially Pascal, who was a very funny colleague.

I gratefully acknowledge the funding of my PhD, which was provided for three years by Orange Labs and the French National Association for Research and Technology (ANRT).

Finally, I would like to thank all the members of my family for encouraging me in all my pursuits, especially my parents, who supported me during these years; without their support I could not have finished my PhD.

Contents

Abstract

Résumé

Acknowledgements

Contents

List of Figures

List of Tables

Introduction

1 Context

1.1 Virtualization Technology and Cloud Computing

1.2 Isolated Execution Challenges in DCI

2 Research Objectives

3 Contributions

4 Organization of The Thesis

Background

1 Cloud and Virtualization

1.1 Cloud Computing

1.2 Deployment Models

1.3 Services Models

1.4 Virtualization and Cloud Computing

2 Computer Architecture and Modules

2.1 Memory Structure of Computer

2.2 Hardware Performance Counters (HPC)

3 Trusted Computing

1 Isolation Challenge in Cloud Infrastructures: State of The Art

1 Isolation Challenge

1.1 Isolation Challenges Related To Hardware

1.2 Isolation Challenges Related To Virtualization/OS

2 Side-Channel Attacks

2.1 Basic concepts: side channels, side channel attacks

3 Timing and Cache-Based Side-Channels Attacks

3.1 Timing Attacks

3.2 Cache-Based Side-Channel Attacks

4 Countermeasures

4.1 Application-Based Approaches

4.2 System-Based Approaches

4.3 Hardware-Based Approaches

5 Detection of Cache-Based Side-Channel Attacks

5.1 Signature-based

5.2 Threshold-based

6 Extension of The Attack: Distributed Side-Channel Attacks (DSCA)

6.1 Attack Platform

6.2 Attack Prerequisites

7 Conclusion

2 A Model For Secure Distributed Computing on Untrusted Fog Infrastructures Using Trusted Containers

1 Distributed Computing Paradigms on Fog Infrastructures

1.1 Physical Machine as Worker Node

1.2 Virtual Machine (VM) as Worker Node

1.3 Linux Container as Worker Node

2 Problem Statement

2.1 Remote Execution

2.2 Performance and Flexibility

3 Trusted Execution Over Fog Infrastructures

3.1 Trusted Execution Technologies

3.2 Trusted Execution Scenarios for Linux Containers

4 Secure Distributed Computation Using Containers

4.1 A Simple Model of Distributed Computing Environment

4.2 Management of Trusted Containers

4.3 Implementation

5 Evaluation

5.1 Performance

5.2 Security

5.3 Benefits and Limitations of The Model

6 Related Works

7 Conclusion

3 A Moving Target Defense Approach to Mitigate Cross-Container Cache-Based Side-Channel Attacks

1 Model

1.1 Host-Level Reaction Modeling

1.2 Cloud-Level Reaction Modeling

2 Design

2.1 Threat Model

2.2 Components of the MTD Framework

3 Implementation

3.1 Detection Module

3.2 Local Reaction Implementation

3.3 Cloud Level Implementation

4 Evaluation

4.1 Experimental Environment

4.2 Detection Module Evaluation

4.3 Local Module Evaluation

5 Related Work

6 Conclusion

4 Detection of Cross-VM Cache-Based Side-Channel Attacks

1 Design

1.1 Threat Model

1.2 Components of the Detection Module

2 Implementation

2.1 Main Thread

2.2 Second thread

2.3 Detection thread

3 Evaluation

3.1 Selection of Events

3.2 Evaluation Process

3.3 Evaluation Results

4 Conclusion

Conclusion

1 Results

2 Future Works

Publications

Summary in French

Context

Isolation Challenges

Objectives and Contributions

Conclusion and Perspectives

Appendix A

Bibliography

List of Figures

1 A schematic view of Decentralized Cloud Infrastructures. . . 2

2 Different features of every layer in Decentralized Cloud Infrastructures. . . . 3

3 Two main challenges related to secure execution of an application. . . 5

4 Different virtualization models. . . 11

5 A high-level view of computer system architecture. . . 11

6 CPU cache vs Main Memory (RAM) and Hard Disk (HDD). . . 12

7 Cache Organization in CPU. . . 13

8 Direct-mapped cache. . . 13

9 Fully associative cache. . . 14

10 A 2-ways set associative cache. . . 14

11 Inclusive cache vs Exclusive policy in the processor. In inclusive policy, read(x) results in fetching memory line x to L2 and the LLC while in exclusive policy, read(x) results in fetching memory line x to L2. . . 15

12 In TrustZone, there are two worlds: normal and secure. Each word has its own OS and they are separated physically by hardware. Sensitive applica-tions are run inside secure world. All communicaapplica-tions to secure world are only done through the API. . . 16

13 Chain of trust process until launching the application and sending the proof to the verifier. . . 17

1.1 Isolation challenges, side-channel attacks, attak types, and mitigation of the attacks in the cloud. . . 21

1.2 Sharing of resources and cache issue in virtualization . . . 22

1.3 Three phases of Prime+Probe technique. The attacker verifies the state of cache at attributed CPU time slot. . . 28

1.4 Different phases of Flush+Reload technique. The attacker verifies if a spe-cific cache line is in the cache. . . 29

1.5 A schematic view of attack Spectre. . . 31

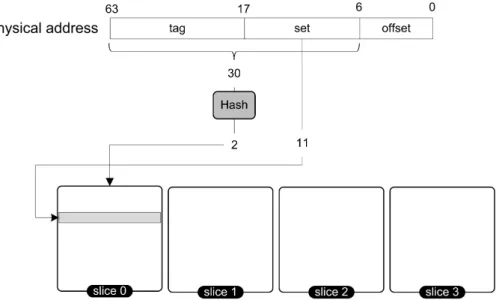

1.6 Output of hash function selects corresponding slice of the LLC in a 4 cores processor. . . 32

1.7 Taxonomy of defense approaches according to different layer of application. 33 1.8 Overall view of the distributed system. Each component of the system has a sub-key to protect data. . . 40

1.9 Malicious VMs co-located with victim VMs. The attack coordination is performed by a botnet under adversary control. . . 41

2.1 Intel SGX protects sensitive part of the appliction inside enclaves. Isolated execution of the application is composed of different steps which are marked in the figure. . . 48

2.2 Different scenarios of trusted execution of containers. . . 49

2.3 Different layers of distributed computing on the Fog infrastructure. . . 50 xi

LIST OF FIGURES

2.4 End-to-end encryption between two enclaves. . . 51

2.5 Join protocol: adding a container to the trusted cluster. . . 52

2.6 Protocol for task processing. . . 53

2.7 Remote attestation process in Intel SGX. . . 53

2.8 Performance of Mapper and Reducer nodes with and w/o Intel SGX. . . 55

3.1 Application of shuffling at three different levels of a virtualized. . . 60

3.2 States of the MTD system. . . 61

3.3 Different types of cache-based side-channel attack which are considered in our threat model. . . 64

3.4 When a container is identified as malicious by the MTD module; 1) MTD identifies core of the container, 2) MTD changes core of the container, and 3) MTD runs the container in an isolated zone of the LLC. . . 65

3.5 Every Linux container is tagged according all monitored events during last three times. . . 67

3.6 RMIDs to track the resource usage [adapted from Intel website]. . . 68

3.7 A NUMA architecture with two CPUs in a container-based environment. . . 69

3.8 If computing platform has not a processor supporting CAT, 1) when a ma-licious container is identified, 2) MTD module sends a migration request to the migration server, 3) the migration server finds a host in the cloud infrastructure, and 4) migrates the malicious container to the destination host. . . 70

3.9 Isolated and Overlapped scenarios in the allocation of the LLC to the classes. COS indicates class of service and M indicates a bit in the maskbit. . . 72

3.10 Sequence diagram of migrating a container from source to destination host. 73 3.11 Intel CAT configuration on the platform 1. . . 74

3.12 Verification of the Intel CAT configuration on the computing platform 1 regarding LLC-misses (MISSES) and cache allocation (LLC[KB]). (a) before running stress container, (b) after running stress container. . . 74

3.13 Perf information of Linux containers in attack situation . . . 75

3.14 Roc curve of the detection module. . . 76

3.15 Evaluation results of OpenSSL Speed for RSA512-4096 bits in the scenarios. 77 3.16 Evaluation results of sysbench in the scenarios. . . 77

3.17 Evaluation results of sysbench in the scenarios. . . 78

3.18 State of the LLC and specially cache-misses numbers before running the attack. Attacker container is running on all cores (cores #0-15) of the processor. . . 78

3.19 State of the LLC and specially LLC cache-misses numbers during the attack. Attacker container is running on all cores (cores #0-15) of the processor. . 79

3.20 State of the LLC during the attack. Attacker container is running on isolated core (core #0) of the processor. . . 80

3.21 Access time of different addresses in the eviction set. (Left) reaction module is not active, (Right) running the attacker on the isolated core. . . 80

4.1 CMT allows monitorting of Cache Occupancy per RMID. . . 84

4.2 Detection module architecture. . . 86

4.3 LLC Occupancy of VMs in different scenarios. . . 90

4.4 Perf information of VMs in different scenarios . . . 91

4.5 LLC cache misses vs. system functions for attacker VM . . . 92

4.6 LLC occupancy of attacker and victim VMs during the attack . . . 93

4.7 Roc curve without & with Intel CMT . . . 93

LIST OF FIGURES

4.8 Implementation of Intel QoS interface in Linux kernel [adapted from Intel website]. . . 105 4.9 Comparing different trusted execution technologies regarding security

prop-erties (source: [Costan and Devadas, 2016]). . . 106

List of Tables

1 Two isolation challenges related to the cloud environments.

1.1 Identified challenges of virtualization technology regarding the isolation issue.

1.2 Impact of the techniques on isolation in virtualized environments. If a technique plays a helper role in the attack steps, it has an indirect impact on isolation.

1.3 An overview of the different side-channel attacks.

1.4 Comparing different techniques of the attack regarding resolution and exploited techniques.

1.5 An overview of suggested countermeasures according to each level of application.

1.6 Different detection approaches of side-channel attacks between two processes/VMs.

1.7 Solutions for the security challenges in Table 1.1.

2.1 Comparing different computing models vs. performance and security.

2.2 Trusted execution technologies vs. security.

2.3 Trusted computing technologies vs. cloud infrastructure level and virtualization technology.

3.1 Specific hardware performance events related to cache.

3.2 Configuration of computing platform 1, with Intel CAT support.

3.3 Configuration of computing platform 2, without Intel CAT support.

4.1 Specific hardware performance events related to cache.

4.2 Configuration of the experimental platform.

4.3 Output of perf-kvm for the attacker VM: top functions generating cache misses in the attacker VM.

4.4 List of processors supporting Intel SGX.

4.5 List of processors supporting Intel CAT and CMT.

Introduction

1 Context

Cloud computing provides a pool of resources, such as compute, networking, and storage, that are accessible through the Internet; it has attracted growing attention with the increase in server power and Internet bandwidth. This model allows customers to use pooled software and hardware resources, abstracted as services hosted by cloud providers. Classical cloud infrastructures consist of large data centers owned and operated by private cloud service providers such as Amazon. However, such a (semi-centralized) architecture faces its own challenges regarding the growing demand for computing resources, energy considerations, and data center building costs [Simonet et al., 2016].

Over the last few years, cloud architectures and infrastructures have evolved significantly toward decentralized paradigms that move virtualized resources to the edge of the network (Fig. 1). The most iconic paradigm of these Decentralized Cloud Infrastructures (DCI) is Fog Computing, introduced by Cisco [Bonomi et al., 2012]. In this architecture, virtualized resources are no longer available through a few large conventional data centers operated by cloud service providers such as Amazon. Instead, a mediation layer is introduced, comprising several levels of micro data centers that can even extend to terminal devices.

This novel architecture offers many benefits for Internet of Things (IoT) applications, such as improving the response time and efficiency of cloud-based systems through load balancing: useful data is processed in core data centers, while less important data is processed at the edge of the network. It also reduces the cost of building new data centers to provide computing resources to customers.

Fig. 2 illustrates the different levels of DCI with respect to virtualization technology and other components such as hardware. At every level, cloud computing infrastructures rely on virtualization technology to share physical resources efficiently among customers.

1.1 Virtualization Technology and Cloud Computing

Figure 1: A schematic view of Decentralized Cloud Infrastructures.

Virtualization is the cornerstone of cloud computing: it enables multiple operating systems to be consolidated on a virtualization layer so that physical resources can be shared among them. This, in turn, allows for dynamic resource allocation and service provisioning. There are different types of virtualization: paravirtualization, full virtualization, and hardware-assisted virtualization. With paravirtualization, the guest OS is modified: it requires substantial modifications to the guest OS kernel, and the hypervisor must support this capability. This technique is therefore not fully transparent from a VM's point of view [Sahoo et al., 2010]. With full virtualization, all system resources (processors, memory, and I/O devices) are virtualized, and an unmodified operating system, including all its installed software, runs on top of the host operating system. This is a fully transparent technique. Hardware-assisted virtualization is nowadays supported directly at the processor level by Intel and AMD.
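Processor support for hardware-assisted virtualization can be checked on a Linux host. The sketch below assumes that the kernel exposes CPU feature flags in /proc/cpuinfo, where Intel VT-x appears as the `vmx` flag and AMD-V as the `svm` flag; the helper name `hw_virt_support` is hypothetical. Inside a VM, these flags may be hidden unless nested virtualization is enabled.

```python
from typing import Optional


def hw_virt_support(cpuinfo_text: str) -> Optional[str]:
    """Return "vmx" (Intel VT-x), "svm" (AMD-V), or None from /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        # x86 kernels print one "flags : ..." line per logical CPU.
        if line.startswith("flags"):
            flags = set(line.partition(":")[2].split())
            if "vmx" in flags:
                return "vmx"
            if "svm" in flags:
                return "svm"
    return None


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            print(hw_virt_support(f.read()) or "no hardware virtualization flag found")
    except OSError:
        print("/proc/cpuinfo is not available (not a Linux host?)")
```

Parsing the text rather than reading the file inside the helper keeps the check testable on any machine.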

Virtualization technologies have evolved into lighter forms such as Linux containers, which can be deployed at all levels of the infrastructure, notably on resource-constrained computing devices such as smartphones. In contrast to VM-based virtualization, Linux containers are often used as a lightweight virtualization solution. Despite their advantages, however, Linux containers are not the final word in lightweight virtualization. Recently, unikernels have been developed as a more flexible and reliable alternative: they are even more lightweight than Linux containers and offer strong security properties by reducing the attack surface through a thin virtualization layer.

On the other hand, multi-tenancy in virtualized environments introduces security challenges regarding isolation between tenants, which makes the choice between VMs and containers a difficult one. Securing containers is much more challenging than securing other virtualization methods, even though containers do create an isolated environment for users. Cloud security frequently relies on the OS or hypervisor to implement security mechanisms between containers or VMs.

1.2 Isolated Execution Challenges in DCI

Figure 2: Different features of every layer in Decentralized Cloud Infrastructures.

Although cloud computing has many benefits, it also raises security challenges, which receive even more attention in decentralized cloud infrastructures because of the characteristics of DCI. In particular, isolated execution becomes a major concern in such environments. We identify two main challenges for the isolated execution of applications in cloud computing infrastructures:

1.2.1 Challenge 1: Untrusted Hosts and OS/hypervisor

One of the major challenges in cloud infrastructures is the secure execution of an application (running either inside a VM or a Linux container) on remote hosts maintained by third parties. This issue has been receiving more attention in decentralized cloud infrastructures such as the Fog, which extend cloud services by migrating virtualized resources to the network edge, where they are maintained and operated by many different third parties. Yet traditional software isolation techniques such as process isolation and sandboxing rely on a trusted component such as the host OS or the hypervisor. Can we trust remote hosts in cloud infrastructures, and can we run the application on such hosts when the host OS is considered untrusted because it may be compromised by an adversary? For instance, a malicious adversary may access memory pages of the application or the container and retrieve sensitive data (Fig. 3).

Furthermore, co-residency (i.e., different customers placed on the same physical infrastructure, such as VMs placed on the same physical machine) has an important security impact in such environments [Xiao et al., 2013]. An attacker can then bypass embedded security mechanisms, threatening the security of data and computations. Strong isolation of resources is therefore a fundamental guarantee for an infrastructure shared among several tenants. The security of the whole system rests on a strict separation between execution environments (virtual machines, containers), which is guaranteed by the virtualization layer (hypervisor) or the OS and ensures safe sharing of hardware resources such as memory among virtualized instances. Any breach of this isolation enables attacks from a malicious virtualized instance against other virtualized instances through the hypervisor/OS.

To overcome such security concerns, we may leverage trusted execution technologies on remote hosts to encrypt the memory pages of the application (to provide confidentiality) or to reveal any modification of the application (to check integrity). For instance, application shielding is a technique that leverages Intel SGX to enforce isolation between different applications running on the same OS, and between applications and the OS. However, isolation remains the main challenge to address in virtualized environments because of resource sharing, mainly at the hardware level.
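The integrity check mentioned above boils down to comparing a measurement of the loaded code against a trusted reference value. The sketch below is a simplified stand-in, not the SGX mechanism itself: it hashes a byte string with SHA-256 and compares digests in constant time. In Intel SGX, the corresponding enclave measurement is computed by the hardware when the enclave is built and is reported to a remote verifier through attestation. The image contents and the helper names `measure` and `verify` are hypothetical, and the sketch does not model memory encryption.

```python
import hashlib
import hmac


def measure(code: bytes) -> str:
    """SHA-256 digest of the code: a simplified stand-in for an enclave measurement."""
    return hashlib.sha256(code).hexdigest()


def verify(code: bytes, expected: str) -> bool:
    """True if the code still matches the reference measurement.

    hmac.compare_digest avoids leaking, through timing, where the digests differ.
    """
    return hmac.compare_digest(measure(code), expected)


# Hypothetical application image and its reference measurement, recorded at build time.
trusted_image = b"\x55\x48\x89\xe5 application code"
reference = measure(trusted_image)

# An adversary controlling the host patches the image in memory.
tampered_image = trusted_image.replace(b"application", b"malicious!!")

print(verify(trusted_image, reference))   # True
print(verify(tampered_image, reference))  # False
```

Any single-byte change produces a different digest, so the verifier detects modification; keeping the reference value out of the adversary's reach is what attestation provides in practice.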

1.2.2 Challenge 2: Side-channels in Processor Micro-Architecture

As seen above, every virtualization technology relies on sharing the physical resources of computing platforms, and such sharing affects the isolation between virtualized instances running on computing devices. Generally, isolation can be implemented at two levels: hardware and software. As an example of hardware isolation, dedicating an L1 cache to each processor core provides strict isolation between two cores of the processor. The slicing of the LLC in Intel processors is another kind of hardware isolation to protect per-core data. Software-based isolation is usually implemented at the OS or hypervisor level to isolate two threads, processes, or virtual machines.

In a multi-tenant environment, hardware-based trusted execution technology can enforce isolation at the software level (i.e., the OS/hypervisor). However, the isolation challenge remains unsolved because the processor is shared among instances. The different cache levels of the processor are shared between virtualized instances, creating a channel, the side channel, through which information leaks between instances. Such a channel is exploited in Side-Channel Attacks (SCA). Side-channel attacks constitute a class of security threats to isolation that is particularly relevant for emerging virtualized systems, as they directly leverage weaknesses of current virtualization technology. An SCA uses a hidden channel that leaks information about the execution of an operation on the computing platform; the leaked information can be, for example, the execution time of the operation. These attacks mostly target cryptographic operations in order to retrieve encryption keys.
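To make the notion of a timing leak concrete, the following sketch, a simplified illustration rather than an attack from this thesis, contrasts an early-exit byte comparison with a constant-time one. The number of loop iterations serves as a deterministic proxy for execution time (an assumption: real timing measurements are noisy and need many samples). With the early-exit version, the iteration count reveals how many leading bytes of a guess are correct, which lets an attacker recover a secret byte by byte. The function names and the secret are hypothetical.

```python
from __future__ import annotations


def leaky_equal(secret: bytes, guess: bytes) -> tuple[bool, int]:
    """Early-exit comparison. Returns (equal, number of byte comparisons).

    The comparison count models execution time: it grows with the length of
    the correct prefix of the guess, which is what a timing side channel leaks.
    """
    if len(secret) != len(guess):
        return False, 0
    steps = 0
    for s, g in zip(secret, guess):
        steps += 1
        if s != g:
            return False, steps  # stops at the first mismatching byte
    return True, steps


def constant_time_equal(secret: bytes, guess: bytes) -> tuple[bool, int]:
    """Always compares every byte, so the work does not depend on the data."""
    if len(secret) != len(guess):
        return False, 0
    steps, diff = 0, 0
    for s, g in zip(secret, guess):
        steps += 1
        diff |= s ^ g  # accumulate differences without branching on them
    return diff == 0, steps


secret = b"k3y!"
for guess in (b"a...", b"k...", b"k3..", b"k3y."):
    # The leaky count grows by one each time one more leading byte is right.
    print(guess, leaky_equal(secret, guess)[1], constant_time_equal(secret, guess)[1])
```

In real Python code, `hmac.compare_digest` is the standard constant-time comparison. Note that removing this timing dependence does not close cache-based channels, which leak through secret-dependent memory accesses instead.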

Linux containers all execute on the same operating system: a host therefore shares its resources among containers owned by different users. Consequently, even if containers are protected by trusted execution technology, they remain vulnerable to side-channel attacks, because trusted execution technologies do not protect against such attacks [Schwarz et al., 2017] (see also Fig. 4.9).

In virtualized environments, side channels can be established through techniques used in the virtualization layer or through micro-architectural vulnerabilities in processors. Side-channel attacks can be applied to a wide range of computing devices and can target systems without any special privileges. One of the main sources of information leakage in computing platforms is the processor, and particularly its cache architecture: the processor cache levels are widely exploited to retrieve information about a target operation running on the processor.
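Cache-based leakage can be illustrated with a toy simulation of the Prime+Probe technique. The sketch assumes a hypothetical 8-set, 4-way cache with LRU replacement and 64-byte lines, and hypothetical attacker and victim addresses; real attacks instead time each access (for example with a cycle counter) and must construct eviction sets without knowing physical addresses. The attacker fills every set with its own lines, lets the victim perform a secret-dependent memory access, and then re-accesses its lines: misses reveal which set the victim touched, and hence the secret index at cache-set granularity.

```python
from __future__ import annotations

from collections import OrderedDict

LINE = 64  # bytes per cache line (assumed)


class SetAssociativeCache:
    """Toy set-associative cache with LRU replacement; it only tracks hits and misses."""

    def __init__(self, num_sets: int, ways: int) -> None:
        self.num_sets, self.ways = num_sets, ways
        self.sets = [OrderedDict() for _ in range(num_sets)]

    def access(self, addr: int) -> bool:
        """Access one address; return True on a hit and False on a miss."""
        line = addr // LINE
        cache_set = self.sets[line % self.num_sets]
        if line in cache_set:
            cache_set.move_to_end(line)  # now the most recently used line
            return True
        if len(cache_set) >= self.ways:
            cache_set.popitem(last=False)  # evict the least recently used line
        cache_set[line] = None
        return False


def prime_probe(secret: int, num_sets: int = 8, ways: int = 4) -> list[int]:
    """Return the number of probe misses the attacker observes in each cache set."""
    cache = SetAssociativeCache(num_sets, ways)
    attacker_base, victim_base = 0x100000, 0x200000  # hypothetical set-aligned buffers
    # Eviction set i: `ways` attacker lines that all map to cache set i.
    eviction_sets = [[attacker_base + (i + k * num_sets) * LINE for k in range(ways)]
                     for i in range(num_sets)]
    # 1) Prime: fill every set with attacker-owned lines.
    for ev in eviction_sets:
        for addr in ev:
            cache.access(addr)
    # 2) Victim: a secret-indexed table lookup touches exactly one set.
    cache.access(victim_base + secret * LINE)
    # 3) Probe: re-access each eviction set and count the misses.
    return [sum(not cache.access(addr) for addr in ev) for ev in eviction_sets]


misses = prime_probe(secret=5)
print(misses)                                    # [0, 0, 0, 0, 0, 4, 0, 0]
print("leaked set:", misses.index(max(misses)))  # leaked set: 5
```

With LRU replacement and probing in priming order, the single line evicted by the victim cascades into misses on all four ways of that set, which in this toy model only strengthens the signal.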


Figure 3: Two main challenges related to secure execution of an application.

2 Research Objectives

Although side-channel attacks have already been well investigated separately for the cloud and for embedded systems, they are still far from well understood overall for virtualized systems. Research on cache-based side-channel attacks shows their security impact on cloud computing and the vulnerability of cloud infrastructures [Ristenpart et al., 2009], owing to improvements in attack techniques [Kocher et al., 2018] [Gruss et al., 2015b] [Yarom and Falkner, 2014]. The mitigation of these attacks has therefore recently received more attention in cloud computing infrastructures as a new security concern. Countermeasures can be applied at different layers of an information system: application, OS/hypervisor, and hardware. Each level of mitigation comes with its own challenges, in terms of characteristics such as performance and compatibility with legacy platforms. Detection of such attacks, in contrast, must necessarily be performed at the OS/hypervisor layer, because the detection module needs to analyze the behavior of virtualized instances.
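Detection at the OS/hypervisor layer amounts to comparing the observed behavior of each virtualized instance against a model of benign behavior. The sketch below uses a simple z-score rule on a single feature, LLC misses per second; it is a stand-in for illustration, not the detection method evaluated in this thesis. The baseline values, instance names, and threshold `k = 3` are hypothetical; on Linux, such per-instance counts could be collected with `perf stat` and the generic `LLC-load-misses` event.

```python
from __future__ import annotations

from statistics import mean, stdev


def flag_anomalies(baseline: list[float], observed: dict[str, float], k: float = 3.0) -> list[str]:
    """Return the instances whose metric lies more than k standard deviations
    away from the mean of a baseline of benign measurements."""
    mu, sigma = mean(baseline), stdev(baseline)  # stdev needs at least two samples
    return sorted(name for name, value in observed.items() if abs(value - mu) > k * sigma)


# Hypothetical LLC misses per second (in millions) for benign runs and for three instances.
benign_profile = [1.1, 0.9, 1.0, 1.2, 0.8]
current = {"vm-a": 1.05, "vm-b": 0.95, "vm-c": 6.3}
print(flag_anomalies(benign_profile, current))  # ['vm-c']
```

A single-feature threshold like this is easy to trigger with benign cache-heavy workloads, which is why combining several signals, such as multiple HPC events and CMT cache occupancy, is preferable.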

The main objectives of this thesis are the following:

• Objective 1: Identifying isolation challenges related to virtualization, more precisely related to challenge 2 (B in Fig. 3), and showing how they have been exploited in already known cache-based side-channel attacks.

• Objective 2: Finding security approaches to mitigate these attacks at the OS/hypervisor layer using existing techniques and technologies available in computing platforms.


Challenge | Approach | Contribution(s)

Trusted execution on hosts | Trusted Execution Technology | A trust model for secure distributed computing through using Linux containers hardened by Intel SGX (IEEE CloudCom 2018)

Cache-based side-channel attacks | Detection/Mitigation of cache-based side-channel attacks | (1) An in-depth survey of security issues related to side-channels for virtualized systems, and of detection and mitigation approaches for cache-based side-channel attacks in computing platforms (IEEE CSNet 2017; Annals of Telecommunications, Springer, 2018); (2) A Moving Target Defense (MTD) approach for a container-based environment to mitigate cache-based side-channel attacks between two Linux containers (ready to submit); (3) A cross-VM side-channel attack detection approach that leverages Intel Cache Monitoring Technology (CMT) and Hardware Performance Counters (HPCs) to detect side-channel attacks conducted between two VMs through the Last-Level Cache (IEEE FMEC 2018)

Table 1: Two isolation challenges related to cloud environments.

3 Contributions

In this thesis, we provide the following contributions (see Table 1):

Regarding challenge 1 (i.e., untrusted hosts and OS/hypervisor), we investigate the use of trusted execution technologies in a distributed setting, proposing a model for secure execution of distributed applications on untrusted decentralized cloud infrastructures. We present different trusted execution technologies and different scenarios that leverage them to achieve trusted execution of an application on remote hosts in Fog computing infrastructures. Furthermore, we study different scenarios to secure the execution of Linux containers on remote hosts. Based on this research, we propose a trust model for secure distributed computing using Linux containers hardened by Intel SGX.

However, the presented model is only secure against class A vulnerabilities (A in Fig. 3) and remains vulnerable to cache-based side-channel attacks. Therefore, our further contributions tackle challenge 2 (i.e., side-channels in the processor micro-architecture).

To achieve the objective 1:

• We provide an in-depth survey of the techniques/mechanisms exploited in cache-based side-channel attacks, of all attack techniques exploiting the cache micro-architecture, and of existing countermeasures, classifying approaches according to their level of mitigation, i.e., application, OS/hypervisor, and hardware. We conclude that, from the cloud service provider's point of view, the OS/hypervisor layer deserves more attention as the best layer for the detection and mitigation of cache-based side-channel attacks.

To achieve the objective 2:

• We explore how these attacks can be detected on today's computing platforms. We leverage hardware performance counters (HPCs) and Intel Cache Monitoring Technology (CMT) to collect statistics of applications/containers/VMs. We then analyze the collected statistics to detect any malicious behavior at the cache levels of the processor. Our experimental results show a high detection rate of the attack between two virtual machines in a VM-based virtualized environment.

• We propose an approach leveraging moving target defense theory to mitigate side-channel attacks in a container-based environment. First, we detect a malicious Linux container, then react either by running it on an isolated processor core and inside an isolated zone of the LLC through Intel Cache Allocation Technology (CAT), or by migrating it to another host in the infrastructure. Our approach introduces a negligible performance overhead on a computing platform.

4 Organization of The Thesis

This thesis is organized as follows:

• In chapter Background:

– We give some background on memory architecture and cache architecture in computing platforms, hardware performance counters, cloud computing and its different deployment models.

• In chapter 1:

– We provide an in-depth survey of security issues related to side-channels for virtualized systems. We identify security challenges, and cover possible attack types and existing countermeasures. We also sketch some directions for future attacks, extending towards distributed SCAs. The results of this chapter have been presented at the CSNet 2017 conference and published in the journal Annals of Telecommunications (Springer), respectively.

• In chapter 2:

– We discuss the challenge of trusted computing on remote hosts, different scenarios to secure the execution of Linux containers on remote hosts, and different trusted execution technologies for cloud computing environments. Finally, we propose a model for secure distributed computing using Linux containers hardened by Intel SGX, to perform trusted execution on untrusted Fog infrastructures. The model serves as the threat model for a container-based environment. As we will see further, this model is vulnerable to side-channel attacks. The result of this chapter has been presented at the CloudCom 2018 conference.

• In chapter 3:

– We propose a Moving Target Defense (MTD) approach for a container-based environment to mitigate side-channel attacks between two Linux containers. Our MTD approach reacts at the local host and cloud levels by changing the host configuration, leveraging Intel Cache Allocation Technology (CAT), and migrating Linux containers between hosts in the cloud infrastructure. We present a prototype by giving some details on its architecture and implementation. Finally, we evaluate the proposed approach regarding different features such as performance. The result of this chapter is currently being prepared for submission to an international conference.


• In chapter 4:

– We present a cross-VM side-channel attack detection approach. The approach leverages Intel CMT and HPCs to detect side-channel attacks conducted between two VMs through the Last-Level Cache. We present a prototype that, for the first time, detects side-channel attacks using HPCs and Intel CMT. We then introduce the concept and implementation of the prototype. Finally, we evaluate the prototype in terms of security and performance. The result of this chapter has been presented at the FMEC 2018 conference.

• In chapter Conclusion:

– Finally, we conclude this thesis with some perspectives.

Background

This chapter introduces the reader to the different aspects, technologies, and techniques used in this thesis. First, some background on cloud computing and virtualization is given. Then, the memory architecture of computers, and especially the cache structure of the processor, is detailed. Finally, trusted execution technology is explained through a cloud example.

This chapter is organized as follows. Section 1 reviews cloud computing and virtualization technology. Section 2 explains the memory structure of computers and Hardware Performance Counters (HPCs). Finally, Section 3 gives an outlook on trusted execution technology.

1 Cloud and Virtualization

This section gives some background on cloud computing and the virtualization technology:

1.1 Cloud Computing

Cloud computing is the provision of computing services over the Internet. Its services enable individuals and businesses to use software and hardware managed by third parties at remote sites (e.g., data centers), with improved computation efficiency and without users having to install software. Cloud services include online file storage, social networking sites, web-mail, and online applications. NIST defines cloud computing as a model for enabling convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications, and services) that can be rapidly provisioned and released with minimal management effort [Mell et al., 2011].

1.2 Deployment Models

There are essentially four deployment models for cloud computing: Community cloud, Private cloud, Public cloud, and Hybrid cloud. A community cloud is shared among several organizations for a specific usage. A private cloud is owned and operated by a single company to serve its own IT needs, while a public cloud is operated by a cloud provider to provide IT services to external customers, typically in large numbers. A hybrid cloud combines a private cloud with the use of public cloud services.

1.3 Service Models

NIST defines three service models for cloud computing: SaaS (Software as a Service), PaaS (Platform as a Service), and IaaS (Infrastructure as a Service).

SaaS is provided entirely by a cloud service provider (CSP), and users do not have any control over application security, maintenance, updates, etc. Instead, all these tasks are controlled and handled by the cloud service provider. Gmail falls into this category of service. PaaS gives users full control over the applications/software they deploy on the platform. IaaS allows users to provision processing, storage, and network resources, on which they can run arbitrary software, including operating systems and applications. In this model, the hardware infrastructure is dematerialized and remains under the control of the cloud service provider.

SaaS and PaaS constitute higher-level services, while IaaS provides low-level services. Security for SaaS and PaaS services is guaranteed by the cloud provider because customers do not have permission to access the underlying components of the service. In contrast, IaaS security is a concern for the provider, as the customer has complete control over the rented service.

1.4 Virtualization and Cloud Computing

Virtualization technology enables multiple virtual machines (VMs), possibly with different operating systems, to run independently of and isolated from each other on a single physical machine, through a virtualization layer that shares physical resources among them. This layer is also called the Virtual Machine Monitor (VMM). The virtualization layer creates such an isolated execution environment either fully in software or partly through hardware. A machine can be seen as a stack of abstraction layers (hardware, instruction set, operating system, libraries, and user applications), each of which can be virtualized. In the context of cloud computing, the VMM lies between the bare metal and the OS of the VM, and gives a virtualized view of the hardware. The main and essential aspect of virtualization for creating an isolated environment is address translation, which is used to give a virtual machine the illusion that it runs on a real system. In fact, the VMM manages the isolation between VMs through the address translation mechanism.

At this level of virtualization, there are two main techniques: full virtualization and paravirtualization. In full virtualization, all system resources (i.e., processors, memory) are virtualized, and an unmodified operating system, including all its installed software, runs on top of the physical machine through the VMM. This is a fully transparent technique. Full virtualization leverages hardware-assisted virtualization to improve efficiency through additional instructions embedded mainly in the processor. Intel and AMD provide such a capability through VT-x and AMD-V, respectively.

With paravirtualization, the guest OS is modified to increase the scalability and performance of VMs, which requires substantial OS modifications. This virtualization architecture provides new interfaces to customized guest operating systems, and the hypervisor must support this capability. This technique is therefore not fully transparent from a VM's point of view [Sahoo et al., 2010].

Linux containers are an OS-level virtualization technique, used as a lightweight virtualization solution for running several virtualized instances, called containers, on a physical machine (Fig. 4). Linux container virtualization combines the cgroups and namespaces features of the Linux kernel to provide an isolated environment for running multiple containers on the same operating system.

Unikernels are another lightweight virtualization technique for deploying cloud services, developed to offer more security, flexibility, and reliability than Linux containers. They achieve these properties by reducing the attack surface and shrinking the resource footprint of services through a thin virtualization layer.

Figure 4: Different virtualization models.

2 Computer Architecture and Modules

Memory and processor are the main resources of a computer (Fig. 5); they are managed by a software system (i.e., operating system, hypervisor) built on top of the hardware layer. All these resources are connected together through a system bus. The CPU uses the bus to communicate with main memory (DRAM) and with I/O devices such as the display adapter. The operating system runs processes on the hardware by allocating computer resources to them. To run multiple operating systems on the same physical machine, a hypervisor schedules computer resources among them.

Figure 5: A high-level view of computer system architecture.

2.1 Memory Structure of Computer

In the memory structure of a computing platform, we may distinguish two address spaces: virtual and physical. The virtual memory space of the system is split into fixed-size segments called pages. To perform address translation between those spaces, page tables are used to map pages from the virtual address space to the physical address space. Walking page tables is time-consuming, so to speed up address translation, a special-purpose cache called the Translation Lookaside Buffer (TLB) stores recent translations. If an entry corresponding to a requested address is present in the TLB, a TLB hit occurs. Otherwise, the requested address is not present in the TLB, resulting in a TLB miss, and the page table structure is walked in main memory to perform the translation.
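To make the hit/miss logic concrete, the sketch below models a tiny TLB in front of a page table. It is only a minimal illustration under assumptions that do not come from the text: 4 KB pages, a single-level page table held in a Python dict, a fully associative TLB with LRU replacement, and no page-fault handling. The class name `TLB` is hypothetical.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumption: 4 KB pages, so the low 12 bits are the page offset


class TLB:
    """Toy fully associative TLB with LRU replacement in front of a page table."""

    def __init__(self, page_table, capacity=4):
        self.page_table = page_table  # virtual page number -> physical frame number
        self.capacity = capacity
        self.entries = OrderedDict()  # cached translations, least recently used first
        self.hits = 0
        self.misses = 0

    def translate(self, vaddr):
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn in self.entries:
            # TLB hit: reuse the cached translation and mark it most recently used
            self.hits += 1
            self.entries.move_to_end(vpn)
        else:
            # TLB miss: "walk" the page table (KeyError if the page is unmapped)
            self.misses += 1
            self.entries[vpn] = self.page_table[vpn]
            if len(self.entries) > self.capacity:
                self.entries.popitem(last=False)  # evict the least recently used entry
        return self.entries[vpn] * PAGE_SIZE + offset


tlb = TLB({0: 7, 1: 3}, capacity=2)
for va in (0x0010, 0x0020, 0x1004):
    print(hex(va), "->", hex(tlb.translate(va)))
print("hits:", tlb.hits, "misses:", tlb.misses)
```

The second access reuses the translation of page 0 cached by the first one, which is exactly the saving the TLB provides over a full page-table walk.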

2.1.1 Cache Memory in Modern Processors

The memory hierarchy, especially cache memory, plays an important role in the performance of a computer system. Caches are specialized memory layers placed (Figure 6) between the CPU and the main memory (RAM). They significantly improve execution efficiency by reducing the speed mismatch between the CPU (clocked at GHz frequencies) and RAM (whose accesses require hundreds of clock cycles).

Figure 6: CPU cache vs Main Memory (RAM) and Hard Disk (HDD).

Today, there are typically three levels of cache: L1, L2, and L3 (LLC, last-level cache). The L1 and L2 caches are usually private to each core, and the LLC is shared among all cores of the processor. The LLC is much larger (several megabytes) than the L1 and L2 caches (which are sized in kilobytes). Caches are divided into equal blocks called ways, each consisting of cache lines. Cache lines with the same index in the different ways form a set (Figure 7). When the processor needs to access (read or write) data at a specific location in RAM, it checks whether a copy of the data is already present in the L1, L2, or LLC caches. If the data is found at any cache level (a cache hit), the CPU performs the operation on the cache using only a few CPU cycles. Otherwise, a cache miss has occurred and the processor needs to bring the data from RAM at a much slower pace.

2.1.1.1 Addressing in Cache As illustrated in Fig. 7, different bits of a physical/logical address are used to access a cache line in the different cache levels. Caches are either virtually or physically indexed, deriving the index and tag from the virtual or the physical address, respectively. In virtually indexed caches, the index is derived directly from the virtual address, without translation before the cache lookup, which makes them faster than physically indexed caches. In physically indexed caches, the virtual address must first be translated to the physical one; the index bits and tag bits are then derived from the translated address. The index determines a set in the cache, and the tag contains a part of the address of the fetched data.
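The split of an address into tag, set index, and line offset can be sketched as follows, assuming power-of-two sizes. The defaults (64-byte lines and 2048 sets, i.e., 6 offset bits and 11 index bits) are illustrative assumptions, not values given in the text; for a physically indexed cache, the input would be the translated physical address.

```python
def split_address(addr, line_size=64, num_sets=2048):
    """Split an address into (tag, set index, line offset).

    Assumes line_size and num_sets are powers of two (illustrative defaults).
    """
    offset_bits = line_size.bit_length() - 1
    index_bits = num_sets.bit_length() - 1
    offset = addr & (line_size - 1)                 # byte within the cache line
    index = (addr >> offset_bits) & (num_sets - 1)  # selects the cache set
    tag = addr >> (offset_bits + index_bits)        # stored to identify the block
    return tag, index, offset


print(split_address(0x12345678))  # prints (2330, 345, 56)
```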


Figure 7: Cache Organization in CPU.

2.1.1.2 Cache Replacement Policies There are different policies for placing a memory block (from RAM) in the cache: direct-mapped, fully associative, and set-associative caches. In a direct-mapped cache, the cache is divided into several sets with one cache line per set. Each memory block maps to exactly one set in the cache; therefore, several addresses map to the same cache set. The index bits determine the set where the memory block is placed, and the tag is stored in the tag field associated with that line (Fig. 8).

Figure 8: Direct-mapped cache.

In a fully associative cache, the cache consists of a single set containing several cache lines. In such a structure, each memory block can go to any of the cache lines, avoiding conflicts between two or more memory addresses that would map to a single cache line (Fig. 9).

Figure 9: Fully associative cache.

A set-associative cache is an intermediate design between direct-mapped and fully associative caches. The cache is organized into multiple sets, each containing multiple cache lines. Each memory block maps to only one set in the cache, but the data may be placed in any cache line within that set (Fig. 10).

Figure 10: A 2-way set-associative cache.
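The three placement schemes differ only in their number of sets and ways, so one toy simulator can cover them all: `ways=1` gives a direct-mapped cache and `num_sets=1` gives a fully associative one. This is a minimal sketch; LRU replacement within a set and the class name `SetAssociativeCache` are assumptions made for illustration.

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Toy cache with `num_sets` sets of `ways` lines each, counting hits and misses.

    ways=1 models a direct-mapped cache; num_sets=1 models a fully associative cache.
    LRU replacement inside a set is an assumption made for illustration.
    """

    def __init__(self, num_sets, ways, line_size=64):
        self.num_sets, self.ways, self.line_size = num_sets, ways, line_size
        self.sets = [OrderedDict() for _ in range(num_sets)]  # per set: tags, LRU first
        self.hits = self.misses = 0

    def access(self, addr):
        """Return True on a hit, False on a miss."""
        block = addr // self.line_size  # memory block (line-sized chunk) number
        index = block % self.num_sets   # each block maps to exactly one set...
        tag = block // self.num_sets
        lines = self.sets[index]
        if tag in lines:
            self.hits += 1
            lines.move_to_end(tag)      # mark as most recently used
            return True
        self.misses += 1
        lines[tag] = None               # ...but may occupy any line (way) of that set
        if len(lines) > self.ways:
            lines.popitem(last=False)   # evict the least recently used line of the set
        return False


# Same total capacity (4 lines of 64 bytes), three organizations
for sets, ways in ((4, 1), (2, 2), (1, 4)):
    cache = SetAssociativeCache(sets, ways)
    for addr in (0, 256, 0, 256):
        cache.access(addr)
    print(f"{sets} sets x {ways} ways: {cache.hits} hits, {cache.misses} misses")
```

With the trace `0, 256, 0, 256`, the two addresses collide in the 4-set direct-mapped cache and evict each other on every access, whereas the 2-way and fully associative organizations of the same size keep both lines. Contention inside a set is precisely what cache-based side-channel attacks observe.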

2.1.1.3 Cache Architectures There are three common cache architectures (Figure 11) in modern processors:

a) Inclusive: In this architecture, all cache lines in L2 are also present in the LLC. For example, the LLC in Intel's Nehalem processors is inclusive in order to reduce snoop traffic between cores and sockets of the processor [Thomadakis, 2011]. Inclusiveness provides less cache capacity but offers higher performance. For instance, with an inclusive LLC, when a cache line is evicted from the LLC, it is also evicted from L2, so it must be fetched from RAM the next time it is accessed.

b) Exclusive: Such a cache architecture does not keep redundant copies, i.e., a cache line is not replicated across the different cache levels, which provides a higher cache capacity. Exclusiveness is widely used in AMD Athlon processors. For instance, with an exclusive LLC, evicting a cache line from the LLC does not evict anything from L2, so lines held in L2 do not need to be fetched from RAM the next time they are accessed.

Figure 11: Inclusive vs. exclusive cache policy in the processor. With the inclusive policy, read(x) fetches memory line x into both L2 and the LLC, while with the exclusive policy, read(x) fetches memory line x into L2 only.

c) NINE: In this architecture, the LLC is non-inclusive non-exclusive (NINE) with respect to L2. If x is found in L2, the data is read from L2 to be processed by the processor; otherwise, the data is fetched into L2 from the LLC if available. The eviction of x from L2 does not cause the eviction of x from the LLC, and the eviction of x from the LLC does not cause the eviction of x from L2.
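The difference between the inclusive and exclusive policies shows up when a line leaves the LLC. The toy model below uses tiny, fully associative LRU levels; the sizes, the LRU choice, and the class name `TwoLevelCache` are assumptions, and NINE caches are not modeled. In the inclusive case, an LLC eviction back-invalidates the L2 copy; in the exclusive case, a line lives in only one level and L2 victims move into the LLC.

```python
from collections import OrderedDict


class TwoLevelCache:
    """Toy L2 + LLC model contrasting inclusive and exclusive policies.

    Both levels are tiny, fully associative and use LRU (assumptions);
    blocks are identified by arbitrary hashable names.
    """

    def __init__(self, policy, l2_size=2, llc_size=4):
        if policy not in ("inclusive", "exclusive"):
            raise ValueError("policy must be 'inclusive' or 'exclusive'")
        self.policy = policy
        self.l2, self.llc = OrderedDict(), OrderedDict()  # LRU first
        self.l2_size, self.llc_size = l2_size, llc_size

    @staticmethod
    def _insert(level, size, x):
        """Insert x as most recently used; return the evicted block, if any."""
        level[x] = None
        level.move_to_end(x)
        if len(level) > size:
            victim, _ = level.popitem(last=False)
            return victim
        return None

    def read(self, x):
        """Return where x was found: 'L2', 'LLC' or 'RAM'."""
        if x in self.l2:
            self.l2.move_to_end(x)  # L2 hit: the LLC does not see this access
            return "L2"
        source = "LLC" if x in self.llc else "RAM"
        if self.policy == "inclusive":
            if source == "LLC":
                self.llc.move_to_end(x)
            else:
                victim = self._insert(self.llc, self.llc_size, x)
                if victim is not None:
                    self.l2.pop(victim, None)  # back-invalidation keeps L2 within the LLC
            self._insert(self.l2, self.l2_size, x)  # L2 victims stay in the LLC
        else:
            self.llc.pop(x, None)  # exclusive: a block lives in one level only
            victim = self._insert(self.l2, self.l2_size, x)
            if victim is not None:
                self._insert(self.llc, self.llc_size, victim)  # L2 victim moves to the LLC
        return source


trace = [1, 2, 1, 3, 1]
for policy in ("inclusive", "exclusive"):
    cache = TwoLevelCache(policy, l2_size=2, llc_size=2)
    print(policy, [cache.read(x) for x in trace])
```

With this trace, the inclusive model loses block 1 from L2 even though it was just hit there: the L2 hit did not refresh block 1 in the LLC, so block 3 evicts it from the LLC and back-invalidation removes it from L2. Cross-core LLC attacks rely on this ability to evict a victim's private-cache lines through the shared inclusive LLC.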

2.2 Hardware Performance Counters (HPC)

The Performance Monitoring Unit (PMU) in modern processors allows developers to look into the inner workings of the processor during the execution of program binaries. HPCs are special hardware registers used to store statistics about various CPU events such as clock cycles and cache misses. During the execution of a binary, if selected events occur, the processor increments the respective event counter inside the PMU. These events are widely used to profile a program's behavior in order to analyze and optimize it. Collected performance data may also be analyzed to detect situations of performance degradation. In Linux-based operating systems, these events are accessible through the perf_event subsystem (linux/perf_event.h) provided by the Linux kernel (since version 2.6.31). The kernel exposes them to user-space applications through the perf_event_open system call, and the corresponding perf tool provides an interactive interface to them.

There are two usage modes for events: counting and sampling. Counting mode measures the number of events during the execution of the target program, without any interruption. Sampling mode raises an interrupt whenever the counter exceeds a specified threshold, and at each interrupt a measurement is written to a buffer. This mode requires hardware support.
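The two modes can be contrasted with a toy model over a recorded stream of event names. This is a simulation, not the kernel interface: on Linux, the real counterparts are `perf stat` (counting) and `perf record` (sampling), both built on the `perf_event_open` system call, where the threshold corresponds to the `sample_period` field of `struct perf_event_attr`. The function name `profile` and the event stream are hypothetical.

```python
def profile(events, mode, event="cache-misses", sample_period=3):
    """Toy model of the two PMU usage modes over a recorded stream of event names.

    counting: return how many times `event` occurred.
    sampling: each time the counter reaches `sample_period`, record a sample
              (here: the position in the stream, standing in for the instruction
              pointer a real overflow interrupt handler would save) and restart.
    """
    if mode not in ("counting", "sampling"):
        raise ValueError("mode must be 'counting' or 'sampling'")
    count, counter, samples = 0, 0, []
    for position, name in enumerate(events):
        if name != event:
            continue
        count += 1
        counter += 1
        if mode == "sampling" and counter == sample_period:
            samples.append(position)  # overflow "interrupt": write a sample to the buffer
            counter = 0
    return count if mode == "counting" else samples


stream = ["cache-misses", "cycles", "cache-misses", "cache-misses",
          "cache-misses", "cycles", "cache-misses", "cache-misses"]
print(profile(stream, "counting"), profile(stream, "sampling"))
```

Counting returns a single total, while sampling returns where in the execution the event was concentrated, which is why sampling is the mode used to locate hot spots in a profile.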


3 Trusted Computing

Trusted computing refers to technologies that allow a computing platform or an application to demonstrate to users/clients that it is a trusted entity. Trustworthiness is proved through cryptographic operations provided by a trusted execution technology.

We illustrate the usage of trusted computing through an example. We may distinguish two ways to perform operations or services: either on local devices or on a remote server hosted in the cloud. For instance, in a malware checking service, checking the hash of an application can be done locally on the user's device using a database of signatures provided by security service providers. However, local checking consumes computing resources of the device, e.g., a smartphone. In addition, anti-malware vendors do not want to send their signature databases to clients. This is why moving this service to the cloud is receiving more attention. This type of service is a remote membership test, in which a user wants to check whether an item q is part of a set X (i.e., a dictionary). As a solution, we may leverage approaches based on cryptographic primitives like Private Set Intersection (PSI) [De Cristofaro and Tsudik, 2010] or Secure Multi-party Computation [Goldreich, 1998].

Figure 12: In TrustZone, there are two worlds: normal and secure. Each world has its own OS, and they are separated physically by hardware. Sensitive applications run inside the secure world. All communications with the secure world go only through the API.

However, such approaches suffer from limited scalability and high complexity. To overcome these limitations, we may leverage hardware-assisted trusted computing. In fact, trusted computing provides a secure execution environment for the isolated execution of the application (in our scenario, the part that processes client queries) on the remote server, through security mechanisms implemented in hardware. Furthermore, isolated execution allows running an application (trusted application) separately from other applications running on the same host. Trusted computing addresses the following challenge: secure execution of an application on hosts owned by third parties.

Trusted execution mechanisms provided by processor-embedded hardware technologies like ARM TrustZone [ARM, 2005] are highly promising to overcome such challenges (Fig. 12). A secure component like a TPM [Sadeghi et al., 2006] in a computing platform plays the role of Root of Trust (RoT) through specific embedded cryptographic mechanisms. To make the platform trusted, the RoT extends its trust to other components of the platform by building a Chain of Trust (CoT), in which each element extends its trust to the next element in the chain until the trusted application is launched (Fig. 13).

Figure 13: Chain of trust process until launching the application and sending the proof to the verifier.
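A chain of trust relies on measuring each component before control is handed to it. The sketch below mimics the TPM "extend" operation, in which a measurement register can only be updated as new = H(old || H(component)). SHA-256, the all-zero initial value, and the component names are assumptions for illustration, and no real TPM API is used.

```python
import hashlib


def extend(register: bytes, component: bytes) -> bytes:
    """TPM-style extend: new value = SHA-256(old value || SHA-256(component))."""
    measurement = hashlib.sha256(component).digest()
    return hashlib.sha256(register + measurement).digest()


def measure_chain(chain):
    """Each stage measures the next one before handing over control to it."""
    register = bytes(32)  # assumption: the register starts at all zeros after reset
    for component in chain:
        register = extend(register, component)
    return register


# Hypothetical boot chain, from the root of trust up to the trusted application
boot_chain = [b"firmware", b"bootloader", b"kernel", b"container-runtime", b"app-v1"]
print(measure_chain(boot_chain).hex())
```

Because each value depends on all previous ones, replacing or reordering any component changes the final register value, so a single digest summarizes the whole chain.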

Another security mechanism provided by trusted computing technology is Remote Attestation. It allows authorized remote parties to detect any change in the application, by providing them with reliable evidence about the state of the application executing on the computing platform.
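Remote attestation can be sketched on top of such measurements: the platform reports its measurement bound to a fresh nonce chosen by the verifier, and the verifier checks authenticity, freshness, and the expected value. In this hypothetical sketch, an HMAC with a shared key stands in for the asymmetric signature that a TPM quote or an SGX report would actually carry; the function names `quote` and `verify` are illustrative only.

```python
import hashlib
import hmac
import os


def measure_chain(chain):
    """TPM-style extend over a chain of components (SHA-256, zero initial value)."""
    register = bytes(32)
    for component in chain:
        register = hashlib.sha256(register + hashlib.sha256(component).digest()).digest()
    return register


def quote(chain, nonce, attestation_key):
    """Prover: report the measurement, bound to the verifier's fresh nonce.
    The HMAC stands in for the signature a real TPM quote or SGX report carries."""
    report = measure_chain(chain)
    tag = hmac.new(attestation_key, report + nonce, hashlib.sha256).digest()
    return report, tag


def verify(report, tag, nonce, attestation_key, expected_chain):
    """Verifier: check authenticity and freshness, then compare with the expected state."""
    expected_tag = hmac.new(attestation_key, report + nonce, hashlib.sha256).digest()
    authentic = hmac.compare_digest(tag, expected_tag)
    return authentic and hmac.compare_digest(report, measure_chain(expected_chain))


key = os.urandom(32)    # hypothetical key shared by platform and verifier
nonce = os.urandom(16)  # chosen by the verifier for this attestation request
chain = [b"firmware", b"bootloader", b"kernel", b"app-v1"]
report, tag = quote(chain, nonce, key)
print(verify(report, tag, nonce, key, chain))  # True
```

The nonce prevents an attacker from replaying an old, valid report after the application has been modified.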

Chapter 1

Isolation Challenge in Cloud Infrastructures: State of The Art

Virtualization is the main enabler for sharing the physical resources of a host among virtual machines through a hypervisor abstraction layer [Sahoo et al., 2010]. By partitioning physical resources, virtualization enables software isolation between virtualized instances, e.g., virtual machines. However, resource sharing among different users and hardware virtualization are challenging [Menon et al., 2006] [Uhlig et al., 2005]. Many optimization mechanisms and techniques have therefore been proposed at the application, hypervisor, OS, and hardware levels [Neiger et al., 2006]. Unfortunately, despite their benefits, such approaches raise major security concerns, in particular because resource sharing between several entities has a strong impact on isolation in such environments. Potential threats concern data confidentiality and integrity, and the availability of provided services (e.g., DDoS threats [Bazm et al., 2015]). Among known threats to data security in a virtualized environment such as the cloud, Side-Channel Attacks (SCAs) target highly sensitive data and computations, e.g., cryptographic operations. SCAs use a hidden channel that leaks information on an operation such as AES encryption, typically its execution time or its cache access patterns. Such channels are commonly created by software implementations of cryptographic algorithms, by techniques and mechanisms widely used in hypervisors such as memory deduplication, and by the hardware design itself, e.g., the LLC of processors. SCAs can be applied to a wide range of computing devices, from smart cards to VMs. Their impact may be greater than that of other attacks targeting cryptographic algorithms, as they attempt to retrieve secret data without any special privileged access and in a non-exhaustive manner.

There are different categories of the attack depending on the type of exploited channel: timing attacks, cache attacks, electromagnetic attacks, and power-monitoring attacks. Electromagnetic attacks and power-monitoring attacks are more applicable to physical devices such as smartcards. Cache-based and timing attacks are the main software attacks applicable in cloud computing, because of resource sharing and virtualization techniques. To mitigate these attacks, countermeasures may be applied at three different levels: application, system, and hardware. Each level of defense has its own challenges, benefits, and limitations w.r.t. metrics for assessing effectiveness, e.g., performance overhead or compatibility with legacy systems. Application-level approaches to mitigation are based on programming and software implementation techniques to make sensitive applications such as OpenSSL SCA-resistant. Instead, system and hardware approaches explore how to enforce loose and strong isolation between computing elements, respectively. While isolation risks and countermeasures are now fairly well understood for a virtualized system when considering control of the main communication channels (authorization), this is much less so when channels are indirect (side-channels). Due to the increasing complexity of virtualization stacks, blending multiple layers with variable levels of trustworthiness, it is thus important to provide a comprehensive overview of these security issues to help architects, developers, and security teams prevent, detect, and react to such attacks, by pinpointing the key elements not to overlook and by suggesting some countermeasures.
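As an example of an application-level countermeasure against timing channels, the sketch below contrasts an early-exit comparison, whose running time depends on how many leading bytes match, with Python's `hmac.compare_digest`, which is documented as avoiding content-based short-circuiting. The step counter in the hypothetical `leaky_equals` stands in for execution time; in OpenSSL, a comparable constant-time primitive is `CRYPTO_memcmp`.

```python
import hmac


def leaky_equals(secret: bytes, guess: bytes):
    """Early-exit comparison. Returns (result, steps): the number of byte
    comparisons stands in for execution time, which grows with the length
    of the correctly guessed prefix and thus leaks it."""
    if len(secret) != len(guess):
        return False, 0
    steps = 0
    for a, b in zip(secret, guess):
        steps += 1
        if a != b:
            return False, steps  # stops at the first mismatching byte
    return True, steps


def constant_time_equals(secret: bytes, guess: bytes) -> bool:
    """Comparison designed to avoid content-dependent short-circuiting."""
    return hmac.compare_digest(secret, guess)


for guess in (b"a00000", b"s00000", b"se0000"):
    print(guess, leaky_equals(b"secret", guess))
```

Each correct leading byte makes the leaky version run one step longer, so an attacker who can time it recovers the secret byte by byte instead of searching the whole key space; the constant-time version removes that signal.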

The objective of this chapter is to provide an in-depth survey of security issues related to side-channels for virtualized systems. We identify security challenges, and cover possible attack types and existing countermeasures. We also sketch some directions for future attacks, extending towards distributed SCAs.

This chapter is organized as follows. Section 1 reviews security challenges for a virtualized environment, focusing on isolation. Sections 2 and 3 explain side-channel attacks and their techniques. Section 4 discusses approaches for attack mitigation at different levels. Section 6 gives an outlook of future attacks, potentially concerned systems and infrastructures, and security recommendations for mitigation. Finally, Section 7 concludes this chapter.

1 Isolation Challenge

This section reviews the two levels, hardware and virtualization/OS, at which isolation challenges have a security impact on computing platforms in cloud infrastructures.

1.1 Isolation Challenges Related To Hardware

Using virtualization, physical hosts can run several virtual instances, e.g., VMs, in parallel, typically by means of a hypervisor. VMs are then executed on the cores of a processor. However, virtualization technology may have a strong impact on the security of hosts and the hypervisor. In the following, we present several security challenges which may directly/indirectly (Table 1.2) compromise isolation in virtualized platforms (Table 1.1).

1.1.1 Speculative Execution in Modern Processors

To improve processor performance, an optimization mechanism is implemented in processors to execute instructions out of order (in parallel) instead of sequentially. Combined with branch prediction, this mechanism allows processors to continue to speculatively execute instructions along a predicted branch before the branch condition is resolved. If the prediction turns out to be incorrect, the processor reverts to its initial state (i.e., before the execution of the incorrect branch). Otherwise, the predicted branch is correct, and the processor keeps its results and continues. This mechanism may be exploited by an adversary to access sensitive data of other processes, even kernel data. In fact, when the predicted branch is incorrect, data brought into the caches by the instructions executed during the prediction phase remain in the processor's cache levels, and thus leak information. The adversary speculatively runs malicious instructions to bring privileged data into the cache levels of the processor [Kocher et al., 2018].

1.1.2 Hardware Transactional Memory

Transactional memory mechanisms in processors provide atomicity and consistency for transactions in program code and are widely used in concurrent programming. Such mechanisms allow the detection of conflicts during the execution of transactions on shared data. If any conflict is detected, the transaction rolls back to a previously defined state. Otherwise, a commit is performed. During a transaction, data related to instructions executed by the transaction are buffered and remain in the cache levels of the processor until commit/rollback time. These data are also isolated from other threads running concurrently on the processor. An adversary may exploit such a mechanism to see whether a cache line is present in the cache levels of the processor, and build a profile of the victim's cache accesses to retrieve sensitive information [Disselkoen et al., 2017].

Figure 1.1: Isolation challenges, side-channel attacks, attack types, and mitigation of the attacks in the cloud.

1.1.3 Issues of Shared Caches in Modern Processors

Multi-core processors are nowadays widely used in a virtualized environment because of their potential for powerful parallel processing. However, the cache hierarchy, especially the LLC, can be exploited as a vulnerable channel that leaks information on processes running on the cores. Figure 1.2 shows the cache hierarchy and its position w.r.t. VMs and the main memory.

1.1.4 Simultaneous multi-threading

Simultaneous multi-threading (SMT), such as Intel's Hyper-Threading (HT), enables multiple threads to run in parallel on a single core of a processor in order to improve execution speed. SMT lets the threads running on the same core share that core's private resources, mainly its L1 cache. This sharing can leak information between the threads of a core and may be used by a malicious thread to gather information from other threads. SMT can potentially ease cache-based side-channel attacks [Demme et al., 2012] [Percival, 2005].

1.2 Isolation Challenges Related To Virtualization/OS

1.2.1 Data Deduplication

Online storage is one of the most popular services offered by cloud providers. To maximize the number of users of a storage service, cloud providers constantly look for new means to store data more efficiently. Data deduplication is a widely used technique to this end: it stores a single copy of redundant data belonging to different users. It also saves network bandwidth by requiring far fewer data transfers across the network. However, data deduplication is subject to security issues because it can create a leaky channel between two users of the same cloud storage service [Harnik et al., 2010].
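The leak described by Harnik et al. arises with client-side, cross-user deduplication: if the service skips uploading data it already stores, the uploader can observe this and learn that some other user holds the same content. The toy store below sketches this; the class and function names are hypothetical, and the returned transfer size stands in for what an attacker would observe on the network.

```python
import hashlib


class DedupStore:
    """Toy cloud storage with client-side, cross-user deduplication."""

    def __init__(self):
        self.blobs = {}  # SHA-256 digest -> content, shared by all users

    def upload(self, data: bytes) -> int:
        """Return the number of bytes actually transferred over the network."""
        digest = hashlib.sha256(data).hexdigest()
        if digest in self.blobs:
            return 0  # duplicate: the client only sends the hash, no data transfer
        self.blobs[digest] = data
        return len(data)


def already_stored(store: DedupStore, candidate: bytes) -> bool:
    """Attacker's membership test: a zero-byte transfer reveals that
    another user already stored exactly this content."""
    return store.upload(candidate) == 0


store = DedupStore()
store.upload(b"Payroll 2019, employee 42, PIN=1234")  # victim's upload
guesses = [b"Payroll 2019, employee 42, PIN=%04d" % pin for pin in range(10000)]
print([g for g in guesses if already_stored(store, g)])
```

A probe is itself an upload, so a negative answer also stores the guessed content; a guessable secret inside a standard document can still be recovered by trying each candidate once, as in the PIN example.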

CHAPTER 1. ISOLATION CHALLENGE IN CLOUD INFRASTRUCTURES: STATE OF THE ART

Figure 1.2: Sharing of resources and cache issue in virtualization

1.2.2 Page Deduplication

Page deduplication is another optimization technique, used in operating systems and hypervisors to optimize memory usage [Arcangeli et al., 2009]. Generally, an operating system loads the libraries used by programs into main memory; the processes that use a library then access the same memory pages through the mapping between their virtual addresses and the pages of the library. The most frequent form of page deduplication is content-based: identical pages shared among several processes are merged by the operating system or the hypervisor. This typically enables the hypervisor to save memory, but it also enables cache-based side-channel attacks [Suzaki et al., 2011] [Bosman et al., 2016] [Xiao et al., 2013] [Gruss et al., 2015a] [Xiao et al., 2012]. Indeed, mapping the virtual address spaces of two processes to the same physical pages can leak information. Consequently, it may be used as a means to monitor the cache activity of a process or a VM.
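Content-based merging, and one way it leaks, can be sketched with a toy model of a KSM-like scanner. This sketch assumes that merged pages are shared read-only and that a write to a shared page triggers a copy-on-write (COW) fault that is measurably slower than a normal write; the costs are arbitrary hypothetical units and all names are illustrative. The attacker loads a guessed page, lets the scanner run, then writes to it: a slow write means the guess was merged with an identical page owned by someone else. This timing channel is only one consequence of merging; the shared physical pages it creates also enable the cache attacks cited above.

```python
from typing import Dict, Tuple

PAGE_SIZE = 4096
WRITE_COST = 1     # hypothetical cost of writing a private page
COW_PENALTY = 100  # hypothetical cost of a copy-on-write fault on a merged page


class Memory:
    """Toy physical memory with content-based page deduplication (KSM-like)."""

    def __init__(self) -> None:
        self.frames: Dict[int, bytes] = {}             # frame id -> page content
        self.refs: Dict[int, int] = {}                 # frame id -> mapping count
        self.mapping: Dict[Tuple[str, int], int] = {}  # (owner, vpage) -> frame id
        self.next_frame = 0

    def _alloc(self, content: bytes) -> int:
        fid = self.next_frame
        self.next_frame += 1
        self.frames[fid] = content
        self.refs[fid] = 1
        return fid

    def map_page(self, owner: str, vpage: int, content: bytes) -> None:
        assert len(content) == PAGE_SIZE
        self.mapping[(owner, vpage)] = self._alloc(content)

    def merge_identical(self) -> None:
        """Scanner pass: remap every page onto one frame per distinct content."""
        canonical: Dict[bytes, int] = {}
        for key, fid in sorted(self.mapping.items()):
            keep = canonical.setdefault(self.frames[fid], fid)
            if keep != fid:
                self.mapping[key] = keep
                self.refs[keep] += 1
                self.refs[fid] -= 1
                if self.refs[fid] == 0:
                    del self.frames[fid], self.refs[fid]

    def write(self, owner: str, vpage: int, content: bytes) -> int:
        """Write a page and return the simulated cost of the write."""
        fid = self.mapping[(owner, vpage)]
        if self.refs[fid] > 1:  # shared frame: break sharing with a private copy
            self.refs[fid] -= 1
            self.mapping[(owner, vpage)] = self._alloc(content)
            return COW_PENALTY
        self.frames[fid] = content
        return WRITE_COST


def victim_has_page(mem: Memory, guess: bytes) -> bool:
    """Load a guessed page, wait for merging, then time a write to it."""
    mem.map_page("attacker", 0, guess)
    mem.merge_identical()
    return mem.write("attacker", 0, guess) > WRITE_COST
```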

1.2.3 Large Page Memory

Hypervisors rely on the memory management unit (MMU) and on memory paging techniques to improve VM performance. One of these techniques is large page memory. Inside a processor, virtual-to-physical address translations are cached in translation lookaside buffers (TLBs), and large pages improve the efficiency of TLB accesses by reducing the number of entries needed to map a given amount of memory. The default page size used by operating systems is 4 KB, whereas a large (huge) page is 2 MB or 1 GB. Although this technique is useful in terms of efficiency, it also raises severe security issues related to the mapping of virtual addresses to physical ones. Large pages divulge more bits of a physical address to an adversary than small pages do, because the page-offset bits of a virtual address remain unchanged when it is translated to a physical address: the 12 low-order bits with 4 KB pages, but the 21 low-order bits with 2 MB pages. Consequently, large pages give an adversary more resolution in attacks on the LLC, which require selecting specific cache sets [Apecechea et al., 2014].
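The effect of page size on cache-set selection can be made concrete with a small calculation. The LLC geometry below (64-byte lines, 2048 sets, so the set index comes from physical address bits 6 to 16) is a hypothetical assumption, and the slice-selection hash used by modern Intel LLCs is deliberately ignored. Only set-index bits that fall inside the page offset can be derived by an unprivileged process from its own virtual addresses.

```python
from typing import List, Optional

PAGE_4K_BITS = 12  # 4 KB page: offset covers address bits 0..11
PAGE_2M_BITS = 21  # 2 MB page: offset covers address bits 0..20

# Hypothetical LLC geometry (assumption): 64-byte lines, 2048 sets.
LINE_BITS = 6
SET_BITS = 11


def known_set_index_bits(page_bits: int) -> List[int]:
    """Set-index bit positions that lie inside the page offset, i.e. that are
    identical in the virtual and the physical address."""
    positions = range(LINE_BITS, LINE_BITS + SET_BITS)
    return [b for b in positions if b < page_bits]


def set_index_from_virtual(vaddr: int, page_bits: int) -> Optional[int]:
    """Return the LLC set index if the page offset fully determines it."""
    if len(known_set_index_bits(page_bits)) < SET_BITS:
        return None  # some set-index bits depend on the unknown physical frame
    return (vaddr >> LINE_BITS) & ((1 << SET_BITS) - 1)
```

Under these assumptions, a 4 KB page reveals only 6 of the 11 set-index bits (`known_set_index_bits(12)` is bits 6 to 11), leaving 32 candidate sets per address, whereas a 2 MB page reveals all 11, so `set_index_from_virtual(0x12345, 21)` yields set 1165 directly while the 4 KB case returns `None`.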

1.2.4 Preemptive Scheduling

Using a time-based scheduling algorithm, schedulers divide CPU time into equal slices and allocate each slice to a virtual machine for executing its instructions on the processor. However, the scheduler can interrupt the execution of a VM and allocate CPU time to a higher-priority task or VM. In this case, the switch between two VMs can constitute a security risk. An attacker can force the scheduler to interrupt the execution of a victim VM. When a switch happens between the malicious VM and the victim VM, the attacker can observe the state of the victim VM, e.g., the victim's cache activity just after the context switch.

| Security Challenge | Description |
|---|---|
| Shared Cache in Processors | Sharing of the CPU's cache between threads and VMs running on a processor |
| Hardware Transactional Memory | Providing atomicity and consistency for transactions in the program code |
| Inclusive Caches | In an inclusive cache, all memory lines in L1 are also present in L2 and the LLC |
| Simultaneous Multi-Threading | Running multiple threads in parallel on a single core of a CPU |
| Data Deduplication | Merging of redundant data in order to optimize storage usage |
| Page Deduplication | Condensing of redundant memory pages by the hypervisor to obtain more RAM |
| Large Page Memory | Using large page memory to improve the efficiency of TLB accesses |
| Preemptive Scheduling | Time scheduling algorithm in which the scheduler divides CPU time into equal slices and attributes each one to threads |
| Non-Privileged Access to Instructions | Using hardware instructions maliciously to extract sensitive information |

Table 1.1: Identified challenges of virtualization technology regarding the isolation issue.
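The preemption scenario of Section 1.2.4 can be illustrated by a toy simulation in which the attacker fills the cache just before its time slice ends and inspects it as soon as it is rescheduled. The observation step uses Prime+Probe, a technique not named in the text above; the cache size, the key-dependent victim, and all names are hypothetical, and how the attacker forces preemption is not modelled: the three calls in `preemption_attack` simply stand for the sequence of time slices.

```python
from typing import List, Set, Tuple

NUM_SETS = 16  # hypothetical cache geometry
WAYS = 2


class Cache:
    """Tiny LRU set-associative cache shared by all VMs on a core (toy model)."""

    def __init__(self) -> None:
        self.sets: List[List[Tuple[str, int]]] = [[] for _ in range(NUM_SETS)]

    def access(self, tag: Tuple[str, int], set_idx: int) -> bool:
        """Access a line in `set_idx`; return True on a hit, False on a miss."""
        lines = self.sets[set_idx]
        if tag in lines:
            lines.remove(tag)
            lines.append(tag)  # move to the most recently used position
            return True
        if len(lines) == WAYS:
            lines.pop(0)  # evict the least recently used line
        lines.append(tag)
        return False


def attacker_prime(cache: Cache) -> None:
    for s in range(NUM_SETS):
        for w in range(WAYS):
            cache.access(("attacker", w), s)


def victim_time_slice(cache: Cache, key: List[int]) -> None:
    # Hypothetical victim: a key-dependent table lookup touches set `k`.
    for k in key:
        cache.access(("victim", k), k)


def attacker_probe(cache: Cache) -> Set[int]:
    """Sets where a primed line now misses were touched by the victim."""
    touched: Set[int] = set()
    for s in range(NUM_SETS):
        for w in range(WAYS):
            if not cache.access(("attacker", w), s):
                touched.add(s)
    return touched


def preemption_attack(key: List[int]) -> Set[int]:
    cache = Cache()
    attacker_prime(cache)          # attacker's slice ends right after priming
    victim_time_slice(cache, key)  # the scheduler switches to the victim VM
    return attacker_probe(cache)   # the attacker is rescheduled and probes
```

With this model, `preemption_attack([3, 7, 12])` returns `{3, 7, 12}`: the sets the victim touched during its slice, which in a real attack would be correlated with secret-dependent memory accesses.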

1.2.5 Non-Privileged Access to Hardware Instructions

Almost all side-channel attack techniques exploit hardware instructions, such as rdtsc and clflush, to perform attacks. These instructions are used, respectively, to measure time and to evict a memory line from the cache. As the execution of such instructions is not controlled, an untrusted process may use them to manipulate CPU caches. To ensure the trusted execution of processes, some modern processors, for instance ARM processors, restrict the execution of certain hardware instructions to privileged software layers.
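How an attacker combines the two instructions can be sketched with Flush+Reload, a technique not described in this paragraph that requires memory shared with the victim (for instance through page deduplication). Since rdtsc and clflush cannot be issued from Python, the sketch below simulates them: `SimulatedCPU`, the latencies, and the threshold are hypothetical stand-ins chosen only to show the flush, wait, timed-reload sequence.

```python
from typing import Callable, Set

HIT_CYCLES = 40    # hypothetical latency of a cache hit (cycles)
MISS_CYCLES = 250  # hypothetical latency of a main-memory access (cycles)
THRESHOLD = 120    # hypothetical hit/miss decision threshold


class SimulatedCPU:
    """Stand-in for the hardware: models rdtsc, clflush, and a timed load."""

    def __init__(self) -> None:
        self.cached: Set[int] = set()  # addresses of lines currently cached
        self.tsc = 0                   # simulated time-stamp counter

    def rdtsc(self) -> int:
        return self.tsc

    def clflush(self, addr: int) -> None:
        self.cached.discard(addr)  # evict the line from every cache level

    def load(self, addr: int) -> None:
        self.tsc += HIT_CYCLES if addr in self.cached else MISS_CYCLES
        self.cached.add(addr)


def flush_reload(cpu: SimulatedCPU, shared_addr: int,
                 victim: Callable[[SimulatedCPU], None]) -> bool:
    """Return True if the victim accessed `shared_addr` between flush and reload."""
    cpu.clflush(shared_addr)  # 1. flush the shared line
    victim(cpu)               # 2. let the victim run
    start = cpu.rdtsc()       # 3. time a reload of the line
    cpu.load(shared_addr)
    return cpu.rdtsc() - start < THRESHOLD  # fast reload: the victim used it
```

For example, `flush_reload(cpu, 0x1000, lambda c: c.load(0x1000))` reports an access, while a victim that never touches the line yields `False`. Making rdtsc privileged or removing user-level access to clflush breaks step 1 or step 3, which is why restricting these instructions is considered a countermeasure.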

2 Side-Channel Attacks

The use of cryptography has been rising in many fields of computer science in recent years. Its applications abound, especially in cloud computing, for securing data and services [Parashar and Arora, 2013] [Li et al., 2009]. Since many cryptographic algorithms are documented and publicly accessible, the security of a cryptographic system frequently relies on the secrecy of its key. Among the attacks that target a cryptographic cipher, side-channel attacks threaten the security of a system by allowing the encryption key to be inferred. In general, each application exhibits a characteristic behavior in terms of cache accesses or execution time. To perform certain types of these attacks, an attacker requires access to a VM running encryption operations. With cloud technology, which is based on virtualization, new means have become available to reach other guest operating systems hosted on the same physical machine, so cryptographic algorithms can be attacked more flexibly. Hence, the traditional implementations of these algorithms need to be verified to ensure that they remain secure in virtualized environments [Weiß et al., 2012].
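The idea that a secret-dependent execution time lets an attacker infer a key can be illustrated with a deliberately simple example. Instead of a cipher, this sketch uses an early-exit byte comparison, a swapped-in textbook case, and it simulates running time by counting matching bytes rather than measuring real time. `SECRET`, `leaky_compare_time`, and `recover_secret` are hypothetical names; `hmac.compare_digest` is the Python standard library's constant-time comparison.

```python
import hmac
from typing import Callable

SECRET = b"k3y!"  # hypothetical secret held by the victim


def leaky_compare_time(secret: bytes, guess: bytes) -> int:
    """Early-exit comparison. The returned count of matching bytes stands in
    for running time: the loop runs longer the longer the matching prefix."""
    t = 0
    for a, b in zip(secret, guess):
        if a != b:
            break  # early exit: running time depends on the secret
        t += 1
    return t


def recover_secret(length: int, timing_oracle: Callable[[bytes], int]) -> bytes:
    """Recover the secret byte by byte from timing observations only."""
    known = b""
    for pos in range(length):
        padding = b"\x00" * (length - pos - 1)
        # The correct byte extends the matching prefix, so it takes longest.
        best = max(range(256), key=lambda c: timing_oracle(known + bytes([c]) + padding))
        known += bytes([best])
    return known


def safe_check(secret: bytes, guess: bytes) -> bool:
    """Countermeasure: constant-time comparison from the standard library."""
    return hmac.compare_digest(secret, guess)
```

Here `recover_secret(len(SECRET), lambda g: leaky_compare_time(SECRET, g))` needs at most 256 guesses per byte instead of 256 to the power of the key length, which is what makes such leaks dangerous. Real attacks face noisy timings and must average many measurements; a constant-time check such as `safe_check` removes the dependency altogether.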