HAL Id: hal-02958902

https://hal.archives-ouvertes.fr/hal-02958902

Submitted on 23 Oct 2020


SpheriCol: A Driving Assistance System for Power

Wheelchairs Based on Spherical Vision and Range

Measurements

Sarah Delmas, Fabio Morbidi, Guillaume Caron, Julien Albrand, Meven

Jeanne-Rose, Louise Devigne, Marie Babel

To cite this version:

Sarah Delmas, Fabio Morbidi, Guillaume Caron, Julien Albrand, Meven Jeanne-Rose, et al. SpheriCol: A Driving Assistance System for Power Wheelchairs Based on Spherical Vision and Range Measurements. IEEE/SICE International Symposium on System Integration, Jan 2021, Iwaki, Japan. pp. 505-510, 10.1109/IEEECONF49454.2021.9382766. hal-02958902


Sarah Delmas¹, Fabio Morbidi¹, Guillaume Caron¹,², Julien Albrand¹, Méven Jeanne-Rose¹, Louise Devigne³, Marie Babel³

Abstract: This paper presents “SpheriCol”, a new driving assistance system for power wheelchair users. The ROS-based system combines spherical images from a twin-fisheye camera with range measurements from on-board exteroceptive sensors, to synthesize different augmented views of the surrounding environment. Experiments with a Quickie Salsa wheelchair show that SpheriCol improves situational awareness and supports the user’s decisions in challenging maneuvers, such as passing through a door or centering in a corridor.

I. INTRODUCTION

Wheelchairs today play a crucial role in the independence, self-confidence, dignity, and overall well-being of people with motion impairments. In France, the proportion of wheelchair users was 0.62% in 2008, or about 361 thousand individuals [1]. European data from several countries are consistent with the French data: approximately 1% of the total population uses wheelchairs. Since the European Union had 446 million inhabitants in April 2020, the number of wheelchair users in Europe is approximately 4.5 million. By comparison, the prevalence in the US was 2.3%, or 5.5 million adults aged 18 years and older, in 2014 [2].

In spite of some recent important advances in assistive robotic technologies (see e.g. [3], [4], [5], [6], [7], [8]), the World Health Organization (WHO) maintains that there is still a shortage of health and rehabilitation staff with the appropriate knowledge and skills to provide a wheelchair that meets a user’s specific needs. Moreover, wheelchair users are confronted with daily difficulties in autonomously navigating modern cities. In fact, the majority of today’s urban spaces do not comply with mobility and accessibility needs. Hence, there still exist numerous physical barriers for those who use mobility aids, outdoors (narrow sidewalks, absence of curb ramps, inaccessible public transport) as well as indoors (corridor centering, backing out of an elevator) [9].

Several research projects [10], [11], [12] have addressed the obstacle-detection problem for smart wheelchairs by proposing solutions based on arrays of active sensors (lasers, ultrasonic or infrared sensors). The goal was to provide assistance for safe navigation and to minimize the user’s intervention (thus reducing cognitive and physical overload). However, the systems developed in these projects are still at a “proof-of-concept” stage, and they do not leverage visual feedback. In recent years, cameras have been increasingly used for (semi-)autonomous localization and navigation of instrumented wheelchairs [13]. In particular, multi-camera systems (three monocular cameras in [14], a Point Grey Ladybug 2 camera in [15]) have been shown to ensure a better coverage of the surrounding environment, and they have been successfully field tested. However, omnidirectional vision is still underrepresented [16], and its full potential has yet to be exploited for collision-free trajectory planning.

*This work was carried out as part of the Interreg VA France (Channel) England ADAPT project “Assistive Devices for empowering disAbled People through robotic Technologies” (adapt-project.com). The Interreg FCE Programme is a European Territorial Cooperation programme that aims to fund high quality cooperation projects in the Channel border region between France and England. The Programme is funded by the European Regional Development Fund (ERDF).

¹MIS laboratory, Université de Picardie Jules Verne, Amiens, France. firstname.lastname@u-picardie.fr

²CNRS-AIST JRL (Joint Robotics Laboratory), IRL, Tsukuba, Japan.

³Rainbow Team at IRISA/Inria Rennes and INSA Rennes, France. firstname.lastname@irisa.fr

Capitalizing on the recent results of the ADAPT project [12], in this paper we present a new decision support system for wheelchair users, called SpheriCol (which stands for “Spherical Collision-avoidance”), to assist navigation in confined spaces. Occupational therapists and specialists in rehabilitation medicine have been consulted from the beginning of the project, to evaluate the “projection into use” in real-world scenarios. The proposed system can be easily integrated into off-the-shelf electrically powered wheelchairs thanks to ROS (Robot Operating System [17]), and it relies on spherical images captured by a pocket-size twin-fisheye camera and on range measurements from low-cost infrared/ultrasonic sensors or a laser scanner. Similarly to a car parking assistant, the 360° images and range information are combined in real time to generate an augmented view of the surrounding environment. In this way, the user can easily detect nearby obstacles and avoid impending collisions. Different viewpoints (equirectangular, panoramic, spherical and bird’s-eye view) can be selected by clicking on a tactile display, and color-coded distance markers can be superimposed on the images, for direct visual feedback and free-space mapping. Real-world experiments with a mid-wheel drive Quickie Salsa M2 wheelchair, equipped with a ring of 48 time-of-flight sensors and an overhead Ricoh Theta S twin-fisheye camera, have shown the effectiveness of the proposed driving assistance system in a cluttered indoor environment.

In the remainder of this paper, Sect. II presents the material: the power wheelchair and the twin-fisheye camera. The architecture of SpheriCol is described in Sect. III. Finally, Sect. IV is devoted to the experimental evaluation, and Sect. V concludes the paper with a summary of contributions and some possible avenues for future research.

II. MATERIAL

A. Power wheelchair

The driving assistance system described in this paper is fully compatible with the existing consumer-grade power wheelchairs. The wheelchair should be equipped with a set of exteroceptive sensors arranged along its circumference, 30 to 50 cm above the ground (e.g. a ring of ultrasonic/infrared sensors [18] or a laser scanner). The sensors have a maximum range of 4 m indoors, and 15 m outdoors: they provide proximity information via range (and bearing) measurements to the obstacles around the wheelchair. Our driving assistance system also makes use of a fixed or a mobile tactile display (e.g. a tablet), for human-machine interaction. Besides these basic pieces of equipment, SpheriCol is hardware-agnostic, i.e. it neither requires a specific kinematic/dynamic model for the power wheelchair nor a specific technology for the exteroceptive sensors.

B. Twin-fisheye camera

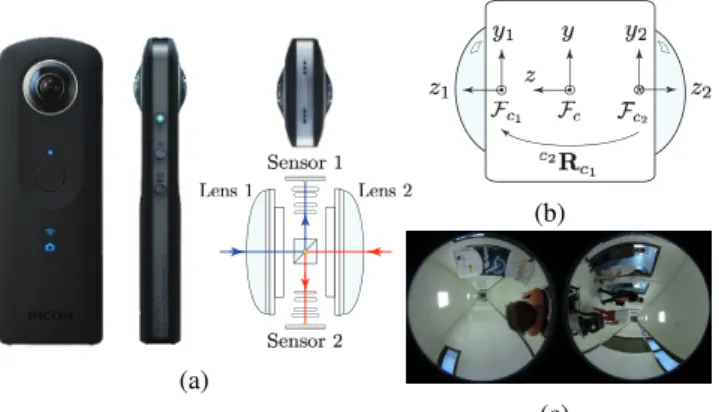

SpheriCol relies on a stream of 360° images captured by a twin-fisheye camera (see Fig. 1). These innovative cameras are small and lightweight, and they can record high-resolution (8K) videos at frame rates up to 30 fps. In the last few years, the compact optical design proposed by Ricoh (consisting of two fisheye lenses mounted back-to-back, coupled with two light-sensitive surfaces) has become mainstream, and it has been adopted by other camera manufacturers (see e.g. Samsung Gear 360, LG 360 CAM, Insta360 series, KanDao QooCam 8K).

Our driving assistance system requires the twin-fisheye camera to be mounted in an elevated position on the wheelchair, to have an unobstructed view of the free space around it. To meet this requirement, a common solution in modern instrumented wheelchairs [16], [19], is to install the camera on top of a mast behind the backrest (see Fig. 6a).

It is well known that some classes of fisheye cameras are approximately equivalent to a central catadioptric system [20]. Therefore, the unified central projection model [21], [22] can be used. We describe each fisheye lens by its own set of intrinsic parameters $P_{c_j} = \{a_{u_j}, a_{v_j}, u_{0_j}, v_{0_j}, \xi_j\}$, $j \in \{1, 2\}$, where $a_{u_j}$ and $a_{v_j}$ are the focal lengths in pixels in the horizontal and vertical directions, respectively, and $(u_{0_j}, v_{0_j})$ are the coordinates of the principal point in pixels. The last parameter, $\xi_j$, is the distance between the unit sphere’s first projection center and the perspective second projection center of fisheye lens $j$, as described in [22, Fig. 2]. Following [23], we assume that the translation vector between the two fisheye frames $F_{c_1}$ and $F_{c_2}$ is zero, to guarantee the uniqueness of the projection viewpoint. Moreover, we assume that the camera frame $F_c$ coincides with $F_{c_1}$ (they are shown separately in Fig. 1b, for illustration purposes only). The extrinsic parameters of the twin-fisheye camera are then incorporated into the rotation matrix ${}^{c_2}\mathbf{R}_{c_1}$ between $F_{c_1}$ and $F_{c_2}$. To calibrate the twin-fisheye camera, we used a custom-made calibration rig consisting of six circle patterns attached inside two half cubes, and followed the same procedure described in [23].
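To make the role of the five intrinsic parameters concrete, the following minimal Python sketch projects a 3D point through one fisheye lens using the standard form of the unified central projection model (projection onto the unit sphere, then perspective projection from a center shifted by $\xi$). The class name `FisheyeIntrinsics`, the function name `project_unified`, and the numerical values in the usage note are illustrative assumptions, not the calibration values or the code used in this work.

```python
import math
from dataclasses import dataclass


@dataclass
class FisheyeIntrinsics:
    """Intrinsic parameters of one fisheye lens (hypothetical container)."""
    a_u: float  # focal length in pixels, horizontal direction
    a_v: float  # focal length in pixels, vertical direction
    u_0: float  # principal point, horizontal coordinate (pixels)
    v_0: float  # principal point, vertical coordinate (pixels)
    xi: float   # distance between the two projection centers


def project_unified(point, cam):
    """Project a 3D point (X, Y, Z), given in the lens frame, to pixels."""
    X, Y, Z = point
    norm = math.sqrt(X * X + Y * Y + Z * Z)
    if norm == 0.0:
        raise ValueError("the projection center itself cannot be projected")
    # Step 1: central projection onto the unit sphere.
    xs, ys, zs = X / norm, Y / norm, Z / norm
    # Step 2: perspective projection from a center shifted by xi.
    denom = zs + cam.xi
    if denom <= 0.0:
        raise ValueError("point lies outside the field of view of this lens")
    x, y = xs / denom, ys / denom
    # Step 3: conversion from normalized coordinates to pixels.
    return cam.a_u * x + cam.u_0, cam.a_v * y + cam.v_0
```

With the made-up parameters `FisheyeIntrinsics(300.0, 300.0, 320.0, 240.0, 1.0)`, a point on the optical axis such as `(0, 0, 1)` maps to the principal point `(320.0, 240.0)`, while a point at 90° from the axis, `(1, 0, 0)`, still has a finite image, which is what lets the model cover fields of view of 180° and more.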

Fig. 1: (a) Front, side, and top view of the Ricoh Theta S camera (image courtesy of Ricoh): the optical system with two fisheye lenses, prisms and CMOS sensors is shown in the lower right corner; (b) $F_c$ is the camera frame, and $F_{c_1}$, $F_{c_2}$ are the reference frames associated with the two fisheye lenses (top view); (c) Example of dual-fisheye image captured by the Ricoh Theta S in a corridor: the left and right sub-images correspond to the two fisheye lenses.

III. SYSTEM ARCHITECTURE

In this section, the architecture of our driving assistance system is presented (see Fig. 2a). SpheriCol relies on the middleware ROS (Robot Operating System) for data collection, and on the OpenCV library for image processing. The video stream from the twin-fisheye camera is captured via the ROS node cv_camera and processed according to the user’s viewpoint preferences (see Fig. 2b).

A. Viewpoint selection

The environment around the wheelchair can be observed from four different “virtual viewpoints”: equirectangular, panoramic, spherical and bird’s eye, as described below.

1) Equirectangular view: The input dual-fisheye images are mapped into equirectangular images by exploiting the estimated intrinsic parameters of the twin-fisheye camera (cf. Sect. II-B). The width of the equirectangular image corresponds to the 360◦ horizontal field of view of the twin-fisheye camera. An example of dual-fisheye image with its equirectangular counterpart is shown in Fig. 3a. The equirectangular view is the default view in SpheriCol, and all the others are generated from it.

2) Panoramic view: A panoramic image corresponds to half of an equirectangular image (i.e. it only covers a 180◦ horizontal field of view). To synthesize a front view, we leveraged OpenCV’s Rect function to crop the left and right bands of the equirectangular image corresponding to the rear of the wheelchair (white dashed areas in Fig. 3a (bottom)). On the other hand, to generate a rear view, the two bands are stitched together using OpenCV’s hconcat function. The user can also select a specific angular sector of the panoramic image (in 5◦ steps): this facilitates early detection of obstacles in the blind spots of the wheelchair (for more details, see Sect. III-C).
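The cropping and stitching steps for the panoramic views can be sketched as follows. This is a minimal illustration written with NumPy slicing and `np.hstack`, which play the role of OpenCV’s Rect and hconcat here; it assumes that the forward direction of the wheelchair sits at the horizontal center of the equirectangular image, so that each rear band is one quarter of the image width. The function names are hypothetical.

```python
import numpy as np


def front_view(equi):
    """Keep the central half of the columns (180 deg facing forward)."""
    m = equi.shape[1]
    q = m // 4  # width of each rear band, assuming forward = image center
    return equi[:, q:m - q]


def rear_view(equi):
    """Stitch the two lateral bands into a single 180 deg rear image."""
    m = equi.shape[1]
    q = m // 4
    left_band = equi[:, :q]
    right_band = equi[:, m - q:]
    # The right band goes first, so that the two columns adjacent to the
    # +/-180 deg seam end up next to each other in the middle of the result.
    return np.hstack((right_band, left_band))
```

Placing the right band before the left one keeps the rear direction at the center of the stitched image, which removes the vertical discontinuity that the equirectangular view shows at its left and right borders.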

Fig. 2: (a) Overview of system components: (I) Power wheelchair equipped with a ring of range sensors, (II) twin-fisheye camera, and (III) human-machine interface (tactile display); (b) Functional diagram of SpheriCol: data flow (blue arrows) and libraries (red arrows).

3) Spherical view: The spherical view has the advantage of clearly showing the presence of potential obstacles at ground level (see Fig. 3b). It is generated from the equirectangular view via a simple Cartesian to polar coordinates transformation (OpenCV’s LinearPolar function).

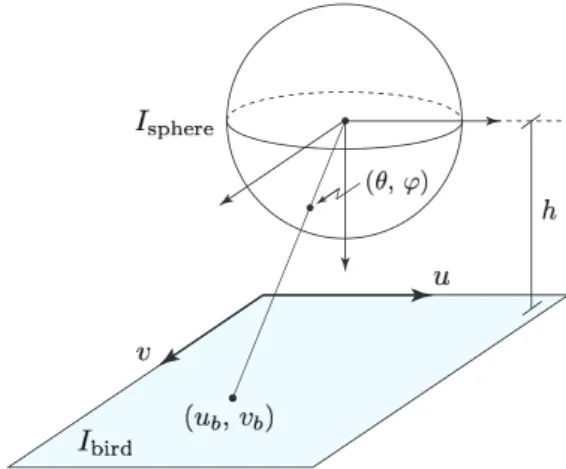

4) Bird’s-eye view: Like the spherical view, the bird’s-eye view is useful to detect obstacles on the floor around the wheelchair, but the images have a more uniform (perspective-like) spatial resolution. To generate the bird’s-eye view, we first map the $m \times n$ equirectangular image onto a unit sphere, via the transformation (see Fig. 4),

$$\theta = \frac{360^\circ\, u_\theta}{m - 1}, \qquad \varphi = \frac{90^\circ\, v_\theta}{\lfloor (n - 1)/2 \rfloor},$$

where $(u_\theta, v_\theta)$ is the sampling step size of the equirectangular image in the horizontal and vertical direction, respectively, and $(\theta, \varphi) \in [0^\circ, 360^\circ) \times [-90^\circ, 90^\circ]$ are the coordinates of a point on the sphere. The bird’s-eye image $I_{\mathrm{bird}}$ is obtained by projecting the point $(\theta, \varphi)$ on the unit sphere onto a plane at point $(u_b, v_b)$ (see Fig. 4). The height $h$ of the sphere above the plane is a free parameter which depends on the footprint of the wheelchair, the user’s body size, and the type of environment (indoor or outdoor).
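The two steps of the bird’s-eye mapping can be sketched in Python as follows. Several choices below are assumptions made for illustration only: the horizontal index is taken as the pixel column, the vertical index as a signed row offset from the middle row (positive upwards), and the projection onto the plane is modeled as a central projection from the sphere center, i.e. the intersection of the viewing ray with a plane at distance `h` below it. The text does not specify these details, so the function names and conventions are hypothetical.

```python
import math


def pixel_to_angles(u, v, m, n):
    """Map pixel (u, v) of an m x n equirectangular image to (theta, phi) in degrees."""
    half = (n - 1) // 2
    v_theta = half - v  # assumption: signed offset from the middle row
    theta = 360.0 * u / (m - 1)
    phi = 90.0 * v_theta / half
    return theta, phi


def ground_point(theta_deg, phi_deg, h):
    """Intersect the viewing ray with a plane at distance h below the sphere center.

    Assumption: central projection from the sphere center. Only rays
    pointing downwards (phi < 0) reach the plane.
    """
    if phi_deg >= 0.0:
        raise ValueError("ray does not intersect the ground plane")
    radius = h / math.tan(math.radians(-phi_deg))
    theta = math.radians(theta_deg)
    return radius * math.cos(theta), radius * math.sin(theta)
```

Under these assumptions, a ray at $\varphi = -45^\circ$ with $h = 1$ m lands 1 m away from the point directly below the camera, and increasing `h` spreads the same angular content over a larger ground area, which is why it acts as a zoom-like free parameter.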

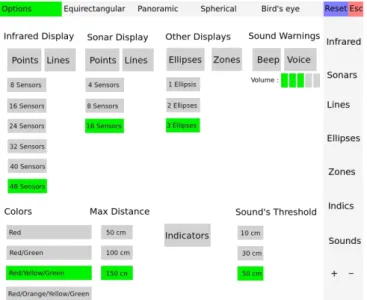

B. Configuration interface

The different functionalities of SpheriCol are accessible via a configuration interface. The “Options” tab in the top left corner of Fig. 5 allows the user to select the preferred visual/proximity information. With the remaining tabs in the same row, the user can choose one of the four virtual viewpoints described in Sect. III-A. Since infrared and ultrasonic sensors are the most popular proximity sensors in smart wheelchairs, they are already available in the “Options” tab, for quick selection.

Fig. 3: Viewpoint selection: (a) Dual-fisheye image captured by the twin-fisheye camera (top), and corresponding equirectangular image (bottom); (b) Spherical view, and (c) bird’s-eye view, corresponding to the same spatial location.

The “Points” buttons display the distance measurements to the obstacles at their corresponding locations in the images. To project the proximity information onto the images, we leveraged the known calibration parameters of the twin-fisheye camera, and empirically estimated the rigid transformation between the camera frame $F_c$ and the local frames of the range sensors. To filter out noise, a moving average filter was applied to the raw range measurements (30 iterations were considered in our experiments in Sect. IV). The user can choose how many measurements to display at the same time: if the range sensors are organized in p banks of q units, then a minimum of p measurements, and a maximum of pq, can be shown on the images.
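The noise filtering step mentioned above can be illustrated with a small per-sensor moving average. This is a minimal sketch that reads the “30 iterations” as a sliding window over the last 30 samples of one sensor, which is an assumption; the class name `MovingAverage` is hypothetical.

```python
from collections import deque


class MovingAverage:
    """Sliding-window mean of the most recent range samples of one sensor."""

    def __init__(self, window=30):
        if window < 1:
            raise ValueError("window must be at least 1")
        # A bounded deque discards the oldest sample automatically.
        self._samples = deque(maxlen=window)

    def update(self, value):
        """Add a raw range sample (in meters) and return the filtered value."""
        self._samples.append(value)
        return sum(self._samples) / len(self._samples)
```

One filter instance would be kept per range sensor and updated each time a new measurement arrives. A longer window gives smoother markers at the cost of a slower reaction to approaching obstacles, so the window length trades stability against latency.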

By selecting “Lines”, the distance measurements from the range sensors are interpolated using Lagrange polynomials and superimposed on the images.
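As an illustration of the “Lines” option, the sketch below evaluates the Lagrange polynomial that passes through a set of (bearing, range) samples. Parameterizing the curve by the bearing angle of each sensor is an assumption made for this example, and the function name is hypothetical.

```python
def lagrange_interpolate(angles, ranges, query):
    """Evaluate the Lagrange polynomial through (angles[i], ranges[i]) at `query`."""
    if len(angles) != len(ranges) or len(set(angles)) != len(angles):
        raise ValueError("need one range per angle, and distinct angles")
    total = 0.0
    for i, (a_i, r_i) in enumerate(zip(angles, ranges)):
        # Basis polynomial: equals 1 at a_i and 0 at every other node.
        weight = 1.0
        for j, a_j in enumerate(angles):
            if j != i:
                weight *= (query - a_j) / (a_i - a_j)
        total += r_i * weight
    return total
```

For instance, with samples of 1.2 m, 1.0 m and 1.2 m at bearings of −30°, 0° and 30°, the interpolated range at 15° is 1.05 m. Since high-degree Lagrange polynomials tend to oscillate between nodes, keeping the number of points per curve small helps the line follow the obstacle surface.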

The “Ellipses” button displays from 1 to 3 concentric ellipses. The first ellipse (red) is placed at a distance d = 0.5 m from the wheelchair’s sides, the second (yellow) at 1 m, and the third (green) at 1.5 m. The ellipses are static: they are not refreshed with the new measurements of the range sensors.

Fig. 4: Generation of the bird’s-eye image Ibird from the

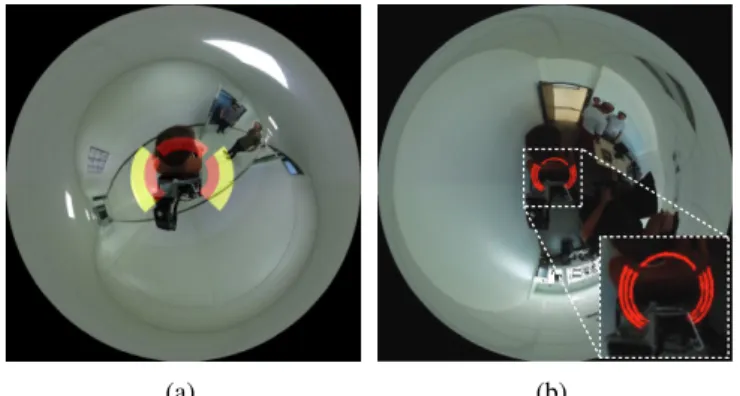

The “Zones” button activates 12 colored bands (in four angular sectors of 90◦) around the wheelchair: green bands for a distance d such that 1 m ≤ d < 1.5 m, yellow bands for 0.5 m ≤ d < 1 m, and red bands for d < 0.5 m. To reduce visual overload, the static bands disappear when an obstacle is detected in the corresponding angular sector.

Similarly to “Ellipses”, the “Indics” (Indicators) button displays 1 to 3 red circle arcs on the four sides of the wheelchair. The arcs are dynamic, and they only appear when an obstacle is detected: 1 arc for 1 m ≤ d < 1.5 m, 2 arcs for 0.5 m ≤ d < 1 m, and 3 arcs if d < 0.5 m.
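The distance thresholds shared by the “Zones” and “Indics” markers can be summarized in a few lines of Python. The thresholds come from the text; the function names and the convention of returning `None` or `0` beyond 1.5 m are illustrative assumptions.

```python
def zone_color(d):
    """Color of the band associated with an obstacle at distance d (meters)."""
    if d < 0.0:
        raise ValueError("distance must be non-negative")
    if d < 0.5:
        return "red"
    if d < 1.0:
        return "yellow"
    if d < 1.5:
        return "green"
    return None  # beyond 1.5 m: no band


def indicator_arcs(d):
    """Number of red circle arcs shown for an obstacle at distance d (meters)."""
    if d < 0.0:
        raise ValueError("distance must be non-negative")
    if d < 0.5:
        return 3
    if d < 1.0:
        return 2
    if d < 1.5:
        return 1
    return 0  # beyond 1.5 m: no arc
```

Both markers encode the same three distance intervals, one through color and the other through the number of arcs, so either can be read without relying on the other.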

Note that the previous options are not mutually exclusive, and that the interface can accommodate multiple distance markers (e.g. “Points” and “Lines”) at the same time.

Since other impairments (such as low peripheral vision or visual neglect [24], [25]) may coexist with motor disabilities, auditory feedback is also available in SpheriCol. By clicking on the “Beep” and “Voice” buttons under the “Sound Warnings” tab (top right in Fig. 5), sound signals are generated when an obstacle is detected. Normally sighted individuals could also benefit from the auditory feedback when their visual and haptic channels [26] are overloaded with information. Finally, the “Color”, “Max Distance”, “Volume” and “Sound’s Threshold” buttons give access to additional configuration parameters, which allow the user to fully customize the driving assistance system.

C. Visualization interface

The visualization interface is active during normal operation of the wheelchair. A large portion of the window is used to display the video stream from the twin-fisheye camera. The top (“Options”) bar and the right menu are similar to those in the configuration interface, and they can be used to switch on/off the selected virtual viewpoint and distance markers, respectively. With the “+” and “−” buttons (lower right corner in Fig. 5), the user can either change the angular sector in the panoramic images, or zoom in/zoom out the bird’s-eye images.

Fig. 5: Configuration interface of SpheriCol.

If the user turns on the auditory feedback, she/he will either hear a series of beeps whose frequency is inversely proportional to the distance d to the obstacles (“Beep” option), or a voice message announcing the direction of the approaching obstacle(s) (“Voice” option). The two options can also be activated at the same time.
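The inverse proportionality used by the “Beep” option can be written as $f = k/d$. The sketch below interprets the frequency as the repetition rate of the beeps, which is an assumption, and the gain `k`, the clamping distance `d_min`, and the cut-off `max_distance` are hypothetical parameters introduced for illustration.

```python
def beep_rate_hz(d, gain=1.0, d_min=0.1, max_distance=1.5):
    """Beep repetition rate (Hz) for an obstacle at distance d (meters)."""
    if d < 0.0:
        raise ValueError("distance must be non-negative")
    if d > max_distance:
        return 0.0  # obstacle too far away: stay silent
    # Clamp small distances so that the rate remains finite near contact.
    return gain / max(d, d_min)
```

With the default values, an obstacle at 1 m produces one beep per second and an obstacle at 0.5 m two beeps per second. The clamp at `d_min` is needed because a purely inverse law diverges as the distance tends to zero.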

IV. EXPERIMENTAL RESULTS

SpheriCol has been validated with a Sunrise Medical Quickie Salsa M2 electrically-powered wheelchair. This mid-wheel drive platform has 6 wheels with independent suspensions: the 4 castor wheels have a diameter of 17.8 cm and the two drive wheels have a diameter of 33 cm. The wheelchair, which is endowed with a 7 cm curb-climbing ability, measures 61 cm at the widest point, and the 60 Ah batteries can propel it up to 10 km/h. As shown in Fig. 6a, a Ricoh Theta S camera was installed overhead behind the passenger. The camera weighs 125 g and its external dimensions are 44 mm (W) × 130 mm (H) × 22.9 mm (D). We considered a medium video resolution of 1280 pixels × 720 pixels at 15 fps, which allows real-time image processing. The 48 range sensors around the wheelchair are grouped into 7 banks: 6 banks of 6 sensors are located on the left and right side and under the footrests, and 1 bank of 12 sensors is behind the backrest (four of the seven banks are marked in green in Fig. 6a). The housing of the 7 sensor modules has been 3D printed in our workshop. The ST VL53L1X time-of-flight (ToF) sensors have the following technical specifications [27]: distance measurement up to 4 m, ranging frequency up to 50 Hz, typical field of view of 27°, and size of 4.9 mm × 2.5 mm × 1.56 mm.

In our current implementation, SpheriCol runs on an external laptop computer under Linux Ubuntu 18.10 and it relies on ROS Melodic distribution (see Fig. 6b) and the OpenCV 2 library. The laptop is connected to the Ricoh Theta S, and to the wheelchair, via a USB port, to access the range measurements from the ToF sensors. Work is currently in progress to port SpheriCol to the embedded computer of the power wheelchair, to optimize the software for the available hardware.

The operability of the driving assistance system has been evaluated with three able-bodied, non-experienced users, who controlled the wheelchair with a standard joystick in an indoor laboratory environment. As a representative example, we will discuss below the results of a trial performed in July 2019, in which the users were asked to exit a classroom and move along the center of the adjoining corridor (blue path in Fig. 7a). The trajectory includes two U-turns and a door crossing. The presence of a poster board next to the door (red rectangle in Fig. 7a) and of moving obstacles (three people, marked with red disks in Fig. 7a), made the maneuver even more challenging. For this motion task, the spherical and the bird’s-eye views turned out to be particularly useful. In fact, as shown in Figs. 3b and 3c, the contour of the wheelchair is clearly visible in the images, and the free space around it can be easily identified to perform collision-free maneuvers. Compared to the spherical and bird’s-eye views, the three users found the equirectangular view less intuitive, since the detection of the obstacles behind the wheelchair requires some training (as shown in Fig. 3a (bottom), a vertical discontinuity is present between the front and rear views). Although higher frame rates are possible (up to 30 fps), the users were comfortable with the video stream in the visualization interface, which resulted in accurate collision predictions.

Fig. 6: (a) Experimental setup: the Salsa M2 wheelchair with the Ricoh Theta S camera and the ToF sensor modules (green boxes). The driving assistance system helps the user to pass through the door in front of him; (b) ROS computation graph (rqt_graph) of SpheriCol: nodes (bubbles) and messages (rectangles).

Fig. 7 and Fig. 8 show different ways of augmenting the 360° images captured by the Ricoh camera with the proximity information provided by the ToF sensors: Points (Fig. 7b), Lines (Fig. 7c), Ellipses (Fig. 7d), Bands (Fig. 8a), and Circle arcs (Fig. 8b). The curves interpolating the range measurements provide a useful approximate representation of obstacle surfaces, and they are easier to interpret for reactive control than a sparse set of distance points. However, different contiguous obstacles are not always easy to discriminate with this representation. To address this issue, the lines are computed from data points in 90° sectors (for instance, the front line emanates from points lying within the [−45°, 45°] interval, 0° being the forward direction of the wheelchair). Moreover, to improve readability, they are displayed at 10° intervals. In Fig. 7b and Fig. 7c, the following color convention was used: red if the distance d to the obstacle(s) is less than 0.5 m, yellow if 0.5 m ≤ d < 1 m, and green if 1 m ≤ d < 1.5 m.

In spite of the relatively poor angular resolution of the ring of ToF sensors, the three test subjects were able to correctly identify and avoid the static/moving obstacles around the wheelchair (at a distance of 1.5 m, any obstacle 0.87 m wide or larger is detected by the sensors, cf. Fig. 7b). However, since the sensor modules lie only a few tens of centimeters above the ground (the mount options on the perimeter of a power wheelchair are indeed very limited), the detection of chairs or tables remains quite challenging. The able-bodied users who tested SpheriCol asserted (via a questionnaire) that the visualization interface is convenient for collision-free navigation, and ensures greater awareness of rear obstacles. While the benefits for experienced wheelchair users might not be as conspicuous (if we exclude a few rare complex maneuvers), the occupational therapists and medical professionals involved in our project do believe that SpheriCol has a strong potential for training first-time users and for subjects with multiple chronic diseases. A video of one of the three experimental trials is available at the following address: home.mis.u-picardie.fr/∼fabio/Eng/Video/SpCol SII21.mov

V. CONCLUSION AND FUTURE WORK

In this paper, we have presented “SpheriCol”, a new, simple yet effective driving assistance system for the users of power wheelchairs. The ROS-based system combines 360° vision and range measurements to assist navigation in confined environments, and it has been successfully tested indoors on a Quickie Salsa M2 wheelchair.

Although our preliminary experimental results are promising, further work is needed to test SpheriCol in more challenging dynamic environments, and to refine it with the help of medical doctors and experienced wheelchair users. Their feedback will also be valuable to adapt the driving assistance system to their specific needs. In particular, to perform a quantitative evaluation on a given trajectory, we plan to compute some standard performance metrics, such as the average completion time, the average number of collisions, or the path length [26]. We would also like to modify the distance markers according to the velocity information derived from the angle of the wheelchair’s joystick. Finally, the design of a compact twin-fisheye stereo camera which, unlike SpheriCol, does not require active range measurements, is the subject of ongoing research.

Fig. 7: (a) Nominal trajectory of the Salsa M2 wheelchair (blue), poster board (red rectangle) and moving obstacles (red disks); (b) Spherical view with the obstacles detected by the 48 ToF sensors (red and yellow points): the green points show the free space around the wheelchair. Equirectangular view with, (c) lines obtained via Lagrange interpolation, (d) ellipses.

Fig. 8: Spherical view with, (a) colored bands (“Zones”), (b) circle arcs (“Indics”). In (a), the colored bands show the obstacle-free directions around the wheelchair: the front wall is less than 1 m away, and the back wall is less than 0.5 m away. In (b), the inset shows a focused view of the seven red circle arcs.

REFERENCES

[1] N. Vignier, J.-F. Ravaud, M. Winance, F.-X. Lepoutre, and I. Ville, “Demographics of Wheelchair Users in France: Results of National Community-Based Handicaps-Incapacités-Dépendance Surveys,” J. Rehabil. Med., vol. 40, no. 3, pp. 231–239, 2008.

[2] D. Taylor, “Americans With Disabilities: 2014,” US Department of Commerce, Economics and Statistics Administration, US Census Bureau, 2018, www.census.gov.

[3] T. Dutta and G. Fernie, “Utilization of ultrasound sensors for anti-collision systems of powered wheelchairs,” IEEE Trans. Neur. Sys. Reh., vol. 13, no. 1, pp. 24–32, 2005.

[4] G. D. Castillo, S. Skaar, A. Cardenas, and L. Fehr, “A sonar approach to obstacle detection for a vision-based autonomous wheelchair,” Robot. Autonom. Syst., vol. 54, no. 12, pp. 967–981, 2006.

[5] B. Rebsamen, C. Guan, H. Zhang, C. Wang, C. Teo, M. Ang, and E. Burdet, “A Brain Controlled Wheelchair to Navigate in Familiar Environments,” IEEE Trans. Neur. Sys. Reh., vol. 18, no. 6, pp. 590–598, 2010.

[6] T. Carlson and Y. Demiris, “Collaborative Control for a Robotic Wheelchair: Evaluation of Performance, Attention, and Workload,” IEEE Trans. Syst. Man Cy. B, vol. 42, no. 3, pp. 876–888, 2012.

[7] J. Podobnik, J. Rejc, S. Šlajpah, M. Munih, and M. Mihelj, “All-Terrain Wheelchair: Increasing Personal Mobility with a Powered Wheel-Track Hybrid Wheelchair,” IEEE Robot. Autonom. Lett., vol. 24, no. 4, pp. 26–36, 2017.

[8] Y. Morales, A. Watanabe, F. Ferreri, J. Even, K. Shinozawa, and N. Hagita, “Passenger discomfort map for autonomous navigation in a robotic wheelchair,” Robot. Autonom. Syst., vol. 103, pp. 13–26, 2018.

[9] N. Welage and K. Liu, “Wheelchair accessibility of public buildings: a review of the literature,” Disabil. Rehabil. Assist. Technol., vol. 6, no. 1, pp. 1–9, 2011.

[10] S. Levine, D. Bell, L. Jaros, R. Simpson, Y. Koren, and J. Borenstein, “The NavChair Assistive Wheelchair Navigation System,” IEEE Trans. Rehabil. Eng., vol. 7, no. 4, pp. 443–451, 1999.

[11] E. Demeester, E. Vander Poorten, A. Hüntemann, and J. De Schutter, “Wheelchair Navigation Assistance in the FP7 Project RADHAR: Objectives and Current State,” in IROS Workshop on “Navigation and Manipulation Assistance for Robotic Wheelchairs”, 2012.

[12] “ADAPT: Assistive Devices for empowering disAbled People through robotic Technologies,” 2017–2021, Interreg VA France (Channel) England project, http://adapt-project.com/index-en.

[13] J. Leaman and H. La, “A Comprehensive Review of Smart Wheelchairs: Past, Present, and Future,” IEEE Trans. Hum.-Mach. System, vol. 47, no. 4, pp. 486–499, 2017.

[14] F. Pasteau, V. Narayanan, M. Babel, and F. Chaumette, “A visual servoing approach for autonomous corridor following and doorway passing in a wheelchair,” Robot. Autonom. Syst., vol. 75, pp. 28–40, 2016.

[15] J. Nguyen, S. Su, and H. Nguyen, “Experimental Study on a Smart Wheelchair System Using a Combination of Stereoscopic and Spherical Vision,” in IEEE Int. Conf. Eng. Med. Biol., 2013, pp. 4597–4600.

[16] S. Li, T. Fujiura, and I. Nakanishi, “Recording Gaze Trajectory of Wheelchair Users by a Spherical Camera,” in Proc. Int. Conf. Rehab. Rob., 2017, pp. 929–934.

[17] “Robot Operating System (ROS),” www.ros.org.

[18] “Braze Mobility, Blind Spot Sensors for Wheel Chairs & Mobility Scooters,” https://brazemobility.com.

[19] A. Erdogan and B. Argall, “The effect of robotic wheelchair control paradigm and interface on user performance, effort and preference: An experimental assessment,” Robot. Autonom. Syst., vol. 94, pp. 282– 297, 2017.

[20] X. Ying and Z. Hu, “Can We Consider Central Catadioptric Cameras and Fisheye Cameras within a Unified Imaging Model?” in Proc. Eur. Conf. Comp. Vis., 2004, pp. 442–455.

[21] C. Geyer and K. Daniilidis, “A Unifying Theory for Central Panoramic Systems and Practical Implications,” in Proc. Eur. Conf. Comp. Vis., 2000, pp. 445–461.

[22] J. Barreto, “A unifying geometric representation for central projection systems,” Comput. Vis. Image Und., vol. 103, no. 3, pp. 208–217, 2006.

[23] G. Caron and F. Morbidi, “Spherical Visual Gyroscope for Autonomous Robots using the Mixture of Photometric Potentials,” in Proc. IEEE Int. Conf. Robot. Automat., 2018, pp. 820–827.

[24] L. Iezzoni, When Walking Fails: Mobility Problems of Adults with Chronic Conditions. University of California Press, 2003.

[25] A. Riddering, “Keeping Older Adults with Vision Loss Safe: Chronic Conditions and Comorbidities that Influence Functional Mobility,” J. Visual Impair. Blin, vol. 102, no. 10, pp. 616–620, 2008.

[26] L. Devigne, M. Aggravi, M. Bivaud, N. Balix, C. Teodorescu, T. Carlson, T. Spreters, C. Pacchierotti, and M. Babel, “Power Wheelchair Navigation Assistance Using Wearable Vibrotactile Haptics,” IEEE Trans. Haptics, vol. 13, no. 1, pp. 52–58, 2020.

[27] STMicroelectronics, “Long distance ranging Time-of-Flight sensor,” www.st.com/en/imaging-and-photonics-solutions/vl53l1x.html.