Algorithms for Strategic Agents

by

S. Matthew Weinberg

B.A., Cornell Unversity (2010)

Submitted to the Department of Electrical Engineering and Computer Science

in partial fulfillment of the requirements for the degree of

Doctor of Philosophy in Computer Science

ASS

CHES

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

June 2014

@ Massachusetts Institute of Technology 2014. All rights reserved.

Auho.Signature

red

acted--,-Author..

. . .

...

...

.. ....

..

.

Department of Electrical Engineering and Computer Science

May 21, 2014

Signature redacted

Certified by..

... . . .. .. .. .. ... .. .. .. . .. .. .. .. .. .. .. .. .. .. .. .. .. .. .. .Constantinos Daskalakis

Associate Professor of EECS

Thesis Supervisor

Accepted by

Signature redacted

e(J

Leslie A. Kolodziejski

Chairman, Department Committee on Graduate Students

MASSACHUSETT$ INSTTUffE

OF TECHNOLOGY

JUN 10 2014

Algorithms for Strategic Agents

by

S. Matthew Weinberg

Submitted to the Department of Electrical Engineering and Computer Science on May 21, 2014, in partial fulfillment of the

requirements for the degree of Doctor of Philosophy in Computer Science

Abstract

In traditional algorithm design, no incentives come into play: the input is given, and your algorithm must produce a correct output. How much harder is it to solve the same problem when the input is not given directly, but instead reported by strategic agents with interests of their own? The unique challenge stems from the fact that the agents may choose to lie about the input in order to manipulate the behavior of the algorithm for their own interests, and tools from Game Theory are therefore required in order to predict how these agents will behave.

We develop a new algorithmic framework with which to study such problems. Specifically, we provide a computationally efficient black-box reduction from solving any optimization problem on "strategic input," often called algorithmic mechanism design to solving a perturbed version of that same optimization problem when the input is directly given, traditionally called algorithm design. We further demonstrate the power of our framework by making significant progress on several long-standing open problems. First, we extend Myerson's celebrated characterization of single item auctions [Mye8l] to multiple items, providing also a computationally efficient implementa-tion of optimal aucimplementa-tions. Next, we design a computaimplementa-tionally efficient 2-approximate mechanism for job scheduling on unrelated machines, the original problem studied in Nisan and Ronen's paper introducing the field of Algorithmic Mechanism Design [NR99]. This matches the guarantee of the best known computationally efficient algorithm when the input is directly given. Finally, we provide the first hardness of approximation result for optimal mechanism design.

Thesis Supervisor: Constantinos Daskalakis Title: Associate Professor of EECS

Acknowledgments

To start, I would like to thank my advisor, Costis Daskalakis, for his belief in me and all his guidance in pushing me to be excellent. When I first arrived at MIT, Costis and I discussed research directions. At the time, I was confident that I possessed the tools to make minor improvements on prior work and excitedly told him this. Chuckling politely, he told me that this was great, but that we should strive to solve more important problems. We worked long and hard that year, often going long stretches without any progress, but by the end we had started down the path that would eventually culminate in this thesis. Thank you, Costis, for teaching me to aim high in my pursuits, to always push the boundaries of my comfort zone and for guiding me along this journey.

Special thanks are also due to Bobby Kleinberg, who introduced me to theoretical computer science and taught me how to do research during my time at Cornell. His mix of patience and enthusiasm turned me from an overexcited problem-solver into a competent researcher. Today, Bobby is still one of my most trusted mentors and I am truly grateful for everything that he has done for me. Thank you as well to everyone else who has mentored me through this journey: Moshe Babaioff, Jason Hartline, Nicole Immorlica, Brendan Lucier, Silvio Micali, Christos Papadimitriou and Eva Tardos; especially to Parag Pathak and Silvio for serving on my dissertation committee, and Nicole and Brendan for an amazing summer internship at Microsoft Research.

I wish to thank the entire theory group at MIT. Thank you in particular to Costis, Silvio, Shafi Goldwasser, Piotr Indyk, Ankur Moitra, and Madhu Sudan for always being available to provide advice. Thanks as well to everyone who I've had the pleasure of collaborating with: Moshe, Costis, Nicole, Brendan, Bobby, Silvio, Pablo Azar, Patrick Briest, Yang Cai, Shuchi Chawla, Michal Feldman, and Christos Tzamos. A special thanks to Yang, without whom I'm certain the work in this thesis would not have been possible. I also thank him for teaching me everything there is to know about EECS at MIT - without him, I would be lost.

Thanks as well to the students of MIT, Cornell, and elsewhere who made work so enjoyable: Pablo Azar, Alan Deckelbaum, Michael Forbes, Hu Fu, Nima Haghpanah, Darrell Hoy, G Kamath, Yin-Tat Lee, Matthew Paff, Pedram Razavi, Ran Shorrer, Aaron Sidford, Greg Stoddard, Vasilis

Thank you to all my friends from Pikesville, Cornell, and MIT for supporting me through the difficulties of research: Alex, Brandon, Josh, Megan, Shen, Andy, Ganesh, Farrah, Henry, Jason, Job, Steve, Meelap, and Tahin. Thank you to MIT and Cornell Sport Taekwondo for teaching me to pursue excellence in all aspects of life. Thanks especially to my coaches, Master Dan Chuang and Master Han Cho.

I must thank Jennie especially for her unending love and support over the past five years. Her

presence in my life made it possible to weather the unavoidable hardships that accompany research with determination and hope for the future.

Finally, words cannot express how grateful I am to my family for their incredible support over this long journey. I am eternally grateful to Mom, Dad, Grandma, Alec, Syrus, Dagmara and Myles for everything that they've done to support me; for their love, care, and encouragement. I wish to dedicate this thesis to them.

Contents

1 Introduction

1

1.1 Mechanism Design ... ... 3

1.2 Multi-Dimensional Mechanism Design ... 4

1.2.1 Challenges in Multi-Dimensional Mechanism Design . . . . 5

1.3 Algorithmic Mechanism Design . . . . 9

1.4 Overview of Results. . . . . 11

1.5 Tools and Techniques . . . . 14

1.6 Organization. . . . . 18

2 Background 21 2.1 Concepts in Mechanism Design . . . . 21

2.2 Multi-Dimensional Mechanism Design -Additive Agents . . . . 26

2.3 Linear Programming . . . . 28

2.4 Related Work . . . 30

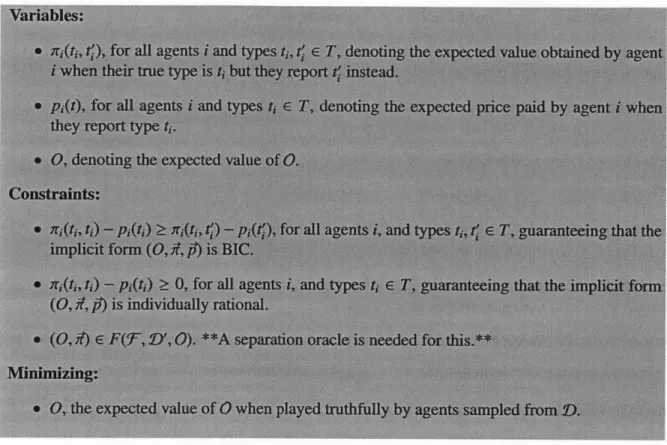

3 Additive Agents 37 3.1 A Linear Program . . . 39

3.2 The Space of Feasible Reduced Forms . . . 40

3.2.1 Examples of Reduced Forms . . . 41

3.2.2 Understanding the Space of Feasible Reduced Forms . . . 43

3.3 Implementation . . . 46

4 Background: General Objectives and Non-Additive Agents

4.1 Preliminaries ...

4.2 Additional Linear Programming Preliminaries . . . .

4.3 Related W ork . . . .

5 The Complete Reduction

5.1

A Linear Program . . . .

5.2 The Space of Feasible Implicit Forms . . . .

5.3 Consequences of using Y' . . . .

5.4 Instantiations of Theorem7 . . . .

6 Equivalence of Separation and Optimization

6.1 Exact Optimization . . . . 6.2 Multiplicative Approximations . . . .

6.3 Sampling Approximation . . . . 6.3.1 YI' Defines a Closed Convex Region . . . .

6.3.2 Every point in P is close to some point in P' . . . . 6.3.3 Every point in P' is close to some point in P . . . .

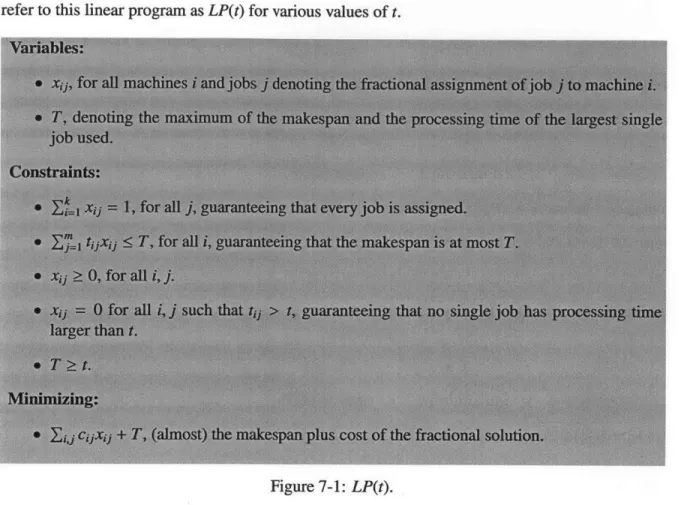

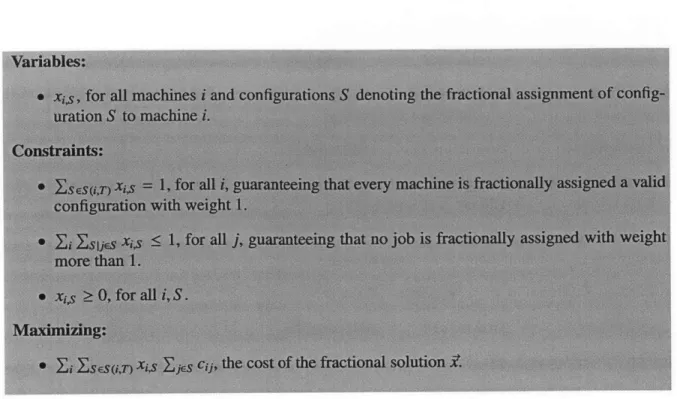

7 (a,p/)-Approximation Algorithms for Makespan and Fairness

7.1 Preliminaries . . . .

7.2 Algorithmic Results . . . .

8 Revenue: Hardness of Approximation

8.1 Cyclic Monotonicity and Compatibility . . . .

8.2 Relating SADP to BMeD . . . .

8.3 Approximation Hardness for Submodular Bidders . . . .

9 Conclusions and Open Problems

49

49

5357

63

64

66

72

75

79

80

82

86

87

89

91

9595

97

105

106

110

113

119

A Extensions of Border's Theorem

137

A.1 Algorithmic Extension . . . 137 A.2 Structural Extension . . . 142

List of Figures

3-1 A linear programming formulation to find the revenue-optimal mechanism. 3-2 A reformulated linear program to find the revenue-optimal mechanism. . . 5-1 A linear programming formulation for BMeD. . . . . 5-2 A linear program for BMeD, replacing F(T, D, 0) with F(r, 1', 0). . . 6-1 A linear programming formulation to find a violated hyperplane. . . . 6-2 A reformulation of Figure 6-1 using a separation oracle . . . . . 6-3 A weird separation oracle. . . . .

7-1 LP(t). . . . .

7-2 (a modification of) The configuration LP parameterized by T. . . . . .

. . . 81 . . . 82 . . . . . 83 . . . . 98 . . . 101 B-I A linear program to output a corner in P that satisfies every constraint in E. . . . . 151 . . . . 39

41 65 66

Chapter 1

Introduction

Traditionally, Algorithm Design is the task of producing a correct output from a given input in a computationally efficient manner. For instance, maybe the input is a list of integers and the desired output is the maximum element in that list. Then a good algorithm, findMax, simply scans the entire list and outputs the maximum. A subtle, but important assumption made by the findMax algorithm is that the input is accurate; that is, the input list is exactly the one for which the user wants to find the maximum element.

While seemingly innocuous, this assumption implicitly requires the algorithm to be completely oblivious both to where the input comes from and to what the output is used for. Consider for example a local government with a stimulus package that they want to give to the company that values it most. They could certainly try soliciting from each company their value for the package, making a list, running the findMax algorithm, and selecting the corresponding company. After all, findMax will correctly find the company with the largest reported value. But something is obviously flawed with this approach: why would a company report accurately their value when a larger report will make them more likely to win the package? Basic game theory tells us that each company, if strategic, will report a bid of oo, and the winner will just be an arbitrary company.

The issue at hand here is not the validity of findMax: the maximum element of {oo,..., oo}

is indeed oo. Instead, the problem is that separating the design and application of findMax is a mistake. The companies control the input to findMax, the input to findMax affects the winner of

the stimulus package, and the companies care who wins the package. So it's in each company's interest to manipulate the algorithm by misreporting their input.

A well-cited example of this phenomenon occured in 2008 when a strategic table update in

Border Gateway Protocol (BGP), the protocol used to propagate routing information throughout the Internet, caused a global outage of YouTube [McC08, McM08]. What went wrong? At the time, offensive material prompted Pakistani Internet Service Providers to censor YouTube within Pakistan, updating their BGP tables to map from www.youtube.com to nowhere insted of correctly to YouTube. The outage occurred when this update inadvertantly spread to the rest of the Internet as well. What's the point? BGP works great when everyone correctly updates their tables, so there are no problems purely from the algorithmic perspective. But when users instead updated their tables to serve their own interests, the protocol failed.

A more recent example occured in 2013, when an entire university class of students boycotted

an Intermediate Programming final in order to manipulate the professor's grading policy [Bud] 3]. What went wrong? The professor's policy curved the highest grade to 100 and bumped up all others

by the same amount. The students' boycott guaranteed that their Os would all be curved to 100s.

What's the point? Algorithmically, grading on a curve is a great way to overcome inconsistencies across different course offerings, so the system works well when all students perform honestly. But when the students performed strategically, the resulting grades were meaningless.

Should every algorithm be implemented in a way that is robust to strategic manipulation? Of course not. Sometimes data is just data and the input is directly given. But as the Internet continues to grow, more and more of our data comes from users with a vested interest in the outcome of our algorithms. Motivated by examples like those above, we are compelled to design algorithms robust to potential manipulation by strategic agents in these settings. We refer to such algorithms

as mechanisms, and address in this thesis the following fundamental question:

1.1

Mechanism Design

Before attempting to design mechanisms robust against strategic behavior, we must first understand how strategic agents in fact behave. This daunting task is the focus of Game Theory, which aims to develop tools for predicting how strategic agents will interact within a given environment or system. Mechanism Design, sometimes referred to as "reverse game theory," instead aims to design systems where agents' behavior is easily predictable, and this behavior results in a desireable outcome. Returning to our stimulus package example, we see that findMax by itself is not a good mechanism: even though each company will predictably report a value of oo, the outcome is highly undesireable.

Still, a seminal paper of Vickrey [Vic6l] provides a modification of the findMax algorithm that awards the package to the company with the highest value even in the face of strategic play. Vickrey showed that if in addition to awarding the package to the highest bidder, that same bidder is also charged a price equal to the second-largest value reported, then the resulting mechanism, called the second-price auction, is truthful. That is, it is in each company's best interest to report their true value. Therefore, even strategic agents will tell the truth, and the package will always go to the company with the highest value.

Remarkably, Vickrey's result was extended by Clarke [Cla7l] and Groves [Gro73] far be-yond single-item settings, generalizing the second-price auction to what is now called the Vickrey-Clarke-Groves (VCG) mechanism. Imagine a designer choosing from any set T of possible out-comes, and that each agent i has a private value ti(x) should the outcome x be selected. Then if the designer's goal is to select the outcome maximizing the total welfare, which is the sum of all agents' values for the outcome selected and can be written as

Li

ti(x), the VCG mechanism is againtruthful and always selects the optimal outcome. The mechanism simply asks each agent to report their value ti(x) for each outcome and selects the outcome argmaxE{E, t;(x)}. It turns out that this selection procedure can be complemented by a pricing scheme to make a truthful mechanism; one example of such a pricing scheme is called the Clarke pivot rule [Cla7 1]. Interestingly, one can view the VCG mechanism as a reduction from mechanism to algorithm design: with

black-box access' to an optimal algorithm for welfare, one can execute, computationally efficiently, an optimal mechanism for welfare. That is, given black-box access to an algorithm that optimizes welfare when each ti(x) is directly given, one can design a computationally efficient mechanism that optimizes welfare when all ti(x) are instead reported by strategic agents.

1.2 Multi-Dimensional Mechanism Design

What if instead of optimizing the value of the agents, the designer aims to maximize his own revenue? Finding the optimal mechanism in this case is of obvious importance, as millions of users partake in such interactions every day. Before tackling this important problem, we must first address what it means for a mechanism to be optimal in this setting. One natural definition might be that the optimal mechanism obtains revenue equal to the best possible welfare, with each agent paying their value for the outcome selected. However, even in the simplest case of a single agent interested in a single item, this benchmark is unattainable: how can the designer possibly both encourage the agent to report her true value for the item, yet also charge her a price equal to that value?

The underlying issue in attaining this benchmark is that it is prior-free. Without any beliefs whatsoever on the agent's value, how can the designer know whether to interact on the scale of pennies, dollars or billions of dollars? And is it even realistic to model the designer as lacking this knowledge? Addressing both issues simultaneously, Economists typically model the designer as having a Bayesian prior (i.e. a distribution) over possible values of the agents, and mechanisms are judged based on their expected revenue. The goal then becomes to find the mechanism whose expected revenue is maximized (over all mechanisms).

Within this model, a seminal paper of Myerson [Mye8l] provides a strong structural charac-terization of the revenue-optimal mechanism for selling a single item, showing that it takes the following simple form: first, agents are asked to report their value for the item, then each agent's reported value is transformed via a closed formula to what is called an ironed virtual value,2 and 'Black-box access to an algorithm means that one can probe the algorithm with input and see what output it selects, but not see the inner workings of the algorithm.

finally the item is awarded to the agent with the highest non-negative ironed virtual value (if any). Furthermore, in the special case that the designer's prior is independent and identically distributed across agents and the marginals satisfy a technical condition called regularity,3 the optimal auction is exactly a second price auction with a reserve price. That is, the item is awarded to the highest bidder if he beats the reserve, and he pays the maximum of the second highest bid and the reserve.

If no agent beats the reserve, the item is unsold. Interestingly, one can also view Myerson's optimal

auction as a reduction from mechanism to algorithm design: with black-box access to the findMax algorithm, one can execute the optimal mechanism for revenue computationally efficiently.

While Myerson's result extends to what are called single-dimensional settings (that include for example the sale of k identical items instead of just one), it does not apply even to the sale of just two heterogeneous items to a single agent. Thirty-three years later, we are still quite far from a complete understanding of optimal multi-item mechanisms. Unsurprisingly this problem, dubbed

the optimal multi-dimensional mechanism design problem is considered one of the central open

problems in Mathematical Economics today.

Question 2. (Multi-Dimensional Mechanism Design) What is the revenue-optimal auction for sell-ing multiple items?

This thesis makes a substantial contribution towards answering this question. We generalize Myerson's characterization to multi-item settings, albeit with some added complexity, and show how to execute the optimal auction computationally efficiently. We provide in Section 1.4 further details regarding our contributions, taking a brief detour in the following section to acclimate the reader with some surprising challenges in multi-dimensional mechanism design.

1.2.1

Challenges in Multi-Dimensional Mechanism Design

Already in 1981, Myerson's seminal work provided the revenue-optimal auction for many bidders in single-dimensional settings. Yet thirty-three years later, a complete understanding of how to sell just two items to a single buyer remains elusive. That is not to say that progress has not been made on the Bayesian prior on the value of that agent. The precise formula for this transformation is not used in this thesis.

(see Section 2.4 in Chapter 2 for a summary of this progress), but prior to this thesis we were still quite far from any understanding in unrestricted settings. The goal of this section is to overview some difficulties in transitioning from single- to multi-dimensional mechanism design.

We do so by presenting several examples involving additive agents. An agent if additive if they have some (private) value vi for receiving each item i, and their value for receiving a set S of items is >2iEs vi. Recall that in principle the agent's private information could be some arbitrary function

mapping each set of items to the value that agent has for that set. So additive agents are a special (but also very important) case. Drawing an analogy to welfare maximization, one might hope that settings with additive agents are considerably better behaved than more general settings. Indeed, if our goal was to maximize welfare, the VCG mechanism would simply award each item to the agent with the highest value (for that item) and charge her the second highest bid (for that item). In other words, the welfare optimal auction for many items and additive agents simply runs several independent instances of the Vickrey auction. So does the revenue optimal auction for many items and additive agents simply run several independent instances of Myerson's auction? Unfortunately, even in the case of a single additive agent and two items, the optimal auction can be significantly more complex.

Lack of Indepencence. Consider a seller with two items for sale to a single additive buyer. Assume further that the distribution of vj, the buyer's value for item 1, is independent of v2, the buyer's value for item 2. Then as far as the buyer is concerned, there is absolutely no interaction between the items: her value for receiving item 1 is completely independent of whether or not she receives item 2. And furthermore her value for item 2 yields absolutely no information about her value for item 1. It's natural then to think that there should be no interaction between the items in the optimal mechanism. However, this is false, as the following simple example shows.

Example: There is a single additive buyer whose value for each of two items is drawn inde-pendently from the uniform distribution on {1, 2}. Then the optimal mechanism that treats both items separately achieves expected revenue exactly 2: the seller can sell each item at a price of 1,

and have it sell with probability 1, or at a price of 2, and have it sell with probability 1/2. In either

of 3 for both items together sells with probability 3/4, yielding expected revenue 9/4 > 2.

It's hard to imagine a simpler multi-dimensional example than that above, and already we see that the optimal mechanism may have counterintuitive properties. One could then ask if there is at least a constant upper bound on the ratio between the optimal revenue and that of the mechanism that optimally sells items separately.

Example: There is a single additive buyer whose value for each of m items is drawn indepen-dently from the equal revenue distribution, which has CDF F(x) = 1 - 1 Ix for all x > 1. Then

the optimal mechanism that treats each item separately achieves expected revenue exactly m: the seller can set any price pi on item i, and have it sell with probability 1 /pi, yielding an expected per-item revenue of 1. Hart and Nisan [HN12] show that the mechanism offering a take-it-or-leave-it price of 0(m log m) for all m items together sells with probability 1/2, yielding expected revenue

)(m log M).4 As m - oo, the ratio between the two revenues is unbounded.

The takeaway message from these two examples is that just because the input distributions have an extremely simple form does not mean that the optimal mechanism shares in any of this simplicity. Specifically, even when there is absolutely no interaction between different items from the perspective of the input, the optimal mechanism may require such interaction anyway.

Non-Monotonicity of Revenue. Compare two instances of the problem where a single agent is interested in a single item: one where her value is drawn from a distribution with CDF F1, and

another where it is drawn from a distribution with CDF F2. Further assume that F1 stochastically dominates F2 (that is, F1(x) F2(x) for all x). Which instance should yield higher expected revenue? As F1 stochastically dominates F2, one way to sample a value from F1 is to first sample a value from F2, and then increase it (by how much depends on the specifics of F1 and F2, but such a procedure is always possible). Surely, increasing the agent's value in this manner can't possibly decrease the optimal revenue, right? Right. The optimal revenue of selling a single item to an agent whose value is drawn from F1 is at least as large as selling instead to an agent whose value is drawn from F2. Indeed, a corollary of Myerson's characterization is that the optimal mechanism

4

Throughout this thesis we use the standard notation f(n) = O(g(n)) if there exists a universal constant c > 0 such that f(n) : c - g(n) for all n, and f(n) = W(g(n)) if there exists a universal constant c > 0 such that f(n) > c - g(n). We also write f(n) = E(g(n)) if f(n) = O(g(n)) and f(n) = 9(g(n)).

for selling a single item to a single agent is to set a take-it-or-leave-it price. If p is the optimal price for F2, then setting the same price for F1 yields at least as much revenue, as by definition we have

FI(p) F2(p).

What if there are two items, and the agent's value for each item is sampled i.i.d. from F1 vs.

F2? It's still the case that values from F1 x F1 can be obtained by first sampling values from F2 x F2

and increasing them (by possibly different amounts, again depending on the specifics of F1 and F2).

And again it seems clear that increasing the agent's values in this way can't possibly decrease the optimal revenue. Mysteriously, this is no longer the case. Hart and Reny [HR12] provide a counter-example: two one-dimensional distributions F1 and F2 such that F1 stochastically dominates F2,

but the optimal revenue is larger for values sampled from F2 x F2 than F1 x F1.

The takeaway from this discussion is that the optimal revenue may change erratically as a func-tion of the input distribufunc-tion. Specifically, changes to the input that should "obviously" increase revenue, such as increasing values in a stochastically dominating way, can in fact cause the revenue to decrease.

Role of Randomization. In principle, the designer could try making use of randomization to increase revenue. For instance, maybe instead of simply offering an item at price 100, he could

also offer a lottery ticket that awards the item with probability 1/2 for 25, letting the agent choose which option to purchase. Myerson's characterization implies that for sellling a single item (even to multiple agents), randomization doesn't help: the designer can make just as much revenue by

setting a single fixed price. But in the case of multiple items, it does.

Example ([DDT13]). Consider a single additive agent interested in two items. Her value

for item 1 is drawn uniformly from 11,2) and her value for item 2 is drawn independently and uniformly from {1, 3}. Then the unique optimal mechanism allows the agent to choose from the following three options: receive both items and pay 4, or receive the first item with probability 1, the second with probability 1/2 and pay 2.5, or receive nothing and pay nothing.

Daskalakis, Deckelbaum, and Tzamos further provide an example with a single additive agent and two items where the unique optimal mechanism offers a menu of infinitely many lottery tickets for the agent to choose from [DDT13]. And even worse, Hart and Nisan provide an instance with

a single additive agent, whose value for each of two items is correlated, where the revenue of the optimal deterministic mechanism is finite, yet the revenue of the optimal randomized mechanism

is infinite [HN13].

The takeaway from this discussion is that randomization is not necessary in single-dimensional settings, but quite necessary in multi-dimensional settings. Not only is the unique optimal mecha-nism randomized in extremely simple examples, but there is a potentially infinite gap between the revenue of the optimal randomized mechanism and that of the optimal deterministic one.

Difficulties like the above three certainly help explain why multi-dimensional mechanism de-sign is so much more challenging than its single-dimensional counterpart, but they also provide evidence that a characterization as strong as Myerson's is unrealistic. In other words, any general characterization of optimal multi-dimensional mechanisms must be rich enough to accommodate all three complications discussed above, and therefore cannot be quite so simple.

1.3 Algorithmic Mechanism Design

The study of welfare and revenue is of clear importance, but the goal of this thesis is to provide a generic connection between mechanism and algorithm design, not limited to any specific objective. In traditional algorithm design (of the style considered in this thesis), one can model the input as having k components, t1, ... , tk, with the designer able to choose any outcome x E T. Based

on the input, t each outcome x will have some quality O(i x), and the designer's goal is to find a computationally efficient algorithm mapping inputs itto outputs in argmaxX {o(t x)}. As an

example objective, welfare can be written as 0(t* x) =

>;

t(x).In Algorithmic Mechanism Design, one instead models each component of the input as being

private information to a different self-interested agent, and allows the designer to charge prices as well. The designer's goal is still to find a computationally efficient mechanism that optimizes 0. Additionally, 0 may now also depend on the prices charged, so we write 0(t* x,

fl).

As an example, revenue can be written as Z, pi. Two natural refinements of our motivating Question 1 arise and lie at the heart of Algorithmic Mechanism Design:Question 3. How much worse is the quality of solution output by the optimal mechanism versus that of the optimal algorithm?

Question 4. How much more (computationally) difficult is executing the optimal mechanism versus the optimal algorithm?

In this context, one can view the VCG mechanism as providing an answer to both Questions 3 and 4 of "not at all" when the objective is welfare, even in prior-free settings. Indeed, the VCG mechanism guarantees that the welfare-optimal outcome is chosen. And furthermore, the only al-gorithmic bottleneck in running the VCG mechanism is finding the welfare-optimal outcome for the given input. For revenue, the answer to Question 3 is "infinitely worse," even in Bayesian settings: consider a single buyer whose value for a single item is sampled from the equal revenue curve (F(x) = 1 - 1 Ix). Then the optimal mechanism obtains an expected revenue of 1 no matter what price is set, whereas the optimal algorithm can simply charge the agent her value, obtaining an expected revenue of oo. Still, Myerson's characterization still shows that the answer to Ques-tion 4 is "not at all" in single-dimensional Bayesian settings. In this context, our results extending Myerson's characterization to multi-dimensional mechanism design further provide an answer of "not at all" to Question 4 for revenue in all Bayesian settings as well.

Still, there are many important objectives beyond welfare and revenue that demand study. Nisan and Ronen, in their seminal paper introducing Algorithmic Mechanism Design, study the objec-tive of makespan minimization [NR99]. Here, the designer has a set of m jobs that he wishes to process on k machines in a way that minimizes the makespan of the schedule, which is the time until all jobs have finished processing (or the maximum load on any machine). Already this is a challenging algorithmic problem that received much attention since at least the 1960s [Gra66,

Gra69, GJ75, HS76, Sah76, GIS77, GJ78, GLLK79, DJ81, Pot85, HS87, LST87, HS88, ST93a].

The challenge becomes even greater when the processing time of each job on each machine is private information known only to that machine. In sharp contrast to welfare maximization, Nisan and Ronen show that the optimal truthful mechanism can indeed perform worse than the optimal algorithm, conjecturing that it is in fact much worse. A long body of work followed in attempt to

remains open today, along with the equally pressing question of whether or not one can execute the optimal mechanism computationally efficiently. Following their work, resolving Questions 3 and 4 has been a central open problem in Algorithmic Mechanism Design.

Our main result addressing these questions is a computationally efficient black-box reduction from mechanism to algorithm design. That is, for any optimization problem, if one's goal is to design a truthful mechanism, one need only design an algorithm for (a perturbed version of) that same optimization problem. While this reduction alone does not provide a complete answer to Questions 3 or 4, it does provide an algorithmic framework with which to tackle them that has already proved incedibly useful. Making use of this framework, we are able to resolve Question 4 for makespan minimization and other related problems with a resounding "not at all."

1.4

Overview of Results

Our most general result is a computationally efficient black-box reduction from mechanism to algorithm design for general optimization problems. But let us begin with an application of our framework to the important problem of multi-dimensional mechanism design. Here, we show the following result, generalizing Myerson's celebrated result to multiple items. In this context, one should interpret the term "virtual welfare" not as being welfare computed with respect to the specific virtual values transformed according to Myerson's formula, but instead as simply welfare computed with respect to some virtual value functions, which may or may not be the same as the agents' original value functions.

Informal Theorem 1. The revenue optimal auction for selling multiple items is a distribution over virtual welfare maximizers. Specifically, the optimal auction asks agents to report their valuation functions, transforms these reports into virtual valuations (via agent-specific randomized trans-formations), selects the outcome that maximizes virtual welfare, and charges prices to ensure that the entire procedure is truthful. Furthermore, when agents are additive, the optimal auction (i.e. the specific transformation to use and what prices to charge based on the designer's prior) can be found computationally efficiently.

In comparison to Myerson's optimal single-dimensional auction, we show that the optimal multi-dimensional auction has an identical structure, in that its allocation rule is still a virtual-welfare maximizer. The important difference is that in single-dimensional settings the virtual trans-formations are deterministic and computed according to a closed formula. In multi-dimensional settings, the transformations are randomized, and we show that they can be computed computa-tionally efficiently. Note that the use of randomization cannot be avoided even in extremely simple multi-dimensional settings given the examples of Section 1.2.1. While certainly not as compelling as Myerson's original characterization, Informal Theorem 1 constitutes immense progress on both the structural front (namely, understanding the structure of revenue-optimal auctions) and algo-rithmic front (namely, the ability to find revenue-optimal auctions efficiently, regardless of their structure). Section 2.4 in Chapter 2 overviews the vast prior work on this problem.

From here, we turn to more general objectives, aiming to describe our complete reduction from mechanism to algorithm design. As we have already discussed, such a reduction is already achieved for welfare by the VCG mechanism [Vic6l, Cla7l, Gro73]. However the resulting re-duction is fragile with respect to approximation: if an approximately optimal welfare-maximizing algorithm is used inside the VCG auction, the resulting mechanism is not a truthful mechanism at all! As it is often NP-hard to maximize welfare in combinatorially rich settings, an

approximation-preserving' reduction would be highly desireable.

Question 5. Is there an approximation-preserving black-box reduction from mechanism to algo-rithm design, at least for the special case of welfare?

Interestingly, the answer to Question 5 depends on the existence of a prior. A recent series of works [PSSO8, BDF+10, Dobi1, DV12] shows that in prior-free settings, the answer is no. In contrast, a parallel series of works [HL1O, HKM 1I, BH 1I] shows that in Bayesian settings, the answer is yes. The takeaway message here is two-fold. First, accommodating approximation algorithms in reductions from mechanism to algorithm design is quite challenging even when an exact reduction is already known, as in the case of welfare optimization. And second, to have

5

1n this context, a reduction is approximation preserving if given black-box access to an a-approximation algo-rithm, the resulting mechanism is truthful, and also an a-approximation.

any hope of accommodating approximation, we must be in a Bayesian setting. Inspired by this, a natural question to ask is the following:

Question 6. Is there an approximation-preserving black-box reduction from mechanism design for any objective to algorithm design for that same objective in Bayesian settings?

Unfortunately, recent work shows that the answer to Question 6 is actually no [CIL12]. Specif-ically, no such reduction can possibly exist for makespan minimization. Have we then reached the limits of black-box reductions in mechanism design, unable to tackle objectives beyond welfare? Informal Theorem 2 below states this is not the case, as we circumvent the impossibility result of [CIL12] by perturbing the algorithmic objective.

Informal Theorem 2. In Bayesian settings, for every objective function 0 there is a

computation-ally efficient, approximation-preserving black-box reduction from mechanism design optimizing 0 to algorithm design optimizing 0+virtual welfare.

Informal Theorem 2 provides an algorithmic framework with which to design truthful mecha-nisms. This thesis continues by making use of this framework to design truthful mechanisms for two paradigmatic algorithm design problems. The first problem we study is that of job scheduling

on unrelated machines, a specific instance of makespan minimization (from Section 1.3) and the

same problem studied in Nisan and Ronen's seminal paper [NR99]. The term "unrelated" refers to the fact that the processing time of job

j

on machine i is unrelated to the processing time ofjob j on machine i' or job f on machine i. Section 4.3 in Chapter 4 overviews the wealth of prior work in designing mechanisms for makespan minimization. We only note here that the best known computationally efficient algorithm obtains a 2-approximation.

Informal Theorem 3. There is a computationally efficient truthful mechanism for job scheduling on unrelated machines that obtains a 2-approximation.

The second algorithmic problem we study is that offair allocation of indivisible goods. Here, a set of m indivisible gifts can be allocated to k children. Each child i has a value tij for gift

j,

and the total value of a child simply sums tij over all giftsj

awarded to child i. The goal is to find anassignment of gifts that maximizes the value of the least happy child (called the fairness). There is also a large body of work in designing both algorithms and mechanisms for fairness maximization, which we overview in Section 4.3 of Chapter 4. We only note here that for certain ranges of k, m, the best known computationally efficient algorithm is a min{(

Vk),

m - k + 1}-approximation.6Informal Theorem 4. There is a computationally efficient truthful mechanism for fair allocation of indivisible goods that achieves a min{ ( Nf/K), m - k + I}-approximation.

Finally, we turn our attention back to revenue maximization, aiming to make use of our frame-work to design computationally efficient auctions beyond additive agents. Unfortunately, it turns out that maximizing virtual welfare becomes computationally hard quite quickly in such settings. Does this mean that our approach falls short? Or perhaps instead that the original problem we were trying to solve was computationally hard as well. We show that indeed the latter is true, providing an approximation-sensitive7 reduction from designing algorithms for virtual welfare maximization to designing mechanisms for revenue. Combined with Informal Theorem 1, this brings our reduc-tion full circle, and in some sense shows that our approach is "tight" for revenue. Again making use of our framework, we provide the first unrestricted hardness of approximation result for rev-enue maximization. Specifically, we show that revrev-enue maximization is computationally hard for even one monotone submodular agent.8

Informal Theorem 5. It is NP-hard to approximately maximize revenue for one agent whose valuation function for subsets of m items is monotone submodular within any poly(m)

factor.

1.5

Tools and Techniques

Our technical contributions come on several fronts. Our first begins with an observation that all mechanism design problems (of the form studied in this thesis) can be solved by a gigantic linear

6We use the standard notation g(n) = O(f(n)) to mean that g(n) = O(f(n) log' f(n)) for some constant c.

7A reduction is approximation sensitive if whenever the reduction is given black-box access to an a-approximation,

the output is an f(a)-approximation, for some function f.

8

A set function f is monotone if f(S) f(T) for all S

g

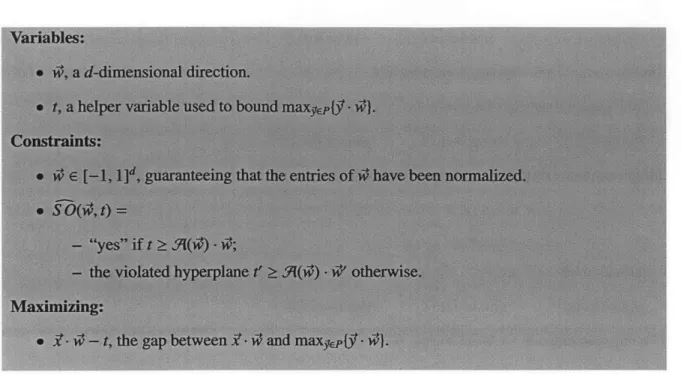

T. f is submodular if it satisfies diminishing returns, orprogram that simply stores a variable telling the mechanism exactly what to do on every possible input. Such a solution is of no actual use, either computationally or to learn anything about the structure of the mechanism, but it does provide a starting point for further improvement. From here, we reformulate this program and drastically shrink the number of variables (essentially by underspecifying mechanisms). After this reformulation, our task reduces to obtaining a separation oracle' for some convex region related to the original problem, and our focus shifts to purely algorithmic techniques to resolve this.

From here, our next technical contribution is an extension of the celebrated "equivalence of separation and optimization" framework developed by Gr6tschel, Lovisz, and Schrijver and in-dependently Karp and Papadimitriou [GLS81, KP80]. In 1979, Khachiyan showed that one can optimize linear functions computationally efficiently over any convex region given black-box ac-cess to a separation oracle for the same region using the ellipsoid algorithm [Kha79]. Remarkably, Gr6tschel, Lovisz, and Shrijver and Karp and Papadimitriou discovered that the converse is true too: one can obtain a computationally efficient separation oracle for any convex region given black-box access to an algorithm that optimizes linear functions over that same region.

Before continuing we should address a frequently raised question: If you can already opti-mize linear functions, why would you want a separation oracle? Who uses separation oracles for anything besides optimization? One compelling use is optimizing a linear function over the

in-tersection of two convex regions. Simply optimizing over each region separately does nothing

intelligent towards optimizing over their intersection. But separation oracles for each region sep-arately can be easily composed into a separation oracle for their intersection, which can then be plugged into Khachiyan's ellipsoid algorithm. There are indeed several other uses [GLS81, KP80], but this is the context in which we apply the framework.

Making use of the framework as-is, we are already able to find the revenue-optimal mechanism in multi-dimensional settings with additive agents, but the existing framework is not robust enough to accommodate combinatorially challenging problems that require approximation. To this end, we extend the equivalence of separation and optimization framework to accommodate traditional

ap-9

A separation oracle for a convex region P is an algorithm SO that takes as input a point I and outputs either "yes" if I E P or a hyperplane (0, t) such that I- 0 > t > maxyp{l - w} otherwise.

proximation algorithms, a new kind of bi-criterion approximation algorithm, and sampling error in Chapter 6. That is, sometimes one can only approximately optimize linear functions over a convex region and would like some meaningful notion of an approximate separation oracle. Unfortunately, when starting from an approximation algorithm, the reduction of [GLS81, KP80] does not produce a separation oracle, and the resulting algorithm may have quite erratic behavior. We call the re-sulting algorithm a weird separation oracle ("weird" because of the erratic behavior, "separation oracle" because at the very least this algorithm does sometimes say "yes" and sometimes output a hyperplane) and show that weird separation oracles are indeed useful for optimization. Specif-ically, we show first that running the ellipsoid algorithm with access to a weird separation oracle instead of a true separation oracle results in an algorithm that approximately optimizes linear func-tions, and second that any point on which the weird separation oracle says "yes" satisfies a strong notion of feasibility (stronger than simply being inside the original convex region). Proving these claims essentially boils down to analyzing the behavior of the ellipsoid algorithm when executed over a non-convex region.

Sometimes, however, one can't even approximately optimize linear functions over the desired convex region computationally efficiently, and we must further search for ways to circumvent this computational hardness. In many such cases, while obtaining a traditional approximation algo-rithm is NP-hard, it may still be computationally feasible to obtain a bi-criterion approximation algorithm. The specific kind of bi-criterion approximation we consider is where the algorithm is first allowed to scale some coordinates of the solution by a multiplicative factor of

p

before com-paring to a times the optimum (whereas a traditional a-approximation algorithm can be viewed as always havingp

= 1). Done carelessly, such bi-criterion approximation algorithms can becom-pletely useless for convex optimization. But done correctly, via what we call (a,fp)-approximation algorithms, such algorithms can replace traditional approximation algorithms within the equiva-lence of separation and optimization framework while only sacrificing an additional factor of

p

in performance. Section 4.2 in Chapter 4 contains a formal definition of such algorithms and more details surrounding this claim.computa-tionally hard to design for a convex region, but that algorithms with small additive error can be obtained via sampling. Such additive error isn't small enough to be handled by the original frame-work of [GLS81, KP80] directly, and the aforementioned techniques apply only to multiplicative error. To this end, we further strengthen the framework to accommodate sampling error in a con-sistent manner. Section 4.2 in Chapter 4 contains a formal definition of what we mean by sampling error and more details surrounding these claims.

Thus far we have only described our technical contributions towards developing our general framework for reducing mechanism to algorithm design. In order to apply our framework to the problems of makespan and fairness, we develop new bi-criterion approximation algorithms for the perturbed algorithmic problems output by our framework, namely makespan with costs and fair-ness with costs. These algorithms are based on existing algorithms for the corresponding problems without costs, and require varying degrees of additional insight.

Finally, we establish that our framework for revenue is "tight" in that the reduction from mech-anism to algorithm design holds both ways. The difficulty in completing this framework is that virtually nothing is known about the structure of approximately optimal mechanisms, yet estab-lishing computational intractibility requires one to somehow use such mechanisms to solve NP-hard problems. To this end, we define a restricted class of multi-dimensional mechanism design instances that we call compatible and show that compatible instances are both restricted enough for us to make strong claims about the structure of approximately optimal mechanisms, yet also rich enough within which to embed NP-hard problems. Making use of this framework, we establish the first unrestricted hardness of approximation result for revenue maximization. Note that prior work has only been successful in establishing hardness of approximation for the optimal determin-istic mechanism in settings where the optimal randomized mechanism can be found in polynomial

time [Bri08, PPl1].

Moreso than any individual technique, we feel that our greatest contribution is this framework itself. Designing complex truthful mechanisms is a really challenging task with little, if any, prece-dent. Even in very simple settings the optimal mechanism is often quite complex, and the design of such mechanisms requires tools that we simply don't have. In contrast, the algorithms community

has developed very sophisticated tools for use in the design of complex algorithms, and our frame-work allows for these tools to be used for the design of truthful mechanisms as well. Similarly, there is little precedent for hardness of approximation results in revenue maximization, while again the algorithms community has developed very sophisticated tools for hardness of approximation in traditional algorithm design. Our framework allows for these tools as well to be used in mechanism

design.

1.6

Organization

Chapter 2 provides the necessary background to understand our results on multi-dimensional mechanism design and formally defines the setting we study. Section 2.4 overviews related work and provides context for our multi-dimensional mechanism design results. In Chapter 3, we pro-vide a complete proof of our results on multi-item auctions (namely, a formal statement of Informal Theorem 1). The purpose of separating this result from the rest is to demonstrate the key ideas be-hind our approach without the technical tools required for full generality.

In Chapter 4, we extend the setting of Chapter 2 to accommodate the full generality of our complete mechanism to algorithm design reduction. Section 4.3 overviews the large body of work on Algorithmic Mechanism Design.

Chapter 5 provides a complete proof of Informal Theorem 2, a reduction from mechanism to algorithm design. The skeleton of the approach is very similar to that of Chapter 3, but more technical tools are required due to the increased generality.

Chapter 6 provides our algorithmic results related to linear programming, specifically extend-ing the celebrated equivalence of separation and optimization to accommodate various types of approximation error. Theorems from this chapter are used in the proofs of results from Chap-ters 3 and 5. We separate them here because these results are of independent interest outside of mechanism design.

Chapter 7 provides algorithmic results for makespan minimization and fairness maximization that can be leveraged within our framework to yield formal statements of Informal Theorems 3 and 4.

Chapter 8 provides our hardness of approximation result for revenue maximization (namely, a formal statement of Informal Theorem 5). We again derive this result as part of a larger framework that will be useful in showing other new hardness of approximation results. In Chapter 9, we provide conclusions and open problems.

Finally, in Appendix A we provide algorithmic and structural extensions of Border's Theo-rem. Both results provide an improved structural understanding of the mechanisms we design in Chapter 3 for revenue maximization. While quite important for this setting, we separate them here because there is no meaningful generalization of these results that applies in the setting of our general reduction.

Results are based on joint work with Yang Cai and Constantinos Daskalakis. Chapters 3 and Appendix A are based on [CDW12a]. Chapters 5 and 8 are based on [CDW13b]. Chapter 6 is

Chapter 2

Background

Here we provide the necessary background for our results on multi-dimensional mechanism design. We first introduce general concepts from mechanism design in Section 2.1. In Section 2.2, we define formally the setting considered in the following chapter. Section 2.3 provides the necessary preliminaries on linear programming. Finally, Section 2.4 provides an overview of related work on multi-dimensional mechanism design.

2.1

Concepts in Mechanism Design

Mechanism Design Setting. In this thesis, a mechanism design setting consists of a single

cen-tral designer and k self-interested agents. The designer has to choose some possible outcome x

from a set 9 of feasible outcomes, and may also charge prices to the agents.

Agent Preferences. Each agent has preferences over the various outcomes, and these preferences

are stored in their type. One can interpret the type of agent i as a function

ti

mapping outcomes in

T to values in R. We use T to denote the set of possible types that agents may have, and assume

that ITI is finite. Throughout this thesis, we assume that agents are quasi-linear and risk-neutral.

That is, the utility of an agent of type ti for the randomized outcome X when paying (a possibly random price with expectation) p is E,.x[ti(x)] - p. From now on, for notational convenience, we just write t(X) to mean E,,x[ti(X)]. We assume that agents are rational, in that they behave in away that maximizes utility.

Private Information. Each agent's type is private information known only to that agent.

How-ever, the designer and remaining agents have beliefs about each agent's possible type, modeled as a Bayesian prior. That is, the designer and remaining agents believe that agent i's type is sampled from a distribution D;. We denote by tra type profile (that is, a vector listing a type for each agent). We also assume that the beliefs over agents' types are independent, and denote by D = xD, the

designer's prior over the joint distribution of type profiles.

Mechanisms. In principle, the designer could set up an arbitrary system for the agents to play, involving randomization, multiple rounds of communication, etc. The agents would then use some strategy to participate in the system, and in the end an outcome would be selected and prices would be charged.

Nash Equilibria. How would utility-maximizing agents interact in such a system? Once the strategies of the remaining agents are fixed, each single agent faces an optimization problem: find the strategy maximizing utility. So if an agent knows exactly what strategies will be employed by the remaining agents, she should find the utility-maximizing strategy (called the best response), and use it. If each agent employs a strategy that is a best response to the strategies of other agents, then this strategy profile is a Nash Equilibrium, and no agent has any incentive to change their strategy. Nash showed that every finite complete information game (and therefore, any cor-responding mechanism, assuming that all agents know the types of all other agents) has a Nash Equilibrium [Nas5 1]. However, due to the uncertainty over preferences of other agents, the Nash Equilibrium is not the right solution concept for this setting.

Bayes-Nash Equilibria. We would still like to model agent behavior via best responses, but we must also take their uncertainty into account. In Bayesian settings (like ours), an agent's behavior isn't just a single strategy, but is instead a mapping from possible types to strategies. Fixing an agent's type, if that agent knows exactly what mapping will be employed by the remaining agents

(but still has incomplete information about their types), she should still play a strategy that is a best response. Overloading notation, one can then say a mapping is a best response if it maps every possible type to a strategy that is a best response. If each agent employs a mapping that is a best response to the mappings of other agents, then the collection of mappings is a Bayes-Nash

Equilirbium, and no agent has any incentive to change their mapping. Every finite incomplete

information game has a Bayes-Nash Equilibrium [OR94].

Revelation Principle. Even though we now have a meaningful solution concept with which to

predict agent behavior, it's not clear how one should find a Bayes-Nash Equilibrium in an arbitrary mechanism (in fact, it is often computationally hard [CSO8, DGPO9, CP14]), or further, how one should optimize over the space of all Bayes-Nash Equilibria of all mechanisms. To cope with this, Myerson introduced the revelation principle [Mye79, Mye8 1], which states that every incomplete information game can be simulated by a direct mechanism. A direct mechanism simply asks each agent to report a type, then directly selects an outcome (and charges prices) based on the reported types. Given any system, one can imagine assigning a consultant to each agent whose job it is to play that system in a way that maximizes the utility of his agent. The agent reports her type to her consultant so that the consultant knows her preferences, and then the consultants play the resulting system in a Bayes-Nash Equilibrium. Myerson's revelation principle essentially suggests viewing the consultants as part of the system. So the agents simply report a type to the new system, the consultants play the original system in a Bayes-Nash Equilibrium, and some outcome is directly chosen. Furthermore, Myerson showed that it is a Bayes-Nash Equilibrium in this new system for each agent to report their true type. All of this is to say that even though in spirit the designer could design an arbitrarily complicated system and expect agents to behave according to a Bayes-Nash Equilibrium of that system, he may without loss of generality instead design a direct mechanism where truthful reporting is a Bayes-Nash Equilibrium that selects the same outcome (and charges the same prices).

Direct Mechanisms. In our setting, a direct mechanism consists of two functions, a (possibly randomized) allocation rule and a (possibly randomized) price rule, which may be correlated. The

allocation rule takes as input a type profile itand (possibly randomly) outputs an allocation A(t). The price rule takes as input a profile tand (possibly randomly) outputs a price vector P(i). When the type profile tis reported to the mechanism M = (A, P), the (possibly random) allocation A(t) is

selected and agent i is charged the (possibly random) price Pi(t).

Truthful Mechanisms. A direct mechanism M = (A, P) is said to be Bayesian Incentive Com-patible (BIC) if it is a Bayes-Nash Equilibrium for every agent to report truthfully their type.

Formally, for all i, ti, t' E T we must have:

B,_y-D-_ [ti(A(ti; tLi)) - P(ti, L~i)] -2 BEt_,,[ At ;Li)) - P(ti', Li)].

That is, assuming that all other agents report truthfully their type, each agent's utility is maximized

by telling the truth. A direct mechanism is said to be Individually Rational (IR) if it is in every

agent's best interest to participate in the mechanism, no matter their type. Formally, for all i, ti E T

we must have:

Be~_[ti( A(ti ; L-i)) - P(ti, Li)] 0.

Dominant Strategy Incentive Compatibility (DSIC) is a more robust notion of truthfulness than

BIC. A direct mechanism is DSIC if it is in each agent's best interest to report truthfully their type, no matter what the other agents choose to report. Formally, for all i, ti, ti, Li we must have:

ti(A(ti; L~i)) - P(ti, Li) > ti( A(t j ; L~i)) - P(t jtLi).

BIC and DSIC are both frequently studied notions of truthfulness. DSIC is obviously a more robust solution concept, but BIC allows for a richer set of mechanisms. In other words, maybe the performance of the optimal BIC mechanism is significantly better than that of the optimal

DSIC mechanism, and it's unclear which is objectively "better:" If you believe that the agent's

will play according to a Bayes-Nash Equilibrium, then the BIC mechanism is better. If you only believe that agents are capable of playing dominant strategies, and that their behavior will be