Distributed Correlation Generators

by

Joseph Hui

Submitted to the Department of Electrical Engineering and Computer

Science

in partial fulfillment of the requirements for the degree of

Master of Science in Electrical Engineering and Computer Science

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

June 2018

© Massachusetts Institute of Technology 2018. All rights reserved.

Author

Department of Electrical Engineering and Computer Science

May 23, 2018

Certified by

Vinod Vaikuntanathan

Associate Professor of Electrical Engineering and Computer Science

Thesis Supervisor

Accepted by

Leslie A. Kolodziejski

Professor of Electrical Engineering and Computer Science

Chair, Department Committee on Graduate Students



Distributed Correlation Generators

by

Joseph Hui

Submitted to the Department of Electrical Engineering and Computer Science on May 21, 2018, in partial fulfillment of the requirements for the degree of

Master of Science in Electrical Engineering and Computer Science

Abstract

We study the problem of distributed correlation generators wherein $n$ parties wish to simulate unbounded samples from a joint distribution $D = D_1 \times D_2 \times \cdots \times D_n$, once they are initialized using randomness sampled from a (possibly different) correlated distribution. We wish to ensure that these samples are computationally indistinguishable from i.i.d. samples from $D$. Furthermore, we wish to ensure security even against an adversary who corrupts a subset of the parties and obtains their internal (initialization) state.

Our contributions are three-fold. First, we define the notion of distributed (non-interactive) correlation generators and show its connection to other cryptographic primitives. Secondly, assuming the existence of indistinguishability obfuscators, we show a construction of distributed correlation generators for a large and natural class of joint distributions that we call conditionally sampleable distributions. Finally, we show a construction for the subclass of additive-spooky distributions assuming private constrained pseudorandom functions (private CPRFs).

Thesis Supervisor: Vinod Vaikuntanathan

Title: Associate Professor

Contents

1 Introduction

2 Distributed Correlation Generators

2.1 Distributed Correlation Generators

3 Relation to Other Primitives

3.1 Relation to Other Primitives

4 Additive-spooky 2-player distributions

4.1 Additive-spooky 2-player distributions

4.1.1 Warmup: oblivious transfer

4.1.2 DCGs for general additive-spooky distributions

5 Distributed Correlation Generators from IO

5.1 Distributed Correlation Generators from IO

A Preliminaries and Definitions

A.0.1 Constrained Pseudo-Random Functions

A.0.2 Indistinguishability Obfuscation

A.0.3 Function Secret Sharing

A.1 Proof of Theorem 5.1.1

A.1.1 $\mathcal{H}_1$: changing the sampling order

A.1.2 $\mathcal{H}_2$: Removing unnecessary keys

A.1.3 $\mathcal{H}_3$: Fresh-sampling oracle

A.1.4 $\mathcal{H}_4$: Restoring the original programs

List of Figures

2-1 The sampling procedure JointSamplen(1A) defined by the permutation

I I. ... ... 16

4-1 The constraint for the additive-spooky scheme . . . . 26

4-2 The seed generation for the additive-spooky scheme . . . . 27

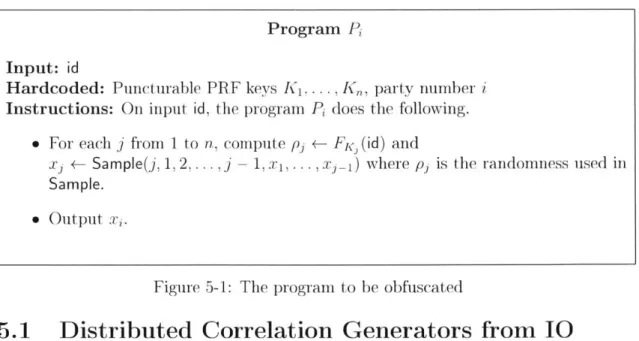

5-1 The program to be obfuscated . . . . 32

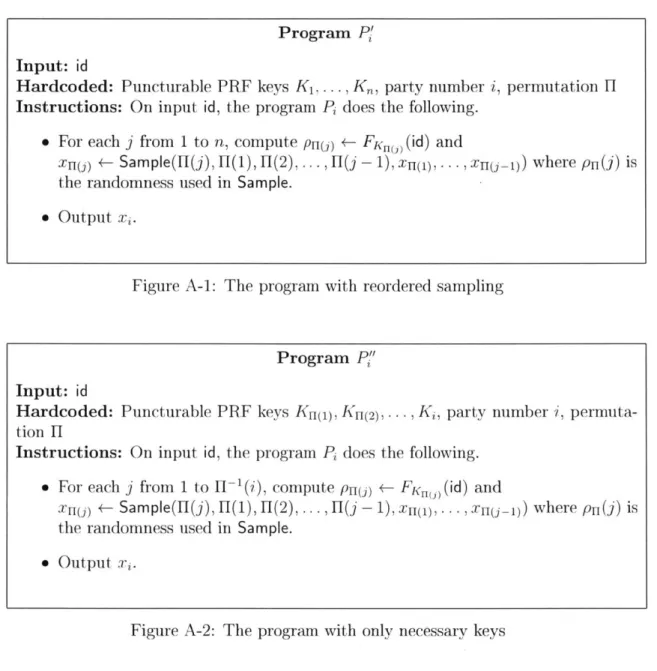

A-i The program with reordered sampling . . . . 36

A-2 The program with only necessary keys . . . . 36

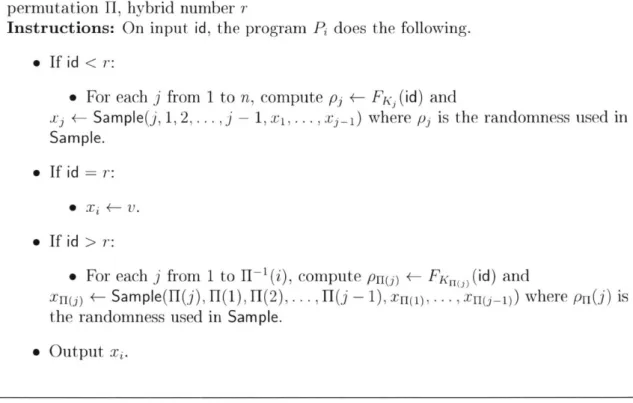

A-3 Switching from one ordering to another . . . . 38

Chapter 1

Introduction

We are interested in understanding the class of joint distributions $D_1 \times \cdots \times D_n$ that $n$ parties can simulate, given randomness sampled from a correlated distribution. Several works have explored this problem in various settings:

* Secure sampling for two parties [16, 15, 17]: two parties output samples of a pair of jointly distributed random variables such that neither party learns more about the other party's output than what its own output reveals.

* Non-interactive simulation for two parties [9, 13, 11]: the parties need to simulate $D_1 \times D_2$ without communicating with each other.

* Complexity of correlated randomness in secure multiparty computation [12]: given a small amount of correlated randomness, $n$ parties need to securely compute a function $f$ on their private inputs.

We continue in this line of research. Specifically, we study the following question: which class of joint distributions $D_1 \times \cdots \times D_n$ can $n$ parties non-interactively and securely simulate using a small amount of correlated randomness? We consider a relaxed requirement: we do not require perfect simulation of $D_1 \times \cdots \times D_n$, only that the output distribution of the parties be computationally close to $D_1 \times \cdots \times D_n$. This means that no probabilistic polynomial-time distinguisher should be able to tell apart a sample from $D_1 \times \cdots \times D_n$ from the joint outputs of the parties. We note that all the above works offer only a partial answer to this question. For instance, the works on secure sampling [16, 15, 17] offer interactive solutions. The works on non-interactive simulation [9, 13, 11], however, do not have any security guarantees.

We address the aforementioned question by introducing the notion of distributed correlation generators (DCG). This notion corresponds to a joint distribution $D_1 \times \cdots \times D_n$ which is efficiently sampleable. It consists of the following two probabilistic polynomial-time algorithms:

* Seed Generator, SeedGen$(1^\lambda, 1^n)$: On input $\lambda$, the security parameter, and $n$, the number of parties, it outputs the initial seeds $s_1, \ldots, s_n$. The $i$th party gets the seed $s_i$.

* Instance Generator, InstGen$(s_i, i, \mathsf{id})$: On input $s_i$, the seed of the $i$th party, and a session identifier $\mathsf{id}$, it outputs a sample $x_{\mathsf{id},i}$. The $i$th party in a session $\mathsf{id}$ outputs the sample $x_{\mathsf{id},i}$.

Ideally, we require that the above scheme satisfies the following correctness guarantee: the joint output distribution of the parties in every session should effectively simulate sampling from $D_1 \times \cdots \times D_n$ and, in particular, be independent of all the sessions so far. This is formalized by requiring that the output distribution of the parties in every session be computationally indistinguishable from a fresh sample drawn from $D_1 \times \cdots \times D_n$.

Satisfying correctness alone is insufficient for many of our applications. In particular, we need to handle the case when the distinguisher is given a subset of seeds $\{s_i\}_{i \in S}$ for some subset $S \subseteq [n]$. Indeed, achieving correctness alone is easy: all the parties get a PRF key $K$, and in session $\mathsf{id}$, the $i$th party samples from $D_1 \times \cdots \times D_n$ using randomness $\mathrm{PRF}(K, \mathsf{id})$ and outputs the $i$th coordinate of this sample. Defining security involves some challenges and, in particular, it restricts the type of joint distributions $D_1 \times \cdots \times D_n$ that distributed correlation generators can simulate. We discuss this next.
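The correctness-only construction above can be sketched in a few lines. This is only an illustration under stated assumptions: HMAC-SHA256 stands in for the PRF, Python's `random.Random` stands in for the sampler's random tape, and the two-party distribution (a uniform bit and its complement) as well as the names `prf`, `sample_joint`, `seed_gen` and `inst_gen` are hypothetical choices made for the example.

```python
import hashlib
import hmac
import random
import secrets


def prf(key: bytes, session_id: int) -> int:
    """Stand-in PRF (assumption): HMAC-SHA256 of the session id, as an integer."""
    digest = hmac.new(key, session_id.to_bytes(8, "big"), hashlib.sha256).digest()
    return int.from_bytes(digest, "big")


def sample_joint(rng: random.Random) -> tuple:
    """Hypothetical toy distribution D_1 x D_2: a uniform bit and its complement."""
    a = rng.getrandbits(1)
    return (a, 1 - a)


def seed_gen(n: int) -> list:
    """Every party receives the same PRF key K."""
    key = secrets.token_bytes(32)
    return [key for _ in range(n)]


def inst_gen(seed: bytes, i: int, session_id: int) -> int:
    """Party i re-derives the whole joint sample and keeps only coordinate i."""
    rng = random.Random(prf(seed, session_id))
    return sample_joint(rng)[i]
```

Because every seed equals $K$, any single corrupted party can recompute every other party's output, which is exactly why this construction gives correctness but no security.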

Defining Security. For simplicity, we illustrate our definition in the case of two parties. Intuitively, our definition gives us the following security guarantee. Suppose an adversary corrupts party 1 so that she can see the sequence of samples $a_1, a_2, \ldots$ that party 1 outputs. We require that from her view, the sequence of samples $b_1, b_2, \ldots$ that party 2 outputs is computationally indistinguishable from fresh samples $b'_1, b'_2, \ldots$, where each $b'_i$ is sampled independently from the distribution $D_2$ conditioned on $D_1 = a_i$. Although this guarantee can in principle be defined for arbitrary distributions, we consider conditionally sampleable distributions, where the conditional distribution of $D_2$ conditioned on $D_1 = a$ (resp. $D_1$ conditioned on $D_2 = b$) is efficiently sampleable.

Connections to FSS and Additive-Spooky Encryption. We start by observing connections between distributed correlation generators and other primitives for a class of distributions called additive-spooky distributions [8]. The additive-spooky distribution $D_1 \times D_2$ associated with a function $f$ is defined as follows: sample $x \leftarrow X$ and $y \leftarrow Y$ uniformly at random, and $z, w$ uniformly at random such that $z \oplus w = f(x, y)$. The output of $D_1$ is $(x, z)$ and that of $D_2$ is $(y, w)$. We observe that DCGs can be constructed from function secret-sharing (FSS) schemes, following [3, 4]. The application of FSS towards generating certain correlated distributions is similar to a portion of [2], which uses homomorphic secret sharing (HSS) to generate certain correlated distributions, such as bilinear correlations. These connections are summarized in Section 3.1.

Constructions for Additive-Spooky Distributions. Our main contribution is a construction of DCGs for 2-party additive-spooky distributions (corresponding to a Boolean function $f$) starting from any (single-key) private constrained PRF. Roughly speaking, our construction proceeds as follows. Alice and Bob each generate their corresponding inputs to $f$ using independent PRF keys. Alice also receives a private CPRF key, and Bob receives a derived key with a particular constraint (which depends on $f(x, y)$ and contains Alice's and Bob's hardcoded PRF keys). This allows Bob to learn something that depends on $f(x, y)$: in particular, he either learns the same bit as Alice, or a random bit. When we XOR these two bits together, we get either 0 (if Bob learns the same bit as Alice), or a random bit (otherwise). By adding more CPRF keys, we can amplify this so that the XOR is either 0, or 1 with high probability, depending on whether $f(x, y)$ is 0 or 1. However, Alice still learns nothing (because she does not even see the derived key), and Bob also learns nothing (because the key hides the constraint). For the detailed construction and proof, the reader is referred to Section 4.1.

[…] number of random oblivious transfer (OT) correlations using a fixed amount of correlated randomness. Indeed, in the special case of random OT, we are able to simplify the construction somewhat, and relax the assumption to just constrained PRFs (without privacy). Furthermore, building on the standard transformation from random OT to OT, we show how to achieve $n$ instances of OT with communication $3n + o(n)$, which improves on the work of Boyle, Gilboa and Ishai [5], who achieve $4n + o(n)$, albeit at the price of achieving the weaker indistinguishability security.

Constructions for General Conditionally Sampleable Distributions. Finally, we show how to construct DCGs for arbitrary conditionally sampleable distributions assuming the existence of secure indistinguishability obfuscation schemes. For more details, we refer the reader to Section 5.1.

Chapter 2

Distributed Correlation Generators

2.1 Distributed Correlation Generators

We define the notion of a distributed correlation generator (DCG) for a distribution $D$. It consists of two probabilistic polynomial-time algorithms, SeedGen and InstGen, defined below.

* Seed Generator, SeedGen$(1^\lambda, 1^n)$: On input $\lambda$, the security parameter, and $n$, the number of parties, it outputs the initial seeds $s_1, \ldots, s_n$.

* Instance Generator, InstGen$(s_i, i, \mathsf{id})$: On input $s_i$, the seed of the $i$th party, and a session identifier $\mathsf{id}$, it outputs a sample $x_{\mathsf{id},i}$.

We present our security definitions for a class of distributions that we call conditionally samplable distributions. A conditionally samplable distribution is a joint distribution on variables $(X_1, \ldots, X_n)$ which admits a polynomial-time sampler Sample. This sampler allows efficiently sampling from the distribution one variable at a time, in any order, to build up a sample from the joint distribution.

We present the formal definition below. We think of Sample in the definition as taking an index $j \in [n]$ to sample next, indices $i_1, \ldots, i_m \in [n]$ that have already been sampled, and the values $(x_{i_1}, \ldots, x_{i_m})$ that were already sampled, and outputting the next value $x_j$ sampled according to $X_j$ conditioned on the previously sampled values.

Definition 2.1.1 (Conditionally Sampleable Distributions). A distribution $D$ defined on random variables $X_1, \ldots, X_n$ is a conditionally samplable distribution if there exists a PPT algorithm Sample$(j, i_1, \ldots, i_m, x_{i_1}, \ldots, x_{i_m})$ such that the following holds for every permutation $\Pi$ on $[n]$:

$D \equiv \mathrm{JointSample}_\Pi(1^\lambda)$,

where JointSample$_\Pi$ is defined in Figure 2-1.

For $i$ in $1, \ldots, n$:

$x'_{\Pi(i)} \leftarrow$ Sample$(\Pi(i), \Pi(1), \Pi(2), \ldots, \Pi(i-1), x'_{\Pi(1)}, \ldots, x'_{\Pi(i-1)})$

Output $(x'_1, \ldots, x'_n)$

Figure 2-1: The sampling procedure JointSample$_\Pi(1^\lambda)$ defined by the permutation $\Pi$.
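The procedure of Figure 2-1 can be illustrated on a toy distribution. The sketch below assumes a hypothetical example distribution that is not taken from the thesis: $N = 3$ bits that are uniform subject to XOR-ing to zero. Its conditional sampler returns a fresh bit until only one coordinate is left, and then returns the bit forced by the parity constraint. Indices are 0-based, and the explicit `rng` argument is an addition made for reproducibility.

```python
import random
from functools import reduce
from itertools import permutations

N = 3  # number of parties in the toy example (assumption)


def sample(j, done_indices, done_values, rng):
    """Toy conditional sampler for 'N uniform bits that XOR to 0'.

    Any N-1 coordinates of this distribution are independent uniform bits;
    the last coordinate is determined by the others.
    """
    if len(done_indices) < N - 1:
        return rng.getrandbits(1)
    return reduce(lambda a, b: a ^ b, done_values, 0)


def joint_sample(perm, rng):
    """JointSample for the ordering perm, a tuple containing 0..N-1 once each."""
    x = {}
    for i in range(len(perm)):
        previous = perm[:i]
        x[perm[i]] = sample(perm[i], previous, [x[p] for p in previous], rng)
    return tuple(x[k] for k in sorted(x))
```

Whatever ordering is passed as `perm`, the output satisfies the parity constraint, which is the sense in which the distribution can be built up one variable at a time in any order.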

We present the correctness and security definitions for distributed correlation generators associated with conditionally samplable distributions. Roughly speaking, a distributed correlation generator associated with a conditionally samplable distribution $D$ is correct if, for any session ID and $(s_1, \ldots, s_n)$ generated by SeedGen, the joint distribution of the parties' outputs is computationally indistinguishable from $(x_1, \ldots, x_n)$ sampled according to $D$. In terms of security, we require that an adversary, given a (non-empty) subset $\{s_i\}_{i \in S}$ of $\{s_1, \ldots, s_n\}$, should not be able to distinguish $\{\mathrm{InstGen}(s_i, i, \mathrm{ID})\}_{i \notin S}$ from $\{x_i\}_{i \notin S}$, where $\{x_i\}_{i \notin S}$ is sampled according to $D$ conditioned on the samples that the adversary has access to. If we allow $S$ to be empty, then this definition subsumes the correctness definition, so we present a single definition for both.

Definition 2.1.2 (Correctness and security of DCGs). Let $n \geq 2$. Consider a distributed correlation generator DCG = (SeedGen, InstGen) for a conditionally samplable distribution $D$. The distributed correlation generator is secure if for every probabilistic polynomial-time adversary $\mathcal{A}$ and every set $S \subseteq [n]$, the adversary cannot distinguish between the following two experiments.

* In the first experiment, in the setup phase, the adversary receives $s_i$ for all $i \in S$, where $(s_1, \ldots, s_n) \leftarrow$ SeedGen$(1^\lambda, 1^n)$. In the sampling phase, the adversary makes queries of the form $(i, \mathsf{id})$, where $i \in [n]$, to an oracle that computes InstGen$(s_i, i, \mathsf{id})$.

* In the second experiment, the adversary receives the same seeds $s_i$. However, in the sampling phase, she makes queries to an oracle that computes fresh samples conditioned on the seeds of corrupted parties. That is, for a query $(i, \mathsf{id})$, if $i \in S$, the oracle will answer with InstGen$(s_i, i, \mathsf{id})$. But if $i \notin S$, the oracle will answer with Sample$(i, i_1, i_2, \ldots, i_m, x_{i_1}, x_{i_2}, \ldots, x_{i_m})$, where for every $j \in [m]$ either $i_j \in S$, in which case $x_{i_j} = \mathrm{InstGen}(s_{i_j}, i_j, \mathsf{id})$, or $i_j \notin S$, in which case $x_{i_j}$ […]

Chapter 3

Relation to Other Primitives

3.1 Relation to Other Primitives

Distributed correlation generators are closely related to several other primitives. In this section, we will consider a special class of distributions called "additive-spooky" distributions, which are defined as follows.

Definition 3.1.1 (Additive-spooky distributions). Consider a binary function $f$ on two inputs and two efficiently samplable distributions $X$ and $Y$. The additive-spooky distribution associated with $f, X, Y$ is defined by the following sampling procedure: sample $x$ from $X$ and $y$ from $Y$, plus a random bit $b$. The output of the sample is $((x, b), (y, b \oplus f(x, y)))$.
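The sampling procedure of Definition 3.1.1 translates directly into code. In the sketch below the function name `sample_additive_spooky` and the helpers `bit_and` and `random_bit` are illustrative choices, and `secrets.randbits` stands in for the source of the random bit $b$.

```python
import secrets


def sample_additive_spooky(f, sample_x, sample_y):
    """Return ((x, b), (y, b XOR f(x, y))) for fresh x, y and a random bit b."""
    x = sample_x()
    y = sample_y()
    b = secrets.randbits(1)
    return (x, b), (y, b ^ f(x, y))


def bit_and(x, y):
    """Example binary function f (an assumption made for the demonstration)."""
    return x & y


def random_bit():
    """Example input distribution: a single uniform bit."""
    return secrets.randbits(1)
```

For instance, `sample_additive_spooky(bit_and, random_bit, random_bit)` returns two pairs whose second components XOR to the AND of the first components.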

Note that without loss of generality, we may assume $X$ and $Y$ are uniformly random, because $f$ itself can interpret these as random tapes used to efficiently sample the corresponding distributions.

We will discuss various constructions and implications of distributed correlation generators for additive-spooky distributions. 2-party additive-spooky DCGs are implied by 2-party function secret sharing ([3]), which can be constructed from the DDH or factoring assumptions (or, as we will show, from single-key private CPRFs, based on the LWE assumption). In turn, AFS-spooky encryption implies FSS, not only for 2 parties but for any number of parties (see Section 6.3 of [8]). AFS-spooky encryption can be constructed based on LWE (Theorem 2 of [8]). These relationships are illustrated below.

[Figure: diagram relating the following nodes: LWE; AFS-spooky Encryption (2 parties); DDH, Factoring; Single-key Private CPRF; Function Secret Sharing (2 parties); Distributed Correlation Generators (2 parties).]

Theorem 3.1.2. Assuming two-party FSS and PRFs exist, there exists a distributed correlation generator for any two-party additive-spooky distribution.

Proof. Let $f$ be a binary function (corresponding to the additive-spooky distribution of interest). Consider an FSS scheme (Gen, Eval) for $f$ with the natural output domain $\{0, 1\}$ and output decoder $\oplus$ (bit XOR). Let $g$ be a PRF.

Construction: We will construct a distributed correlation generator. The seed and instance generators are as follows.

* SeedGen$(1^\lambda, 1^n)$: Sample PRF keys $K_1, K_2$. Let $h$ be defined as $h(\mathsf{id}) = f(g_{K_1}(\mathsf{id}), g_{K_2}(\mathsf{id}))$. Compute FSS keys $(k_1, k_2) = \mathrm{Gen}(1^\lambda, h)$. Output $s_i = (K_i, k_i)$.

* InstGen$(s_i, i, \mathsf{id})$: Output $(g_{K_i}(\mathsf{id}), \mathrm{Eval}(i, k_i, \mathsf{id}))$.

We now need to analyze the two cases in the security definition (one corrupted party and correctness).

One corrupted party. Without loss of generality, suppose that the adversary corrupts the first party. We consider a series of hybrids below.

Hybrid $\mathcal{H}_0$: (real scheme) In this hybrid, the adversary receives $s_1$ and oracle access to $(g_{K_2}(\mathsf{id}), \mathrm{Eval}(2, k_2, \mathsf{id}))$.

Hybrid $\mathcal{H}_1$: (correct additive-spooky output) In the oracle, we replace the second party's bit output with the bit $b$ that makes the additive-spooky output correct. In this hybrid, when the oracle is queried on the second party's bit, it returns $h(\mathsf{id}) \oplus \mathrm{Eval}(1, k_1, \mathsf{id})$.

Claim 1. $\mathcal{A}$ cannot distinguish between $\mathcal{H}_0$ and $\mathcal{H}_1$.

Proof. By the correctness of FSS, $\mathrm{Eval}(1, k_1, \mathsf{id}) \oplus \mathrm{Eval}(2, k_2, \mathsf{id}) = h(\mathsf{id})$, therefore $\mathrm{Eval}(2, k_2, \mathsf{id}) = h(\mathsf{id}) \oplus \mathrm{Eval}(1, k_1, \mathsf{id})$. In other words, $\mathcal{H}_0 = \mathcal{H}_1$.

Hybrid $\mathcal{H}_2$: (fresh key) In the oracle, we sample a fresh key $K'_2$ and use it to compute the second party's input to $f$. Instead of generating the input as $g_{K_2}(\mathsf{id})$, the oracle replies with $g_{K'_2}(\mathsf{id})$ (the second party's bit is computed to ensure correctness, as before).

Claim 2. $\mathcal{A}$ cannot distinguish between $\mathcal{H}_1$ and $\mathcal{H}_2$.

Proof. Suppose not. Then we can break the security of FSS. An equivalent description of this hybrid is that the oracle replies with $g_{K_2}(\mathsf{id})$, but the first party receives $k'_1$, which was computed from $K_1, K'_2$. Consider two functions $h(\mathsf{id}) = f(g_{K_1}(\mathsf{id}), g_{K_2}(\mathsf{id}))$ and $h'(\mathsf{id}) = f(g_{K_1}(\mathsf{id}), g_{K'_2}(\mathsf{id}))$. Our adversary asks the FSS challenger for a key corresponding either to $h$ or $h'$, then simulates the oracle using $K_2$ and computing $b$ as previously described. If the key corresponds to $h$, then this experiment is hybrid $\mathcal{H}_1$; otherwise, it is $\mathcal{H}_2$. Therefore, we break the security of FSS, a contradiction. □

Hybrid $\mathcal{H}_3$: (random input) In the oracle, we replace the second party's input to $f$ with a random bitstring. Instead of generating the input as $g_{K_2}(\mathsf{id})$, the oracle samples a random value.

Claim 3. $\mathcal{A}$ cannot distinguish between $\mathcal{H}_2$ and $\mathcal{H}_3$.

Proof. Suppose not. Then we can break the security of the PRF. We can generate $K_1, K_2, k_1$ ourselves, query the challenger for either the PRF or truly random output, and then compute $b$ as before. If we query a PRF, this experiment is $\mathcal{H}_2$; otherwise it is $\mathcal{H}_3$.

$\mathcal{H}_3$ corresponds to a fresh-sampling oracle, so this finishes the proof.

Correctness. As described in Definition 2.1.2, this is just the case when neither party is corrupted. We again consider a series of hybrids.

Hybrid $\mathcal{H}_0$: (real scheme) In this hybrid, the adversary receives no seeds and has oracle access to both parties' outputs, $(g_{K_i}(\mathsf{id}), \mathrm{Eval}(i, k_i, \mathsf{id}))$ for $i \in \{1, 2\}$.

Hybrid $\mathcal{H}_3$: (second party random) We go directly from $\mathcal{H}_0$ to $\mathcal{H}_3$, in which the second party's input to $f$ is random, and her bit is chosen to make the additive-spooky output correct, just as in Hybrid $\mathcal{H}_3$ of the previous argument. Since in this case the adversary only has oracle access to party 1 instead of $K_1, k_1$, this is a strictly weaker claim than the previous argument from $\mathcal{H}_0$ to $\mathcal{H}_3$.

Hybrid $\mathcal{H}_4$: (random bit for first party) In the oracle, we replace the first party's output bit with a random bit.

Claim 4. $\mathcal{A}$ cannot distinguish between $\mathcal{H}_3$ and $\mathcal{H}_4$.

Proof. Suppose not. By Theorem 9 in [3], the output share function is a PRF. Therefore, this breaks the security of the PRF, a contradiction.

Hybrid $\mathcal{H}_5$: (random output for first party) As in $\mathcal{H}_3$, we replace the first party's output with a random one. The argument is the same as in Hybrid $\mathcal{H}_3$. □
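The construction in the proof of Theorem 3.1.2 can be exercised end to end with a deliberately naive FSS stand-in. The sketch below assumes a small session-id domain and shares the whole truth table of $h$ additively: $k_1$ is a random table and $k_2$ is $k_1$ XOR-ed with the table of $h$. This is a valid but non-succinct sharing, and it is not the FSS scheme of [3]. HMAC-SHA256 truncated to one bit stands in for the PRF $g$, and $f$ is taken to be AND purely for illustration. All function names are hypothetical.

```python
import hashlib
import hmac
import secrets

ID_BITS = 8  # toy session-id domain of size 2**ID_BITS (assumption)


def g(key: bytes, session_id: int) -> int:
    """Stand-in one-bit PRF g_K(id), built from HMAC-SHA256."""
    digest = hmac.new(key, session_id.to_bytes(4, "big"), hashlib.sha256).digest()
    return digest[0] & 1


def f(x: int, y: int) -> int:
    """Example binary function defining the additive-spooky distribution."""
    return x & y


def fss_gen(h):
    """Toy FSS Gen: additively share the truth table of h over the id domain."""
    k1 = [secrets.randbits(1) for _ in range(2 ** ID_BITS)]
    k2 = [k1[sid] ^ h(sid) for sid in range(2 ** ID_BITS)]
    return k1, k2


def fss_eval(i: int, k: list, session_id: int) -> int:
    """Toy FSS Eval: look up the party's share; the shares XOR to h(id)."""
    return k[session_id]


def seed_gen():
    """SeedGen: s_i = (K_i, k_i) with h(id) = f(g_{K_1}(id), g_{K_2}(id))."""
    K1, K2 = secrets.token_bytes(32), secrets.token_bytes(32)
    k1, k2 = fss_gen(lambda sid: f(g(K1, sid), g(K2, sid)))
    return (K1, k1), (K2, k2)


def inst_gen(seed, i: int, session_id: int):
    """InstGen: output (g_{K_i}(id), Eval(i, k_i, id))."""
    K, k = seed
    return g(K, session_id), fss_eval(i, k, session_id)
```

In every session the two output bits XOR to $f$ applied to the two PRF outputs, which is the correctness property used in Claim 1.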

Chapter 4

Additive-spooky 2-player distributions

4.1 Additive-spooky 2-player distributions

In this section, we construct distributed correlation generators for a special class of distributions, additive-spooky distributions, as defined in Definition 3.1.1. We will first construct DCGs for a special case, oblivious transfer, starting from any constrained PRF. As a corollary, we will show an OT protocol that uses amortized $3 + o(1)$ bits of communication per OT. Finally, we extend this construction to a construction for any additive-spooky distribution.

4.1.1 Warmup: oblivious transfer

We note that oblivious transfer is a special case of an additive-spooky distribution. In oblivious transfer, Alice gets $b_0, b_1$, while Bob gets $c, b_c$. Consider the additive-spooky distribution associated with the function $f$ which is $0$ if $c = 1$, and $b_0 \oplus b_1$ otherwise, and with $X$ and $Y$ being random bits. By a standard transformation, we can take this distribution to the OT distribution.

Theorem 4.1.1. Assuming constrained PRFs exist, there exists a distributed correlation generator for the OT distribution.

Proof. Let (KeyGen, Eval, Constrain, ConstrainEval) be a constrained PRF with output length 1. Our construction proceeds as follows.

* The SeedGen algorithm samples a PRF seed $\sigma$, another PRF seed $s$, and a constrained key $\sigma_C$, where the circuit $C$ (dependent on $s$) is defined as follows: $C(i \| y) = 1$ if and only if $\mathrm{Eval}(s, i) = y$. It then outputs the two seeds $\sigma$ and $(\sigma_C, s)$.

* The InstGen algorithm for the first party, Alice, on session $\mathsf{id}$, outputs $(\mathrm{Eval}(\sigma, \mathsf{id} \| 0), \mathrm{Eval}(\sigma, \mathsf{id} \| 1))$. The InstGen algorithm for the second party, Bob, on session $\mathsf{id} = i$, computes $y_i = \mathrm{Eval}(s, i)$ and outputs $(y_i, \mathrm{ConstrainEval}(\sigma_C, i \| y_i))$ ($y_i$ is the bit determining which bit Bob receives).

For the security proof, we analyze three cases, corresponding to each set of corrupted parties in the security definition (the case when all parties are corrupted is vacuously true).

Alice is corrupted. We will contradict the security of the PRF (see Definition A.0.1) by constructing an adversary $\mathcal{A}'$ to win the PRF security game. First, $\mathcal{A}'$ samples a PRF key $\sigma$ as the setup would. He then acts as the challenger for $\mathcal{A}$, starting by giving her the PRF key $\sigma$. For each oracle query $i$ that $\mathcal{A}$ issues, $\mathcal{A}'$ queries his own oracle on $i$ and receives a bit $y_i$, which is either a uniformly random value or the value of $\mathrm{Eval}(s, i)$ (depending on $b_2$). Then $\mathcal{A}'$ responds to $\mathcal{A}$'s query with the response $(y_i, \mathrm{Eval}(\sigma, i \| y_i))$.

To prove security, we consider $\mathcal{A}$'s perspective. If $b_2 = 1$ (i.e. $\mathcal{A}'$ receives uniformly random responses), then the response to $\mathcal{A}$'s query is one of her two bits selected at random. This corresponds to the conditionally sampled distribution. On the other hand, if $b_2 = 0$ (i.e. $\mathcal{A}'$ receives the true value of $\mathrm{Eval}(s, i)$), then the response to $\mathcal{A}$'s query is the same as the real distribution. In other words, $\mathcal{A}'$ wins the security game with the same advantage as $\mathcal{A}$, and we are done.

Bob is corrupted. We will contradict the security of the constrained PRF. Our adversary $\mathcal{A}'$ samples a PRF key $s$, then computes $C$ as described in the setup. Then $\mathcal{A}'$ specifies a function $f(x) = C(x)$ and receives the seed $\sigma_C$ from the challenger. Now $\mathcal{A}'$ acts as the challenger, giving $s$ and $\sigma_C$ to $\mathcal{A}$. To answer a query $i$, $\mathcal{A}'$ first computes $y_i = \mathrm{Eval}(s, i)$, and then queries its own oracle $\mathcal{O}$ on $i \| \bar{y}_i$ (the constrained input). $\mathcal{A}'$ then responds to the query with $(\mathrm{ConstrainEval}(\sigma_C, i \| y_i), \mathcal{O}(i \| \bar{y}_i))$ (in the appropriate order).

To prove security, we consider $\mathcal{A}$'s perspective. If $b_2 = 1$ (i.e. $\mathcal{A}'$ receives uniformly random responses on constrained queries), then the response to $\mathcal{A}$'s query is the bit that he already knows, and a random bit. This corresponds to the conditionally sampled distribution. On the other hand, if $b_2 = 0$, then the response to $\mathcal{A}$'s query is $(\mathrm{ConstrainEval}(\sigma_C, i \| y_i), \mathrm{Eval}(\sigma, i \| \bar{y}_i))$, which is computationally indistinguishable from the real distribution (since ConstrainEval and Eval are indistinguishable on unconstrained inputs). In other words, $\mathcal{A}'$ wins the security game with negligibly close to the same advantage as $\mathcal{A}$, and we are done.

Correctness. As described in Definition 2.1.2, this is just the case when neither party is corrupted. We will proceed with three hybrids.

Hybrid $\mathcal{H}_0$: The real game.

Hybrid $\mathcal{H}_1$: We replace Bob's output with a freshly-sampled output, as in the argument in the case of Alice being corrupted (this is a strictly weaker claim, since the adversary only has oracle access to Alice's output, not her seed).

Hybrid $\mathcal{H}_2$: We replace Alice's output with a freshly-sampled output. Security follows by the PRF security of $\sigma$.

Now we show how to obtain $n$ OTs with amortized $3 + o(1)$ bits of communication per OT, that is, $3n + o(n)$ total communication, via a standard transformation. From the random OT, the sender starts with $x_0, x_1$ and the receiver starts with $r, x_r$. The sender also has her actual bits $y_0, y_1$, and the receiver has $s$ and wants to get $y_s$. The receiver sends $r \oplus s$, and the sender replies with $y_0 \oplus x_{r \oplus s}$ and $y_1 \oplus x_{1 \oplus r \oplus s}$; the receiver XORs the $s$th of these two bits with $x_r$, which is $y_s$. This uses $3n$ bits of communication. However, we still need to instantiate the trusted party. We can use a 2PC protocol in order to compute SeedGen and initialize the protocol. Assuming CPRFs with subexponential security, the security parameter can be (say) logarithmic in the number of sessions, so that the total communication in the 2PC protocol is at most polylogarithmic (in particular, $o(n)$).
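The three-bit exchange can be checked mechanically. The sketch below implements the textbook derandomization of a random OT; the exact form of the sender's two reply bits is an assumption where the text above is not explicit, and the function names are hypothetical. A locally sampled random OT stands in for one session of the distributed correlation generator.

```python
import secrets


def random_ot():
    """One random OT correlation: sender gets (x0, x1), receiver gets (r, x_r)."""
    x0, x1, r = (secrets.randbits(1) for _ in range(3))
    return (x0, x1), (r, (x0, x1)[r])


def receiver_message(r: int, s: int) -> int:
    """First message (1 bit): the receiver masks its real choice s with r."""
    return r ^ s


def sender_reply(x: tuple, y: tuple, d: int) -> tuple:
    """Second message (2 bits): y0 masked with x_d, y1 masked with x_{1 xor d}."""
    return (y[0] ^ x[d], y[1] ^ x[1 ^ d])


def receiver_output(e: tuple, s: int, x_r: int) -> int:
    """The receiver unmasks the s-th reply bit with the one pad it knows."""
    return e[s] ^ x_r
```

When $s = 0$ the first reply bit is masked with $x_r$, and when $s = 1$ the second one is, so the receiver can always remove exactly one mask, while the other reply bit stays hidden behind the pad it does not know.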

This improves on the $4n$ bits of communication from Theorem 11 of [12], at the cost of providing only indistinguishability security instead of simulation security. This may also be viewed as an OT extension protocol, since the initial two-party computation can be instantiated with OTs (although these may need to be simulation-secure).

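Program $C_i$

Input: $\mathsf{id}$ (the session number)

Hardcoded: $f$, $K_x$, $K_y$, $K_1, \ldots, K_{i-1}$, and $K'_1, \ldots, K'_{i-1}$

Instructions: On input $\mathsf{id}$, the program $C_i$ does the following.

* Compute $b \leftarrow f(g_{K_x}(\mathsf{id}), g_{K_y}(\mathsf{id})) \oplus \left(\bigoplus_{j=1}^{i-1} g_{K_j}(\mathsf{id})\right) \oplus \left(\bigoplus_{j=1}^{i-1} g_{K'_j}(\mathsf{id})\right)$.

* If $b = 0$, output 0 (do not constrain). Otherwise, output 1 (constrain).

Figure 4-1: The constraint for the additive-spooky scheme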

4.1.2 DCGs for general additive-spooky distributions

In this section we show how to construct a distributed correlation generator for any additive-spooky distribution using private CPRFs.

The high-level idea of our construction is as follows. Alice and Bob each generate their corresponding inputs to $f$ using independent PRF keys. Alice also receives a private CPRF key, and Bob receives a derived key with a particular constraint (which depends on $f(x, y)$ and contains Alice's and Bob's hardcoded PRF keys). This allows Bob to learn something that depends on $f(x, y)$: in particular, he either learns the same bit as Alice, or a random bit. When we XOR these two bits together, we get either 0 (if Bob learns the same bit as Alice), or a random bit (otherwise). By adding more CPRF keys, we can amplify this so that the XOR is either 0, or 1 with high probability, depending on whether $f(x, y)$ is 0 or 1. However, Alice still learns nothing (because she does not even see the derived key), and Bob also learns nothing (because the key hides the constraint).

Theorem 4.1.2. Consider an additive-spooky distribution corresponding to the function $f$. Let (KeyGen, Eval, Constrain, ConstrainEval) be a private constrained PRF. Then the following 2-party distributed correlation generator is secure.

* SeedGen$(1^\lambda)$: See Figure 4-2.

* InstGen$(s_i, i, \mathsf{id})$: On session $\mathsf{id}$, Alice outputs $\left(g_{K_x}(\mathsf{id}), \bigoplus_{i=1}^{m} g_{K_i}(\mathsf{id})\right)$, while Bob outputs $\left(g_{K_y}(\mathsf{id}), \bigoplus_{i=1}^{m} g_{K'_i}(\mathsf{id})\right)$.

Proof. To prove the security of this distributed correlation generator, we must prove it for every set $S \subseteq \{1, 2\}$. If $S = \{1, 2\}$, there is nothing to prove. Hence we will focus on the remaining three cases.

* Sample two PRF keys $(K_x, K_y)$ with KeyGen.

* For $i$ from 1 to $m$:

  * Compute $C_i$ as in Figure 4-1, which depends on $f$, $K_x$, $K_y$, $K_1, \ldots, K_{i-1}$ and $K'_1, \ldots, K'_{i-1}$.

  * $K_i \leftarrow$ KeyGen().

  * $K'_i \leftarrow$ Constrain$(K_i, C_i)$.

* Output $s_x \leftarrow (1, K_x, K_1, \ldots, K_m)$ and $s_y \leftarrow (2, K_y, K'_1, \ldots, K'_m)$.

Figure 4-2: The seed generation for the additive-spooky scheme

Correctness. As described in Definition 2.1.2, this is just the case when neither party is corrupted. We wish to prove that the output of the protocol, i.e. $(\mathrm{InstGen}(1, K_x, K_1, \ldots, K_m), \mathrm{InstGen}(2, K_y, K'_1, \ldots, K'_m))$, is indistinguishable from […] the seeds $s_x$ and $s_y$, as well as the hiding property of the constrained PRF. We will consider five hybrids, where the first hybrid is the real scheme and the last hybrid is the fresh-sampling oracle.

Hybrid $\mathcal{H}_0$: This is the real experiment, in which Alice outputs $g_{K_x}(\mathsf{id})$ and $\bigoplus_{i=1}^{m} g_{K_i}(\mathsf{id})$, and Bob outputs $g_{K_y}(\mathsf{id})$ and $\bigoplus_{i=1}^{m} g_{K'_i}(\mathsf{id})$.

Hybrid $\mathcal{H}_1$: We change Bob's bit output to $f(g_{K_x}(\mathsf{id}), g_{K_y}(\mathsf{id})) \oplus \left(\bigoplus_{i=1}^{m} g_{K_i}(\mathsf{id})\right)$, so that the scheme correctly computes $f$ on Alice's and Bob's inputs. Security is by Lemma 4.1.3 below.

Hybrid $\mathcal{H}_2$: We change Alice's bit from $\bigoplus_{i=1}^{m} g_{K_i}(\mathsf{id})$ to a random bit. Security is by the PRF security of the $K_i$.

Hybrid $\mathcal{H}_3$: We change Bob's output from $g_{K_y}(\mathsf{id})$ to a random bit. Security is by the PRF security of $K_y$.

Hybrid $\mathcal{H}_4$: Similarly, we change Alice's output from $g_{K_x}(\mathsf{id})$ to a random bit. Security is by the PRF security of $K_x$. In this hybrid, Alice outputs a random $x$ and random bit $a$, and Bob outputs a random $y$ and $f(x, y) \oplus a$, which is identical to the fresh-sampling oracle.

We now prove the lemma needed to move to hybrid $\mathcal{H}_1$.

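Lemma 4.1.3. For any adversary $\mathcal{A}$, $\mathcal{A}$ cannot distinguish between:

* Alice outputs $g_{K_x}(\mathsf{id})$ and $\bigoplus_{i=1}^{m} g_{K_i}(\mathsf{id})$, Bob outputs $g_{K_y}(\mathsf{id})$ and $\bigoplus_{i=1}^{m} g_{K'_i}(\mathsf{id})$

* Alice outputs $g_{K_x}(\mathsf{id})$ and $\bigoplus_{i=1}^{m} g_{K_i}(\mathsf{id})$, Bob outputs $g_{K_y}(\mathsf{id})$ and $f(g_{K_x}(\mathsf{id}), g_{K_y}(\mathsf{id})) \oplus \left(\bigoplus_{i=1}^{m} g_{K_i}(\mathsf{id})\right)$

Proof. We claim that the two hybrids are statistically indistinguishable. It suffices to show that with all but negligible probability, $\bigoplus_{i=1}^{m} g_{K'_i}(\mathsf{id}) = f(g_{K_x}(\mathsf{id}), g_{K_y}(\mathsf{id})) \oplus \left(\bigoplus_{i=1}^{m} g_{K_i}(\mathsf{id})\right)$. In other words, $\bigoplus_{i=1}^{m} \left(g_{K_i}(\mathsf{id}) \oplus g_{K'_i}(\mathsf{id})\right) = f(g_{K_x}(\mathsf{id}), g_{K_y}(\mathsf{id}))$ with all but negligible probability.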

Suppose that $f(g_{K_x}(\mathsf{id}), g_{K_y}(\mathsf{id})) = 0$. Then, considering the constraint in Figure 4-1 and by the correctness of the constrained PRF, we must have $g_{K_i}(\mathsf{id}) = g_{K'_i}(\mathsf{id})$ for every $i$, so that $\bigoplus_{i=1}^{m} \left(g_{K_i}(\mathsf{id}) \oplus g_{K'_i}(\mathsf{id})\right) = 0 = f(g_{K_x}(\mathsf{id}), g_{K_y}(\mathsf{id}))$, and we are done.

Otherwise, $f(g_{K_x}(\mathsf{id}), g_{K_y}(\mathsf{id})) = 1$. We claim that for every $i$, if the constraint for the $i$-th key evaluates to 1 (i.e. the output is constrained), then $g_{K_i}(\mathsf{id})$ and $g_{K'_i}(\mathsf{id})$ are statistically close to independent fair coin flips. First, by the PRF security of $g$, $g_{K_i}(\mathsf{id})$ is statistically indistinguishable from a coin flip (this corresponds to guessing $b_2$ in the security game). Second, if $g_{K'_i}(\mathsf{id})$ were statistically distinguishable from a coin flip (i.e. biased towards 0 or 1), then an adversary, given the constrained key, could distinguish the constrained inputs from unconstrained inputs (this corresponds to guessing $b_1$). Finally, if $g_{K_i}(\mathsf{id})$ and $g_{K'_i}(\mathsf{id})$ were positively or negatively correlated, then an adversary, given the constrained key, could distinguish the real evaluations from random, violating the constrained PRF security (this corresponds to guessing $b_2$ again).

Since $g_{K_i}(\mathsf{id})$ and $g_{K'_i}(\mathsf{id})$ are statistically close to independent coin flips, the probability that $g_{K_i}(\mathsf{id}) \oplus g_{K'_i}(\mathsf{id}) = 1$ is statistically close to $1/2$. If there is any $j$ such that $\bigoplus_{i=1}^{j} \left(g_{K_i}(\mathsf{id}) \oplus g_{K'_i}(\mathsf{id})\right) = f(g_{K_x}(\mathsf{id}), g_{K_y}(\mathsf{id}))$, then for all $j' > j$, $g_{K_{j'}}(\mathsf{id}) = g_{K'_{j'}}(\mathsf{id})$ and therefore $\bigoplus_{i=1}^{m} \left(g_{K_i}(\mathsf{id}) \oplus g_{K'_i}(\mathsf{id})\right) = f(g_{K_x}(\mathsf{id}), g_{K_y}(\mathsf{id}))$, as desired. So, the only way for this not to happen is if $g_{K_i}(\mathsf{id}) = g_{K'_i}(\mathsf{id})$ for every $i$, which happens only with probability (statistically close to) $2^{-m}$, as desired. □
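The counting step in this proof can be checked exactly on an idealized model. The following is a minimal sketch, assuming that each constrained difference $g_{K_i}(\mathsf{id}) \oplus g_{K'_i}(\mathsf{id})$ is a perfectly uniform, independent bit and that the two keys agree as soon as the running XOR equals the target bit. The function name `failure_probability` is hypothetical and only serves the illustration; no PRF is involved.

```python
from fractions import Fraction
from itertools import product


def failure_probability(f_bit: int, m: int) -> Fraction:
    """Exact probability that the XOR of all m differences misses f_bit.

    Idealized model (an assumption): while the running XOR differs from
    f_bit the i-th output is constrained and the difference is a fresh
    uniform coin; once it equals f_bit the keys agree (difference 0).
    """
    failures = 0
    total = 0
    for coins in product((0, 1), repeat=m):
        running = 0
        for coin in coins:
            if running != f_bit:
                running ^= coin  # constrained position: fresh coin
            # otherwise unconstrained: both keys agree, nothing changes
        total += 1
        if running != f_bit:
            failures += 1
    return Fraction(failures, total)
```

Under these assumptions the failure probability is 0 when the target bit is 0 and exactly $2^{-m}$ when it is 1, matching the bound stated in the proof.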

Alice is corrupted. We now prove that Alice, given her seed $(1, K_x, K_1, \ldots, K_m)$, cannot distinguish Bob's output (i.e. InstGen$(2, K_y, K'_1, \ldots, K'_m)$) from random. This proof is straightforward, because Alice's entire seed is generated before Bob's input is determined, and therefore Alice would be distinguishing the output of a PRF from random.

Proof. In the first experiment, Alice gets oracle access to $g_{K_y}(\mathsf{id})$ and $\bigoplus_{i=1}^{m} g_{K'_i}(\mathsf{id})$. As proved in Lemma 4.1.3, with all but negligible probability, the bit is equal to $f(g_{K_x}(\mathsf{id}), g_{K_y}(\mathsf{id})) \oplus \bigoplus_{i=1}^{m} g_{K_i}(\mathsf{id})$. Therefore, the first experiment is indistinguishable from an experiment in which Alice gets oracle access to $g_{K_y}(\mathsf{id})$ and $f(g_{K_x}(\mathsf{id}), g_{K_y}(\mathsf{id})) \oplus \bigoplus_{i=1}^{m} g_{K_i}(\mathsf{id})$.

Now suppose towards a contradiction that some adversary $A$ distinguishes this experiment from the second experiment. Then there is an adversary $A'$ that distinguishes between the output of a PRF and random. $A'$ gets oracle access to either $g_{K_y}(\cdot)$ or a random oracle. He generates Alice's keys himself and gives them to $A$. To answer queries, he queries the oracle on $\mathsf{id}$ to get $y$, and outputs $\left(y, f(g_{K_x}(\mathsf{id}), y) \oplus \bigoplus_{i=1}^{m} g_{K_i}(\mathsf{id})\right)$. Note that if $y$ is $g_{K_y}(\mathsf{id})$, then this is the experiment described previously, whereas if $y$ is random, then this is a fresh-sampling oracle (the second experiment). Therefore, $A'$ distinguishes with the same probability as $A$, a contradiction. □

Bob is corrupted. We now prove that Bob, given his seed $(2, K_y, K'_1, \ldots, K'_m)$, cannot distinguish Alice's output (i.e. InstGen$(1, K_x, K_1, \ldots, K_m)$) from random (recall that Alice's and Bob's seeds start with 1 and 2 respectively to indicate which party they are).

Proof. We will consider four hybrids, where the first hybrid is the real scheme and the last hybrid is the fresh-sampling oracle.

Hybrid $\mathcal{H}_0$: This is the real experiment, in which Bob gets $K_y$ and $K'_1, \ldots, K'_m$, and oracle access to Alice's output, $g_{K_x}(\mathsf{id})$ and $\bigoplus_{i=1}^{m} g_{K_i}(\mathsf{id})$.

Hybrid $\mathcal{H}_1$: We change Alice's bit output to $f(g_{K_x}(\mathsf{id}), g_{K_y}(\mathsf{id})) \oplus \left(\bigoplus_{i=1}^{m} g_{K'_i}(\mathsf{id})\right)$, i.e. the output is always correct. This is statistically indistinguishable from the previous hybrid by Lemma 4.1.3.

Hybrid $\mathcal{H}_2$: We change Alice's output from $g_{K_x}(\mathsf{id})$ to $g_{K'_x}(\mathsf{id})$, where $K'_x$ is a PRF key freshly sampled (after the original $K_x$ was already used in computing Bob's keys $K'_1, \ldots, K'_m$). The output remains correct, i.e. it is $f(g_{K'_x}(\mathsf{id}), g_{K_y}(\mathsf{id})) \oplus \bigoplus_{i=1}^{m} g_{K'_i}(\mathsf{id})$.

The indistinguishability of $\mathcal{H}_1$ and $\mathcal{H}_2$ follows from the constraint-hiding property of private constrained PRFs: if an adversary $A$ can distinguish these two experiments, then it can distinguish between experiments in which the constraints are based on $K_x$ and $K'_x$ respectively, a contradiction.

Hybrid $\mathcal{H}_3$: We change Alice's output from $g_{K'_x}(\mathsf{id})$ to a freshly-sampled $x$. The output remains correct.

The indistinguishability of $\mathcal{H}_2$ and $\mathcal{H}_3$ follows from the security of pseudorandom functions. □

Chapter 5

Distributed Correlation Generators

Program $P_i$

Input: id

Hardcoded: Puncturable PRF keys $K_1, \ldots, K_n$, party number $i$

Instructions: On input id, the program $P_i$ does the following.

• For each $j$ from 1 to $n$, compute $\rho_j \leftarrow F_{K_j}(\mathsf{id})$ and $x_j \leftarrow \mathsf{Sample}(j, 1, 2, \ldots, j-1, x_1, \ldots, x_{j-1})$, where $\rho_j$ is the randomness used in Sample.

• Output $x_i$.

Figure 5-1: The program to be obfuscated

5.1

Distributed Correlation Generators from IO

The high level idea behind the construction is as follows. We can generate an unbounded stream of random joint samples using a PRF. However, if we gave the PRF seed to everyone (in order for each party to generate the samples), each party would be able to generate the other parties' samples as well. To prevent this, we obfuscate a program that uses the PRF seed to generate the joint sample, and then only outputs the appropriate coordinate.

Let $\mathcal{O}$ be an indistinguishability obfuscator with subexponential hardness and let $F$ be a puncturable PRF with input length $\ell$. Then consider the following distributed correlation generator, where $P_i$ is as defined in Figure 5-1.

• SeedGen$(1^\lambda, 1^n)$: Sample $n$ PRF keys $K_1, \ldots, K_n$. Compute the programs $P_i$ for each $i$ from 1 to $n$. Output $s_1, \ldots, s_n = \mathcal{O}(P_1), \ldots, \mathcal{O}(P_n)$.

• InstGen$(s_i, i, \mathsf{id})$: Output $\mathcal{O}(P_i)(\mathsf{id})$.

Theorem 5.1.1. Assuming subexponentially secure PPRFs and IO, there is a distributed correlation generator for all functionalities.
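The functional behavior of SeedGen and InstGen in the construction above can be illustrated without any obfuscation. The following is a minimal sketch under loud assumptions: HMAC-SHA256 stands in for the PRF $F$, the toy joint distribution is "$n$ bits that XOR to zero", and each seed is a plain Python closure that holds all keys in the clear. It therefore shows only correctness (every party's program derives its coordinate from the same joint sample) and offers none of the security that IO is meant to provide. All function names are hypothetical.

```python
import hashlib
import hmac
import os


def prf(key: bytes, session_id: int) -> int:
    """One pseudorandom bit; HMAC-SHA256 is an assumed stand-in for F."""
    msg = session_id.to_bytes(8, "big")
    return hmac.new(key, msg, hashlib.sha256).digest()[0] & 1


def sample(j: int, previous: list, rho: int, n: int) -> int:
    """Toy conditional sampler: uniform bits, last bit fixes the parity."""
    if j < n:
        return rho
    parity = 0
    for value in previous:
        parity ^= value
    return parity


def make_program(keys: list, i: int):
    """Unobfuscated analogue of program P_i from Figure 5-1."""
    n = len(keys)

    def program(session_id: int) -> int:
        xs = []
        for j in range(1, n + 1):
            rho = prf(keys[j - 1], session_id)
            xs.append(sample(j, xs, rho, n))
        return xs[i - 1]  # output only coordinate i

    return program


def seed_gen(n: int) -> list:
    keys = [os.urandom(32) for _ in range(n)]
    return [make_program(keys, i) for i in range(1, n + 1)]


def inst_gen(seed, session_id: int) -> int:
    return seed(session_id)
```

Calling `inst_gen` on each party's seed with the same session identifier yields bits whose XOR is zero, and repeated calls with the same identifier return the same bit.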

Proof. The high level idea behind the proof is as follows. If we knew in advance which parties the adversary would corrupt, we could have them sample their coordinates first, and then have all of the remaining parties sample their coordinates conditioned on the corrupt parties' values (using the corrupt parties' seeds). Since the corrupt parties only know their own randomness, this scheme is trivially secure. By using IO, we can obscure the order in which parties are sampled. Since the distribution is conditionally samplable, the outcome takes the same distribution regardless of the order of sampling.

As described previously, we will argue that the programs $P_i$, when obfuscated, conceal the order in which the variables are sampled, via a hybrid argument. For the detailed proof, we refer the reader to Appendix A.1. □
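The claim that the outcome does not depend on the sampling order can be checked exactly on a small example. The following is a minimal sketch, assuming a toy conditionally sampleable distribution (uniform over $n$-bit strings of even parity) and an idealized sampler fed with true coins instead of PRF outputs; `sample` and `joint_distribution` are hypothetical names.

```python
from fractions import Fraction
from itertools import product


def sample(previous: dict, coin: int, n: int) -> int:
    """Sample one coordinate conditioned on the coordinates fixed so far."""
    if len(previous) < n - 1:
        return coin  # any coordinate but the last is a uniform bit
    parity = 0
    for value in previous.values():
        parity ^= value
    return parity  # the last coordinate is forced by the parity constraint


def joint_distribution(order: tuple) -> dict:
    """Exact distribution of (x_1, ..., x_n) when sampling in `order`."""
    n = len(order)
    counts = {}
    for coins in product((0, 1), repeat=n):
        sampled = {}
        for party, coin in zip(order, coins):
            sampled[party] = sample(sampled, coin, n)
        outcome = tuple(sampled[p] for p in sorted(sampled))
        counts[outcome] = counts.get(outcome, 0) + 1
    total = 2 ** n
    return {o: Fraction(c, total) for o, c in counts.items()}
```

For three parties, every permutation of the sampling order gives the same distribution: each of the four even-parity strings has probability $1/4$. The toy distribution is symmetric in the parties, so this is only an illustration of the property, not evidence for general distributions.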

Appendix A

Preliminaries and Definitions

We denote the security parameter by $\lambda$. We use the notation $D_0 \equiv D_1$ to denote that the two distributions $D_0$ and $D_1$ are identically distributed.

A.0.1

Constrained Pseudo-Random Functions

The notion of constrained PRFs was introduced by the works of [6, 1, 14]. We present the definition of private CPRFs from [7]. A private CPRF key can be used to derive a key $\sigma_f$ constrained by a function $f$: on inputs $x$ such that $f(x) = 0$, the key can be used to correctly evaluate the PRF, but otherwise, the output on the constrained key does not reveal the output of the PRF on the real key. Furthermore, $\sigma_f$ does not reveal any information about the function $f$.

Definition A.0.1 (Constrained PRF). A constrained pseudo-random function (PRF) family is defined by algorithms (KeyGen, Eval, Constrain, ConstrainEval) where:

• $\mathsf{KeyGen}(1^\lambda, 1^\ell, 1^d, 1^r)$ is a PPT algorithm that takes as input the security parameter $\lambda$, a circuit max-length $\ell$, a circuit max-depth $d$ and an output space $r$, and outputs a PRF key $\sigma$ and public parameters $pp$.

• $\mathsf{Eval}_{pp}(\sigma, x)$ is a deterministic algorithm that takes as input a key $\sigma$ and a string $x \in \{0, 1\}^*$, and outputs $y \in \mathbb{Z}_r$;

• $\mathsf{Constrain}_{pp}(\sigma, f)$ is a PPT algorithm that takes as input a PRF key $\sigma$ and a circuit $f : \{0, 1\}^* \to \{0, 1\}$, and outputs a constrained key $\sigma_f$;

• $\mathsf{ConstrainEval}_{pp}(\sigma_f, x)$ is a deterministic algorithm that takes as input a constrained key $\sigma_f$ and a string $x \in \{0, 1\}^*$, and outputs either a string $y \in \mathbb{Z}_r$ or $\bot$.

Definition A.0.2. Consider the following game between a PPT adversary $A$ and a challenger:

2. The challenger generates $(pp, \mathsf{seed}) \leftarrow \mathsf{KeyGen}(1^\lambda, 1^\ell, 1^d, 1^r)$. It flips three coins $b_1, b_2, b_3 \leftarrow \{0, 1\}$. Intuitively, $b_1$ selects whether $f_0$ or $f_1$ is used for the constraint, $b_2$ selects whether a real or random value is returned on non-constrained queries, and $b_3$ selects whether the actual or constrained value is returned on constrained queries. The challenger creates $\mathsf{seed}_f \leftarrow \mathsf{Constrain}_{pp}(\mathsf{seed}, f_{b_1})$, and sends $(pp, \mathsf{seed}_f)$ to $A$.

3. $A$ adaptively sends unique queries $x \in \{0, 1\}^*$ to the challenger (i.e. no $x$ is queried more than once). The challenger returns:

• $\bot$, if $f_0(x) \neq f_1(x)$.

• $U(\mathbb{Z}_r)$, if $(f_0(x) = f_1(x) = 1) \wedge (b_2 = 1)$.

• $\mathsf{ConstrainEval}_{pp}(\sigma_f, x)$, if $(f_0(x) = f_1(x) = 0) \wedge (b_3 = 0)$.

• $\mathsf{Eval}_{pp}(\sigma, x)$, otherwise.

4. $A$ sends a guess $(i, b')$.

The advantage of the adversary in this game is $\mathsf{Adv}[A] = \left|\Pr[b' = b_i] - 1/2\right|$. A family of PRFs (KeyGen, Eval, Constrain, ConstrainEval) is a single-key constraint-hiding selective-function constrained PRF if for every PPT adversary $A$, $\mathsf{Adv}[A] = \mathrm{negl}(\lambda)$.

We will also make use of regular (non-private) CPRFs, in which case we have the same definition except that there is no $b_1$ (the adversary sends only one function $f$), and regular PRFs, which are the same definition except without $b_1$ or $b_3$ (there are no constraints). Finally, we will make use of puncturable PRFs, which are a special case of CPRFs in which the function is 1 (constrained) at only one point.
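To make the notion of a puncturable PRF concrete, the following is a minimal sketch of the classical GGM tree construction. This instantiation is an assumption made for illustration: the text above does not fix how the puncturable PRF is built, and SHA-256 is used here only as a heuristic stand-in for a length-doubling PRG. The names `evaluate`, `puncture` and `punctured_evaluate` are hypothetical.

```python
import hashlib


def prg(seed: bytes, bit: int) -> bytes:
    """Left (bit 0) or right (bit 1) half of an assumed length-doubling PRG."""
    return hashlib.sha256(bytes([bit]) + seed).digest()


def evaluate(key: bytes, x: int, length: int) -> bytes:
    """GGM evaluation: walk the tree along the bits of x, MSB first."""
    node = key
    for pos in reversed(range(length)):
        node = prg(node, (x >> pos) & 1)
    return node


def puncture(key: bytes, point: int, length: int) -> dict:
    """Punctured key: the sibling seeds along the path to `point`."""
    punctured = {}
    node = key
    prefix = ""
    for pos in reversed(range(length)):
        bit = (point >> pos) & 1
        punctured[prefix + str(1 - bit)] = prg(node, 1 - bit)
        node = prg(node, bit)
        prefix += str(bit)
    return punctured


def punctured_evaluate(punctured: dict, x: int, length: int):
    """Evaluate with the punctured key; returns None at the punctured point."""
    bits = format(x, f"0{length}b")
    for cut in range(1, length + 1):
        seed = punctured.get(bits[:cut])
        if seed is not None:
            node = seed
            for b in bits[cut:]:
                node = prg(node, int(b))
            return node
    return None
```

The punctured key agrees with the full key on every input except the punctured point, which corresponds to a constraint function that is 1 at exactly one point.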

A.0.2

Indistinguishability Obfuscation

We use the definition of indistinguishability obfuscation as defined in [10]. Informally speaking, indistinguishability obfuscation is a compiler that transforms a circuit into a functionally equivalent circuit such that obfuscations of two functionally equivalent circuits are computationally indistinguishable.

Definition A.0.3 (Indistinguishability Obfuscator). A uniform PPT machine $\mathcal{O}$ is called an indistinguishability obfuscator (for P/poly) if the following conditions are satisfied:

• For all security parameters $\lambda \in \mathbb{N}$, for all circuits $C$ of size $\lambda$, for all inputs $x$, we have that

$\Pr[C'(x) = C(x) : C' \leftarrow \mathcal{O}(C)] = 1$

• For any nonuniform PPT distinguisher $D$, there exists a negligible function $\alpha$ such that the following holds: For all security parameters $\lambda \in \mathbb{N}$, for all pairs of circuits $C_0, C_1$ of size $\lambda$, we have that if $C_0(x) = C_1(x)$ for all inputs $x$, then

$\left|\Pr[D(\mathcal{O}(C_0)) = 1] - \Pr[D(\mathcal{O}(C_1)) = 1]\right| < \alpha(\lambda)$

A.0.3

Function Secret Sharing

We present the definition of function secret sharing from [3].

A two-party function secret sharing (FSS) scheme with respect to the XOR output decoder and function class P/poly is a pair of PPT algorithms (Gen, Eval) with the following syntax:

• $\mathsf{Gen}(1^\lambda, f)$: On input the security parameter $1^\lambda$ and function description $f$, the key generation algorithm outputs two keys $(k_1, k_2)$.

• $\mathsf{Eval}(i, k_i, x)$: On input a party index $i$, key $k_i$, and input string $x$, the evaluation algorithm outputs a value $y_i$, corresponding to this party's share of $f(x)$.

satisfying the following correctness and security requirements:

• Correctness: for all $f$, $x$,

$\Pr[(k_1, k_2) \leftarrow \mathsf{Gen}(1^\lambda, f) : \mathsf{Dec}(\mathsf{Eval}(1, k_1, x), \mathsf{Eval}(2, k_2, x)) = f(x)] = 1$

• Security: Consider the following indistinguishability challenge experiment for corrupted parties $T$:

  - The adversary outputs $(f_0, f_1, \mathsf{state}) \leftarrow A(1^\lambda)$.

  - The challenger samples $b \leftarrow \{0, 1\}$ and $(k_1, k_2) \leftarrow \mathsf{Gen}(1^\lambda, f_b)$.

  - The adversary outputs a guess $b' \leftarrow A((k_i)_{i \in T}, \mathsf{state})$, given the keys for corrupted $T$.

Denote by $\mathsf{Adv}(1^\lambda, A) := \Pr[b = b'] - 1/2$ the advantage of $A$ in guessing $b$ in the above experiment, where probability is taken over the randomness of the challenger and of $A$. We say the scheme (Gen, Eval) is secure if there exists a negligible function $\nu$ such that for all non-uniform PPT adversaries $A$, it holds that $\mathsf{Adv}(1^\lambda, A) < \nu(\lambda)$.
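The syntax and the correctness requirement can be illustrated with a deliberately naive scheme. The following is a minimal sketch, assuming the function is given as an explicit truth table of bits and is XOR-shared entry by entry; `gen`, `eval_share` and `dec` are hypothetical names mirroring Gen, Eval and the XOR decoder. Keys here are as long as the truth table, so this is not an efficient FSS scheme for P/poly; it only shows what the definition asks for.

```python
import secrets


def gen(truth_table: list) -> tuple:
    """Split the truth table of f into two XOR shares (k1, k2)."""
    k1 = [secrets.randbits(1) for _ in truth_table]
    k2 = [share ^ value for share, value in zip(k1, truth_table)]
    return k1, k2


def eval_share(i: int, key: list, x: int) -> int:
    """Party i's share of f(x); in this toy scheme the index i is unused."""
    return key[x]


def dec(y1: int, y2: int) -> int:
    """XOR output decoder."""
    return y1 ^ y2
```

Each key on its own is a uniformly random bit string, so a single corrupted party learns nothing about the shared function, while the two shares at any input always XOR to the function value.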

A.1

Proof of Theorem 5.1.1

We first restate the IO security theorem.

Theorem A.1.1. Assuming subexponentially secure PPRFs and IO, there is a distributed correlation generator for all functionalities.

Proof. The high level idea behind the proof is as follows. If we knew in advance which parties the adversary would corrupt, we could have them sample their coordinates first, and then have all of the remaining parties sample their coordinates conditioned on the corrupt parties' values (using the corrupt parties' seeds). Since the corrupt parties only know their own randomness, this scheme is trivially secure. By using IO, we can obscure the order in which parties are sampled. Since the distribution is conditionally samplable, the outcome takes the same distribution regardless of the order of sampling.

As described previously, we will argue that the programs $P_i$, when obfuscated, conceal the order in which the variables are sampled, via a hybrid argument. We will consider a sequence of hybrids $\mathcal{H}_0, \ldots, \mathcal{H}_4$. The first hybrid, $\mathcal{H}_0$, is the real scheme as previously described. In this scheme, the adversary gets access to $\mathcal{O}(P_i)$ for $i \in S$, and oracle access to $\mathcal{O}(P_i)$ for any $i$, where $P_i$ is as shown in Figure 5-1.

Program $P'_i$

Input: id

Hardcoded: Puncturable PRF keys $K_1, \ldots, K_n$, party number $i$, permutation $\Pi$

Instructions: On input id, the program $P'_i$ does the following.

• For each $j$ from 1 to $n$, compute $\rho_{\Pi(j)} \leftarrow F_{K_{\Pi(j)}}(\mathsf{id})$ and $x_{\Pi(j)} \leftarrow \mathsf{Sample}(\Pi(j), \Pi(1), \Pi(2), \ldots, \Pi(j-1), x_{\Pi(1)}, \ldots, x_{\Pi(j-1)})$, where $\rho_{\Pi(j)}$ is the randomness used in Sample.

• Output $x_i$.

Figure A-1: The program with reordered sampling

Program $P''_i$

Input: id

Hardcoded: Puncturable PRF keys $K_{\Pi(1)}, K_{\Pi(2)}, \ldots, K_i$, party number $i$, permutation $\Pi$

Instructions: On input id, the program $P''_i$ does the following.

• For each $j$ from 1 to $\Pi^{-1}(i)$, compute $\rho_{\Pi(j)} \leftarrow F_{K_{\Pi(j)}}(\mathsf{id})$ and $x_{\Pi(j)} \leftarrow \mathsf{Sample}(\Pi(j), \Pi(1), \Pi(2), \ldots, \Pi(j-1), x_{\Pi(1)}, \ldots, x_{\Pi(j-1)})$, where $\rho_{\Pi(j)}$ is the randomness used in Sample.

• Output $x_i$.

Figure A-2: The program with only necessary keys

A.1.1

$\mathcal{H}_1$: Changing the sampling order

In Hybrid 1, we change the order in which the variables are sampled, in order to place all the corrupted parties first, and the honest parties last. The adversary receives $\mathcal{O}(P'_i)$ for $i \in S$, and oracle access to $\mathcal{O}(P'_i)$ for any $i$, where $P'_i$ is defined in Figure A-1.

Claim 5. For any adversary $A$ and set $S$, $A$ cannot distinguish between $\mathcal{H}_0$ and $\mathcal{H}_1$.

We will prove this by moving in a sequence of $2^\ell$ hybrids, one for each session, where the first hybrid corresponds to $\mathcal{H}_0$ and the last to $\mathcal{H}_1$. In hybrid $\mathcal{H}_{0,r}$, the programs are sampled according to Figure A-3.

Claim 6. For any adversary $A$, set $S$, and index $r$, $A$ cannot distinguish between $\mathcal{H}_{0,r}$ and $\mathcal{H}_{0,r+1}$.

Each of these steps in turn involves hardcoding, switching, and un-hardcoding each program's values for that session. We again use a hybrid argument. In Hybrid $\mathcal{H}_{0,r,k}$ for $k \le n$, the first $k$ programs are sampled according to Figure A-4 (i.e. hardcoded), where the hardcoded values $v$ are the same as would be sampled using the original order, whereas the rest are sampled according to Figure A-3 (note that Hybrid $\mathcal{H}_{0,r,0}$ is therefore the same as Hybrid $\mathcal{H}_{0,r}$). From Hybrid $\mathcal{H}_{0,r,n}$ to Hybrid $\mathcal{H}_{0,r,n+1}$, we switch the hardcoded values $v$ from being sampled using the original order to being sampled using the new order (that places the corrupted parties first). Finally, in Hybrid $\mathcal{H}_{0,r,k}$ for $k \ge n + 1$, the first $2n + 1 - k$ programs are hardcoded and the rest are not (note that Hybrid $\mathcal{H}_{0,r,2n+1}$ is the same as Hybrid $\mathcal{H}_{0,r+1}$). Thus, to prove Claim 6, it is sufficient to prove Claim 7 below.
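The bookkeeping of the inner hybrids can be written down directly. The following is a minimal sketch of the schedule described above, with hypothetical function names: for a fixed session $r$ and $n$ parties it returns how many programs are hardcoded in Hybrid $\mathcal{H}_{0,r,k}$ and whether the hardcoded values already follow the new order.

```python
def hardcoded_count(k: int, n: int) -> int:
    """Number of hardcoded programs in inner hybrid k, for 0 <= k <= 2n + 1."""
    if not 0 <= k <= 2 * n + 1:
        raise ValueError("k must lie between 0 and 2n + 1")
    # Hardcode one more program per step up to k = n, then undo one per step.
    return k if k <= n else 2 * n + 1 - k


def uses_new_order(k: int, n: int) -> bool:
    """True once the hardcoded values v are sampled in the new order."""
    return k >= n + 1
```

For $n = 3$ the counts over $k = 0, \ldots, 7$ are 0, 1, 2, 3, 3, 2, 1, 0, and the switch of the hardcoded values happens between $k = 3$ and $k = 4$, while all programs are hardcoded.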

Claim 7. For any adversary $A$, set $S$, and indices $r$, $k$, $A$ cannot distinguish between $\mathcal{H}_{0,r,k}$ and $\mathcal{H}_{0,r,k+1}$.

Proof. Let's first consider the case of $k < n$. Then $\mathcal{H}_{0,r,k}$ and $\mathcal{H}_{0,r,k+1}$ differ only in a single program which is functionally equivalent. By the security of IO, the adversary cannot distinguish these two hybrids.

Now let's consider going from $k = n$ to $k = n + 1$. We claim that both of these experiments are indistinguishable from the experiment in which $v$ is generated independently at random (according to $\mathcal{D}$). Suppose not; then an adversary can distinguish oracle access to the punctured points of $n - |S|$ PPRFs (which are used to generate the hardcoded $v$'s) from random (which are used to generate the fresh random $v$'s), contradicting the security of the PPRF. Since both of these experiments are indistinguishable from random with probability $n\delta$, they are indistinguishable from each other with probability $2n\delta$.
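The first case of the proof relies on the hardcoded and non-hardcoded programs being functionally equivalent. The following is a minimal sketch of that equivalence on a toy example, assuming HMAC-SHA256 as a stand-in PRF, a single key, and a one-bit output; the names `make_original` and `make_hardcoded` are hypothetical. The hardcoded program never evaluates the PRF at the session $r$, which is what allows the real proof to replace the key by one punctured at $r$.

```python
import hashlib
import hmac


def prf(key: bytes, session_id: int) -> int:
    """One pseudorandom bit; HMAC-SHA256 is an assumed stand-in for F."""
    msg = session_id.to_bytes(8, "big")
    return hmac.new(key, msg, hashlib.sha256).digest()[0] & 1


def make_original(key: bytes):
    """Program that computes its output from the PRF on every session."""

    def program(session_id: int) -> int:
        return prf(key, session_id)

    return program


def make_hardcoded(key: bytes, r: int, v: int):
    """Program with the output for session r hardcoded as v."""

    def program(session_id: int) -> int:
        if session_id == r:
            return v  # the PRF is never evaluated at the point r
        return prf(key, session_id)

    return program
```

When `v` is set to the value the original program outputs on session `r`, the two programs agree on every input, which is the precondition for invoking IO security. If `v` were replaced by a fresh random value, they would differ at `r` with probability $1/2$, which is why that step is argued via PPRF security instead.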

Program $Q_{i,r}$

Input: id

Hardcoded: Puncturable PRF keys $K_1, \ldots, K_n$, party number $i$, permutation $\Pi$, hybrid number $r$

Instructions: On input id, the program $Q_{i,r}$ does the following.

• If $\mathsf{id} < r$:

  ∘ For each $j$ from 1 to $n$, compute $\rho_j \leftarrow F_{K_j}(\mathsf{id})$ and $x_j \leftarrow \mathsf{Sample}(j, 1, 2, \ldots, j-1, x_1, \ldots, x_{j-1})$, where $\rho_j$ is the randomness used in Sample.

• If $\mathsf{id} \ge r$:

  ∘ For each $j$ from 1 to $\Pi^{-1}(i)$, compute $\rho_{\Pi(j)} \leftarrow F_{K_{\Pi(j)}}(\mathsf{id})$ and $x_{\Pi(j)} \leftarrow \mathsf{Sample}(\Pi(j), \Pi(1), \Pi(2), \ldots, \Pi(j-1), x_{\Pi(1)}, \ldots, x_{\Pi(j-1)})$, where $\rho_{\Pi(j)}$ is the randomness used in Sample.

• Output $x_i$.

Figure A-3: Switching from one ordering to another

A.1.2

$\mathcal{H}_2$: Removing unnecessary keys

In the third hybrid, we give to each program only the keys that are necessary to compute the output. Here the adversary receives $\mathcal{O}(P''_i)$ for $i \in S$, and oracle access to $\mathcal{O}(P''_i)$ for any $i$, where $P''_i$ is defined as in Figure A-2.

Claim 8. For any adversary $A$ and set $S$, $A$ cannot distinguish between $\mathcal{H}_1$ and $\mathcal{H}_2$.

As in the hybrid step from $\mathcal{H}_{0,r,k}$ to $\mathcal{H}_{0,r,k+1}$, we are replacing obfuscations of programs with obfuscations of equivalent programs, so by a hybrid argument we can replace all the programs, or else we contradict the security of the IO scheme.

A.1.3

$\mathcal{H}_3$: Fresh-sampling oracle

In the fourth hybrid, we replace the oracle to the real scheme with a fresh-sampling oracle. Here the adversary receives $\mathcal{O}(P''_i)$ for $i \in S$, and oracle access to a fresh-sampling oracle.

Claim 9. For any adversary $A$ and set $S$, $A$ cannot distinguish between $\mathcal{H}_2$ and $\mathcal{H}_3$.

Proof. Suppose not. Note that the adversary $A$ has access to programs which contain only the PRF keys corresponding to the corrupted parties, and not any PRF keys corresponding to honest parties. We will contradict the security of the PRF. Our adversary $B$ samples the keys $K_{\Pi(1)}, K_{\Pi(2)}, \ldots, K_i$ himself, produces the obfuscated programs $\mathcal{O}(P''_i)$, and gives them to $A$. $B$ answers oracle queries by querying its own oracle to compute $F_{K_{\Pi(j)}}$ for the remaining keys, which is either a PRF on randomly chosen keys, or truly random. If it is the former, then $A$'s view corresponds to the first experiment; if the latter, then $A$'s view corresponds to the second experiment. Thus, $B$ distinguishes between the PRF output and random values with probability $n\delta$, a contradiction. □

Program $Q_{i,r,v}$

Input: id

Hardcoded: Puncturable PRF keys $K_1, \ldots, K_n$ punctured at $r$, party number $i$, permutation $\Pi$, hybrid number $r$

Instructions: On input id, the program $Q_{i,r,v}$ does the following.

• If $\mathsf{id} < r$:

  ∘ For each $j$ from 1 to $n$, compute $\rho_j \leftarrow F_{K_j}(\mathsf{id})$ and $x_j \leftarrow \mathsf{Sample}(j, 1, 2, \ldots, j-1, x_1, \ldots, x_{j-1})$, where $\rho_j$ is the randomness used in Sample.

• If $\mathsf{id} = r$:

  ∘ $x_i \leftarrow v$.

• If $\mathsf{id} > r$:

  ∘ For each $j$ from 1 to $\Pi^{-1}(i)$, compute $\rho_{\Pi(j)} \leftarrow F_{K_{\Pi(j)}}(\mathsf{id})$ and $x_{\Pi(j)} \leftarrow \mathsf{Sample}(\Pi(j), \Pi(1), \Pi(2), \ldots, \Pi(j-1), x_{\Pi(1)}, \ldots, x_{\Pi(j-1)})$, where $\rho_{\Pi(j)}$ is the randomness used in Sample.

• Output $x_i$.

Figure A-4: Hardcoding the value for one session

A.1.4

$\mathcal{H}_4$: Restoring the original programs

We have now replaced the oracle access to the real program with oracle access to a fresh-sampling oracle. However, in the previous hybrid, the adversary receives a different program ($P''_i$) than in the real scheme. Therefore, in the last hybrid, we switch the obfuscated programs back to the real ones. Here the adversary receives $\mathcal{O}(P_i)$ for $i \in S$, and oracle access to a fresh-sampling oracle.

Claim 10. For any adversary $A$ and set $S$, $A$ cannot distinguish between $\mathcal{H}_3$ and $\mathcal{H}_4$.

This is strictly weaker than going from $\mathcal{H}_0$ to $\mathcal{H}_3$, since if an adversary could distinguish these two experiments, it could also distinguish $\mathcal{H}_0$ and $\mathcal{H}_3$ by just ignoring