A Cognitive Protection System for the Internet of Things

Citation Siegel, Joshua and Sanjay Sarma. "A Cognitive Protection System for the Internet of Things." IEEE Security and Privacy 17, 3 (May 2019): 40 - 48 © 2019 IEEE

As Published http://dx.doi.org/10.1109/msec.2018.2884860

Publisher Institute of Electrical and Electronics Engineers (IEEE)

Version Author's final manuscript

Citable link https://hdl.handle.net/1721.1/126870

Terms of Use Creative Commons Attribution-Noncommercial-Share Alike Detailed Terms http://creativecommons.org/licenses/by-nc-sa/4.0/

A Cognitive Protection System for IoT

Joshua Siegel

Michigan State University Department of Computer Science and Engineering

Sanjay Sarma

Massachusetts Institute of Technology Department of Mechanical Engineering

Conventional cybersecurity neglects the Internet of Things’ physicality. We propose a “Cognitive Protection System” capable of using system models to ensure command safety while monitoring system performance, and develop and test a “Cognitive Firewall” and “Cognitive Supervisor.” This system is tested in theory and practice for three threat models.

1 IoT’s Unmet Security Needs

As the Internet of Things (IoT) grows by leaps and bounds fusing the digital and physical, it must address security challenges to assure safe, continued growth.1 To date, the proliferation of increasingly-connected devices has not been met with a commensurate increase in dependability and resilience. 2

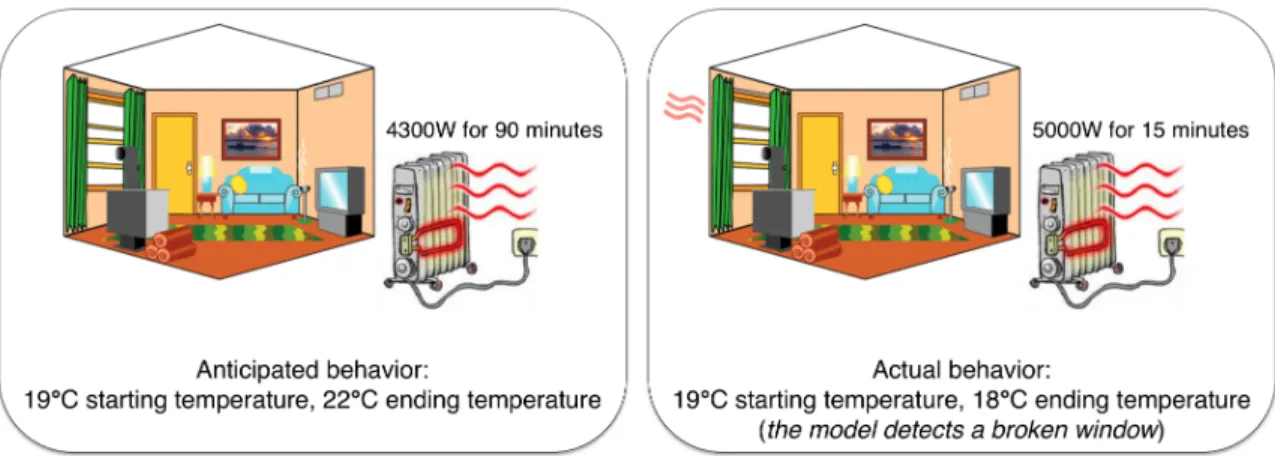

Today’s IoT includes overly-permissive or excessively-inhibited systems, a problem that grows as we put increasingly sensitive and valuable devices on the Internet. The resulting complexity makes IoT difficult to use, while exposing Internet infrastructure to unnecessary risks ranging from Distributed Denial of Service (DDoS) attacks 3 to power generation and distribution threats. 4,5 Yet, we welcome helpful IoT devices into our homes and workplaces – the same devices that compromise the integrity of critical systems. This paper considers common IoT security threats and presents the concept of a “Cognitive Protection System” comprising “Cognitive Firewalls” and “Cognitive Supervisors” using system models in conjunction with machine learning and artificial intelligence to identify anomalous behaviors and proactively test the impact of commands to improve the resilience and safety of the IoT. We explore the concept of a Supervisor in the context of a fictional home heating system, and develop a functioning Cognitive Firewall securing a real voice activated robotic arm.

In Section 2, we outline threats the Cognitive Supervisor and Firewall are designed to protect against, and in Section 3 we consider contemporary firewalls’ shortcomings. We explore the case for Cognitive Protection Systems in Section 4 and design and test a representative system in Sections 5-7. We consider implications, challenges and limitations in Sections 9 and 10, closing with a summary in Section 11.

2 Common IoT Security Threats

untrained employee or inaccurate data entry), and general or targeted (any device that will listen, versus a specific factory). Common vulnerabilities include hard-coded passwords, baked-in Telnet access, or out-of-date firmware allowing malicious actors to compromise entire networks. 1 A survey by the Open Web Application Security Project identified 19 attack surfaces and 130 exploits applicable to the Internet of Things. 2 These and other problems run rampant in incumbent systems due to a rush to market, and problems may endure long after a vendor has ceased operations. The outcomes of cyberattacks may include information leakage, denial of service, or physical harm. 1

IoT is increasingly applied to Industrial Control Systems (ICSs), which comprise a class of Operational Technology (OT) systems different in nature from conventional Information Technology (IT) in that they have restricted resources and functionality based on use case. Whereas IT might have access to abundant power and computing resources, IoT devices must be strategic in expending resources to support security or other critical operations. 6 At the same time, OT ICS attacks can result in physical damage, like that which occurred with Stuxnet or the Ukrainian Smart Grid. Despite these risks, ICS designers have historically been more concerned with system failures than with attack vulnerability, favoring stochastic failure modeling over active threat mitigation. 5 Only recently did NIST, CIS and IEEE issue security guidelines for critical IoT systems including those coupled to ICS, though these approaches lack enforcement 1 and may not address emerging threats.

We consider two attacks aimed at compromising system data (sensor readings and network connectivity) and control integrity (actuation), and the scenario where a system fails to perform as expected due to mechanical or other faults. We ignore privacy threats, but acknowledge that this is a critical area of ongoing research.

Data threats occur when information flow compromises a protected system. In one case, multiple requests query information simultaneously to deny legitimate information requests. This could result in service interruption or costly, heightened resource consumption. In another case, a compromised device could become part of a DDoS attack targeting a remote endpoint. A third category of system data threat involves leaking sensitive information to unauthorized parties. For example, a device monitoring critical infrastructure could divulge sensitive data to an adversary that could be exploited for financial, reputational or other harm or gain.

Control threats include acting upon (un)authorized commands that could result in a dangerous situation, high costs, or compromised mission objectives. These attacks cause a system to exceed safe operational limits or limits that conflict with its objectives. Such attacks may originate externally or internally, from illicit agents, “evil maids” with access to legitimate credentials, or trusted users who are untrained or who enter data improperly.

System threats result from poor maintenance, mechanical faults, or improper data reporting. Such faults could indicate intentional tampering or accidental failures due to age or component wear.

3 Prior Art

previously-learned signatures 7. Typical firewalls protect against data leakage, network traffic issues, and unauthorized communication interactions. In the IoT, firewalls must be equally concerned with the potential for physical consequences.

A firewall’s policies determine its efficacy. However, the most effective attacks are unpredictable, and interdependent rules are difficult to adapt. Even for non-IoT applications, experts struggle to define appropriate rules without human supervision 8 because firewalls lack “context.” 9 In particular, conventional firewalls may allow “known good” actors to send malicious commands to connected IoT devices undetected.

To meet the needs of distributed systems, firewalls must evolve from rule-based to autonomous and adaptive. 10 Shrobe et al. describe a similar system that generates and adapts new models to identify inconsistencies between observations and predictions using an “architectural differencer” to detect and respond to emerging and adapting attacks as they happen. 9

Building upon this work, Khan, et al. developed ARMET, a design methodology for industrial control systems based upon computing and physical plant behavior and using deductive synthesis to enforce functional, security and safety properties. ARMET operates as an efficient middleware comparing an application’s actual execution with its specification in realtime in order to detect computational attacks modifying application or system code or data values. 5 Underpinning this system is the concept of a Runtime Security Monitor (RSM), which compares expected application (digital) and executed implementation (physical) specifications to detect inconsistencies. 7 Additional prior art for reliable-and-secure-by-design ICS applications is explored in Khan, et al. (2018). 5

While ARMET and the RSM are ideally suited to identifying data or code manipulation, these systems may not adaptively learn rules based upon observing systems in context, and neither approach tests whether commands sent over trusted channels are benign. Extending the concept of ARMET to test commands in fully simulated environments would add a layer of robustness to the security system and protect against emerging threats.

There is an opportunity to apply artificial intelligence to learn rules adaptively, and to use computational resources not only to compare real and anticipated system models, but also to predict the implications of received commands on the future state of a system to determine if known or learned limits are violated. Utilizing models based upon control theory further enhances state-space data richness, allowing observability of a system’s hidden variables with reduced device resource expenditure compared to full sensor mirroring. Additionally, there is an opportunity for an IoT security system to autonomously learn models from time histories of observations, assisting in cases where IoT’s interconnectedness makes developing standard control theory models impractical.

We propose extending this concept to emulate human cognition, allowing firewalls to apply logic and reasoning to address unexpected scenarios. These firewalls could leverage Cloud resources to ensure scalability and availability. This approach would extend the “Data Proxy” architecture 11,12 for minimizing IoT’s resource use to build intelligent firewalls capable of detecting system anomalies and testing command safety prior to execution. Such “Cognitive” Supervisors and Firewalls could build upon other state-of-the-art techniques for credentialing, white- and black-listing commands and users, and (revocable) certification to ensure long-term performance.

4 The Case for Cognition

Imagine a home with a connected HVAC system, where the IoT affords the owner remote control of their heating and cooling. Unfortunately, a jilted lover with overlooked administrative credentials could do the same – setting the temperature to its lowest setting of 10°C in the hopes of cold weather freezing pipes and causing catastrophic damage. A Cognitive Firewall addresses this threat by using scalable computation, context information and system behavior models to test commands for benignness prior to their execution.

When a command is sent to the “Cloud home,” it is tested in context – what’s the weather, is the house occupied, and what’s my energy cost? A quick simulation finds disastrous results and the system rejects the command, prompting the homeowner to use a “two-factor override” to execute such a command. Crisis averted, and the ex-lover is caught. The same model could protect pets or limit electricity costs by using additional context from PIR sensors or webcams to prevent the heat from being turned up excessively.
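The "quick simulation" described above might be sketched as follows. This is a minimal illustration only, assuming a first-order thermal model of the home; the class and function names, the loss and heater coefficients, and the 12°C safety margin are all hypothetical and do not come from the system described in this paper.

```python
from dataclasses import dataclass


@dataclass
class HomeContext:
    """Context the Cloud home might consult (fields are hypothetical)."""
    outdoor_temp_c: float
    indoor_temp_c: float


def lowest_simulated_temp(setpoint_c, ctx, hours=24.0, dt_h=0.25,
                          loss_per_h=0.15, heater_gain_c_per_h=8.0):
    """Forward-simulate an assumed first-order thermal model and return
    the lowest indoor temperature reached over the horizon."""
    temp = ctx.indoor_temp_c
    lowest = temp
    for _ in range(int(hours / dt_h)):
        # Simple thermostat: the heater runs only below the setpoint.
        heating = heater_gain_c_per_h if temp < setpoint_c else 0.0
        # Heat leaks toward the outdoor temperature; the heater adds heat.
        temp += dt_h * (loss_per_h * (ctx.outdoor_temp_c - temp) + heating)
        lowest = min(lowest, temp)
    return lowest


def check_command(setpoint_c, ctx, min_safe_indoor_c=12.0):
    """Return (allowed, reason); a rejection would prompt a two-factor override."""
    lowest = lowest_simulated_temp(setpoint_c, ctx)
    if lowest < min_safe_indoor_c:
        return False, f"simulated minimum {lowest:.1f} C is below the safe margin"
    return True, "simulated outcome within limits"


cold_snap = HomeContext(outdoor_temp_c=-15.0, indoor_temp_c=20.0)
print(check_command(21.0, cold_snap))  # a routine setpoint
print(check_command(10.0, cold_snap))  # the malicious setpoint
```

The design point is that the decision depends on context (here, the outdoor temperature) and a simulated outcome rather than on who sent the command.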

The same model supports Cognitive Supervisors for detecting hardware, software, and system modeling faults. For example, a model of a furnace can learn its context within the home, creating a state-space model consisting of a measurable heater power input and ambient and interior temperature sensors. This “Cloud mirror” starts with a generic heater model and adapts over time.

One day at 7 A.M., the homeowner requests a temperature increase from 19°C to 21°C for a family lunch at noon. At 10:30 A.M., the control system commands 4.3 kW to raise the house’s temperature to the target 21°C within 90 minutes. Fifteen minutes after starting the heater, the controller commands an atypical 5 kW, and the temperature has fallen to 18°C. A Cloud duplicate of the heater sends the homeowner an alert indicating a fault in the heater, the power meter, the temperature sensor, the system model, or somewhere else in the house. The homeowner checks the system and realizes that the window is open (Figure 1). In this case, the supervisor identified anomalous behavior and was able to notify the homeowner in time to take corrective action. This is similar to ARMET and the concept of a Runtime Security Monitor, which detect data anomalies stemming from physical or cyber faults or attacks. 5,7
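The Supervisor's check in this scenario amounts to comparing the mirror's prediction with the measurement. The sketch below is an assumption-laden illustration, not the authors' model: the thermal coefficients, the 0°C outdoor temperature, and the 0.5°C tolerance are invented so that 4.3 kW yields roughly the 2°C rise in 90 minutes described above.

```python
def predict_temp(temp_c, outdoor_c, power_kw, minutes,
                 loss_per_min=0.002, gain_c_per_kw_min=0.014):
    """Step an assumed first-order thermal model forward one minute at a time."""
    for _ in range(minutes):
        temp_c += loss_per_min * (outdoor_c - temp_c) + gain_c_per_kw_min * power_kw
    return temp_c


def supervise(measured_c, predicted_c, tolerance_c=0.5):
    """Flag an anomaly when the measurement strays from the mirror's prediction."""
    residual = measured_c - predicted_c
    return abs(residual) > tolerance_c, residual


# Fifteen minutes into heating at 4.3 kW, starting from 19 C (outdoor 0 C assumed).
expected = predict_temp(19.0, outdoor_c=0.0, power_kw=4.3, minutes=15)
anomalous, residual = supervise(measured_c=18.0, predicted_c=expected)
print(f"expected {expected:.2f} C, residual {residual:.2f} C, anomalous={anomalous}")
```

A residual alone cannot say whether the heater, a sensor, the model, or an open window is at fault, which is why the alert in the scenario lists all of them.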

The shift in security-related processing from the local device to a remote server affords constrained, embedded systems the same protection as a powerful general-purpose system at the expense of latency. In this manner, substantial computing power may help develop a system adaptive to emerging threats that would be infeasible to operate within the constraints of a low-cost IoT device. Moving security to a remote endpoint further allows the security to be maintained over the device’s entire lifetime, even when future security demands outstrip the device’s originally-envisioned capabilities.

These concepts may be applied to protecting and supervising a range of physical and digital systems.

5 Cognitive Firewall and Supervisor Design

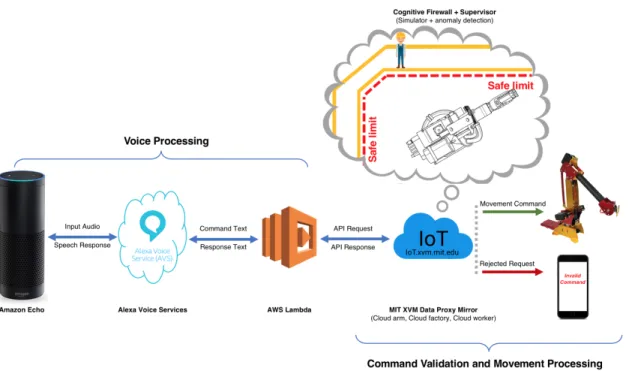

We propose building Cognitive Supervisors and Firewalls using the architecture in Siegel (2017). 12 In this approach, all IoT systems and applications use the Cloud or other scalable computation as an intermediary among requests, commands, and responses. Systems are mirrored using Cloud-hosted Data Proxies, statistical or physical state-space models that take sparse sensor data and create a rich digital duplicate. All data requests are handled entirely in a hardened Cloud or other remote endpoint, where commands are tested against Data Proxy models for safety before being sent to their respective devices.

This approach minimizes constrained devices’ attack surface to one protectable, trusted channel, helping ensure endpoint authenticity. Moving data from devices to a remote endpoint further allows system mirroring efforts and endpoint security to leverage scalable computation, as well as enables data uploaded from a device once to be reused by multiple applications, conserving resources at the constrained node. Endpoint computation then supports the Firewall’s forward simulation, system model adaptation, limit learning, and the Supervisor’s anomaly detection.

Each monitored system comprises:

1. Sensors and sensor data

2. Actuators and control signals

3. Network access

Each system has its own Data Proxy, and multiple Proxies are aggregated in the Cloud to build context (similar to CloudThink’s “Avacar” concept of mirroring vehicles as part of a larger transportation network mirror 13). These Proxies consist of:

1. Sparse input data

2. Generic system model including state-space representation and physical or statistical relationships between sensors, actuators, and environment data

3. Model tuning parameters for adapting the general model to a specific instance

4. Data-rich digital mirror hosted on scalable computation

5. Safe, interactive endpoint

6. Environment and context data (provided by other systems’ Data Proxies)

7. Known and learned limits which may be hard limits (e.g. physical constraints) or soft limits (e.g. something that might cause unintended consequences)
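The seven components listed above could be grouped into a single structure along the following lines. This is only an illustrative sketch: the class names, field names, and the toy heater model are hypothetical and are not taken from the Data Proxy implementation cited in this paper.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

State = Dict[str, float]


@dataclass
class Limit:
    """A known or learned bound on one state variable."""
    variable: str
    low: float
    high: float
    hard: bool  # True for physical constraints, False for soft limits

    def violated_by(self, state: State) -> bool:
        value = state.get(self.variable)
        return value is not None and not (self.low <= value <= self.high)


@dataclass
class DataProxy:
    sparse_inputs: State                                # 1. sparse input data
    model: Callable[[State, State], State]              # 2. generic system model
    tuning: State                                       # 3. instance-specific tuning
    context: State = field(default_factory=dict)        # 6. context from other Proxies
    limits: List[Limit] = field(default_factory=list)   # 7. known and learned limits

    def mirror(self) -> State:
        """4. Expand sparse inputs and context into a richer state estimate."""
        return self.model({**self.sparse_inputs, **self.context}, self.tuning)

    def query(self, variable: str) -> float:
        """5. Answer a request from the mirror rather than from the device."""
        return self.mirror()[variable]

    def violations(self) -> List[Limit]:
        """Return every limit the mirrored state currently violates."""
        state = self.mirror()
        return [lim for lim in self.limits if lim.violated_by(state)]


def heater_model(inputs: State, tuning: State) -> State:
    """Toy model: estimate an unmeasured heat-loss rate from two temperatures."""
    loss_kw = tuning["loss_kw_per_c"] * (inputs["indoor_c"] - inputs["outdoor_c"])
    return {**inputs, "heat_loss_kw": loss_kw}


proxy = DataProxy(
    sparse_inputs={"indoor_c": 20.0},
    model=heater_model,
    tuning={"loss_kw_per_c": 0.2},
    context={"outdoor_c": -5.0},
    limits=[Limit("heat_loss_kw", 0.0, 4.0, hard=False)],
)
print(proxy.query("heat_loss_kw"), [lim.variable for lim in proxy.violations()])
```

Here the heat-loss rate is never measured by the device; it exists only in the mirror, which is the sense in which sparse data becomes a richer duplicate.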

6 System Assumptions

To develop and test a Cognitive Protection System, we had to define trusted and untrusted elements. We consider the following as trusted elements:

1. System manufacturers and installers - we assume the system is designed well and not compromised during setup

2. Infrastructure providers – we assume the network is secure from man-in-the-middle attacks through the use of end-to-end encryption and that communications are not modified in transit. We further assume that server infrastructure is up-to-date, properly configured, and provided by trusted vendors

4. Devices – we assume devices to be trusted, but not necessarily trustworthy (for example, we could use a Physically Unclonable Function 14 to validate a device is authentic, but the device is still susceptible to an evil maid attack). We further assume that the CPS can be executed in a strongly protected environment like Intel’s SGX or ARM’s TrustZone

5. Device manufacturers – we assume device manufacturers to follow industry best practices, and to have thoroughly vetted and monitored their supply chain. We take no position on whether the manufacturer or backend provider collects only the data appropriate for their needs, as this falls outside the scope of the manuscript

In all cases, we assume that trusted elements are built upon widely-accepted technologies and security best practices.

The following are untrusted:

1. Third parties - These individuals or organizations may be malicious attackers

2. Applications - End-use applications could be compromised intentionally, by human error, or by software bugs

3. Third-party application developers - Malicious developers could design software to undermine system security or to steal data

4. Tailored system models - Factory, plant, or equipment models are adapted using data from a device that itself may be compromised

5. Device owners - A device owner could inadvertently misconfigure or attempt to hack their own device, e.g. to get a replacement under warranty or to file a lawsuit. Any user entering data incorrectly may be at risk of sending potentially injurious commands to their own system

In the next section, we build a Cognitive Protection System and test it against intentional and accidental, targeted and untargeted attacks on control integrity and system data.

7 Testing a Cognitive Protection System

To demonstrate the Cognitive Firewall, we developed a voice-controlled robotic arm. Voice control embodies an important characteristic of the Internet of Things: the ability to reach out and control something physical at a distance. Whether a worker is disabled, far from a machine, or has his or her hands full, voice services increase convenience, efficiency and reach by offering an extra (digital) hand.

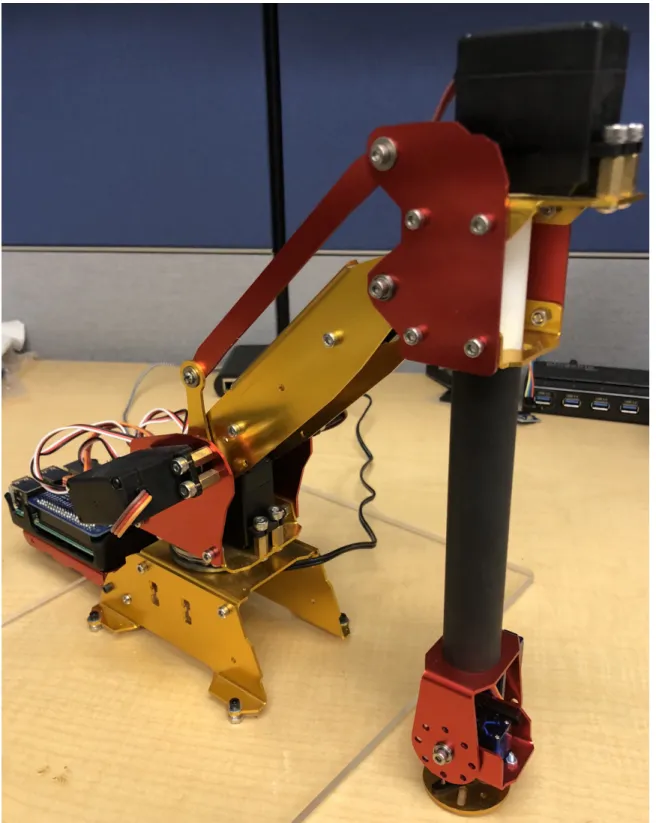

Our demonstration system uses a six degree of freedom robot arm and a Raspberry Pi 3 microcomputer with a 16 channel PWM hat servo interface. The arm is shown in Figure 2. We developed an application for the Amazon Echo Dot using Amazon Web Services to process voice input. Amazon Voice Services parses voice input and sends data to Amazon Lambda, where a script sends appropriate movement requests to a Data Proxy server via an HTTPS request. The server runs a Cognitive Firewall to test commands in the context of a reconfigurable factory, and passes safe commands on to the arm using MQTT. Administrators are notified of rejected commands via e-mail.

The Cognitive Protection server is a virtual machine that can be hardened and scaled as necessary to ensure maximal security, shifting the burden of protection away from the embedded systems within the robot arm or the Amazon Echo. The architecture is shown in Figure 3.

This arm uses a state-space model manually tailored to reflect the arm’s characteristics such as end-effector size and backlash (though AI could automate such adaptation). We also created a simple Cloud factory model with known safe walkway boundaries (placing these limits in the Cloud increases malleability relative to embedded software or mechanical endstops). We tested the Cognitive Firewall’s ability to protect against control threats by speaking safe and unsafe commands.

We first requested 90 degrees counterclockwise rotation. The Cognitive Firewall simulated the movement in the Cloud and found that the X/Y coordinates of the end-effector would cross the plane of a walkway, posing a safety violation. The command was rejected, and notification was sent to an administrator. Next, we sent a command requesting 30 degrees counterclockwise rotation. The Cognitive Firewall simulated the result and found it to be safe, and therefore relayed it to the arm with minimal latency (several milliseconds, orders of magnitude shorter than the voice processing). These scenarios are reflected in Figure 4.
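A check of this kind can be sketched with planar geometry. The snippet below is a simplified illustration, assuming the arm is reduced to a single rotating link and the walkway to a line of constant y; the reach, the boundary position, and the function names are hypothetical and do not reflect the actual arm model.

```python
import math


def end_effector_xy(base_angle_deg, reach_m):
    """Planar position of the end-effector for a given base rotation."""
    angle = math.radians(base_angle_deg)
    return reach_m * math.cos(angle), reach_m * math.sin(angle)


def rotation_is_safe(current_deg, delta_deg, reach_m=0.30,
                     walkway_y_m=0.20, step_deg=1.0):
    """Sweep the commanded rotation in small steps and reject it if the
    end-effector ever reaches the (assumed) walkway boundary."""
    steps = max(1, math.ceil(abs(delta_deg) / step_deg))
    for i in range(steps + 1):
        angle = current_deg + delta_deg * i / steps
        _, y = end_effector_xy(angle, reach_m)
        if y >= walkway_y_m:
            return False
    return True


print(rotation_is_safe(0.0, 90.0))  # sweeps across the walkway boundary
print(rotation_is_safe(0.0, 30.0))  # stays clear of it
```

Checking intermediate angles, not just the final pose, matters because a trajectory can cross a boundary even when its endpoint is safe.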

Using the servo’s internal potentiometer or a separate encoder, a Cognitive Supervisor can detect a system fault. If the arm were commanded to move to a particular position but the encoder did not match the anticipated angle, we could detect a system anomaly such as stripped gears, a sensor inaccuracy such as lost encoder pulses, an actuator error such as insufficient input voltage, or a model anomaly such as an incorrect pulse-width-to-position relationship. Such a model could mitigate system threats as shown in Figure 5. In this manner, the Cognitive Supervisor can detect physical system faults, similarly to stochastic fault modeling, as well as false data injection, like ARMET.

Though these are simple models, there is a role for complex models and adaptive limit learning when developing more robust Cognitive Protection Systems. For example, the use of Inverse Kinematics could allow the arm to check for self-intersections, collisions, or to test its center of gravity along a trajectory prior to executing a command. Haptic sensors could provide feedback from the manipulated object, so we could learn the relationship between input current and crushing an egg – even learning the relationship robustly enough to eliminate the need for haptic sensors.

The learned limits may be “hard” or “soft.” An example hard limit is one beyond which a device collides with its environment, while a soft limit is one beyond which the system would appear to work normally, but in practice might experience difficulty (e.g. moving a stepper motor too quickly could cause the motor to lose steps, resulting in lost position tracking in an open-loop system).

This same Cognitive Protection System can respond to data threats similarly to conventional firewalls. The Cognitive Protection server can monitor the traffic flow to and from the arm and learn typical command and data sharing frequency, common requestors and recipients, and safe maximum data rate limits. An analogous cognitive model can be constructed for the network and the physical system to detect and mitigate data leakage. For example, data sent exactly once per day to an external device from multiple devices within a factory could indicate that the devices have been compromised as part of a botnet.
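As one illustration of such a learned network check, the sketch below flags destinations that several devices contact at suspiciously regular intervals. It is an assumption rather than the detection logic used in our demonstration: the event format, the thresholds, and the example addresses are hypothetical.

```python
from collections import defaultdict
from statistics import pstdev


def suspicious_destinations(events, min_devices=3, max_jitter_s=60.0):
    """events: iterable of (device_id, destination, unix_time_s) tuples.
    Returns destinations that at least min_devices contact on a near-fixed period."""
    times = defaultdict(list)
    for device, destination, t in events:
        times[(destination, device)].append(t)

    regular_senders = defaultdict(set)
    for (destination, device), stamps in times.items():
        stamps.sort()
        gaps = [later - earlier for earlier, later in zip(stamps, stamps[1:])]
        # Near-identical gaps between transmissions suggest a scheduled beacon.
        if len(gaps) >= 2 and pstdev(gaps) <= max_jitter_s:
            regular_senders[destination].add(device)

    return {dest for dest, devices in regular_senders.items()
            if len(devices) >= min_devices}


DAY_S = 86400
events = [(f"dev{i}", "203.0.113.9", 1000 + i + d * DAY_S)
          for i in range(3) for d in range(4)]
events += [("dev0", "historian.local", t) for t in (0, 100, 5100, 95100)]
print(suspicious_destinations(events))
```

Legitimate telemetry can also be periodic, so in practice a flag like this would feed the Supervisor as one signal among several rather than trigger a block on its own.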

The arm’s Cognitive Protection System has demonstrated our ability to protect against the system, data and control threats while protecting against our untrusted elements. It protects against third parties, applications and their developers, and device owners by proactively testing each command in a trusted computing environment to ensure it is safe and that the system is not performing anomalously.

If we assume that the tailored models operate within a trusted private Cloud, we have solved for all of the challenges we identified. If we assume that the tailored model might be untrusted, the Cognitive Supervisor can provide an additional degree of resilience by detecting anomalous behavior and raising an alarm when the model and system don’t perform as expected.

While the Supervisor uses this model to detect faults, complex models require sophistication to corrupt. With a larger number of included data sources and strong interdependencies, multiple sensors, actuators, and external context models (from other Data Proxies) would need to be compromised to avoid detection. This would require a large, coordinated attack and a deep understanding of the system model’s behavior. We can reduce this risk further by storing the adapted model’s provenance in a distributed ledger and creating a digest of signed software changes.

This concept could be extended to support consensus voting across multiple models, trusting elected leaders more, or providing voting mechanisms that could be weighted by proof of stake or proof of work. In this manner, manufacturers’ original models could be trusted more than end-user models, and some end-user models may be trusted more than others.
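A weighted vote of this kind could be as simple as the following sketch. The weights, the threshold, and the function name are hypothetical; how weights would be derived from stake, work, or elections is left open here, as it is in the text.

```python
def weighted_verdict(votes, threshold=0.5):
    """votes: list of (weight, says_safe) pairs, one per model.
    A command passes only if the weighted share of 'safe' votes exceeds threshold."""
    total = sum(weight for weight, _ in votes)
    if total <= 0:
        raise ValueError("at least one model with positive weight is required")
    safe = sum(weight for weight, says_safe in votes if says_safe)
    return safe / total > threshold


# A manufacturer's model (weight 3) outvotes two end-user models (weight 1 each).
votes = [(3.0, False), (1.0, True), (1.0, True)]
print(weighted_verdict(votes))
```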

8 Extensibility

The concept of a Cognitive Protection System has wide applicability. It can be used to monitor the physical world (home heaters and industrial robots) and the digital world (network traffic and data leakage). However, the concept extends beyond the IoT. For example, a Cognitive Protection System could be built into vehicle networks to improve local network safety and system supervision (mitigating car hacking risks while replacing antiquated On-Board Diagnostics with an adaptive diagnostic system). Cognitive Protection might find other homes in the power grid or transportation infrastructure.

This approach can be extended to mirror human cognition more broadly. For example, a human touching a hot stove pulls his or her hand away by reflex, well before the brain registers pain. A similar blend of local and remote digital Cognition would allow connected devices to protect themselves from time-sensitive, high risk events with low latency, while monitoring for larger trends where there is time for (multi-device) data aggregation. Where cost and power constraints are relaxed, a Cognitive Firewall may run locally and offline, for example within the secure enclave of a powerful gateway device. While computation resources are less scalable than with a Cloud-based approach, latency can be nearly instantaneous. This would allow classes of mission-critical faults to be detected and mitigated before sensor data arrives at a remote server for analytics and decision making, even if connectivity is interrupted.

Note that both remote and local Cognitive Firewalls can monitor non-physical states and their impact on physical conditions. For example, a repeated request for a device’s GPS location will drain that device’s battery quickly. A context-aware Cognitive Firewall may detect that the device is moving slowly and that high-frequency position data is unnecessary, blocking the command from being sent to the GPS and conserving battery, preserving device availability for a longer duration.
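The GPS example might be expressed as a context-dependent rate limit. The sketch below is an assumption, not a description of an existing implementation: the 50 m accuracy target, the interval bounds, and the function names are hypothetical.

```python
def min_fix_interval_s(speed_m_s, accuracy_m=50.0, floor_s=1.0, ceiling_s=600.0):
    """Longest wait between fixes that keeps position drift within accuracy_m."""
    if speed_m_s <= 0:
        return ceiling_s
    return max(floor_s, min(ceiling_s, accuracy_m / speed_m_s))


def allow_gps_request(now_s, last_fix_s, speed_m_s):
    """Forward a position request only if the cached fix is too old for this speed."""
    return now_s - last_fix_s >= min_fix_interval_s(speed_m_s)


print(allow_gps_request(now_s=10.0, last_fix_s=0.0, speed_m_s=0.5))   # slow: blocked
print(allow_gps_request(now_s=10.0, last_fix_s=0.0, speed_m_s=25.0))  # fast: allowed
```

A blocked request could still be answered from the Data Proxy's last known position, so the requesting application is served without waking the GPS.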

Future CPSs could probabilistically assess risk and reward to optimally know when to involve humans in the loop, or to make bold decisions autonomously. Richer data would allow models to shift from reactive toward proactive, and the use of AI would allow such systems to identify trends that humans might not be able to identify, vocalize, or formalize as rules.

This Cognitive approach could also be used as the basis of a trust model for connected devices. By observing systems and related actors over time, we could develop trustworthiness scores that would carry from service to service, or attribute more or less value to particular data sources.

9 Implications

With heightened security, IoT’s adoption will grow to include more devices and device categories, bringing with them the potential for new applications and services. Pervasive connectivity will allow IoT to reach its best embodiment, with connectivity and sensing migrating toward mundane “edge” devices that have subtle but significant impacts at scale. The use of Cognitive Protection Systems in a critical fraction f of devices may also bring “herd immunity” to the IoT, where beyond a critical fraction of protected devices, attacks will suffer limited reach and impact. Small changes in initial prevention may be the difference between a single factory being taken down and a global botnet.

10 Challenges and Limitations

Developing Cognitive Protections is not without challenges. Creating system models is complex with or without the use of AI, and developing comprehensive, generalized models with the ability to be “tuned” to specific object instances will require research on what parameters to duplicate and how best to adapt in light of new sensor data and system use cases. These models must be developed in a manner so as to be usable in realtime, with high availability, and low latency.

Real-time systems have a time period T during which the determination must be made whether the system is operating normally or abnormally. 7 T varies by application, and for small T, a Cloud-based CPS may not meet requirements.

fault-tolerance that determines whether or not the use of a CPS is appropriate. Architectural changes, such as running a local CPS instance, can reduce the system’s latency enough to support hard-realtime requirements common to IoT and ICS. To a lesser extent, the use of low-latency network technologies and powerful computation can reduce the fault detection time for a remote system. In both cases, additional computation enables a richer simulated environment, longer-timescale simulation protections, and increasingly adaptive learning.

Another complicating factor is that if any underlying assumptions are invalid, the CPS may suffer faults of varying severity. For example, if the system, device, or infrastructure manufacturers or maintenance companies are unscrupulous, trusted elements may be physically modified or have backdoors installed, undermining system performance. If the network is compromised, false data may be injected, and the system may act correctly in response to invalid data (increasing the number of measured parameters increases system resilience to this type of attack; the more parameters that are monitored, the more relationships the system learns – meaning an adversary must spoof multiple values appropriately to escape detection). If the Cloud is corrupted or if the device is modified to connect to a non-authentic Cloud, the CPS breaks down and can be used as a honeypot to attack connected devices. If the base system models are not accurate, the system will perform poorly until it learns the true system parameters, or until the model is replaced.

In protecting the adapted models, we must develop software to assure model provenance, validating that the model is created by trusted actors on trusted hardware, or cultivate the ability to disregard model contributions from untrusted actors. The use of a digest would allow proprietary models to be obfuscated while still allowing code to be checked against a trusted register, creating a chain of software accountability.
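The digest check could look like the sketch below, which uses SHA-256 from Python's standard library. The choice of hash, the in-memory register, and the function names are assumptions for illustration; a deployed register might instead live in a distributed ledger.

```python
import hashlib


def model_digest(model_bytes: bytes) -> str:
    """Hex SHA-256 digest of a serialized model; the model itself stays private."""
    return hashlib.sha256(model_bytes).hexdigest()


def is_registered(model_bytes: bytes, trusted_register: set) -> bool:
    """Accept a model only if its digest appears in the trusted register."""
    return model_digest(model_bytes) in trusted_register


# The register holds digests only, so proprietary model contents are not exposed.
register = {model_digest(b"heater-model-v1")}
print(is_registered(b"heater-model-v1", register))
print(is_registered(b"heater-model-v1-tampered", register))
```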

In developing and deploying Cognitive Firewalls, we must consider the cost of such a system and the potential risks of leaving these devices unprotected. We can frame this problem by considering the cost of security relative to the fraction of connected devices we would otherwise expect to be compromised and evaluating the risk – financial and otherwise – of such faults.

The best solution for improving the CPS’s performance and resilience will be to implement such solutions in the real world, which differs wildly from the idealized world. By allowing these systems to “experience” the real world firsthand, researchers and the system itself will learn to adapt to emerging and unanticipated threats. Additionally, the learned models can then be deployed across multiple systems, helping to create a cognition-based digital herd immunity for the IoT.

11 Conclusion

The Cognitive Protection System brings a human element to the IoT, addressing significant concerns about data, security, and system threats. We have demonstrated a working, real-world implementation and identified other application areas with the potential to benefit.

Even minimal increases in the security of connected systems have significant impacts due to the IoT’s network effect, and reducing the perception of insecurity will drive adoption of connected devices and services. Using scalable Cloud resources and cognitive models to protect devices and services will go a long way toward creating a world with pervasive connectivity, and will help push IoT downstream into more mundane and increasingly constrained devices.

Acknowledgments

We thank Shane Pratt (MIT) and Divya Iyengar for their work assembling the robotic arm and developing and testing the voice interface software that made building a Cognitive Protection System possible.

References

1. U.S. Government Accountability Office, “Technology assessment: Internet of things: Status and implications of an increasingly connected world,” U.S. Government Accountability Office, Tech. Rep., May 2017.

2. M. E. Morin, “Protecting networks via automated defense of cyber systems,” Naval Postgraduate School Monterey United States, Tech. Rep., 2016.

3. S. Sarma and J. Siegel. Bad (Internet of) Things. (2016, November). [Online]. Available: https://www.computerworld.com/article/3146128/internet-of-things/bad-internet-of-things.html

4. C.-C. Sun, C.-C. Liu, and J. Xie, “Cyber-physical system security of a power grid: State-of-the-art,” Electronics, vol. 5, no. 3, 2016. [Online]. Available: http://www.mdpi.com/2079-9292/5/3/40

5. M. T. Khan, D. Serpanos, and H. Shrobe, “ARMET: Behavior-based secure and resilient industrial control systems,” Proceedings of the IEEE, vol. 106, no. 1, pp. 129–143, 2018.

6. J. Cañedo, A. Hancock, and A. Skjellum, “Trust management for cyber-physical systems,” HDIAC Journal, vol. 4, no. 3, pp. 15–18, 2017.

7. M. T. Khan, D. Serpanos, and H. Shrobe, “A rigorous and efficient run-time security monitor for real-time critical embedded system applications,” in 2016 IEEE 3rd World Forum on Internet of Things (WF-IoT), Dec 2016, pp. 100–105.

8. H. Hu, G. J. Ahn, and K. Kulkarni, “Detecting and resolving firewall policy anomalies,” IEEE Transactions on Dependable and Secure Computing, vol. 9, no. 3, pp. 318–331, May 2012.

9. M. T. Khan, D. N. Serpanos, and H. E. Shrobe, “On the formal semantics of the cognitive middleware AWDRAT,” CoRR, vol. abs/1412.3588, 2014. [Online]. Available: http://arxiv.org/abs/1412.3588

10. Z. Yang, L. Qiao, C. Liu, C. Yang, and G. Wan, “A collaborative trust model of firewall-through based on cloud computing,” in The 2010 14th International Conference on Computer Supported Cooperative Work in Design, April 2010, pp. 329–334.

11. J. E. Siegel, “Data proxies, the cognitive layer, and application locality: enablers of cloud-connected vehicles and next-generation internet of things,” Ph.D. dissertation, Massachusetts Institute of Technology, 2016.

12. J. E. Siegel, S. Kumar, and S. E. Sarma, “The future internet of things: Secure, efficient, and model-based,” IEEE Internet of Things Journal, vol. PP, no. 99, pp. 1–1, 2017.

13. E. Wilhelm, J. Siegel, S. Mayer, L. Sadamori, S. Dsouza, C.-K. Chau, and S. Sarma, “Cloudthink: a scalable secure platform for mirroring transportation systems in the cloud,” Transport, vol. 30, no. 3, pp. 320–329, 2015. [Online]. Available: https://doi.org/10.3846/16484142.2015.1079237

14. G. E. Suh and S. Devadas, “Physical unclonable functions for device authentication and secret key generation,” in 2007 44th ACM/IEEE Design Automation Conference, June 2007, pp. 9–14.

Figure 3: The flow of commands and data from the Amazon Echo through AWS/AVS to MIT XVM and into the robot arm.

Figure 4: The Cognitive Firewall simulates the arm in the context of a factory’s Data Proxy. We show the resulting physical and notification states.

Figure 5: The Cognitive Supervisor detects a mismatch between commanded and actual states, identifying a sensing, actuation, or modeling fault.

AT THE TIME OF SUBMISSION, JOSH SIEGEL (jsiegel@msu.edu) WAS A RESEARCH SCIENTIST AT THE MASSACHUSETTS INSTITUTE OF TECHNOLOGY (MIT). HE IS NOW AN ASSISTANT PROFESSOR IN COMPUTER SCIENCE AND ENGINEERING AT MICHIGAN STATE UNIVERSITY (MSU) AND THE LEAD INSTRUCTOR FOR MIT'S DeepTech BOOTCAMP. HE RECEIVED PH.D., S.M., AND S.B. DEGREES IN MECHANICAL ENGINEERING FROM MIT. JOSH AND HIS AUTOMOTIVE COMPANIES HAVE BEEN RECOGNIZED WITH ACCOLADES INCLUDING THE LEMELSON-MIT STUDENT PRIZE AND THE MASSIT GOVERNMENT INNOVATION PRIZE. HE HAS MULTIPLE ISSUED PATENTS, HAS PUBLISHED IN TOP SCHOLARLY VENUES, AND HAS BEEN FEATURED IN POPULAR MEDIA. DR. SIEGEL'S ONGOING RESEARCH DEVELOPS ARCHITECTURES FOR SECURE AND EFFICIENT CONNECTIVITY, APPLICATIONS OF PERVASIVE SENSING TO VEHICLE DIAGNOSTICS, AND NEW APPROACHES TO AUTONOMOUS DRIVING.

SANJAY SARMA (sesarma@mit.edu) RECEIVED A BACHELOR'S DEGREE FROM THE INDIAN INSTITUTE OF TECHNOLOGY, A MASTER'S DEGREE FROM CARNEGIE MELLON UNIVERSITY, AND A PH.D. DEGREE FROM THE UNIVERSITY OF CALIFORNIA AT BERKELEY. HE IS THE FRED FORT FLOWERS (1941) AND DANIEL FORT FLOWERS (1941) PROFESSOR OF MECHANICAL ENGINEERING AND THE VICE PRESIDENT FOR OPEN LEARNING AT MIT. HE CO-FOUNDED THE AUTO-ID CENTER AT MIT AND DEVELOPED MANY OF THE KEY TECHNOLOGIES BEHIND THE EPC SUITE OF RFID STANDARDS NOW USED WORLDWIDE. HE WAS ALSO THE FOUNDER AND CTO OF OATSYSTEMS. HE HAS AUTHORED ACADEMIC PAPERS IN COMPUTATIONAL GEOMETRY, SENSING, RFID, AUTOMATION, AND CAD, AND IS A RECIPIENT OF NUMEROUS AWARDS FOR TEACHING AND RESEARCH.
