Building Note-Taking Tools to Support Learning in Virtual Reality

by

Erin Hong

B.S., Massachusetts Institute of Technology (2017)

Submitted to the Department of Electrical Engineering and Computer Science

in partial fulfillment of the requirements for the degree of

Master of Engineering in Electrical Engineering and Computer Science

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

June 2018

© 2018 Erin Hong. All rights reserved.

The author hereby grants to M.I.T. permission to reproduce and to distribute publicly paper and electronic copies of this thesis document in whole or in part in any medium now known or hereafter created.

Author . . . .

Department of Electrical Engineering and Computer Science

May 25, 2018

Certified by . . . .

Pattie Maes

Professor of Media Arts and Sciences

Thesis Supervisor

Accepted by . . . .

Katrina LaCurts

Chair, Master of Engineering Thesis Committee

Building Note-Taking Tools to Support Learning in Virtual Reality

by

Erin Hong

Submitted to the Department of Electrical Engineering and Computer Science on May 25, 2018, in partial fulfillment of the requirements for the degree of

Master of Engineering in Electrical Engineering and Computer Science

Abstract

With the advent of virtual reality (VR) technology, education and learning applications can leverage the fully immersive medium to create more hands-on, sophisticated, and engaging interactions. Alongside efforts to build virtual learning environments, note-taking can be reimagined in a virtual space where visual and auditory experiences can be captured and re-experienced. We identify content capture, review, and modification as the phases and affordances of note-taking that enable self-regulated learning. We present a first-principles analysis to identify design requirements for virtual note-taking that satisfy these three key functions. We then discuss our approach to overcoming technical and design challenges as we detail the implementation of a standalone note-taking module, MemoryTree.

Thesis Supervisor: Pattie Maes

Acknowledgments

This work would not have been possible without the strong mentorship and support of my two advisors, Dr. Pattie Maes and Dr. Scott Greenwald. Thank you specifically for supporting me in my career and academic interests through your time, energy, and many efforts to shape me into a better, more curious learner.

I would also like to thank my collaborators, Wiley Corning and Annie Wang, for their amazing work on developing Electrostatic Playground. To Judith Sirera, Hisham Bedri, and Angela Vujic, thank you for the hysterical conversations, the memories, and the research mentorship I gained while at MIT. I am grateful to everyone in the Fluid Interfaces group at the MIT Media Lab who invested in me, challenged me to ask the harder questions, and pushed me to imagine what did not yet exist.

Finally, I thank my loving and supportive mom, dad, and sister, who have supported my career goals by pushing me forward during my most challenging times. My heartfelt thanks go to my irreplaceable, unforgettable, and absolutely hilarious roommates: Deepti Raghavan, Shirin Shivaei, and Suma Anand. I thank you all, the “MEng Hommies”, for the shared and deeply cherished highs and lows of our Master of Engineering experience.

Contents

1 Introduction

1.1 Learning and Note-Taking

1.2 Electrostatic Playground

1.3 MemoryTree

2 Related Work

2.1 Background

2.1.1 Note-Taking

2.1.2 Virtual Reality

2.2 Virtual Learning Environments

2.3 Immersive Interfaces

2.3.1 Second Life and Sansar

2.4 Note-Taking: Pen vs. Keyboard

3 Electrostatic Playground

3.1 Introduction

3.2 System Overview

3.2.1 Selector Tool

3.2.2 Play and Pause Tool

3.2.3 Object Menu

3.2.4 Property Editing

4.1 Introduction

4.2 MemoryTree Illustration

4.3 MemoryTree Functions

4.4 Encoding

4.5 3D Recordings

4.6 3D Snapshots

4.7 Recording Camera Tool

4.8 3D Tree

4.8.1 Tree Comparison Use Case Scenario

4.9 Replaying

4.10 Modification

5 MemoryTree Implementation

5.1 Introduction

5.2 MemoryTree Behaviour

5.2.1 Record

5.2.2 Replay

5.3 Tree Data Hierarchy

5.3.1 State Nodes

5.3.2 Branches

5.3.3 Branch Network

5.3.4 Tree

5.4 MemoryTree Component Overview

5.4.1 Recording System

5.4.2 State Capture

5.4.3 Tree Drawing

5.4.4 Recording Selection

5.4.5 Replay Sequence Generation

5.4.6 Replay Module

6 Evaluation

6.1 Testing Method

7 Future Work

7.1 Scene Setup

7.2 Recording Selection Granularity

7.3 Comparison Tools

7.4 Tree Parameters

7.5 Recording Deletion

7.6 Annotation

List of Figures

2-1 The HTC Vive Head Mounted Display comes with two controllers. The Vive tracking uses two base stations that externally track position and orientation of the headset and two controllers. . . 25 2-2 The Samsung Odyssey also comes with two controllers. The Odyssey

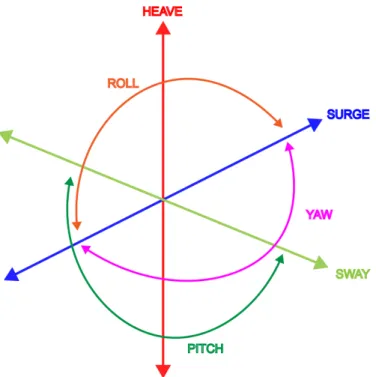

headset and controllers use internal tracking mechanisms with cameras placed on the headset. . . 25 2-3 This figure illustrates the six total axes that can be used in either 3DOF

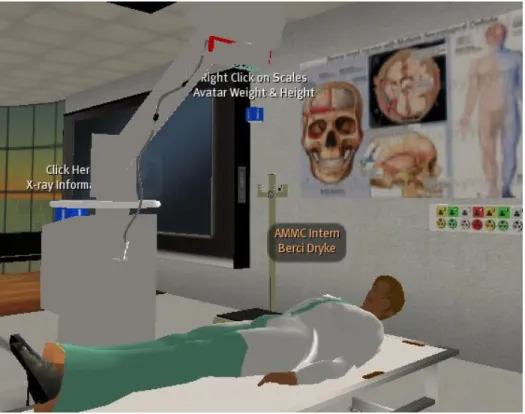

technology or 6DOF. 3DOF technology allows the user to move along three rotational axes known as roll, pitch, and yaw. 6DOF includes the rotational axes in addition to surge, heave, and sway. . . 26 2-4 A scene from Virtual Neurological Education Centre illustrates how

users in the virtual space learned about and embodied neurological diseases. . . 32

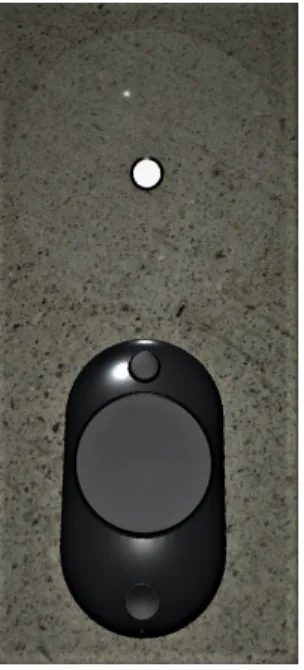

3-1 The selector tool, which maps to one of the two user controllers, allows the user to move objects as well as change object specific properties. . 36 3-2 The Play and Pause tool can take one of two modes: play or pause.

By default, the controller is in pause mode and looks like the right controller. When the user pressed on the touchpad, the controller’s indicator icon changes to the play icon. In play mode, the physics engine system is turned on and the charged objects move in response to the generated electric field. . . 37

3-3 Charge particles can have three various parities: negative, neural, or positive. . . 38 3-4 The magnetic dipole creates a magnetic field once it is introduced into

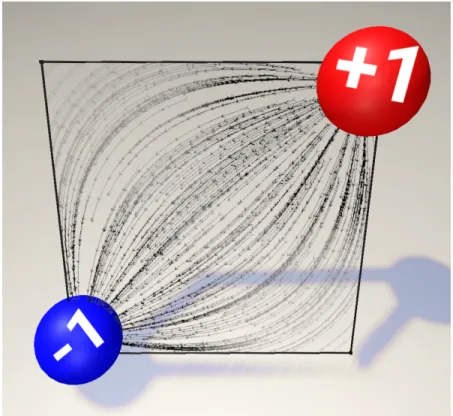

the virtual scene . . . 39 3-5 The field line plane, with a positive and negative charge, renders the

state of the electric field lines on a 2D surface. . . 40 3-6 The three dimensional plane has three toggleable modes which aid the

user in understanding various concepts. In (a), the plane arrows show the direction of the electric field. (b) shows how the electric forces move from positive to negative away from the positive charge. Lastly, (c) reveals the magnitude and positivity and negativity through use of yellow or purple bars respectively. . . 41 3-7 The sphere is an enclosed Gaussian surface that reacts to the presence

of charges and updates its flux value depending on enclosed charge. . 41 3-8 In addition to the sphere, the cylinder is another enclosed Gaussian

surface to further understand how flux and electric field behave. . . . 42 3-9 The Electrostatic Playground offers easy access to all spawnable

physics-related objects by organizing them in a menu. The menu’s visibility can be toggled with the press of a button attached to the side of one of the controllers. Also, the trashcan in the bottom right corner offers the user affordances by opening its lid before deleting an object if one is dragged to the trashcan. . . 43 3-10 The Context Menu, available for every spawnable object, allows the

user to change object parameters post-creation and encourages explo-ration in how different parameters result in different behaviors. The context menu for an object, such as the Gaussian sphere in this figure, can be launched using the Selector tool touchpad where the user can edit properties such as object scale, arrow density, and visualization mode. . . 43

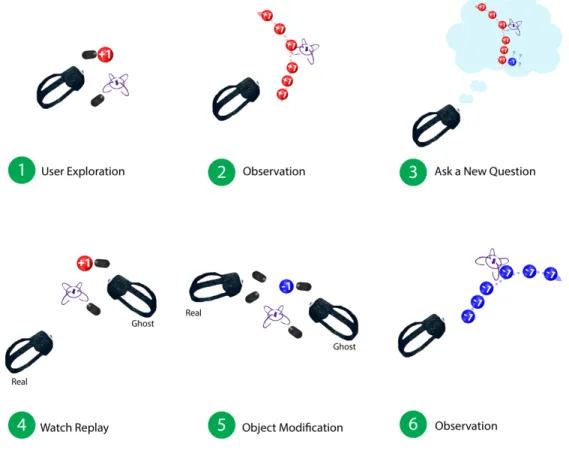

4-1 Step by step, the user explores the behavior between a charge particle and a magnetic dipole. After fixing the charge parity to positive, the user observes a behavior and wonders about how modifying the parity to negative might reveal different behavior. Thus, given the power to re-experience one’s earlier explorations, the user replays the same scenario acted out by a ghost avatar and makes a modification. Finally, in Step 6, the user steps back and observes a different behavior. . . . 46

4-2 The ghost avatar appears in the recording to stand in for the real user who is observing the replay in real time. . . 50

4-3 A three-dimensional snapshot represents the state of the scene at the moment of capture by detecting the objects and their respective posi-tion and orientaposi-tion within the scene. In this figure, the user can see how the state of the room has changed over time with the addition of a Gaussian plane in the presence of charges. . . 52

4-4 The recording camera tool has a record or stop button for 3D recordings as well as a disk slot that can be touched to launch a library of existing recordings and snapshots. . . 54

4-5 Users can view existing 3D snapshots and recordings in the form of polaroids and CD disks respectively. To view the content, users grab an item off the menu and place it onto the disk slot below. . . 54

4-6 The tree can be broken down into branches and further, each branch consists of nodes. Each branch in this figure is labeled with a different color block. The circled numbers on the right of every leave node specify the order in which each branch was generated. Branches 2 and 3 were generated by branching off from the same parent node in Branch 1. . . 56

4-7 The 3D tree model contains a miniature preview, or snapshots, of the virtual room as nodes and uses directed edges to declare the relation-ships between each snapshot and branches of the tree. The ”active” branch that is currently being encoded in this picture is denoted with white intermediate arrows. In the scene, the non-active branches have black arrows but for the visibility purposes, the black arrows are seen as red arrows. . . 57 4-8 This figure illustrates the power the reusability of captured recordings

to ask new questions and observe different behavior. In this use case, the user originally records recording 1 shown on the left with a positive charge that is thrown towards a magnetic dipole. However, during the replay of recording one, the user quickly changes the parity, sparking the creation of recording 2, and observes how a negative charge reacts to a magnetic dipole differently. . . 58

5-1 The MemoryTree state diagram depicts how the system transitions from two states: record and replay. Possible transitions are labeled with letters while states are numbered. While the replay state is achieved by hitting replay, the user can record by spawning a new recording or by waiting for the replayed recording to end without mod-ifying anything in the scene. . . 62 5-2 The replay interface attaches to the non-dominant hand-based tool and

faces inwards where the user can use his or her other controller to tap the rewind arrow. Touching the arrow initates the start of the active recording. . . 64 5-3 The tree data hierarchy separates different levels of data and show the

relationships between each level of concern. . . 66 5-4 Assuming a fixed time rate of 5 seconds, the tree model grows the

active branch one node at a time. The active branch is indicated with the white arrows. . . 71

5-5 The tree uses an arrow coloring scheme to indicate the state of every branch. Black arrows indicate that the branch is non-active. Gray arrows indicate that the path is next in line to become active and will transition once the user hits replay. Finally, the active recording is denoted with white arrows always stemming from the root node to the leave node. . . 73 5-6 The Replay Sequence data structure specifies to the replay module in

MemoryTree what sequence of recordings to play in what order and at what time. Information is stored in pairs of branches that specify recordings as well as the last seen Log Entry to know when a child branch was created. . . 75 5-7 A scene generated from a recording can be modified in four ways:

creation of a new object, object property change, object deletion, or a position change. . . 77

List of Tables

3.1 The flexible design of object property specification allows objects to share properties they have in common as well as manage properties specific to each object. Charge value adjusts the amount of charge of a charge instance. Scale refers to the uniform scaling of all three dimensions of any object. Arrow density allows the user to see fewer or more arrows or bars on the Gaussian surfaces. Lastly, visibility mode toggles the type of arrow that the user can see.

5.1 This table lists all the properties of every node in the tree that dictate how the tree is drawn.

Chapter 1

Introduction

1.1 Learning and Note-Taking

In just over a hundred years, we have advanced from the invention of the first airplane to launching an electric sports car into an orbit around the Sun that extends beyond the orbit of Mars, on a rocket that can land itself upright [11],[7]. We have given humankind wings to fly, yet we have failed to transform the way we learn in the classroom. The traditional classroom structure, first drawn up by Horace Mann in the 19th century, remains the default approach to teaching in our educational institutions [5]. Students sit in fixed rows of desks facing the front podium and are expected to absorb lectured content by watching and listening. Traditional classrooms are treated as a one-size-fits-all solution that encourages passive rather than hands-on learning.

Learning occurs when cognitive effort is used to select, condense, organize, and synthesize information; thus, quite naturally, note-taking goes hand in hand with how information is presented to the learner. Note-taking plays a major role in learning environments as a way to maintain externally stored information. It is often used to relieve the burden of remembering everything and to improve retention by actively processing and later reviewing the information. However, the design of traditional classrooms hinders learning by requiring students to balance information intake with the production of synthesized notes.

Students are expected to read projected slides, listen to the professor, and take comprehensible notes all at once, which divides their attention. Competition for learner attention and engagement is a serious problem, especially today, when smart devices sit in our pockets. More technology in the classroom does not correlate with better learning, and it will not until students can use technology to be more actively engaged and undistracted [15]. We argue that virtual reality's immersive properties have the potential to drastically transform how education is approached, and that it presents a new platform on which note-taking can be reimagined. Specifically, the new immersive medium allows for more hands-on, lifelike, and engaging interactions, which better fit STEM subjects that require tactile and kinesthetic lessons replicating real-world experiences [3].

1.2 Electrostatic Playground

In Chapter 3, we introduce Electrostatic Playground, a fully immersive virtual learning environment in which users explore physics by engaging with interactive, tangible forms of electromagnetic physics concepts. By playing with the spatial and temporal properties of charged particles and Gaussian surfaces, the user can observe different behaviors in real time and discover concepts around charge interaction and electric fields. Electrostatic Playground is an in-progress platform for learning physics in virtual reality and serves as the environment in which we incorporate note-taking functionality as our proof of concept.

1.3 MemoryTree

While virtual learning environments are a step towards increasing active learning, they lack note-taking, a crucial component of learning. We aim to unlock the unexplored potential of virtual reality, where well-established notions of “notes” and “note-taking” change drastically. In Chapter 4, we introduce MemoryTree, a standalone note-taking module that interprets content capture and review in two novel ways:

• MemoryTree captures virtual recordings (described in Chapter 4) as a method of capturing content, or user interactions with the scene, over a period of time.

• MemoryTree also allows the user to review his or her “notes,” or recordings, by re-experiencing a playback of the recording from an observer's standpoint.

Furthermore, we discuss why and how MemoryTree performs content encoding automatically and seamlessly; automated encoding lets the user capture verbatim notes efficiently and with little effort, in contrast to traditional equivalents. We show that once instances are stored, VR allows the user to feel as if they are re-immersing themselves in a past moment, represented as a 3D recording, in a manner closer to how people think and recall memories. We distinguish passive review from resumed exploration and discuss how MemoryTree encourages the user to make continuous connections between new and old material by allowing modifications to replayed recordings.

Chapter 5 describes the implementation of MemoryTree in detail. First, we explain how new recordings are created by branching off an existing recording, consistent with the notion of generative learning. Then, we use a tree metaphor to discuss the relationships between recordings and the recordings they were generated from. We describe the possible interactions and expected behavior as the user transitions between two states:

• Recording

– The user is in the recording state while he or she is exploring the scene.

• Replaying

– The user is in the replaying state while he or she is replaying an existing recording, which is triggered through a single-button interface.
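The two states above behave like a small state machine. The sketch below is an illustration under stated assumptions, not MemoryTree's actual code: the class `MemoryTreeSession` and its methods are hypothetical names. Following the use case in which changing a charge's parity during replay creates a new recording, it assumes that editing the scene during replay branches off a new recording and returns the user to the recording state. It also assumes that a replay which ends without edits returns the user to recording.

```python
from enum import Enum, auto


class Mode(Enum):
    RECORD = auto()
    REPLAY = auto()


class MemoryTreeSession:
    """Hypothetical two-state controller; names are illustrative only."""

    def __init__(self) -> None:
        self.mode = Mode.RECORD
        self.recordings: list[str] = ["recording 1"]
        self.active = "recording 1"

    def press_replay(self) -> None:
        # The single-button interface moves the user into the replay state.
        self.mode = Mode.REPLAY

    def modify_scene(self) -> None:
        # Assumption: an edit during replay branches off a new recording
        # and resumes recording; edits while recording are simply captured.
        if self.mode is Mode.REPLAY:
            new_name = f"recording {len(self.recordings) + 1}"
            self.recordings.append(new_name)
            self.active = new_name
            self.mode = Mode.RECORD

    def replay_finished(self) -> None:
        # A replay that runs to the end without edits also returns to recording.
        if self.mode is Mode.REPLAY:
            self.mode = Mode.RECORD
```

For example, pressing replay and then flipping a charge's parity leaves the session in `Mode.RECORD` with `recording 2` active. This mirrors the branching use case in which a modification sparks a new recording.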

Additionally, we explain how the tree metaphor is visually represented and implemented as a three-dimensional graphical tree model that serves as both a session summary and a comparison tool.
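The tree metaphor can be illustrated with a minimal data structure. In this structure a tree is made of branches, each branch is a list of state nodes, and a child branch records the parent node it grew from. The sketch is an assumption for illustration, not the thesis's implementation. `StateNode`, `Branch`, `RecordingTree`, and `node_interval` are hypothetical names, and the default 5-second interval is borrowed from the example interval used for Figure 5-4.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class StateNode:
    time: float      # seconds elapsed on the branch when the node was captured
    snapshot: dict   # placeholder for a captured scene state


@dataclass
class Branch:
    branch_id: int
    parent: Optional[Branch] = None
    parent_node_index: Optional[int] = None  # node in the parent this branch grows from
    nodes: list[StateNode] = field(default_factory=list)


class RecordingTree:
    """Hypothetical tree of recordings that grows the active branch over time."""

    def __init__(self, node_interval: float = 5.0) -> None:
        self.node_interval = node_interval
        self.branches: list[Branch] = [Branch(branch_id=1)]
        self.active = self.branches[0]

    def grow(self, elapsed: float, snapshot: dict) -> None:
        # Add a node on the first call, then once per full interval.
        nodes = self.active.nodes
        if not nodes or elapsed - nodes[-1].time >= self.node_interval:
            nodes.append(StateNode(elapsed, snapshot))

    def branch_from(self, parent: Branch, node_index: int) -> Branch:
        # A modification during replay starts a child branch at a parent node.
        child = Branch(len(self.branches) + 1, parent, node_index)
        self.branches.append(child)
        self.active = child
        return child

    def lineage(self, branch: Branch) -> list[int]:
        # Branch ids from the root branch down to the given branch.
        ids = []
        current: Optional[Branch] = branch
        while current is not None:
            ids.append(current.branch_id)
            current = current.parent
        return list(reversed(ids))
```

As in Figure 4-6, branching twice from the same node of Branch 1 yields Branches 2 and 3, which are siblings. Calling `lineage` on Branch 3 returns `[1, 3]`, which is the root-to-leaf path a tree drawing would highlight as active.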

As an ideal medium for computational simulations, engaging interactions, and personalized learning opportunities, virtual reality is naturally poised to disrupt the field of education. We argue that virtual reality interactions defy existing real-life limitations and empower the user to learn through unhindered exploration and discovery. Few attempts have been made to implement note-taking functionality in virtual reality to support learning in existing educational applications. Using the three fundamentals of note-taking (encoding, external storage review, and modification), we introduce a design exploration into the reimagined potential of taking notes in virtual reality, where notes can incorporate new dimensions of sensory experience and added levels of engagement.

Chapter 2

Related Work

In this chapter, we provide context for our work by first introducing both note-taking and virtual reality. Then, we evaluate existing attempts to incorporate technology, both immersive and non-immersive, into the classroom. For each attempt, we mention the benefits as well as the pitfalls reported in prior studies. We also trace the transition from non-immersive virtual alternatives to early developments of immersive classrooms that have contributed to the design of MemoryTree in one way or another. In addition to evaluating these tools, we argue that the negative conclusions of note-taking studies on the use of technology cannot be transferred to note-taking in virtual reality.

2.1 Background

2.1.1 Note-Taking

We take notes throughout our daily routine for purposes such as documenting a meeting, remembering a phone number, or learning material taught in a lecture. We often customize how we take notes to best serve our purposes by using different representations such as abbreviations, syntax, and content formatting [15]. When we compare traditional and virtual forms of note-taking throughout this thesis, we refer to common two-dimensional media such as pencil and paper, computers, and photography as traditional forms of notes.

We identify the three fundamentals of note-taking as encoding, reviewing, and modification. Encoding, in the context of note-taking, is the act of storing information in a form of external memory. Along with encoding, the affordances of note-taking enable external storage review. Review refers to revisiting notes to improve information retention, in line with the notion that repeated exposure to information results in improved learning [10], [12]. Encoding information without review has been shown to lead to poorer recall performance. Lower recall was an anticipated result, because encoding requires dividing attentional resources to listen to, select, and record information at the moment it is received, whereas reviewing does not [12]. Lastly, we consider modification a fundamental component of note-taking because we introduce the ability to resume exploration or extend an experience in virtual reality, which requires the user to begin changing the existing scene.

2.1.2 Virtual Reality

Virtual reality (VR) is an artificial environment with components of immersion, interactivity, and sensory feedback. The level of immersion depends on the degrees of freedom (DOF) the experience allows the user. Although there are several, 3DOF and 6DOF are the most common in VR. Figure 2-3 depicts the three rotational degrees of freedom, which allow the user to look around 360-degree content by rotating about three perpendicular axes: roll, pitch, and yaw. 3DOF content, viewable through products like Google Cardboard and Gear VR, provides an immersive 360-degree viewing experience without the ability to navigate through the virtual space. 6DOF adds translational movement along three axes, known as surge, heave, and sway, to roll, pitch, and yaw. 6DOF head-mounted displays (HMDs) such as the HTC Vive or Samsung Odyssey provide total immersion and the ability to move throughout the space using positional tracking. Figure 2-1 and Figure 2-2 show the HTC Vive and Samsung Odyssey, respectively. Additionally, most 6DOF headsets come with two controllers that let the user interact with the scene and provide haptic feedback from the virtual environment. More recently, headsets have been augmented with headphones to add sound to the experience.
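The 3DOF/6DOF distinction can be summarized as a question of which pose fields a headset is allowed to update. The sketch below is illustrative only. The `Pose` fields follow the six axes named above, while the units (degrees, meters), the translation directions in the comments, and the function `apply_tracking` are assumptions rather than any headset SDK's API.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Pose:
    # Rotational axes (degrees): tracked by both 3DOF and 6DOF headsets.
    roll: float = 0.0
    pitch: float = 0.0
    yaw: float = 0.0
    # Translational axes (meters): tracked only by 6DOF headsets.
    surge: float = 0.0  # forward/backward
    heave: float = 0.0  # up/down
    sway: float = 0.0   # left/right


def apply_tracking(current: Pose, measured: Pose, dof: int) -> Pose:
    """Update the rendered head pose from a tracker reading (illustrative only)."""
    if dof == 3:
        # A 3DOF headset reports orientation only, so the viewpoint stays in place.
        return replace(current, roll=measured.roll, pitch=measured.pitch, yaw=measured.yaw)
    if dof == 6:
        # A 6DOF headset also reports position, so the user can walk around.
        return measured
    raise ValueError("dof must be 3 or 6")
```

With the same tracker reading, a 3DOF update turns the user's head but keeps them in place. A 6DOF update also moves them, which is what makes physically walking through a virtual scene possible.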

Figure 2-1: The HTC Vive Head Mounted Display comes with two controllers. The Vive tracking uses two base stations that externally track position and orientation of the headset and two controllers.

Figure 2-2: The Samsung Odyssey also comes with two controllers. The Odyssey headset and controllers use internal tracking mechanisms with cameras placed on the headset.

At a more technical level, we introduce the virtual objects that lie at the core of most virtual scenes. In game engines, these objects are known as game objects. They are highly customizable and can embody any virtual body, such as an avatar, a sphere, or even a 2D plane. Components can be attached to a game object to equip it with various properties. Game objects can be thought of as the building blocks of a virtual environment that tie together built-in and custom features. These features are called components, and custom ones can be developed using a game engine's scripting API. Using components and game objects, virtual environments and experiences can be designed to fit various purposes.
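The game object and component relationship described above can be sketched as a small entity-component pattern. This is a Python illustration loosely modeled on the pattern that engines such as Unity use. It is not any engine's scripting API. `GameObject`, `Component`, `Charge`, and `Mover` are hypothetical classes, and `Mover` is a stand-in for a custom behavior script.

```python
class Component:
    """Base class for a property or behavior attached to a game object."""

    def __init__(self) -> None:
        self.game_object = None  # set when the component is attached

    def update(self, dt: float) -> None:
        pass  # components without per-frame behavior do nothing


class GameObject:
    def __init__(self, name: str) -> None:
        self.name = name
        self.components: list[Component] = []

    def add_component(self, component: Component) -> Component:
        component.game_object = self
        self.components.append(component)
        return component

    def get_component(self, kind: type):
        # First attached component of the requested type, or None.
        return next((c for c in self.components if isinstance(c, kind)), None)

    def update(self, dt: float) -> None:
        # Each frame, the object forwards the time step to its components.
        for component in self.components:
            component.update(dt)


class Charge(Component):
    """Hypothetical custom component holding a charge value."""

    def __init__(self, value: float) -> None:
        super().__init__()
        self.value = value


class Mover(Component):
    """Hypothetical component that moves its object at a constant velocity."""

    def __init__(self, velocity: tuple[float, float, float]) -> None:
        super().__init__()
        self.velocity = velocity
        self.position = (0.0, 0.0, 0.0)

    def update(self, dt: float) -> None:
        self.position = tuple(p + v * dt for p, v in zip(self.position, self.velocity))
```

A charged particle, for instance, is one `GameObject` with a `Charge` component for its data and a `Mover` component for its behavior. New kinds of objects are built by combining components rather than by writing a new object class.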

Figure 2-3: This figure illustrates the six total axes that can be used in either 3DOF technology or 6DOF. 3DOF technology allows the user to move along three rotational axes known as roll, pitch, and yaw. 6DOF includes the rotational axes in addition to surge, heave, and sway.

Virtual reality overcomes the boundaries of the physical world and immerses users in a virtual world where they can see the invisible, touch the intangible, and be just about anywhere. 6DOF technology facilitates a more exploratory learning approach in which students engage in self-regulated learning by examining and interacting with their environment to build relationships between existing and new information [2]. A 2014 study comparing learning methods found that students in traditional classroom settings were 1.5 times more likely to fail than students taught with active learning methods [9]. Furthermore, virtual reality environments that encourage user-regulated exploration provide the necessary resources for users to ask their own questions and search for the answers. Another benefit of a fully immersive environment is that students can learn free from external distractions.

The benefits of applying virtual reality technology to education spurred the onset of several applications. However, given the nature of VR headsets, traditional methods of note-taking to support learning are no longer compatible. We believe that while VR education applications are a step forward, note-taking remains an essential tool that supports learning even when users are more engaged. Unlike traditional two-dimensional forms, notes in virtual environments can bypass 2D limitations and utilize spatial, temporal, and sensory capacity. In other words, a stark difference between physical and virtual notes is that physical notes capture instances of one's perspective, while virtual notes capture one's full immersive experience. Technically speaking, virtual reality scenes are built using game engines such as Unity and Unreal and consist of a background environment, game objects, and the player. This makeup allows for capturing immersive, three-dimensional forms of photos and recordings in addition to their 2D counterparts. Three-dimensional photos, or snapshots, can be thought of as capturing the state of all objects and their properties at a moment in time. Three-dimensional videos can then be thought of as frame-by-frame playback of 3D snapshots taken across a window of time.
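The description of snapshots and recordings above can be made concrete with a small data model. The sketch is an assumption for illustration, not MemoryTree's implementation. `ObjectState`, `take_snapshot`, and `Recording` are hypothetical names, and storing rotation as Euler angles is an assumed simplification. A snapshot maps each object id to its state, a recording is a list of time-stamped snapshots, and playback looks up the most recent snapshot at or before a requested time.

```python
import bisect
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectState:
    kind: str                               # e.g. "charge" or "gaussian_sphere"
    position: tuple[float, float, float]
    rotation: tuple[float, float, float]    # Euler angles in degrees (assumption)
    properties: tuple[tuple[str, float], ...] = ()


Snapshot = dict[str, ObjectState]  # object id -> state at one instant


def take_snapshot(scene: dict[str, ObjectState]) -> Snapshot:
    # ObjectState is immutable, so copying the mapping is enough to keep
    # later scene edits from altering the stored note.
    return dict(scene)


class Recording:
    """A 3D recording stored as time-stamped snapshots."""

    def __init__(self) -> None:
        self.times: list[float] = []
        self.frames: list[Snapshot] = []

    def capture(self, t: float, scene: dict[str, ObjectState]) -> None:
        # Capture times are assumed to be non-decreasing.
        self.times.append(t)
        self.frames.append(take_snapshot(scene))

    def frame_at(self, t: float) -> Snapshot:
        # Index of the last frame captured at or before time t.
        i = bisect.bisect_right(self.times, t) - 1
        return self.frames[max(i, 0)]
```

Holding the last frame keeps the example short. A smoother replay would probably interpolate positions between neighboring frames, which this sketch does not attempt.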

As a reminder, the ability to extend an experience is why we treat modification functionality as another key phase of note-taking. As in traditional note-taking, modification capabilities make encoded content malleable and open to extended knowledge. Modifying externally stored content in the context of VR learning can occur for several reasons, such as correcting mistakes, trying different object configurations and parameters, or adding annotations.
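One way to picture modification is as edits applied to a copy of a replayed scene state, so the original note stays intact. The sketch uses the four modification types listed for Figure 5-7: object creation, property change, deletion, and position change. Everything else is a simplifying assumption. `SceneObject`, `Modification`, and `apply` are hypothetical names, and charge is the only editable property here.

```python
from dataclasses import dataclass, replace
from enum import Enum
from typing import Optional


class Change(Enum):
    CREATE = "create"
    PROPERTY = "property"
    DELETE = "delete"
    MOVE = "move"


@dataclass(frozen=True)
class SceneObject:
    kind: str
    position: tuple[float, float, float]
    charge: float = 0.0


@dataclass(frozen=True)
class Modification:
    change: Change
    object_id: str
    obj: Optional[SceneObject] = None                       # used by CREATE
    charge: Optional[float] = None                          # used by PROPERTY
    position: Optional[tuple[float, float, float]] = None   # used by MOVE


def apply(scene: dict[str, SceneObject], mod: Modification) -> dict[str, SceneObject]:
    """Return an edited copy of the scene; the replayed state is left unchanged."""
    new_scene = dict(scene)
    if mod.change is Change.CREATE:
        new_scene[mod.object_id] = mod.obj
    elif mod.change is Change.DELETE:
        del new_scene[mod.object_id]
    elif mod.change is Change.PROPERTY:
        new_scene[mod.object_id] = replace(scene[mod.object_id], charge=mod.charge)
    elif mod.change is Change.MOVE:
        new_scene[mod.object_id] = replace(scene[mod.object_id], position=mod.position)
    return new_scene
```

Flipping a positive charge to negative during replay, as in the Figure 4-8 use case, becomes `apply(replayed, Modification(Change.PROPERTY, "q", charge=-1.0))`. The result is a new state that a new recording could start from, while `replayed` still describes the original recording.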

Moreover, virtual reality offers customizability: anyone can alter the properties of the environment free of real-life constraints such as cost and space. More importantly, customizability offers the ability to personalize one's learning experience. In traditional classrooms, a common method teachers use to illustrate concepts is giving examples. In VR, however, customizability enables students to take these fixed examples and manipulate any object parameter to explore concepts that have not yet been introduced.

2.2 Virtual Learning Environments

Before virtual reality became easily accessible, web technology entered the traditional classroom in the form of non-immersive virtual learning environments (VLEs). VLEs are essentially online learning environments that provide educational tutorials, simulations, and videos. One example of a publicly available online learning environment is MIT OpenCourseWare (OCW), which gives anyone easy access to course material. Here, we discuss how VLEs differ from traditional classroom settings, how VLEs impact learning, and the limitations found in two main studies [14],[17]. First, VLEs shift control of learning from the instructor to the student, who then has both more control over and more responsibility for his or her learning experience. Because VLEs remove geographical limitations and allow for remote learning, the student can decide on the topics, pace, and sequence of instruction [14]. Furthermore, without direct instruction, the student also determines his or her level of engagement with the class and the instructor, which is made available through online forums.

Alongside more student control, VLEs offer more personalized learning experiences. By contrast, traditional classrooms try to fit the needs of every student by teaching a wide breadth of subjects at a constant pace. At best, educational institutions offer office hours or discussion sections that provide one-on-one teaching for students who need it. Because students come with varying amounts of previous knowledge, this style of teaching results in a spectrum ranging from glossed-over topics to in-depth analyses of already familiar information. With VLEs, however, students can skip topics they already know or repeat exposure to topics they find difficult or unfamiliar. Most traditional classes are taught at fixed hours each day, whereas VLEs offer asynchronous learning for those who have external conflicts or who are more productive at different times.

The earlier-mentioned study comparing VLE and traditional learning measured learning effectiveness through four factors: test scores, self-efficacy, satisfaction, and climate. As a measure of how well knowledge has been retained, test scores are used as the first metric of learning effectiveness. Next, self-efficacy can be defined as an individual's perception of his or her own capability to attain a level of performance. Satisfaction is used as a learning effectiveness indicator to measure increased learner control and willingness to adopt the new way of learning. Lastly, the emotional learning climate serves as an indicator of whether the student has had the opportunity to interact with others in a way that allows knowledge to be shared.

Overall, the study revealed that students in the VLE group had higher learning effectiveness than students in the traditional setting. In terms of performance, students in the VLE group had higher final exam scores than the others. Furthermore, students who learned virtually reported that they were able to teach themselves independently and were more satisfied than their traditional classroom counterparts [17]. Although emotional learning climate was consistently high in the VLE group, the researchers attribute this finding to the novelty of the interaction between the instructor and classmates.

Despite the positive results, this remote yet convenient way of learning still has many limitations. The ability to learn asynchronously also introduces challenges in receiving timely feedback from instructors and other students, which could lead to frustration, confusion, and isolation. Furthermore, another similar study found that participants struggled with the interface, which hindered their ability to learn and to access necessary equipment [14]. Non-immersive web technologies drastically changed where, when, and what students could learn, but not so much how. Web technologies add convenience to learning, but the gains in learning effectiveness, though positive, were not significant. As with traditional classrooms, VLEs encourage passive learning, in which students sit, watch, and listen to lecture videos and two-dimensional simulations. Engagement between students and instructors also occurs passively through online forums, since VLEs limit communication to text questions and answers. As a solution, we propose a more immersive technology such as virtual reality, where students retain control over the topic, pace, and sequence of instruction and can still engage with other students and teachers virtually. More importantly, virtual reality changes the way students learn by encouraging a more interactive, exploratory method of discovering information.

2.3

Immersive Interfaces

Before providing an example, we first introduce what immersive interfaces offer in the context of learning. Immersion itself is the experience of being completely present through sensory, actional, and symbolic means [8]. Sensory immersion is the act of being immersed in, for example, a digital three-dimensional space in which haptic feedback, sound, and visuals provide feedback. Actional immersion allows the user to interact with the virtual environment, often with novel, unrealistic consequences. Immersive technologies offer users both egocentric and exocentric perspectives: the ability to experience actional immersion through embodiment, as well as the ability to view the environment from a more external perspective. Especially with 6DOF (six-degrees-of-freedom) VR immersion, the user can change his or her frame of reference to observe something differently, which is also known as situated learning. Situated learning can aid the understanding of complex phenomena by allowing the embodiment of multiple perspectives. In addition, the customizability of computer-generated simulations within immersive environments enables transfer learning, in which the user applies knowledge gained in one situation to another.

2.3.1

Second Life and Sansar

Second Life is a three-dimensional virtual networked environment where users can meet and interact online in a virtual world. Second Life reached more than 6 million users in 2007; users embodied avatars, met each other, and interacted with existing objects. Unlike VLEs, Second Life took learning to the next level by letting users interact directly with others and with virtual objects rather than through forums and videos. Researchers saw the potential of simulating real-world challenges in virtual worlds and applied Second Life to medical and health education [6]. The Virtual Neurological Education Centre (VNEC), depicted in Figure 2-4, was built within the Second Life virtual environment to let users synthetically experience certain neurological symptoms that affect the motor, sensory, and balance capabilities of the embodied avatar. VNEC had two goals: to spread awareness about neurological disabilities and to help people with a neurological disability interact with others virtually in ways that are difficult or impossible in real life. Although VNEC and Second Life users were able to learn about disabilities through a hands-on experience, the application lacked realistic embodiment because users could only use a keyboard interface [1].

The founders of Second Life at Linden Lab recently launched Sansar, a virtual space similar to Second Life that users enter using virtual reality technologies. Although Sansar has not yet been studied as a learning platform, it already shows potential because it comes equipped with assets and virtual spaces that can be used to teach a virtual class [4]. Returning to the VNEC use case, VR would allow the user to be fully immersed through sensory and actional features and to embody someone with a neurological disability more realistically. Furthermore, the user could engage in situated learning by experiencing various kinds of neurological diseases and transfer the gained insight to the real world.

2.4

Note-Taking: Pen vs. Keyboard

Researchers have studied encoding and external storage review, two key aspects of traditional note-taking, to discover how they affect learning. As laptops become increasingly common in the classroom, distractions increase; more interestingly, note-taking shifts from pen and paper to the keyboard. Mueller and Oppenheimer conducted a study comparing how students perform when taking notes on a laptop versus on paper. Previous work had already revealed that verbatim note-taking encourages passive encoding and thus less absorption of the material [13]. In the pen vs. laptop study, participants took notes either on a laptop or in longhand. Results revealed that longhand note-takers took fewer notes, whereas laptop note-takers often took more. While taking more notes correlated with better performance, laptop note-takers also produced more verbatim notes, which negatively predicted performance. After seeing the initial results, the researchers wanted to see whether reviewing notes would counteract the shallow encoding during laptop note-taking. However, in a follow-up study, participants in the longhand condition still outperformed their laptop counterparts. In conclusion, more notes positively predicted performance, whereas verbatim notes negatively predicted performance.

Figure 2-4: A scene from the Virtual Neurological Education Centre illustrates how users in the virtual space learned about and embodied neurological diseases.

Existing research on note-taking identifies predictors of performance but is limited to traditional note-taking methods. Even if note-taking in virtual reality were established, we anticipate that the findings would not be easily transferable to or comparable with traditional methods, as VR is an entirely different medium. Also, virtual equivalents of traditional methods, such as typing or writing on a piece of paper, would be extremely slow and inefficient and would therefore make for an unfair comparison. As we introduce MemoryTree, we will show why and how the system automatically encodes notes verbatim. Although verbatim notes have been shown to encourage passive encoding, encoding in MemoryTree does not rely on the user and instead allows the user to be fully engaged in the learning process. Additionally, the user can review either by viewing the three-dimensional summary model in the scene or by re-experiencing the replay of interactions and simulations.

Chapter 3

Electrostatic Playground

3.1

Introduction

Electrostatic Playground, a room-scale virtual environment, presents an immersive playground in which users can explore concretized notions of electrostatic physics concepts in the form of tangible, interactive objects. Users start in a virtual space where they can introduce elements such as charge particles or magnetic dipoles, which then respond to the presence of electric and magnetic fields. Two- and three-dimensional field lines react in real time as users move charged elements around, demonstrating how electric fields work. In this chapter, we describe the implementation of Electrostatic Playground, which renders the abstract and intangible real in a virtual medium.

3.2

System Overview

Built in Unity, Electrostatic Playground enables users to navigate a virtual scene using HTC Vive or Samsung Odyssey headset tracking. Users hold the two controllers that come with each VR headset as hand-based tools for interacting with the scene and its objects in various ways. In addition to the tools, a physics engine manages the state of all charged objects within the scene to determine how, in simulation mode, all other objects react to the created electric or magnetic field. The tools and functionality provided to users are the following:

3.2.1

Selector Tool

The Selector tool comes with a 3D cursor that emulates grabbing and moving any object in the virtual space. The cursor detects and interacts with any object that carries a Grabbable component, which the user can grab by pressing down the controller's trigger button. Once an object is grabbed, the user can move it along with the controller's cursor by continuing to hold down the trigger. To let go, the user releases the trigger, which leaves the object suspended in space.

Figure 3-1: The selector tool, which maps to one of the two user controllers, allows the user to move objects as well as change object specific properties.

The 3D cursor is placed at the front tip of the controller and selects an object if it is intersecting with the cursor. To avoid confusion and unexpected behavior, the cursor only interacts with a single object at a time even in the presence of multiple intersecting objects.
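The single-object rule above can be illustrated with a short selection routine. This is a minimal Python sketch, not the Unity implementation. The names `SceneObject` and `pick_grab_target`, the bounding-sphere intersection test, and the nearest-center tie-break are all assumptions made for illustration, because the text states only that one object is selected at a time.

```python
import math
from dataclasses import dataclass


@dataclass(eq=False)
class SceneObject:
    name: str
    center: tuple           # (x, y, z) in metres
    radius: float           # bounding-sphere radius; the real collider shape may differ
    grabbable: bool = True  # stands in for the Grabbable component


def pick_grab_target(cursor, objects):
    """Return the one grabbable object the cursor should grab, or None.

    Assumption: when several objects intersect the cursor, the object whose
    center is nearest the cursor wins. The thesis does not state the tie-break rule.
    """
    best, best_dist = None, math.inf
    for obj in objects:
        if not obj.grabbable:
            continue  # objects without the Grabbable stand-in are ignored
        d = math.dist(cursor, obj.center)
        if d <= obj.radius and d < best_dist:
            best, best_dist = obj, d
    return best
```

Even when two grabbable objects overlap the cursor, only one is ever returned, so a single trigger press can never move two objects at once.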

3.2.2

Play and Pause Tool

The Play and Pause tool's main function is to let the user toggle between the two modes of the physics engine simulation: play and pause. The Play and Pause tool, shown in Figure 3-2, is also equipped with a 3D cursor so that the user can interact with objects in the scene using either hand. While the trigger button for this tool is mapped to the cursor's grab-and-move functionality, pressing down the controller's touchpad toggles between the two simulation modes. A play or pause icon appears on the touchpad as a visual indicator of the current mode.

Figure 3-2: The Play and Pause tool can take one of two modes: play or pause. By default, the controller is in pause mode and looks like the right controller. When the user presses the touchpad, the controller's indicator icon changes to the play icon. In play mode, the physics engine system is turned on and the charged objects move in response to the generated electric field.

Initially, the simulation is paused, which prevents all charged objects from reacting to any existing electric fields. In other words, charged objects in the virtual space remain static unless they are moved by the user. The charge value of any object still statically affects the electric field but has no effect on other charged objects while the simulation is paused.

On play, the physics engine lets electric field forces act on all charged objects, so each one moves in the appropriate direction at a velocity that depends on its own charge value and the surrounding electric forces. During play mode, the charged objects react in real time to a dynamically changing electric field. While charged objects are free to move and react to the electric field, the user can override these movements by grabbing and moving objects.
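The play/pause behavior can be sketched as a per-frame update. The thesis does not describe the physics engine's force model or integrator. This Python sketch therefore assumes Coulomb's law between point charges and explicit Euler integration with a hypothetical unit mass. `Charge`, `coulomb_force`, and `step` are illustrative names.

While paused, `step` leaves every charge where it is. While playing, held charges are skipped, so the user's grab overrides the simulation.

```python
from dataclasses import dataclass, field

K = 8.9875517923e9  # Coulomb constant in N*m^2/C^2


@dataclass(eq=False)
class Charge:
    q: float                      # charge in coulombs (sign gives parity)
    pos: list                     # [x, y, z] in metres
    vel: list = field(default_factory=lambda: [0.0, 0.0, 0.0])
    mass: float = 1.0             # hypothetical; the thesis gives no mass model
    grabbed: bool = False         # True while the user holds the object


def coulomb_force(target, charges):
    """Net Coulomb force on target from every other charge in the scene."""
    f = [0.0, 0.0, 0.0]
    for other in charges:
        if other is target:
            continue
        r = [a - b for a, b in zip(target.pos, other.pos)]
        d2 = sum(c * c for c in r)
        if d2 == 0.0:
            continue  # coincident charges: skip to avoid division by zero
        s = K * target.q * other.q / d2 ** 1.5
        f = [fi + s * ri for fi, ri in zip(f, r)]
    return f


def step(charges, dt, playing):
    """Advance one frame with explicit Euler integration.

    Paused: nothing moves on its own. Playing: every charge not held by the
    user accelerates under the net force; held charges follow the user instead.
    """
    if not playing:
        return
    forces = [coulomb_force(c, charges) for c in charges]  # evaluate before moving
    for c, f in zip(charges, forces):
        if c.grabbed:
            c.vel = [0.0, 0.0, 0.0]
            continue
        c.vel = [v + fi / c.mass * dt for v, fi in zip(c.vel, f)]
        c.pos = [p + v * dt for p, v in zip(c.pos, c.vel)]
```

Computing all forces before moving any charge keeps the update order-independent within a frame.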

3.2.3

Object Menu

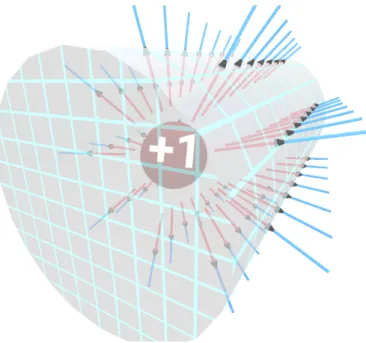

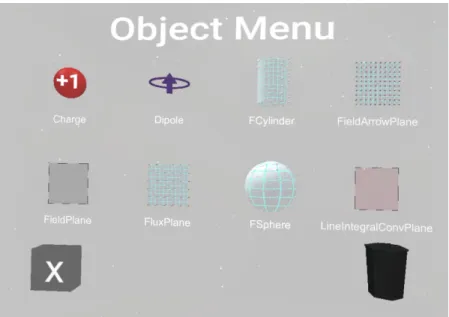

Electrostatic Playground uses several objects to convey the notions of charge sources and field line visualization. Charge sources come in the form of single charge particles and magnetic dipoles and can be spawned in the virtual scene to create or change electric fields. Field line visualization objects such as 2D field line planes show the orientation of field line vectors along the plane surface. Gaussian surfaces display 3D field line vectors along their surfaces as well as the flux generated by any enclosed charges. Object visualization was designed and implemented by Annie Wang, a project collaborator. The following figures depict the existing physics-related objects that can generate or represent the state of electric fields.

Figure 3-3: Charge particles can have one of three parities: negative, neutral, or positive.

Figure 3-4: The magnetic dipole creates a magnetic field once it is introduced into the virtual scene.
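The flux value shown on a Gaussian surface can be checked against Gauss's law. The law states that the net flux through a closed surface equals the enclosed charge divided by the vacuum permittivity, Φ = Q_enc/ε₀.

The sketch below computes flux that way for a sphere and for a cylinder aligned with the y axis. The containment helpers and the axis orientation are assumptions. The actual application may instead sum field values over the surface arrows.

```python
import math

EPSILON_0 = 8.8541878128e-12  # vacuum permittivity in F/m


def inside_sphere(center, radius):
    """Containment test for a spherical Gaussian surface."""
    return lambda p: math.dist(p, center) < radius


def inside_cylinder(center, radius, half_height):
    """Containment test for a cylinder whose axis is parallel to y (an assumed orientation)."""
    cx, cy, cz = center
    return lambda p: (math.hypot(p[0] - cx, p[2] - cz) < radius
                      and abs(p[1] - cy) < half_height)


def enclosed_flux(inside, charges):
    """Net electric flux through a closed surface via Gauss's law: Q_enc / eps_0.

    charges is a list of (q, (x, y, z)) pairs.
    """
    q_enc = sum(q for q, pos in charges if inside(pos))
    return q_enc / EPSILON_0
```

Because only the enclosed charge matters, moving a charge anywhere inside the surface leaves the flux unchanged. Moving it outside drops its contribution to zero.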

The object menu in Figure 3-9 is a virtual library that displays a collection of objects that can be spawned into the virtual space. A toggle button placed near the inner side of the user's non-dominant hand can be pressed with a cursor to launch or collapse the object menu. Individual objects are spawned into the space using the same grab-and-move mechanism: the user grabs an object out of the menu with a tool cursor. To avoid unexpected interactions with existing objects in the scene, each menu preview is a duplicate of its original prefab without the scripts that interact with the physics engine.

The object menu supports content creation by allowing the user to introduce objects into the scene. In addition to displaying the collection of spawnable objects, the menu also offers a method of deleting objects from the scene with a 3D interactive trashcan placed at the bottom corner of the menu. The trashcan, which opens its lid when objects are nearby, presents a clear affordance to the user that dragging and dropping objects into the trashcan will remove the object from the scene.
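A possible structure for the menu's spawn and delete behavior is sketched below in Python. The class names, the `physics_enabled` flag standing in for the stripped physics scripts, and both distance thresholds are hypothetical. The text specifies only three things: previews lack physics scripts, grabbing a preview spawns an object, and the trashcan lid opens as objects approach.

```python
import math
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Prefab:
    kind: str                    # e.g. "charge", "gaussian_sphere" (hypothetical ids)
    physics_enabled: bool = True


@dataclass(eq=False)
class Instance:
    kind: str
    position: tuple
    physics_enabled: bool


class ObjectMenu:
    LID_OPEN_RADIUS = 0.3  # hypothetical distances in metres
    DROP_RADIUS = 0.1

    def __init__(self, prefabs, trash_pos):
        self.prefabs = {p.kind: p for p in prefabs}
        # Previews are copies of each prefab with the physics behaviour stripped,
        # so they never push or pull objects already in the scene.
        self.previews = {k: replace(p, physics_enabled=False) for k, p in self.prefabs.items()}
        self.trash_pos = trash_pos

    def spawn(self, kind, position, scene):
        """Grabbing a preview out of the menu spawns a full, physics-enabled copy."""
        inst = Instance(kind, position, self.prefabs[kind].physics_enabled)
        scene.append(inst)
        return inst

    def lid_open(self, held_position):
        """The trashcan lid opens while a held object is nearby."""
        return math.dist(held_position, self.trash_pos) <= self.LID_OPEN_RADIUS

    def release(self, inst, scene):
        """On trigger release, delete the object if it was dropped into the trashcan."""
        if math.dist(inst.position, self.trash_pos) <= self.DROP_RADIUS:
            scene.remove(inst)
            return True
        return False
```

The lid radius is larger than the drop radius, so the lid opens before an object is close enough to be deleted. This is the affordance the text describes.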

3.2.4

Property Editing

Each object carries a set of physical properties that can be edited after it is spawned into the environment. Object properties are either categorical or numerical and are therefore best represented with discrete or continuous values, respectively.

Figure 3-5: The field line plane, with a positive and negative charge, renders the state of the electric field lines on a 2D surface.

The properties of each object are the following:

| Object | Property 1 | Property 2 | Property 3 |
|---|---|---|---|
| Charge | Charge Value | NA | NA |
| Field Line Plane | Scale | NA | NA |
| Gaussian Surfaces | Scale | Arrow Density | Vis. Mode |

Table 3.1: The flexible design of object property specification allows objects to share properties they have in common as well as manage properties specific to a single object. Charge value adjusts the amount of charge of a charge instance. Scale refers to the uniform scaling of all three dimensions of an object. Arrow density lets the user see fewer or more arrows or bars on the Gaussian surfaces. Lastly, visibility mode toggles the type of arrow that the user can see.

Categorical values are represented discretely to determine, for example, which field line plane mode should be used. Numerically valued properties, such as the scaled size of objects, are represented with continuous values.

Figure 3-6: The three-dimensional plane has three toggleable modes that aid the user in understanding various concepts. In (a), the plane arrows show the direction of the electric field. (b) shows how the electric field points away from the positive charge toward the negative charge. Lastly, (c) reveals the field's magnitude and sign through yellow (positive) and purple (negative) bars.

Figure 3-7: The sphere is an enclosed Gaussian surface that reacts to the presence of charges and updates its flux value depending on the enclosed charge.

Figure 3-8: In addition to the sphere, the cylinder is another enclosed Gaussian surface for further understanding how flux and electric fields behave.

An object context menu of interactive sliders is attached to each object in the scene and enables the user to edit the values of any of the object's categorical and numerical properties. The system design is flexible, accounting for both shared and unshared properties across all objects. Every object specifies its set of editable properties and, for each property, whether it is categorical or numerical and its fixed range of allowable values. When an object interacts with the Selector tool cursor, an indicator icon appears on the touchpad so that the user can launch the object's context menu. When the touchpad is pressed down, the Selector tool generates a context menu containing a slider for each property the object specifies. Each slider displays the icon representing the property it changes. Every frame, changes in slider values are propagated to the object to manifest those changes.
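The per-object property declaration and slider mapping described above might look like the following Python sketch. `PropertySpec`, `EditableObject`, and `ContextMenu` are hypothetical names. The slider is assumed to report a position in [0, 1], and categorical values are assumed to be chosen by splitting the slider into equal bins. The ranges and mode names in the example are placeholders.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class PropertySpec:
    name: str
    minimum: float = 0.0
    maximum: float = 1.0
    categories: Optional[Sequence[str]] = None  # set for categorical properties

    @property
    def categorical(self):
        return self.categories is not None

    def from_slider(self, t):
        """Map a slider position t in [0, 1] to a property value."""
        t = min(max(t, 0.0), 1.0)
        if self.categorical:
            # Split the slider into equal bins, one per category (discrete values).
            idx = min(int(t * len(self.categories)), len(self.categories) - 1)
            return self.categories[idx]
        return self.minimum + t * (self.maximum - self.minimum)  # continuous values


class EditableObject:
    def __init__(self, specs):
        self.specs = {s.name: s for s in specs}  # declared by each object type
        self.values = {}


class ContextMenu:
    """One slider per property the object declares."""

    def __init__(self, obj):
        self.obj = obj
        self.sliders = {name: 0.0 for name in obj.specs}

    def update(self):
        """Called every frame: push current slider values into the object."""
        for name, t in self.sliders.items():
            self.obj.values[name] = self.obj.specs[name].from_slider(t)


# Example: a Gaussian surface with the three properties from Table 3.1.
gaussian = EditableObject([
    PropertySpec("scale", 0.5, 3.0),
    PropertySpec("arrow_density", 1, 20),
    PropertySpec("vis_mode", categories=("arrows", "field", "bars")),
])
menu = ContextMenu(gaussian)
menu.sliders["scale"] = 0.5
menu.update()
```

Because each object declares its own specs, a charge would simply pass a single `PropertySpec("charge_value", ...)`. The menu code needs no per-object branching.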

Figure 3-9: The Electrostatic Playground offers easy access to all spawnable physics-related objects by organizing them in a menu. The menu’s visibility can be toggled with the press of a button attached to the side of one of the controllers. Also, the trashcan in the bottom right corner offers the user affordances by opening its lid before deleting an object if one is dragged to the trashcan.

Figure 3-10: The Context Menu, available for every spawnable object, allows the user to change object parameters post-creation and encourages exploration in how different parameters result in different behaviors. The context menu for an object, such as the Gaussian sphere in this figure, can be launched using the Selector tool touchpad where the user can edit properties such as object scale, arrow density, and visualization mode.

Chapter 4

Introduction to MemoryTree

4.1

Introduction

Virtual reality presents a new medium in which users can learn through self-initiated exploration of accurate simulations of real-world phenomena. Unlike traditional methods of learning, virtual reality applications provide engaging and immersive experiences in which the user can interact with concretized notions of abstract concepts instead of limited, inaccurate two-dimensional representations. Despite efforts to provide more VR learning applications, note-taking functionality has often been overlooked. Note-taking is a commonly used tactic for improving one's retention and concentration by requiring mental effort to select, organize, and encode information in some form of external storage.

Although using traditional note-taking methods in virtual reality may seem an easy way to support note-taking, physical barriers make this option inefficient. First, consider handwritten notes. Because most fully immersive virtual reality experiences rely on head-mounted displays, constantly removing the headset to switch between the virtual and physical worlds in order to observe and encode content would be far from ideal. If we instead adapt handwriting to virtual reality by letting the user write in virtual space, the clunky hand-based controllers and the lack of haptic feedback simulating a writing surface would make it difficult for the user to write accurately.

Furthermore, traditional methods are not three-dimensional and thus, lack the ability to fully capture high-dimensional simulations and experiences. Therefore, we present a first-principles approach to design and evaluate MemoryTree, a re-imagined form of note-taking functionality in a virtual environment.

4.2

MemoryTree Illustration

Figure 4-1: Step by step, the user explores the behavior between a charge particle and a magnetic dipole. After setting the charge parity to positive, the user observes a behavior and wonders how modifying the parity to negative might reveal different behavior. Thus, given the power to re-experience earlier explorations, the user replays the same scenario acted out by a ghost avatar and makes a modification. Finally, in Step 6, the user steps back and observes a different behavior.

Before we discuss the details of MemoryTree, we present a concrete example of how the user can interact with the system, to illustrate the importance of recordings and how they can be replayed both to review and to ask new questions. In general, the user starts a MemoryTree session in a state of exploration in which he or she can interact with objects in the scene. In our example using Electrostatic Playground, imagine a user who begins wondering how a single positive charge particle would behave in the presence of a magnetic dipole. Figure 4-1 depicts the sequence of steps the user takes to use recordings and to re-experience them while asking new questions.

First, in Steps 1 and 2 of Figure 4-1, the user creates a charge and a magnetic dipole and, while the physics simulation is playing, throws the positive charge towards the magnetic dipole and observes how it deviates to the left of the dipole. Throughout the user's exploration, MemoryTree records every interaction performed by the user as well as the state of the objects in the scene, until the user decides to replay the sequence of events.

After observing this behavior, in Step 3 the user wonders how a negative charge would react in the exact same scenario. MemoryTree lets the user pursue the answer to this question by supporting replay of the captured sequence of events. Next, the user presses the replay button, which is available on one of the user's hand-based tools.

At the beginning of the replay, shown as Step 4, the user observes a spawned ghost avatar who stands in for the user and performs the same actions in the scene. As before, the real user who is observing the recording sees the ghost avatar spawn a positive charge and magnetic dipole.

Now imagine that in Step 5, right before the ghost avatar throws the positive charge, the user edits the charge's properties to flip its parity to negative. In MemoryTree, modifications to the set of objects in the scene, such as a parity change, mark the moment at which the user asks a new question and resumes exploration.

As the replayed course of action proceeds, the real user observes how the same charge, with flipped parity, is thrown and deviates to the right of the magnetic dipole instead of the left. Finally, as shown in Step 6, the user can step back and draw insight from how a parity flip resulted in two very different behaviors.
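The reversal in Step 6 follows from the magnetic part of the Lorentz force, F = q(v × B). Flipping the sign of q flips the direction of the force while leaving its magnitude unchanged.

The sketch below treats the dipole's field as locally uniform at the charge's position, which is a simplification. Whether the deflection reads as "left" or "right" depends on the field direction and the coordinate convention, both of which are assumed here.

```python
def magnetic_force(q, v, B):
    """Magnetic part of the Lorentz force, F = q (v x B), for 3-vectors v and B."""
    cross = (
        v[1] * B[2] - v[2] * B[1],
        v[2] * B[0] - v[0] * B[2],
        v[0] * B[1] - v[1] * B[0],
    )
    return tuple(q * c for c in cross)


# A charge thrown along +z through a field pointing along +y (assumed directions).
velocity = (0, 0, 1)
field_b = (0, 1, 0)
positive = magnetic_force(+1, velocity, field_b)  # pushes toward -x
negative = magnetic_force(-1, velocity, field_b)  # pushes toward +x
```

The same throw with the same velocity therefore bends to opposite sides depending only on parity. This matches the behavior the user observes in Step 6.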

This illustration points to the significance of first capturing a series of motions and user interactions in virtual reality and later replaying those interactions to recreate the same scenario. Existing notions of recordings cannot be transferred to virtual motion recordings because of the stark difference in how the user experiences the replay. In MemoryTree, the user is fully immersed in the replayed recording and can change any aspect of the scene in real time to pivot perspectives and gain understanding. With this example in mind, we now elaborate on the reimagined fundamentals of note-taking within the context of MemoryTree.

4.3

MemoryTree Functions

MemoryTree is a standalone virtual reality module that satisfies design requirements built around the fundamentals of note-taking. Currently, MemoryTree is adapted to Electrostatic Playground, but our note-taking solution is designed to provide generic, flexible functionality that is compatible with other virtual learning applications. Paired with exploration-focused virtual environments, MemoryTree serves as the user's note-taking appendage, relieving the user of spending cognitive effort on taking notes during exploration. MemoryTree seamlessly captures user exploration experiences, simultaneously generates graphical summaries, and enables users to review their experiences and continue learning. We believe that MemoryTree is the missing component in many VR learning applications that lack the note-taking functionality one could use to learn while immersed.

At the most fundamental level, MemoryTree is built around the three necessary functions of note-taking: encoding, viewing, and modification. During exploration, the user can see a summary of his or her exploration, updated in real time, in the form of a graphical three-dimensional model that provides a chronological timeline of events. At the same time, MemoryTree encodes a three-dimensional recording that allows the user to review and revisit a previous exploration. Unlike traditional note-taking methods, automated note summarization allows for effective identification of key events that took place. In virtual reality, an unrealized potential is the ability to capture everything a user experiences in the virtual scene. Thus, MemoryTree captures multi-dimensional data and preserves it exactly as it was experienced, materializing the act of remembering an experience later. In other words, the user can become re-immersed in and re-experience a memory, or recorded learning experience. More specifically, a memory is encoded as a three-dimensional recording of the actions and movements occurring in the scene within a period of time. Lastly, enabling review by re-experiencing a recorded session raises the question of how to modify a recording; for that reason, the user is allowed to alter a revisited recording, which consequently starts a new and different recording.

MemoryTree manages three user states of interaction: exploring, reviewing, and exploring while reviewing. By default, the user is exploring within the educational environment. After some exploration, the user can press a replay button to rewatch his or her exploration from the very beginning. The transition from exploring to replay marks the end of an individual exploratory session and the beginning of review. As depicted in Figure 4-2, at the moment of replay the user enters the reviewing state and can watch a ghost avatar of himself or herself probe the environment, simulating the user's earlier interactions. If the user alters something in the scene by adding a new object, changing an existing one, or deleting something, the user enters the exploring while reviewing state. This state encapsulates the moment the user engages in generative learning, a method of discovering new ideas by experimenting with and reviewing existing knowledge and familiar information. The user remains in the exploring while reviewing state for the rest of the replayed recording and afterwards returns to the exploring state. In this way, the user expands upon an earlier memory by changing an element in the scene to observe different behaviors and outcomes. Next, we discuss design decisions around interactions in this new virtual medium and show how they fulfill the requirements of note-taking.
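The three states and their transitions can be summarized as a small state machine. This Python sketch is illustrative, and its names are hypothetical. Two behaviors that the text leaves open are assumptions:

- a replay that finishes without any modification also returns the user to exploring;
- pressing replay from any state restarts review.

```python
from enum import Enum, auto


class UserState(Enum):
    EXPLORING = auto()
    REVIEWING = auto()
    EXPLORING_WHILE_REVIEWING = auto()


class SessionStateMachine:
    def __init__(self):
        self.state = UserState.EXPLORING  # default state

    def press_replay(self):
        """Ends the current exploratory session; the ghost replay starts from the beginning."""
        self.state = UserState.REVIEWING

    def modify_scene(self):
        """Add, change, or delete an object. Returns True if this starts a new memory."""
        if self.state is UserState.REVIEWING:
            self.state = UserState.EXPLORING_WHILE_REVIEWING
            return True
        return False

    def replay_finished(self):
        """When the replayed recording ends, the user goes back to exploring."""
        self.state = UserState.EXPLORING
```

Only the first modification during a replay reports a new memory. Later edits in the same replay extend that memory rather than starting another one.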

Figure 4-2: The ghost avatar appears in the recording to stand in for the real user who is observing the replay in real time.

4.4

Encoding

Before diving into the details of note-taking functions, we first need to determine how information can be encapsulated into modular notes given the nature of VR content. As described earlier, in virtual reality every object, as well as the player, can be captured. Drawing inspiration from existing forms of notes, we introduce the notion of three-dimensional, fully immersive snapshots and recordings. While recordings preserve the user's full experience by capturing a series of interactions performed by the user, three-dimensional snapshots provide a compacted form of a recording by representing its key frames. Like chronologically ordered photos in a real-life scrapbook, snapshots remind the viewer of important events that occurred within a period of time. However, specific details fade from memory and can be recalled only by viewing a more comprehensive medium: a continuous recording.

4.5

3D Recordings

Three-dimensional motion recordings were first introduced in [16] and consist of captured sequential user actions and object movement. User actions consist of every function call that belongs to the player, ranging from controller movements to changing a charge particle's charge value. Because movement is captured, the user can move throughout the replayed recording to change his or her perspective and can even build on the captured interactions to make new discoveries. The concept of an interactive, immersive recording is especially powerful in an educational context, as the user has the freedom to view content from any angle and with any level of engagement to better understand concepts. As with real-life recordings made with cameras, the user can be an outside observer, but in virtual motion recordings the user has the added option of engaging with the recording without the constraint of a fixed perspective. Motion recordings capture the user, scene objects, and all interactions and simulations over time. For implementation details, refer to Section 5.
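One plausible representation of a motion recording is a time-stamped event log that is replayed in order to drive the ghost avatar. The thesis defers implementation details to Section 5. The `Event`, `Recording`, and `Replayer` names and the split into "transform" and "action" events are therefore assumptions for this sketch.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass(order=True)
class Event:
    time: float                          # seconds since the session began
    kind: str = field(compare=False)     # "transform" or "action"
    target: str = field(compare=False)   # object or controller identifier
    payload: Any = field(compare=False)  # pose, or action name and arguments


class Recording:
    def __init__(self):
        self.events = []

    def capture(self, time, kind, target, payload):
        self.events.append(Event(time, kind, target, payload))


class Replayer:
    """Feeds recorded events back in time order, e.g. to drive a ghost avatar."""

    def __init__(self, recording):
        self.events = sorted(recording.events)  # ordered by timestamp only
        self.cursor = 0

    def advance(self, now):
        """Return every event whose timestamp is <= now that has not been replayed yet."""
        due = []
        while self.cursor < len(self.events) and self.events[self.cursor].time <= now:
            due.append(self.events[self.cursor])
            self.cursor += 1
        return due
```

Recording actions such as a charge-value change, rather than only poses, is what would let the replayed scene behave identically to the original run.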

4.6

3D Snapshots

A virtual three-dimensional snapshot, or frame, on its own represents only a single point in time and is limited in representing concepts or events observed by actively engaging with objects in the scene. However, a compilation of sequential snapshots can tell a story even without filling in the gaps. Thus, throughout each exploration session, MemoryTree chronologically captures three-dimensional snapshots, which are discrete captures of objects in the scene. Figure 4-3 shows how every snapshot encodes the scene objects' visual components, such as placement, orientation, and scale, as well as other application-specific properties. Encoding snapshots alone, however, allows only surface-level content review and fails to preserve any movement. Capturing movement is a key component of virtual notes, as it lets users capture and review movement-dependent concepts such as particle charge attraction and its resulting impact on the state of the electric field. Recordings are the dynamic counterparts of snapshots, with the added component of motion.
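A snapshot can be modeled as an immutable copy of each object's visual state at capture time, so later edits to the live scene do not alter it. This sketch assumes a quaternion rotation and a free-form property dictionary. `LiveObject`, `ObjectState`, `Snapshot`, and `take_snapshot` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class LiveObject:
    """Mutable stand-in for an object in the running scene."""
    name: str
    position: list    # [x, y, z]
    rotation: list    # quaternion [x, y, z, w]
    scale: list       # [sx, sy, sz]
    properties: dict  # application-specific, e.g. {"charge": 1.0}


@dataclass(frozen=True)
class ObjectState:
    name: str
    position: tuple
    rotation: tuple
    scale: tuple
    properties: tuple  # sorted (key, value) pairs; a tuple keeps the snapshot immutable


@dataclass(frozen=True)
class Snapshot:
    time: float
    objects: tuple


def take_snapshot(time, scene):
    """Copy every object's visual state into an immutable snapshot."""
    return Snapshot(time, tuple(
        ObjectState(o.name, tuple(o.position), tuple(o.rotation), tuple(o.scale),
                    tuple(sorted(o.properties.items())))
        for o in scene
    ))
```

The copy-on-capture design is what keeps a snapshot static. It records where things were, not how they moved, which is why recordings are still needed.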

Figure 4-3: A three-dimensional snapshot represents the state of the scene at the moment of capture by detecting the objects and their respective position and orientation within the scene. In this figure, the user can see how the state of the room has changed over time with the addition of a Gaussian plane in the presence of charges.

In traditional note-taking methods, taking verbatim notes is inefficient and time-consuming. Learning processes and cognitive effort are less engaged because little thought about the content or structure of information is required. However, virtual verbatim notes can offer more learning potential precisely because they allow the user to change perspective and generate new experiences from existing ones. As alluded to earlier, notes in the real world, even three-dimensional ones, can only be seen and experienced exactly as they were originally seen. In the virtual world, however, the user's initial perspective of learning something is preserved, and during review he or she has the freedom to deviate from the original experience by asking different questions and consequently observing different outcomes.

MemoryTree captures verbatim notes autonomously to fully capture a user's exploratory learning experience, or memory. The user is relieved of any responsibility to decide what and when to encode and can therefore allocate more cognitive effort to learning by engaging with the virtual environment. Content capture is automatic rather than user-controlled because the user is expected to explore and therefore cannot anticipate when he or she will discover new information and build on existing knowledge. Furthermore, user-controlled encoding would capture only information perceived as important at first impression, further restricting future exploration to yet another fixed perspective.

4.7

Recording Camera Tool

As a precursor to recording representation in MemoryTree, we first developed a Recording Camera Tool, shown in Figure 4-4, to explore interactions surrounding recordings. We use a camera metaphor to clearly convey that the tool acts just like a physical camera for taking photos or videos. The 3D recording camera lets the user capture and view 3D snapshots and recordings. Using a simple button interface, the user can take a snapshot or start a recording of objects in Electrostatic Playground. For viewing, the user opened a toggleable menu that displayed selectable names of recordings and snapshots, as shown in Figure 4-5. In evaluation, we found the menu to be basic and uninformative about the contents of each encoded unit, how the scene is organized, and the time at which it was taken. With a camera tool interface, the user needs to constantly think about what to capture and in what form, which hinders the exploratory process of learning. More importantly, user-controlled recording produced disparate, individual recordings with no information on how concepts were tied together throughout exploration. As a result, insights from the pitfalls in the design of the Recording Camera Tool are incorporated into MemoryTree. Specifically, we provide content contextualization, encoding automation, and visualization of recording relationships.

4.8 3D Tree

MemoryTree mimics the natural process of learning by exploring and remembering those experiences. It also equips the user to revisit those experiences virtually and to generate further understanding through resumed exploration. In this section, we first discuss how the MemoryTree module supports learning by visually summarizing explored concepts with a graphical 3D model we call the tree. Next, we introduce the design of MemoryTree's review and modification capabilities.

Figure 4-4: The recording camera tool has a record or stop button for 3D recordings as well as a disk slot that can be touched to launch a library of existing recordings and snapshots.

Figure 4-5: Users can view existing 3D snapshots and recordings in the form of Polaroids and CDs, respectively. To view the content, users grab an item off the menu and place it into the disk slot below.

The tree is a graph composed of nodes and directed edges. Figure 4-6 color codes the different anatomical parts that make up the whole tree model. Each node within the tree is generated by taking a 3D snapshot of the virtual scene at a fixed time rate. Figure 4-7 shows how the tree organizes these nodes chronologically, using directed arrows as edges to represent order. Over an entire MemoryTree session, a single tree represents several memories, each represented as a branch of the tree. An n-degree graph structure, in which a node can have multiple children, best fits the need to represent cohesively how each memory can be extended to generate other memories, and so on. A branch, which consists of a linear sequence of nodes and edges, represents an individual memory generated by extending a previous memory. A new branch is generated the moment the user transitions from the reviewing state to the exploring-while-reviewing state, marking the generation of a new memory. Thus, the tree in Figure 4-7 shows that two recordings have been generated so far. While the user is in either of the two exploration states, the tree continues to grow at a fixed time rate as nodes are added to the branch. Consistent with cognitive limitations, MemoryTree allows the user to generate and grow only a single memory at any point in time. Thus, only one tree branch is active at a time; it is indicated visually by a path of white arrows and represents the memory the user is currently developing.
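The node, branch, and active-branch structure described above can be sketched as a small data model. This is a minimal Python sketch under stated assumptions, not the MemoryTree implementation: the class names `Node`, `Branch`, and `MemoryTree`, the `snapshot` dictionary standing in for a 3D scene capture, and the rule that a child branch's timeline begins at its parent node's time are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)
class Node:
    """A keyframe: one 3D snapshot of the scene (hypothetical representation)."""
    snapshot: dict  # placeholder for serialized scene state (assumption)
    time: float     # seconds on the recording's timeline
    # Directed edge to the next keyframe on the same branch.
    next: Optional["Node"] = field(default=None, repr=False)
    # Branches that fork off from this node.
    children: list = field(default_factory=list, repr=False)


@dataclass(eq=False)
class Branch:
    """A linear chain of nodes representing one memory."""
    branch_id: int
    parent_node: Optional[Node] = field(default=None, repr=False)
    nodes: list = field(default_factory=list, repr=False)

    @property
    def start_time(self) -> float:
        # Assumption: a child branch continues the timeline of its fork point.
        return self.parent_node.time if self.parent_node is not None else 0.0


class MemoryTree:
    def __init__(self, capture_interval: float = 5.0):
        self.capture_interval = capture_interval  # e.g. 5 or 10 seconds
        self.branches: list = []
        self.active: Optional[Branch] = None

    def start_branch(self, parent_node: Optional[Node] = None) -> Branch:
        """Create a new memory; it becomes the single active branch."""
        branch = Branch(branch_id=len(self.branches) + 1, parent_node=parent_node)
        if parent_node is not None:
            parent_node.children.append(branch)
        self.branches.append(branch)
        self.active = branch
        return branch

    def add_snapshot(self, snapshot: dict) -> Node:
        """Append a keyframe to the active branch; called once per capture interval."""
        if self.active is None:
            raise RuntimeError("no active branch")
        nodes = self.active.nodes
        node = Node(snapshot, self.active.start_time + len(nodes) * self.capture_interval)
        if nodes:
            nodes[-1].next = node  # directed edge encodes chronological order
        nodes.append(node)
        return node


# Usage mirroring Figure 4-6: Branches 2 and 3 fork from the same node of Branch 1.
tree = MemoryTree(capture_interval=5.0)
branch1 = tree.start_branch()
for i in range(4):
    tree.add_snapshot({"frame": i})
fork = branch1.nodes[1]
branch2 = tree.start_branch(parent_node=fork)
tree.add_snapshot({"frame": "b2-0"})
branch3 = tree.start_branch(parent_node=fork)
tree.add_snapshot({"frame": "b3-0"})
print(len(tree.branches), tree.active.branch_id, len(fork.children))  # 3 3 2
```

Keeping a single `active` reference is one simple way to enforce the one-memory-at-a-time constraint: starting a new branch implicitly deactivates the previous one.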

The tree supports learning throughout both exploration and review because it serves as a comprehensive view of the user's exploration history. Because it is ordered chronologically, the tree can be viewed as a three-dimensional timeline in which each node is a state of the room captured at a fixed interval, for example every 5 or 10 seconds. During review, as a 3D recording is replayed from the beginning, the user can refer to the tree to understand where in the recording he or she is.
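Because keyframes are captured at a fixed interval, finding the node that corresponds to the current replay position reduces to simple arithmetic. The sketch below assumes a uniform capture interval starting at time zero of the recording; the function name `keyframe_index` is hypothetical.

```python
import math


def keyframe_index(playback_time: float, capture_interval: float, num_nodes: int) -> int:
    """Index of the most recent keyframe at or before playback_time.

    Assumes keyframes were captured every `capture_interval` seconds,
    starting at time 0 of the recording.
    """
    if num_nodes <= 0:
        raise ValueError("branch has no keyframes")
    if playback_time < 0 or capture_interval <= 0:
        raise ValueError("time must be non-negative and interval positive")
    # Clamp so that playback past the last capture still maps to the final node.
    return min(math.floor(playback_time / capture_interval), num_nodes - 1)


print(keyframe_index(12.0, 5.0, 6))   # 2
print(keyframe_index(99.0, 10.0, 4))  # 3
```

A replay loop could call such a function on each frame and highlight the returned node, which gives the user the "where am I in the recording" cue described above.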

More specifically, the tree provides contextualization by highlighting individual keyframes, or nodes, as they are experienced in the scene, as can be observed in Figure 4-7. Directed edges denote not only the chronological relationship between individual nodes but also the parent-child relationship between branches, which helps the user understand how a particular memory was derived from earlier memories. Furthermore, the chronological order of branches enables another use case for the tree: a compare-and-contrast tool. Branches indicate how recordings have diverged from one another due to the introduction of a single modification. Because nodes are ordered along the same axis representing time, the user can infer how a single modification results in different outcomes and behaviors of objects in the scene.

Figure 4-6: The tree can be broken down into branches, and each branch in turn consists of nodes. Each branch in this figure is labeled with a different color block. The circled number to the right of every leaf node specifies the order in which each branch was generated. Branches 2 and 3 were generated by branching off from the same parent node in Branch 1.
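The compare-and-contrast use of diverging branches can be illustrated by aligning a parent branch and a child branch on their shared time axis and locating the first step at which they differ. This Python sketch is illustrative only. The helper names `align_branches` and `first_divergence` are hypothetical, as are the position values. It also assumes that both branches share one capture interval and that the child's first keyframe is captured at the fork node's time.

```python
import operator
from typing import Callable, List, Optional, Sequence, Tuple, TypeVar

T = TypeVar("T")


def align_branches(parent: Sequence[T], fork_index: int, child: Sequence[T]) -> List[Tuple[T, T]]:
    """Pair parent and child keyframes that share a timestamp.

    Assumes both branches use the same capture interval and that the child's
    first keyframe is captured at the time of parent[fork_index].
    """
    if not 0 <= fork_index < len(parent):
        raise IndexError("fork_index out of range")
    return list(zip(parent[fork_index:], child))


def first_divergence(pairs: Sequence[Tuple[T, T]],
                     differs: Callable[[T, T], bool] = operator.ne) -> Optional[int]:
    """Return the first aligned step at which the two branches differ, or None."""
    for step, (a, b) in enumerate(pairs):
        if differs(a, b):
            return step
    return None


# Hypothetical 1D positions of a charge in a parent branch and in a child
# branch that forks off at keyframe 1 of the parent.
parent_positions = [0.0, 1.0, 2.0, 3.0]
child_positions = [1.0, 0.5, 0.2]
pairs = align_branches(parent_positions, 1, child_positions)
print(pairs)                    # [(1.0, 1.0), (2.0, 0.5), (3.0, 0.2)]
print(first_divergence(pairs))  # 1
```

Passing a custom `differs` callable (for example, one with a distance tolerance) would let the comparison ignore negligible differences between real scene snapshots.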

4.8.1 Tree Comparison Use Case Scenario

To illustrate the comparison capabilities of the tree, we return to the use case scenario first introduced in Section 4.1, in which a user is immersed in Electrostatic Playground using the MemoryTree module. While the user observes and replays the recording, the sequence of interactions is captured in a linear branch of the tree that shows keyframes of the recording. When the system detects the change in the charge's polarity, it branches off from the existing recording and begins a new one. The two diverging recordings are represented as physical branches in the tree model, illustrating how the single modification resulted in two different outcomes. The overlaid comparison depicted in Figure 4-8 shows the insight the user can gain by comparing the two branches of the tree. Because this use case demonstrates the comparison capabilities of the tree, we use it to test the functionality of MemoryTree.

Figure 4-7: The 3D tree model contains miniature previews, or snapshots, of the virtual room as nodes and uses directed edges to declare the relationships between snapshots and between branches of the tree. The "active" branch currently being encoded in this picture is denoted with white intermediate arrows. In the scene, non-active branches have black arrows, but for visibility they appear as red arrows in this picture.
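The branching behavior in this scenario can be sketched as a small state machine. The sketch is hypothetical in every named part: the mode names, the `SessionController` class, and the rule of detecting a modification by comparing the live scene state against the replayed keyframe are assumptions made for illustration. None of them is MemoryTree's documented interface.

```python
from enum import Enum, auto
from typing import Any, List, Optional, Tuple


class Mode(Enum):
    EXPLORING = auto()
    REVIEWING = auto()
    EXPLORING_WHILE_REVIEWING = auto()


class SessionController:
    """Hypothetical controller that forks a new branch when a replay is modified."""

    def __init__(self) -> None:
        self.mode = Mode.EXPLORING
        self.active_branch = 1  # the first memory is branch 1
        self.reviewed_branch: Optional[int] = None
        self._next_branch_id = 2
        # Each fork is recorded as (parent_branch, keyframe_index, child_branch).
        self.forks: List[Tuple[int, int, int]] = []

    def start_review(self, branch_id: int) -> None:
        self.mode = Mode.REVIEWING
        self.reviewed_branch = branch_id

    def on_scene_update(self, recorded: Any, live: Any, keyframe_index: int) -> Optional[int]:
        """Fork a new branch if the user changed the scene during review."""
        if self.mode is not Mode.REVIEWING or live == recorded:
            return None
        child = self._next_branch_id
        self._next_branch_id += 1
        self.forks.append((self.reviewed_branch, keyframe_index, child))
        self.active_branch = child  # only one branch grows at a time
        self.mode = Mode.EXPLORING_WHILE_REVIEWING
        return child


# The polarity scenario: review recording 1, then flip the sign of the charge.
session = SessionController()
session.start_review(1)
print(session.on_scene_update({"charge": +1}, {"charge": +1}, 0))  # None
print(session.on_scene_update({"charge": +1}, {"charge": -1}, 2))  # 2
print(session.mode)  # Mode.EXPLORING_WHILE_REVIEWING
```

In this sketch, a fork can occur only from the reviewing state, which matches the rule that a new branch appears when the user moves from reviewing to exploring while reviewing.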

Figure 4-8: This figure illustrates how captured recordings can be reused to ask new questions and observe different behavior. In this use case, the user first creates recording 1, shown on the left, in which a positive charge is thrown towards a magnetic dipole. During the replay of recording 1, however, the user quickly changes the charge's polarity, triggering the creation of recording 2, and observes how a negative charge reacts to the magnetic dipole differently.

4.9 Replaying

Another important learning method that immediately follows exploration and content encoding is review: the process of repeating exposure to information in order to improve retention. Without virtual reality, note review may involve going over a series of mathematical steps, rereading slides from a lecture, or rereading one's handwritten notes. Traditional note-taking risks missing important information, which then limits what can be learned during review. In addition to providing a compact view of explorations and findings, MemoryTree re-immerses the user in verbatim experiences, where the user can repeat exposure and experiment with previously unasked questions.