Augmenting Anomaly Detection for Autonomous Vehicles With Symbolic Rules

by

Tianye Chen

Submitted to the Department of Electrical Engineering and Computer Science

in partial fulfillment of the requirements for the degree of

Master of Engineering in Electrical Engineering and Computer Science

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

June 2019

© Tianye Chen, MMXIX. All rights reserved.

The author hereby grants to MIT permission to reproduce and to distribute publicly paper and electronic copies of this thesis document in whole or in part in any medium now known or hereafter created.

Author . . . .

Department of Electrical Engineering and Computer Science

May 24, 2019

Certified by . . . .

Lalana Kagal

Principal Research Scientist

Thesis Supervisor

Accepted by . . . .

Katrina LaCurts

Chairman, Masters of Engineering Thesis Committee

Augmenting Anomaly Detection for Autonomous Vehicles With Symbolic Rules

by

Tianye Chen

Submitted to the Department of Electrical Engineering and Computer Science on May 24, 2019, in partial fulfillment of the requirements for the degree of

Master of Engineering in Electrical Engineering and Computer Science

Abstract

My research investigates the issues in anomaly detection as applied to autonomous driving that are created by the incompleteness of training data. I address these issues through the use of a commonsense knowledge base, a predefined set of rules regarding driving behavior, and a means of updating the base set of rules as anomalies are detected. To explore this problem, I built a hardware platform that was used to evaluate existing anomaly detection developed within the lab and that will serve as an evaluation platform for future work in this area. The platform is based on the open-source MIT RACECAR project, which integrates the most basic components of an autonomous vehicle (lidar, camera, accelerometer, and computer) onto the frame of an RC car. We created a set of rules regarding traffic light color transitions to test the car’s ability to navigate cones (which represent traffic light colors) and detect anomalies in the traffic light transition order. Anomalies regularly occurred in the car’s driving environment, and its driving rules were updated as a consequence of the logged anomalies. The car successfully navigated the course, and the rules (plausible traffic light color transitions) were updated when repeated anomalies were seen.

Thesis Supervisor: Lalana Kagal

Title: Principal Research Scientist

Acknowledgments

I would like to thank my advisor Lalana Kagal and PhD students Leilani Gilpin and Ben Yuan for their continued advice and support on this project.

Contents

1 Introduction

2 Related Work

2.1 Training data gap

2.2 Association rule learning

2.3 Reinforcement learning

2.4 Diagnostic systems

2.5 Adversarial attacks and security applications

2.6 Overview of related work

3 System Design

3.1 RACECAR architecture

3.1.1 Localization

3.1.2 Vision

3.1.3 Path planning

3.1.4 Visualization

3.2 Commonsense monitor

3.3 Driving rules

3.4 Updating rules

4 Evaluation and Results

4.1 Testing setup

4.2.1 Anomaly detection

5 Conclusion

5.1 Future Work

5.2 Summary

List of Figures

2-1 Noise is generated for each pixel with respect to the underlying gradient for the pixel in the model. The network believes the altered image is a gibbon when it looks the same as the unaltered image to the human eye [14]

2-2 Stop sign and its modifications [10]

3-1 The fully assembled RACECAR.

3-2 The map of a section of the basement of MIT’s Ray and Maria Stata Center.

3-3 We are looking for the horizontal angle $\beta$ that the cone makes with the camera. The distance between the cone and the center of the image ($b$) is averaged between the left and right images. The angle $\beta$ can be calculated by the following equation: $\beta = \arctan\left(\frac{2b}{\mathit{width}}\tan(\alpha)\right)$

3-4 The distance from the car to the cone can be calculated using the parallax between the left and right images. We want to solve for the distance $z$: $z = \frac{Bf}{x - x'}$

3-5 Waypoints picked around the basement connected with a straight line path.

3-6 Cone path construction process.

3-7 Rviz shows that the lidar points match the map (the rainbow points), the location of the green cone seen, and the path planned around the green cone. The set of red arrows shows the estimation of the car’s pose.

3-8 Symbolic breakdown of sentences of scene descriptions and their respective reasonableness [13]

3-9 Computer architecture for autonomous driving [18]

List of Tables

4.1 The five chosen sequences of traffic lights without transition errors.

4.2 The five chosen sequences of traffic lights with some random transition errors.

4.3 The five chosen sequences of traffic lights with repeating transition errors.

Chapter 1

Introduction

The autonomous driving space has expanded dramatically over the last fifteen years. In 2004, the Defense Advanced Research Projects Agency (DARPA) announced the first Grand Challenge [6], in which one million dollars would be awarded to the team that could build a car able to autonomously navigate a rugged desert course. None of the fifteen finalists finished the course. Fourteen years later, Waymo, a leading self-driving company that grew out of a team of engineers who participated in the original DARPA Grand Challenge, debuted its first self-driving taxi service in Arizona [9].

Two popular autonomous driving methods that are currently being explored are behavioral reflex and mediated perception. Behavioral reflex maps sensor data directly to a driving output [15] whereas mediated perception parses the entire scene into discrete components before making a driving decision [15]. Both approaches make use of machine learning techniques, which are opaque in their decision making, in the intermediary stages between input and output. Due to the uninterpretable nature of the space between the input and the output of the network, the decisions made by the network are often difficult to understand or explain.

Autonomous driving systems are not error free, and their decisions are usually made with models formed from training data. Driving is a complex task, and corner cases lack the large volume of training data necessary for models to make the correct decision based on input. Instead of exhausting all possibilities for driving corner cases, I propose that the autonomous system evaluate a new situation using two components, commonsense and a set of driving rules, and then explain its decision based on this information. Analogous to how humans approach a new situation, the autonomous vehicle would make a decision using prior knowledge and applicable driving rules. Those driving rules can then be updated as the car witnesses new situations.

It is common to use models based on training data within self-driving solutions. Machine learning techniques lend themselves to identifying and classifying scenes and actions similar to ones they have already seen. Perception networks are trained to recognize familiar situations, since the classifier itself depends on the training data used to create it. Pattern recognition and general classification can typically guide the car to a reasonable decision during normal driving situations. However, driving is a complex task, and it is difficult to capture every possible situation a driver may face on the road. In unpredictable situations, a classification error made by a machine learning model can potentially cost a human life [7]. In Uber’s fatal accident with a pedestrian walking her bike across the street [7], the car had trouble classifying Elaine Herzberg as a pedestrian. The autonomous system initially classified her as a car, then as a bicycle, before ultimately classifying her as a pedestrian too late [5]. The uncertainty in the classification could be detected as an anomaly by a commonsense rule base, since it is not reasonable for an object to morph from a car to a bike to a human. The accident might have been avoided had there been a commonsense knowledge base in place to recognize that the situation was abnormal and alert the car earlier to take the appropriate safety measures.

When the vehicle makes a decision on unfamiliar data based on previous data, the conclusion it reaches can often be unreasonable. For example, an AI recently interpreted a face on the side of a bus as a person jaywalking [1]. A human who saw the bus crossing the intersection would not think that the advertisement on the side of the bus was breaking the law by jaywalking. The AI lacks the common sense that humans have in discerning what is reasonable and what is not.

Training data can be supplemented by commonsense along with a set of rules regarding appropriate behavior.

My system, implemented as a monitor around the self-driving unit, checks vehicle data and decisions for expected validity and reasonableness. A decision is reasonable if it follows the rule set and commonsense knowledge base for the autonomous system. Similar to how biologists are able to classify newly discovered species using a set of taxonomy rules, the set of driving rules can be applied to unforeseen situations that the system has never encountered before to make inferences about what driving actions are reasonable. As the autonomous vehicle encounters new situations, the set of driving rules can be updated to reflect acceptable behavior and expected encounters in the driving world.

In this thesis, I built a hardware platform based on the open-source MIT RACECAR [4] project to evaluate anomaly detection and rules updating on real world data as opposed to simulations and imaginary hard coded situations. I examined how a symbolic reasoner can most efficiently assist a real autonomous driving system in detecting anomalies and inconsistencies with driving rules. Along with Leilani Gilpin, I also developed a mechanism to learn new driving rules from the rate of and similarities between the anomalies seen.

The thesis is organized as follows. Chapter 2 compares and contrasts my work with related work. In Chapter 3, I detail the system design of the hardware platform I built to evaluate anomaly detection and rules updating on real world data. The results of hardware testing and the rule updater are detailed in Chapter 4. The hardware platform built for this thesis is capable of updating a more complicated rule base with the utilization of more of the data that is available to the autonomous machine. I conclude with a summary and future research directions in Chapter 5.

Chapter 2

Related Work

In this chapter, I describe existing literature in the area of anomaly detection as well as discuss other kinds of applications of anomaly detection.

2.1 Training data gap

One of our goals is to tackle a limitation with current machine learning techniques, which is that the trained model can only act predictably on data similar to the data it was trained on.

Recent work by Ramakrishnan et al. tackles a similar problem: gaps in performance when models trained on simulator data are applied to real-world scenarios [20]. The problem is that the model never encounters some aspects of the real world in the simulation, such as emergency vehicles, so the model learns to ignore aspects of the world that it has never seen. Ramakrishnan et al.’s approach aims to find blind spots before acting in the real world. The solution is to have the autonomous system hand off control to a human when it is not confident of the state. For example, if the autonomous agent has a noisy feature that can predict ambulances 30% of the time, the model learns to transfer control to a human when it reaches that state.

This approach is dependent on having a human in the loop and tries to anticipate the uncertainty before it happens, whereas my approach aims to catch anomalies as they appear in real time. As we move towards fully autonomous vehicles, it is important to be able to detect anomalies and respond appropriately to new situations.

2.2 Association rule learning

In the financial fraud space, there has also been work done on using rules to find fraudulent financial transactions. Sarno et al. used a learned set of rules to detect fraud, which parallels finding anomalies in autonomous driving decisions [21]. Positive and negative association rules are applied to the data, which makes each data entry positively or negatively associated with fraud. The association rules were generated using an apriori algorithm and expert verification of fraud cases.

This approach to finding fraud or anomalies in financial transaction data is similar to my approach to finding anomalies in driving data, in that the data is filtered through a hard set of rules that determines whether what the autonomous vehicle observed accords with the rules. However, the set of rules used by Sarno et al. is based on training data, which still inherently cannot cover the corner cases of fraudulent behavior. By using strict driving rules that govern the correct behavior of the vehicle and the world around the car, my system does not rely solely on training data to detect anomalies. In addition, the set of rules in the fraud study is created from one set of training data and used on a different set of data to find fraudulent transactions. Unlike in this thesis, the set of rules is never updated after seeing a new set of data, in which fraud could manifest itself in a different manner.

2.3 Reinforcement learning

Since autonomous vehicles have been shown to be prone to error [7], it is necessary to consider safety measures for these autonomous systems. One way to make these systems safer is to have them learn from their mistakes. This has led many manufacturers and researchers to use reinforcement learning (RL). In RL, an autonomous agent processes an action, a state, and a reward to learn an optimal policy for future decisions. Good actions receive positive rewards, and bad or erroneous actions receive negative rewards. This is similar to learning new rules from anomalous sightings; however, RL can also facilitate human intervention when the agent is behaving badly by training the model to alert a human in unsafe situations [22]. Most research and examples are limited to gaming environments rather than real-world settings. RL has also been shown to be useful in autonomous vehicle negotiation [23], but without the ability to explain how and why the agents came to a specific conclusion.

2.4 Diagnostic systems

Diagnostic systems have also been explored as a way to mediate abnormal and critical situations in autonomous systems. This type of diagnostic system has been applied to underwater vehicles. In autonomous underwater vehicles, the state of the critical sensors is checked against a knowledge base of known critical situations and their critical sensor states [16]. The ontology consists of a set of situations, each of which contains an antecedent that sets the conditions of situation execution and a consequent that sets the actions to be executed if the condition is true. In this case, the knowledge base and semantic net are used to check for proper handling of critical situations rather than for anomaly detection.

2.5 Adversarial attacks and security applications

An interesting application of anomaly detection, beyond catching mistakes made by neural networks, is finding unreasonable deductions caused by an adversary with malicious intent. Adversaries may induce the model to come to an inaccurate but precise answer.

Adversaries may be able to manipulate the image so that the neural network produces an incorrect result even when the image does not appear different to human eyes. Adding well-crafted noise to the image can induce a trained network to give the wrong prediction [14] (Fig. 2-1).

Figure 2-1: Noise is generated for each pixel with respect to the underlying gradient for the pixel in the model. The network believes the altered image is a gibbon when it looks the same as the unaltered image to the human eye [14]

One would want the car to come to the same conclusion when processing two different pictures that appear the same to the human eye. When the autonomous system comes to a conclusion that differs from the information presented in the scene, we would like the system to realize that its conclusion is unreasonable. In an automotive application, stop signs augmented with black and white patches that otherwise look like graffiti caused LISA-CNN, which has 91% test accuracy, to misclassify stop signs as speed limit 45 mph signs [10] (Fig. 2-2). However, a system based on commonsense such as ours would have inferred that it is unlikely and unreasonable to see a speed limit 45 mph sign at a residential intersection, which is where stop signs normally appear.

Another example is the research study published by Tencent Keen Security Lab regarding Tesla’s Autopilot system. Tencent placed three inconspicuous stickers on the road; Autopilot interpreted the stickers as a lane marking and directed the car into the opposing traffic lane [3]. It is unreasonable to head into oncoming traffic when cars are approaching from the opposite direction. A commonsense approach would detect the action of heading into oncoming traffic as an anomaly.

2.6 Overview of related work

In summary, current approaches to anomaly detection still rely on training data to create the rules or require a human in the loop to handle cases when a potential anomaly occurs. Training data cannot encapsulate all possible cases, and thus the rules generated from training data cannot successfully classify all inputs. Our approach begins with a set of concrete rules (in this case, known knowledge of traffic light transitions) and a commonsense knowledge base and then flags anomalies when the data does not follow the set of concrete rules. When anomalous behavior is observed multiple times, the rules updater can then add new rules to the rule base as appropriate.

Chapter 3

System Design

This chapter describes the design and architecture of the hardware and software components I developed.

The hardware platform I built is based on the open-source MIT RACECAR [4] hardware platform. It has basic perception and motion planning capabilities that can provide realistic data for the reasonableness monitor and the rule updating component. The vehicle navigates itself in a clockwise loop around a collection of cones that represent traffic light colors. In a real-world scenario, when we drive on the road and approach a traffic light, we expect a certain order of light transitions. Lights in the correct sequence appear in a cycle of green, yellow, then red. The RACECAR is given a set of rules that define the traffic light patterns and therefore expects the lights (cones) to appear in a certain order. A group of cones represents a single traffic light pattern, while each individual cone represents a color that a car would see at a traffic light. For example, a single green cone means that the car sees a green light and simply drives through. A group of red and green cones represents a car pulling up to a stop light and then leaving when the light turns green.

I introduced anomalies in the traffic light patterns by changing the order in which lights appeared and evaluated whether the reasonableness monitor was able to detect them. I also used a rule updating framework to add new rules regarding light transition patterns to the car’s rules knowledge base, based on traffic light transition expectations and observed anomalies.

3.1 RACECAR architecture

A Traxxas Slash RC racing car is modified to include a Jetson TX2, a Hokuyo lidar, a ZED stereo camera, an inertial measurement unit (IMU), and a VESC-based motor controller [8]. ROS Kinetic runs on the Jetson TX2 and is responsible for the computation and decision making of the car (Fig. 3-1).

Figure 3-1: The fully assembled RACECAR.

3.1.1 Localization

The vehicle is given a map of the area it will traverse (Fig. 3-2) and localizes itself within the map using lidar and odometry data. A particle filter implementing the Monte Carlo Localization (MCL) algorithm combines this data with the motion and sensor models to estimate the car’s pose within the map.
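The predict, weight, and resample cycle behind MCL can be illustrated with a minimal sketch. It is not the RACECAR implementation: it assumes a one-dimensional corridor with a single wall, a range sensor that measures the distance to that wall, and hypothetical noise parameters (`motion_noise`, `sensor_noise`). The function name `mcl_step` is also illustrative.

```python
import math
import random


def mcl_step(particles, control, measurement, expected_measurement,
             motion_noise=0.05, sensor_noise=0.2, rng=random):
    """Run one predict / weight / resample cycle over 1-D pose hypotheses."""
    # Predict: apply the odometry reading to every particle, with added noise.
    moved = [p + control + rng.gauss(0.0, motion_noise) for p in particles]

    # Weight: score each particle by how closely the measurement it would
    # expect matches the actual measurement (Gaussian likelihood).
    weights = []
    for p in moved:
        error = measurement - expected_measurement(p)
        weights.append(math.exp(-0.5 * (error / sensor_noise) ** 2))

    if sum(weights) == 0.0:
        # Every hypothesis is implausible; keep the predicted set unchanged.
        return moved

    # Resample: draw a new particle set in proportion to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))


# Hypothetical corridor: a wall at x = 10 m, the car starts at x = 2 m
# and advances 0.5 m per step. The sensor reports the distance to the wall.
rng = random.Random(0)
WALL = 10.0
particles = [rng.uniform(0.0, WALL) for _ in range(500)]
true_x = 2.0
for _ in range(10):
    true_x += 0.5
    particles = mcl_step(particles, 0.5, WALL - true_x,
                         lambda x: WALL - x, rng=rng)
estimate = sum(particles) / len(particles)
```

The real system localizes in two dimensions plus heading and scores particles against a full lidar scan, but the three stages are the same.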

3.1.2 Vision

An on-board stereo camera is used to detect cones and place them on the map accordingly. The car drives slowly, so the camera images are processed at a rate of 5 Hz. Since the camera is only looking for cones straight ahead, I remove the top and bottom thirds of the image, as cones could not possibly appear above the ground or be blocking the car.

Figure 3-2: The map of a section of the basement of MIT’s Ray and Maria Stata Center.

The image is converted to HSV values and masked using empirically tested red, green, and yellow thresholds to find and locate the cones in the image. If a contour is found, the distance (Fig. 3-4) and angle (Fig. 3-3) from the car are calculated using the left and right images from the stereo camera.
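The cropping and HSV masking steps can be sketched with the standard library alone. The hue ranges and the saturation and value floors below are hypothetical, since the empirically tuned thresholds are not given here. The sketch also counts matching pixels rather than extracting contours, which is a simplification of the step described above.

```python
import colorsys

# Hypothetical hue ranges in degrees; the real thresholds were tuned empirically.
HUE_RANGES = {
    "red": [(0, 15), (345, 360)],
    "yellow": [(40, 70)],
    "green": [(90, 150)],
}
MIN_SATURATION = 0.5  # assumed floor that rejects washed-out pixels
MIN_VALUE = 0.3       # assumed floor that rejects dark pixels


def classify_pixel(r, g, b):
    """Return 'red', 'yellow', 'green', or None for an 8-bit RGB pixel."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    if s < MIN_SATURATION or v < MIN_VALUE:
        return None
    hue = h * 360.0
    for color, ranges in HUE_RANGES.items():
        if any(low <= hue <= high for low, high in ranges):
            return color
    return None


def middle_third(image_rows):
    """Drop the top and bottom thirds of the image rows."""
    n = len(image_rows)
    return image_rows[n // 3: n - n // 3]


def dominant_cone_color(image_rows, min_pixels=3):
    """Return the most common cone color in the cropped image, if any."""
    counts = {}
    for row in middle_third(image_rows):
        for r, g, b in row:
            color = classify_pixel(r, g, b)
            if color is not None:
                counts[color] = counts.get(color, 0) + 1
    if not counts:
        return None
    color, count = max(counts.items(), key=lambda item: item[1])
    return color if count >= min_pixels else None


# Tiny synthetic image: a red top row (cropped away), green middle rows,
# and gray rows elsewhere.
GRAY, RED, GREEN = (128, 128, 128), (255, 0, 0), (0, 255, 0)
image = [[RED] * 4, [GRAY] * 4, [GREEN] * 4, [GREEN] * 4, [GRAY] * 4, [GRAY] * 4]
detected = dominant_cone_color(image)
```

Working in HSV keeps the color test largely independent of brightness, which is why hue ranges rather than RGB ranges are thresholded.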

The angle, color, and distance of the cone are published on a cone topic to which the controller is subscribed. The image processor always publishes the cone that it sees, even if it has seen it before. It is up to the controller to decide whether the image processor is seeing a new cone or the same cone again.
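The angle and distance computations from Figures 3-3 and 3-4 can be written out as a short sketch. Two assumptions are mine and are not stated in the text: $\alpha$ is taken to be half of the camera’s horizontal field of view, and the angle equation is read as $\beta = \arctan\left(\frac{2b}{\mathit{width}}\tan(\alpha)\right)$. The function and parameter names are illustrative.

```python
import math


def cone_bearing(b_left, b_right, image_width, half_fov):
    """Horizontal angle beta to the cone, in radians.

    b_left and b_right are the pixel offsets of the cone from the image
    center in the left and right images; they are averaged as in Fig. 3-3.
    half_fov is alpha, assumed here to be half the horizontal field of view.
    """
    b = (b_left + b_right) / 2.0
    return math.atan(2.0 * b / image_width * math.tan(half_fov))


def cone_distance(x_left, x_right, bf):
    """Distance z = Bf / (x - x') from the stereo disparity, as in Fig. 3-4.

    bf is the product of baseline and focal length, which the thesis
    fits empirically by linear regression.
    """
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a cone in front of the car")
    return bf / disparity


# A cone at the right edge of the image lies at the edge of the field of view.
edge_angle = cone_bearing(320, 320, 640, math.radians(45))
# Hypothetical Bf value and pixel columns, chosen only to show the arithmetic.
distance = cone_distance(400, 380, 100.0)
```

Under that reading, a cone at the image edge (b equal to half the width) yields an angle equal to $\alpha$, which is a quick sanity check on the formula.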

3.1.3 Path planning

A Probabilistic Roadmap Method (PRM) path planner is used for path planning around the map. Initially, waypoints are manually placed on the map starting at the top left-hand corner and proceeding in a clockwise fashion (Fig. 3-5). PRM is used to plan a path from waypoint to waypoint. PRM first randomly generates nodes across the entire map; I seeded the map with 4000 nodes. Nodes that do not have interference (i.e., the edge between them does not go through a wall) are connected if the Euclidean distance between them is less than a specified parameter. The web of connected nodes is generated once and saved, and the saved web is loaded every time the ROS path service is restarted.

Figure 3-3: We are looking for the horizontal angle $\beta$ that the cone makes with the camera. The distance between the cone and the center of the image ($b$) is averaged between the left and right images. The angle $\beta$ can be calculated by the following equation:

$$\beta = \arctan\left(\frac{2b}{\mathit{width}}\tan(\alpha)\right)$$

A path is created between the start and next goal point using Dijkstra’s search algorithm with Euclidean distance between nodes as weights.

The ROS path service uses the PRM path planner to plan a path from waypoint to waypoint.
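The roadmap construction and the search over it can be sketched as follows. This is a minimal illustration and not the ROS path service: the map is a hypothetical 10 m square with one vertical wall, the node count is far smaller than 4000, and the names `build_roadmap`, `dijkstra`, and `crosses_wall` are assumptions made for the example.

```python
import heapq
import math
import random


def build_roadmap(num_nodes, size, max_edge, is_free, rng):
    """Sample nodes and connect nearby pairs whose edge is collision-free."""
    nodes = [(rng.uniform(0, size), rng.uniform(0, size)) for _ in range(num_nodes)]
    edges = {i: [] for i in range(num_nodes)}
    for i in range(num_nodes):
        for j in range(i + 1, num_nodes):
            d = math.hypot(nodes[i][0] - nodes[j][0], nodes[i][1] - nodes[j][1])
            if d < max_edge and is_free(nodes[i], nodes[j]):
                edges[i].append((j, d))
                edges[j].append((i, d))
    return nodes, edges


def dijkstra(edges, start, goal):
    """Return (cost, path) for the shortest path, or (inf, []) if unreachable."""
    best = {start: 0.0}
    previous = {}
    queue = [(0.0, start)]
    while queue:
        cost, node = heapq.heappop(queue)
        if node == goal:
            path = [goal]
            while path[-1] != start:
                path.append(previous[path[-1]])
            return cost, path[::-1]
        if cost > best.get(node, math.inf):
            continue  # stale queue entry
        for neighbor, weight in edges[node]:
            new_cost = cost + weight
            if new_cost < best.get(neighbor, math.inf):
                best[neighbor] = new_cost
                previous[neighbor] = node
                heapq.heappush(queue, (new_cost, neighbor))
    return math.inf, []


def crosses_wall(a, b, wall_x=5.0, wall_top=8.0):
    """Hypothetical map: a vertical wall at x = wall_x from y = 0 to wall_top."""
    if (a[0] - wall_x) * (b[0] - wall_x) >= 0:
        return False  # both endpoints lie on the same side of the wall
    t = (wall_x - a[0]) / (b[0] - a[0])
    return a[1] + t * (b[1] - a[1]) <= wall_top


rng = random.Random(0)
nodes, edges = build_roadmap(200, 10.0, 2.0,
                             lambda a, b: not crosses_wall(a, b), rng)
cost, path = dijkstra(edges, 0, 1)
```

Because the roadmap depends only on the map, it can be built once and cached, which matches the save-and-reload behavior described above; only the Dijkstra query runs per waypoint pair.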

When the car detects a cone, it must re-plan a path around the cone in the correct direction (right for green, left for red and yellow). The planner constructs a straight path to the correct side of the cone and re-plans a path from the current point to one end point of the straight line and from the other end of the straight line back onto the originally planned path.

Figure 3-4: The distance from the car to the cone can be calculated using the parallax between the left and right images. We want to solve for the distance $z$:

$$z = \frac{Bf}{x - x'}$$

The term $Bf$ was determined empirically through linear regression.

To create the straight line to the side of the cone, a line perpendicular to the original base path is constructed through the cone. Two concentric circles are drawn around the cone, and the point where the perpendicular line intersects the inner circle becomes the midpoint of the straight path. The end points of the path are constructed by drawing the straight line parallel to the base path and through the midpoint (Fig. 3-6).
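The construction can be sketched geometrically. The text does not say where the end points fall, so this sketch assumes they lie where the parallel line meets the outer circle. The radii are hypothetical values, and "right" and "left" are interpreted relative to the car’s direction of travel along the base path.

```python
import math

# Passing side per cone color, from the driving rules in this chapter.
PASS_ON_RIGHT = {"green": True, "red": False, "yellow": False}


def detour_segment(cone, heading, color, r_inner=0.5, r_outer=1.0):
    """Return (entry, midpoint, exit) of the straight path beside a cone.

    cone is the (x, y) cone position, heading is the direction of the
    base path near the cone, and the radii (in meters) are assumed values.
    """
    hx, hy = heading
    norm = math.hypot(hx, hy)
    hx, hy = hx / norm, hy / norm

    # Unit normal to the base path, pointing to the side the car should use.
    if PASS_ON_RIGHT[color]:
        nx, ny = hy, -hx   # right of the direction of travel
    else:
        nx, ny = -hy, hx   # left of the direction of travel

    # Midpoint: where the perpendicular through the cone meets the inner circle.
    mid = (cone[0] + r_inner * nx, cone[1] + r_inner * ny)

    # Assumed end points: where the parallel line meets the outer circle.
    half = math.sqrt(r_outer ** 2 - r_inner ** 2)
    entry = (mid[0] - half * hx, mid[1] - half * hy)
    exit_point = (mid[0] + half * hx, mid[1] + half * hy)
    return entry, mid, exit_point


# Car travelling east past a green cone at the origin: the detour lies to the south.
entry, mid, exit_point = detour_segment((0.0, 0.0), (1.0, 0.0), "green")
```

The planner would then connect the car’s current position to `entry` and connect `exit_point` back to the base path, as described above.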

3.1.4

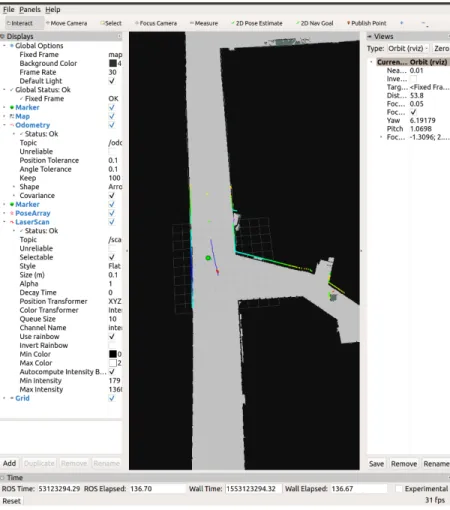

Visualization

I used rviz to visualize the information from the RACECAR and to monitor the status of the planner and the vision system. Rviz is used to make sure that the car is localizing, planning, and recognizing cones in the correct manner (Fig. 3-7). Rviz is run on a laptop that is connected to the same network as the RACECAR, with ROS_MASTER_URI set to the IP address of the RACECAR. Rviz is important for visualizing the RACECAR state and is a powerful debugging tool.

Figure 3-5: Waypoints picked around the basement connected with a straight line path.

Figure 3-6: Cone path construction process.

3.2 Commonsense monitor

One way to check for anomalies in the scene is to understand what the scene parser believes to be in the scene and how those elements interact with each other. Translating the labeled scene into a human-readable description can provide a structured way to parse the scene elements through natural language processing. The description of the scene (with objects as detected by perception) can be broken down into subject, object, and verb [13]. The system then constructs a Trans-frame from the description, which represents the concepts in a language-free way. Trans-frames represent concepts as complex combinations of language-free conceptual primitives for an inner language ontology [13]. The commonsense knowledge base is applied to the frame to evaluate the “reasonableness” of the frame (Fig. 3-8).

Figure 3-7: Rviz shows that the lidar points match the map (the rainbow points), the location of the green cone seen, and the path planned around the green cone. The set of red arrows shows the estimation of the car’s pose.

Figure 3-8: Symbolic breakdown of sentences of scene descriptions and their respective reasonableness [13]

This frame parsing and commonsense knowledge base can be used as a check on the reasonableness of objects in the vehicle’s range of sight. The monitor uses this approach and checks if the perceived action in the scene is plausible and fits within the commonsense framework.

Here is how the approach can determine that the observation “a mailbox crossed the street” is unreasonable. First, the MOVE primitive requires that the actor role be filled by an “animate” object that can make other objects move or change location. Here, the actor role is filled by “mailbox.” To determine whether it satisfies or violates the constraint on the actor role, the system attempts to anchor the mailbox concept to a concept in ConceptNet to determine whether it is an animate object. In this case there is a path through ConceptNet’s graph between “mailbox” and “object,” the anchor for inanimate objects, and no path between “mailbox” and “animal,” the anchor for animate objects. So the system determines that “mailbox” is inanimate and flags a violation of the actor constraints for the MOVE primitive act [13].
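The anchoring check in the mailbox example can be sketched as a path search over a concept graph. The graph below is a hand-written toy and not ConceptNet data, and returning `None` for concepts that reach neither anchor is my assumption. The names `has_path` and `check_move` are illustrative.

```python
from collections import deque

# Toy stand-in for a commonsense graph. The edges are hand-written for
# illustration only and are NOT taken from ConceptNet.
TOY_GRAPH = {
    "mailbox": ["box", "container"],
    "box": ["object"],
    "container": ["object"],
    "pedestrian": ["person"],
    "person": ["animal"],
    "dog": ["animal"],
}
ANIMATE_ANCHOR = "animal"
INANIMATE_ANCHOR = "object"


def has_path(graph, start, goal):
    """Breadth-first search for any directed path from start to goal."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return False


def check_move(actor, graph=TOY_GRAPH):
    """Judge '<actor> moved': returns (True/False/None, explanation)."""
    if has_path(graph, actor, ANIMATE_ANCHOR):
        return True, f"{actor} is animate, so it can fill the MOVE actor role"
    if has_path(graph, actor, INANIMATE_ANCHOR):
        return False, f"{actor} is inanimate, violating the MOVE actor constraint"
    # Assumption: a concept that reaches neither anchor is left unjudged.
    return None, f"{actor} could not be anchored, so no judgment is made"


mailbox_ok, mailbox_why = check_move("mailbox")
pedestrian_ok, pedestrian_why = check_move("pedestrian")
```

Returning the explanation alongside the verdict mirrors the monitor’s goal of producing a human-readable reason for each flagged observation.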

The path planning stage is an alternative place in the autonomous driving pipeline where the common sense and validity of a decision can be checked in the context of the scene. One application checks the commanded speed of the car against a knowledge base of speed limit data to give the car context-aware control of its speed [24]. If all the cars surrounding the current vehicle are traveling below the speed limit due to an emergency vehicle, or if the road conditions are particularly poor, the car still believes that the posted maximum speed limit applies when in reality the working limit is lower. Our system can observe the surrounding situation, see that the average speed of other vehicles is significantly below the speed limit, mark the phenomenon as an anomaly, and then update the rules file to lower the speed limit when the same situation arises again.

Figure 3-9: Computer architecture for autonomous driving [18]

3.3 Driving rules

There are rules for passing each cone, just as there are rules for encountering each color of traffic light when driving on real roads. The rules for passing each cone are as follows:

1. green cone: pass on the right

2. red cone: pass on the left

3. yellow cone: pass on the left

The driving rules are built into the controller that processes each detected cone. The driving rule is currently represented with the boolean flag “right.” Since the car passes a green cone on the right side, that boolean is set to true when a green cone is detected. The controller subscribes to a cone channel that carries cone information and then requests the path planner to plan around the cone accordingly. The car also has a set of rules regarding traffic light order, which is stored in a Python dictionary. Each cone color maps to a list of allowed preceding colors. After the car passes a cone, it logs the cone location, the car location, and the time of the observation. The car also checks the color against the last color it saw to determine whether the transition was valid. If the transition was not valid, the car logs the error, why it is an error, and what color it expected to see given the previous color.
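A minimal sketch of the transition check follows. The dictionary layout matches the description (each color maps to its allowed preceding colors), but the class name, log format, and error wording are hypothetical. The sketch omits the cone and car locations and timestamps that the real log records, and it assumes the controller has already filtered out repeated sightings of the same cone.

```python
# Each color maps to the colors allowed to precede it at one traffic light.
ALLOWED_PREVIOUS = {
    "green": ["red"],
    "red": ["yellow"],
    "yellow": ["green"],
}


class TransitionMonitor:
    """Checks each observed color against the previous one and logs errors."""

    def __init__(self, allowed_previous):
        self.allowed_previous = allowed_previous
        self.last_color = None  # local history, reset after each traffic light
        self.log = []           # aggregated over the whole test

    def observe(self, color):
        """Record one cone; return True if the transition is valid."""
        entry = {"color": color, "previous": self.last_color, "error": None}
        if self.last_color is not None:
            if self.last_color not in self.allowed_previous.get(color, []):
                # Colors that the rules would have accepted after last_color.
                expected = [c for c, prev in self.allowed_previous.items()
                            if self.last_color in prev]
                entry["error"] = (
                    f"{color} after {self.last_color}: "
                    f"expected {' or '.join(expected)} after {self.last_color}"
                )
        self.log.append(entry)
        self.last_color = color
        return entry["error"] is None

    def end_of_light(self):
        """Reset the local history; the log is kept."""
        self.last_color = None


monitor = TransitionMonitor(ALLOWED_PREVIOUS)
first_light = [monitor.observe(c) for c in ["yellow", "red", "green"]]
monitor.end_of_light()
second_light = [monitor.observe(c) for c in ["yellow", "green"]]
```

Keying the dictionary by the current color makes the check a single list lookup, and the first cone of each light is accepted unconditionally because it has no predecessor.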

After driving past a set of cones representing a single traffic light, the autonomous driving mode is paused to signal to the system that it is ready for the next set of traffic lights. After each set of traffic lights, the local history of colors seen is reset. The log is not reset and all of the cones/traffic lights seen are aggregated until the end of the test.

3.4 Updating rules

The rule update system is a working system developed by Gilpin et al. [11]. The system is designed to incorporate new rules into the adaptable monitoring system [12]. First, the rule update system reads in an error text file. It then processes the errors to determine whether any of them occur multiple times. If an error occurs more than once, the system uses the error information to create a new rule. Rule updating can also occur using sentiment analysis of explanations of the anomalous sighting. Sentiment analysis and the addition of explanations to each anomaly sighting are currently being explored as an extension of this thesis.

A new rule is created by parsing the error log text. For example, when a green cone appears after a yellow cone several times, the system creates a new rule that permits a green cone to appear after a yellow cone. This transition no longer triggers an error in the log text after the rule is updated.

The rule updating system looks for specific keywords in the error description, for example, “before,” “after,” “because,” etc. If it finds such a keyword, it processes the text before and after that word for the rule binding. This creates a symbolic triple composed of the text before the keyword, the keyword itself, and the text after the keyword. This triple is used in the new rule text, which is output in the AIR Web Policy Rule Language [2]. The end result is a new AIR rule, written in N3, that is appended to the existing rule file. The new AIR rule is then read by a human and manually added to the existing Python dictionary as a new rule. The RACECAR then checks its sightings against this set of updated rules. In the future, the rule updating process can be automated. This would happen in two parts. First, if a new rule is needed, it would be compared to the rules in the existing rules file. If a similar rule is already present, the new rule would not need to be added. Otherwise, the new rule would be automatically appended to the AIR rule file and applied in subsequent iterations of the system.
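The keyword-and-triple step can be sketched as below. This is not the rule updater of Gilpin et al.: the error description format ("green after yellow") is hypothetical, the sketch writes directly into the Python dictionary rather than emitting an AIR rule in N3, and only the "after" keyword is turned into a transition rule. The names `parse_triple` and `update_rules` are illustrative.

```python
from collections import Counter

KEYWORDS = ("before", "after", "because")


def parse_triple(description):
    """Split a description into (text before, keyword, text after)."""
    words = description.split()
    for i, word in enumerate(words):
        if word in KEYWORDS:
            return " ".join(words[:i]), word, " ".join(words[i + 1:])
    return None  # no keyword found


def update_rules(allowed_previous, error_descriptions, min_count=2):
    """Add a rule for every transition error seen at least min_count times.

    Returns a new rule dictionary and the list of triples that became rules;
    the input dictionary is left unchanged.
    """
    triples = [t for t in map(parse_triple, error_descriptions) if t is not None]
    counts = Counter(triples)
    updated = {color: list(prev) for color, prev in allowed_previous.items()}
    new_rules = []
    for (subject, keyword, obj), count in counts.items():
        if count >= min_count and keyword == "after":
            if obj not in updated.setdefault(subject, []):
                updated[subject].append(obj)
                new_rules.append((subject, keyword, obj))
    return updated, new_rules


rules = {"green": ["red"], "red": ["yellow"], "yellow": ["green"]}
errors = ["green after yellow", "green after yellow", "red after green"]
updated_rules, added = update_rules(rules, errors)
```

Checking whether the predecessor is already listed before appending corresponds to the proposed automation step of comparing a candidate rule against the existing rules file.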

Chapter 4

Evaluation and Results

I evaluated the reasonableness monitor and the rule updating system with various traffic light scenarios and this chapter provides an overview of my evaluation. The system was evaluated by navigating the RACECAR autonomously around up to 20 small plastic cones that represent traffic light patterns. Each cone represents a specific traffic light color a car would see and each group of cones represents a single set of traffic light transitions a car would encounter at an intersection. Anomalies were introduced to the traffic light patterns, and I evaluated how the RACECAR detected the anomalies and the rules update process that followed.

There are four possible scenarios for traffic light transitions, listed here with their explanations:

1. green: car goes through green light

2. red → green: car stops at red light, leaves when light turns green

3. yellow → red → green: car approaches light while it is yellow, stops when it turns red, leaves when it turns green

4. green → yellow → red → green: car approaches light when it turns from green to yellow, stops when it turns red, leaves when it turns green

These scenarios are built from three valid transitions between consecutive colors:

1. red → green

2. yellow → red

3. green → yellow

I limited each traffic light to have one erroneous transition. There are three possible erroneous transitions since for each color, there is only one color that can come after it that will make the transition erroneous. The other two colors are either correct or the same as the initial color. The three erroneous transitions are:

1. red → yellow

2. yellow → green

3. green → red

Using these erroneous transitions, these are the possible traffic lights with a single erroneous transition. In each case, the erroneous transition is the first one in the sequence.

1. red → yellow → red → green

2. yellow → green

3. green → red → green
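The valid and erroneous transitions listed in this chapter can be checked mechanically. The sketch below is illustrative only: the thesis states that the rules live in a Python dictionary but does not show its layout, so the shape of `VALID_NEXT` and the helper names are assumptions. The error message format follows the example logs in Appendix A.

```python
COLORS = ("red", "yellow", "green")

# Valid successor for each color, taken from the three valid transitions.
VALID_NEXT = {"red": "green", "yellow": "red", "green": "yellow"}


def erroneous_transitions():
    """For each color, find the one successor that is neither valid nor a repeat."""
    errors = []
    for prev in COLORS:
        for nxt in COLORS:
            if nxt != prev and nxt != VALID_NEXT[prev]:
                errors.append((prev, nxt))
    return errors


def find_errors(light):
    """Return a log-style message for each bad transition in one light's cone list.

    Seeing the same color twice in a row is treated as valid, as in the thesis.
    """
    messages = []
    for prev, nxt in zip(light, light[1:]):
        if nxt != prev and nxt != VALID_NEXT[prev]:
            messages.append(f"ERROR {nxt} appeared after {prev}")
    return messages


print(erroneous_transitions())
print(find_errors(["yellow", "green"]))
```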

In each test run, the car navigated five traffic lights, or five sets of cones.

I decided to run three different sets of tests. The first set involved no errors, the second set involved random errors, and the third set involved repeated errors. All three sets of tests are then evaluated with the rule updating system [11]. To avoid bias in choosing traffic light patterns and their placement, each pattern was given a number, and a random number generator was used to generate the sequence.

For the first set of tests with no transition errors, the traffic light pattern was a sequence of five randomly chosen subsequences. For the second set of tests with random errors, I randomly chose the number of errors, where in the sequence of the five lights the errors would appear, and which error would appear in each place. I randomly chose valid light sequences to fill the places in the sequence without an error. For the last set of tests with repeated errors, I randomly chose a single type of error and then randomly chose the number of times it would appear (between three and five) as well as where in the sequence the error would appear. Again, randomly chosen valid light sequences filled the places in the sequence without an error.

I generated five instances of each of the three types of sequence and had the RACECAR drive through each resulting course three times, for a total of 45 test runs (3 test types × 5 sequences × 3 runs).
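The randomized construction of the three test sets can be sketched as below. This is an illustration rather than the generator actually used: the function names are hypothetical, and the range for the number of random errors (one to five) is an assumption, since the text only says the count was chosen at random.

```python
import random

VALID_LIGHTS = [
    ["green"],
    ["red", "green"],
    ["yellow", "red", "green"],
    ["green", "yellow", "red", "green"],
]
ERROR_LIGHTS = [
    ["red", "yellow", "red", "green"],
    ["yellow", "green"],
    ["green", "red", "green"],
]
LIGHTS_PER_COURSE = 5


def build_course(rng, error_slots, pick_error):
    """Put an erroneous light in each error slot and a random valid light elsewhere."""
    return [
        pick_error() if i in error_slots else rng.choice(VALID_LIGHTS)
        for i in range(LIGHTS_PER_COURSE)
    ]


def no_error_course(rng):
    return build_course(rng, set(), lambda: None)


def random_error_course(rng):
    n_errors = rng.randint(1, LIGHTS_PER_COURSE)  # assumed range
    slots = set(rng.sample(range(LIGHTS_PER_COURSE), n_errors))
    return build_course(rng, slots, lambda: rng.choice(ERROR_LIGHTS))


def repeated_error_course(rng):
    error = rng.choice(ERROR_LIGHTS)  # a single error type, repeated
    n_errors = rng.randint(3, 5)      # between three and five, as in the text
    slots = set(rng.sample(range(LIGHTS_PER_COURSE), n_errors))
    return build_course(rng, slots, lambda: error)


# 3 test types x 5 sequences per type x 3 drives per sequence
TOTAL_RUNS = 3 * 5 * 3
```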

4.1

Testing setup

The cones were set up in the basement about four feet apart from each other to test the RACECAR’s ability to navigate through cones and detect errors in the cone transitions (Fig. 4-1).

The car was required to navigate around all of the traffic cones until the end of the course. The log file was saved and the process repeated.

A total of 15 unique sequences were tested (Tables 4.1, 4.2, and 4.3).

The RACECAR is connected to a game pad, and the R1 button gives control of the VESC motor controller to the autonomous system. When the button is not pressed, the joystick sends a command of 0 speed to the VESC. Joystick input always has priority over the autonomous navigation VESC commands as a safety feature. VESC executes commands from the navigation topic only when R1 is pressed. This is to ensure the safety of the car as the testing area is an active hallway with foot traffic. The car is in autonomous control when it navigates around cones. When it completes a set of cones representing a traffic light, the R1 button is released to signal to the system that it has completed a single traffic light and to reset its queue of light sightings for the next traffic light.
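The R1 behavior described above amounts to a dead-man switch plus a reset signal. The sketch below models only that logic in plain Python, without ROS or the VESC driver; the class name `DriveArbiter` and its fields are hypothetical and do not come from the RACECAR code base.

```python
from dataclasses import dataclass, field


@dataclass
class DriveArbiter:
    """Toy model of the R1 button: gate the speed command, reset on release."""

    sightings: list = field(default_factory=list)  # colors seen at the current light
    was_pressed: bool = False

    def step(self, r1_pressed, nav_speed):
        """Return the speed to send to the motor controller for this tick."""
        if self.was_pressed and not r1_pressed:
            # Releasing R1 marks the end of one traffic light,
            # so the queue of sightings is cleared for the next one.
            self.sightings.clear()
        self.was_pressed = r1_pressed
        # Autonomous commands pass through only while R1 is held;
        # otherwise a speed of 0 is commanded.
        return nav_speed if r1_pressed else 0.0


arbiter = DriveArbiter()
arbiter.step(True, 1.5)        # autonomous control
arbiter.sightings.append("green")
arbiter.step(False, 1.5)       # button released: car stops, queue resets
```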

sequence # light1 light2 light3 light4 light5 1 green green red green yellow

green yellow red red green green

2 green yellow yellow red red red red green green green green

3 yellow red green green yellow red green green red

green green

4 green yellow yellow green red red red yellow green green green red

green

5 green red red green yellow yellow green green red

red green green green

sequence # light1 light2 light3 light4 light5 6 green green green yellow green

yellow green red

green

7 green red yellow yellow red green red green yellow

green red

8 yellow green green red green green red red green

green green

9 green yellow red green green red green green red yellow green green red

green 10 green yellow yellow green yellow red red green green green

Table 4.2: The five chosen sequences of traffic lights with some random transition errors.

sequence # light1 light2 light3 light4 light5 11 yellow yellow yellow yellow yellow green green green green green

12 green green green green green red red red red

green green green green

13 green green green green green red red red red red green green green green green

14 yellow yellow yellow green yellow green green green yellow green

red green

15 green red red yellow red yellow yellow red yellow

red red green red

Figure 4-1: Cone setup in the basement of MIT’s Stata building

4.2

Results

In this section, I discuss the results I obtained by autonomously navigating each of the 15 unique traffic light sequences three times. The rules updater was used on each of the error logs to find repeated anomalies and to create new rules for valid traffic light transitions.

4.2.1

Anomaly detection

The RACECAR was able to navigate through the cones successfully and detect errors as they occurred. Each cone detected was logged after the car had successfully passed the cone. At times the RACECAR erroneously detected a cone twice; however, that error did not significantly affect the experiment, as it is valid to see the same traffic light color more than once. The error does not induce an unwanted transition error. Example log files from each of the three differing groups of runs (no anomaly, some anomalies, repeated anomalies) can be found in Appendix A.

4.2.2

Rules Update

The rule updating system reads in the error log (an example is listed in Appendix A). In the example run, the system found a repeated transition error (green after yellow) and created a new rule stating that green can be expected to come after yellow. The output of the updating system, which is a rule, is illustrated below. The rule updating system was able to successfully detect the recurring light transition errors in each log and update the rule base. I evaluated one error log from each of the 15 unique cone sequences using the rule updating system. The rule updating system generated new rules for each log that had repeated transition anomalies.

>> python3 rule-learn.py repeat1
The following events are repeats and should be new rules
ERROR green appeared after yellow
Found the explanation ERROR green appeared after yellow
Found triple
['green', 'after', 'yellow']

:added-rule-1 a air:Belief-rule;
    rdfs:comment "Trying this out.";
    air:if {
        foo:green ontology:after foo:yellow. };
    air:then [
        air:description ("ERROR green appeared after yellow");
        air:assert [ air:statement { foo:cone air:compliant-with :learned_policy. } ] ];
    air:else [
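The first step of the rule updater, finding error messages that repeat within a log, can be sketched as follows. This is not the `rule-learn.py` script itself: the function name `repeated_errors` and the threshold of three occurrences are assumptions, the latter chosen because the repeated-error tests contain between three and five copies of the same error.

```python
from collections import Counter


def repeated_errors(log_text, min_repeats=3):
    """Return the ERROR lines that occur at least min_repeats times in a log."""
    counts = Counter(
        line.strip()
        for line in log_text.splitlines()
        if line.strip().startswith("ERROR")
    )
    return [message for message, n in counts.items() if n >= min_repeats]


example_log = """
saw yellow
saw green
ERROR green appeared after yellow
saw yellow
saw green
ERROR green appeared after yellow
saw yellow
saw green
ERROR green appeared after yellow
"""
print(repeated_errors(example_log))
```

A log with a single occurrence of an error, such as the random-error example in Appendix A, yields no candidate rules under this threshold.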

Chapter 5

Conclusion

In this chapter, I describe the improvements, both in functionality and in evaluation, that we hope to add to our project.

5.1

Future Work

The work done for this thesis was focused on building and using the RACECAR platform to evaluate the performance of anomaly detection in autonomous vehicles based on symbolic rules and reasonableness. This was an initial study, and the work can be expanded to include a richer set of data used in decision making, such as vehicle position, cone position, vehicle speed, and information from other vehicles that may be around the autonomous vehicle. A far more expansive set of rules can also be applied to the car’s behavior and observations. The set of rules should originate from a driving handbook, which details the literal rules set for the vehicles on the road. Additionally, given that autonomous vehicles interact with other vehicles, it would be interesting to apply the anomaly detection and adaptable monitoring system to an autonomous vehicle in the presence of other, possibly non-autonomous, vehicles.

Currently, when a rule is violated, the error is logged along with the violated rule. The rule learning system performs the rule updating process by simply checking for repeating anomalies and automatically adding the anomaly to the acceptable rule set. This process does not use contextual information from the vehicle’s state and surroundings when deciding whether to add the rule to the rule set. Adding context to each anomaly helps explain why the rule was broken. For example, one rule of the road states that vehicles shall not drive through red lights. However, if a car observes several times that an emergency vehicle drives through a red light, it should not add to its own rule set that it may drive through a red light. The context of the rule-breaking situation, in this case that an emergency vehicle and not some other vehicle drove through the red light, is an important factor in deciding whether to augment the given rule set based on observations.

A natural next step is to add explanations to each observed anomaly so that the rule updating system can base its updates on more than just repeated sightings of an anomaly within a short time frame. Context contributes to explanations. For example, the traffic lights could appear out of order because the light itself is physically broken, or because the car misinterpreted one color as another, among other possible reasons. Just because the car interprets the color incorrectly does not mean it should automatically accept that the traffic lights go in a different order. Taking explanations into account when updating rules is important because there are certain circumstances and contexts that cannot simply be learned by querying data. Explanations are important for learning from few examples and learning in new contexts. For example, some situations with multiple plausible behaviors will not be well represented in the training data, like flashing headlights or pothole maneuvers, because they are rare events or they change in different contexts. But the machine still has to learn to abstract from these examples in order to explain the plausibility of its actions (to itself). This is similar to the way that humans learn.
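One way to picture an update step that takes explanations into account is sketched below. It is purely hypothetical future-work code: the `Anomaly` record, the `NON_GENERALIZING` set, and the repeat threshold are all invented for illustration and are not part of the system evaluated in this thesis.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Anomaly:
    description: str  # e.g. "vehicle drove through red light"
    explanation: str  # e.g. "emergency vehicle"; empty string if unknown


# Explanations that account for an anomaly without implying the rule is wrong.
# The entries mirror the examples in the text; the set itself is an assumption.
NON_GENERALIZING = {"emergency vehicle", "broken light", "color misread"}


def rules_to_learn(anomalies, min_repeats=3):
    """Keep repeated anomalies unless their explanation says not to generalize."""
    counts = Counter(
        a.description for a in anomalies if a.explanation not in NON_GENERALIZING
    )
    return [description for description, n in counts.items() if n >= min_repeats]


observed = [Anomaly("vehicle drove through red light", "emergency vehicle")] * 3
print(rules_to_learn(observed))
```

Here an anomaly with no explanation is still learned after enough repeats, which matches the current repeat-only behavior; a stricter policy could require a positive explanation before learning anything.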

Similar work by Murdoch et al. [19] looks at explaining individual predictions made by an LSTM without any modifications to the underlying model. This is similar to the reasonableness monitoring work [12], except that the present work also aims to learn from those explanations to make better decisions moving forward. This is similar to one-shot learning [17], which aims to learn information from just a few examples.

detection and other contexts. Sarno et al. created financial fraud detection methods that aim to create a list of association rules to better detect malicious behaviors [21]. Rule learning with monitoring, the ongoing work in this project, aims to learn from the explanations of a monitoring system to learn new rules and behaviors to aid in learning over future iterations.

The process we can apply to rule learning in the future is the following. First, we examine and process the explanation for its sentiment. Sentiment analysis is a well-studied area that aims to predict the polarity of a given text. For our use case of a self-driving car, this is important for deciding whether the observed behavior is explained as anomalous or not. For example, when we learn to drive and perceive anomalous driving behavior, we explain it as such, saying for instance that the driver must be in a hurry, or that the vehicle is an emergency vehicle and therefore follows a different set of rules. If we cannot come up with an explanation, the situation must be anomalous. Other behaviors that should be covered by new rules will have a neutral or positive sentiment. Those explanations should be parsed and automatically fed back into the system as new rules. These new rules could be strict driving rules or best practices. For example, a vehicle may perceive several neighboring cars turning right through a red light. After seeing the repeated action, the vehicle should reason that this is an accepted behavior because multiple cars have performed the action, which yields a neutral-sentiment explanation. In other instances, a vehicle may observe several cars driving extremely erratically, accompanied by honking and yelling. This negative-sentiment explanation, that vehicles are crossing lanes aggressively, should not be learned as a new rule.

After the sentiment of the explanation is classified, the explanation is parsed into a rule format. The goal is to take a human readable explanation in plain text, and parse that into an "if this then that" format rule. Usually, this relies on looking for symbolic causal terms in the explanation, like "because" or time-varying symbolic terms like "before" and "after."
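The two-stage process described here, a sentiment gate followed by parsing into an "if this then that" rule, might look like the following sketch. It is a hypothetical illustration of proposed future work: the sentiment label is taken as an input rather than computed, and the function name and the mapping from each keyword to a condition and consequence are assumptions.

```python
def explanation_to_rule(explanation, sentiment):
    """Turn a plain-text explanation into a (condition, consequence) pair.

    Negative-sentiment explanations are never turned into rules.
    Returns None when no rule should be learned.
    """
    if sentiment == "negative":
        return None
    text = explanation.strip().rstrip(".")
    for keyword in ("because", "before", "after"):
        left, found, right = text.partition(f" {keyword} ")
        if not found:
            continue
        if keyword == "before":
            # "X before Y": X comes first, so X is the condition.
            return (left, right)
        # "X because Y" and "X after Y": Y comes first or causes X.
        return (right, left)
    return None


rule = explanation_to_rule(
    "cars turn right on red because the way is clear", "neutral"
)
print(rule)
```

Representing the rule as a pair keeps the sketch independent of any particular rule language; emitting AIR or another format would be a separate step.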

Another extension of the project is to implement the work of Gilpin et al. [13] on reasonableness monitoring using a scene parser and conceptual primitive decomposition. In place of the traffic light pattern rules, a set of rules derived from a driving handbook can offer a richer set of rules against which one can check reasonableness. Using the full set of rules also requires building a richer environment for the RACECAR to emulate a real-world driving situation.

5.2

Summary

Current tools developed for anomaly detection use rule based systems that are generated using training data and humans-in-the-loop. There still remains the problem of edge case training data and the necessity of human intervention which prevents a vehicle from being truly autonomous. My work focuses on addressing these issues in anomaly detection by using a commonsense knowledge base, a predefined set of rules, and a means of updating the base set of rules as anomalies are found and classified. The hardware platform allows for the future development of the project and generates realistic data for evaluation.

In this thesis, I built and implemented a version of the open source MIT RACECAR project as a hardware platform to test the anomaly detection and rules updating framework. The experiments involved navigating the RACECAR around sets of cones that represent traffic light color orders that mimicked real life driving scenarios. The car contained driving rules on how to navigate each colored cone and also rules on valid traffic light transitions and checked each detected cone against the traffic light order rules. Anomalies were introduced to the traffic light color orders so some lights did not appear in the normal green-yellow-red order. The car navigated the course with no anomalies, some anomalies, and repeating anomalies. The car logged all cones that it saw and flagged unexpected traffic light color transitions. The rules updating framework was applied to the logs and was able to find the patterned irregularities.

This traffic light experiment is a simple example of how anomaly detection coupled with a rule updating framework could work together in real life to inform the car of unreasonable and strange sightings. Machine learning relies on training data and previously seen input. However, not all corner cases can be accounted for, so it is impossible to include all scenarios that an autonomous system might encounter. As autonomous machines become more complex, there are more ways that they can fail. Updating an existing set of rules from anomalies and new situations is a natural way to make these systems more robust.

Appendix A

Example Error Logs

Listing A.1: Log file for traffic lights without transition errors: sequence 1 (Table 4.1)

....
saw green
cone location [-0.70446253 0.23700085]
car location (-0.12512813510112328, 0.4072552633235104, 1.8437552010002323)
time: 1553038041.35

....
saw green
cone location [-6.38526051 -1.70969481]
car location (-6.4912590232822875, -1.0678897144438855, -2.9804117470938833)
time: 1553038064.36

....
saw red
cone location [-11.50563859 -1.73875663]
car location (-11.456451314827829, -2.315995950535272, -3.0482012567101666)
time: 1553038086.38

saw green
cone location [-14.15248748 0.13420653]
car location (-13.351984151667699, 0.18374850272711785, 1.6066485891543618)
time: 1553038095.94

....
saw green
cone location [-19.30022175 -1.77057121]
car location (-19.48897722935647, -1.2483664208150635, -2.8652253641792074)
time: 1553038118.62

saw yellow
cone location [-24.67044653 -1.57417882]
car location (-24.68702891795409, -2.1359811617575937, -3.103686420670335)
time: 1553038131.1

saw red
cone location [-30.58494499 -0.7577518]
car location (-30.658686803678684, -1.3353637145970723, 3.0455743285365346)
time: 1553038144.24

saw green
cone location [-34.34016167 2.62120769]
car location (-34.18001420292492, 2.794288198890393, 2.3343337379010456)
time: 1553038160.22

....
saw yellow
cone location [-33.21207141 7.47530798]
car location (-33.840991480649876, 7.8568159855428865, 1.0440188153549848)
time: 1553038179.61

saw red
cone location [-34.17586175 11.28563998]
car location (-34.117080750338964, 11.417297031161104, 2.4424683651814014)
time: 1553038186.22

saw green
cone location [-31.83868132 15.16909006]
car location (-32.15516789144839, 14.519568666374552, -0.4756447847178715)
time: 1553038199.0

....

Listing A.2: Log file for traffic lights with random transition errors: sequence 6 (Table 4.2)

....
saw green
cone location [-1.20315832 -0.57228048]
car location (-1.2807292373046169, -0.008971256600969731, -3.0264783894113085)
time: 1553037644.94

saw yellow
cone location [-2.49782205 -1.92630994]
car location (-1.9638795288997635, -1.5684364132220296, -0.93282282599856)
time: 1553037651.4

saw red
cone location [-7.96080256 -1.89994298]
car location (-8.02980957114717, -2.4681837086565985, 3.0382115362831588)
time: 1553037684.28

saw green
cone location [-9.44727791 0.39579729]
car location (-8.621296727143589, 0.2301645655152624, 1.3714112915027268)
time: 1553037693.09

....
saw green
cone location [-14.47189755 -1.59112153]
car location (-14.658315373707493, -0.9990677773305542, -2.8413766870470294)
time: 1553037714.65

....
saw green
cone location [-19.43948253 -0.20877225]
car location (-19.510456483194357, 0.3892001437817271, -3.0336650258367035)
time: 1553037733.79

....
saw yellow
cone location [-21.91674007 -1.76080718]
car location (-21.683795071972185, -2.244401572444239, -2.677808801675548)
time: 1553037747.93

saw green
cone location [-24.16844617 0.46777857]
car location (-25.223320369495866, 0.3018080517785095, 1.742221673684914)
time: 1553037760.72

ERROR green appeared after yellow

....
saw green
cone location [-32.41609897 0.96640755]
car location (-32.35564716060205, 0.9661621132886078, 1.171794563747732)
time: 1553037794.31

....

Listing A.3: Log file for traffic lights with repeated transition errors: sequence 11 (Table 4.3)

....
saw yellow
cone location [-1.31887591 -1.64536433]
car location (-1.2090173028168014, -2.2019288544649043, -2.9311938578398773)
time: 1553045002.14

saw green
cone location [-4.28213169 0.39424341]
car location (-3.40236644288562, 0.4600299266547557, 1.6410429459543834)
time: 1553045012.41

ERROR green appeared after yellow

....
saw yellow
cone location [-8.92437828 -1.66078512]
car location (-8.401970577213934, -1.534493414519475, -1.3154733974639097)
time: 1553045033.04

saw green
cone location [-12.09853355 0.11984987]
car location (-11.23656919129367, 0.2965209723608454, 1.7595208200356645)
time: 1553045046.33

ERROR green appeared after yellow

....
saw yellow
cone location [-14.0137413 -1.86614991]
car location (-15.11967917801224, -1.9617358128938507, -1.4989679564934923)
time: 1553045068.65

saw green
cone location [-18.89876099 -0.34748259]
car location (-18.960254034884645, -0.3407134188498406, 1.7032764889091314)
time: 1553045088.02

ERROR green appeared after yellow

....
saw yellow
cone location [-22.25739228 -1.7517678]
car location (-22.13849379657888, -2.281988283444419, -2.8843460102308853)
time: 1553045104.32

saw green
cone location [-27.1093992 0.41790404]
car location (-28.400852597730562, 0.43198412515118906, 1.6092593228560392)
time: 1553045117.76

ERROR green appeared after yellow

....
saw yellow
cone location [-33.81228796 5.44165228]
car location (-34.42702165092025, 5.383335779546803, 1.6836666322138085)
time: 1553045145.7

saw green
cone location [-33.03422111 10.77680577]
car location (-32.50144893436689, 10.741673750778823, 1.498008306197782)
time: 1553045158.72

ERROR green appeared after yellow

....

Bibliography

[1] AI Mistakes Bus-Side Ad for Famous CEO, Charges Her with Jaywalking. https://www.caixinglobal.com/2018-11-22/ai-mistakes-bus-side-ad-for-famous-ceo-charges-her-with-jaywalkingdo-101350772.html. life news.

[2] AIR Web Rule Language. http://dig.csail.mit.edu/2009/AIR/.

[3] Experimental Security Research of Tesla Autopilot. https://keenlab.tencent.com/en/whitepapers/Experimental_Security_Research_of_Tesla_Autopilot.pdf.

[4] mit-racecar. https://github.com/mit-racecar. Accessed Mar 13, 2018. Github Repository.

[5] PRELIMINARY REPORT HIGHWAY HWY18MH010. https://www.ntsb.gov/investigations/AccidentReports/Reports/HWY18MH010-prelim.pdf.

[6] The Grand Challenge. https://www.darpa.mil/about-us/timeline/-grand-challenge-for-autonomous-vehicles. DARPA.

[7] Uber’s Self-Driving Car Saw the Woman It Killed, Report Says. https://www.wired.com/story/uber-self-driving-crash-arizona-ntsb-report/.

[8] VESC Project. https://vesc-project.com/.

[9] Waymo unveils self-driving taxi service in Arizona for paying customers. https://www.reuters.com/article/us-waymo-selfdriving-focus/waymo-unveils-self-driving-taxi-service-in-arizona-for-paying-customers-idUSKBN1O41M2. arizona.

[10] Ivan Evtimov, Kevin Eykholt, Earlence Fernandes, Tadayoshi Kohno, Bo Li, Atul Prakash, Amir Rahmati, and Dawn Song. Robust physical-world attacks on machine learning models. CoRR, abs/1707.08945, 2017.

[11] Leilani H. Gilpin. Learning symbolic commonsense rules through explanation. 2019.

[12] Leilani H. Gilpin and Lalana Kagal. An adaptable self-monitoring framework for complex machines. page 3, 2019.

[13] Leilani Henrina Gilpin, Jamie C. Macbeth, and Evelyn Florentine. Monitoring scene understanders with conceptual primitive decomposition and commonsense knowledge. 2018.

[14] Ian Goodfellow, Jonathon Shlens, and Christian Szegedy. Explaining and harnessing adversarial examples. In International Conference on Learning Representations, 2015.

[15] Brody Huval, Tao Wang, Sameep Tandon, Jeff Kiske, Will Song, Joel Pazhayampallil, Mykhaylo Andriluka, Pranav Rajpurkar, Toki Migimatsu, Royce Cheng-Yue, Fernando A. Mujica, Adam Coates, and Andrew Y. Ng. An empirical evaluation of deep learning on highway driving. CoRR, abs/1504.01716, 2015.

[16] A. Inzartsev, A. Pavin, A. Kleschev, V. Gribova, and G. Eliseenko. Application of artificial intelligence techniques for fault diagnostics of autonomous underwater vehicles. In OCEANS 2016 MTS/IEEE Monterey, pages 1–6, Sep. 2016.

[17] Fei-Fei Li, Rob Fergus, and Pietro Perona. One-shot learning of object categories. IEEE transactions on pattern analysis and machine intelligence, 28(4):594–611, 2006.

[18] Shaoshan Liu, Jie Tang, Zhe Zhang, and Jean-Luc Gaudiot. CAAD: computer architecture for autonomous driving. CoRR, abs/1702.01894, 2017.

[19] W. James Murdoch, Peter J. Liu, and Bin Yu. Beyond word importance: Contextual decomposition to extract interactions from LSTMs. CoRR, abs/1801.05453, 2018.

[20] Ramya Ramakrishnan, Ece Kamar, Besmira Nushi, Debadeepta Dey, Julie Shah, and Eric Horvitz. Overcoming blind spots in the real world: Leveraging complementary abilities for joint execution. 2019.

[21] Riyanarto Sarno, Rahadian Dustrial Dewandono, Tohari Ahmad, Mohammad Farid Naufal, and Fernandes Sinaga. Hybrid association rule learning and process mining for fraud detection. IAENG International Journal of Computer Science, 42(2), 2015.

[22] William Saunders, Girish Sastry, Andreas Stuhlmueller, and Owain Evans. Trial without error: Towards safe reinforcement learning via human intervention. In Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems, pages 2067–2069. International Foundation for Autonomous Agents and Multiagent Systems, 2018.

[23] Shai Shalev-Shwartz, Shaked Shammah, and Amnon Shashua. Safe, multi-agent, reinforcement learning for autonomous driving. arXiv preprint arXiv:1610.03295, 2016.

[24] Lihua Zhao, Ryutaro Ichise, Seiichi Mita, and Yutaka Sasaki. An ontology-based intelligent speed adaptation system for autonomous cars. 11 2014.

Figure 3-8: Symbolic breakdown of sentences of scene descriptions and their respective reasonableness [13]

Figure 3-9: Computer architecture for autonomous driving [18]