Adaptive Modality Selection for Navigation Systems

by

Kyle Kotowick

B.Sc., University of British Columbia – Okanagan (2011)

M.A.Sc., University of British Columbia – Vancouver (2013)

Submitted to the Department of Aeronautics and Astronautics

in partial fulfillment of the requirements for the degree of

Doctor of Philosophy in Human Systems Integration

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

September 2018

© Massachusetts Institute of Technology 2018. All rights reserved.

Author . . . .

Department of Aeronautics and Astronautics

June 14, 2018

Certified by. . . .

Professor Julie A. Shah

Research Supervisor

Certified by. . . .

Professor Jeffrey A. Hoffman

Committee Member

Certified by. . . .

Professor David A. Mindell

Committee Member

Accepted by . . . .

Professor Hamsa Balakrishnan

Chair, Graduate Program Committee

Adaptive Modality Selection for Navigation Systems

by

Kyle Kotowick

Submitted to the Department of Aeronautics and Astronautics on June 14, 2018, in partial fulfillment of the requirements for the degree of

Doctor of Philosophy in Human Systems Integration

Abstract

People working in extreme environments, where their mental and physical capabilities are taxed to the limit, need every possible advantage in order to safely and effectively perform their tasks. When these people — such as soldiers in combat or first responders in disaster areas — need to navigate through various areas in addition to performing other concurrent tasks, the combination can easily result in sensory or attentional overload and lead to major reductions in performance.

Since the tasks that these people must perform often require intense visual attention, such as scanning an area for threats or targets, conventional visual navigation systems (map-based GPS displays) add to that visual workload and put users in danger of divided attention and failure to perform critical functions. This has led to substantial research in the field of tactile navigation systems, which allow the user to navigate without needing to look at any display or use his or her hands to operate the system. While they have been shown to be extremely beneficial in many applications, tactile navigation systems are incapable of providing the detailed information that visual systems can, and they make it more difficult to use the tactile sensory modality for other notifications or alerts due to tactile interference.

This dissertation proposes a novel navigation system technology: one that adaptively and dynamically selects a navigation system’s modality based on a variety of factors. Each modality has varying levels of compatibility with the different types of concurrent tasks, which forms the basis for the adaptive modality selection (AMS) algorithm. Additionally, there are time-varying factors called switching cost, sensory adaptation, and habituation that negatively affect navigation performance over long-duration navigation tasks; by switching between navigation system modalities when these effects have reached a point of notable performance loss, their effects can be mitigated. By considering both the task-specific benefits of each modality as well as the time-varying effects, an AMS navigation system can dynamically react to changes in the user’s mission or environmental parameters to provide consistent, reliable navigation support.

The research presented in this dissertation is divided into three phases, each involving a distinct human-participants experiment. The first phase investigated methods for selecting which modality to use for providing information to users when they are already completing other high-workload tasks. Results from the 45-participant experiment indicated that the primary consideration should be to avoid presenting multiple sources of information through the tactile modality simultaneously, suggesting that an AMS navigation system should ensure that the tactile modality is never used for navigation while it is also necessary for concurrent tasks.

The second phase investigated the effects of sensory adaptation and habituation on navigation tasks, and evaluated whether it was possible to alleviate those effects by regularly changing between navigation system modalities. Results from the 32-participant experiment indicated that periodically changing between navigation system modalities induces a transient switching cost after each change, but that it also prevents long-term adaptation/habituation. The analysis indicated that the optimal time to change modalities was approximately once every five minutes.

The third and final phase investigated the efficacy of an AMS navigation system algorithm, the design of which was informed by the results from the first two phases of research in combination with results from prior work. Participants were required to navigate while also performing various concurrent tasks while using a conventional single-modality navigation system, a multimodal system, or the novel adaptive system. Results from the 32-participant experiment indicated that when a user must both navigate and perform a concurrent non-navigation task simultaneously, use of an AMS navigation system can result in improved performance on both the navigation and the concurrent task.

Research Supervisor: Julie A. Shah
Title: Associate Professor, Department of Aeronautics and Astronautics

Committee Member: Jeffrey A. Hoffman
Title: Professor of the Practice, Department of Aeronautics and Astronautics

Committee Member: David A. Mindell
Title: Professor, Department of Aeronautics and Astronautics

Dissertation Reader: Hayley J. D. Reynolds
Title: Assistant Group Leader, Lincoln Laboratory

Dissertation Reader: Leia A. Stirling

Acknowledgments

To begin, I would like to thank my advisor, Julie Shah, for the years of support and encouragement that have allowed me to complete this work. By accepting me into the Interactive Robotics Group — when I had no plan for my research and was interested in a different field than any of her other graduate students — she took a substantial risk in order to offer me a great opportunity. It has been a pleasure to work under her guidance over the years, and it has introduced me to many fields of knowledge and research that I would not have otherwise experienced. I have also had the honour of learning from some of the most incredibly talented and brilliant people at MIT, including astronaut Jeffrey Hoffman and historian/engineer/entrepreneur David Mindell, both of whom gracefully offered to serve on my Ph.D. committee. Likewise, thank you to Hayley Reynolds and Leia Stirling for agreeing to serve as readers for my dissertation and for providing invaluable guidance.

I would like to recognize MIT Emergency Medical Services for allowing me the opportunity to serve with them during my time here, and for providing me the chance to get away from a computer and work with my hands in the real world — something far too rare in the conventional academic environment. In permitting me to experiment and invent with the ambulance, equipment, and systems, they have helped me to realize a field for which I am truly passionate. More importantly, the community and comradery of the service has helped me through some of the most difficult times of this degree, and I doubt I would have made it through without finding such a supportive and exciting group of people.

Thank you to the undergraduate researchers, MIT machine shop and IT staff, and MIT Lincoln Laboratory staff who have helped me turn my ideas into reality. In particular, thank you to Ron Wiken in CSAIL for taking me under his wing and teaching me the ways of the machine shop, and for being there with solutions whenever I couldn’t figure out how to build something. Ron embodies the creative spirit of MIT, and has made more of a difference at this Institute than most will ever know.

mentorship of several teachers and professors who took the time to teach, encourage, and guide me through the various phases of my education: Mark Wintjes, who taught me at a young age how to continually challenge myself academically; Joanne Hunter and Mark Doolan, who allowed me unprecedented freedoms in the high school laboratories to experiment and discover my love for science; Patricia Lasserre, who introduced me to the world of academic research; and Lutz Lampe and Robert Rohling, who taught me how to conduct my own research and contribute to the academic community.

Finally, I cannot overstate my gratitude and appreciation for my family. My parents, Shelley and Dwain, sacrificed immensely for my education, giving up all aspects of a normal life for many years so I could have some semblance of normality in my own. My tuition was paid with their vacations never taken and work days longer than anyone should have to endure. My brother, Spencer, provided humour and light-heartedness when I needed it the most, and also played a major role in keeping my ego in check. My amazing fiancée, Kirstie, offered boundless emotional support and care which, while I was often too stubborn to admit it, was likely the determining factor in my ability to complete this degree. To all members of my family: know that I am forever grateful, and could not have made it here without you.

Contents

1 Introduction
1.1 Context
1.1.1 Visual Navigation Systems
1.1.2 Tactile Navigation Systems
1.1.3 Conventional Navigation System Selection
1.1.4 Time-Varying Effects
1.2 Problem Statement
1.3 Research Outline
1.3.1 Phase 1: Modality Selection Strategies
1.3.2 Phase 2: Sensory Adaptation and Habituation in Navigation
1.3.3 Phase 3: Adaptive Modality Selection Navigation System

2 Prior Work
2.1 Multiple Resource Theory
2.2 Tactile Support Information
2.3 Tactile Navigation Systems
2.4 Modality Switching Cost
2.4.1 Between-Task Switching Cost
2.4.2 Within-Task Switching Cost
2.5 Changes in Sensitivity
2.5.1 Sensory Adaptation
2.5.2 Habituation
2.6.1 Visual Attention
2.6.2 Hands-On
2.6.3 Notification Detection
2.6.4 Global Awareness
2.7 Multimodal Systems
2.7.1 Motivation for a Unimodal Navigation System

3 Modality Selection Strategies (Phase 1)
3.1 Introduction
3.2 Hypotheses
3.3 Design
3.3.1 Equipment
3.3.2 Primary Task
3.3.3 Secondary Task
3.3.4 Difficulty
3.3.5 Procedure
3.3.6 Compensation
3.3.7 Scoring
3.3.8 Data Collection
3.3.9 Participant Demographics
3.4 Analysis
3.4.1 Hypothesis 3.1
3.4.2 Hypothesis 3.2
3.4.3 Subjective Workload
3.5 Discussion
3.5.1 Hypothesis 3.1
3.5.2 Hypothesis 3.2
3.5.3 Subjective Workload
3.5.4 Other Factors
3.6 Summary
3.6.1 Implications for AMS Navigation Systems
3.6.2 Limitations

4 Sensory Adaptation and Habituation in Navigation (Phase 2)
4.1 Introduction
4.2 Hypotheses
4.3 Design
4.3.1 Equipment
4.3.2 Navigation Task
4.3.3 Procedure
4.3.4 Compensation
4.3.5 Scoring
4.3.6 Data Collection
4.3.7 Participant Demographics
4.4 Analysis
4.4.1 Hypothesis 4.1
4.4.2 Hypothesis 4.2
4.4.3 Hypothesis 4.3
4.4.4 Subjective Workload
4.5 Discussion
4.5.1 Hypothesis 4.1
4.5.2 Hypothesis 4.2
4.5.3 Hypothesis 4.3
4.5.4 Subjective Workload
4.5.5 Other Factors
4.5.6 Participant Preferences and Comments
4.6 Summary
4.6.1 Implications for AMS Navigation Systems

5 Adaptive Navigation Modality Selection (Phase 3)
5.1 Introduction
5.2 Hypotheses
5.3 Design
5.3.1 Equipment
5.3.2 Navigation Task
5.3.3 Concurrent Tasks
5.3.4 Adaptive Modality Selection Algorithm
5.3.5 Procedure
5.3.6 Compensation
5.3.7 Data Collection
5.3.8 Participant Demographics
5.4 Analysis
5.4.1 Hypotheses 5.1 and 5.2
5.4.2 Hypothesis 5.3
5.4.3 Subjective Workload
5.4.4 Likert Items
5.5 Discussion
5.5.1 Hypothesis 5.1
5.5.2 Hypothesis 5.2
5.5.3 Hypothesis 5.3
5.5.4 Subjective Ratings
5.5.5 Other Factors
5.5.6 Participant Comments
5.6 Summary
5.6.1 Implications for AMS Navigation Systems
5.6.2 Limitations

6 Contributions
6.1 Research Summary
6.2 Applications and Future Work

A Model Assumptions

Acronyms

Glossary

List of Figures

1-1 Text-based GPS navigation systems
1-2 Map-based GPS navigation systems
1-3 Variants of the ARL PTN
2-1 4-D Multiple Resource Theory model
2-2 Defense Advanced GPS Receiver
3-1 Graphical representation of Hypotheses 3.1 and 3.2
3-2 Phase 1 experiment equipment
3-3 Phase 1 custom tactile belt and armbands
3-4 Screen capture of the Phase 1 experiment
4-1 Graphical representation of Hypotheses 4.1 and 4.2
4-2 Phase 2 experiment setup
4-3 Flip-down GPS pouch used by the U.S. Army’s Nett Warrior navigation system
4-4 Phase 2 in-simulation visual navigation display
4-5 Phase 2 custom-built tactile navigation belt
4-6 Phase 2 experiment environments
5-1 Phase 3 experiment setup
5-2 Phase 3 in-simulation visual navigation display
5-3 Phase 3 concurrent tasks
5-4 Timeline of concurrent tasks and navigation system modalities used in the Phase 3 experiment
A-1 Phase 1: PT1 Error (Visual) Plots
A-2 Phase 1: PT1 Error (Auditory) Plots
A-3 Phase 1: PT1 Error (Tactile) Plots
A-4 Phase 1: PT2 Response Rate (Visual) Plots
A-5 Phase 1: PT2 Response Rate (Auditory) Plots
A-6 Phase 1: PT2 Response Rate (Tactile) Plots
A-7 Phase 1: PT2 Accuracy (Visual) Plots
A-8 Phase 1: PT2 Accuracy (Auditory) Plots
A-9 Phase 1: PT2 Accuracy (Tactile) Plots
A-10 Phase 1: PT2 Response Time (Visual) Plots
A-11 Phase 1: PT2 Response Time (Auditory) Plots
A-12 Phase 1: PT2 Response Time (Tactile) Plots
A-13 Phase 1: ST Response Rate (Visual) Plots
A-14 Phase 1: ST Response Rate (Auditory) Plots
A-15 Phase 1: ST Response Rate (Tactile) Plots
A-16 Phase 1: ST Accuracy (Visual) Plots
A-17 Phase 1: ST Accuracy (Auditory) Plots
A-18 Phase 1: ST Accuracy (Tactile) Plots
A-19 Phase 1: ST Response Time (Visual) Plots
A-20 Phase 1: ST Response Time (Auditory) Plots
A-21 Phase 1: ST Response Time (Tactile) Plots
A-22 Phase 1: Subjective Workload (Overall) Plots
A-23 Phase 1: Subjective Workload (Mental) Plots
A-24 Phase 1: Subjective Workload (Physical) Plots
A-25 Phase 1: Subjective Workload (Temporal) Plots
A-26 Phase 1: Subjective Workload (Performance) Plots
A-27 Phase 1: Subjective Workload (Effort) Plots
A-28 Phase 1: Subjective Workload (Frustration) Plots
A-29 Phase 2: Hypothesis 4.1 Navigation Speed Plots
A-31 Phase 2: Hypothesis 4.2 Navigation Speed Plots
A-32 Phase 2: Hypothesis 4.2 Bearing Error Plots
A-33 Phase 2: Hypothesis 4.3 Navigation Speed Plots
A-34 Phase 2: Hypothesis 4.3 Bearing Error Plots
A-35 Phase 2: Subjective Workload (Overall) Plots
A-36 Phase 2: Subjective Workload (Mental) Plots
A-37 Phase 2: Subjective Workload (Physical) Plots
A-38 Phase 2: Subjective Workload (Temporal) Plots
A-39 Phase 2: Subjective Workload (Performance) Plots
A-40 Phase 2: Subjective Workload (Effort) Plots
A-41 Phase 2: Subjective Workload (Frustration) Plots
A-42 Phase 3: Hypotheses 5.1 and 5.2 Navigation Speed Plots
A-43 Phase 3: Hypotheses 5.1 and 5.2 Bearing Error Plots
A-44 Phase 3: Hypothesis 5.3 Concurrent – VA (Time Near Zombies) Plots
A-45 Phase 3: Hypothesis 5.3 Concurrent – HO (Time Hands Occupied) Plots
A-46 Phase 3: Hypothesis 5.3 Concurrent – ND (Duration) Plots
A-47 Phase 3: Hypothesis 5.3 Concurrent – GA (Error) Plots
A-48 Phase 3: Subjective Workload (Overall) Plots
A-49 Phase 3: Subjective Workload (Mental) Plots
A-50 Phase 3: Subjective Workload (Physical) Plots
A-51 Phase 3: Subjective Workload (Temporal) Plots
A-52 Phase 3: Subjective Workload (Performance) Plots
A-53 Phase 3: Subjective Workload (Effort) Plots

List of Tables

3.1 Phase 1 modes and task modalities
3.2 Results of Phase 1 PT1 models
3.3 Results of Phase 1 PT2 models
3.4 Results of Phase 1 ST models
3.5 Results of Phase 1 subjective workload models
4.1 Phase 2 trial types
4.2 Phase 2 directional guidance modalities
4.3 Results of Phase 2 Hypothesis 4.1 models
4.4 Results of Phase 2 Hypothesis 4.2 models
4.5 Results of Phase 2 Hypothesis 4.3 models
4.6 Results of Phase 2 subjective workload models
5.1 Results of Phase 3 Hypotheses 5.1 and 5.2 models
5.2 Results of Phase 3 Hypothesis 5.3 models
5.3 Results of Phase 3 subjective workload models

Chapter 1

Introduction

1.1 Context

Assisting humans with navigation tasks is one of the most common uses for portable electronic devices. Navigation systems are used by pedestrians, drivers, pilots, and essentially any other user trying to get from point A to point B. For the most part, they are used in relatively low-stress environments, such as walking to a store or driving to another city, and the primary design criterion for such systems is an intuitive and user-friendly interface. However, this is not always the case. Users who operate in extreme environments (those that involve high workload, high stress, and high risk, such as combat or disaster areas) must prioritize factors related to performance instead of comfort: speed, accuracy, and the ability to multitask. When a soldier is attempting to infiltrate an enemy-occupied area, maintaining a constant awareness of the relative locations of friendly units and landmarks while still perceiving navigation information is crucial; when that soldier is then attempting to navigate to an exfiltration point, reaching it quickly becomes the highest priority. Similarly, a search-and-rescue technician must be able to continuously scan the surrounding area to locate victims while still navigating through a predetermined search sector, and must be able to have both hands free to extricate or carry victims while navigating out of the area. In all of these cases, objective performance is of paramount importance and it is critical that the navigation systems that users employ are able to provide directional guidance in the most effective possible manner.

Figure 1-1: Text-based GPS navigation systems. (a) Garmin GPS II Plus, one of the earliest consumer GPS navigation systems [1]. (b) Precision Lightweight GPS Receiver (PLGR) [2].

1.1.1 Visual Navigation Systems

Historically, navigation systems have utilized visual displays. For millennia, maps and compasses (both of which require visual attention from the user) were the primary navigational tools. Over the past several decades, with the development of portable computer systems, visual Global Positioning System (GPS) displays have become by far the most common navigation system across almost all disciplines. Originally, these systems utilized text-based displays for both consumer and military applications (Figure 1-1). As mobile computing power increased and displays improved, map-based GPS navigation systems (Figure 1-2) grew in popularity and are now ubiquitous.

While visual navigation systems are certainly effective, they are not ideal in all conditions. They require the user to pay visual attention to the device, thereby preventing the user from maintaining a constant awareness of his or her surroundings, and they must be held in the correct orientation so that the directions they provide correspond to the user’s bearing. They usually require hands to operate, preventing the user from performing various other tasks simultaneously. While they are the most versatile type of navigation system currently available, there are cases where they may interfere with other tasks or result in slow navigation.

Figure 1-2: Map-based GPS navigation systems. (a) Garmin eTrex 20 [3]. (b) U.S. Army Nett Warrior GPS navigation system [4, 5].

1.1.2 Tactile Navigation Systems

Users in extreme environments often have many tasks demanding visual attention (scanning for targets, detecting obstacles, etc.), which are interrupted by looking at a visual navigation display. This outcome is associated with Multiple Resource Theory [6–8], which states that humans have limited capacity to absorb information in each sensory modality and that overloading any given modality with excessive information will result in reduced performance. In order to circumvent this constraint, researchers began investigating the tactile¹ sensory modality as an alternative path for conveying navigation information in the late 1990s. This work was based on the idea that by conveying navigation information to the user through a tactile system instead of a visual one, the user is free to devote his or her visual attention entirely to other tasks. The results were, for the most part, quite encouraging and indicated that tactile navigation systems could outperform visual systems in a variety of scenarios. Specifically, multiple studies and research programs have demonstrated the merits of a tactile navigation belt: a belt with vibrating motors which vibrate in the direction the user needs to move (Figure 1-3) [5, 9–13].

¹ Throughout related literature, the terms “haptic” and “tactile” are used interchangeably. Specifically, “haptic” refers to the overall sense of touch, including kinesthetic (the sense of position and movement of muscles, tendons, and joints) and tactile (the sense of touch or vibration on the surface of the skin) sensory input. This dissertation uses the term “tactile” throughout for specificity and consistency.

Figure 1-3: Variants of the U.S. Army Research Laboratory’s Personal Tactile Navigator, reproduced from [13, 14].

As with visual navigation systems, tactile systems are not perfect. They convey a very limited amount of information, consisting only of a bearing and possibly a distance (conveyed through vibration intensity, vibration frequency, or differing intervals between vibration pulses), which does not provide any of the global situation awareness (landmarks, other units, etc.) that a user could obtain from a visual map-based display. A visual system allows a user to easily obtain precise metrics such as speed or distance to a waypoint; a tactile system does not. While there are cases where tactile navigation systems excel, there are also cases where they fail.
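To make the information content of a tactile belt concrete, the following is a minimal sketch of how a bearing and a distance might be encoded. It is not the design of any system cited here: the motor count, the motor layout (index 0 at the front, numbered clockwise), the linear distance mapping, and all function and parameter names are assumptions chosen for illustration. Of the distance encodings listed above, it uses the interval between vibration pulses.

```python
NUM_MOTORS = 8  # assumption: eight evenly spaced motors, index 0 at the front, clockwise


def motor_for_bearing(heading_deg: float, target_bearing_deg: float,
                      num_motors: int = NUM_MOTORS) -> int:
    """Return the index of the motor closest to the direction the user should move."""
    # Bearing of the waypoint relative to where the user is currently facing.
    relative = (target_bearing_deg - heading_deg) % 360.0
    sector = 360.0 / num_motors
    # Shift by half a sector so each motor covers the arc centred on it.
    return int((relative + sector / 2) // sector) % num_motors


def pulse_interval_s(distance_m: float, min_s: float = 0.2, max_s: float = 2.0,
                     far_m: float = 100.0) -> float:
    """Map distance to the pause between pulses (assumed linear, clamped at far_m)."""
    fraction = min(max(distance_m, 0.0), far_m) / far_m
    return min_s + fraction * (max_s - min_s)
```

With these assumptions, a user facing east (90°) whose waypoint lies due south (180°) would feel motor 2, on the right hip, and the pulses would come faster as the waypoint approaches. The two return values are the whole message, which illustrates why such a display cannot convey landmarks or other units.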

1.1.3 Conventional Navigation System Selection

With two types of navigation system modalities available, there comes the choice of which to use for a given application. Since each allows improved performance in specific situations, one approach is to provide both simultaneously, known as a multimodal navigation system. While there are benefits to multimodal systems in terms of navigation speed, there are also substantial consequences on the user’s ability to perform other simultaneous tasks [10].

With each navigation system modality (visual, tactile, or multimodal) having its own set of pros and cons, it is not always clear which is the optimal one to use. Presently, the best approach is to estimate which types of tasks users will need to perform while navigating, and to determine the relative importance of performance on each of those task types and on the navigation itself: the optimal modality is that which is most compatible with the user’s specific goals. For example, if a soldier must constantly maintain awareness of the relative locations of friendly squads during a given mission, then a visual system which marks those positions would be the preferable system to provide for that soldier; if it is more important for the mission that the soldier be able to continuously watch for threats, then a tactile system would be preferable. While this selection process results in a modality choice that will work well for the majority of expected tasks, it accounts for neither a variety of task types nor dynamic changes to mission requirements.
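The conventional selection process described above can be read as a weighted comparison made once, before the mission. The sketch below illustrates that reading only; the task names, the compatibility scores, and the function name are hypothetical and are not values reported in this dissertation.

```python
from typing import Dict

# Hypothetical compatibility scores in [0, 1]; illustrative values, not measured data.
COMPATIBILITY: Dict[str, Dict[str, float]] = {
    "visual": {"threat_scanning": 0.2, "unit_awareness": 0.9},
    "tactile": {"threat_scanning": 0.9, "unit_awareness": 0.1},
}


def select_static_modality(task_importance: Dict[str, float]) -> str:
    """Pick the modality with the highest importance-weighted compatibility."""
    def score(modality: str) -> float:
        return sum(weight * COMPATIBILITY[modality][task]
                   for task, weight in task_importance.items())

    return max(COMPATIBILITY, key=score)
```

Under these assumed scores, a mission weighted toward threat scanning (`{"threat_scanning": 0.8, "unit_awareness": 0.2}`) selects the tactile system, and the reverse weighting selects the visual system. The choice is fixed once made, which is the limitation noted above.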

1.1.4 Time-Varying Effects

The conventional method for selecting a navigation system modality, described above in Section 1.1.3, is based on fixed criteria with the assumption that the relative merits of each modality type will remain constant. This assessment, however, is static: it considers only the present circumstances and does not allow for time-varying effects. As with any system that provides a repeated stimulus to a user, navigation systems are subject to the dynamic, time-varying effects of sensory adaptation and habituation: respectively, physiological and behavioural effects that decrease sensitivity to a continuous or repeated stimulus over time [15, 16]. If a navigation system continues to utilize a specific modality, the user will gradually lose sensitivity to it and navigation performance will decrease.

Both of these effects, fortunately, can be “reset” by changing the modality of the stimulus, causing the user to regain full sensitivity to it [16, 17]. If a navigation system were to switch between modalities periodically, it might counteract the effects of sensory adaptation and habituation to provide greater performance stability over long-duration navigation tasks.
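The simplest form of periodic switching is a fixed schedule. The sketch below assumes such a schedule; the function and parameter names are hypothetical, and the 300-second default is taken from the interval of approximately five minutes reported in the abstract for Phase 2. It is an illustration of the idea, not the algorithm evaluated in this dissertation.

```python
from typing import Sequence


def modality_at(elapsed_s: float, switch_interval_s: float = 300.0,
                modalities: Sequence[str] = ("visual", "tactile")) -> str:
    """Return the modality in use after elapsed_s seconds of a fixed alternation."""
    if switch_interval_s <= 0:
        raise ValueError("switch_interval_s must be positive")
    # Count how many whole intervals have passed, then cycle through the modalities.
    index = int(elapsed_s // switch_interval_s) % len(modalities)
    return modalities[index]
```

A schedule of this kind ignores what the user is doing at the moment of the switch, so it addresses adaptation and habituation but not task compatibility.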

1.2 Problem Statement

Since visual, tactile, and multimodal navigation systems each fail under specific conditions, there is a clear need for a more robust solution. The aim of this dissertation is to provide such a solution in the form of an adaptive modality selection (AMS) navigation system, specifically for applications in extreme environments where any task failure may be fatal. Instead of using tactile navigation systems to replace or simultaneously augment a visual system, as has been the case with prior work, this dissertation investigates the possibility of using a tactile system as an optional alternative that is automatically selected when appropriate. Based on the immediate context of the user’s environment and mission, the AMS algorithm selects the navigation system modality (visual or tactile) that is expected to be more effective, thereby improving the user’s ability to meet mission objectives in a quick and effective manner. This objective is accomplished through a fusion of the existing prior work on task-specific benefits of each navigation system modality with new research on modality switching cost and sensory adaptation/habituation in navigation systems.
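As a rough illustration of context-based selection, the sketch below combines a per-task preference with a fallback switch after a long dwell in one modality. It is not the AMS algorithm developed in Chapter 5. The task categories follow the headings of Section 2.6, the preferred modality for each is an assumption inferred from the limitations described in Section 1.1, and the names and the 300-second dwell limit are hypothetical.

```python
from enum import Enum, auto
from typing import Optional


class Task(Enum):
    VISUAL_ATTENTION = auto()        # e.g. scanning for threats
    HANDS_ON = auto()                # e.g. carrying a victim
    NOTIFICATION_DETECTION = auto()  # tactile alerts must remain perceivable
    GLOBAL_AWARENESS = auto()        # e.g. tracking friendly units on a map


# Assumed preferences: keep navigation off the channel the concurrent task needs.
PREFERRED = {
    Task.VISUAL_ATTENTION: "tactile",
    Task.HANDS_ON: "tactile",
    Task.NOTIFICATION_DETECTION: "visual",
    Task.GLOBAL_AWARENESS: "visual",
}


def select_modality(task: Optional[Task], current: str,
                    seconds_in_current: float, max_dwell_s: float = 300.0) -> str:
    """Choose 'visual' or 'tactile' from the concurrent task and time in the current modality."""
    if task is not None:
        # A concurrent task takes priority over time-based switching.
        return PREFERRED[task]
    if seconds_in_current >= max_dwell_s:
        # No concurrent task: switch only to counter adaptation and habituation.
        return "tactile" if current == "visual" else "visual"
    return current
```

For example, `select_modality(Task.VISUAL_ATTENTION, "visual", 10.0)` returns `"tactile"`, while `select_modality(None, "visual", 100.0)` keeps `"visual"` until the dwell limit is reached. Giving the concurrent task priority reflects the Phase 1 finding, summarized in the abstract, that the tactile modality should not carry navigation while a concurrent task also needs it.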

1.3 Research Outline

In order to accomplish the dissertation objective outlined above, three distinct research areas had to be investigated. These areas were addressed in three phases, each involving a separate human-participants experiment.

1.3.1 Phase 1: Modality Selection Strategies

Multiple Resource Theory suggests that sensory inputs for different tasks should use different modalities in order to minimize the risk of sensory overload. The existence of switching cost, however, indicates that all information should be provided in the same modality so that the user doesn’t have to switch his or her attention from one modality to another. Since there are conflicting factors in determining which modality should be used for a navigation system, the first phase of research was focused on evaluating this trade-off and determining whether switching cost is likely to have a strong enough detrimental effect that an AMS navigation system is not feasible. Specifically, the experiment for this phase addressed the question of whether performance on multiple simultaneous tasks is best when additional information is provided in the same modality as concurrent tasks, a different modality, or a constant modality independent of concurrent task modality. Details of this phase are provided in Chapter 3.

1.3.2 Phase 2: Sensory Adaptation and Habituation in Navigation

As mentioned above, and discussed in depth in Section 2.5.1, users will suffer a reduced responsiveness over time to any stimulus that is continuous or repeated due to sensory adaptation and habituation. Since prior work on navigation systems has not evaluated these effects, the second phase of research for this dissertation was focused on investigating this topic. Specifically, the second experiment was designed to evaluate whether switching between visual and tactile navigation system modalities, in the absence of any other task, could improve a user’s navigation performance compared to using a single, constant modality. Simultaneously, the second experiment also evaluated the impacts of switching cost, in terms of switching between visual and tactile navigation system modalities, on navigation performance. Details of this phase are provided in Chapter 4.

1.3.3 Phase 3: Adaptive Modality Selection Navigation System

The third and final phase of work comprising this dissertation was the development and testing of an AMS algorithm for navigation systems. The algorithm design was informed by data from prior work on tactile navigation combined with the results from the Phase 1 and Phase 2 studies, resulting in a navigation system that switches between modalities based on all of the factors described above. The algorithm was evaluated through an experiment that compared navigation and secondary task performance while using the AMS navigation system to performance while using single-modality (visual-only or tactile-only) or multimodal systems.² Details of this phase are provided in Chapter 5.

² “Single-modality,” “unimodal,” and “multimodal” all have specific definitions in this dissertation. A single-modality system is one that uses only one modality at a time and never changes that modality. A unimodal system is one that uses only one modality at a time, but may change that modality as conditions change. A multimodal system is one that uses at least two modalities at all times.

Chapter 2

Prior Work

As the research presented in this dissertation integrates concepts from a number of fields, there is a wide range of related prior work. Section 2.1 discusses Multiple Resource Theory, which is the basis for the original work on considering the use of tactile navigation systems as an alternative to visual ones. Section 2.2 discusses the general concept of using the tactile modality to convey information to users, as well as specific pros and cons of tactile systems. Section 2.3 reviews prior work on tactile navigation systems and their benefits. Section 2.4 discusses switching cost and its potential impacts on an adaptive modality selection (AMS) navigation system. Section 2.5 discusses various sensitivity-affecting concepts that may be addressed by an AMS navigation system, including vibrotactile adaptation, stimulus-specific adaptation (SSA), and habituation. Section 2.6 addresses the task-specific benefits of visual and tactile modalities. Finally, Section 2.7 reviews prior work on multimodal systems and discusses the reasons that this dissertation focuses on unimodal systems.

2.1 Multiple Resource Theory

Motivation for the use of tactile feedback as an alternative to visual displays for navigation and other automated supportive systems stems from Wickens’ Multiple Resource Theory [6–8] (Figure 2-1), which follows initial work by Navon and Gopher [18]. The sensory modality channel is one of four dimensions elaborated upon in the model, which states that these four dimensions are subject to overload if excessive information is conveyed through a single channel (visual, auditory, etc.). The presence of this overload effect was demonstrated in an early study by Wickens et al., showing that resource competition arises between two memory search or tracking tasks when they share the same input or output modality [19]. Similarly, the “cue-overload principle,” a well-established principle in the field of cognitive psychology [20], states that in memory-related tasks (in this case, Brown-Peterson tasks [21, 22]), the greater the amount of information linked to a single cue (and, therefore, presented through a single sensory modality), the less information a user will be able to absorb.

Figure 2-1: 4-D Multiple Resource Theory model, reproduced from [8].

Results from multiple prior studies have suggested that the best way to overcome these issues is to decouple the sensory modalities used for different information inputs, i.e. to ensure that no two inputs use the same modality. Moroney et al. demonstrated that when a flight and navigation primary task was accompanied by a concurrent search or monitoring secondary task, presented in either a visual or auditory modality, primary task performance was better when the secondary task was presented in a modality different from that of the primary task [23]. Results from a study by Liu indicated that during a primarily visual task (vehicle navigation and hazard identification), assistive feedback provided in an auditory-only or auditory-and-visual format aided the user more effectively than feedback provided in a visual-only format [24]. Samman et al. found that study participants were able to remember nearly three times as many items when they were presented in different modalities as opposed to a single modality [25].

These results are particularly applicable to the visual sensory modality. Modern automated devices rely heavily, perhaps excessively, on visual feedback [7, 26]; combined with the fact that humans continuously receive a large amount of information through the visual modality even without any automated devices to look at, this suggests that the visual modality is at especially high risk of overload. Land navigation in particular has been identified as a task that typically causes extremely high workload [27]. Numerous works have focused specifically on addressing this concern, and have shown that visual overload can be avoided by providing extra information in non-visual modalities [26, 28–32]. Taken together, this literature leads to the notion that providing navigation information to users in extreme environments in a non-visual modality can reduce the risk of overload and improve overall navigation performance; this is the hypothesis that has motivated much of the prior work on tactile support information and navigation.

2.2 Tactile Support Information

Use of the tactile sensory modality to convey support information (information which is intended to support the user in his or her mission or objective) to users in extreme environments has been examined for a broad range of applications, with the general concept being motivated by Multiple Resource Theory. The results of a wide array of studies are summarized in several meta-analyses, showing that visual-tactile multimodal feedback is more effective than visual-auditory feedback under high workload conditions [33] and that visual-tactile multimodal feedback is more effective than visual-only feedback [34]; studies included in these meta-analyses investigated tactile support information for alerts/warnings, target acquisition, communication, navigation, and driving. An overview of several other meta-analyses concluded that tactile-only or visual-tactile cues outperformed visual-only cues for alerts, directional information, and spatial orientation information [35]. These results provide evidence that, at a fundamental level, use of the tactile modality to convey information can be quite beneficial.

Numerous studies have examined the effectiveness of tactile support information in specific applications. A study by Terrence et al. demonstrated that tactile cues could provide spatial/directional information to participants significantly faster than auditory cues regardless of the participant’s body position [36]. A study by Mortimer demonstrated that, in some circumstances, tactile cues for tracking targets were more easily understood by participants than auditory cues [37]. Work by Krausman et al. focused on using tactile cues to alert a user to the availability of support information that would then be presented through different modalities (visual or auditory); the results indicated that tactile alerts could result in response times up to 54% faster than a visual alert when the user was already engaged in a visual task [38]. A report by the U.S. Army Research Laboratory (ARL) on a series of studies detailed the benefits of using the tactile modality for support information in various contexts [11]. The National Aeronautics and Space Administration (NASA) and the U.S. Navy developed the “Tactile Situation Awareness System,” a matrix of tactors applied to the torso and limbs, and demonstrated that it could effectively provide all information necessary to fly a helicopter (pilots were able to fly complex maneuvers while blindfolded) [39–41]. The encouraging results from this prior work provided motivation for extending the concept of tactile support information to use in navigation systems, detailed in Section 2.3.

2.3 Tactile Navigation Systems

Following from extensive prior research into use of the tactile modality to convey information in general, research into tactile directional guidance became quite active in the mid-2000s for both civilian and military applications.

Research for civilian applications of tactile navigation systems has been conducted independently by numerous groups. A study by Tsukada and Yasumura examined the feasibility of a prototype tactile navigation belt, finding that it was able to assist users in accurately navigating through waypoints [42]. Pielot and Boll conducted similar work by comparing the effectiveness of their prototype tactile navigation belt with conventional visual navigation systems, finding that the tactile system required less attention but was not as effective in helping the user reach the waypoint [43]. That same research group conducted another study to evaluate the use of tactile vibration patterns on a mobile phone to convey navigation information, finding that it required less attention while having no significant navigation performance differences compared to a visual system [44]; they then incorporated these results into a prototype tactile navigation system and conducted a large-scale study of its use in an urban environment and confirmed their earlier findings [45]. Several studies have investigated the design and use of tactile directional guidance for blind users, all with encouraging results [46–49]. Many additional, similar studies on tactile directional guidance for pedestrian use have demonstrated beneficial effects [50–53].

Research into tactile navigation systems for military applications has been conducted primarily by the ARL. Their research program included four major experiments on the use of tactile navigation systems for soldiers in the field using a Personal Tactile Navigator (PTN): a tactile belt that vibrates in the direction the soldier needs to move. The first experiment focused exclusively on navigation and did not consider other simultaneous tasks, with the results showing no significant difference between a visual GPS display and the PTN [54]. The second experiment focused on night operations with additional non-navigation tasks for increased workload and compared the PTN to the Army’s Land Warrior visual navigation system; use of the PTN led to significant improvements in navigation speed and target detection compared to the Land Warrior display [9]. The third experiment compared navigation and additional task performance in three contexts: while using the PTN, while using a commercial visual GPS display, and while using both. Results from this experiment indicated that the visual display allowed improved performance on tasks that require knowledge of surroundings (global awareness), while the PTN allowed soldiers to spend more time focusing on visual search tasks (such as enemy target detection), detection and avoidance of obstacles, and weapon control [10]. The fourth experiment focused on an improved version of the PTN to augment a visual navigation display while also providing tactile notifications of important alerts; it demonstrated that as workload increased, the presence of a tactile navigation/cuing system became increasingly beneficial [13]. Other publications have included details of improvements to the navigation system [14] and a summary of the first three experiments [12].

Overall, the prior work on tactile navigation systems has highlighted two primary findings: that tactile navigation systems become increasingly beneficial as the user’s workload from other tasks increases, and that they often are not as effective as visual navigation systems in helping users maintain global awareness. The fact that there are cases where each type of navigation system modality (tactile or visual) outperforms the other was one of the primary motivators for the research presented in this dissertation: adaptively selecting which modality to use based on current conditions and requirements will allow the best of both options.

2.4 Modality Switching Cost

One of the potential disadvantages of any system that switches between modalities for conveying information, as is the case with an AMS navigation system, is that an effect known as “switching cost” may be introduced. Switching cost is when a user experiences a worsened response time due to having to switch attention from one sensory modality to another. This may take one of two forms: a “between-task” switching cost, or a “within-task” switching cost.

2.4.1 Between-Task Switching Cost

A between-task switching cost occurs when a user receives information from multiple sources (tasks), and those sources provide the information in different sensory modalities. Every time the user switches attention from one task to another, he or she will be switching attention from one sensory modality to another. Extensive work has been published evaluating this effect, particularly with regard to an auditory task interrupting a task that incorporates a different modality. Results from work by Wickens et al. indicated a highly significant increase in error during an ongoing visual task (vehicle tracking) when information for an interrupting task (digit entry) was presented in the auditory modality, compared to when it was presented in the visual modality [55]. Latorella conducted an experiment testing the effects of cross-modality vs. same-modality interrupting tasks (air traffic control instructions) on flight simulator performance, and found that cross-modality interruptions yielded significantly poorer performance [56]. In addition, a summary of a related body of work known as “irrelevant sound” studies highlighted that auditory interruptions usually have a detrimental effect on performance of a task involving a different modality [57]. The results of these studies indicate that a navigation system that switches between different modalities could be either beneficial or detrimental, depending on whether it increases or decreases the amount of time during which the modality of the navigation system is different from the modality the user is paying attention to for his or her other tasks.

2.4.2 Within-Task Switching Cost

A within-task switching cost occurs when information from a given source/task is presented to the user in a sensory modality other than the one it was expected in, e.g. if a user expects an alert to be provided auditorily as an alarm, but instead it is presented visually as a warning light. While the body of research assessing this effect is relatively small, numerous studies have shown its influence to be significant. In a study by Klein, participants exhibited slower response times when they received a simple stimulus in a modality different from that which they expected [58]. A later, similar study by Spence et al. yielded the same result for the detection of target locations [59]. Post and Chapman demonstrated that participants responded less quickly to simple stimuli when they did not know which modality to expect them in or when they expected them in the incorrect modality, compared to when their expectations were correct [60]. The results of these studies indicate that a navigation system that switches between different modalities would be detrimentally impacted by this effect.

In summary, prior work on this topic suggests that any navigation system that changes modalities over time is likely to be subject to modality switching cost, whether it be within-task or between-task. In order to develop an AMS algorithm, it was first necessary to investigate the specific effects of modality switching cost on systems that switch modalities periodically in a multiple-task environment, and to use the results to inform the algorithm design (Chapter 3).

2.5 Changes in Sensitivity

One key element that has been missing from prior work on tactile navigation is the concept of changes in sensitivity to navigation stimuli over time. Numerous effects, both physiological and psychological, have been found that will cause users of a tactile navigation system to become less sensitive to the information provided by the system as they continue using it over an extended period of time. This section discusses these various effects and the ways that an AMS navigation system would be beneficial.

2.5.1 Sensory Adaptation

Sensory adaptation, also referred to as neural adaptation, is a physiological effect where continuous exposure to a stimulus causes changes in the response properties of the activated neurons, usually resulting in decreased sensitivity to that stimulus over time [61]. The body of work investigating this effect is extensive, beginning with research in the 19th century by Hermann von Helmholtz, who investigated visual and auditory adaptation to abnormal stimuli [62]. Similar work was conducted in the field of psychology later that century [63]. In more recent years, research has focused on the specific mechanisms that cause this effect and the evolutionary process that led to them. Various reasons for the evolution of this trait have been proposed, such as efficient coding of neurological signals (similar to a form of digital compression) and allowing for heightened response to rare or changing stimuli [64–66].

Sensory adaptation can be categorized into two groups: non-specific adaptation (or “vibrotactile adaptation” when referring to the tactile sense), and stimulus-specific adaptation (SSA).

Non-Specific (Vibrotactile) Adaptation

As neurons continue to be activated over time, they gradually produce a weaker response to stimuli. This change is non-specific, i.e. the effect will occur regardless of the specific attributes of the stimuli as long as the same neurons continue to be activated. In other words, this effect is adaptation to the history of the neuron’s activation. In the context of sensing vibrations on the skin, as is necessary for tactile navigation systems, this is referred to as vibrotactile adaptation.

Initial research from the early 20th century investigated gradual reduction in perception of continuous vibration [67, 68] and changes in vibratory “sensibility” with variations in pressure [69]. Since then, countless studies have been published on every aspect of this effect. Key discoveries have related to the time frames over which adaptation occurs and the time required to recover from adaptation and return to full sensitivity; prior work has also looked at how this changes with various vibration frequencies. Work by Berglund and Berglund suggested that at a frequency of 250 Hz, similar to the frequency used by the ARL’s PTN system, the perceived intensity of the vibration decreases exponentially over a seven-minute period of constant vibration and the recovery period is up to three minutes [70]. Work by Hahn showed that adaptation continued to increase after 25 minutes of stimulation, and that full recovery could take more than three minutes after the stimuli ended [71, 72]. Numerous other studies have shown similar results [73–80].
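The exponential decrease in perceived intensity described above can be illustrated with a minimal sketch. It assumes a single-exponential form; the time constant `ASSUMED_TAU_S` and the function name are hypothetical and chosen only for illustration. They are not values or models reported in [70], and the recovery phase is not modelled.

```python
import math

# Hypothetical time constant in seconds; an assumption for illustration,
# NOT a value reported in the cited adaptation studies.
ASSUMED_TAU_S = 120.0


def perceived_intensity(t_s: float, initial: float = 1.0,
                        tau_s: float = ASSUMED_TAU_S) -> float:
    """Relative perceived intensity after t_s seconds of constant vibration,
    assuming a single-exponential decay with time constant tau_s."""
    if t_s < 0 or tau_s <= 0:
        raise ValueError("t_s must be non-negative and tau_s positive")
    return initial * math.exp(-t_s / tau_s)


if __name__ == "__main__":
    # Tabulate the assumed decay over a seven-minute exposure.
    for minute in range(8):
        level = perceived_intensity(60.0 * minute)
        print(f"{minute} min: {level:.3f}")
```

Under this assumed form, any fixed exposure interval reduces the perceived intensity by the same fraction, which is why a long period of continuous vibration can leave a navigation cue far less noticeable than it was at onset.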

The vast majority of prior research on vibrotactile adaptation has focused on adaptation over a period of minutes, with relatively little work examining effects on a sub-second time scale. Nonetheless, a summary of literature on the topic suggests that bursts of vibration shorter than 500 ms will not cause significant adaptation [81]. This concept was used in the ARL’s series of tactile navigation experiments, which used vibration pulses of 200 ms in order to avoid adaptation [11, 54]. However, their work did not independently evaluate any differences between the use of continuous vibration vs. pulses of vibration in a tactile navigation system; an investigation of those differences was therefore conducted for this dissertation as one of several hypotheses tested in Phase 2 (Chapter 4).

Stimulus-Specific Adaptation

While non-specific (vibrotactile) adaptation is adaptation to the history of the activation of neurons, a higher-level effect also exists in the form of adaptation to the history of a stimulus. As the neurological system receives the same stimulus continuously for an extended duration, or receives a brief stimulus frequently, the neural response to that stimulus decreases over time [15, 82]. This is known as stimulus-specific adaptation (SSA), and is most widely known for its relevance to the concept of change detection [83].

Although much of the research on this topic has been conducted on rats or primates due to the invasive procedures required to obtain measurements, the effect has also been found to exist in humans for both visual [84–90] and auditory [17, 91–97] stimuli. SSA has been shown to exist for stimuli with durations of 200 ms (the same duration as the vibration pulses used in the ARL’s tactile navigation system experiments) and after only one occurrence of a stimulus [98]. Prior work has suggested that SSA may end after the stimulus has not been received for ~2 seconds [17]. Unfortunately, research into this phenomenon appears to have focused exclusively on visual or auditory stimuli and provides little information regarding how SSA relates to tactile stimuli. However, study results have indicated that auditory SSA occurs in the thalamus [92], and that the thalamus is also involved in the perception of touch [99]. It is likely, then, that SSA exists for tactile stimuli as well; if this is the case, repeated pulses of vibration used for a tactile navigation system (even if limited to a duration of 200 ms) may become less noticeable to the user over time.

The presence of this effect supports the idea that switching between modalities within a navigation system would yield a beneficial effect. Every time the modality (i.e. the stimulus) changes, the user would not be adapted to this new modality and should therefore not suffer from the detrimental effects of SSA.

2.5.2 Habituation

Habituation, although similar to sensory adaptation, is a behavioural learning effect (as opposed to a physiological one). While adaptation is a passive effect — i.e. a person has no direct control over it — habituation is an active effort by the brain to filter out background stimuli in order to allow more attention to be paid to irregular stimuli. Specifically, habituation is defined as “a behavioural response decrement that results from repeated stimulation and that does not involve sensory adaptation/sensory fatigue or motor fatigue” [16].

Research into habituation has a rich and extensive history. The term was already in widespread use by the beginning of the 20th century [100, 101], with a number of related terms used to describe the same concept, including “acclimatization” and “accommodation” [102]. Initially, sensory adaptation and habituation were not clearly distinguished as separate effects. A landmark paper published in 1966 by Thompson and Spencer, however, reviewed definitive evidence that the decrease in sensitivity to repeated stimuli could not be entirely attributed to sensory adaptation, and that a separate effect must be present [103]. Further work by Groves and Thompson developed a more concrete theory of habituation [104]. More recent work has indicated that Thompson and Spencer’s theories on habituation have held up remarkably well over the past 50 years [16].

Two elements of habituation are of particular importance in the context of this dissertation: dishabituation and spontaneous recovery, which are two of the characteristics of habituation originally specified by Thompson and Spencer [103] and later revised by Rankin et al. [16]. Dishabituation refers to a characteristic wherein “presentation of a different stimulus results in an increase of the decremented response to the original stimulus” [16]. In other words, if a person is habituated to a stimulus and therefore experiencing decreased sensitivity to it, providing him or her with an alternative stimulus will result in increased sensitivity to subsequent presentations of the original stimulus. Spontaneous recovery refers to a circumstance wherein “if the stimulus is withheld after response decrement, the response recovers at least partially over the observation time” [16]. This means that if a person is habituated to a stimulus and experiencing decreased sensitivity to it, withholding that stimulus for a duration of time will result in increased sensitivity to it upon its reintroduction. Results from various studies have indicated that the time required for spontaneous recovery can range from seconds to weeks depending on multiple factors [103, 105–109].

Both dishabituation and spontaneous recovery provide motivation for a navigation system that switches between modalities over time. If a user has become habituated to stimuli from a given navigation system modality, be it visual or tactile, switching to the alternative modality would improve performance in multiple ways. This alternate modality would represent a new type of stimulus, thereby dishabituating the user from the prior modality and improving his or her sensitivity to it when it is reintroduced later. Switching to a new modality would also provide a break from the stimulus that the user has become habituated to, creating an opportunity for spontaneous recovery to occur.

2.6 Task-Specific Modality Benefits

It is rarely the case that a soldier, first responder, or other user operating within an extreme environment will have no ongoing tasks other than simply navigating to an objective. These users will also typically have additional “concurrent tasks,” which may or may not be related to navigation, that they must complete simultaneously. Different types of concurrent tasks have varying levels of compatibility with different navigation system modalities, and they can generally be categorized into four main types:

2.6.1 Visual Attention

Visual attention (VA) tasks are those that require a person to focus the majority of his or her visual attention on his or her surroundings, usually in an effort to detect targets, threats, or objectives. Common examples include soldiers visually scanning their surroundings for enemy combatants [110], search and rescue technicians scanning for the victim they are attempting to find, or a person traversing difficult topography scanning the terrain in front of him or her to ensure safe movement. As is expected, following from Multiple Resource Theory (Section 2.1), this type of task has poor compatibility with visual navigation systems: both tasks (navigation and concurrent) compete for the visual attention resource, and performance on at least one of them will suffer. As discussed above, the predominance of visual attention tasks in extreme environments is one of the primary motivations for research into tactile navigation systems. Prior work on this topic has indicated that when a user must perform visual attention concurrent tasks while also navigating, his or her performance on those tasks, as well as his or her navigation performance, is significantly better when using a tactile navigation system as opposed to a visual one [9, 10, 12].

2.6.2 Hands-On

Hands-on (HO) tasks are those that require a person to use both hands to complete them, usually to manipulate a device. Common examples include holding and maintaining positive control of a weapon, operating equipment such as radios, donning/doffing protective gear such as gas masks or helmets, or carrying an injured person [110]. Most visual navigation systems used in extreme environments today, including the Precision Lightweight GPS Receiver (PLGR) (Figure 1-1b), the Defense Advanced GPS Receiver (DAGR) (Figure 2-2), and the Nett Warrior (Figure 1-2b) navigation systems in use by the U.S. Military, require the use of hands to operate, whether to hold the device (PLGR and DAGR), open the tactical vest pouch containing it (Nett Warrior), or navigate through menu items on the display. Since visual navigation systems and this type of concurrent task both require use of hands, they also have poor compatibility: a user will not be able to navigate and perform the concurrent task simultaneously. Prior work on this topic has indicated that when a user must perform hands-on concurrent tasks while also navigating, his or her performance on those tasks, as well as his or her navigation performance, is significantly better when using a tactile navigation system as opposed to a visual one [10, 12].

2.6.3 Notification Detection

Notification detection (ND) tasks are those that require a user to notice, and possibly respond to, an alert or notification. Common examples include threat indications, chemical/biological/radiological/nuclear (CBRN) warnings, updates to mission parameters, tactical orders, or any other time-critical messages or warnings [110]. Prior work by the ARL has suggested that one of the most effective ways to communicate such information to a user, particularly in military environments, is through a tactile belt device similar to the PTN where notifications are provided using various vibration pulse patterns [11, 13, 14, 38]. Unfortunately, following again from Multiple Resource Theory, tactile notifications have poor compatibility with tactile navigation systems: both tasks (navigation and concurrent) compete for the tactile attention resource, and performance on at least one of them will suffer. Specifically, there are three types of detrimental effects on perceiving multiple simultaneous tactile stimuli [111]: (1) spatial effects, where simultaneous tactile stimuli in different locations partially mask each other such that the apparent location of the vibration is in an entirely different location [112, 113]; (2) temporal effects, where multiple tactile stimuli presented close in time at similar locations and frequencies can interact to alter the perceived intensity or duration of the stimuli [114–116]; and (3) spatio-temporal interactions, where multiple tactile stimuli presented close in time and space can be perceived as an entirely different stimulus [117, 118]. These effects result in tactile navigation systems having poor compatibility with tactile notification detection concurrent tasks.

2.6.4 Global Awareness

Global awareness (GA) tasks are those that require a user to have situational awareness beyond his or her immediate vicinity, generally with regard to his or her own location or the relative locations of friendly/enemy units or landmarks [110]. Common examples include communicating one’s current position to other units over a radio, knowing whether friendly units are nearby so as not to accidentally target them, or knowing the locations of nearby rivers or roadways. Modern visual navigation systems are usually able to integrate such information into their map displays, allowing the user to easily obtain this information while using such a system. Tactile navigation systems, however, are unable to provide this type of information in any efficient manner, and therefore have poor compatibility with global awareness concurrent tasks. Prior work on this topic has indicated that when a user must maintain a high level of global awareness, his or her ability to do so is significantly better in both objective performance and subjective user ratings when using a visual navigation system as opposed to a tactile one [10, 12].

Figure 2-2: Defense Advanced GPS Receiver (DAGR) [119].

It is clear, based on prior work, that users in extreme environments often need to perform a variety of concurrent tasks while simultaneously navigating, and that different tasks have varying levels of compatibility with each navigation system modality (visual or tactile). This supports the theory that performance on each of these types of concurrent task, as well as on the ongoing navigation task, can be improved by adaptively selecting which navigation system modality to use.
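The compatibility relationships summarized in Sections 2.6.1 through 2.6.4 can be written down as a small lookup table. The sketch below is illustrative only and is not the AMS algorithm developed in this dissertation: the names `Modality`, `Task`, `POOR_COMPATIBILITY`, and `least_conflicting_modality` are hypothetical, the notification detection entry assumes that notifications are delivered tactilely, and the rule ignores switching cost, adaptation, and habituation entirely.

```python
from enum import Enum
from typing import Iterable


class Modality(Enum):
    VISUAL = "visual"
    TACTILE = "tactile"


class Task(Enum):
    VISUAL_ATTENTION = "VA"
    HANDS_ON = "HO"
    NOTIFICATION_DETECTION = "ND"  # assumes tactile notifications
    GLOBAL_AWARENESS = "GA"


# For each concurrent task type, the navigation modality that the text
# above describes as having poor compatibility with it.
POOR_COMPATIBILITY = {
    Task.VISUAL_ATTENTION: Modality.VISUAL,
    Task.HANDS_ON: Modality.VISUAL,
    Task.NOTIFICATION_DETECTION: Modality.TACTILE,
    Task.GLOBAL_AWARENESS: Modality.TACTILE,
}


def count_conflicts(modality: Modality, active_tasks: Iterable[Task]) -> int:
    """Number of active task types that are poorly compatible with modality."""
    return sum(1 for task in set(active_tasks)
               if POOR_COMPATIBILITY[task] is modality)


def least_conflicting_modality(active_tasks: Iterable[Task],
                               default: Modality = Modality.TACTILE) -> Modality:
    """Pick the modality with fewer conflicts; fall back to default on a tie.
    The default is an arbitrary assumption, not a recommendation."""
    tasks = set(active_tasks)
    visual = count_conflicts(Modality.VISUAL, tasks)
    tactile = count_conflicts(Modality.TACTILE, tasks)
    if visual < tactile:
        return Modality.VISUAL
    if tactile < visual:
        return Modality.TACTILE
    return default
```

For example, a user who is only scanning for threats (VA) would be assigned the tactile modality under this rule, while a user who only needs to report a position (GA) would be assigned the visual modality. Counting conflicts treats every task type as equally important, which is a simplification.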

2.7 Multimodal Systems

Extensive research has been conducted on the use of multimodal interfaces, i.e. devices which present information in multiple modalities simultaneously (e.g. an aircraft warning system that presents alerts as both an audible buzzer and a visual text display). In general, multimodal devices are found to improve the effectiveness of communicating information to the user. Two meta-analyses, reviewing 43 and 45 studies, respectively, on the effect of multimodal feedback on user performance, found that multimodal feedback significantly improved a participant’s reaction time to stimuli [33, 120]. Early research by Navon and Gopher on time-sharing [18] suggested that representation using multiple sensory modalities is often beneficial, possibly because it ensures that information is available in a modality other than that which the user is employing for other tasks. Similarly, several additional publications by Wickens et al. have demonstrated an advantage with multimodal displays compared with unimodal displays [7, 34, 121], and a study by Cohen et al. also suggested that multimodal warning alerts are more effective than unimodal alerts [122]. By providing information in several modalities, there is an increased probability that at least one of those modalities will be available to the user (i.e. will not already be occupied with information from another source).

2.7.1 Motivation for a Unimodal Navigation System

While prior work has indicated that multimodal systems have potential benefits, this work has primarily focused on minimizing the response time to the stimulus that is provided multimodally, with the assumption that obtaining and responding to this information is the highest priority (e.g. a critical error message in an aircraft). In cases where the user’s other tasks are actually the highest priority, providing additional information in multiple modalities can instead be quite detrimental. When information is provided in several modalities, there is an increased probability that at least one of those modalities will conflict with other information the user is trying to pay attention to. For example, for an infantry soldier in combat, the combat itself is of the highest priority and the soldier must pay full visual attention to the enemy; navigational cues provided through both the visual and tactile modalities, while possibly allowing the soldier to receive and respond to the navigation information more quickly, would likely interfere more with the soldier’s combat tasks than navigational cues provided solely through the tactile modality. This effect was found in one of the ARL’s navigation experiments, where the tactile-only system performed better than the tactile-visual multimodal system in allowing the soldier to watch for targets, watch terrain for obstacles, reroute around those obstacles, and operate hands-free (thereby allowing for improved weapon control) [10]. There was no significant difference in navigation speeds; the only significant benefits of the multimodal system over the tactile system were related to global awareness. Several studies by Pielot et al. on modality selection for pedestrian navigation found that a tactile-only navigation system was dramatically less distracting (i.e. required less interaction time) than a navigation system using a visual display in addition to the tactile device [44, 123]. These results are to be expected from other prior work on multimodal systems in general, which has indicated that multimodal interfaces may impose a higher workload on the user compared with unimodal interfaces [124]. For users operating in extreme environments, where workload is already very high, additional workload imposed by a navigational device may overload the user and result in decreased performance.
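The two competing probability arguments above (more modalities make it more likely that one is available, but also more likely that one conflicts) can be illustrated with a small numerical sketch. It assumes, purely for illustration, that each modality is independently occupied by another task with the same probability; the independence assumption, the function name `prob_at_least_one`, and the value 0.5 are hypothetical and do not come from the cited studies.

```python
from math import prod


def prob_at_least_one(probs):
    """Probability that at least one of several independent events occurs."""
    return 1.0 - prod(1.0 - p for p in probs)


# Hypothetical value: each modality is occupied by another task with probability 0.5.
p_occupied = 0.5

for n_modalities in (1, 2, 3):
    # The cue can reach the user if at least one of its modalities is free.
    p_available = prob_at_least_one([1.0 - p_occupied] * n_modalities)
    # The cue can interfere if at least one of its modalities is already occupied.
    p_conflict = prob_at_least_one([p_occupied] * n_modalities)
    print(n_modalities, round(p_available, 3), round(p_conflict, 3))
```

Under these assumptions both probabilities grow with the number of modalities used, so which effect dominates depends on whether receiving the cue or protecting the other tasks has priority.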

Because multimodal provision of navigational information will often be detrimental to the performance of users in extreme environments on their other tasks, the majority of novel research presented in this dissertation focuses on unimodal provision of navigational information. The experiment presented in Chapter 5, however, compares the final AMS navigation system against a multimodal design.

Chapter 3

Modality Selection Strategies (Phase 1)

3.1

Introduction

Several effects would influence the performance of an AMS navigation system, and it is unclear how they would interact. Multiple Resource Theory (Section 2.1) suggests that, since users in extreme environments are already experiencing a very high sensory input load from their concurrent (non-navigation) tasks, adding sensory input load through a navigation system that uses the same modality as those concurrent tasks would risk sensory overload; therefore, the navigation system should always use a different modality than that which is required for the concurrent tasks. However, if the navigation system uses a different modality than the concurrent tasks, then the user would suffer a between-task switching cost (Section 2.4.1); therefore, the navigation system should always use the same modality as the concurrent tasks. On the other hand, if the navigation system changes modalities periodically in order to maintain compatibility with the user’s concurrent tasks, then each time it changes modalities the user would suffer a within-task switching cost (Section 2.4.2); therefore, the navigation system should always use one modality and never change.

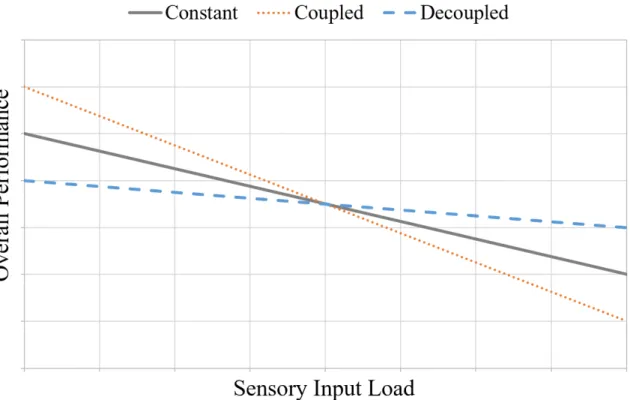

Evidently, these three bodies of prior work are at odds and it is not clear what strategy should be used for selecting a navigation system’s modality. Multiple Resource Theory indicates that it should be different from the concurrent tasks (decoupled), between-task switching cost indicates that it should be the same as the concurrent tasks (coupled), and within-task switching cost indicates that it should be independent of the concurrent tasks and should never change (constant). As discussed in Section 1.3.1, the first phase of research was designed to examine a broad range of task and modality combinations under each of these three strategies, with the intention of highlighting any specific combinations or scenarios that should be either selected or avoided when possible.
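The three strategies can be summarized as a simple selection rule. The following is a minimal sketch, not the implementation used in the experiment: the set of three modalities, the names `Modality`, `Strategy`, and `select_navigation_modality`, the default constant modality, and the tie-breaking rule for the decoupled case (keep the previous navigation modality when it is still allowed) are all assumptions made for illustration.

```python
from enum import Enum


class Modality(Enum):
    VISUAL = "visual"
    AUDITORY = "auditory"
    TACTILE = "tactile"


class Strategy(Enum):
    DECOUPLED = "decoupled"  # always differ from the concurrent task
    COUPLED = "coupled"      # always match the concurrent task
    CONSTANT = "constant"    # never change, regardless of the concurrent task


def select_navigation_modality(strategy, task_modality, previous=None,
                               constant_modality=Modality.TACTILE):
    """Return the navigation modality for the current concurrent-task modality."""
    if strategy is Strategy.COUPLED:
        return task_modality
    if strategy is Strategy.CONSTANT:
        return constant_modality
    # Decoupled: any modality other than the one the concurrent task uses.
    candidates = [m for m in Modality if m is not task_modality]
    # Assumed tie-break: avoid an unnecessary switch if the previous choice still works.
    if previous in candidates:
        return previous
    return candidates[0]


# Example: the concurrent task moves through a hypothetical sequence of modalities.
task_sequence = [Modality.VISUAL, Modality.AUDITORY, Modality.TACTILE]
for strategy in Strategy:
    previous = None
    chosen = []
    for task_modality in task_sequence:
        previous = select_navigation_modality(strategy, task_modality, previous)
        chosen.append(previous.value)
    print(strategy.value, chosen)
```

The sketch makes the conflict visible: the coupled rule changes modality every time the concurrent task does, the constant rule never changes but sometimes collides with the concurrent task, and the decoupled rule avoids collisions at the price of occasional switches.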

The experiment presented in this chapter considers a case in which a user attempts to complete an ongoing task/objective, referred to in this chapter as the primary task (PT), while also receiving, comprehending, and responding to additional unrelated information presented through a different system, referred to as the secondary task (ST).¹ It is assumed that the modality of the PT is changing often, as would be the case for a user in an extreme environment (e.g. a soldier attempting to focus on firing at a target, receiving radio communications, understanding hand/touch signals from other squad members, etc.).

3.2

Hypotheses

Prior work highlights three strategies (modes) for selecting the ST modality in the presence of a PT: Decoupled, Coupled and Constant. As each has been validated in numerous studies, this experiment was designed to evaluate the effects of the Decoupled mode compared with the Constant and Coupled modes under the assumption that the PT modality switches often. It was hypothesized that the optimal strategy for selecting the ST modality would depend on the level of sensory input load that the user was experiencing due to the PT.

¹ The terms primary task (PT) and secondary task (ST) are used in this chapter to distinguish between the two types of tasks; however, these terms are not meant to imply any type of preference given to one task over the other and were not used as task descriptors during the experiment. Participants were simply informed that they needed to complete all tasks, and were not instructed to prioritize the tasks in any way.

At a low sensory input load for the PT, there is low risk of sensory overload caused by the ST, and modality switching cost will be the most important factor; therefore, the Coupled or Constant modes will result in optimal performance. At high sensory input loads, however, additional input from the ST is likely to cause a significant performance loss in both the PT and ST due to sensory overload; therefore, the Decoupled mode will result in optimal performance. Specifically:

Hypothesis 3.1: PT performance will be better at low sensory input loads when using the Constant or Coupled modes, and will be better at high sensory input loads when using the Decoupled mode.

Hypothesis 3.2: ST performance will be better at low sensory input loads when using the Constant or Coupled modes, and will be better at high sensory input loads when using the Decoupled mode.

These hypotheses relate to overall performance across all modalities; however, it is possible that specific modalities could be affected differently.

3.3

Design

The experiment, conducted according to a within-participants design, consisted of a primary task (PT) and secondary task (ST) provided on a laptop computer, with two controlled independent variables (“difficulty” and “mode”). In this experiment, “difficulty” refers to the amount of sensory load imposed upon the participant.

3.3.1

Equipment

The experiment was conducted using ARMA 3 simulation software [125] on a Eurocom Sky X9 laptop with a single monitor display.² ARMA 3 was selected due to its in-game scripting language that allowed programming the experiment task, control of directional sound effects, and visualization tools to create on-screen graphics, as well as its external C++ extension callback capability for operating the tactile device and

² OS: Windows 10; CPU: Intel Core i7 6700K; Memory: 64GB Mushkin DDR4-2133; SSD: 2x 512GB Samsung 950 Pro M.2; VGA: NVIDIA GTX 980; Audio: Sennheiser HD 202 II headphones; Mouse: Logitech M500

![Figure 1-3: Variants of the U.S. Army Research Laboratory’s Personal Tactile Navigator, reproduced from [13, 14].](https://thumb-eu.123doks.com/thumbv2/123doknet/13861690.445583/22.918.145.784.113.267/figure-variants-research-laboratory-personal-tactile-navigator-reproduced.webp)

![Figure 2-1: 4-D Multiple Resource Theory model, reproduced from [8].](https://thumb-eu.123doks.com/thumbv2/123doknet/13861690.445583/28.918.200.725.110.462/figure-d-multiple-resource-theory-model-reproduced.webp)