Designing an Experimental Platform for a Split-classroom Comparison Study

by

Deborah L. Chen

S.B., Massachusetts Institute of Technology (2014)

Submitted to the Department of Electrical Engineering and Computer Science

in partial fulfillment of the requirements for the degree of

Master of Engineering in Electrical Engineering and Computer Science

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

June 2016

© Massachusetts Institute of Technology 2016. All rights reserved.

Author

Department of Electrical Engineering and Computer Science

May 23, 2016

Certified by

Professor Eric Klopfer

Director, MIT Scheller Teacher Education Program

Thesis Supervisor

Accepted by

Dr. Christopher Terman

Chairman, Masters of Engineering Thesis Committee

Designing an Experimental Platform for a Split-classroom Comparison Study

by

Deborah L. Chen

Submitted to the Department of Electrical Engineering and Computer Science on May 23, 2016, in partial fulfillment of the requirements for the degree of

Master of Engineering in Electrical Engineering and Computer Science

Abstract

SimBio, an education-technology company, has created two new online question formats that are constrained enough to give students automatic feedback, but open enough to give students ample room for expression. The LabLib, modeled after a MadLib, consists of multiple fill-in-the-blank answer choices, which students select from drop-down menus. The WordBytes question format, modeled after refrigerator poetry, consists of pre-determined tiles that students can drag and drop to form their answer. Automatic feedback is provided on demand in the form of a pop-up window. This thesis presents the design and implementation of the first-ever split-classroom study to compare the LabLib and WordBytes formats with each other and with a traditional Free Response format. An experimental platform was created to support the study's requirements, and a 319-participant study was conducted with high school students from the Greater Boston area. We evaluated the LabLib and WordBytes formats on learning gains, completion rates, and student engagement. Preliminary data reveal no significant difference in learning gains between the two formats. However, completion rates for questions in the LabLib and WordBytes formats were higher than those for questions in the Free Response format. The data also suggest that students were engaged with the LabLib and WordBytes questions, as measured by the number of times students checked their answers.

Thesis Supervisor: Professor Eric Klopfer

Acknowledgments

I’ve had a wonderful time working in the STEP Lab. Thank you to Eric Klopfer and Daniel Wendel, who brought me onto this project. This past year has been incredibly fulfilling.

Thank you to Daniel for all your support. I’ve really enjoyed working with you day to day. Thinking through the design of the platform with you has been a lot of fun and I’ve learned a lot. Thank you also for all the early morning car rides to the high schools!

The classroom study could not have been completed without the work of many people. Thank you to Ilana for your tireless dedication in recruiting teachers for the study. Thank you to Irene for your early feedback on the study design and for helping out in the classroom too. It’s been great getting to know both of you this past semester.

To all the teachers and students who participated in the study, a big thank you! The study would not have been possible without you.

Thank you to Eli, Denise, and Kerry from the SimBio team for all your insights and feedback. I’ve had a great time working with you and SimBio’s technology.

Thank you to all my friends for all your support over the years. I’ve enjoyed our endless gchats, conversations, meals, and the occasional run or swim.

Thank you to my sister Belinda, for always being there for me.

Finally, to my mother and father, thank you, for everything. I cannot express the depth of your sacrifices, love, and support, without which, I would not be who or where I am today.

Contents

1 Introduction

1.1 Motivation

1.2 Thesis Organization

2 Background

2.1 LabLibs

2.2 WordBytes

2.3 BioGraph

3 Questions Platform

3.1 Design Requirements

3.1.1 Student Anonymity

3.1.2 Robust Data Capture

3.1.3 Random Question-type assignment

3.2 Implementation

3.2.1 System Overview

3.2.2 Database Models

3.2.3 Account Creation

3.2.4 Teacher, Classroom, and Student Assignment

3.2.5 Question-format Assignment

3.3 Integrating LabLibs and WordBytes

3.3.1 Saving Student Responses

3.4 Grading Rules and Feedback

3.4.1 LabLib Grading Rules and Feedback

3.4.2 WordBytes Grading Rules and Feedback

3.5 Analysis Tools

3.5.1 Classroom Status Page

3.5.2 Student Answers Page

3.5.3 Free Response Categorization/Grading System

4 Classroom Study

4.1 Something’s Fishy Activity

4.2 Questions

4.2.1 Permission

4.2.2 Group-size

4.2.3 Warm-up and Transfer

4.2.4 Question 8

4.2.5 Question 13

4.3 Study Procedure

4.4 Participation and Compensation

4.5 Preparation

4.6 Iterations on Questions Platform

4.6.1 Iteration 1

4.6.2 Iteration 2

4.6.3 Iteration 3

4.7 Iterations on Something’s Fishy Activity

5 Results

5.1 Participant Demographics

5.2 Question Format Assignment

5.3 Completion Rates

5.3.1 Completion Rates by Question

5.3.2 Completion Rates by Question Format

5.4.1 EvoGrader Results

5.5 Student Engagement

5.5.1 LabLib

5.5.2 WordBytes

5.6 Feedback Coverage Rate

6 Future Work

6.1 Extensions to Questions Platform

6.1.1 UI for Adding/Creating New Questions

6.1.2 Teacher Portal

6.2 Smarter Feedback

6.3 Research Questions

6.3.1 Learning Gains Evaluation

6.3.2 Additional Measures of Engagement

6.3.3 Additional Feedback Evaluation

7 Contributions

A Classroom Study Materials

A.1 Something’s Fishy Activity

A.2 Documents for Participants

B Categories and Feedback

B.1 Preliminary Misconceptions from BioGraph

B.2 LabLib Final Categories and Feedback

List of Figures

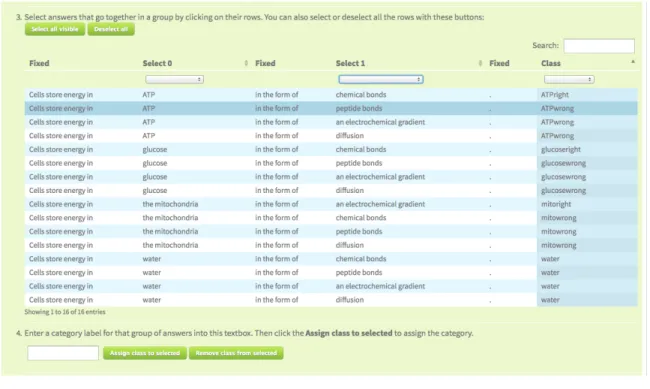

2-1 Example LabLib

2-2 LabLib Question Editor

2-3 LabLib Feedback Editor

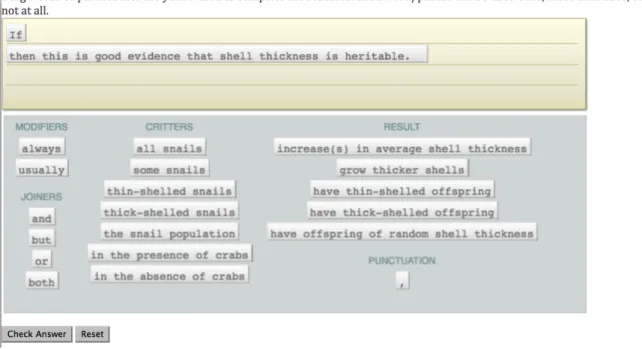

2-4 Example WordByte

3-1 Questions Platform Data Models

3-2 Code flow of SaveAnswer for LabLib

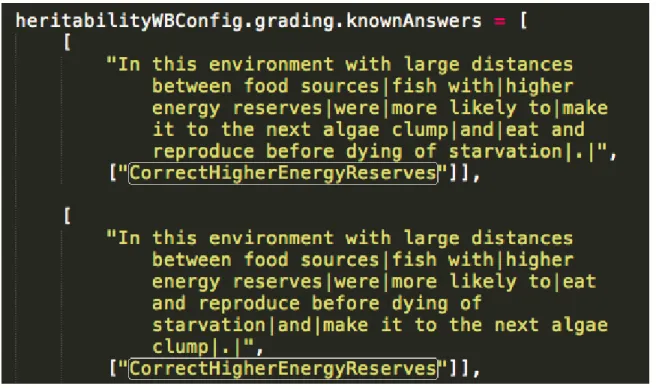

3-3 Example of Known Answers for WordBytes Question 8

3-4 Example of Grading Categories for WordBytes Question 8

3-5 Example of Regular Expression Grading Rules for WordBytes Question 8

3-6 Excerpt from Classroom Status Page

3-7 Excerpt from Student Answers Page

3-8 Sample Grading Page

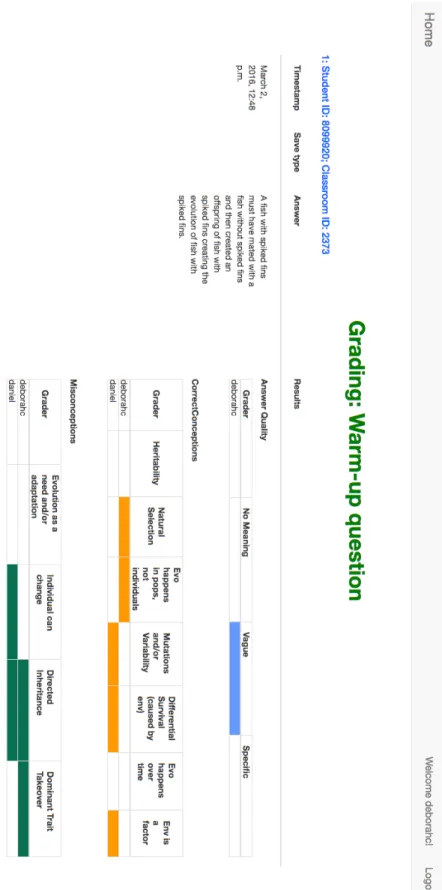

3-9 Sample Grading Results Page

4-1 StarLogo Nova Fish Pond Simulation: Uniform Fish

4-2 StarLogo Nova Fish Pond Simulation: Multi-colored Fish

4-3 Permission Question

4-4 Group-size Question

4-5 Warm-up Question

4-6 Transfer Question

4-7 Question 8: Free Response Format

4-8 Question 8: LabLib Format

4-10 Question 13: Free Response Format

4-11 Question 13: LabLib Format

4-12 Question 13: WordBytes Format

5-1 Conceptions in Pre/Post Question Expressed by Free Response Students

5-2 Conceptions in Pre/Post Question Expressed by LabLib Students

5-3 Conceptions in Pre/Post Question Expressed by WordBytes Students

5-4 Explanation of Key and Naive Concepts

5-5 Question 8: Number of Checks Used by Student

5-6 Question 13: Number of Checks Used by Student

List of Tables

3.1 Question Format Assignment via Student ID

4.1 Question 8: LabLib Answer Choices

4.2 Question 13: LabLib Answer Choices

5.1 Participant Demographics

5.2 Question Format Assignment Distribution

5.3 Completion Rates by Question

5.4 Question 8 Completion Rates by Format

5.5 Question 13 Completion Rates by Format

5.6 Number of Students who Used at Least One Check: LabLib

5.7 Number of Students Who Reached a Correct Answer From Incorrect Answer

5.8 Number of Students Who Used at Least One Check: WordByte

5.9 Number of Students Who Reached a Correct Answer From Incorrect Answer: WordBytes

Chapter 1

Introduction

1.1 Motivation

To assess a student’s understanding of a concept, teachers often give assignments that involve multiple choice or free response questions. Multiple choice questions are easy for teachers to grade, but provide limited avenues for students to express their misconceptions about the topic. Furthermore, it can be difficult to glean a student’s level of understanding; we may know a student chose an incorrect answer, but we do not know why they chose that answer, limiting the usefulness of any feedback.

In contrast, free response questions allow students unlimited room to express their understanding of a topic. As long as a student’s answer is sufficiently descriptive, teachers can assess how and why the student arrived at that answer and provide customized feedback that addresses any misconceptions. However, doing so can be time-intensive, and automatic grading systems for unconstrained free response answers are still in their infancy. Students also receive feedback only once, after submitting the assignment, rather than as they work. Another drawback is that without the scaffolding provided by multiple choice options, it may be harder for students to begin formulating an answer, resulting in blank or vague, non-responsive answers.

SimBio, an education-technology company that creates tools for teaching biology, has created two new online question formats designed to find the “sweet spot” between multiple choice and free response questions. These formats are constrained enough that it is feasible to provide students automatic feedback, but open enough to give students ample room for expression, capturing the benefits of both the multiple choice and free response formats. They also provide scaffolding to help students form their answers.

The LabLib, modeled after a MadLib, consists of multiple fill-in-the-blank answer choices, which students select from a drop-down. The WordBytes question format, modeled after refrigerator poetry, consists of pre-determined tiles that students can drag and drop to form their answer. The constraints of these formats enable us to create feedback that students can access on-demand as they formulate their answer.

SimBio has deployed LabLibs and WordBytes in many college classrooms across the country. However, no side-by-side comparison study has been done to compare the effectiveness of LabLibs and WordBytes to Free Response questions. Such data would yield insights into whether these formats are useful to students and how to improve them in future iterations.

This thesis presents the design and implementation of the first-ever split-classroom study to compare the LabLib and WordBytes question formats with each other and with the Free Response format. The main technical contribution of the thesis is an experimental platform that delivers the LabLib and WordBytes content to students. This thesis also presents a preliminary analysis of the data obtained from the classroom study.

1.2 Thesis Organization

Chapter two describes previous work related to the LabLib and WordBytes question formats.

Chapter three describes the implementation of the Questions Platform, an experimental platform that supports a split-classroom comparison study. The Platform delivers the LabLib and WordBytes questions to students in a classroom setting.

Chapter four describes the setup of the classroom studies performed to evaluate the LabLib and WordBytes question formats.

Chapter five describes the results of the classroom studies and evaluates the LabLib and WordBytes question formats on learning gains, completion rates, and user engagement.

Chapter six describes future work to extend the Questions Platform and additional data analysis to be performed.

Chapter 2

Background

The LabLib and WordBytes question formats were created by SimBio, an education-technology company. In contrast to free response questions, in which students must come up with an answer entirely on their own, these formats provide scaffolding to help students form an answer.

Furthermore, because of the constrained answer space imposed by these two formats, it becomes feasible to give students automatic and immediate feedback. Educators can write the question and answer choices in a way that allows students to express common misconceptions about the subject. These misconceptions can then be targeted with specific feedback, which students can access while answering the question. For both question formats, feedback is displayed in a pop-up window.

2.1 LabLibs

The LabLib question format, modeled after a MadLib, consists of multiple fill-in-the-blank answer choices, which students select from a drop-down.

Typically, each drop-down consists of three to four answer choices, designed to allow the student to express various conceptions and misconceptions about the subject.

Scaffolding is provided by the fixed parts of the answer stem. Figure 2-1 shows an example of a LabLib.

Figure 2-1: Example LabLib

Figure 2-2: LabLib Question Editor

SimBio also developed two visual tools to create LabLibs. The question editor, shown in Figure 2-2, is a web interface that allows users to edit the fixed answer stem and drop-down choices.

The feedback editor, shown in Figure 2-3, is a web interface that allows users to group sets of drop-down answers into categories and write feedback for those categories.

Figure 2-3: LabLib Feedback Editor

2.2 WordBytes

The WordBytes question format, modeled after refrigerator magnet poetry, is a drag-and-drop environment where students create sentences by selecting phrases from a pre-determined, constrained set of tiles. Scaffolding is provided in the form of optional fixed tiles.

Figure 2-4 shows an example of a WordByte.

There are no visual tools for creating WordBytes. To make a new WordByte, one must edit the JavaScript files that define the WordBytes question directly.

2.3 BioGraph

BioGraph is a four-year, NSF-funded collaboration between the MIT Scheller Teacher Education Program (STEP Lab) and the Graduate School of Education at UPenn. The project involved the creation of a series of high school biology lessons that use agent-based computer models, built in StarLogo Nova (a blocks-based programming environment), to explore complex systems.[2]

Figure 2-4: Example WordByte

Researchers at the STEP Lab chose one of the BioGraph lessons, titled Something’s Fishy, for LabLib and WordBytes integration. In the activity, students observe a simulation of a fish population in a pond and learn about the roles that genetic drift and natural selection play in the population’s evolution.

The researchers selected two questions from the activity, Questions 8 and 13, to rewrite in LabLib and WordBytes form. These questions were chosen because they are reasonably complex: they ask students to reason about why a phenomenon occurred and to support their claim with evidence.

Researchers analyzed the data from previous BioGraph classroom studies involving the activity to determine the most common ideas and misconceptions expressed by students in their free response answers to Questions 8 and 13. From this, the researchers created paper versions of the LabLib and WordBytes questions that enabled students to express these conceptions, which were incorporated into the LabLib answer choices and the WordBytes tiles. The initial set of misconceptions is shown in Appendix B.1.

Chapter 3

Questions Platform

The goal of the study was to run a split-classroom experiment in which one third of the students received the Free Response format, one third the LabLib format, and one third the WordBytes format. To perform data analysis, the research team also needed a record of every answer submitted by a student and the feedback shown to them.

To support these goals and deploy the activity to the classroom, we created the Questions Platform. The Platform supports the two study questions (Questions 8 and 13); a Pre and a Post question that assess students’ understanding of evolution before and after the activity; and two additional research questions, which record whether the student has given the research team permission to use their data and whether they worked individually or with a partner.

The Platform is a web application that allows students to log in and submit their answers to the above questions, which are stored in a database. This chapter describes the technical implementation of the Platform and the constraints informing its design.

3.1 Design Requirements

Based on an analysis of the study’s needs, we formulated the following initial high-level requirements for the Questions Platform.

3.1.1 Student Anonymity

All participating students’ names and identities must be anonymous to the research team, but not to their teachers, who need to match students’ answers to their names for grading.

3.1.2 Robust Data Capture

Students’ answers must be captured by our system. Students should be able to log in and out of the system and see their most recently submitted or saved answer. All submit and check-answer events must be saved.

3.1.3 Random Question-type assignment

The application needs to automatically and randomly assign each student to a Free Response, LabLib, or WordBytes question format that applies to both Questions 8 and 13, resulting in an approximately equal distribution of formats. This assignment must be preserved across future logins by the same student.

3.2 Implementation

3.2.1 System Overview

The Questions Platform is a Django application backed by a PostgreSQL database, hosted on Amazon Web Services. The LabLib and WordBytes question formats were implemented as a standalone JavaScript application by Kerry Kim, a developer at SimBio. Minor modifications were made to the LabLib and WordBytes code to integrate them into the Questions Platform.

3.2.2 Database Models

Each Teacher has at least one Classroom, and each Classroom has multiple Students. Each Student is linked to one Django User for login and authentication purposes.

Each Student answers multiple True/False¹ and Free Response questions, so each Student has many instances of TrueFalseStudentAnswer and FreeResponseStudentAnswer. Students are randomly assigned a single question format, either Free Response, LabLib, or WordBytes, for both Questions 8 and 13. Depending on the assignment, a Student may have zero or more instances of LabLibStudentAnswer, or zero or more instances of WordByteStudentAnswer. (Students always have many instances of FreeResponseStudentAnswer because the Pre and Post questions are assigned to all students and are always in the Free Response format.)

There are four question models, one for each question format in the Platform: TrueFalseQuestion, FreeResponseQuestion, LabLibQuestion, and WordByteQuestion. Each *StudentAnswer instance is linked to the corresponding *Question instance.
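To make these relationships concrete, here is a minimal plain-Python sketch that uses dataclasses instead of Django models, so it runs without a Django project. Apart from Teacher, Classroom, and Student, the class and field names are hypothetical, and the sketch collapses the four *StudentAnswer models into a single class tagged with the question format, which is a simplification of the design described above.

```python
from dataclasses import dataclass, field
from datetime import datetime

# Plain-Python stand-ins for the Django models; field names are hypothetical.

@dataclass
class Teacher:
    id: int

@dataclass
class Classroom:
    id: int
    teacher: Teacher  # each Classroom belongs to one Teacher

@dataclass
class Question:
    id: int
    fmt: str  # "TrueFalse", "FreeResponse", "LabLib", or "WordByte"

@dataclass
class StudentAnswer:
    # Simplification: one class standing in for all four *StudentAnswer models.
    question: Question
    answer: str
    save_type: str  # "submit", "check", or "navigation"
    timestamp: datetime = field(default_factory=datetime.now)

@dataclass
class Student:
    id: int
    classroom: Classroom  # each Student belongs to one Classroom
    answers: list = field(default_factory=list)

    def answers_for(self, fmt: str) -> list:
        """All of this student's answers to questions of one format."""
        return [a for a in self.answers if a.question.fmt == fmt]

# Usage: the default Teacher and Classroom (ID -1) and one student.
default_teacher = Teacher(-1)
default_classroom = Classroom(-1, default_teacher)
student = Student(8876373, default_classroom)
student.answers.append(StudentAnswer(Question(1, "FreeResponse"), "Pre answer", "submit"))
```

A Free Response student simply never accumulates LabLib or WordBytes answers, which is the "zero or more" case described above.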

To preserve the anonymity of all students and teachers, Teacher IDs are randomly generated 3-digit positive integers, Classroom IDs are randomly generated 4-digit positive integers, and Student IDs are randomly generated 7-digit positive integers. A default Teacher instance and a default Classroom instance, each with ID -1, were also created.
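The ID scheme can be sketched as follows, assuming that an "n-digit" ID means an integer from 10^(n-1) to 10^n - 1 (so 3-digit IDs run from 100 to 999). The function name generate_ids is hypothetical; this is an illustration of the idea, not the code used by the Platform.

```python
import random
from typing import List, Optional

def generate_ids(n: int, digits: int, rng: Optional[random.Random] = None) -> List[int]:
    """Draw n distinct random integers that have exactly `digits` digits."""
    rng = rng or random.Random()
    low, high = 10 ** (digits - 1), 10 ** digits  # e.g. 100..999 for digits=3
    if n > high - low:
        raise ValueError("not enough distinct IDs of that length")
    # random.sample draws without replacement, so no two IDs collide.
    return rng.sample(range(low, high), n)

teacher_ids = generate_ids(100, 3)
classroom_ids = generate_ids(300, 4)
student_ids = generate_ids(1000, 7)
```

Sampling without replacement guarantees uniqueness within each kind of ID, and because the three kinds have different lengths they can never collide with one another. Note that the 3-digit space holds only 900 values, so 100 teachers already occupy about 11% of it.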

3.2.3 Account Creation

Before any classroom studies took place, we created 100 Teacher instances, 300 Classroom instances, and 1000 User/Student instances, using the randomly generated IDs. Creating more instances than needed gave us the flexibility to reuse the system for future experiments or re-run the current experiment with more participants. No identifying information is stored within these instances; internally, the system refers to teachers, classrooms, and students only by their IDs.

¹ The True/False model supports two questions: the Permission question, which asks if students consent to share their data with the research team, and the Group-size question, which asks if students worked by themselves or with a partner. The Group-size question was implemented as an instance of the TrueFalseQuestion to save time, with True referring to ‘By Myself’ and False referring to ‘Partner.’ A better implementation would have created a MultipleChoiceQuestion model which would support both questions.

Figure 3-1: Questions Platform Data Models

Account creation was done using custom Django management commands. A generate_accounts.py command creates three CSV files: simbio_teachers.csv, containing the randomly generated Teacher IDs; simbio_classrooms.csv, containing the randomly generated Classroom IDs; and simbio_accounts.csv, containing the randomly generated User IDs and passwords. For simplicity, each password has the format pw + User ID, e.g. pw8876373. Because the password is derived from the ID, knowing the ID is effectively sufficient to access the account; we judged this acceptable because the randomly generated IDs are hard to guess, though a more secure system would have used independently generated random passwords. One benefit of the current scheme, however, is that students who forget their password can easily recover it.

An import_accounts.py command reads in the CSV files and creates Teacher, Classroom, and User/Student instances for each ID in the files. By default, each Classroom is assigned to the Teacher with ID -1, and each User is assigned to the Classroom with ID -1. Recall that each Student instance is linked to one and only one User instance.
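The round trip performed by the two commands can be sketched with only the standard csv module. This is a simplified stand-in: the real commands are Django management commands that create model instances, whereas this version returns plain dictionaries. The column names user_id and password and the function names are assumptions; the pw + ID password format and the default ID of -1 come from the text.

```python
import csv
import io

DEFAULT_ID = -1  # the default Teacher/Classroom described above

def write_accounts_csv(student_ids, out):
    """Write one row per account: the user ID and the 'pw' + ID password."""
    writer = csv.writer(out)
    writer.writerow(["user_id", "password"])  # hypothetical column names
    for sid in student_ids:
        writer.writerow([sid, f"pw{sid}"])

def import_accounts_csv(src):
    """Read the CSV back, placing every account in the default classroom."""
    return {
        int(row["user_id"]): {"password": row["password"], "classroom_id": DEFAULT_ID}
        for row in csv.DictReader(src)
    }

# Round trip through an in-memory file instead of simbio_accounts.csv.
buf = io.StringIO()
write_accounts_csv([8876373, 1234567], buf)
buf.seek(0)
accounts = import_accounts_csv(buf)
```

Splitting generation from import means the CSV files can be inspected, or re-imported into a fresh database, without regenerating IDs.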

3.2.4 Teacher, Classroom, and Student Assignment

The day before each classroom study, we manually assigned the needed number of Classroom instances to the corresponding Teacher instance. This was done by changing each Classroom’s Teacher attribute from -1 to the actual Teacher instance in the Django administrative interface. Teacher names are never stored in the system, and the mapping of teacher names to Teacher IDs was only used to make sure that during the study, the correct packets were given to the correct classrooms. The details of how students are pre-assigned to classrooms are described in Section 4.5.

When students log in to the Questions Platform, they type in their pre-assigned User credentials and a pre-assigned Classroom ID. A process_login Django view function modifies the User’s Student instance so that it is associated with the correct Classroom instance, based on the entered Classroom ID.
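A framework-free sketch of the essential state change at login follows. It is not the actual process_login view: Django would authenticate through its own auth machinery, and the check that the typed Classroom ID exists is an assumed validation step. The StudentRecord class is hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Set

@dataclass
class StudentRecord:
    student_id: int
    password: str
    classroom_id: int = -1  # default classroom until the first login

def process_login(students: Dict[int, StudentRecord], classroom_ids: Set[int],
                  student_id: int, password: str,
                  classroom_id: int) -> Optional[StudentRecord]:
    """Authenticate, then attach the student to the classroom ID they typed."""
    student = students.get(student_id)
    if student is None or student.password != password:
        return None  # bad credentials
    if classroom_id not in classroom_ids:
        return None  # unknown classroom ID (assumed validation step)
    student.classroom_id = classroom_id  # move out of the default classroom
    return student

students = {8876373: StudentRecord(8876373, "pw8876373")}
process_login(students, {4321}, 8876373, "pw8876373", 4321)
```

Because the association happens at login rather than at import time, the same pool of accounts can be handed out to any classroom on the day of a study.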

3.2.5 Question-format Assignment

| Student ID mod 3 | Question Format Assignment |
|---|---|
| 0 | Free Response |
| 1 | LabLib |
| 2 | WordBytes |

Table 3.1: Question Format Assignment via Student ID

At login time, the process_login Django view discussed in Section 3.2.4 also calls a Student method, get_question_type, which determines which question format the student should see for Questions 8 and 13. The format is determined by Student ID mod 3, as shown in Table 3.1. The format is stored in a Django session variable and is read by the index view function to generate links to the correct versions of Questions 8 and 13.

Because the format assignment is deterministic by ID, it is up to the research team to ensure that the Student IDs assigned to a classroom will result in a roughly equal distribution between the three formats.
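The mapping in Table 3.1, and one way a research team might pick classroom IDs that balance the formats, can be sketched as follows. get_question_type mirrors the method named in the text; pick_balanced_ids and format_distribution are hypothetical helpers, and round-robin selection is just one assumed way to achieve a roughly equal split.

```python
from collections import Counter

FORMATS = {0: "Free Response", 1: "LabLib", 2: "WordBytes"}  # Table 3.1

def get_question_type(student_id: int) -> str:
    """Deterministic format assignment: Student ID mod 3."""
    return FORMATS[student_id % 3]

def format_distribution(ids) -> Counter:
    """How many of the given IDs fall into each format."""
    return Counter(get_question_type(i) for i in ids)

def pick_balanced_ids(available_ids, class_size):
    """Take IDs round-robin across the three residues mod 3, so format
    counts differ by at most one while every residue class has IDs left."""
    buckets = {r: [i for i in available_ids if i % 3 == r] for r in range(3)}
    chosen, r = [], 0
    while len(chosen) < class_size:
        if not any(buckets.values()):
            raise ValueError("not enough unassigned IDs")
        if buckets[r]:
            chosen.append(buckets[r].pop())
        r = (r + 1) % 3
    return chosen
```

For a class of 10 drawn from a pool with plenty of IDs in each residue class, this yields a 4/3/3 split across the three formats.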

3.3 Integrating LabLibs and WordBytes

A large part of the work in developing the Questions Platform was to integrate the standalone implementations of LabLibs and WordBytes.

The process is similar for both question formats, so for simplicity, the process for LabLibs is described below.

1. Copy all LabLib JavaScript, CSS, and image assets into a Static folder in the Questions Platform.

2. Use the LabLib Visual Editor to create a new LabLib. Copy the JavaScript file that defines the LabLib into the Static folder.

3. Create a lablib.html Django template that references the needed files from steps 1 and 2. Insert into the template a JavaScript snippet that, upon page load, creates a new instance of a JavaScript LabLib object and fills in the LabLib drop-downs with the student’s most recently saved answers, provided by the view function in the next step. Edit the ‘submit’ and ‘check’ buttons to call the appropriate saveAnswer method described in Section 3.3.1.

4. Create a Django Views.py function that takes in a questionID, queries the database for the student’s most recently saved answer, and renders the correct LabLib template.

5. Create an instance of LabLibQuestion in the database, which can be done either from the command line or the Django administrative interface.

Note that the actual content for a LabLib—the question, the answer choices, and feedback—is completely described by a set of JavaScript files, which must be loaded by a template. The LabLibQuestion model is used so that on the backend, student answers can be associated with the correct question.

3.3.1 Saving Student Responses

For research purposes, the Platform needs to store a record of each time a student checks or submits an answer. Because it was imperative that the system not lose any student responses, it automatically saves Free Response, LabLib, and WordBytes answers when a student navigates away from the page.²

Saving LabLibs When a student clicks the ‘submit’ or ‘check’ answer button, the method saveAnswerWrapper inside LabLib.js is called. saveAnswerWrapper takes two inputs: a questionId (the LabLib’s ID in the database) and a saveType, either ‘submit’ or ‘check.’ This function then calls a helper method to generate feedback for the student’s answer and determine whether the answer is correct. The feedback and correctness result are passed, along with the original inputs, into saveAnswer, which makes an AJAX call to the Django Views.py lablib_response method. Finally, lablib_response creates a new instance of LabLibStudentAnswer associated with the LabLibQuestion and saves it to the database. This execution flow is shown in Figure 3-2.

² Originally, we had only planned on saving LabLib and WordBytes answers upon navigation. However, after the first classroom study, we observed that many students forgot to press the submit button for Free Response answers, so we updated the system to save Free Response answers upon navigation as well.

Figure 3-2: Code flow of SaveAnswer for LabLib

When a student navigates away from the LabLib page, detected by window.onbeforeunload, saveAnswerWrapper is called with the ‘navigation’ input to save the current answer to the database.

Saving WordBytes The process to save a WordBytes answer is similar to saving a LabLib, except that the saveAnswer method in WordBytes.js takes two additional inputs: gradingRulesMatched, an array of the category names of all grading rules that apply to the student’s answer, and gradingRulesDisplayed, an array containing the category name of the grading rule whose feedback is shown to the student.³
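The server side of this save flow can be sketched without Django. The payload keys questionId, saveType, gradingRulesMatched, and gradingRulesDisplayed follow the names in the text; the keys answer, feedback, and isCorrect, the ResponseRecord class, and the save_response function are hypothetical. A real view such as lablib_response would parse the request and create a LabLibStudentAnswer or WordByteStudentAnswer rather than append to a list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List

VALID_SAVE_TYPES = {"submit", "check", "navigation"}

@dataclass
class ResponseRecord:
    question_id: int
    save_type: str
    answer: str
    feedback: str
    is_correct: bool
    grading_rules_matched: List[str] = field(default_factory=list)    # WordBytes only
    grading_rules_displayed: List[str] = field(default_factory=list)  # WordBytes only
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

def save_response(log: List[ResponseRecord], payload: dict) -> ResponseRecord:
    """Validate one AJAX payload and append it as a new record.
    Records are only appended, never overwritten, so every check,
    submit, and navigation event survives for later analysis."""
    save_type = payload["saveType"]
    if save_type not in VALID_SAVE_TYPES:
        raise ValueError(f"unknown saveType: {save_type!r}")
    record = ResponseRecord(
        question_id=int(payload["questionId"]),
        save_type=save_type,
        answer=payload["answer"],
        feedback=payload.get("feedback", ""),
        is_correct=bool(payload.get("isCorrect", False)),
        grading_rules_matched=list(payload.get("gradingRulesMatched", [])),
        grading_rules_displayed=list(payload.get("gradingRulesDisplayed", [])),
    )
    log.append(record)
    return record
```

Appending a new row per event, rather than updating one row per student and question, is what makes the "all submit and check answer events must be saved" requirement hold.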

3.4 Grading Rules and Feedback

We developed a grading scheme with categorization rules that automatically categorizes a student’s answer to a LabLib or WordBytes question. Each category is associated with a piece of feedback that is displayed to the student in a pop-up window. For example, in LabLib Question 8, if a student uses a drop-down to say that some fish were able to survive because “they adapted quickly,” which is incorrect, the system categorizes the answer as “adapted” and displays the associated feedback, “These fish are already well-suited to their environment. Adaptation is not a reason that some survive and others do not.” A detailed list of all LabLib and WordBytes answer categories, grading rules, and feedback is available in Appendix B.

3.4.1 LabLib Grading Rules and Feedback

Each LabLib is contained in its own JavaScript file, which holds its own categories/feedback object. This object can be generated with the LabLib Visual Feedback Editor and must be pasted into the proper location in that file. Because LabLibs typically have at most four drop-downs with at most four options each (at most 4^4 = 256 combinations), creating feedback for every combination of answers is feasible.⁴
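The sketch below shows why exhaustive coverage is tractable for a LabLib: every combination can be enumerated and checked against the categories. It assumes a hypothetical Question-8-sized LabLib of four drop-downs with four options each, and represents a category as a pattern with None as a wildcard. That representation is an assumption for illustration, not the format of the object produced by the Feedback Editor, and the option indices are made up.

```python
from itertools import product

# Hypothetical LabLib at the size limit named in the text:
# 4 drop-downs, 4 options each, options identified by index 0-3.
NUM_DROPDOWNS, NUM_OPTIONS = 4, 4
ALL_COMBOS = list(product(range(NUM_OPTIONS), repeat=NUM_DROPDOWNS))  # 4**4 = 256

# Each category is a pattern; None is a wildcard meaning "any option here".
# Option indices are illustrative, not the real Question 8 layout.
CATEGORIES = [
    ("correct", (0, 0, 1, 3)),
    # second drop-down, option index 2 assumed to read "they adapted quickly"
    ("adapted", (None, 2, None, None)),
]

def matches(pattern, combo) -> bool:
    return all(p is None or p == c for p, c in zip(pattern, combo))

def categorize(combo, categories=CATEGORIES, default="uncategorized") -> str:
    """Return the first category whose pattern matches the selection."""
    for name, pattern in categories:
        if matches(pattern, combo):
            return name
    return default

# Combinations that still need a category (or a deliberate default).
uncovered = [c for c in ALL_COMBOS if categorize(c) == "uncategorized"]
```

With only two categories, one wildcard pattern already covers 64 of the 256 combinations, which illustrates why a handful of grouped categories, rather than unique feedback per combination, is usually enough.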

3.4.2 WordBytes Grading Rules and Feedback

For WordBytes, generating grading rules and feedback is more complicated. Students drag and drop tiles to form their answer, which can result in hundreds of thousands of tile permutations. Because it would be infeasible to manually categorize every permutation, the system offers two ways to define a grading rule for a WordByte.

³ Even if multiple rules are matched, only one piece of feedback is shown to the student. gradingRulesDisplayed is an array to allow the flexibility to display multiple pieces of feedback in the future.

⁴ In practice, it is not necessary to write unique feedback for every single combination of answers,

First, inside a model.js file, one can define a knownAnswer, which is a hard-coded answer string associated with a particular category. For example, we can hard-code a correct answer, “In this environment with large distances between food sources, fish with higher energy reserves were more likely to make it to the next algae clump and eat and reproduce before dying of starvation,” and associate it with the category CorrectHigherEnergyReserves, as shown in Figure 3-3. Answers that match this category are given the feedback “Good! Fish that had higher energy reserves were more likely to make it to the next clump of algae, and eat and reproduce before dying of starvation.” An example of the categories with their associated feedback is shown in Figure 3-4.

Figure 3-3: Example of Known Answers for WordBytes Question 8

Second, inside the same model.js file, one can define a JavaScript regular expression that, if it matches an answer, causes a specified category to be applied. For example, to detect that an answer contains the tile “adapt quickly,” one can use the expression ans.ans.match(/adapt quickly/), as shown in Figure 3-5. For each such rule, one can also specify whether the system should stop grading upon a match via a stopGradingIfMatch variable. By default, stopGradingIfMatch is set to false, which allows the system to match multiple rules.

Figure 3-4: Example of Grading Categories for WordBytes Question 8

To grade an answer, the WordBytes grading system first checks whether the answer matches any of the known answers. If so, the feedback associated with the first matched category is shown to the student. If not, the system checks whether any of the regex rules are matched and displays the feedback associated with the first matched category. If the answer matches neither a known answer nor a regex rule, a default message, “We’re unable to automatically provide feedback for your answer. Please re-read your answer and submit or check it again when you’re ready,” is shown. To minimize complexity, both student answers and known answers are stripped of linking words (such as “and,” “they were,” and “so”) and punctuation before being graded. We also implemented rules to detect whether an answer was too long or too short.

Figure 3-5: Example of Regular Expression Grading Rules for WordBytes Question 8

Because multiple rules can be matched but only the first piece of feedback is displayed, it is important to order the rules so that higher-priority feedback is displayed first. A full list of known answers, rules, and categories is available in Appendix B.3.
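The grading order described above can be sketched in Python, with re.search standing in for JavaScript's String.prototype.match. This is an illustration under several assumptions, not SimBio's WordBytes.js logic: the linking-word list contains only the three examples quoted in the text, lower-casing is an added normalization step, regex rules are still evaluated after a known-answer match so that the matched list is complete, and the TooShort rule (at most three words) and its feedback text are hypothetical.

```python
import re
from dataclasses import dataclass

DEFAULT_FEEDBACK = ("We're unable to automatically provide feedback for your answer. "
                    "Please re-read your answer and submit or check it again when you're ready.")

# Linking words quoted in the text; the real list is assumed to be longer.
LINKING_WORDS = ["they were", "and", "so"]
LINKING_RE = re.compile(r"\b(?:" + "|".join(map(re.escape, LINKING_WORDS)) + r")\b")

def normalize(text: str) -> str:
    """Lower-case, drop punctuation and linking words, collapse spaces."""
    text = re.sub(r"[^\w\s]", " ", text.lower())
    text = LINKING_RE.sub(" ", text)
    return " ".join(text.split())

@dataclass
class Rule:
    category: str
    pattern: str                          # Python regex standing in for the JS regex
    stop_grading_if_match: bool = False   # mirrors stopGradingIfMatch

def grade(answer, known_answers, rules, feedback):
    """Return (categories matched, category displayed, feedback shown)."""
    norm = normalize(answer)
    matched = []
    # 1. Known answers take priority; compare normalized text exactly.
    for known_text, category in known_answers:
        if normalize(known_text) == norm:
            matched.append(category)
            break
    # 2. Regex rules, in priority order.
    for rule in rules:
        if re.search(rule.pattern, norm):
            matched.append(rule.category)
            if rule.stop_grading_if_match:
                break
    if not matched:
        return [], None, DEFAULT_FEEDBACK
    shown = matched[0]  # only the first match's feedback is displayed
    return matched, shown, feedback[shown]

KNOWN = [("In this environment with large distances between food sources, fish with "
          "higher energy reserves were more likely to make it to the next algae clump "
          "and eat and reproduce before dying of starvation",
          "CorrectHigherEnergyReserves")]
RULES = [
    Rule("TooShort", r"^\S+(?: \S+){0,2}$", stop_grading_if_match=True),  # hypothetical
    Rule("Adapted", r"adapt quickly"),
]
FEEDBACK = {
    "CorrectHigherEnergyReserves": "Good! Fish that had higher energy reserves were more likely "
                                   "to make it to the next clump of algae, and eat and reproduce "
                                   "before dying of starvation.",
    "Adapted": "These fish are already well-suited to their environment. "
               "Adaptation is not a reason that some survive and others do not.",
    "TooShort": "Your answer looks too short. Try adding more detail.",  # hypothetical
}
```

Rule order matters here exactly as the text warns: for the answer "They adapt quickly," the TooShort rule fires first and stops grading, so the Adapted feedback is never considered.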

3.5 Analysis Tools

To assist with data analysis, we implemented the following features in an Analysis application contained within the larger Questions Platform project.

3.5.1 Classroom Status Page

The Classroom Status page, shown in Figure 3-6, provides a snapshot of a classroom’s progress in the activity. For each student, the page lists their most recently submitted answer to each question or, if none exists, their most recently saved answer. The research team referred to this page during the classroom studies to gauge how far along the students were and to manage time accordingly.
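The rule for which answer the Classroom Status page shows can be written as a small selection function. The record fields (`question`, `text`, `save_type`, `timestamp`) and the `"submit"` save-type value are hypothetical names used for illustration, not the Platform's schema.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class SavedAnswer:
    # Hypothetical record shape; field names are illustrative only.
    question: str
    text: str
    save_type: str  # "submit", or an auto-save type such as "navigate"
    timestamp: datetime


def status_answer(history, question):
    """Most recent submitted answer, else most recent saved answer, else None."""
    rows = [a for a in history if a.question == question]
    submitted = [a for a in rows if a.save_type == "submit"]
    pool = submitted or rows  # fall back to auto-saved answers
    return max(pool, key=lambda a: a.timestamp) if pool else None
```

Note that a later auto-save does not replace an earlier submission: submitted answers always take precedence.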

3.5.2 Student Answers Page

The Student Answers page, shown in Figure 3-7, contains, for each student, a list of every answer they have submitted to the Questions Platform. Each answer entry contains a timestamp, the save type, and the student’s answer. For answers to LabLib or WordBytes format questions, the feedback and whether the answer was correct are displayed as well.

3.5.3 Free Response Categorization/Grading System

The activity contains a Pre and a Post question designed to assess students’ understanding of evolution. By comparing the conceptions or misconceptions a student expressed in their answer to the Post question with those expressed in their answer to the Pre question, we can determine whether the student’s understanding improved from doing the activity.

Because the Pre and Post questions are in the Free Response format, manual categorization and grading are needed to determine the conceptions expressed.5

We created a tool to allow human graders to do this categorization online. For each larger umbrella category, such as ‘AnswerQuality,’ graders apply sub-categories, such as ‘No meaning,’ ‘Vague,’ or ‘Specific,’ by clicking a checkbox, as shown in Figure 3-8. Their selections are automatically saved and tallied by the system, which eliminates the need to deal with spreadsheets and manually merge results from different graders. On the front end, each time a checkbox is clicked, a two-second timer begins. If the grader selects another checkbox in the same umbrella category before the two seconds expire, the timer is reset. Otherwise, if there is no other activity within the two seconds, an AJAX call is made to the Questions Platform to save the grader’s current selections. Waiting two seconds before saving the state minimizes unnecessary calls to the database. A sample results page is shown in Figure 3-9.

5We did also run these answers through EvoGrader, as described in Section 5.4.1, but determined that hand-grading was also needed for more precision.

Figure 3-6: Excerpt from Classroom Status Page

Figure 3-7: Excerpt from Student Answers Page
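The two-second save delay is a debounce. The Platform implements it in front-end JavaScript; the Python sketch below only simulates the timing rule over a list of click timestamps so that its behavior can be checked. It assumes each umbrella category keeps its own timer, which the text implies but does not state outright.

```python
def debounced_save_times(clicks, delay=2.0):
    """Simulate the grading page's save timer.

    clicks: list of (time_in_seconds, umbrella_category), sorted by time.
    Each umbrella category keeps its own timer (an assumption): a click
    restarts that category's timer, and a save fires `delay` seconds after
    the last click in a burst. Returns (save_time, category) pairs in time order.
    """
    last_click = {}
    saves = []
    for t, category in clicks:
        previous = last_click.get(category)
        if previous is not None and t - previous >= delay:
            # The earlier timer expired before this click, so it already saved.
            saves.append((previous + delay, category))
        last_click[category] = t
    # Timers still running after the final click eventually fire too.
    saves.extend((t + delay, category) for category, t in last_click.items())
    return sorted(saves)
```

For example, three clicks in ‘AnswerQuality’ at 0.0, 1.0, and 1.5 seconds produce a single save at 3.5 seconds rather than three separate database calls.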

On the backend, an instance of FreeResponseStudentAnswerCategory is created for each umbrella category. The grading_response view function first checks whether a FreeResponseStudentAnswerCategory instance already exists for the answer being graded. If it does, its categories attribute is updated with the passed-in categories. Otherwise, a new instance is created.

The FreeResponseStudentAnswerCategory model contains the following attributes:

• categories: an Array of sub-categories applied

• category_name: the String name of the umbrella category

• grader: a Foreign Key to the grader’s User account instance

• student_response: a Foreign Key to the FreeResponseStudentAnswer instance being graded

• iteration: a String that describes the current round of grading, e.g. ‘Round1.’ This allows multiple passes through the data with different coding schemes to be kept separate.
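The update-or-create behavior of the grading_response view can be sketched without Django. The dataclass below mirrors the attributes listed above, with plain values standing in for the Foreign Keys and a dictionary standing in for the database table. Keying a record on (student response, umbrella category, grader, iteration) is an assumption; in Django itself this pattern is usually expressed with the model manager's `update_or_create` method.

```python
from dataclasses import dataclass, field


@dataclass
class FreeResponseStudentAnswerCategory:
    """Plain-Python stand-in for the Django model described above."""
    category_name: str     # umbrella category, e.g. "AnswerQuality"
    grader: str            # stands in for a Foreign Key to the grader's User
    student_response: int  # stands in for a Foreign Key to FreeResponseStudentAnswer
    iteration: str         # grading round, e.g. "Round1"
    categories: list = field(default_factory=list)  # sub-categories applied


def save_grading(store, student_response, category_name, grader, iteration, categories):
    """Update the existing record for this key if there is one, otherwise create it.

    store: dict acting as a hypothetical in-memory table.
    """
    key = (student_response, category_name, grader, iteration)
    record = store.get(key)
    if record is None:
        record = FreeResponseStudentAnswerCategory(
            category_name, grader, student_response, iteration
        )
        store[key] = record
    record.categories = list(categories)
    return record
```

Including the grader and iteration in the key is what lets two graders, or two coding passes, categorize the same answer without overwriting each other.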

Figure 3-8: Sample Grading Page

Figure 3-9: Sample Grading Results Page

Chapter 4

Classroom Study

This chapter discusses the materials used in the study, our experimental procedure, and the iterative changes made to the Questions Platform to improve the student experience.

4.1 Something’s Fishy Activity

The LabLib and WordBytes question formats are delivered to students in the form of an activity about evolutionary biology, titled Something’s Fishy. In the activity, students learn about two factors that contribute to evolution (genetic drift and natural selection) by observing a simulation of a fish pond population that changes over time.1

The activity has three components: a paper packet that guides students through three experiments involving the fish pond; the online StarLogo Nova simulation; and the Questions Platform, which delivers selected questions from the activity online in Free Response, LabLib, and WordBytes formats. Students are directed to write all their answers in the paper packet, with the exception of the questions described in Section 4.2.

1In the activity, genetic drift is defined as “a process by which the genetic traits in a population change due to the random chance survival and reproduction of particular individuals.” Natural selection is defined as “a process by which individuals with certain genetic traits are more likely to survive and pass on those traits to their offspring, making those traits more common in the population.”

Experiment 1, shown in Figure 4-1, familiarizes students with the simulation. Students observe a population of genetically identical yellow fish that swim in a pond and log the size of the fish population after running the simulation for a certain amount of time. The algae food source is evenly distributed throughout the pond. Students also examine the StarLogo Nova programming blocks to understand how the fish behave. Fish gain energy when they eat algae and expend energy to swim. They also reproduce when they have stored enough energy and die if they are too old or their energy levels reach zero.

Figure 4-1: StarLogo Nova Fish Pond Simulation: Uniform Fish

In Experiment 2, students observe a pond of multi-color fish that are genetically identical, save for color. Students run the simulation three times and record which color fish had the highest number of surviving fish. In this environment, as shown in Figure 4-2, the algae is clumped and distributed unevenly throughout the pond, and the fish are randomly placed at the start of the simulation. In Question 8, delivered by the Questions Platform, students are asked to explain why in one run of the simulation, one color fish might survive longer than another color, but see the opposite in another run of the simulation.

In Experiment 3, students observe a pond of multi-color fish that reproduce at different energy levels and pass different amounts of energy on to their offspring. For example, red fish have a “very fast” reproductive strategy that only requires

Figure 4-2: StarLogo Nova Fish Pond Simulation: Multi-colored Fish

20 energy units to reproduce and passes 8 energy units on to their offspring, while magenta fish have a “very slow” reproductive strategy that requires 100 energy units to reproduce and passes on 40 energy units to their offspring. As in Experiment 2, the algae is clumped and distributed unevenly throughout the pond, and the fish are randomly placed. In Question 13, students are asked to explain why having higher energy reserves, a trait associated with slower reproducing fish, is advantageous in this environment.
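The energy trade-off behind Question 13 can be made concrete with a few lines of arithmetic. This sketch is not the StarLogo Nova model: it uses the reproduction thresholds and inherited energies quoted above for red and magenta fish, but the swim cost of one energy unit per step and the 30-step distance to the next clump are hypothetical values chosen only for illustration.

```python
# Reproductive strategies from Experiment 3:
# (energy needed to reproduce, energy passed on to each offspring).
STRATEGIES = {
    "red": (20, 8),        # "very fast" strategy
    "magenta": (100, 40),  # "very slow" strategy
}

# Hypothetical: energy a fish spends per step of swimming.
SWIM_COST_PER_STEP = 1


def steps_until_starvation(energy, cost=SWIM_COST_PER_STEP):
    """Number of steps a fish can swim without eating before its energy reaches zero."""
    return energy // cost


def newborn_reaches_clump(color, distance):
    """True if a newborn of this color can swim `distance` steps on inherited energy alone."""
    _, inherited = STRATEGIES[color]
    return steps_until_starvation(inherited) >= distance
```

Under these assumed values, a red newborn runs out of energy after 8 steps while a magenta newborn can swim 40, so only the magenta fish reaches a clump 30 steps away. This mirrors the reasoning expected in a correct answer to Question 13.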

4.2 Questions

This section describes the six questions students are asked to answer on the Platform. The Permission, Group-size, Pre, and Post questions are presented in the same format for all students; the Pre question is referred to as the “Warm-up,” and the Post question as the “Transfer.” For Questions 8 and 13, a format is randomly assigned to each student and applies to both questions. Students write their answers to all other questions in the paper packet.

4.2.1 Permission

Students’ participation in the study is optional and anonymous, in accordance with the study protocol approved by the Committee on the Use of Humans as Experimental Subjects. When students log in to the Platform, they are asked:

Do you wish to participate in this research study by sharing your anonymous answers with the MIT STEP researchers? You can change your decision at any time during this activity using the button below. Please ask your teacher or a researcher if you have any questions.

By default, the No option is toggled, and students can opt-in by selecting Yes.

Figure 4-3: Permission Question

4.2.2 Group-size

Depending on a high school’s resources, some students had to share a computer and work in pairs; each pair was counted as a single User in the system. We stored this information so that we could separate individual and pair data for analysis.

Students are asked: Are you working by yourself or with a partner?

4.2.3 Warm-up and Transfer

The Warm-up question is a free response question used to gauge students’ understanding of evolutionary change before completing the activity. It is the first question in the activity proper, also known as the Pre question.

Figure 4-4: Group-size Question

The question was worded so that responses would be gradeable by EvoGrader [1], an online grader that uses machine learning to assess students’ understanding of evolutionary change.2

A species of fish has spiked fins. How would biologists explain how a species of fish with spiked fins evolved from an ancestral fish species where most individuals did not have spiked fins?

Figure 4-5: Warm-up Question

The Transfer question is the last question in the activity proper, also known as the Post question. It is essentially the same as the Warm-up question, with the phrase “spiked fins” replaced by “is poisonous.”

2Though we did run the answers through EvoGrader (results are discussed in Section 5.4.1), we determined hand-grading was also needed for more precision, which resulted in the creation of the grading tool described in Section 3.5.3.

A species of fish is poisonous. How would biologists explain how a species of fish that is poisonous evolved from an ancestral fish species where most individuals were not poisonous?

Figure 4-6: Transfer Question

Because the Warm-up and Transfer questions are nearly identical, comparing a student’s responses to them allows us to assess their learning gains from doing the activity.

4.2.4 Question 8

Students are randomly assigned either a Free Response, LabLib, or WordBytes format for Question 8, as described in Section 3.2.5 and Section 4.5. The text of the question is the same for all formats:

We ran the simulation twice. In our first run, blue lasted longest because more blue fish ate algae and reproduced before they starved. In the second run, red lasted longest for the same reasons. Why were more red fish able to eat and reproduce in the second run?

Figure 4-7 shows a Free Response version of Question 8. Figure 4-8 and Table 4.1 show a LabLib version. Figure 4-9 shows a WordBytes version.

Explanation of Answer A correct answer would say that in the second run, the red fish were randomly placed closer to a clump of algae, so they were more likely to bump into and eat algae and reproduce before dying of starvation. Thus, random luck was the main factor contributing to survival.

Figure 4-7: Question 8: Free Response Format

Drop-down 1 | Drop-down 2 | Drop-down 3
they were randomly placed closer to algae | their random movements led them to more algae clumps | random luck
they swam fastest | were able to cover more territory | which color was better
they adapted quickly | passed their adaptations on to their offspring | how hard the fish fought
they had better traits | had greater stomach capacity |

Table 4.1: Question 8: LabLib Answer Choices

Figure 4-9: Question 8: WordBytes Format

4.2.5 Question 13

As with Question 8, students are randomly assigned either a Free Response, LabLib, or WordBytes format for Question 13. The text of the question is the same for all formats:

We ran the simulation many times. (Remember, in this model, the clumps of algae are spaced far apart, and fish colors represent different reproductive strategies.) Turquoise and magenta, the colors representing slower reproductive strategies, tended to last longer in most of the runs. Slower-reproducing fish tend to have higher energy reserves, which we believe is what caused the difference in survival. Why were higher energy reserves advantageous in this environment?

Figure 4-10 shows a Free Response version of Question 13. Figure 4-11 and Table 4.2 show a LabLib version. Figure 4-12 shows a WordBytes version.

Explanation of Answer A correct answer would say that in this environment with large distances between algae clumps, fish with higher energy reserves were more likely to make it to the next algae clump and eat and reproduce before dying of starvation. Thus, the trait of higher energy reserves makes these fish better suited to their environment, leading to their survival.

Figure 4-11: Question 13: LabLib Format

Drop-down 1 Drop-down 2 Drop-down3

higher energy reserves higher energy reserves reproduce lower energy reserves higher energy reserves grow bigger faster adaptations faster adaptations adapt faster

Table 4.2: Question 13: LabLib Answer Choices

4.3 Study Procedure

We recruited six biology teachers from three high schools in the Greater Boston area, who together taught 17 introductory biology classes.

Each classroom study took place over two normal class periods, either over two different days or during one double block.

Students were asked to complete the Something’s Fishy activity and instructed to write all their answers in the packet, except for the Warm-up question, Question 8, Question 13, and the Transfer question, which were to be answered online on the Questions Platform. Students were allowed to discuss the activity and compare answers with other students, but were told all answers should be their own.

At the beginning of the class period, a member of the research team introduced the study, explained the concepts of natural selection and genetic drift, and demonstrated how to open and use the StarLogo Nova fish simulation. Students were instructed not to write their names on the packet to preserve their anonymity.

During the activity, members of the research team walked around the classroom to answer students’ questions.

At the end of the activity, a member of the research team led the students through a class discussion on their results, reinforcing the concepts of genetic drift and natural selection. The researcher discussed the students’ answers to Questions 8 and 13 as well as the interplay between genetic drift and natural selection in the evolution of the fish population.

Students used desktop or laptop computers provided by their high school to complete the activity. Depending on the availability of computers, students worked in pairs or by themselves, with one student or one pair to a computer. Pairs were given one packet and counted as one participant in the Questions Platform.3 To maximize the number of data points, we asked students to work individually and only double up as needed.

3The Questions Platform asks students if they worked by themselves or with a partner, and

In total, 319 participants,4 all in 9th or 10th grade, opted in to share their data with the research team. Some classrooms had already seen a lesson about evolution, while others were being introduced to the concept during our study.

4.4 Participation and Compensation

For students, choosing to share their data—their written answers on the Something’s Fishy activity packet and their online answers on the Questions Platform—with the research team was optional. Students who declined to share their data were given a version of the activity packet that contained no online component for the Warm-up, Question 8, Question 13, and Transfer questions. These students were asked to write all their answers in their packet, which was handed in directly to the teacher after completion.

All students, regardless of whether they shared their data or not, were required to complete the activity as part of their regular classwork.

Teachers were asked to complete the Something’s Fishy activity before the date of the classroom study to familiarize themselves with the lesson. They were compensated $200 for each participating class, up to three classes. Students were not compensated for their participation.

4.5 Preparation

Before each classroom study, we assigned the needed number of Classrooms to Teachers in the Questions Platform, as described in Section 3.2.4, so that students’ answers would be associated with the correct teacher.

All activity packets were printed and prepared beforehand. We printed self-adhesive labels containing login ID, password, and classroom ID information and affixed them to the packets. The labels were created using Microsoft Word’s Mail Merge feature.

4A participant is either a single student, or a pair of students. Essentially, one packet per

Because the Questions Platform assigns a question format based on the student’s seven-digit ID, it is up to the research team to manually ensure that the IDs selected from the 1000 pre-created accounts result in a balanced distribution. In most cases, we knew the number of students in a classroom beforehand, so we were able to select IDs appropriately.
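Selecting IDs for a balanced classroom can be sketched as a round-robin over formats. The Platform's actual ID-to-format rule is the one described in Section 3.2.5 and is not reproduced here; `format_for_id` below uses a hypothetical modulo-3 rule as a stand-in, and `pick_balanced_ids` is an illustrative helper, not part of the Platform.

```python
from itertools import cycle

FORMATS = ["Free Response", "LabLib", "WordBytes"]


def format_for_id(student_id):
    # Hypothetical stand-in for the Platform's ID-to-format rule (Section 3.2.5).
    return FORMATS[student_id % len(FORMATS)]


def pick_balanced_ids(available_ids, class_size):
    """Choose class_size unused IDs, cycling through formats so counts differ by at most one."""
    by_format = {f: [] for f in FORMATS}
    for sid in sorted(available_ids):
        by_format[format_for_id(sid)].append(sid)
    chosen = []
    for fmt in cycle(FORMATS):
        if len(chosen) == class_size:
            break
        if not by_format[fmt]:
            raise ValueError(f"ran out of IDs for {fmt}")
        chosen.append(by_format[fmt].pop(0))
    return chosen
```

Whatever the real mapping is, the same idea applies: group the remaining IDs by the format they will receive, then draw from the groups in turn.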

Later iterations of the labels had a small shape printed on them to identify the assigned question format. This helped the research team distribute the different question formats evenly throughout the classroom, reducing the potential for copying.

Finally, we created a spreadsheet for teachers listing all student IDs in each classroom. Teachers wrote students’ names next to their IDs so they could match packets to student names for grading purposes without needing to write down each seven-digit ID. This mapping was never shared with the research team; this way, the answers collected by the Platform were anonymous, but teachers could still grade them and give students credit.

4.6 Iterations on Questions Platform

During the study, the research team was on the lookout for issues with the Platform and improvements to be made. This section describes the changes to the Platform as the study progressed.

4.6.1 Iteration 1

After the first classroom study, we made the following usability improvements to the Platform. These changes were deployed to all subsequent classroom studies.

Remove Pagination We removed the links to the next and previous question on each page because their presence created a confusing experience for the students. After completing the Warm-up question, students instinctively clicked ‘Next question’ and were directed to Question 8, and then again to Question 13 and the Transfer question, bypassing the rest of the questions in the paper packet. To fix this, we redirected students to the Index page upon submitting an answer. The Index page contains links to all questions, which signals to students that they should access a question only when they have reached that part of the activity.

Classroom Status Page In the first classroom study, the only way for us to tell how far students had progressed in the activity was to walk around and inspect their packets, an inefficient process.

To help with time management, we created a Classroom Status page, as described in Section 3.5.1, that lists each student’s most recently submitted or saved answer to each question.

Confirm Answer Submission After a student clicked the ‘submit’ button to submit their answer, the original Platform did not display any confirmation that the answer had indeed been saved. This created uncertainty in the students’ minds and led them to keep clicking the submit button in the hope of receiving a confirmation. To fix this, we updated the Platform to display a pop-up confirmation window after each submission.

Automatically Save Free Response Answers The first iteration of the Platform automatically saved students’ LabLib and WordBytes answers if they navigated away from the page without saving. For Free Response questions, however, we had expected students would reliably use the submit button to save their answers. We found this was not the case in the first classroom study, so we updated the Platform to save Free Response answers automatically upon navigation as well.

4.6.2 Iteration 2

Add in WordBytes Feedback Due to time constraints, we were not able to provide WordBytes feedback for students who participated in classroom studies at our first high school. In this iteration, we added grading and feedback for the WordBytes versions of Questions 8 and 13. A detailed list of all grading rules and feedback can be found in Appendix B.3.

4.6.3 Iteration 3

Add Link to Simulation To save time during the class period, we added a link to the StarLogo Nova simulation in the Questions Platform header. This saved students the need to navigate to the StarLogo Nova homepage and search for the correct Something’s Fishy activity.

New Categories for WordBytes feedback After the first round of classroom studies with WordBytes feedback, we were able to provide feedback for 96% of all student answers. To improve our coverage rate, we added several new grading rules.

We also made minor changes to the phrasing of existing feedback.

4.7 Iterations on Something’s Fishy Activity

The Something’s Fishy activity was shortened to make it easier for students to finish within the allotted time. The final version of the activity can be found in Appendix A. Some instructions were also reworked to be clearer to students. The StarLogo Nova fish simulation interface was also updated to remove unnecessary buttons and consolidate multiple actions into a single button, making the UI easier for students to understand.

Chapter 5

Results

This chapter presents a preliminary analysis of the data from the classroom study. In addition to completion rates, learning gains, and student engagement, which are discussed here, the dataset presents many avenues for future analysis, some of which are discussed in Section 6.3.

5.1 Participant Demographics

In total, 319 participants, all 9th or 10th grade students in Introductory Biology classes (some honors, some regular), opted in to share their data. These participants came from three different high schools in the Greater Boston area, spanning six teachers and 17 classrooms, as shown in Table 5.1.

Population | Count
High Schools | 3
Teachers | 6
Classrooms | 17
Participants | 319

5.2 Question Format Assignment

We assigned each student a question format to achieve a balanced distribution of Free Response, LabLib, and WordBytes responses for Questions 8 and 13.

Question Format | Number of Students | Percentage (out of 319)
Free Response | 113 | 35.4%
LabLib | 104 | 32.6%
WordBytes | 102 | 32.0%

Table 5.2: Question Format Assignment Distribution

The actual distribution is shown in Table 5.2. Deviation from a perfectly balanced distribution can be attributed to students opting out of sharing their data or being absent on the day of the study.

5.3 Completion Rates

5.3.1 Completion Rates by Question

Of the 319 participants, 95.3% completed the Warm-up question, 80.3% completed Question 8, 67.7% completed Question 13, and 61.4% completed the Transfer question, as shown in Table 5.3. Assuming students worked on the activity in order, we can infer that 61.4% completed the activity up to the Transfer question.

Question | Free Response | LabLib | WordBytes | Total | Percent (out of 319)
Warm-up Question | | | | 304 | 95.3%
Question 8 | 75 | 93 | 88 | 256 | 80.3%
Question 13 | 61 | 81 | 74 | 216 | 67.7%
Transfer Question | | | | 196 | 61.4%

Table 5.3: Completion Rates by Question

A submission is counted as complete if the student clicked the “submit” button at least once on the question. However, this does not necessarily mean the student actually answered the question, as they could have left the placeholder answer in or submitted a blank answer.

At the same time, some students may have typed an answer to a Free Response question but not clicked submit, so their answers are not counted as complete. In some cases, however, these answers can still be recovered and analyzed. For Free Response questions, in all but three classrooms, the system saves student answers when students navigate away from the page (or click submit). For LabLibs and WordBytes, the system saves students’ answers when they navigate away from the page, click submit, or check an answer.

5.3.2 Completion Rates by Question Format

The data shows that for both Questions 8 and 13, the completion rate was highest for the LabLib format and lowest for the Free Response format, with WordBytes in the middle, as shown in Tables 5.4 and 5.5.

For Question 8, the completion rate for Free Response trailed LabLib’s rate by 23.0 percentage points and WordBytes’ rate by 19.9 percentage points.

The trend is consistent for Question 13, where the completion rate for Free Response trailed LabLib’s rate by 23.9 percentage points and WordBytes’ rate by 18.5 percentage points.

This suggests that students who encountered the LabLib and WordBytes formats were more likely to attempt the question and submit an answer, possibly because of the interactive nature of these formats and the scaffolding they provide.

Question 8 | Number Completed | Number Assigned | Percent
Free Response | 75 | 113 | 66.4%
LabLib | 93 | 104 | 89.4%
WordBytes | 88 | 102 | 86.3%

Table 5.4: Question 8 Completion Rates by Format

Question 13 | Number Completed | Number Assigned | Percent
Free Response | 61 | 113 | 54.0%
LabLib | 81 | 104 | 77.9%
WordBytes | 74 | 102 | 72.5%

Table 5.5: Question 13 Completion Rates by Format
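The rates and percentage-point gaps quoted above follow directly from the counts in Tables 5.4 and 5.5. The short script below recomputes them, rounding each rate to one decimal place before subtracting, which is what the reported gaps appear to do.

```python
# Counts copied from Tables 5.4 and 5.5: format -> (completed, assigned).
COMPLETION = {
    "Question 8": {"Free Response": (75, 113), "LabLib": (93, 104), "WordBytes": (88, 102)},
    "Question 13": {"Free Response": (61, 113), "LabLib": (81, 104), "WordBytes": (74, 102)},
}


def rate(completed, assigned):
    """Completion rate as a percentage, rounded to one decimal place."""
    return round(100 * completed / assigned, 1)


def gap_vs_free_response(question):
    """Percentage-point lead of each scaffolded format over Free Response."""
    rates = {fmt: rate(*counts) for fmt, counts in COMPLETION[question].items()}
    fr = rates["Free Response"]
    return {fmt: round(r - fr, 1) for fmt, r in rates.items() if fmt != "Free Response"}
```

Subtracting unrounded rates instead would give slightly different gaps (for example, about 23.1 rather than 23.0 points for Question 8 LabLib), so the rounding order matters when reproducing the text.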

5.4 Learning Gains

To get a preliminary assessment of learning gains, we ran students’ Pre and Post answers through EvoGrader [1], an online grading tool for analyzing written explanations of evolutionary change. The graphs in this section were generated by EvoGrader.

5.4.1 EvoGrader Results

Figures 5-1, 5-2, and 5-3 show the conceptions expressed by students given the Free Response, LabLib, and WordBytes question formats, respectively. Figure 5-4 provides an explanation of each of the concepts. Item 1 refers to the Pre question, and Item 2 to the Post question. For each question format, the prevalence of the naive “Adapt,” “Need,” and “Use” ideas declined between the Pre and Post question, which suggests students’ misconceptions decreased. However, the trends for the three formats are similar and do not suggest significant differences in learning gains between students assigned to Free Response, LabLib, and WordBytes questions. It is possible that the effect size is very small and that more precision is needed to detect it. To this end, we plan to manually categorize student answers using the grading tool described in Section 3.5.3 to see whether even a small effect exists.

Figure 5-1: Conceptions in Pre/Post Question Expressed by Free Response Students

Figure 5-3: Conceptions in Pre/Post Question Expressed by WordBytes Students

Figure 5-4: Explanation of Key and Naive Concepts

5.5 Student Engagement

One measure of student engagement with a LabLib or WordBytes question is whether students checked their answer and changed it in light of the feedback. In this section, we discuss how many checks students used on each question and of the students who checked an answer, how many of them eventually submitted a correct answer.
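The two engagement measures can be defined precisely over a per-student event log. The log format below, and the reading of “started with an incorrect answer and reached a correct answer” as “first check incorrect, final submission correct,” are assumptions for illustration. Under them, Table 5.6 corresponds to used_check / completed, and Table 5.7 to recovered / used_check.

```python
def engagement(logs):
    """Count completed responses, students who checked, and students who recovered.

    logs: dict mapping student_id -> ordered list of (action, is_correct),
    where action is "check" or "submit" (a hypothetical log format).
    Returns (completed, used_check, recovered).
    """
    completed = used_check = recovered = 0
    for events in logs.values():
        submits = [ok for action, ok in events if action == "submit"]
        checks = [ok for action, ok in events if action == "check"]
        if not submits:
            continue  # only students who submitted count as completed responses
        completed += 1
        if checks:
            used_check += 1
            # Started incorrect (first check wrong) and ended correct (last submit right).
            if not checks[0] and submits[-1]:
                recovered += 1
    return completed, used_check, recovered
```

Students who checked but never submitted are excluded from every count, matching the use of completed responses as the base population.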

5.5.1 LabLib

For Question 8, 58.1% of students who submitted an answer used at least one check. For Question 13, that percentage was 56.8%.

LabLib | No. Students Who Used at Least One Check | No. Completed Responses | Percent
Question 8 | 54 | 93 | 58.1%
Question 13 | 46 | 81 | 56.8%

Table 5.6: Number of Students who Used at Least One Check: LabLib

Of the students who used at least one check, 29.6% started with an incorrect answer and reached a correct answer for Question 8. For Question 13, that percentage was 37.0%. This suggests that these students were able to interpret the feedback and revise their answers accordingly.

LabLib | No. Students Who Checked and Reached Correct Answer | No. Students Who Used at Least One Check | Percent
Question 8 | 16 | 54 | 29.6%
Question 13 | 17 | 46 | 37.0%

Table 5.7: Number of Students Who Reached a Correct Answer From Incorrect Answer

5.5.2 WordBytes

Six of the 17 classes did not have WordBytes feedback available to them.1 The following analysis is drawn only from the 11 classrooms, consisting of 233 students (78 LabLib, 78 WordBytes), that did have WordBytes feedback.

As seen in Table 5.8, for Question 8, 61.2% of students who submitted an answer used at least one check. For Question 13, that percentage was 62.7%.

As seen in Table 5.9, 26.9% of students who submitted an answer to Question 8 (18 of 67) started with an incorrect answer and reached a correct answer after checking. For Question 13, that percentage was 33.3% (17 of 51).

Figures 5-5 and 5-6 show the number of checks used per student for Questions 8 and 13, respectively. The graphs contain data from all 17 classrooms. For both questions, most students used 0 or 1 checks for LabLibs and WordBytes, but students tended to use more checks for WordBytes questions, suggesting higher engagement with that format.2

1Students could click the “Check Answer” button, but would be shown the message “This question does not yet have automatically generated feedback. Please re-read your answer and submit it when you’re ready.”

WordBytes | No. Students Who Used at Least One Check | No. Completed Responses | Percent
Question 8 | 41 | 67 | 61.2%
Question 13 | 32 | 51 | 62.7%

Table 5.8: Number of Students Who Used at Least One Check: WordBytes

WordBytes | No. Students Who Checked and Reached Correct Answer | No. Completed Responses | Percent
Question 8 | 18 | 67 | 26.9%
Question 13 | 17 | 51 | 33.3%

Table 5.9: Number of Students Who Reached a Correct Answer From Incorrect Answer: WordBytes

Figure 5-5: Question 8: Number of Checks Used by Student

2Anecdotally, we observed some students were very excited to get a WordBytes question correct

Figure 5-6: Question 13: Number of Checks Used by Student

5.6 Feedback Coverage Rate

Eleven of the 17 classrooms had WordBytes feedback. As shown in Table 5.10, the grading rules we wrote were able to provide feedback for the majority of checked student answers.3

After the first round of classroom studies with WordBytes feedback, we updated the grading rules to capture additional student answers. These updated rules were deployed in Round 2. Though the coverage rate for Question 13 in Round 2 is 15.2 percentage points lower than in Round 1, this rate is likely skewed by the small number of student responses to Question 13 and by a student checking the same answer multiple times. Overall, the high feedback coverage rate suggests the system is able to provide feedback for many types of student answers, though more analysis is needed to determine whether the feedback was actually topical.

3The coverage rate is based on the number of answers the grading system was able to provide feedback for. It does not necessarily mean that the feedback directly addressed the student’s line of reasoning or misconceptions. Future analysis is needed to assess whether the feedback was truly topical.

Round 1 (8 Classrooms) | Number of Checked Answers | Number of Answers with Feedback | Percent
Question 8 | 122 | 122 | 100%
Question 13 | 60 | 58 | 96.7%

Round 2 (3 Classrooms) | Number of Checked Answers | Number of Answers with Feedback | Percent
Question 8 | 38 | 38 | 100%
Question 13 | 27 | 22 | 81.5%
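The coverage rate in Table 5.10 is a simple ratio over checked answers. The sketch assumes each check is logged with the message that was displayed and counts an answer as covered when that message is anything other than the default “unable to provide feedback” text; as the footnote notes, this says nothing about whether the feedback was topical.

```python
def coverage(feedback_shown, default_message):
    """Return (answers with feedback, checked answers, percent covered).

    feedback_shown: the message displayed for each checked answer.
    default_message: the fallback text shown when no known answer or rule matched.
    """
    total = len(feedback_shown)
    covered = sum(1 for message in feedback_shown if message != default_message)
    percent = round(100 * covered / total, 1) if total else None
    return covered, total, percent
```

With 58 covered answers out of 60 checks this gives 96.7%, and with 22 out of 27 it gives 81.5%, matching the Question 13 rows above.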

Chapter 6

Future Work

On the technology side, there is future work to be done in improving the Questions Platform and the feedback system. On the research side, our data set presents many avenues of future analysis.

6.1 Extensions to Questions Platform

6.1.1 UI for Adding/Creating New Questions

Currently, adding a new LabLib or WordBytes question into the Questions Platform is a manual, technically involved process, as described in Section 3.3.

After creating a new LabLib using the LabLib editor, one must copy all the JavaScript files into the Django application, create a new HTML template that loads the correct JavaScript files, and create an instance of LabLibQuestion in the database. The process is similar for WordBytes, although there is no visual editor, so one must edit the JavaScript files directly to determine the tiles and feedback.

The Questions Platform would be improved by a UI layer that allows admin users to create new LabLibs and WordBytes and add them to the Platform automatically. This would save time and eliminate the need for technical expertise when preparing the Platform for future studies.
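One piece of such a UI layer would be generating the HTML template step automatically. The Python sketch below assumes a hypothetical `NewQuestionSpec` that an admin form might collect (a slug, the question type, and the JavaScript file paths) and emits Django template source using Django's real `{% load static %}` and `{% static %}` tags. The container `<div>`, the field names, and the file layout are assumptions; copying the JavaScript files and creating the `LabLibQuestion` row are left out because the model's fields are not given here.

```python
from dataclasses import dataclass


@dataclass
class NewQuestionSpec:
    slug: str            # hypothetical identifier, e.g. "q8_lablib"
    question_type: str   # "lablib" or "wordbytes"
    js_files: list[str]  # paths relative to the app's static directory (assumed layout)


def render_question_template(spec: NewQuestionSpec) -> str:
    """Return Django template source that loads the question's JavaScript files."""
    if spec.question_type not in ("lablib", "wordbytes"):
        raise ValueError(f"unknown question type: {spec.question_type!r}")
    # Hypothetical mount point for the question widget.
    lines = ["{% load static %}", f'<div id="{spec.slug}"></div>']
    for path in spec.js_files:
        # {% static %} resolves the path against Django's static files settings.
        lines.append("<script src=\"{% static '" + path + "' %}\"></script>")
    return "\n".join(lines) + "\n"


spec = NewQuestionSpec("q8_lablib", "lablib", ["lablib/q8/main.js", "lablib/q8/feedback.js"])
print(render_question_template(spec))
```

A real admin view would save this output under the app's templates directory and then create the database row, so that none of the three manual steps requires editing files by hand.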

6.1.2 Teacher Portal

Currently, after a classroom study ends, the research team emails the teacher a copy of their students’ online answers, one document for each classroom. The system would be improved with the creation of a “Teacher Portal” that a teacher could log in to and view their students’ answers online.
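The core of such a portal is an access rule: a teacher sees only the answers from their own classrooms, grouped per classroom as in the current emailed documents. The Python sketch below illustrates that rule with a hypothetical `Answer` record and a hypothetical `classroom_owner` mapping; in the Django application this would more likely be a queryset filter on the existing answer model, whose names are not given in this section.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Answer:
    classroom_id: str
    student_id: str
    question_id: str
    text: str


def answers_for_teacher(teacher_id: str,
                        classroom_owner: dict[str, str],
                        answers: list[Answer]) -> dict[str, list[Answer]]:
    """Group answers by classroom, keeping only classrooms owned by teacher_id."""
    visible: dict[str, list[Answer]] = defaultdict(list)
    for answer in answers:
        if classroom_owner.get(answer.classroom_id) == teacher_id:
            visible[answer.classroom_id].append(answer)
    return dict(visible)


owners = {"c1": "t1", "c2": "t2"}  # made-up classroom -> teacher mapping
answers = [
    Answer("c1", "s1", "q8", "..."),
    Answer("c2", "s2", "q8", "..."),
    Answer("c1", "s3", "q13", "..."),
]
print({room: len(rows) for room, rows in answers_for_teacher("t1", owners, answers).items()})
```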

6.2 Smarter Feedback

The WordBytes feedback system could be extended to detect when a student has encountered the same piece of feedback multiple times and give them a different piece of feedback that helps them make progress on other parts of their answer.

Currently, a student’s WordBytes answer may match multiple feedback rules, but only one piece of feedback, the one for the first matching rule, is displayed.

For example, in Question 8, suppose a student writes and checks the answer “The red fish were closer to the algae so they were more likely to bump into and eat algae.” The student would be shown the feedback, “Your answer is missing something. Can you add why the red fish were closer to the algae?” even though feedback asking how being more likely to bump into and eat algae helped the red fish population survive would also be relevant.
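The first-match behavior can be illustrated with a small Python sketch. The actual grading rules live in the question's JavaScript files and their exact form is not described here, so the `FeedbackRule` representation (required and forbidden phrases), the "because" marker, and the wording of the second feedback message are all hypothetical. The point is that the Question 8 answer above matches both rules, yet only the first is shown.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FeedbackRule:
    must_contain: tuple[str, ...]      # phrases that must all appear
    must_not_contain: tuple[str, ...]  # phrases that must all be absent
    feedback: str

    def matches(self, answer: str) -> bool:
        text = answer.lower()
        return (all(p in text for p in self.must_contain)
                and not any(p in text for p in self.must_not_contain))


def matching_feedback(answer: str, rules: list[FeedbackRule]) -> list[str]:
    """Feedback for every rule the answer matches, in rule order."""
    return [rule.feedback for rule in rules if rule.matches(answer)]


def first_match_feedback(answer: str, rules: list[FeedbackRule]) -> Optional[str]:
    """Current behavior: only the first matching rule's feedback is displayed."""
    matched = matching_feedback(answer, rules)
    return matched[0] if matched else None


RULES = [  # hypothetical rules modeled on the Question 8 example
    FeedbackRule(("closer to the algae",), ("because",),
                 "Your answer is missing something. Can you add why the red fish "
                 "were closer to the algae?"),
    FeedbackRule(("eat algae",), ("survive",),
                 "How did being more likely to bump into and eat algae help the "
                 "red fish population survive?"),
]

answer = ("The red fish were closer to the algae so they were more likely "
          "to bump into and eat algae.")
print(len(matching_feedback(answer, RULES)))  # both rules match
print(first_match_feedback(answer, RULES))    # but only the first is shown
```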

However, always showing the same piece of feedback, even when other feedback applies, can leave a student “stuck” if they repeatedly check the same answer without making progress.

An improvement to the system would keep track of how many times a student has encountered a piece of feedback in a row without making progress and randomly select another piece of relevant feedback to display when the counter exceeds a set threshold. In the above example, the system would eventually ask the student about how being more likely to bump into and eat algae helped the red fish population survive, which would give the student a chance to get closer to the correct answer.
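A minimal Python sketch of this counter-and-threshold idea follows. It assumes the grader can return the feedback for every matching rule in rule order (rather than only the first), and it approximates "without making progress" as "the same rule is still the first match on consecutive checks"; both assumptions, the class name, and the default threshold of 3 are hypothetical and would need to be checked against the real system and tuned with study data.

```python
import random
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class StuckAwareFeedbackSelector:
    """First-match feedback by default; rotate to other matches after a streak."""
    threshold: int = 3  # assumed value
    rng: random.Random = field(default_factory=random.Random)
    # student_id -> (first-matching feedback on the previous check, streak length)
    _history: dict = field(default_factory=dict)

    def select(self, student_id: str, matched: list[str]) -> Optional[str]:
        """matched: feedback for every rule the answer matched, in rule order."""
        if not matched:
            self._history.pop(student_id, None)
            return None
        default = matched[0]
        prev, streak = self._history.get(student_id, (None, 0))
        # Proxy for "no progress": the same rule is still the first match.
        streak = streak + 1 if prev == default else 1
        self._history[student_id] = (default, streak)
        alternatives = matched[1:]
        if streak > self.threshold and alternatives:
            # Randomly pick one of the other relevant pieces of feedback.
            return self.rng.choice(alternatives)
        return default


selector = StuckAwareFeedbackSelector(threshold=2, rng=random.Random(0))
for _ in range(3):
    print(selector.select("student-1", ["Why were they closer?", "How did eating help?"]))
```

In this design, once the threshold is exceeded the selector keeps offering alternatives on every check until the first matching rule changes, at which point the streak resets. Resetting the counter after each alternative is shown would be an equally reasonable choice.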

6.3 Research Questions

This thesis presented preliminary findings from the data set collected. There are many more research questions to be explored, some of which are discussed below.

6.3.1 Learning Gains Evaluation

Using results from EvoGrader, we compared the learning gains of students assigned Free Response vs. LabLib vs. WordBytes questions. A more fine-grained analysis of learning gains, in which each student’s Pre and Post answers are manually graded and categorized, is in progress. This process will use the grading tool described in Section 3.5.3.

6.3.2 Additional Measures of Engagement

In addition to counting the number of checks used by each student, we can also calculate how long each student spent thinking about their answer between successive checks, as well as how long they spent on the question overall.
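Both measures follow directly from timestamps, assuming the platform logs when a student opens a question, each check, and the final submission (an assumption; the logging schema is not described in this section). The Python sketch below uses made-up timestamps and hypothetical function names.

```python
from datetime import datetime


def intervals_between_checks(check_times: list[datetime]) -> list[float]:
    """Seconds between each pair of successive checks."""
    ordered = sorted(check_times)
    return [(later - earlier).total_seconds()
            for earlier, later in zip(ordered, ordered[1:])]


def time_on_question(opened_at: datetime, finished_at: datetime) -> float:
    """Total seconds from opening the question to the final logged event."""
    if finished_at < opened_at:
        raise ValueError("finished_at precedes opened_at")
    return (finished_at - opened_at).total_seconds()


# Made-up log for one student on one question.
opened = datetime(2000, 1, 1, 10, 0, 0)
checks = [
    datetime(2000, 1, 1, 10, 1, 30),
    datetime(2000, 1, 1, 10, 2, 0),
    datetime(2000, 1, 1, 10, 4, 0),
]
submitted = datetime(2000, 1, 1, 10, 5, 0)

print(intervals_between_checks(checks))     # [30.0, 120.0]
print(time_on_question(opened, submitted))  # 300.0
```

Very short intervals would suggest rapid guess-and-check behavior, while longer ones would be consistent with students reconsidering their answer, though interpreting them would require the manual analysis described in this chapter.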

6.3.3 Additional Feedback Evaluation

To better evaluate whether the feedback we wrote was helpful to students, we can calculate the probability that a student improves their answer, either by reaching a correct answer or by removing an incorrect component, given that they saw a piece of feedback. We can also manually go through each student answer and determine whether the feedback actually addressed the student’s line of reasoning or misconception.
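This conditional probability can be estimated per piece of feedback by looking at each pair of consecutive checks: if feedback f was shown after check i, did check i+1 improve? The Python sketch below assumes each check has already been labeled with the feedback shown, the set of incorrect components detected, and whether it was correct; the `Check` record and its fields are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Check:
    feedback_id: Optional[str]  # feedback shown after this check, if any
    incorrect: frozenset[str]   # incorrect components detected in the answer
    correct: bool


def improved(before: Check, after: Check) -> bool:
    """Correct answer reached, or at least one earlier incorrect component removed."""
    return after.correct or bool(before.incorrect - after.incorrect)


def p_improve_given_feedback(sessions: list[list[Check]]) -> dict[str, float]:
    """Estimate P(next check improves | feedback f was shown) for each f."""
    shown: dict[str, int] = defaultdict(int)
    helped: dict[str, int] = defaultdict(int)
    for checks in sessions:
        for before, after in zip(checks, checks[1:]):
            if before.feedback_id is None:
                continue
            shown[before.feedback_id] += 1
            if improved(before, after):
                helped[before.feedback_id] += 1
    return {fid: helped[fid] / shown[fid] for fid in shown}


# Made-up session: same answer rechecked, then one error fixed, then correct.
session = [
    Check("F1", frozenset({"a", "b"}), False),
    Check("F1", frozenset({"a", "b"}), False),
    Check("F2", frozenset({"a"}), False),
    Check(None, frozenset(), True),
]
print(p_improve_given_feedback([session]))  # {'F1': 0.5, 'F2': 1.0}
```

Feedback shown on a student's last check is not counted, since there is no later check to compare against. Under this definition, an answer that removes one incorrect component while adding another still counts as improved, which is a choice a fuller analysis might revisit.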