HAL Id: hal-01573852

https://hal.archives-ouvertes.fr/hal-01573852

Submitted on 10 Aug 2017


The Power of Self-evaluation based Cross-sparring in Developing the Quality of Engineering Programmes

Katriina Schrey-Niemenmaa, Robin Clark, Asrun Matthiasdottir, Fredrik Georgsson, Juha Kontio, Jens Bennedsen, Siegfried Rouvrais, Paul Hermon

To cite this version:

Katriina Schrey-Niemenmaa, Robin Clark, Asrun Matthiasdottir, Fredrik Georgsson, Juha Kontio, et al. The Power of Self-evaluation based Cross-sparring in Developing the Quality of Engineering Programmes. WEEF & GEDC 2016: 6th World Engineering Education Forum & The Global Engineering Deans Council, Nov 2016, Seoul, South Korea. pp. 158–174. (hal-01573852)

* Corresponding Author’s e-mail address: katriina.schrey@metropolia.fi

* Acknowledgement: ERASMUS+, EU, Project: 2014-1-IS01-KA203-000172

THE POWER OF SELF-EVALUATION BASED CROSS-SPARRING IN DEVELOPING THE QUALITY OF ENGINEERING PROGRAMMES

Katriina SCHREY-NIEMENMAA1*, Robin CLARK2, Ásrún MATTHÍASDÓTTIR3, Fredrik GEORGSSON4, Juha KONTIO5, Jens BENNEDSEN6, Siegfried ROUVRAIS7, Paul HERMON8

1 Department of Electrical Engineering, Helsinki Metropolia University of Applied Sciences, Finland

2 School of Engineering and Applied Science at Aston University, UK

3 School of Science and Engineering, Reykjavik University, Iceland

4 Faculty of Science and Technology, Umeå University, Sweden

5 Faculty of Business, ICT and Chemical Engineering, Turku University of Applied Sciences, Finland

6 Aarhus University School of Engineering, Denmark

7 School of IT, Institut Mines Telecom Bretagne, France

8 School of Mechanical and Aerospace Engineering, Queen's University Belfast, UK

This paper discusses how the quality of engineering education can be improved in practice through a process of sharing and critique. This new approach, which starts with a self-evaluation followed by cross-sparring with critical friends, has proven successful in initiating change. Because the focus is on quality enhancement as much as on quality assurance, making engineering education more engaging and attractive is a key consideration in the development activities that the process inspires. The approach was developed in QAEMP (Quality Assurance and Enhancement Market Place), an ERASMUS+ project involving eight European universities.

Keywords: Engineering programme development, improvement, self-evaluation, cross-sparring, quality enhancement, quality assurance

INTRODUCTION

In Higher Education today, institutions constantly try to balance the time and resources allocated to Quality Assurance (QA) and Quality Enhancement (QE). Quality assurance often dominates, as it is most closely linked to the measures that institutions and accrediting authorities use to ensure a high and consistent standard of tertiary learning provision. Quality enhancement is often confined to bespoke projects or left to the enthusiasm and energy of programme managers and individual teachers. In the European Union Erasmus+ project described in this paper, the focus is on continuous improvement, a subject very familiar to engineering practitioners. Self-evaluation is used as a tool for reflection and for finding the best possible cross-sparring partner, and the process results in effective development plans focused on producing more dynamic, engaging and effective engineering education.

OBJECTIVES

The objectives of the approach are to support continuous quality enhancement by identifying an improvement plan and by sharing working practices from different programmes at different universities. This process of sharing and critique, based on the quality assurance metrics adopted in producing the self-evaluation framework, has been shown to promote reflection and planned steps towards a more relevant and engaging engineering education provision. It also leads to a more practical quality assurance model that can sustain continuous reform between accreditation rounds.

BACKGROUND TO THE PROJECT

The European Commission Report on ‘Progress in Quality Assurance in Higher Education’ [1] underlines the importance of developing a quality culture in higher education institutions. It also points to the value of institutional evaluation, which it states “empowers academics and HEIs (Higher Education Institutions) to build curricula and to ensure their quality, avoiding the need for formal, external accreditation of each individual programme”. Responding to that statement, eight European universities:

• Reykjavik University (RU), Iceland
• Turku University of Applied Sciences (TUAS), Finland
• Aarhus University (AU), Denmark
• Helsinki Metropolia University of Applied Sciences (Metropolia), Finland
• Umeå University (Umu), Sweden
• Telecom Bretagne (TB), Institut Mines-Telecom, France
• Aston University (ASTON), United Kingdom
• Queen's University Belfast (QUB), United Kingdom

have been working together for two years to develop and pilot a practical toolbox for enhancing the quality of engineering education at the programme level. Before this project, some of the participants had worked together in two regional projects that laid the foundations for this larger one. This earlier work made the goal of the new project clear: to create a platform on which engineering programme teams can find critical friends to help develop and enhance their programmes. Before entering the platform, programmes go through a guided self-evaluation that produces the data needed to identify a suitable critical friend for the programme. Figure 1 presents an overview of the resulting platform (the QAEMP Market Place).

The complete toolbox consists of a self-evaluation questionnaire with guidelines on how to use it, a software-based pairing tool, guidelines for preparing and executing the cross-sparring exercise that follows the pairing, and, finally, encouragement to implement the development activities identified during the process.

This paper reports on the experiences of the project team. The funded project has now finished and its aims have been realised: a self-evaluation tool has been produced, together with an online Market Place where programme teams can find matched cross-sparring partners to pursue quality enhancement of their programmes.

METHODOLOGY

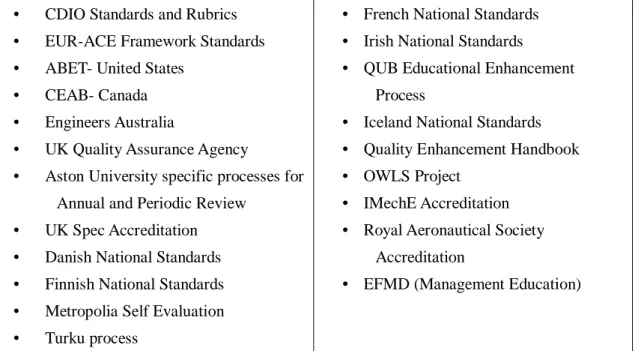

At the outset of the project, a selection of the most relevant engineering education accreditation and evaluation standards was studied to inform the development of the self-evaluation tool [2]. These included, for instance, the CDIO Standards [3] and the EUR-ACE Framework [4]. These two were particularly relevant because all the project partners were from Europe and had a strong interest in the CDIO Approach to engineering education. The other QA systems considered as part of the project are listed in Figure 2.

• CDIO Standards and Rubrics
• EUR-ACE Framework Standards
• ABET – United States
• CEAB – Canada
• Engineers Australia
• UK Quality Assurance Agency
• Aston University specific processes for Annual and Periodic Review
• UK Spec Accreditation
• Danish National Standards
• Finnish National Standards
• Metropolia Self Evaluation
• Turku process
• French National Standards
• Irish National Standards
• QUB Educational Enhancement Process
• Iceland National Standards
• Quality Enhancement Handbook
• OWLS Project
• IMechE Accreditation
• Royal Aeronautical Society Accreditation
• EFMD (Management Education)

Figure 2: The set of QA Systems considered in the development of the Self-Evaluation Tool

Based on the different questionnaires and approaches to self-evaluation in these systems, a new set of questions focusing on the enablers of excellent education was created. Questions concerning finance and management were left out, as these were deemed to fall outside the project's focus on learning and teaching. The result was a questionnaire of 28 questions. The definition of the maturity-model-based rubrics and the application of the self-evaluation have been presented earlier [2].

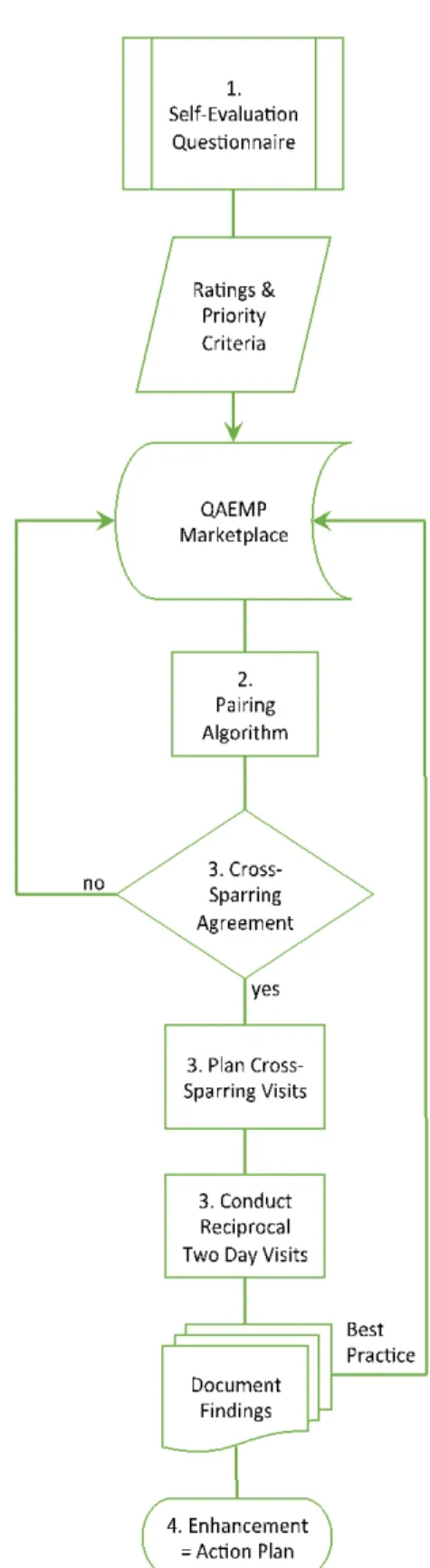

The self-evaluation results were entered into an online ‘Market Place’, which pairs participants according to their developmental priorities. After pairing, the institutions exchanged their self-evaluation reports, studied each other's background information and finally each made a site visit to its partner institution. Many valuable development ideas and suggestions for educational enhancement were identified and used to stimulate action plans for implementation [5] [6]. The whole process is illustrated in the flowchart in Figure 3.

Some additional detail on the different elements of the process is now presented.

As part of the self-evaluation process, it was important to gather the arguments and indicators that justify each maturity score. This evidence informed the development of the self-evaluation tool, and it is needed so that partners can benchmark their individual interpretations of the self-evaluation rubrics.

Paired institutions had to agree mutually that they wished to continue to the cross-sparring phase before any further work began. Plans for the visits to each of the cross-sparring partners were then drawn up jointly. This required the following:

• A review of the priority criteria and identification of the five criteria on each side to be used as the focus of the visit
• Drawing up a detailed timetable of activities to examine practice in each priority area

Travelling to each other’s institutions allowed the participants to observe first-hand how the criteria are managed and delivered, and the environment in which they operate. These visits helped the participants gain a deeper understanding and knowledge of each other's practice.

A single pro-forma document for each institutional visit was completed by both parties together to capture this new learning.

After both visits, each institution compiled an action plan for self-improvement based on their cross-sparring experience and the insights gained.

Figure 3: A flow chart illustrating the full process

CROSS-SPARRING (CS)

For the cross-sparring, the first step was the pairing of the programmes. In the pilot phase of the project two elements were considered: the priority areas for programme improvement and the rating scores that resulted from the self-evaluation exercise in each institution.

The CS is composed of four main activities:

- Initialisation – participants from each partner institution agreed on their own priority criteria for enhancement, drawn from the self-evaluation work, and on the focus, boundaries, roles, responsibilities and composition of the CS team. This activity was conducted before either of the two visits.

- Organisation and Preparation – the team details, the self-evaluation results and the visit agendas are shared and agreed, and the CS plan is produced and validated.

- Sparring – at each institution, during the visit, the partners identify evidence related to the priority criteria, best practices, challenges and potential improvement actions.

- Feedback and Development Plan – the visit findings are reported so that the action-focused objectives of the project can be embedded in each institution.

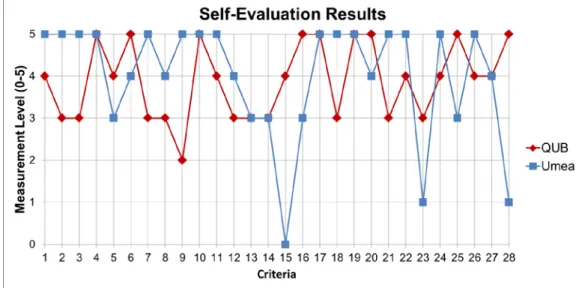

The eight participating universities formed four pairs for the cross-sparring. For example, as shown in Figure 4, Umeå University and Queen's University Belfast were paired together. Belfast chose criteria 1, 2, 7, 9 and 18 as its priority criteria, while Umeå selected criteria 5, 8, 16, 23 and 25.

Figure 4: Self-Evaluation – determining priority criteria

In most cases, institutions selected as priority areas the criteria on which they had rated themselves low against the maturity rubrics, reflecting their desire to improve their capability in those areas. In some cases the partner institutions scored the priority areas similarly, which allowed a fruitful discussion that could lead to mutual improvement.
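The paper does not describe how self-evaluation data are stored or processed. As a minimal sketch, assuming each of the 28 criteria receives an integer maturity score, the "lowest-rated first" selection described above could produce a starting shortlist of five priority criteria for a programme team to review. The function name `select_priority_criteria` and the scoring scale are hypothetical illustrations, not part of the QAEMP toolbox.

```python
from typing import Dict, List

NUM_CRITERIA = 28  # the QAEMP questionnaire has 28 questions


def select_priority_criteria(scores: Dict[int, int], k: int = 5) -> List[int]:
    """Shortlist the k criteria with the lowest self-assessed maturity score.

    Hypothetical helper: `scores` maps criterion number (1..28) to an
    integer maturity level (an assumed scale). Ties are broken by
    criterion number so the shortlist is deterministic; the result is
    returned in ascending criterion order.
    """
    if len(scores) != NUM_CRITERIA:
        raise ValueError(f"expected {NUM_CRITERIA} scores, got {len(scores)}")
    # Lowest maturity first, then lowest criterion number.
    ranked = sorted(scores, key=lambda c: (scores[c], c))
    return sorted(ranked[:k])


if __name__ == "__main__":
    # Illustrative scores only, not data from the project.
    example = {c: 3 for c in range(1, NUM_CRITERIA + 1)}
    example.update({4: 1, 11: 1, 12: 2, 20: 2, 27: 2})
    print(select_priority_criteria(example))  # [4, 11, 12, 20, 27]
```

The shortlist is only a starting point: since the paper notes that priorities followed low ratings "in most cases", a team may still swap in a criterion it considers strategically important.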

RESULTS

The idea of cross-sparring is seen as a productive way to facilitate study programme development. The pairing of the partners demonstrated the potential to generate a wider range of creative and productive ideas based on the different strengths and contexts of the institutions involved. The optimum case would see the cross-sparring sustained beyond the “one hit” such that the institutions become mutual mentors to each other and identify opportunities for ongoing collaboration and support.

One such example from the project resulted from the Aston – Turku pairing in which each institution identified the need for further work in the area of staff development and training to support the implementation of active learning in each institution. The recognition of this need has resulted in the generation of a brief for a collaborative project exploring the needs, existing resources, the generation of new resources and a delivery phase that will also encourage staff exchange.

The cross-sparring model was developed to complement the accreditation system in each context and facilitate the dissemination of best practices in quality enhancement and engineering education among HEIs. The identification of best practices takes place when the cross-sparring is conducted. Institutions come together to learn from each other as partners for a short period of time rather than as competitors. They are given the opportunity to identify their sparring-partner’s strengths and challenges free from bias and to provide more immediate feedback for development actions. An effective external collaborator (cross-sparrer) can help a partner institution (cross-sparree) reflect with greater impartiality and obtain a more objective view of its strengths and potential improvements, and at the same time identify best practices that can be useful for their own institution.

In addition to the indicators supporting the maturity rankings, the ideas and examples of best practice will be used to create the next iteration of the self-evaluation tool and the content of the QAEMP Market Place. In this way, the self-evaluation document will give additional guidance to programme teams completing the self-evaluation exercise and ensure greater consistency in the rankings produced. In addition, the online Market Place will become an archived database of good practice and evidence that users can access and reference.

Discussion is continuing on how future pairs should be matched. In future, it might be beneficial to let participating units state their preferences based not only on the evaluation criteria but also on discipline. In this pilot phase, the mismatch in disciplines between QUB and Umeå (Mechanical Engineering and Software Engineering) proved to be a benefit rather than a shortcoming, because the partners were able to focus on the features of learning and teaching without being distracted by discussions about content. The Aston – Turku pairing shared this view. On the other hand, Metropolia found it very beneficial to have Aarhus as a cross-sparring partner, as both focused on a programme in Health Care Engineering; they therefore gained useful ideas about developing the content of the programme as well as the learning and teaching processes identified in the self-evaluation. Reykjavik and Telecom Bretagne also reported benefits from having different disciplines, as the cross-sparring could then concentrate on the issues raised by the self-evaluation without pressure to consider the content of the teaching. Where Reykjavik and Telecom Bretagne did experience problems was in understanding each other’s educational systems. The differences between private and public institutions, and the general environment in which each institution operated, took time to understand and thus affected what could be accomplished in the identified priority areas.

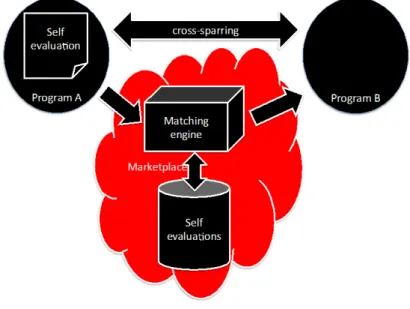

More work and experience are needed to create a fully working ‘Market Place’ that meets the needs of different programmes. Figure 5 illustrates the goal for the Market Place: a database of programmes that have submitted their self-evaluation reports and priority areas. When a new programme joins, its information is compared with the information already available, and the best match is offered to the programmes as a potential cross-sparring pair. One consideration will be how long a self-evaluation remains current, so that all potential cross-sparring pairs identified are valid.

Figure 5: Cross-sparring Market Place
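The matching logic of the Market Place is not specified in the paper. The following is a hypothetical sketch of one way it could work, assuming each entry stores its submission date, per-criterion maturity scores for all criteria and its chosen priority criteria, and assuming a two-year validity window for a self-evaluation (an illustrative value, not one set by the project). It scores a candidate pair by how far each partner is ahead of the other on the other's priority criteria, a "complementary strengths" heuristic chosen here purely for illustration; the names `Entry`, `complementarity` and `best_match` are invented.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, List, Optional, Tuple

# Hypothetical window after which a self-evaluation is no longer considered current.
VALIDITY = timedelta(days=2 * 365)


@dataclass
class Entry:
    programme: str
    submitted: date
    scores: Dict[int, int]  # criterion number -> maturity score (all criteria assumed present)
    priorities: List[int]   # criteria chosen for enhancement


def complementarity(a: Entry, b: Entry) -> int:
    """Sum of how far each partner is ahead of the other on the other's priorities."""
    a_gains = sum(max(0, b.scores[c] - a.scores[c]) for c in a.priorities)
    b_gains = sum(max(0, a.scores[c] - b.scores[c]) for c in b.priorities)
    return a_gains + b_gains


def best_match(new: Entry, pool: List[Entry], today: date) -> Optional[Tuple[str, int]]:
    """Return (programme, score) of the best current partner for `new`, or None."""
    current = [
        e for e in pool
        if e.programme != new.programme and today - e.submitted <= VALIDITY
    ]
    if not current:
        return None
    # Highest score first; ties broken alphabetically for a deterministic result.
    ranked = sorted(current, key=lambda e: (-complementarity(new, e), e.programme))
    top = ranked[0]
    return top.programme, complementarity(new, top)


if __name__ == "__main__":
    # Illustrative entries only, not data from the project.
    crits = range(1, 29)
    new = Entry("New", date(2016, 9, 1), {c: 2 for c in crits}, [1, 2, 3, 4, 5])
    pool = [
        Entry("A", date(2016, 1, 1), {c: 4 for c in crits}, [6, 7, 8, 9, 10]),
        Entry("B", date(2016, 1, 1), {c: 1 for c in crits}, [1, 2, 3, 4, 5]),
        Entry("C", date(2012, 1, 1), {c: 5 for c in crits}, [6, 7, 8, 9, 10]),
    ]
    print(best_match(new, pool, date(2016, 10, 1)))  # ('A', 10)
```

Entry "C" would score highest but is excluded because its self-evaluation is older than the assumed validity window. A real tool might also reward shared priorities, which the pilot found productive, or weigh discipline match, which the partners are still debating.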

CONCLUSIONS

In this paper, the authors have introduced a cross-sparring model and offered their initial thoughts on the strengths and weaknesses of the model and process. This paper is a continuation of the workshop [7] and paper [8] presented at the WEEF2015 conference in Florence, Italy, in September 2015. The combination of self-evaluation and cross-sparring is seen as a process that promotes reflection and dialogue and unlocks a larger range of opportunities for enhancement than if an institution were to remain inward-looking and conduct the exercise alone. The reflective, enhanced self-evaluation used in this project is a powerful and objective tool.

The overall cross-sparring principles of the QAEMP project were met: to get to know each other, to learn from and inspire each other, to be “critical friends”, and to openly evaluate and analyse rather than audit. Learning from others and sharing best practice proved to be a valuable means of improving educational quality, and thus performance. The pilot cross-sparring gave the project team the opportunity to test the models and tools created so that they can be used further in the future.

The dissemination conducted so far through presentations and active workshops has generated considerable interest, and the hope is that future funding will allow the outputs of this project to be used and developed further. Some areas of the work need further thought, in particular ensuring that the process remains practical and does not place a significant burden on programme team time and resources. Some institutions found the self-evaluation process lengthy, despite also highlighting its value in both the short and long term. Balancing visit length and cost against the priority areas to be addressed was also a topic of discussion within each pairing. The argument here is that once a visit has taken place, however short, follow-up on additional priority areas can more easily be accomplished electronically, for example through e-mail exchanges and Skype or an equivalent tool.

Embedding and committing to a plan for development within the institution is the ultimate test of the success of the QAEMP process. Each of the eight institutions has taken the first steps towards making this happen, and in some cases the relationships will likely develop around specific projects and areas of mutual developmental interest. Each institution sees its engagement in this process as contributing to its statutory QA requirements, but in a way that keeps QE firmly in the minds of all involved.

As the project concludes, engineering programmes around the world are invited to use the self-evaluation created as part of the project and then to enter their results into the online Market Place. The more programmes that do so, the greater the choice of possible cross-sparring partners for participating institutions will be. It is important, though, to invest sufficient effort in the process from the very beginning. In this project, a good combination of strengths and development areas was identified, which has provided a solid foundation for the online Market Place.

This work has been funded with support from the European Commission in the context of the 2014-2016 Erasmus+ QAEMP project (Key Action 2: Cooperation for innovation and the exchange of good practices). This paper reflects only the views of the authors. The Commission is not responsible for any use that may be made of the information contained therein. More information on the QAEMP project can be found at www.cross-sparring.eu.

REFERENCES

[1] European Commission. (2014). Report on progress in quality assurance in higher education. Report to the Council, the European Parliament, the European Economic and Social Committee and the Committee of the Regions. Retrieved from http://ecahe.eu/w/images/e/ee/EU_Report_on_Progress_in_Quality_Assurance_in_Higher_Education_%282014%29.pdf

[2] Clark, R., Bennedsen, J., Rouvrais, S., Kontio, J., Heikkinen, K., Georgsson, F., Mathiasdottir, A., Soemundsdottir, I., Karhu, M., Schrey-Niemenmaa, K., & Hermon, P. (2015). Developing a robust Self Evaluation Framework for Active Learning: The First Stage of an ERASMUS+ Project (QAEMarketPlace4HEI). Proceedings of the 43rd Annual SEFI Conference, Orléans, France.

[3] Crawley, E. F., Malmqvist, J., Brodeur, D. R., & Östlund, S. (2007). Rethinking Engineering Education: The CDIO Approach. Springer-Verlag, New York.

[4] ENAEE. EUR-ACE Framework Standards. Retrieved from http://enaee.eu/eur-ace-system/eur-ace-framework-standards/guidelines-on-programme-self-assessment-review-by-hei-and-accreditation-requirements-of-agency/

[5] Clark, R., Thomson, G., Kontio, E., Roslöf, J., & Steinby, P. (2016). Experiences on Collaborative Quality Enhancement Using Cross-Sparring Between Two Universities. Proceedings of the 12th International CDIO Conference, Turku, Finland.

[6] Bennedsen, J., & Schrey-Niemenmaa, K. (2016). Using Self-Evaluations for Collaborative Quality Enhancement. Proceedings of the 12th International CDIO Conference, Turku, Finland.

[7] Clark, R., Bennedsen, J., Schrey-Niemenmaa, K., & Rouvrais, S. (2015). Quality Enhancement in Engineering Education – A Workshop to Explore a Collaborative Framework for Continuous Improvement. WEEF2015 Conference, Florence, Italy, September 2015.

[8] Bennedsen, J., Clark, R., Rouvrais, S., & Schrey-Niemenmaa, K. (2015). Using Accreditation Criteria for Collaborative Quality Enhancement. Proceedings of the WEEF2015 Conference, Florence, Italy, September 2015.