Superpixel-based saliency detection

Related documents

With respect to our 2013 participation, we included a new feature to take into account the geographical context and a new semantic distance based on the Bhattacharyya

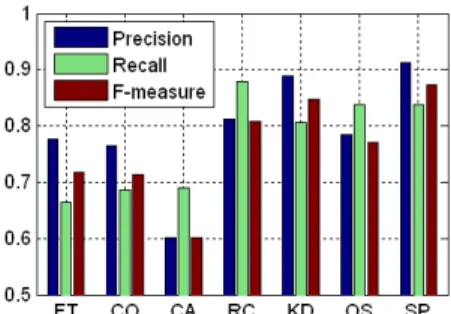

We model the colour distribution of local image patches with a Gaussian density and measure the saliency of each patch as the statistical distance from that density.
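The idea in the excerpt above can be illustrated with a minimal sketch. It is not the cited authors' method: it assumes each patch is summarised by its mean colour, fits a single Gaussian with diagonal covariance to all patch colours, and uses a variance-normalised (Mahalanobis-style) distance as the saliency score. The function name `patch_saliency` and the `eps` parameter are hypothetical.

```python
from math import sqrt
from statistics import fmean, pvariance


def patch_saliency(patch_colours, eps=1e-6):
    """Score each patch by its distance from a Gaussian fitted to all patches.

    patch_colours: list of (r, g, b) mean colours, one tuple per patch.
    Assumption: diagonal covariance, i.e. colour channels treated as independent.
    """
    # Regroup the data per channel: [(r1, r2, ...), (g1, g2, ...), (b1, b2, ...)]
    channels = list(zip(*patch_colours))
    means = [fmean(c) for c in channels]
    # eps keeps the division well defined when a channel is constant
    variances = [pvariance(c, mu) + eps for c, mu in zip(channels, means)]
    # Variance-normalised Euclidean distance of each patch from the mean colour
    return [
        sqrt(sum((v - mu) ** 2 / var for v, mu, var in zip(colour, means, variances)))
        for colour in patch_colours
    ]


# Nine near-identical background patches and one reddish outlier
patches = [(10, 10, 10)] * 9 + [(200, 30, 30)]
scores = patch_saliency(patches)
```

In this toy input the last patch receives the highest score, since its colour lies furthest from the fitted density. A full covariance matrix or per-neighbourhood densities would be natural refinements, but the excerpt does not say which variant is used.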

However, in order to resolve the issue of the deficient abstraction of the palm-print line features in the region of interest (ROI), an amended finite Radon transform with a

● Fast superpixel matching, constrained to capture the global color palette.
● Color fusion based on spatial and

In this paper, we have presented an extension of the Dahu distance to color images, which allows saliency maps to be computed for object detection purposes. We have proposed

Due to the clear superiority of CNN-based methods in any image classification problem to date, we adopt a CNN framework for the frame classification issue attached to the

We use only one algorithm called max-norm based online matrix decomposition scheme that processes each homogeneous region from one frame per time instance to separate the L and

Based on the same idea as [8], it uses the original image (before warping) to infer correctly the missing depth values. The algorithm is detailed in Sec. 2, and is compared