Combining Fast Search and Learning for Scalable Similarity

Search

by

Hooman Vassef

Submitted to the Department of Electrical Engineering and Computer Science

in partial fulfillment of the requirements for the degrees of

Bachelors of Science in Computer Science and Engineering

and

Master of Engineering in Electrical Engineering and Computer Science

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

@

Hooman

May 2000

Vasse, MM. All rijhts reserved.

The author hereby grants to MIT permission to reproduce and distribute publicly

paper and electronic copies of this thesis document in whole or in part.

A u th or ...

Department of Electrical Engineering and Computer Science

May 22, 2000

C ertified by ...

.. ...

Tommi Jaakkola

Assistant Professor

Thesis Supervisor

Accepted by ...

Artu

..

Arthur C. Smith

Chairman, Department Committee on Graduate Students

MASSACHUSETTS INSTITUTE

OF TECHNOLOGY

Combining Fast Search and Learning for Scalable Similarity Search

by

Hooman Vassef

Submitted to the Department of Electrical Engineering and Computer Science on May 22, 2000, in partial fulfillment of the

requirements for the degree of Master of Engineering in Computer Science

Abstract

Current techniques for Feature-Based Image Retrieval do not make provisions for efficient indexing or fast learning steps, and are thus not scalable. I propose a new scalable simul-taneous learning and indexing technique for efficient content-based retrieval of images that can be described by high-dimensional feature vectors. This scheme combines the elements of an efficient nearest neighbor search algorithm, and a relevance feedback learning algorithm which refines the raw feature space to the specific subjective needs of each new application, around a commonly shared compact indexing structure based on recursive clustering.

After a detailed analysis of the problem and a review the current literature, the design rationale is given, followed by the detailed design. Results show that this scheme is capable of improving the search exactness while being scalable. Finally, future work directions are given towards implementing a fully operational database engine.

Thesis Supervisor: Tommi Jaakkola Title: Assistant Professor

Acknowledgments

This work was conducted at the I.B.M. T. J. Watson Research Center, and was funded in part by NASA/CAN contract no. NCC5-305.

Contents

1 Introduction: Towards Scalable and Reliable Similarity Search 7

1.1 Objectives ... ... 7

1.2 Similarity Search: The Basic Approach . . . . 7

1.3 Towards Reliable and Scalable Similarity Search . . . . 8

1.3.1 Improving Search Time: The Need for Efficient Indexing . . . . 8

1.3.2 Improving Accuracy: The Need for a Learning Scheme . . . . 8

1.3.3 Combining efficient Indexing, Search and Learning . . . . 10

1.4 Proposed Approach . . . . 10

2 Design Rationale: Combining Indexing and Learning 12 2.1 Search Space and Metric . . . . 12

2.1.1 Objective and Subjective Information Spaces . . . . 12

2.1.2 Objective and Subjective Information Discriminated . . . . 12

2.1.3 Objective and Subjective Information Mixed . . . . 13

2.2 Designing a Dynamic Search Index . . . . 13

2.2.1 The Need for Clustering . . . . 13

2.2.2 The Need for Hierarchy . . . . 13

2.2.3 The Need for Flexibility . . . . 14

2.3 Designing a Scalable Learning Algorithm . . . . 14

2.3.1 Insights from the S-STIR Algorithm . . . . 14

2.3.2 Reshaping The Result Set with Attraction/Repulsion . . . . 14

2.3.3 Generalization Steps . . . . 15

2.4 Fast Nearest Neighbors Search . . . . 16

2.4.1 Insights from The RCSVD Algorithm . . . . 16

2.4.2 Adapting the Search Method to a Dynamic Index . . . . 16

3 Methods 17 3.1 The Database Index . . . . 17

3.1.1 Structure of the Index . . . . 17

3.1.2 Building the index . . . . 19

3.1.3 Dynamics of the index . . . . 19

3.1.4 Auxiliary Exact Search Index . . . . 21

3.2 The Leash Learning Algorithm . . . . 21

3.2.1 Adapting the Result Set . . . . 21

3.2.2 Generalization . . . . 23

3.3 Searching for the Nearest Neighbors . . . . 26

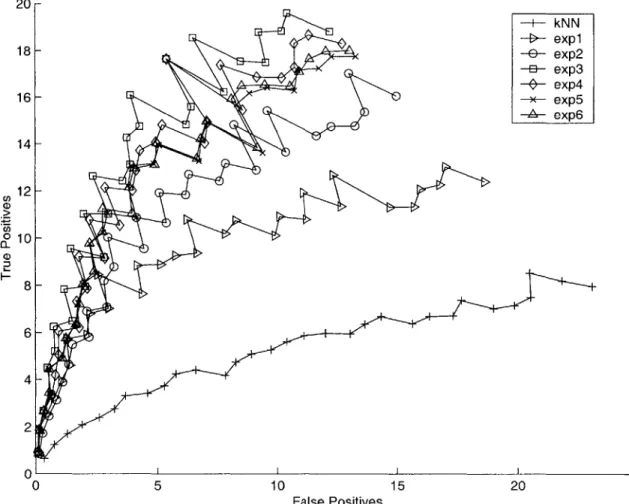

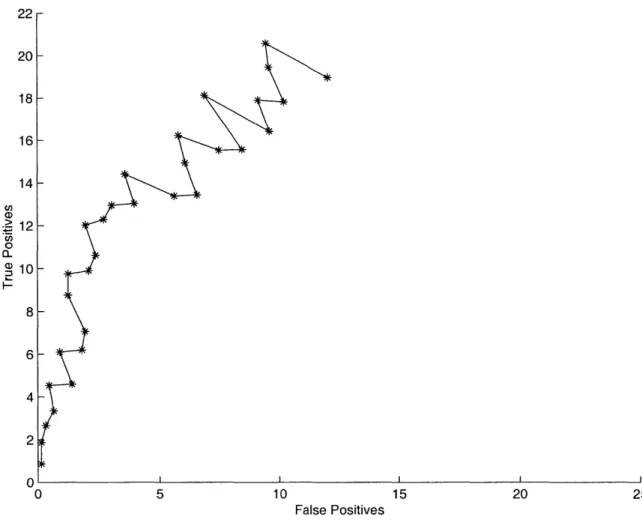

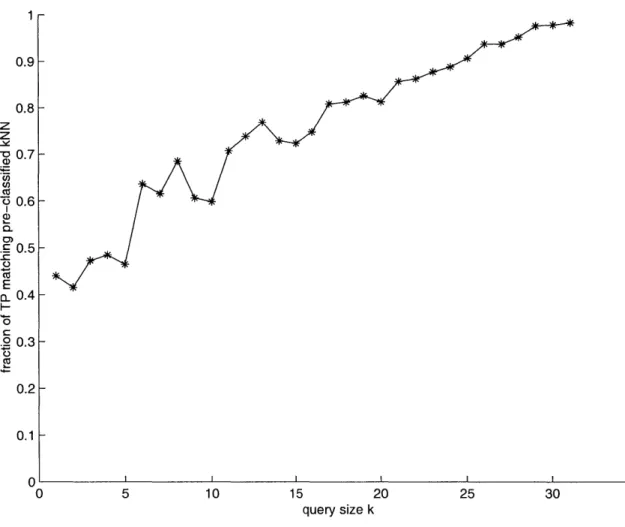

3.3.2 Collecting the Nearest Neighbors 4 Results 29 4.1 Class Precision . . . . 29 4.1.1 Experimental Setup . . . . 29 4.1.2 Motivation . . . . 29 4.1.3 Test Results. . . . . 30 4.2 Structure Preservation . . . . 30 4.2.1 Experimental Setup . . . . 30 4.2.2 Motivation . . . . 30 4.2.3 Test Results. . . . . 32 4.3 Objective Feedback . . . . 33 4.3.1 Experimental Setup . . . . 33 4.3.2 Motivation . . . . 33 4.3.3 Test Results. . . . . 33 5 Discussion 36 5.1 Contributions . . . . 36 5.2 Future Research . . . . 36

5.2.1 Improving Learning Precision . . . . 36

List of Figures

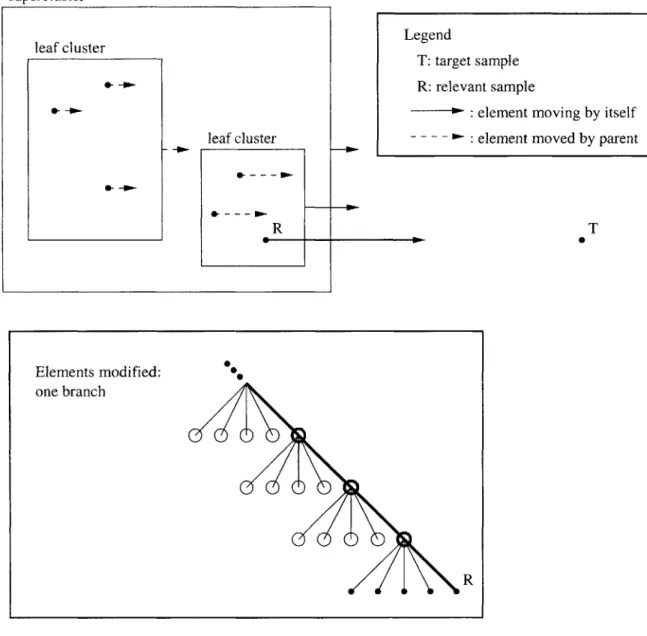

2-1 Learning Illustration: Reshaping the Result Set . . . . 15

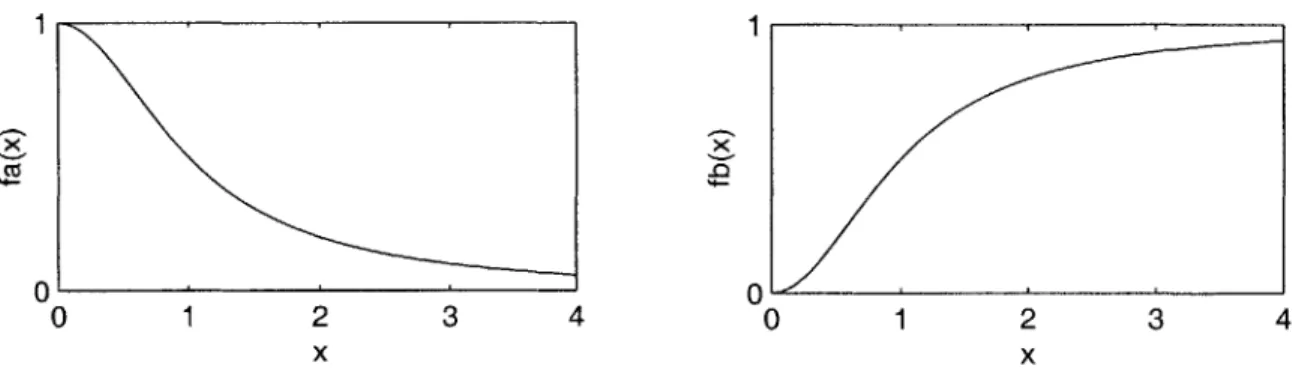

3-1 fa and fb, transition functions (here a, b = 2) . . . . 23

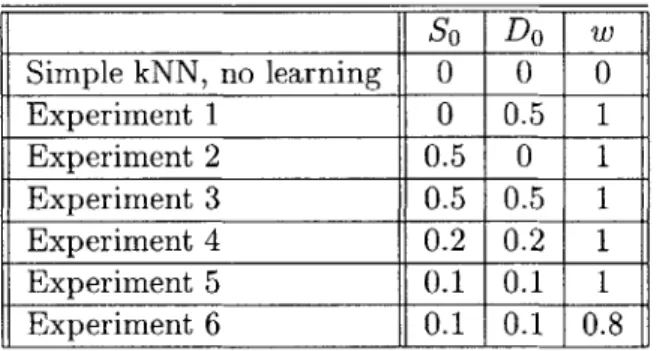

3-2 Learning Illustration: Generalization . . . . 25

3-3 Learning Illustration: Generalization Variant . . . . 27

4-1 Class Precision of the Leash Algorithm . . . . 31

4-2 Structure Preservation Results for the Leash algorithm . . . . 32

4-3 Class Precision with Objective Feedback . . . . 34

Chapter 1

Introduction: Towards Scalable

and Reliable Similarity Search

1.1

Objectives

The continuous increase in size, availability and use of multimedia databases has brought a demand for methods to query elements by their multimedia content. Examples of such databases include: a digital image archive scanned from geological core samples obtained during an oil/gas exploration process, where search for a particular rock type would help identify its strata; an archive of satellite images, where search by content would be used to identify regions of the same land cover (a particular type of terrain) or land use (a populated area, a particular kind of crop, etc.).

One approach would be to explicitly label archived elements and query them by pro-viding a description, but the more practical and popular approach is to query by example. In other words, content-based retrieval can be done by performing a search in the database for the elements which are the most similar to a query sample.

While numerous such search methods have been proposed in the recent literature, the specific purpose of this research was to design a similarity search engine which is reliable, yet scalable to arbitrarily large multimedia databases.

1.2

Similarity Search: The Basic Approach

Most of the recent methods for retrieving images and videos by their content from large archives use feature descriptors to index the visual information. That is, digital processing methods are used to compute a fixed set of features from each archived element (image, video, or other), and the element is then represented by a high-dimensional vector combining these features in the search index. Search by example then consists of a similarity search on the vector space, with as a target the feature vector corresponding to the query sample provided by the user.

In the simplest approach, this is done by performing a nearest neighbors search on the space of the feature vectors used to represent the database elements in the index. Example of such systems include the IBM Query by Image Content (QBIC) system [8], the Virage visual information retrieval system [1], the MIT Photobook [14], the Alexandria project at

UCSB [12, 2], and the IBM/NASA Satellite Image Retrieval System [16]. In these systems,

typically based upon a simple fixed and objective metric, such as the Euclidean distance or the quadratic distance [16], in order to reduce computational costs.

1.3

Towards Reliable and Scalable Similarity Search

The basic approach can be optimized towards two logical goals: making it scalable, and making it more reliable. Scalability is attained by limiting the individual query processing time and the size of the database index. Reliability is attained by improving the search accuracy. These two research directions have thus far been explored mostly in an orthogonal fashion.

1.3.1 Improving Search Time: The Need for Efficient Indexing

Naive Nearest Neighbors Search

Improving the query processing time inevitably requires a specialized database index, even for a simple nearest neighbors search. Indeed, the naive approach, which consists of go-ing through all the elements to collect the k nearest samples to the target sample, where k is the search number, requires touching every sample in the database, which for very large databases, is unthinkable. The desired query processing time, roughly equivalent in complexity to the number of touched samples, is sub-linear.

Common High-Dimensional Indexing Methods are Inadequate

Indexing methods such as B-Trees, R-Trees or X-Trees [3] have been proposed to optimize

lookup time for one particular sample vector. However, their performance for nearest neigh-bors search, while satisfactory in low-dimensional spaces, is poor in high-dimensional vector spaces, due to the "curse of dimensionality". Indeed, in a high-dimensional space, individual samples are all in relative proximity of each other, and a search of those indexes ends up therefore touching most of the samples in the database by exploring across all dimensions.

Fast Nearest Neighbors Search with RCSVD

The RCSVD index, proposed by Thomasian et al. [17], is designed specifically as a compact index meant to improve nearest neighbors search to a sub-linear time complexity. RCSVD builds an index by recursively performing a combination of clustering to reduce the search range, and uses singular value decomposition to reduce the dimensionality of the clusters, thus facilitating the nearest neighbors search within a given cluster.

1.3.2 Improving Accuracy: The Need for a Learning Scheme

Class Precision

The tasks performed on those database examples given earlier (Section 1.1) require class

precision property: in any similarity search, the result set samples should all belong to the same class (e.g. rock type, or terrain) as the query sample. This issue is trivial if the feature vector space corresponding to the database discriminates classes naturally by its topography, i.e. is such that any similarity search query will return only samples of the same class (provided the query number is less than or equal to the number of samples of that class).

However, a straight nearest neighbors search based on Euclidean distance, which is the query method of choice for its computational simplicity, is unfortunately very unlikely to yield class precision for several reasons.

First, no feature extractor is perfect, as in essence they all discard a significant amount of information. In particular, if the database images are complex such that they require a cognitive process and a certain knowledge base to interpret, it is not likely that any feature extractor will discriminate samples with a good enough approximation of human perceptual distance. Second, as mentioned in Section 1.3.1, in a high-dimensional space all samples are in relative proximity to each other, i.e. distances are not very discriminative. Thus, enhancing the feature extractors themselves to capture generally more relevant information may provide only little improvement in search accuracy, if it implies adding noisy feature dimensions to the vectors. Empirical results show indeed that a nearest neighbors search does no discriminate classes well on most high-dimensional feature vector spaces.

Many recent approaches focus instead on developing some learning algorithm which uses subjective information provided by the user, to refine some aspect of the search, either the query itself, or for example the vector comparison metric. This refinement is meant to overcome the disparity between human perceptual distance and the objective metric used to compare feature vectors, and to induce class precision.

Learning as Implicit Classification

The intended applications would then greatly benefit from a form of classification of the database samples, which would allow the similarity search to be restricted to one particular class. For example, the user may have some explicit high-level knowledge of the features used to index the archive, or similarly s/he might provide explicit subjective labels of which the database might take advantage for classification.

However, as indicated earlier in Section 1.1, in this work I consider only search by example, specifically in instances where no explicit cognitive knowledge is shared by the database and the user. This constraint is often desirable for several reasons, notably the difficulty for automatic generalization by the computer, and the impracticality of entering such labels individually by the user. Furthermore, explicit classification would be done by manually labeling all the samples, which is unrealistic in terms of manpower for very large databases.

The need for a learning scheme is therefore apparent as the remaining alternative. Using information provided by the user, a learning scheme can either modify the search method, or create a new search space reflecting both that new information and the original information of the vector space. Once again, because a nearest neighbors search is the desirable search method for optimization, I will choose the latter scheme.

Learning from Relevance Feedback

Although the user and the database share no explicit knowledge base, implicit subjective information can be provided by the user in the form of relevance feedback. This consists usually of an iterative refinement process in which the user indicates the relevance or ir-relevance of retrieved items in each individual query, where ir-relevance is equivalent to class precision.

Previously studied extensively in textual retrieval, an approach towards applying this technique to image database has been proposed recently by Minka and Picard [13]. In

this approach, the system forms disjunctions between initial image feature grouping (both intra- and inter-image features) according to both positive and negative feedback given

by the users. A consistent grouping is found when the features are located within the

positive examples. A different approach is proposed by one of the authors later and becomes PicHunter [6, 51. In PicHunter, the history of user selection is used to construct the system's estimate of the user's goal image. A Bayesian learning method, based on a probabilistic model of the user behavior, is combined with user selection to estimate the probability of each image in the database. Instead of revising the queries, PicHunter tries to refine the answers in reply to user feedback. Alternatively, an approach has also been proposed to learn both feature relevance and similarity measure simultaneously [4]. In this approach, the local feature relevance is computed from a least-square estimate, and a connectionist reinforcement learning approach has been adopted to iteratively refine the weights. A

heuristic approach for the interactive revision of the weights for the components of the feature vector has been proposed by Rui et al. [15]. An algorithm, S-STIR, based on nonlinear multidimensional scaling of the feature space has been proposed by Li et al. [10].

1.3.3 Combining efficient Indexing, Search and Learning

While all of these methods definitely provide some improvement in the accuracy of the search, none of them take into account the need for efficient updates to an index designed for fast search. Nor do they optimize the per-query learning time, which counts as part of the query processing time, because the user may provide feedback after each query.

On the other hand, the RCSVD index relies on information which is valid only in a static search space, thus inhibiting all incremental refinement of the index by relevance feedback. Consequently, RCSVD is restricted to an objective nearest neighbors search and performs with poor subjective accuracy in applications where class precision is important.

In summary, none of the previous methods are directly suitable to building a system which is both scalable and reliable. The challenge is to build an index designed to both allow for fast search, and be flexible to modifications by a relevance feedback learning algorithm. Furthermore, a small (sub-linear) time complexity for each query is equally important for the learning algorithm as it is for the search algorithm.

1.4

Proposed Approach

In this paper, I propose a new algorithm that combines a learning process similar to the S-STIR method in that it refines the feature space based on user feedback, and an ef-ficient high-dimensional indexing technique inspired by RCSVD, to address the issue of

simultaneously efficient and reliable search and learning. Specifically, the proposed method approximates the idea of S-STIR's non-linear transformation of the whole feature space,

by a series of linear transformations which affect a significant fraction of the database, yet

have a sub-linear time complexity in terms of the database size, and can thus affordably be applied after each query and make the system scalable. This learning algorithm directly affects the index structure, which is created by recursive clustering, and directly shared with the search algorithm. Cumulated learning steps will make the vector space converge to a configuration where the search space for each query can be substantially pruned.

Chapter 2 is a preliminary statement of the goals and previous work leading directly to our proposed solution. which itself is sketched in Chapter 3. The results in Chapter 4 show

the current level of reliability obtained with the system, and Chapter 5 concludes with a discussion of the current results and of future research directions.

Chapter 2

Design Rationale: Combining

Indexing and Learning

The purpose of this chapter is to walk through and justify each step of the design rationale for the proposed approach. Once again, the primary objective of this research work is to design an image search engine capable of learning from user interactions, and scalable to large databases.

2.1

Search Space and Metric

The first step in designing a search engine is to decide on the search space and metric.

2.1.1 Objective and Subjective Information Spaces

The common approach of many learning algorithms is to maintain a distinction between the objective information, namely the original space of feature vectors, and subjective infor-mation provided by the user. The subjective inforinfor-mation space could possibly consist of an unbounded record of user feedback, but that poses problems in terms of index size growth. It will preferably remain within a fixed-size representation, where the past information is weighted down to allow the accumulation of new feedback. While this effectively discards some of the resolution from previous feedback information, it is usually designed such that the overall cumulated subjective information will ideally converge towards a stable and accurate representation of the user(s)'s knowledge.

2.1.2 Objective and Subjective Information Discriminated

Given the two information spaces, one option is to design the search metric to reflect information from both spaces at the same time. However, this makes the search algorithm harder to implement and scale.

The other option is to map the two spaces onto a new vector space, on which a straight nearest neighbors search can be performed. This is notably the case for the S-STIR algo-rithm (Similarity Search Through Iterative Refinement) [10]. Specifically, S-STIR proposes a learning method where the user information is combined with the original vector space us-ing non-linear multidimensional scalus-ing. This is done by first clusterus-ing the original feature space, then mapping it to a new high-dimensional space where each dimension represents a sample's proximity to the centroid of one given cluster. This space is then scaled by a matrix

W which is effectively the subjective information space, because the "iterative refinement" process consists of adjusting the coefficients of W by relevance feedback. Specifically, W is chosen such that in the new vector spaces, the structure of each class is preserved, while the distances between elements within that class are minimized. This method ideally con-verges until the clusters reflect the subjective classes, in a space where they can be easily

discriminated.

Unfortunately, while a simple nearest neighbors search can be used to perform queries, the difficulty resides now in designing, for that new vector space, a versatile search index which would easily adapt to changes in W.

2.1.3 Objective and Subjective Information Mixed

Alternatively, one could accumulate the subjective information within the objective space, modifying the original vectors directly to reflect the user's feedback. This has the drawback that it destroys the original feature description of each sample, and results in a partial and irreversible loss of the objective information. However, if this method can still be made to converge to a stable and accurate representation of the user's knowledge (assuming the user's feedback maintains a certain coherence), the information loss is inconsequential.

I chose this approach, for it has the advantage of simplifying the search and indexing

problem, because the search space is then the same as the original space, and the metric can be as simple as Euclidean distance or quadratic distance.

2.2

Designing a Dynamic Search Index

The database index in this scalable system needs to fulfill two roles: it must provide the necessary structure and landmarks for a fast nearest neighbors search algorithm, and all the while allow a dynamic and quick modification to the whole indexed vector space to reflect the information provided by the user feedback. This section steps through the logic requirements of such an index.

2.2.1 The Need for Clustering

An index for similarity search must keep similar elements closely connected. The estimate of similarity between two elements, in any given state of knowledge of the database, is reflected in the correlations between their feature vectors. Separating elements in clusters of correlated features, as done in RCSVD, reflects the physical layout of the samples in the vector space and consequently allows to greatly prune the search space. Furthermore, regardless of the specifics of the learning algorithm, it should intuitively generalize in some way the feedback information pertinent to one sample onto other samples in the same cluster, as they are correlated.

2.2.2 The Need for Hierarchy

An unbounded and unorganized set of clusters does not facilitate search or learning. In a tree-like hierarchy of clusters, delegation is possible, a prerequisite for sub-linear search and learning time complexity. Indeed, in a hierarchy, each learning step, being always focused around one target sample, can have an impact progressing from the local environment to the global environment, following the hierarchy up along one branch. Similarly, a search

starting from the bottom of the hierarchy will remain along one branch, if the search space is adequately pruned.

2.2.3 The Need for Flexibility

While a search index is usually meant to be built with only a one-time cost and make every subsequent query efficient, this index needs to be dynamically modified to reflect the changing state of subjective knowledge of the database. These modifications, while possibly impacting the whole database, need to be achieved with little cost for each query, in order to keep the efficiency. At the top of the hierarchy, a modification should have a global effect, while at the bottom it should only have a local effect - yet both need to be achieved in constant time to achieve the logarithmic search and learning time we seek. This requires each node of the index to have some sort of control handle over all its subnodes, such that it can cause modifications to all of them in a single operation. Furthermore, the initial distribution of samples in the clusters might be found an inaccurate subdivision of the space, as this space gets modified by the learning process. Thus, this might require a dynamic transfer of samples and tree nodes across the index, possibly at each query. This process of restructuring the index must therefore also be achieved quickly at each query.

2.3

Designing a Scalable Learning Algorithm

This Section provides a high-level overview of how the learning process reshapes the vector space to reflect the user's feedback, by applying criteria learned from the S-STIR algorithm

[10].

2.3.1 Insights from the S-STIR Algorithm

The S-STIR algorithm, described in Section 2.1.2, is not suitable as a scalable learning scheme, principally because its per query learning step is super-linear in terms of the database size, and furthermore it provides no support for an efficient index. However, it uses two key concepts in the design of a learning algorithm which modifies the search space:

Intra-Class Distance Reduction

Type inclusion can be achieved if the distances between elements of the same class are reduced, making them all relatively closer to each other and farther from other classes.

Intra-Class Structure Preservation

Within one given class, samples should maintain a topography similar to that of the original vector space. Otherwise, the original objective information is completely lost and the rela-tive position and similarity measure of samples in the vector space may become nonsensical.

2.3.2 Reshaping The Result Set with Attraction/Repulsion

The scheme proposed to reshape the feature space consists of intuitive concepts: samples of the same type attract each other, samples of different types repulse each other. Intuitively again, similar samples which are already quite close together need not attract each other

Legend

T: target sample R: relevant sample

R I: irrelevant sample

T

Figure 2-1: Learning Illustration: Reshaping the Result Set

any closer. Similarly, different samples which are already quite far apart need not repulse each other any further. This process is illustrated in Figure 2-1.

The learning algorithm must thus take the form of geometrical transformations which reflect these intuitive behaviors. These transformations would modify the samples of a query result set with respect to the target sample of the query, according to the feedback given by the user. This process should reduce intra-class distance, and relatively increases the inter-class gaps. Only the samples in the result set would be touched, generally an insignificant number with respect of the database size.

2.3.3 Generalization Steps

The process just described affects only the samples in the result set. By itself, it is useless, affecting only a very small portion of a large database, and even potentially detrimental, breaking the local topography and thus disrespecting the structure preservation criterion. Ideally, the local space of samples around the query result set should smoothly follow the

movements of the samples in that set, a transformation which could be achieved nicely using for example topographical maps. However, topographical maps are computationally expensive and are not easy to index.

Instead, I propose a scheme using the hierarchy of clusters in the index to approximate such a transformation. The high-level idea (illustrated in Chapter 3, Figure 3-2), is the following: if s is a moving sample, then any cluster along the hierarchy to which s belongs should move in the same direction as s, by a fraction of the displacement of s. This fraction is inversely proportional to the cluster's weight. This scheme is meant to implement structure preservation, and generalize the impact of one query to the rest of the database, by following a simple directive: if the sample s is moving, then the samples nearby should follow it a bit to preserve the smoothness of the space, and the samples nearby are likely to be those in the same cluster as s.

In the index, the information is relative across the hierarchy (see Section 2.2.3): specif-ically, this would imply that vector coordinates are expressed in with respect of the coordi-nates of the cluster immediately above. Thus, translating all the samples in a cluster can be simply done by changing the cluster coordinates. Consequently, this generalization step can be achieved by only performing one action at each level of the hierarchy when moving up an index branch, making the process sub-linear as desired.

2.4

Fast Nearest Neighbors Search

The proposed index replicates many features of the RCSVD index which make the RCSVD search method scalable, and which we will presently describe. However, due to the dy-namic nature of the new index, some features had to be discarded, and others re-adjusted accordingly, the details of which are described in the second part of this section.

2.4.1 Insights from The RCSVD Algorithm

Briefly, the RCSVD indexing method consists of creating a recursive clustering hierarchy, which is emulated in the present design. Additionally, RCSVD uses Singular Value Decom-position to find the directions of least variance for the samples in a particular cluster, and discards them while preserving a reasonable amount of the total variance. Therefore, when those features are discarded, the new "flattened" cluster is still a good approximation of the original. When the dimensions are reduced to a sufficiently low number, the implementation of a compact index using the Kim-Park method [9] is possible. This within-cluster index allows a very fast nearest neighbors search method.

2.4.2 Adapting the Search Method to a Dynamic Index

The Kim-Park method relies on the static nature of sample vectors, and can therefore not be used in the new index. Furthermore, dimensionality reduction is also undesirable in a space where samples change constantly. These losses are compensated in scalability by pushing the hierarchy further down to include less samples in the leaf clusters of the tree. However, singular value decomposition will still be applied to keep tight hyper-rectangular boundaries for the clusters, in order to prune the search space.

Chapter 3

Methods

This chapter presents the details of the proposed approach for a scalable and reliable content-based retrieval engine. It is organized in three main sections, each describing one of the novel designs combined by this approach: a compact indexing structure, made to reflect the topographical organization of the vector space, and made flexible to facilitate changes to said vector space; a learning algorithm made to accurately translate the user's feedback on a query into changes in the vector space, and to perform those changes efficiently on the index, i.e. in sublinear time; and finally, a nearest neighbors search algorithm which makes proper use of the index structure so as to perform a query also in sublinear time.

3.1

The Database Index

The database index is a versatile structure: its purpose is to accommodate both nearest neighbors queries and changes by the learning algorithm in sublinear time. First, the structure of the index must be designed to prune the search space as much as possible for nearest neighbors queries. Second, a process has to be defined by which such an index is constructed on a database of raw feature vectors. Last, the dynamics of the index must be established to provide the learning algorithm with methods for adapting, in sublinear time, the vector space to the user's feedback, so as to improve the search accuracy without however decreasing search speed.

3.1.1 Structure of the Index

A Tree of Clusters

In order to provide a quick access to all the database samples, the they are indexed by a reasonably balanced tree hierarchy, as stated previously in Section 2.2.2. At each tree node, the subdivision of the database sample in the index must reflect their spatial distribution. Indeed, spatial locality is the basis of similarity search, therefore the search space can only be pruned if said spatial locality corresponds to locality in the index. Hence, the structure of the index is a hierarchy of clusters, much like the RCSVD index [17].

Structure of a Cluster

Each node of the index tree is a cluster. The leaf nodes of the tree (leaf clusters) di-rectly index a set of samples, while the internal node clusters (superclusters) index a set of

subclusters.

The versatility of the index originates in the versatility of its cluster nodes. Aside from the standard functions of a tree node, which are to hold index pointers to all its subnodes and a back-pointer to its parent, each cluster serves two main purposes. On one hand, as a delegate and container for all its subnodes, a cluster node captures certain useful collective information about all its subnodes, which can be checked first, and potentially avoid having to check more detailed information in its whole subtree, if the latter is found unnecessary. On the other hand, as the root of a whole subtree, a cluster node exercises certain control over its contents, which potentially avoids having to make individual modifications in each

element of the subtree, if modifications to this nodes are enough.

Standard Tree Node Contents As a standard tree node, the cluster contains a pointer

to its parent as well as pointers to its children. For a leaf cluster, children consist of a set of samples. For a supercluster, children are a set of subclusters.

In both cases, there exists a motivation to create a within-cluster index structure to allow efficient access and sorting of the set of children. An example is the Kim-Park index used inside RCSVD clusters [9, 17]. However, such an index is difficult to maintain here, because the information on which it would rely is subject to changes by the learning algorithm (in the case of the Kim-Park method, the relative location of the children). Instead, the set of children is simply indexed as a linear array, for which the access and sorting costs can remain low so long as the branching factor and the maximum leaf size are kept small enough.

Collective Information on the Cluster Contents Besides the evident centroid vec-tor and cluster weight (total number of samples in this cluster), a cluster keeps track of information pertaining to its boundaries, and the variance of its elements.

Boundaries allow the search algorithm to check whether the clusters as a whole is beyond the search radius, before trying to search its contents. Thus, these boundaries should be as tight as possible, so as to effectively prune the search space. For example, a spherical bound is inadequate given the amount of overlap it would cause with neighboring clusters, especially in a high-dimensional space. Instead, I use hyper-rectangular boundaries. While ellipsoid boundaries would be generally tighter, hyper-rectangular boundaries offer a good tradeoff between tightness and computational simplicity for a bounds check. They are recorded in the cluster by an orthonormal basis for the rectangle's directions, and a pair of boundary distance values from the cluster's centroid, in each direction of the basis.

The variance of elements in a cluster offers a representation of the cluster's context. This allows to consider a cluster element relatively to its context, and proves useful in the learning algorithm, as shown later (Section 3.2.1), as well as in index maintenance checks, to see if a particular sample or subcluster fits better in the context of another cluster. The information recorded is the variance in each direction of the cluster's orthonormal basis

(from which the variance in any direction can be easily calculated).

Collective Control on the Cluster Contents As later discussed in Section 3.2.2, one

factor which allows the learning algorithm to run in sublinear time on this index is the ability to translate all the elements contained in a cluster in one single operation, at any level of the hierarchy. This element of control is given to the cluster over its subtree by the cluster's reference point. All vectors in a cluster and its children use this relative reference

point as their origin. Thus, their absolute position can be translated, simply by translating the cluster's reference point with respect to the cluster's parent.

3.1.2 Building the index Clustering

A hierarchy of clusters is obtained by recursively clustering the vector space. A number

of clustering algorithms have been proposed in the scientific literature, with varying time complexities and degrees of optimality in the subdivision of space. Here, K-means, LBG

[11], TSVQ, and SOM [7] were considered. Currently the system uses either K-means or

TSVQ, for their time efficiency.

The number of clusters at each level is the tree's branching factor b. The clustering algorithm is applied recursively until a leaf node reaches a size less or equal to a maximum leaf size 1. Both b and 1 should again remain relatively small to keep within-cluster indexing simple. Using K-means, b can be specified to remain within two values bmin and bmax. The algorithm is run with an initial b = bma,, and run again if it converges with no more than bmax - bmin empty clusters. Also,

I

should be chosen accordingly to give K-means a good probability of convergence.SVD Boundaries

While it is a difficult operation to find the vector directions for the tightest hyper-rectangular boundaries for a cluster, a good approximation is to compute the Singular Value Decom-position (SVD) of the set of samples in a cluster, and use the singular vectors as directions for the rectangular boundaries. Furthermore, the singular values provide the variance in-formation for the cluster.

For a leaf cluster, the scalar boundary values for each of those vectors are determined as the maxima of the projections of all the samples on each singular vector direction. For a supercluster, the furthest vertex of each child's bounding rectangle is determined in each singular vector direction, and the scalar boundary value for that vector is thus calculated. Additionally, a bit vector is recorded for each child and each singular vector direction, v =

{

1, --1}d (where d is the number of dimensions), indicating which combination of the child's singular vectors form the furthest vertex in that particular direction. Indeed, as long as the child's singular vectors (i.e., boundary directions) remain unchanged, the furthest vertex in a particular direction stays the same.3.1.3 Dynamics of the index

As suggested in Section 2.3, the learning algorithm intends to modify sample vectors in the index. In fact, in addition to displacing some sample vectors individually, it will displace entire clusters by their reference points (see Section 3.1.1). These changes are bound to disrupt the established structure of the index, and this structure must be brought back to a coherent state by a series of updates. The different kinds of updates needed are described in this Section, and define the dynamics of the index.

Boundaries Updates

When any sample is displaced, the boundaries of its leaf cluster must be checked to see if they must be accordingly extended or contracted. This operation is simple for a single

sample vector: the vector is projected on each of the cluster's singular vector and checked against the cluster's boundary values.

This update must also be propagated up to the cluster's parents. For each singular vector direction of a supercluster, the furthest vertex of a modified child cluster's bounding rectangle can be quickly found, using the bit vector v sign, previously recorded for each child and each singular vector direction (see Section 3.1.2). The child's singular vectors are scaled by the respective elements of vsign, then added together. The vector is then checked to see if it exceeded the corresponding bound.

This operation is time-logarithmic in terms of database size, and could be expensive if repeated often. However, as shown later, the vectors modified by one iteration of the learning algorithm are all along one branch of the tree, so the boundaries updates can be done collectively along that branch.

Sample Transfer

The purpose of the index is to reflect and discriminate, with individual clusters or super-clusters, the ideal groups of a classification for the database. However, it is very unrea-sonable to expect all clusters or super-clusters to exactly match a class for the database, without prior knowledge. In consequence, any initial clustering of the space should be expected to place samples in clusters in which, subjectively, they do not belong. This is therefore likely to cause an inflation of the clusters' boundaries resulting in reduced search performance, after several iteration of subjective feedback and modifications by the learning algorithm, as samples move away from their original clusters. Samples in a cluster must remain densely packed in order to effectively prune the search space.

To solve this problem, it should be made possible to transfer samples across the hierarchy. If a sample is found to have diverged too far and seems to belong better in another cluster, it should be transfered to that cluster. A sample should belong in a cluster if it fits the cluster's context best. Thus, dividing the sample's distance to the cluster centroid by the standard deviation of the cluster in the sample-centroid direction yields a better measure of the sample's fitness for that cluster than its absolute distance to the centroid. This standard deviation is easily calculated by the variance information contained in the cluster.

The same applies to any level of the hierarchy: if an entire cluster is found to belong better in another supercluster, it should be transfered there. The process for determining whether a cluster fits a certain supercluster better than another is similar to the fitness test process outlined above for a single sample.

Now, this transfer operation disturbs the index structure. Indeed, for any transfered element (sample or cluster), two branches need to be modified: the branch above the original location of the element, and the branch above its new location. Indeed, each cluster in those two branches will need its weight, centroid and bounds updated to reflect the disappearance/appearance of the element in its subtree. A single transfer operation has therefore a time-logarithmic cost in terms of database size. However, if a fitness test is performed for every element of a branch of the index, the updates needed by the transfers found necessary can be all performed together along the branch. It is therefore possible to perform fitness tests and element transfers along a whole branch still in logarithmic time. Thus, at each query, it can be reasonably done once, and a good candidate for this operation is the branch leading up from the target sample.

SVD Refresh

Sometimes however, a sample may seem to be diverging from a cluster, when it is actually the cluster that has changed. Indeed, the directions of the bounding rectangle may become inadequate for tight boundaries. Thus, clusters in the database should periodically undergo a maintenance check, where the singular value decomposition is recalculated, and the re-quired updates are made to the cluster's parent (specifically, a recalculation of the vsign

vectors for this child).

3.1.4 Auxiliary Exact Search Index

The main index does not provide any means for locating the target sample, given a query sample. Initially, the cluster subdivision of space has the optimal clustering property: at any level, the closest cluster centroid to a given sample vector is the centroid of the cluster containing the sample. This is used by RCSVD at each query to first locate the target sample in the index, the initial step of a nearest neighbors search. Unfortunately, in this index, samples and clusters move about, such that the optimal clustering property is lost, and the target sample must be found differently.

To this effect, each sample in the main index is doubly indexed by an auxiliary index. This can be an inexpensive structure such as a B-Tree, where samples are classified in their original form. The exact sample lookup thus takes logarithmic time in terms of database size.

3.2

The Leash Learning Algorithm

This section describes a family of incremental learning algorithms, where each learning incre-ment can be performed in sublinear time in terms of the database size, given the appropriate index structure. This family is defined by a set of principles for both adapting the result set of a query to user feedback, and then generalizing the effect to the rest of the database. In each case, the principles are stated, followed by a few proposed implementations.

3.2.1 Adapting the Result Set

Principle

Relevance feedback information, as given by the user, applies directly to the samples of the result set of the query. The first learning step should then consist of reflecting this subjective information onto those samples. As explained in Section 2.3.2, the sample vectors are directly modified by following these heuristics:

1. If two samples are judged to be different, they should be pushed apart if they are

close. On the other hand, it is unnecessary to push them further apart if they are already far apart;

2. If two samples are judged to be similar, they should be brought steadily closer, unless they are very close already.

These updates are implemented as linear transformations, in order to maintain com-putational simplicity. These transformations must be chosen so as to make the database converge towards a reliable and stable state.

Implementation

Main Approach Assume that the user's feedback is encoded by the variable u E [-1, 11, for which values between -1 and 0 indicate varying degrees of difference between s and T,

and values between 0 and 1 indicate varying degrees of similarity.

The simplest transformation proposed is to move each sample in the result set either directly towards or away from the target sample T. That is, for each sample s in the result set, v* being the vector from s to T, s is translated by a vector g collinear to v . The scaling factor f from v to g is logically proportional to u: indeed, with positive feedback s should be translated towards T, with negative feedback away from T, and by an amount reflecting the degree of similarity/difference.

Furthermore, f should take into account the present distance 11 between s and T, so as to reflect the learning heuristics above (Section 3.2.1): if s and T are similar and already close together, they need not be brought much closer, and

f

should have an accordingly smaller magnitude; if s and T are different but already quite far apart, they need not be pushed much farther, andf

should have an accordingly smaller magnitude. This effect is captured by two transition functions, fa and fb. Lastly, the distance 11 V 11 between s and T needs to be scaled to the context of T's cluster, hence it is divided by a, the standard deviation of said cluster in the direction of V+ (calculated using the singular vectors and values).This gives:

9 ( o ,U) = f ( 7,u)- V1, (3.1) 1. for -1 <u<0, f(x,u) =Do-u-fa(x),

2. for 0 < u < 1, f (x, u) = S u

fb(x),

where:1 1

fa(X) ± Af() = (1- ). (3.2)

+ a I1+ X

The constant parameters Do, So C [0,1] adjust the weights for the similarity and differ-ence displacements, and a, b > 2 are exponents used to give varying degrees of steepness to the transition functions fa and fA. The illustration in Figure 3-1, shows how these transition functions implement the learning heuristics.

While they are computationally simple, these linear transformations have the disadvan-tage that they are not commutative, and difficult to formulate in a closed form. Hence, it is easier to experimentally determine the values for the parameters Do, So, a and b that

facilitate the convergence of the the algorithm (see Section 4).

Issues and Variations It should be noted about the learning function above that it is not

designed to converge to a fixed point. Furthermore, those linear transformations can cause drastic changes to the concerned samples, and given the fact that these transformations are not commutative, this implies that the order of the queries could create an undesired bias in learning. Two variations from the main approach are thus proposed, addressing those two respective issues.

.1. 1

01

0*

0 1 2 3 4 0 1 2 3 4

x x

Figure 3-1: fa and fb, transition functions (here a, b = 2)

The first variation attempts to relax the transformation by only delaying its effect. If an infinite number of queries were to be performed with this sample in the result set, the displacement of the sample s would end up the same as in the main approach. However, the inertial delay this creates can result in a smoother transition between queries, and reduce the bias introduced by their order.

This delay is induced as the main transformation,

f

- v is reduced by a weight w C (0, 1), and combined with the previous move, g q_1:Sq (Tq~u, 9q-1) w -

f(

,u). V +(1 - w). gq-1 . (3.3) The second variation is intended to cause a quick convergence. It consists of freezing samples as more and more queries are performed on them. This is done by scalingf

using a decreasing function of the total number of queries q made on the considered sample. For example:1. for -1 <u<0, f(x,u) =Do. u -fa(X), 2. for 0 < u < 1, f(x, u) = S U -

fb(X).

The disadvantage is, however, that samples might thus converge too fast to a database state which is far from the desired state. One compromise is to select slower decreasing functions of q:

1. for -1 <u < 0, f(x,u) =Do. --'- -. fa(x),

2. for 0 < u < 1, f(x,u) = So u - -f(x).

Alternatively, database administrators can reset q for all or some selected samples, if they seem to have converged to a stable, yet unsatisfactory state.

3.2.2 Generalization

Principle

The second step in the learning process is to generalize the feedback information captured by

the transformations applied to the samples of the result set. As explained in Section 2.3.3,

generalization is accomplished by hierarchically extending the effect of the transformation of each vector in the result set to the whole database.

There are two motivations for this process. First, the learning process consists of smoothly combining the current information of the database with the user's feedback; therefore, it should ensure that the structure of the local space around the target sam-ple is preserved by smoothing out the disruption caused by the learning step previously described (Section 3.2.1), because said structure captures the state of the database prior to feedback. Second, the actual process of generalization consists in extending the effects of the feedback to all the samples to which it may apply. Given two samples, the probability that they belong to the same class is likely to increase, the lower their lowest common parent cluster is in the hierarchy. In other words, samples should be bound by a similar fate if they belong to the same local cluster. Thus, when a sample is displaced in the first learning step, it is somewhat logical that each cluster in the index branch above it should follow the sample by an amount inversely proportional to the cluster's weight, as if "dragged on a leash".

While it may potentially cause displacements in a number of samples comparable to the size of the whole database, this transformation can be achieved in sublinear time, because the index is designed such that the entire contents of a cluster can be displaced in one operation, by simply displacing its reference point (as explained in Section 3.1).

Within the hierarchical cluster structure, the generalization process consists of propa-gating the individual displacements of each sample vector in the result set, up along its branch of superclusters until a cluster is reached which contains both the target and the moving sample. Specifically, each cluster in the hierarchy is displaced by a vector integrat-ing the desired displacements for all the vectors which belong to that cluster and are thus correlated to the moving sample, towards or away from the target, and weighted down by an inertia factor for the cluster.

Implementation

Simple Approach The simplest transformation affects only the branch of clusters di-rectly above the sample, and is illustrated in Figure 3-2.

First, each leaf cluster containing one sample s in the query result set is displaced in the same direction as s, except by a fraction 1/IC of the amount by which s moves, where IC is an inertia factor which can simply be the weight of the cluster. If the leaf cluster contains several samples in the result set, it moves by the sum of the individual sample moves, again scaled down by 1/IC. This is done in one operation by simply moving the cluster's reference point. However, because those cluster's samples which belong to the result set already made a move on their own towards or away from the target sample, it makes no sense to make them move again by the displacement incurred on the cluster's reference point. Hence, that displacement should be subtracted from those samples in the result set.

Next, the same process is applied to the superclusters containing one or more cluster which have been displaced. Once again, the resulting displacement for a supercluster's reference point should be subtracted from those clusters among its children, which have been displaced on their own already.

With C being the reference point of a cluster, 1c the cluster's inertia factor, vi the displacement of a sample vector, Ci the reference point of an internal cluster's sub-cluster, and P the displacement of the parent cluster, this can be formulated by the following, for a leaf cluster:

leaf cluster

leaf cluster

R

0-Figure 3-2: Learning Illustration: Generalization supercluster

Legend

T: target sample R: relevant sample

element moving by itself ---- :element moved by parent

T 0 Elements modified: one branch R

'

"

A C= (I - A V-AP, (3.4)

and for an internal node cluster:

AC=- (- EA CZ) - A P. (3.5)

1C i

This simple transformation consists of only one operation at each level above each sample in the result set. Thus, the running time for this step is O(k log n), where n is the database size and k the query size.

Variation In the simple approach, all the clusters in the branch above a sample in the

result set are displaced in the exact same direction, regardless of their shape or size. A smoother transformation would be, for examples in the case of samples in a leaf cluster, to have all the samples in the cluster move independently towards or away from the target by a distance equal to 1/Ic times the distance traveled by their sibling from the result set, instead of having them all move in the same direction by moving the parent leaf cluster. Similarly, it would be smoother if clusters move independently in a supercluster.

This transformation consists therefore in displacing a result sample's siblings instead of its parent leaf cluster, and displacing the siblings of a cluster containing a result sample, instead of the cluster's parent cluster. This is illustrated in Figure 3-3. While it uses more displacement operations overall, given a reasonably small and consistent branching factor

b, this variant is still sublinear, as it runs in O(kblogn).

3.3

Searching for the Nearest Neighbors

With the proposed database index structure, a specific nearest neighbors search algorithm can be used, capable of significantly pruning the search space and avoid searching through the entire index. This similarity search algorithm is once again inspired by the search algorithm designed for the RCSVD approach [17]. It involves first a preliminary exact search for the target sample corresponding to the query sample. This allows the nearest neighbors search to go from the bottom up, by progressively increasing the search radius, and every cluster in the index whose boundaries are beyond the search radius can be effectively pruned out of the search, ideally resulting in only a small subset of the database being explored.

3.3.1 Exact Search for the Target

As explained earlier (Section 3.1.4), the exact search for the target sample is performed by using the auxiliary index. The target sample vector corresponding to the query sample is then found, and its parent is designated as the primary cluster for the nearest neighbors search.

Before starting the nearest neighbors search however, a check is performed on all of the sample's branch, to transfer, if found necessary, the sample and/or any of its parents to the cluster where they each respectively belong, as described in Section 3.1.3.

leaf cluster

R

R

Figure 3-3: Learning Illustration: Generalization Variant supercluster

leaf cluster Legend

T: target sample R: relevant sample

- : element moving by itself

- - : element moved by parent

T

Elements modified: all the siblings of a branch

3.3.2

Collecting the Nearest Neighbors

Given the query's primary cluster, the task is then to collect the k nearest neighbors (NN)

of the target sample, working up along the hierarchy. The proposed algorithm is outlined below. Note that this is an exact NN search, not an approximation, within the current vector space.

A k NN search is first performed in the primary cluster. If k exceeds the number of

elements in the cluster, some of the k NNs belong to the neighboring clusters. Even if k samples are retrieved, samples in neighboring clusters might be closer to the target than some of the samples retrieved so far in the primary cluster.

The search must therefore be extended to neighboring clusters in the rest of the database. Let C, be the primary cluster, T be the target sample, P(Ci) be the parent cluster of the cluster C, and S be the ordered set of I retrieved samples so far, where 1

<

k. Let D(C, T) denote the distance of the target sample to the boundaries of the cluster Ci, and D(sj, T) the distance of the ith sample of S to the target (where S is ordered by increasing distance to the target). The search is performed following this double-iterative procedure:" For all the clusters C siblings of C1 (i.e., P(Ci) = P(C)), excluding CI itself, in order

of their distance D(Ci, T) to the target, and while (I < k) XOR D(Ci, T) < D(sk, T) (i.e. while Ci is a candidate which could possibly contain some of the k NNs), do:

- Find j such that D(sj_, T) < D(C2,T) < D(sjT). Samples in S from 1 to

(j - 1) are retained in the the result set during this iteration, for they are closer

to the target than anything in C could be.

- Find the ordered set S' of (k - j) NNs of T in Ci. If Ci is not a leaf cluster, a

procedure very similar to this iteration is recursively applied to its children. - Merge S' with the current elements j through k of S in increasing order, and

retain the first (k -j) elements of the merged list as the new j through k elements

of S.

" Set C1 to be P(CI), and repeat the above loop procedure until the root is reached,