Dynamic Programming for Mean-field type Control

Texte intégral

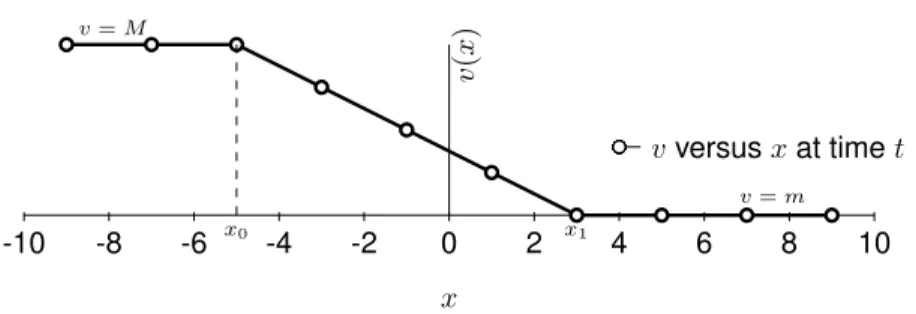

Figure

Related documents

If we naively convert the SFR function to the mass function of the star-forming galaxies by assuming that the galaxy main sequence is already in place at z ∼ 7 and β is constant

Each class is characterized by its degree of dynamism (DOD, the ratio of the number of dynamic requests revealed at time t > 0 to the number of a priori requests known at time t

Deterministic dynamic programming is frequently used to solve the management problem of hybrid vehicles (choice of mode and power sharing between thermal and electric
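
As a hedged illustration of deterministic dynamic programming for a hybrid power split, the sketch below runs a backward recursion over a discretised battery state of charge (SOC). Everything in it is a hypothetical toy model, not the cited work's: integer power units, a known demand profile `demand`, a battery draw `e` in `[e_min, e_max]` (negative values recharge the battery), a quadratic engine fuel cost `engine ** 2`, and a charge-sustaining terminal constraint. Only the power split is modelled. Mode choice is folded into the draw, since a draw equal to the demand means pure electric driving. The function names `hybrid_power_split` and `simulate` are illustrative.

```python
import math


def hybrid_power_split(demand, soc0, soc_max, e_min=-2, e_max=2):
    """Backward DP for a toy hybrid power split on an integer SOC grid.

    value[t][s] is the minimal fuel cost from step t onward when the battery
    holds s units; policy[t][s] is the optimal battery draw at that state.
    """
    horizon = len(demand)
    value = [[math.inf] * (soc_max + 1) for _ in range(horizon + 1)]
    policy = [[None] * (soc_max + 1) for _ in range(horizon)]
    # Charge-sustaining terminal condition: finish with at least soc0 units.
    for s in range(soc_max + 1):
        value[horizon][s] = 0.0 if s >= soc0 else math.inf
    for t in range(horizon - 1, -1, -1):
        for s in range(soc_max + 1):
            for e in range(e_min, e_max + 1):
                engine = demand[t] - e       # thermal engine covers the rest
                nxt = s - e                  # negative e recharges the battery
                if engine < 0 or not 0 <= nxt <= soc_max:
                    continue                 # infeasible split
                cost = engine ** 2 + value[t + 1][nxt]
                if cost < value[t][s]:
                    value[t][s] = cost
                    policy[t][s] = e
    return value, policy


def simulate(policy, soc0):
    """Roll the optimal policy forward and return the battery draws."""
    s, draws = soc0, []
    for row in policy:
        e = row[s]
        if e is None:
            raise ValueError("no feasible split from this state")
        draws.append(e)
        s -= e
    return draws


value, policy = hybrid_power_split([2, 0, 4, 2], soc0=2, soc_max=4)
print(value[0][2])          # 16.0
print(simulate(policy, 2))  # [0, -2, 2, 0]
```

In this toy run, the convex fuel cost makes the optimal engine load flat (2 units at every step), and the battery absorbs the variation in demand.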

Under general assumptions on the data, we prove the convergence of the value functions associated with the discrete-time problems to the value function of the original problem.
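
To make this kind of convergence statement concrete, here is a minimal sketch on a toy linear-quadratic problem. The problem is an assumption chosen because its value function is known, and it is not the setting of the cited work: minimise $\int_0^T (x^2+u^2)\,dt$ subject to $\dot x = u$, whose value function is $V(0,x)=\tanh(T)\,x^2$. An explicit Euler discretisation with step $h=T/N$ yields a discrete-time problem with value function $p_0 x^2$, where $p_k = h + p_{k+1}/(1+h\,p_{k+1})$ and $p_N = 0$. The gap $|p_0-\tanh T|$ should shrink as $h \to 0$. The name `discrete_riccati_coefficient` is hypothetical.

```python
import math


def discrete_riccati_coefficient(horizon, steps):
    """Return p_0 with V_0^h(x) = p_0 * x**2 for step size h = horizon / steps.

    Discrete problem: minimise sum_k h * (x_k**2 + u_k**2)
    subject to x_{k+1} = x_k + h * u_k, with no terminal cost.
    """
    h = horizon / steps
    p = 0.0  # terminal condition p_N = 0
    for _ in range(steps):
        # Minimising h*x^2 + h*u^2 + p*(x + h*u)^2 over u (optimum
        # u = -p*x / (1 + h*p)) leaves (h + p / (1 + h*p)) * x^2.
        p = h + p / (1.0 + h * p)
    return p


T = 1.0
for n in (10, 100, 1000):
    gap = abs(discrete_riccati_coefficient(T, n) - math.tanh(T))
    print(f"N={n:5d}  |p_0 - tanh(T)| = {gap:.2e}")
```

The printed gaps decrease roughly in proportion to $h$, as expected for a first-order time discretisation.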

Then, a convergence result is proved using the general framework of Barles-Souganidis [10], based on the monotonicity, stability and consistency of the scheme (a precise definition
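
The three properties named here can be seen on the simplest possible example: the heat equation $u_t = u_{xx}$ on $[0,1]$ with zero boundary values. This linear stand-in is chosen only for illustration; the Barles-Souganidis framework itself targets fully nonlinear equations. The explicit update $u_i^{n+1} = \lambda u_{i-1}^n + (1-2\lambda)u_i^n + \lambda u_{i+1}^n$, with $\lambda = \Delta t/\Delta x^2$, is monotone exactly when $\lambda \le 1/2$, because all coefficients are then nonnegative. Monotonicity yields stability through a discrete maximum principle, and the update is consistent with the PDE. The sketch below, with the hypothetical function `explicit_heat`, checks the result against the exact solution $e^{-\pi^2 t}\sin(\pi x)$.

```python
import math


def explicit_heat(u0, lam, steps):
    """Explicit scheme for u_t = u_xx with zero Dirichlet boundary values.

    lam = dt / dx**2. The update is monotone (non-decreasing in every
    neighbouring value) only when lam <= 1/2.
    """
    if lam > 0.5:
        raise ValueError("lam > 1/2: the scheme is not monotone")
    u = list(u0)
    for _ in range(steps):
        # Interior values become convex combinations of their neighbours.
        interior = [lam * u[i - 1] + (1 - 2 * lam) * u[i] + lam * u[i + 1]
                    for i in range(1, len(u) - 1)]
        u = [0.0] + interior + [0.0]
    return u


m, lam, t_end = 50, 0.4, 0.1
dx = 1.0 / m
dt = lam * dx * dx
steps = round(t_end / dt)
xs = [i * dx for i in range(m + 1)]
u0 = [math.sin(math.pi * x) for x in xs]
u = explicit_heat(u0, lam, steps)
exact = [math.exp(-math.pi ** 2 * steps * dt) * math.sin(math.pi * x) for x in xs]
err = max(abs(a - b) for a, b in zip(u, exact))
print(f"max error = {err:.1e}")
```

With `lam = 0.4` the computed values stay between 0 and the initial maximum, which is the discrete maximum principle at work. Any `lam` above 1/2 is rejected because a negative centre coefficient breaks monotonicity.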

Stochastic dynamic programming is based on the following principle: take the decision at time step t such that the sum "cost at time step t due to your decision" plus "expected
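
This principle is backward induction. The minimal sketch below applies it to a hypothetical lost-sales inventory problem. The finite stock levels, discrete random demand, and linear ordering, holding and shortage costs are all illustrative assumptions, as is the name `inventory_dp`. At each stage, the order chosen minimises the immediate cost plus the expected cost-to-go of the resulting stock level.

```python
def inventory_dp(horizon, max_stock, demand_probs, order_cost, hold_cost, short_cost):
    """Backward induction for a toy lost-sales inventory problem.

    At each stage the order q minimises: ordering cost + expected
    (holding or shortage cost + cost-to-go of the next stock level).
    """
    value = [0.0] * (max_stock + 1)  # V_T(s) = 0: no cost after the horizon
    policies = []
    for _ in range(horizon):
        new_value, policy = [], []
        for s in range(max_stock + 1):
            best_q, best = None, float("inf")
            for q in range(max_stock - s + 1):  # storage capacity max_stock
                expected = order_cost * q
                for d, prob in demand_probs.items():
                    left = s + q - d  # negative means unmet (lost) demand
                    stage = hold_cost * max(left, 0) + short_cost * max(-left, 0)
                    expected += prob * (stage + value[max(left, 0)])
                if expected < best:
                    best_q, best = q, expected
            new_value.append(best)
            policy.append(best_q)
        value = new_value
        policies.insert(0, policy)  # policies[t] is the stage-t rule
    return value, policies


probs = {0: 0.3, 1: 0.4, 2: 0.3}
v, pol = inventory_dp(1, 3, probs, order_cost=1.0, hold_cost=0.5, short_cost=4.0)
print(v[0], pol[0][0])  # about 2.35, ordering 1 unit from empty stock
```

Because every stage cost is nonnegative, adding periods can only raise the optimal expected cost. This gives a quick sanity check on the recursion.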

Indeed, when the scenario domain $\Omega$ is countable and every scenario $w(\cdot)$ has strictly positive probability under $\mathbb{P}$, $\mathrm{Viab}_1(t_0)$ is the robust viability kernel (the set
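
On a finite state space, a robust viability kernel can be computed by a backward dynamic programming recursion: $K_T = A$ and $K_t = \{x \in A : \exists u,\ \forall w,\ f(x,u,w) \in K_{t+1}\}$. The sketch below assumes time-invariant dynamics `dynamics(x, u, w)`, a constraint set $A$ given by the predicate `constraint`, and disturbances drawn at each step from a fixed finite set (a product scenario set). It computes only the robust kernel, not the probability-one kernel discussed in the excerpt. The function name and toy model are hypothetical.

```python
def robust_viability_kernels(states, controls, disturbances, dynamics, constraint, horizon):
    """Backward recursion for robust viability kernels K_0, ..., K_T.

    K_T = {x in A}; K_t = {x in A : some u keeps dynamics(x, u, w) in K_{t+1}
    for every disturbance w}. Returns the list [K_0, ..., K_T].
    """
    kernels = [set() for _ in range(horizon + 1)]
    kernels[horizon] = {x for x in states if constraint(x)}
    for t in range(horizon - 1, -1, -1):
        target = kernels[t + 1]
        kernels[t] = {
            x for x in states
            if constraint(x)
            and any(all(dynamics(x, u, w) in target for w in disturbances)
                    for u in controls)
        }
    return kernels


K = robust_viability_kernels(
    states=range(11), controls=(-1, 0, 1), disturbances=(-2, 0, 2),
    dynamics=lambda x, u, w: x + u + w,
    constraint=lambda x: 2 <= x <= 8, horizon=3)
for t, k in enumerate(K):
    print(t, sorted(k))
```

In this toy run the kernels shrink from the states 2 to 8 at t = 3 to the empty set at t = 0, because a disturbance of ±2 outweighs a control of ±1.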