Parallel Euclidean distance matrix computation on big datasets

Full text

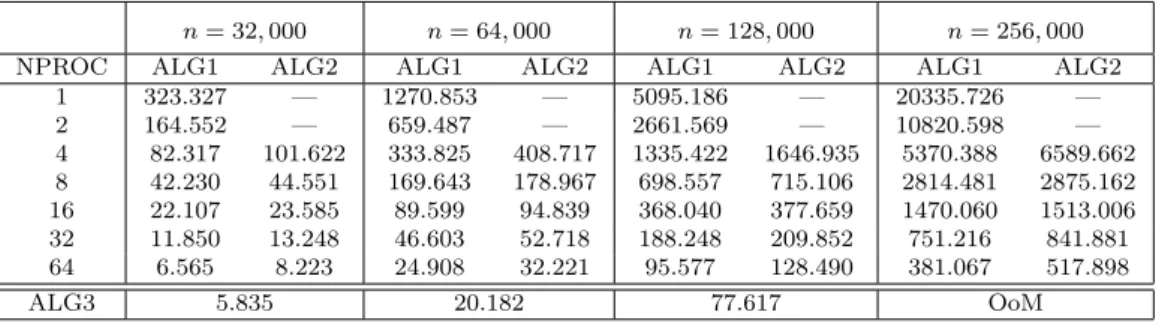

Figure

Related documents

In this paper, we propose two techniques, lock-free scheduling and partial random method, to respectively solve the locking problem mentioned in Section 3.1 and the

Keywords: Matrix factorization, non-negative matrix factorization, binary matrix factorization, logistic matrix factorization, one-class matrix factorization, stochastic

We discussed performance results on different parallel systems where good speedups are obtained for matrices having a reasonably large number of

In this work, we will examine some already existing algorithms and will present a Two-Phase Algorithm that will combine a novel search algorithm, termed the Density Search Algorithm

In this paper we describe how the half-gcd algorithm can be adapted in order to speed up the sequential stage of Coppersmith’s block Wiedemann algorithm for solving large

For CRS-based algorithms, the speedups are computed by using the execution time of the CRS-based sequential algorithm. For the COO-based ones, we used the COO-based

A learning environment which allows every learner to enhance his or her thinking skills in a unique and personally meaningful way, and most importantly it

This is also supported by our estimated proportion of chronic pain sufferers with neuropathic characteristics in the general population (25 of sufferers of chronic pain of at