Applying Natural Language Models and Causal

Models to Project Management Systems

by

Caroline Morganti

Submitted to the Department of Electrical Engineering and

Computer Science

in partial fulfillment of the requirements for the degree of

Master of Engineering in Electrical Engineering and Computer

Science

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

February 2018

© Massachusetts Institute of Technology 2018. All rights reserved.

Author . . . .

Department of Electrical Engineering and Computer Science

January 19, 2018

Certified by . . . .

Kalyan Veeramachaneni

Principal Research Scientist

Thesis Supervisor

Accepted by . . . .

Christopher Terman

Chairman, Masters of Engineering Thesis Committee

Applying Natural Language Models and Causal Models to Project

Management Systems

by

Caroline Morganti

Submitted to the Department of Electrical Engineering and Computer Science on January 19, 2018, in partial fulfillment of the

requirements for the degree of

Master of Engineering in Electrical Engineering and Computer Science

Abstract

This thesis concerns itself with two problems. First, it examines ways in which to use natural language features in time-varying data in predictive models, specifically applied to the problem of software project maintenance. We attempted to integrate this natural language data into our existing predictive models for project management applications. Second, we began work on creating an easy-to-use, extensible causal modeling framework, a Python package called CEModels. This package allows users to create causal inference models using input data. We tested this framework on project management data as well.

Thesis Supervisor: Kalyan Veeramachaneni Title: Principal Research Scientist

Acknowledgments

I would like to thank Kalyan Veeramachaneni for being my advisor and offering advice and feedback throughout the process of this project. I also thank my labmates in the Data to AI Lab for providing me with plenty of support and random entertainment. In addition, thank you to Jin "Gracie" Gao, who has been a wonderful source of support as DAI Lab’s administrative assistant, as well as thanks to Francisco Jaimes for ordering much Milk Street food for LIDS throughout the last two semesters. Finally, thank you to my friends and family, who have provided much support and encouragement.

Contents

1 Introduction

1.1 Overview and Motivation

1.2 Goals/Achievements

1.3 Outline of thesis

2 Natural Language Models

2.1 Background and previous work

2.1.1 Introduction to word vectors

2.1.2 Training word vectors

2.1.3 Word vectors used for phrases and entities

2.1.4 Reducing dimensionality of word vectors

2.2 Structure of natural language data

2.2.1 Input data

2.2.2 Output data

2.3 Overview of natural language pipeline

2.4 Pre-processing and tokenization of data

2.5 Summary Statistics

2.5.1 Non-empty values

2.5.2 Unique values

2.5.3 Most common values per column

2.5.4 Total comments per project

2.6 Experiments

2.6.2 Clustering word vectors

2.6.3 Feature matrix generation

2.6.4 Predictive modeling

3 Causal Modeling Approach

3.1 Brief introduction to causal inference

3.1.1 Necessary conditions for causal inference

3.1.2 Treatment effects

3.1.3 Current causal inference techniques

3.1.4 Propensity scores

3.1.5 Matching

3.1.6 Causal models in machine learning

3.1.7 Current causal inference software

3.2 Overview of models in CEModels

3.3 Nearest Neighbor Model

3.4 Simple Propensity Model

3.5 Rubin-Rosenbaum Model: Balancing on propensity scores

3.5.1 Estimating Treatment Effects with the Rubin-Rosenbaum Model

3.5.2 F-ratios

3.5.3 Expected results

3.5.4 Example

3.6 Categorical data

3.7 Handling missing data

3.8 Limitations of system

4 CEModels: Python package

4.1 Motivation for developing a library

4.2 Data input

4.3 Abstractions

4.4 PropensityModel(CausalModel) class

4.6 RubinModel class

4.7 NearestNeighborModel class

4.8 Extensibility

5 Causal Effects: Software application maintenance

5.1 Overview of data

5.2 Developing a causal model

5.2.1 Step 1: Identify when the outcome of interest happened

5.2.2 Step 2: Raw data to covariates

5.2.3 Step 3: Define “treatment” variables

5.2.4 Step 4: Filter features related to “treatment” variables

5.2.5 Step 5: Modeling and analysis

5.3 Results

5.3.1 Nearest Neighbor and Simple Propensity Models

5.3.2 Rubin-Rosenbaum Model

5.4 Prediction of individual treatment effects (ITEs)

5.5 Key findings

6 Conclusions and Future Work

6.1 NLP

6.1.1 Future work

6.2 Causal Modeling

6.2.1 Future work

A Tables

A.1 Summary statistics for dataset in Chapter 5

List of Figures

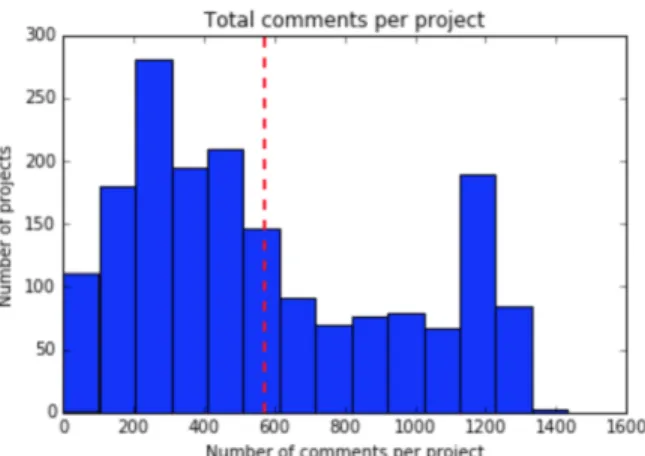

2-1 Histogram showing total number of comments per project. The mean number of comments per project (represented by the red dot-ted line) is approximately 570.15. . . 30 2-2 Histograms showing comment lengths for "delivery" and

"finan-cials" columns. The means for each histogram (represented by the red dotted lines) are 78.705 and 22.049. . . 31

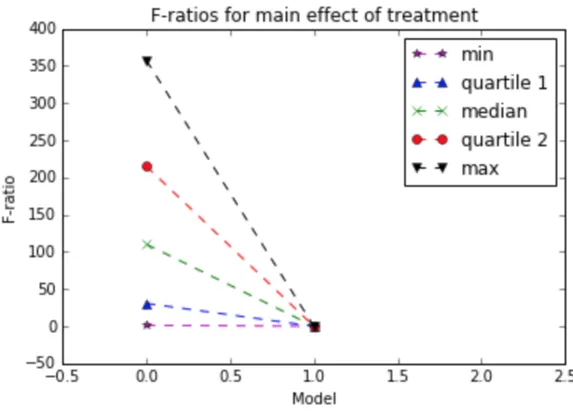

3-1 Notation for mathematical expressions in Chapter 3 . . . 43 3-2 Graphs for the example Rubin-Rosenbaum model’s covariate F-ratios

before and after forward selection for the main effect (top) and the treatment-quantile interaction (bottom) . . . 57

4-1 Overview of attributes and methods in the abstract CausalModel class . . . 62 4-2 Overview of attributes and methods in the PropensityModel class 63 4-3 Overview of attributes and methods in the SimplePropensityModel

class . . . 64 4-4 Overview of attributes and methods in the RubinModel class . . . . 65 4-5 Overview of attributes and methods in the NearestNeighborModel

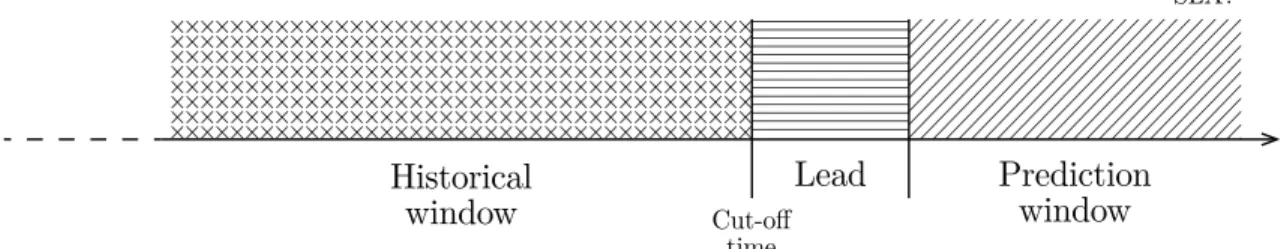

5-1 Whether an SLA is violated or not is evaluated in the weeks that correspond to prediction window. The lead corresponds to how far ahead we would like to predict the SLA violation, and histori-cal window represents the amount of historihistori-cal data we can use to study its effects on future SLA violation. In our study, historical window was set to 56 days, the lead to 4 weeks, and the prediction window to 56 days. . . 71 5-2 ATE of Resolution Time Performance, SimplePropensityModel,

NearestNeighborModelwith n_neighbors=1 and n_neighbors=

5. . . 80 5-3 ATE of Response Time Performance, SimplePropensityModel,

NearestNeighborModelwith n_neighbors=1 and n_neighbors=

5. . . 80 5-4 ATE of Backlog Processing Efficiency, SimplePropensityModel,

NearestNeighborModelwith n_neighbors=1 and n_neighbors=

5. . . 83 5-5 ATE of all treatments for Rubin-Rosenbaum model . . . 84

List of Tables

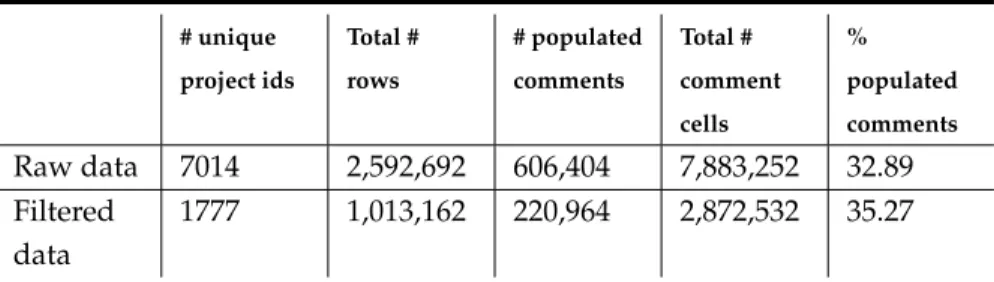

2.1 Statistics on overall density of comment data. From left to right, the columns include the number of unique project identification num-bers, the total number of rows in the dataset, the total number of populated free-text (comment) cells in the dataset, the total number of comment cells in the dataset, and the percentage of comment cells in the dataset that are populated. . . 25

2.2 Statistics (by column in our dataset) on density of raw comment data. From left to right, the columns in this table represent the col-umn in our dataset, the number of populated comments within the respective column, and the percent of comments within that column that are populated. (Note the last row in this table is corresponds to the “Raw Data” row in Table 1.) . . . 26

2.3 Statistics (by column in our dataset) on density of time-series fil-tered comment data. From left to right, the columns in this table represent the column in our dataset, the number of populated com-ments within the respective column (after filtering), and the percent of comments within that column that are populated (after filtering.) (Note the last row in this table is corresponds to the “Filtered Data” row in Table 1.) . . . 27

2.4 Statistics (by column) on uniqueness of time-series filtered comment data. From left to right, columns represent the column in our dataset, the number of unique comments in each column, the number of populated comments in each column, the percentage of unique com-ments among those comment cells that are populated, and the per-centage of unique comments among all comment cells. . . 29 2.5 Precision, recall, and F1 score for models using feature vectors

de-rived from different types of word vectors . . . 35 5.1 Average treatment effect of aggregates calculated from Resolution

Time Performance. Using SimplePropensityModel, NearestNeighborModel with n_neighbors=1 and n_neighbors=5. . . 79

5.2 ATE of Response Time Performance, SimplePropensityModel, NearestNeighborModelwith n_neighbors=1 and n_neighbors=

5. . . 81 5.3 ATE of Backlog Processing Efficiency, SimplePropensityModel,

NearestNeighborModelwith n_neighbors=1 and n_neighbors=

5. . . 82 5.4 ATE of all treatments for Rubin-Rosenbaum model . . . 83 A.1 Summary statistics for the treatment columns (excluding NaN values). 94 A.2 Summary statistics regarding NaN values for the treatment columns. 94

Chapter 1

Introduction

1.1 Overview and Motivation

This thesis concerns itself with two problems.

First, we would like to examine ways to use natural language features in time-varying data in predictive models. Currently, we have a prediction pipeline, which we are testing on a project management dataset, that uses numerical and categorical features derived through feature engineering from the original dataset (provided by the consulting company Accenture). However, while the original data contains a large amount of natural language text, we have not yet used it in our predictive models. We would like to see if we can integrate this natural language data into our predictive models for project management applications.

Our other goal relates to creating an easy-to-use, extensible causal modeling framework, which we will also test on similar project management data. Our motivation for this is as follows. In general, predictive machine learning models (which comprise the vast majority of models used today) measure correlation between given input values and outcomes. Because correlation does not imply causation (except in the case of controlled, randomized experiments), these types of models cannot directly help in decision making. Causal models, by contrast, estimate the causal effect of a given action and can therefore directly help in decision making. In this thesis, we describe our Python package CEModels, which allows users to create different types of causal models using input data. We also describe three classes that represent three types of causal models, and include results of using these models on our data.

1.2 Goals/Achievements

In this thesis, we achieved the following:

1. We added a temporal component to our predictive models and determined how to process natural language data when that component is added

2. We developed abstractions for causal modeling

3. We then surveyed three types of causal models by implementing Python classes that use these abstractions

4. We applied our work on the above three items to data on project management systems

1.3 Outline of thesis

In Chapter 2, we will discuss our natural language data and our methods for transforming this data into feature vectors for use in our predictive models.

Chapter 3 contains a description of the mathematical and theoretical concepts represented by our models, including a description of the three types of causal models we implemented and tested on our dataset.

Chapter 4 gives a more detailed description of the specific architecture of our implementation.

In Chapter 5, we give an overview of our dataset, explain the steps we took to develop our models, analyze different models, and present key findings.

In Chapter 6 we review our conclusions and discuss possibilities for future work.

Chapter 2

Natural Language Models

Our project management data has extensive free-text content, which we would like to utilize in our prediction pipeline. In order to do this, we need to represent our data as feature vectors, which we will derive from word vector representations.

First, we will present a survey of previous research on using natural language data in machine learning applications. Then, we will give an overview of our dataset and describe our pre-processing methods, while also giving summary statistics of the data before and after pre-processing. Finally, we will detail the process of our experiments and summarize our results.

2.1 Background and previous work

We will now explain previous work on the use of natural language data in machine learning models.

2.1.1 Introduction to word vectors

While the current features for our data are either numeric or categorical, we also have a large collection of free-text (natural language) comments that we do not yet use in our predictive models. To use them, we need to represent the comments as feature vectors, which we will derive from word vectors.

Word vectors (or word embeddings) are a technique for learning semantic-level information about natural language [10]. Word embeddings represent the terms in a vocabulary as vectors of real numbers, which are derived by training on an unannotated corpus. They were first used in a neural network system developed by Bengio et al. in 2003 [28]. While there are various approaches to deriving mathematical word representations, such as one-hot vectors and various types of clustering [5], word embeddings gained much popularity in the area of natural language processing with the introduction of word2vec, an algorithm for training such embeddings using large corpora [8]. An alternative algorithm with a similar goal, GloVe ("Global Vectors for Word Representation"), followed shortly after [10]. These two algorithms remain popular choices for those using word embeddings for various applications. While a full explanation of these algorithms is beyond the scope of this thesis, both algorithms use measures of how closely words occur relative to one another in order to train the embeddings.

Below is an example of vector operations with word embeddings, demonstrating how the semantic information of words is captured in the vector representations:

king − man + woman ≈ queen

Paris − France + Poland ≈ Warsaw [20]

The central premise of word vectors is that we can manipulate such mathematical representations of language to derive semantic insights about text. In the above examples, the semantic value of a monarch is represented in the value king − man. When the vector value for the word woman is added, the resulting vector approximates that of the word queen. Similarly, the vector value for Paris (the capital city of France) minus the vector value for France results in a vector approximating the semantic concept of "capital city." Adding the vector value for Poland results in a vector approximately equal to Warsaw (the capital city of Poland) [20].
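The arithmetic above can be illustrated with a minimal Python sketch. The three-dimensional vectors below are invented for illustration (real embeddings are learned from a corpus and typically have hundreds of dimensions), and the helper names `cosine` and `analogy` are hypothetical rather than part of any library.

```python
import math

# Toy 3-dimensional "embeddings", invented purely for illustration.
TOY_VECTORS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.2, 0.8],
    "apple": [0.0, 0.1, 0.1],
}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def analogy(a, b, c, vectors):
    """Return the word closest (by cosine) to vectors[a] - vectors[b] + vectors[c]."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    # Exclude the query words themselves, as is customary for analogy queries.
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, vectors[w]))


result = analogy("king", "man", "woman", TOY_VECTORS)
```

With these toy values, the nearest remaining word to king − man + woman is "queen"; with learned embeddings the match is only approximate.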

2.1.2 Training word vectors

When using word embeddings, one can use either pre-trained embeddings or embeddings trained on one’s own training data. Advantages of using pre-trained embeddings include avoiding the need to set aside training data from one’s own data set. Moreover, since pre-trained embeddings typically use very large training corpora (e.g. large parts of Wikipedia) in order to determine the values of their word embeddings, they are generally recognized as being a very reliable representation of words in typical English usage. On the other hand, if one’s data contains a large amount of specialized vocabulary and/or usage, such that a general English corpus may prove insufficient for yielding an accurate semantic representation of the terms within the experimental data, then training one’s own word vectors on a segment of one’s own data set aside for training, or training on an existing English corpus augmented with said data, is likely to be a better choice.

2.1.3 Word vectors used for phrases and entities

Furthermore, it is important to note that word embeddings do not need to correspond to individual words, but can also represent multi-word phrases or entities present within the text. When training one’s own word vectors, an entity detection algorithm can first be run in order to detect commonly co-occurring words in the corpus as entities, which can then be used as tokens in the training of word vectors rather than the individual words themselves. This allows the model to better capture the meaning of certain phrases rather than inferring them in the training stage, along with training unique embeddings for said entities. To give a simple example, we would not want our word vector model to view a phrase such as "Massachusetts Institute of Technology" as four separate words. Rather, we would much prefer to begin with the knowledge that "Massachusetts Institute of Technology" is its own entity and should have a unique embedding trained accordingly. Either a phrase/collocation detection algorithm or a named entity recognition (NER) algorithm (more specific to named entities, which generally correspond to proper nouns in English) could detect that "Massachusetts Institute of Technology" is an entity and tag the phrase as belonging to a single token during the pre-processing stage.

Using phrase detection and/or named entity recognition prior to training word embeddings can be particularly useful when the testing data consists of a specialized vocabulary that is not found in general English corpora. There are several implementations of algorithms for these applications available online, such as the gensim phrases model (which detects commonly collocated words) [36] and nltk’s chunk package, which includes a specific module for named-entity recognition [40].
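To make the idea of collocation detection concrete, the following is a minimal pure-Python sketch. It is not gensim's algorithm: the scoring rule (the bigram count divided by the count of the more frequent of its two words), the parameters `min_count` and `threshold`, and the function names are all assumptions made for illustration.

```python
from collections import Counter


def find_phrases(sentences, min_count=2, threshold=0.75):
    """Return the set of bigrams that co-occur often enough to be treated as one token.

    Illustrative scoring rule (an assumption, not gensim's scorer): a bigram
    qualifies if it appears at least `min_count` times and accounts for at least
    `threshold` of the occurrences of its more frequent word.
    """
    unigrams = Counter(tok for sent in sentences for tok in sent)
    bigrams = Counter(pair for sent in sentences for pair in zip(sent, sent[1:]))
    phrases = set()
    for (a, b), n_ab in bigrams.items():
        if n_ab >= min_count and n_ab / max(unigrams[a], unigrams[b]) >= threshold:
            phrases.add((a, b))
    return phrases


def merge_phrases(sentence, phrases):
    """Join detected bigrams with an underscore, scanning left to right."""
    merged, i = [], 0
    while i < len(sentence):
        if i + 1 < len(sentence) and (sentence[i], sentence[i + 1]) in phrases:
            merged.append(sentence[i] + "_" + sentence[i + 1])
            i += 2
        else:
            merged.append(sentence[i])
            i += 1
    return merged


# Tiny made-up training corpus of tokenized comments.
train = [
    ["citrix", "is", "under", "control"],
    ["budget", "is", "under", "control"],
    ["project", "is", "on", "track"],
    ["delivery", "is", "on", "track"],
]
phrases = find_phrases(train)
tokens = merge_phrases(["citrix", "is", "slow", "now", "under", "control"], phrases)
```

On this toy corpus, "under control" and "on track" are detected as phrases, while "is under" is not, because "is" appears in many other contexts.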

2.1.4 Reducing dimensionality of word vectors

When using word vectors to extract meaning from large sets of sentences or documents, various techniques may be used depending on the specifications of the problem. In our case, we have a large set of natural language comments in our project management dataset that we would like to categorize as belonging to some set of general topics. While we have column names that describe whether the comments contained therein are related to financials or quality control, for example, we do not have any further information on what topics the comments contain. For this reason, we would like to use an unsupervised learning algorithm to detect topics among comments within each column.

In addition, we would like whatever information we get regarding the comments’ topical content to be easily integrated into our existing predictive model pipeline, which uses various models in sklearn, all of which take input feature vectors.

In order to satisfy both of these goals, one technique is to perform K-means clustering on the set of word vectors found during training, creating a mapping from each term in the training vocabulary to an integer identifying the cluster to which the term belongs. For each comment, we can then represent each word found in our training corpus as a one-hot vector indicating its cluster. We can then sum the one-hot vectors for all words in each comment and normalize the resulting sum vector, which will then act as an input feature in our predictive model pipeline. This is what we will do in this chapter in an attempt to produce useful features from natural language comments in our software projects dataset.
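The featurization step can be sketched as follows, assuming the clustering has already produced a word-to-cluster mapping. The mapping values, the function name `comment_to_feature`, and the choice of L2 normalization are assumptions for illustration; the text above does not specify which norm is used.

```python
import math


def comment_to_feature(tokens, word_to_cluster, n_clusters):
    """Sum the one-hot cluster vectors of a comment's words, then L2-normalize.

    Words missing from the training vocabulary are skipped. L2 normalization
    is an assumption of this sketch.
    """
    counts = [0.0] * n_clusters
    for tok in tokens:
        cluster = word_to_cluster.get(tok)
        if cluster is not None:
            counts[cluster] += 1.0  # equivalent to adding that word's one-hot vector
    norm = math.sqrt(sum(c * c for c in counts))
    if norm == 0.0:
        return counts  # empty or fully out-of-vocabulary comment
    return [c / norm for c in counts]


# Hypothetical output of a clustering step with three clusters.
word_to_cluster = {"budget": 0, "cost": 0, "delay": 1, "late": 1, "good": 2}
feature = comment_to_feature(["budget", "delay", "late", "unknown"], word_to_cluster, 3)
```

The resulting vector has one entry per cluster, so its length is fixed regardless of comment length, which is what allows it to be passed to models that expect fixed-size feature vectors.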

2.2 Structure of natural language data

Now we will describe our dataset, which is structured as follows.

2.2.1 Input data

As described above, we have columns with project identification numbers (project ids), associated timestamps, and various information about the status of the project, including free text data which includes comments made by employees overseeing the given project. Each database entry can be uniquely identified by a project id and a timestamp.

We use the free text data in the following fields:

1. "pe__facilities_infrastructure_comments"

2. "milestones__comments"

3. "pe__financials_comments"

4. "pe__staffing_resourcing_comments"

5. "pe__client_satisfaction_comments"

6. "pe__service_introduction_knowledge_transition_comments"

7. "pe__risks_comments"

8. "pe__project_admin_comments"

9. "pe__delivery_comments"

10. "pe__quality_comments"

11. "pe__people_engagement_comments"

12. "ip__next_comments"

13. "pe__information_security_comments"

2.2.2 Output data

Furthermore, we have various types of output data (labels) for projects, which are typically binary indicators of a project’s status. These labels are each associated with a given cutoff timestamp, making it possible to split our data for any given project id between data gathered until the cutoff and data gathered after the cutoff. The labels in our data represent a general indicator about the status of the project: red (problem) status or green (OK) status.

2.3 Overview of natural language pipeline

The basic pipeline for converting our natural language data into numerical features for our model is as follows:

1. Perform train/test split, filtering data based on cutoff dates

2. Pre-processing and tokenization of data: Pre-process/clean our free-text data in order to make it suitable for modeling

3. Word vector representation: Train word vectors on free-text/comment data or load existing pre-trained word vectors

4. Clustering word vectors: For each set of vectors (pre-trained), use k-means clustering to classify words in vocabulary into clusters

5. Feature matrix generation: Generate feature vectors for each project / timestamp cutoff combination

6. Modeling: Use feature vectors to train sklearn models and report scores

2.4 Pre-processing and tokenization of data

Before we perform analysis on our data, we pre-process it in order to remove various things that may interfere with our modeling. This includes:

1. Removing dates and numeric info: while these could be useful in a model that takes into account the context of this info, these are not helpful for the modeling we are using with word vectors. We replace dates with the token [DATE].

2. Removing all excess whitespace and punctuation: This is necessary for training word vectors

3. Converting all alphabetical characters to lowercase: We assume that our data is case-insensitive

4. Replacing Git commit strings with a special token: specific Git commits will interfere with training our own word vectors, so we take these out and replace them with the token [GITCOMMIT] to represent a general Git commit string in the data

5. Identifying all variations of null values present in the data and replacing with empty strings: Null values in our data include strings such as "N/A", "NA", and "Not applicable". We simply ignore null values in our data by replacing them with empty strings.

6. Detecting multi-word entities (if not using pre-trained word vectors): Since there are multi-word expressions that might provide useful information, we use gensim’s phrases package to detect multi-word entities. Examples include phrases such as "high priority" or "budgetary limit." Identifying such phrases before training word vectors means that our resulting word vectors will treat these phrases as single entities (and train a word vector for each phrase), rather than considering the words separately. However, since our pre-trained word vectors only represent single words, we do not perform this step when using pre-trained word vectors.

Below is an example of one of our comments, showing the original tokens and the tokens after entity detection has been performed:

∙ Original comment tokens = [’no’, ’issues’, ’now’, ’a’, ’days’, ’citrix’, ’is’, ’slow’, ’as’, ’if’, ’now’, ’its’, ’under’, ’control’]

∙ Comment tokens after phrase detection = [u’no’, u’issues’, u’now’, u’a’, u’days’, u’citrix’, u’is’, u’slow’, u’as’, u’if’, u’now’, u’its’, u’under_control’]

7. Removing common English stop words: in the generation of feature matrices, stop words only add noise to the vector space. Thus, by removing common stop words, we hope to improve the efficiency of our model training.
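Steps 1 through 5 of the list above can be sketched with Python's standard `re` module. The regular expressions below are assumptions made for illustration (for instance, that dates look like `2017-03-15` or `3/15/2017`, and that a Git commit string is a 7 to 40 character hexadecimal run containing both a digit and a letter); the actual patterns used in our pipeline may differ.

```python
import re

# Assumed null markers, compared after lowercasing and stripping whitespace.
NULL_STRINGS = {"", "n/a", "na", "not applicable"}

# Assumed date formats: YYYY-MM-DD or M/D/YY(YY).
DATE_RE = re.compile(r"\b\d{4}-\d{1,2}-\d{1,2}\b|\b\d{1,2}/\d{1,2}/\d{2,4}\b")

# Assumed commit format: 7-40 hex characters with at least one digit and one letter.
COMMIT_RE = re.compile(r"\b(?=[0-9a-f]*\d)(?=[0-9a-f]*[a-f])[0-9a-f]{7,40}\b")

# A token is either a special placeholder or a run of lowercase letters, so
# remaining digits, punctuation, and extra whitespace are dropped.
TOKEN_RE = re.compile(r"\[DATE\]|\[GITCOMMIT\]|[a-z]+")


def preprocess(comment):
    """Clean one free-text comment and return its list of tokens."""
    text = comment.strip().lower()
    if text in NULL_STRINGS:
        return []  # null values are treated as empty comments
    text = text.replace("'", "")  # so "it's" becomes "its" rather than "it", "s"
    text = COMMIT_RE.sub(" [GITCOMMIT] ", text)
    text = DATE_RE.sub(" [DATE] ", text)
    return TOKEN_RE.findall(text)


tokens = preprocess("Fixed in 3f2a9c1 on 2017-03-15, 2 issues remain.")
```

Commit strings are replaced before dates so that the two patterns cannot interfere, and the placeholders are inserted after lowercasing so that they remain distinguishable from ordinary words.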

Steps 1 through 5 can be done without worrying about splitting our data into train and test data sets. However, since our phrase detection works by training a model to find common word collocations, we need to split our data into train and test sets in order to avoid leakage in our training. Because we have time-series data, we cannot simply split all entries in our table randomly. Instead, we perform a train/test split on our labels/output data and identify which project ids will be in the train set and which will be in the test set, and then find the entries in our data which have dates before the respective cutoff timestamps for a given project id. (These same train/test datasets will be used below in our models.)
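The split described above can be sketched as follows. The data layout (a dictionary of cutoff dates keyed by project id, and entries as `(project_id, timestamp, text)` tuples), the function names, and the 25 percent test fraction are all hypothetical choices for this illustration.

```python
import random
from datetime import date


def split_project_ids(cutoffs, test_fraction=0.25, seed=0):
    """Randomly partition the labeled project ids into train and test sets."""
    ids = sorted(cutoffs)
    random.Random(seed).shuffle(ids)
    n_test = max(1, int(len(ids) * test_fraction))
    return set(ids[n_test:]), set(ids[:n_test])


def entries_before_cutoff(entries, cutoffs, project_ids):
    """Keep entries of the given projects that are dated before that project's cutoff."""
    return [
        (pid, ts, text)
        for pid, ts, text in entries
        if pid in project_ids and ts < cutoffs[pid]
    ]


# Made-up labels (project id -> cutoff date) and comment entries.
cutoffs = {
    101: date(2017, 6, 1),
    102: date(2017, 7, 1),
    103: date(2017, 8, 1),
    104: date(2017, 9, 1),
}
entries = [
    (101, date(2017, 5, 1), "on track"),
    (101, date(2017, 6, 15), "delayed"),   # after the cutoff, so excluded
    (102, date(2017, 6, 1), "good"),
    (999, date(2017, 1, 1), "no label"),   # project without a label, so excluded
]

train_ids, test_ids = split_project_ids(cutoffs)
train_entries = entries_before_cutoff(entries, cutoffs, train_ids)
test_entries = entries_before_cutoff(entries, cutoffs, test_ids)
```

Splitting by project id rather than by row keeps all entries of a project on the same side of the split, and the cutoff filter ensures that no entry dated after a label's cutoff is used as input for that label.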

Finally, we remove stop words from our data. We did not do this in earlier steps because we might detect phrases in the previous step that include stop words.

| | # unique project ids | Total # rows | # populated comments | Total # comment cells | % populated comments |
|---|---|---|---|---|---|
| Raw data | 7014 | 606,404 | 2,592,692 | 7,883,252 | 32.89 |
| Filtered data | 1777 | 220,964 | 1,013,162 | 2,872,532 | 35.27 |

Table 2.1: Statistics on overall density of comment data. From left to right, the columns include the number of unique project identification numbers, the total number of rows in the dataset, the total number of populated free-text (comment) cells in the dataset, the total number of comment cells in the dataset, and the percentage of comment cells in the dataset that are populated.

For example, the phrase "on track" (commonly used to indicate a favorable status) might appear in our data, but "on" is a common English stopword and would get filtered out if not classified as part of a longer phrase. Thus, if we were to filter stop words before phrase detection, we would lose valuable information for our models.
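The effect of the ordering can be seen in a small sketch. The stop word list here is a tiny illustrative subset, and `remove_stop_words` is a hypothetical helper rather than a function from our pipeline.

```python
# Tiny illustrative stop word list; a real list would be much longer.
STOP_WORDS = {"on", "is", "the", "a"}


def remove_stop_words(tokens, stop_words=STOP_WORDS):
    """Drop tokens that are stop words; merged phrases such as 'on_track' survive."""
    return [tok for tok in tokens if tok not in stop_words]


# Phrase detection first: "on track" was already merged into a single token.
merged_first = remove_stop_words(["project", "is", "on_track"])

# Stop word removal first: "on" is discarded before any phrase can be detected.
filtered_first = remove_stop_words(["project", "is", "on", "track"])
```

In the first case the status phrase is preserved as `on_track`; in the second only `track` remains.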

After pre-processing, we generate summary statistics as detailed in the next section.

2.5 Summary Statistics

First, we generated statistics on the number of total entries in our data before and after time-series filtering (i.e. excluding the entries in the database that are after the project id’s cutoff timestamp in our output labels, or have a project id that is not present in our output labels.) We also report overall statistics on the number and percentage of populated comment fields. The overall percentage of populated comments both before and after filtering stays relatively the same, with only a slight increase after filtering. These statistics are shown in Table 2.1.

| Database column | # populated comments | % populated comments |
|---|---|---|
| milestones__comments | 37,260 | 6.14 |
| pe__facilities_infrastructure_comments | 252,783 | 41.69 |
| pe__financials_comments | 253,087 | 41.74 |
| pe__staffing_resourcing_comments | 256,343 | 42.27 |
| pe__client_satisfaction_comments | 255,440 | 42.12 |
| pe__service_introduction_knowledge_transition_comments | 240,398 | 39.64 |
| pe__risks_comments | 254,364 | 41.95 |
| pe__project_admin_comments | 71,701 | 11.82 |
| pe__delivery_comments | 260,089 | 42.89 |
| pe__quality_comments | 254,971 | 42.05 |
| pe__people_engagement_comments | 251,851 | 41.53 |
| ip__next_comments | 27,684 | 4.56527 |
| pe__information_security_comments | 176,721 | 29.14 |
| Total | 2,592,692 | 32.89 |

Table 2.2: Statistics (by column in our dataset) on density of raw comment data. From left to right, the columns in this table represent the column in our dataset, the number of populated comments within the respective column, and the percent of comments within that column that are populated. (Note the last row in this table corresponds to the “Raw Data” row in Table 2.1.)

2.5.1 Non-empty values

In order to see how the statistics above differed by column, we gathered the same statistics broken down by individual column for both the raw data (Table 2.2) and the time-series filtered data (Table 2.3). As in the overall statistics, the percentage of populated comments increases slightly after time-series filtering. This is the case for twelve of the thirteen columns; the exception is milestones__comments, whose percentage decreases from 6.14 to 4.59.

2.5.2 Unique values

Another metric we wanted to measure was how repetitive our data was. In our initial analyses of the data, we found that the exact text of comments was repeated in multiple entries over time. For example, this might occur if there was no update on a certain issue pertaining to the project, causing the project manager to enter the same text for that comment field. In Table 2.4, we report for each column:

| Database column | # of populated comments | % populated comments |
|---|---|---|
| milestones__comments | 10,138 | 4.59 |
| pe__facilities_infrastructure_comments | 98,170 | 44.43 |
| pe__financials_comments | 99,202 | 44.8950 |
| pe__staffing_resourcing_comments | 100,473 | 45.47 |
| pe__client_satisfaction_comments | 99,774 | 45.15 |
| pe__service_introduction_knowledge_transition_comments | 93,497 | 42.31 |
| pe__risks_comments | 99,719 | 45.13 |
| pe__project_admin_comments | 30,449 | 13.78 |
| pe__delivery_comments | 101,909 | 46.12 |
| pe__quality_comments | 99,950 | 45.23 |
| pe__people_engagement_comments | 98,709 | 44.67 |
| ip__next_comments | 14,419 | 6.53 |
| pe__information_security_comments | 66,753 | 30.21 |
| Total | 1,013,162 | 35.27 |

Table 2.3: Statistics (by column in our dataset) on density of time-series filtered comment data. From left to right, the columns in this table represent the column in our dataset, the number of populated comments within the respective column (after filtering), and the percent of comments within that column that are populated (after filtering). (Note the last row in this table corresponds to the “Filtered Data” row in Table 2.1.)

1. the number of unique comments

2. the number of populated comments

3. the percent ratio of unique comments over the number of populated comment cells

4. the percent ratio of unique comments over all comment cells (i.e. including null-valued cells)

Overall, our dataset’s ratio of unique comments to all populated comments is roughly 26 percent, which means that in general, we have a large amount of repeated data (on average, we can expect each distinct comment to appear about four times within its column). However, some columns have a more diverse set of populated values, with the highest of these being milestones__comments (whose percentage over all cells is dragged down by the large number of null values in the column) and pe__delivery_comments. By contrast, we can expect to find a comment in the pe__project_admin_comments column repeated about ten times.
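The four per-column quantities can be computed as in the following sketch, which assumes that null values have already been replaced by empty strings during pre-processing. The function name `uniqueness_stats` and the toy column are hypothetical.

```python
def uniqueness_stats(cells):
    """Compute uniqueness statistics for one comment column.

    `cells` is a list of strings in which the empty string marks a null value.
    """
    populated = [c for c in cells if c]
    n_unique = len(set(populated))
    n_populated = len(populated)
    return {
        "n_unique": n_unique,
        "n_populated": n_populated,
        # Percent of unique comments among populated cells.
        "pct_unique_populated": 100.0 * n_unique / n_populated if n_populated else 0.0,
        # Percent of unique comments among all cells, including nulls.
        "pct_unique_all": 100.0 * n_unique / len(cells) if cells else 0.0,
    }


# Toy column: "good" is repeated three times and two cells are empty.
stats = uniqueness_stats(["good", "good", "", "on track", "good", ""])
```

The reciprocal of the populated-cell ratio gives the average number of repetitions per distinct comment: for the "Total" row of Table 2.4, 1,013,162 / 263,332 is approximately 3.85, which is the basis for the "about four times" figure above.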

2.5.3 Most common values per column

We also wanted to see the most common values per column for comments in order to get an idea of how many times comments might be repeated. We did this after tokenization in order to make sure that variations in whitespace and punctuation did not interfere with the results. We found that some columns had most frequent values that were unsurprising, such as "good." However, other columns had long, very specific comments as their most commonly occurring entries, such as "indonesia is asking for additional time to extract data for image migration and the timelines shall be beyond the current contract period and the team members availability to be secured mitigation client team is engaging the client execution is moved as a cr under equinox contract with no risk to this contract closure in delta loading from [DATE] till date to be taken up as a cr mitigation client team is engaging the client execution planned to be moved as a cr under equinox contract with no risk to this contract closure," which occurred several times in

Database column                                          # unique    # populated   % unique             % unique
                                                         comments    comments      (populated cells)    (all cells)
milestones__comments                                        8,916       10,138     87.95                 4.04
pe__facilities_infrastructure_comments                     12,073       98,170     12.30                 5.46
pe__financials_comments                                    16,381       99,202     16.51                 7.41
pe__staffing_resourcing_comments                           36,640      100,473     36.47                16.58
pe__client_satisfaction_comments                           19,037       99,774     19.08                 8.62
pe__service_introduction_knowledge_transition_comments     16,385       93,497     17.52                 7.42
pe__risks_comments                                         28,027       99,719     28.11                12.68
pe__project_admin_comments                                  2,880       30,449      9.46                 1.30
pe__delivery_comments                                      69,644      101,909     68.34                31.52
pe__quality_comments                                       26,352       99,950     26.37                11.93
pe__people_engagement_comments                             16,669       98,709     16.89                 7.54
ip__next_comments                                           4,170       14,419     28.92                 1.88
pe__information_security_comments                           6,158       66,753      9.23                 2.79
Total                                                     263,332    1,013,162     25.99                 9.17

Table 2.4: Statistics (by column) on uniqueness of time-series filtered comment data. From left to right, columns represent the column in our dataset, the number of unique comments in each column, the number of populated comments in each column, the percentage of unique comments among those comment cells that are populated, and the percentage of unique comments among all comment cells.

Figure 2-1: Histogram showing total number of comments per project. The mean number of comments per project (represented by the red dotted line) is approximately 570.15.

pe__risks_comments. Several other columns had similar comments as their most frequent value. While we could show more detailed statistics here, these examples already indicate that our data has many instances of project managers copying and pasting the same text for a comment field when, presumably, no change in status has occurred relevant to that column.
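The counting procedure described above can be sketched as follows. This is a minimal illustration, not the code used for the thesis: the `normalize` function is an assumed stand-in for the tokenization step (lowercasing and keeping only alphanumeric tokens), and the function names are hypothetical.

```python
import re
from collections import Counter


def normalize(comment):
    """Assumed tokenization: lowercase, keep alphanumeric tokens, rejoin with spaces."""
    return " ".join(re.findall(r"[a-z0-9]+", comment.lower()))


def most_common_comments(comments, top=3):
    """Count normalized comments in one column, skipping null or empty cells."""
    normalized = (normalize(c) for c in comments if c)
    counts = Counter(n for n in normalized if n)
    return counts.most_common(top)


# Toy column: three spellings of "good" collapse to one entry after normalization.
toy_column = ["Good.", "good", "  GOOD ", "On track", None, ""]
top_values = most_common_comments(toy_column, top=1)
```

Normalizing before counting is what lets variants that differ only in whitespace, case, or punctuation be treated as the same comment.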

2.5.4

Total comments per project

We also analyze how many comments (in total) we have per project. Figure 2-1 is a histogram showing the distribution of comments per project for all 1777 projects. We find that the mean number of comments per project is 570.15, the median is 467, and the standard deviation is 379.02.

Finally, we wanted to see the distribution of the length of comments for each column. Most distributions were similar, with shorter comments being the most common and longer comments being less frequent. Figure 2-2 shows two examples of histograms of the number of words per comment, for the pe__delivery_comments column (mean: 78.705, median: 52.0, standard deviation: 77.633) and the pe__financials_comments column (mean: 22.049, median: 10.0, standard deviation: 33.816).

Figure 2-2: Histograms showing comment lengths for "delivery" and "financials" columns. The means for each histogram (represented by the red dotted lines) are 78.705 and 22.049.

2.6

Experiments

In this section, we will detail our modeling process and the results of our experiments.

2.6.1

Word vector representation

In order to represent the text data in a feature vector format, we first use clustered word vectors.

We have several choices regarding our source of word vectors: we can train our own word vectors or we can use pre-trained word vectors. Training our own word vectors might be useful given the specialized vocabulary likely present in our data; on the other hand, training useful word vectors often requires a large amount of data.

Furthermore, we can also use different word vectorization algorithms. One popular word vectorization algorithm is GloVe.[10] During modeling, there are various hyper-parameters to set, such as dimensionality, the minimum number of times a word needs to occur in order to be included in the model, learning rate, window size, and number of training epochs. For training with GloVe, we chose a dimensionality of 100, a minimum word count of 5, a learning rate of 0.05, a window size of 3, and 10 training epochs.

2.6.2

Clustering word vectors

After obtaining word vector representations either through our own training or pre-trained word vectors, we cluster word vectors using k-means clustering.

We run the sklearn MiniBatchKMeans algorithm with the default values (k =8, running the algorithm ten times with randomly chosen centroid seeds and using the best performing set of centroids, with a maximum of 100 iterations each time).

We apply this clustering to different sets of word vectors, including the vectors we trained on our own data and pre-trained GloVe vectors.

The pre-trained GloVe vectors have a vocabulary of 400,001 words. However, before clustering, we filter for only the words that appear in our data. We do this out of concern that such a large vocabulary taken from corpora regarding completely different topics than our own will create entire clusters composed mostly or even entirely of words that do not appear in our dataset at all.

When training our own vectors, the resulting word-to-cluster hashmap had 86,879 entries, corresponding to the number of unique words and multi-word phrases that appeared in our data during the training process.
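As an illustration of the clustering step, the following minimal sketch builds a word-to-cluster hashmap from a dictionary of word vectors. The toy vectors are random placeholders rather than GloVe output, the function name is hypothetical, and the parameters (k = 8, ten initializations, at most 100 iterations) are set explicitly to match the values stated above rather than relying on library defaults, which can differ between scikit-learn versions.

```python
import numpy as np
from sklearn.cluster import MiniBatchKMeans


def cluster_word_vectors(word_vectors, k=8, seed=0):
    """Return a dict mapping each word to the id of its k-means cluster."""
    words = sorted(word_vectors)
    matrix = np.vstack([word_vectors[w] for w in words])
    kmeans = MiniBatchKMeans(n_clusters=k, n_init=10, max_iter=100, random_state=seed)
    labels = kmeans.fit_predict(matrix)
    return {word: int(label) for word, label in zip(words, labels)}


# Placeholder vocabulary: 200 random 100-dimensional vectors (not real word vectors).
rng = np.random.default_rng(0)
toy_vectors = {f"word_{i}": rng.normal(size=100) for i in range(200)}
word_to_cluster = cluster_word_vectors(toy_vectors)
```

For the pre-trained vectors, the filtering described above would amount to restricting `word_vectors` to words that occur in the dataset before calling the function.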

Clustering example words

Self-trained (GloVe):

∙ Cluster 0: hostname, advertisement, debit_card, excel_file, dependiencies (note misspelling), environmental_dependencies, unresolved_dependencies, interdependencies

∙ Cluster 5: solvency, annual_feedbacks, sucessfully_upgraded, loosing_revenue, shopping_cart, christmas_shutdown

Pretrained GloVe:

∙ Cluster 1: ’appropriation’, ’designing’, ’affiliates’, ’affiliated’, ’music’, ’yahoo’, ’travel’, ’vouchers’, ’estimate’, ’ministries’, ’service’, ’regulator’, ’tech’, ’irs’, ’employ’, ’corporate’, ’municipal’, ’dues’, ’chain’, ’recipients’, ’drilling’, ’receipt’, ’sponsor’, ’internet’

∙ Cluster 2: ’feasibility’, ’sustaining’, ’deferred’, ’inevitable’, ’lifeline’, ’fix’, ’stabilised’, ’encourage’, ’rewards’, ’positively’, ’shipments’, ’committing’, ’diminishing’, ’altogether’, ’extend’, ’extent’, ’eradicate’, ’workforce’, ’safeguard’, ’complications’, ’paperwork’, ’outage’, ’oversight’, ’defaults’, ’hindered’, ’impacted’, ’approving’

∙ Cluster 6: ’ruchi’, ’torgerson’, ’aren’, ’matthias’, ’mittal’, ’thornburg’, ’wilshire’, ’sasikiran’, ’lacan’, ’tripple’, ’solon’, ’romesh’, ’manoj’, ’vittal’, ’raghavendra’, ’venkata’, ’tapp’, ’jal’, ’jah’, ’jai’, ’jad’, ’jab’, ’abba’, ’ananda’, ’haris’, ’alessandra’, ’tsb’, ’chakrabarty’, ’allergan’, ’cvs’, ’eaton’, ’pauls’, ’gibbs’, ’paula’, ’kuehne’, ’karani’, ’pham’, ’wef’, ’wee’, ’tico’, ’emma’, ’schmitt’

2.6.3

Feature matrix generation

After words in our vocabulary have been assigned to clusters, we then generate feature matrices as follows:

1. Filter data by date: For each project’s cutoff timestamp, take all free-text data occurring before the cutoff. This will give us a list of comments in various columns.

2. Generate feature vector for each column: For each column (i.e. each type of comments), we generate a feature vector by taking the bag-of-words (BoW), counting the number of words present in each cluster, and projecting this vector onto the probability simplex (i.e. finding the percentage of words present in each cluster). Any word in our data that is not present in our word vectors is ignored. If we have k clusters, we will thus generate a length-k vector for each comment field. In the event that all comments for a given column are empty (or none of their words are present in our word vectors), we use a length-k vector with numpy NaN entries that will be imputed during the training of our model.

3. Concatenate vectors: We concatenate these vectors together into a feature matrix for the project.
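The three steps above can be sketched as follows. This is a minimal illustration under stated assumptions: comments are assumed to be already filtered to those before the project's cutoff timestamp (step 1), tokenization is assumed to be whitespace splitting, and the function names and toy vocabulary are hypothetical.

```python
import numpy as np


def column_feature_vector(comments, word_to_cluster, k):
    """Fraction of known words falling in each of the k clusters (NaN if none)."""
    counts = np.zeros(k)
    for comment in comments:
        for token in comment.split():
            cluster = word_to_cluster.get(token)
            if cluster is not None:  # words without a vector are ignored
                counts[cluster] += 1
    total = counts.sum()
    if total == 0:
        return np.full(k, np.nan)  # left for the imputer in the modeling pipeline
    return counts / total


def project_feature_vector(comments_by_column, column_order, word_to_cluster, k):
    """Concatenate the per-column vectors in a fixed column order."""
    parts = [
        column_feature_vector(comments_by_column.get(col, []), word_to_cluster, k)
        for col in column_order
    ]
    return np.concatenate(parts)


toy_clusters = {"delay": 0, "risk": 0, "good": 1, "budget": 2}
toy_comments = {
    "pe__risks_comments": ["risk of delay", "good"],
    "pe__financials_comments": [],
}
columns = ["pe__risks_comments", "pe__financials_comments"]
features = project_feature_vector(toy_comments, columns, toy_clusters, k=3)
```

In the toy example the first column yields the proportions (2/3, 1/3, 0), since "of" has no cluster and is skipped, and the empty second column yields three NaN entries.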

2.6.4

Predictive modeling

Finally, we use our feature matrices as input data for various sklearn predictive models.

            Self-trained GloVe   Pretrained GloVe
Precision   0.1101               0.1048
Recall      0.9231               0.5641
F1 score    0.1967               0.1767

Table 2.5: Precision, recall, and F1 score for models using feature vectors derived from different types of word vectors

Our current sklearn pipeline includes an sklearn StandardScaler, which scales each feature independently, followed by an Imputer, which imputes the missing feature vectors described above with the mean value along each column. Finally, we can apply the model of our choice. Currently, we are using sklearn’s RandomForestClassifier with 400 estimators.
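A pipeline of this shape might look like the sketch below. It is an approximation, not the thesis code: recent scikit-learn releases no longer ship the `Imputer` class, so `SimpleImputer` with a mean strategy is used here as its closest equivalent, and the data is random placeholder input with some entries set to NaN.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

pipeline = Pipeline([
    ("scale", StandardScaler()),                  # scales each feature independently
    ("impute", SimpleImputer(strategy="mean")),   # fills NaN with the column mean
    ("forest", RandomForestClassifier(n_estimators=400, random_state=0)),
])

# Placeholder data: 60 projects, 16 features, roughly 10% missing entries.
rng = np.random.default_rng(0)
X = rng.random((60, 16))
X[rng.random(X.shape) < 0.1] = np.nan
y = np.array([0, 1] * 30)

pipeline.fit(X, y)
predictions = pipeline.predict(X)
```

Keeping the scaler and imputer inside the pipeline means the column means are learned from the training data only and then reused when predicting on new projects.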

As we can see from Table 2.5, there is little difference in precision and F1 score between models that use vectors trained on our own data and those that use pre-trained vectors. In both types of models, recall is much higher than precision; however, the model that used self-trained GloVe vectors significantly outperformed the one that used pre-trained GloVe vectors on recall. Thus, while more experiments using different sets of pre-trained word vectors should be run, we should probably prefer word vectors trained on our own data. This makes sense, given that software management systems often use specialized terminology whose semantics we would not expect word vectors trained on general English language corpora to reflect.

Furthermore, the fact that our model using the self-trained vectors has a high recall (0.9231) means that it is currently very sensitive to detecting projects with a dangerous status, although of the projects it flags, only a small proportion (around eleven percent) actually turned out to have such a status at their cutoff date. Ideally, we would like to keep this high recall and boost our precision in order to have fewer "false alarms" for users (which can waste users’ resources by causing them to devote extra attention to projects that are not actually at risk).

Chapter 3

Causal Modeling Approach

In this chapter, we describe our Python package CEModels, which allows users to create different types of causal models using input data. The chapter contains a description of the mathematical and theoretical concepts represented by our models, including a description of the three types of causal models we implemented and tested on our dataset. Chapter 4 gives a more detailed description of the specific architecture of our implementation.

First, we will present an introduction to causal inference and a survey of past and current research. Then, we will give an overview of our three types of models.

3.1

Brief introduction to causal inference

Causal inference is a technique for determining the causal effect of a given treatment. Causal inference is currently used extensively in statistics and related fields, particularly those in which it is important to understand whether a given variable has a causal effect on an outcome rather than simply being correlated with the outcome. In this way, causal modeling differs from other statistical and machine learning methods, which merely attempt to model correlational (rather than causal) effects.

However, measuring causation is usually not straightforward: under most conditions, correlation does not imply causation. Only under a completely randomized experiment, given a large enough sample size, does correlation actually imply causation (and even then, this is only an estimate within certain confidence ranges). Usually such a randomized experiment is unrealistic or impossible (such as when we have observational data), and so various causal inference techniques (described below) attempt to emulate the conditions of different types of randomized experiments for observational data and thus link correlation in the (adjusted) model to causation.

In general, predictive machine learning models (the vast majority of models used today) measure correlation between given input values and outcomes. However, these models have limitations, as correlations cannot directly help in decision making. On the other hand, estimation of the causal effect of a given action can allow decisions to be made that optimize for our preferred outcomes.

3.1.1

Necessary conditions for causal inference

There are three conditions necessary for measuring causality (sometimes called "identifiability conditions").[37] For observational data, these are the following:

1. Consistency: the interventions (or "treatments") must be well-defined

2. Exchangeability: "the conditional probability of receiving every value of treatment...depends only on the measured covariates"

3. Positivity: "the conditional probability of receiving every value of treatment is greater than zero, i.e. positive"[37]

Consistency is satisfied by ensuring that the treatments that we propose are well-defined and measurable (i.e. that there is no ambiguity as to whether a given participant in our data received the treatment or not). Complications arise when treatments are not binary, especially when they take on a range of continuous values. Ways for dealing with this are described elsewhere.

In order to ensure exchangeability, one must adjust for confounding factors, selection biases, and measurement errors. This is generally the most difficult condition to satisfy, as there is no guarantee that there are not unmeasured covariates that affect exchangeability. (In other words, there may be factors that are unmeasured or unaccounted for that render the treated and untreated populations nonexchangeable, thus breaking the assumption that correlation and causation are equivalent after adjusting for differences in measured covariates.)

Regarding positivity, while it is generally not possible to guarantee that a given set of observational data satisfies this condition without looking at the values, collecting a large enough set of data and making sure that the values (or ranges of values) of the measured covariates each occur with a large enough frequency usually takes care of this condition.

3.1.2

Treatment effects

The usual output of a causal model is called a treatment effect. Two main types of treatment effects exist: average treatment effect (ATE) and individual treatment effect (ITE).

Average treatment effect (ATE)

The ATE is an approximation of the average (or overall) effect of the designated treatment on the outcome, accounting for (or making assumptions about the absence of) confounding factors and sampling biases in the data. For example, suppose one was trying to see if a certain type of fertilizer increased plant growth. One might have a data set with a set of covariates x (including, for example, the species of the plant and the amount of water it receives daily), a binary treatment t (whether or not the new fertilizer was added to the soil), and an outcome variable y (the height of the plant in centimeters three months after planting). A model’s estimation of an ATE of 5.5 would indicate that using the fertilizer would be expected to increase plant growth by 5.5 centimeters. Conversely, estimating an ATE of −5.5 would indicate that using the fertilizer would be expected to decrease plant growth by 5.5 centimeters. (That is, according to the model, the fertilizer has a negative effect on plant growth.)

Individual treatment effect (ITE)

The ITE is an approximation of the effect of the designated treatment on the outcome for an individual data point, given the covariate values x of that data point. ITE values can be calculated both for data points that exist in the training set and for new or hypothetical data points for which we do not yet know the outcome. For existing data points, the ITE estimates the causal effect that the treatment had on the outcome (though other factors could have also influenced the outcome). For a new data point, the ITE estimates what causal effect the treatment would have on the yet-to-be-determined outcome. (While, as before, other factors could also influence the outcome, taking action towards the preferred outcome using information from the ITE should improve the chances that the preferred outcome will occur.)

ITE values are numerically interpreted similarly to ATE values (e.g. an ITE value of 0.1 means an expected increase in the outcome of 0.1), except that the ITE is estimated for the specific data point. ITE is generally much more difficult to estimate reliably, given that a data set might have few or no points with similar covariate values. For example, if we only have a few plants of species q that receive full sunlight in our hypothetical data set described above, and we wanted to know whether we should use fertilizer on a newly planted seed of species q, any estimate of ITE from the existing data would be unreliable due to such a small sample size. However, if we had a larger number of data points with similar covariate values, we could make a more reliable ITE estimation (assuming we were measuring all relevant covariates) and make a decision on whether we should use the fertilizer.

3.1.3

Current causal inference techniques

Causal inference techniques for observational data vary depending on the complexity of the data at hand, the types of covariates measured (binary, categorical, continuous, discrete, time-varying, etc.), and the outcome that is being measured. The subsections below summarize the types of causal inference techniques in use in statistical analysis today. For further reading, consult Hernán and Robins’s textbook on causal inference[37] or Pearl’s "Causal inference in statistics: An overview".[4]

3.1.4

Propensity scores

Propensity scores are a metric of a given data point’s propensity to receive a designated treatment. They are defined as follows. Suppose we have $n$ data points, each with a vector of covariates $x$ and an outcome $y$. If $t_i$ is an indicator variable representing whether a data point $(x_i, y_i)$ received a given treatment, then the propensity score $p_i$ is equal to $P(t_i = 1 \mid x_i)$. Since propensity scores are probabilities, they are represented as numbers between 0 and 1.

In order to calculate propensity scores from a set of data, the usual procedure is as follows:

1. Define a treatment and determine which data points received it (i.e. divide the data into an untreated class, where $t_i = 0$, and a treated class, where $t_i = 1$).

2. Use a logistic regression (or similar method) to model propensity score values. The propensity score for each data point is equal to its respective probability estimate for the treatment class.

3. Estimate the average treatment effect by weighting data points by inverse propensity scores, as follows: $\widehat{ATE} = \frac{1}{n}\left(\sum_{i \mid t_i = 1} \frac{y_i}{p_i} - \sum_{i \mid t_i = 0} \frac{y_i}{1 - p_i}\right)$ [39][37]

One can then calculate the average treatment effect (ATE) of a given treatment, using the propensity scores to weight data points. Since treated data points with a lower propensity score are less likely to receive the treatment due to their covariate values, we weight them more heavily in order to simulate a randomized controlled trial.
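The procedure above can be sketched in a few lines. This is an illustrative sketch, not the CEModels implementation: the function names are hypothetical, scikit-learn's `LogisticRegression` is used for step 2, and the toy arrays at the end are chosen so that the weighted estimate can be checked by hand.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression


def estimate_propensity(X, t):
    """Step 2: fit a logistic regression and return P(t_i = 1 | x_i) per point."""
    model = LogisticRegression(max_iter=1000)
    model.fit(X, t)
    return model.predict_proba(X)[:, 1]  # column 1 is the treated class (t = 1)


def ipw_ate(y, t, p):
    """Step 3: inverse-propensity-weighted ATE estimate."""
    y, t, p = (np.asarray(a, dtype=float) for a in (y, t, p))
    treated = t == 1
    treated_sum = np.sum(y[treated] / p[treated])
    untreated_sum = np.sum(y[~treated] / (1.0 - p[~treated]))
    return (treated_sum - untreated_sum) / len(y)


# Hand-checkable case: (2/0.5 + 4/0.5 - 1/0.5 - 3/0.5) / 4 = (12 - 8) / 4
toy_ate = ipw_ate(y=[2, 4, 1, 3], t=[1, 1, 0, 0], p=[0.5, 0.5, 0.5, 0.5])

# Synthetic data where treatment assignment depends on the first covariate.
rng = np.random.default_rng(0)
X = rng.normal(size=(500, 2))
t = (rng.random(500) < 1.0 / (1.0 + np.exp(-X[:, 0]))).astype(int)
y = 2.0 * t + X[:, 0] + rng.normal(scale=0.1, size=500)
p = estimate_propensity(X, t)
synthetic_ate = ipw_ate(y, t, p)
```

Because the estimator divides by $p_i$ and $1 - p_i$, propensity scores very close to 0 or 1 can dominate the sums, which is one practical reason the positivity condition matters.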

3.1.5

Matching

However, propensity scores have some limitations, one of which is that, by themselves, they can only estimate the average treatment effect over a population. If one wants to estimate the individual treatment effect (ITE) of a single data point, one needs to employ other techniques. One technique that can be used in conjunction with propensity scores is matching. This involves finding similar data points with opposite treatment values and comparing their outcomes. In this way, one can estimate the treatment effect on this subset of the data in order to predict the treatment effect for a given data point. There are many algorithms for matching, including matching variations using 1-nearest neighbors and propensity scores. For an overview of algorithms for matching on propensity score, see [9].

3.1.6

Causal models in machine learning

In recent years, as fields such as biostatistics have influenced machine learning research, there has been a great increase in attempts to apply machine learning techniques to causal inference. New algorithms for causal inference now include machine learning methods, widely used for predictive models and retooled for causal models, such as random forests[31][22], regression trees[13][6], Gaussian processes[3][7][21], LASSO[23][11][14], representation learning[15][30], and deep learning.[19][15][17][26][16][27]

In addition, since one of the most difficult parts of causal inference involves knowing the ways in which covariates interact with one another (which even domain experts might be unable to do, such as if they are researching health outcomes among patients using an experimental drug), methods for learning the causal structure of a set of data with limited or no prior domain knowledge have also arisen. Suppes-Bayes Causal Networks, which attempt to learn the causal structure of Bayesian networks when domain knowledge is lacking, are one such method.[29][18][25][24] (While learning the structure of Bayesian networks is generally an NP-hard problem[2], certain constraints apply in Suppes-Bayes Causal Networks that reduce the complexity.)

Notation           Explanation
i                  index of point in dataset
N                  number of points in dataset
x                  vector of covariates (input data) in dataset
t                  binary treatment value in dataset
y                  labels (outcome label) in dataset
(x_i, t_i, y_i)    a single data point, with input covariates and outcome label

Figure 3-1: Notation for mathematical expressions in Chapter 3

3.1.7

Current causal inference software

Current software for creating causal models from data includes Causal Inference in Python (or Causalinference), which allows users to estimate treatment effects via propensity scores, matching, and a few regression methods (such as ordinary least squares and regression within blocks, after the data has been stratified).[34]

There are also many existing repositories that are implementations of algorithms in recently published papers. Causal Bayesian NetworkX[12] is a Python package for representing a modified version of Bayesian networks that represent causal processes. cfrnet[35] is written in Python and uses TensorFlow in order to implement the deep representation learning algorithms described in [15] and [30]. grf: generalized random forests contains implementations in C++ and R of the algorithms found in [22].

3.2

Overview of models in CEModels

We will now present an overview of the models represented in CEModels. Each model, after training, can estimate the causal effects of a given treatment on the outcome. The models described in the sections below estimate both the ATE and ITE using various machine learning and statistical procedures.

Below is a summary of the three models:

∙ The Nearest Neighbor Model is a model that takes all user-designated covariates and performs matching using a nearest neighbor algorithm.

∙ The Simple Propensity Model is a model that takes all user-designated covariates and performs a logistic regression in order to estimate propensity scores. It uses the propensity scores it calculates in order to estimate treatment effects.

∙ Finally, the Rubin-Rosenbaum Model is a more complex type of propensity score model that attempts to find the optimal model on propensity scores through a procedure involving forward selection of both first- and second-order covariates. This allows for a distribution with greater overlap between the treated and untreated groups among the selected covariates, allowing for more accurate estimations.

For all of the models we will present an example using a small sample data set (using Boston housing value data) and an interpretation of the results given by the model. This dataset consists of 506 entries concerning housing districts in the greater Boston area, including information such as levels of nitric oxides, per capita crime rate, property tax rate, and median home value. See Appendix B for more information.

In the sections below, mathematical notation follows the conventions described in Figure 3-1. Notation specific to the models themselves is explained in the respective sections.

3.3

Nearest Neighbor Model

Our first model uses nearest neighbor matching on all covariates indicated by the user. The nearest neighbor algorithm is a procedure that finds the distance (according to a specified distance metric) between each point and every other point in a data set, selecting the n points with the smallest distance from a point as its nearest neighbors. Our model uses a Euclidean distance metric by default, though a user can specify any other distance metric from scikit-learn or scipy.spatial.distance.

In our model, we use a modified nearest neighbor search, in which each point is matched with points that have similar covariate values but opposite treatment values in order to estimate outcomes for different treatment choices. The user can also indicate the number n of neighbors to be matched. Increasing the number of neighbors for matching will generally decrease the variance of the treatment effect estimation, with a tradeoff of increasing the expected bias, since more distant (less similar) points are included in each match.

Estimating Individual Treatment Effect

In order to estimate individual treatment effect with nearest neighbor matching, given a data point, we find the nearest n neighbors in each of the treated and untreated groups. (If the data point is already included in the training data, we include it as one of the nearest neighbors in its respective group.) If T is the set of nearest neighbors in the treated group and U is the set of nearest neighbors in the untreated group, then the individual treatment effect (ITE) for the new data point is estimated as follows:

$$\widehat{ITE}_j = \frac{1}{n}\sum_{i \in T} y_i - \frac{1}{n}\sum_{i \in U} y_i$$

In other words, the approximation of the ITE is equal to doing the following:

1. Sum the outcome values for the nearest neighbors in the treated group

2. Subtract the sum of outcome values for the nearest neighbors in the untreated group

3. Divide the resulting difference by n, the number of neighbors we are finding for each point
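A minimal sketch of this estimate is shown below. It is not the CEModels API: the class and method names are hypothetical, scikit-learn's `NearestNeighbors` performs the neighbor search, and an `ate` helper simply averages the per-point ITE estimates over a set of points. Querying a point that is already in the training data returns that point (at distance zero) among the neighbors of its own group, as described above.

```python
import numpy as np
from sklearn.neighbors import NearestNeighbors


class NearestNeighborMatcher:
    """Hypothetical helper: ITE via n nearest treated and untreated neighbors."""

    def __init__(self, n_neighbors=10, metric="euclidean"):
        self.n_neighbors = n_neighbors
        self.metric = metric

    def fit(self, X, t, y):
        X, t, y = np.asarray(X, dtype=float), np.asarray(t), np.asarray(y, dtype=float)
        self._y_treated, self._y_untreated = y[t == 1], y[t == 0]
        make_index = lambda pts: NearestNeighbors(
            n_neighbors=self.n_neighbors, metric=self.metric
        ).fit(pts)
        self._treated_index = make_index(X[t == 1])
        self._untreated_index = make_index(X[t == 0])
        return self

    def ite(self, x):
        x = np.asarray(x, dtype=float).reshape(1, -1)
        _, idx_t = self._treated_index.kneighbors(x)
        _, idx_u = self._untreated_index.kneighbors(x)
        # Mean over n treated neighbors minus mean over n untreated neighbors.
        return float(self._y_treated[idx_t[0]].mean() - self._y_untreated[idx_u[0]].mean())

    def ate(self, X):
        return float(np.mean([self.ite(x) for x in np.asarray(X, dtype=float)]))


# Toy data: one covariate, two treated and two untreated points.
X_toy = [[0.0], [1.0], [2.0], [3.0]]
t_toy = [1, 0, 1, 0]
y_toy = [5.0, 1.0, 7.0, 3.0]
matcher = NearestNeighborMatcher(n_neighbors=2).fit(X_toy, t_toy, y_toy)
toy_ite = matcher.ite([0.9])
toy_ate = matcher.ate(X_toy)
```

With n = 2 every query uses both points of each group, so the treated mean is 6, the untreated mean is 2, and both estimates equal 4.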

Estimating Average Treatment Effect

To estimate the ATE of a given treatment using nearest neighbor modeling, we calculate the ITE for each point in the data set (as described above). Then we sum these values over all points in the data set and divide by the total number of points in order to estimate the ATE.

$$\widehat{ATE} = \frac{1}{N}\sum_{i} \widehat{ITE}_i$$ [39]

Example

Using the Boston housing data from the Carnegie Mellon University StatLib Datasets Archive (see Appendix B), we attempt to find the ATE of having high nitric oxide concentrations on per capita crime rate.

We designate the treatment as a binary variable indicating whether the nitric oxide concentration is above the 75th percentile, which we add to our dataframe under a new column heading. We designate the outcome to be the per capita (per 100,000 residents) crime rate. (The original source of this data, listed in Appendix B, does not specify what types of crimes are included, only that the values come from 1970 FBI statistics.) Similarly, we designate the covariates as all other columns that are not the treatment (or binarization thereof) or outcome. Using 10 for the number of neighbors, after training the model, we call the ATE estimation method and get 8.2665. This is plausible, since the mean crime rate is 3.6135, with a maximum value of 88.9762 and a minimum value of 0.00632. This means that according to the model, having nitric oxide concentrations above the 75th percentile causes the crime rate to increase by 8.2665.

We can similarly estimate the ITE by inputting covariate values for a new data point. Inputting the mean value of each covariate column as our input data point, using n = 10 for our number of neighbors, we get an estimated ITE of 9.3257 for this hypothetical district. This means that for a district with these covariate values, we would expect having nitric oxide concentration above the 75th percentile to increase the per capita crime rate by 9.3257. Interestingly, this is higher than the ATE calculated above, which estimates the treatment effect of nitric oxides on crime rate in general. This is probably because districts with a high concentration of nitric oxides tend to have other covariate values that deviate from the mean, which make it more likely that these districts will receive the "treatment" of higher nitric oxide concentrations (for example, they might have a higher proportion of industry in the area). Thus, for these districts, the model estimates the treatment effect of the nitric oxide concentration itself to be lower because the higher proportion of industry also has some effect on the outcome.

3.4

Simple Propensity Model

Our next model is a calculation of propensity scores using all covariates indicated by the user. As described in Section 3.1.4, the propensity score is a numerical value between 0 and 1 indicating the likelihood of a given data point to receive the designated treatment, or $p(t_i = 1 \mid x_i)$. In order to first estimate the propensity score, a logistic regression is performed on the data, using the covariates as inputs and the binary treatment values as outputs. The propensity score for each data point (at index $i$) is then equal to the logistic regression’s probability estimate for the $t_i = 1$ category.

Estimating Average Treatment Effect with the Simple Propensity Model

As described in Section 3.1.4, estimating the ATE using propensity scores is straightforward, and can be calculated as follows:

$$\widehat{ATE} = \frac{1}{N}\left(\sum_{i \mid t_i = 1} \frac{y_i}{p_i} - \sum_{i \mid t_i = 0} \frac{y_i}{1 - p_i}\right),$$

where $p_i$ is the propensity score. [39]

Intuitively, the approximation of the ATE using propensity scores is equal to doing the following:

1. For each point in the treated group ($t_i = 1$), divide the outcome label ($y_i$) by the propensity score ($p_i$). Sum the resulting values. Dividing by the propensity score has the effect of more heavily weighting data points that are less likely to receive the treatment, but nonetheless do, in our approximation. (In other words, this is correcting for confounding factors that make receiving treatment more or less likely.)

2. For each point in the untreated group ($t_i = 0$), divide the outcome label ($y_i$) by the conditional probability on the covariates that the treatment value is 0 ($p(t_i = 0 \mid x_i) = 1 - p_i$). Sum the resulting values.

3. Subtract the sum calculated in step 2 from the sum calculated in step 1.

4. Divide the resulting difference by N, the number of data points in the data set.

Estimating Individual Treatment Effect with the Simple Propensity Model

Because propensity scores cannot be used on their own to estimate individual treatment effect (only average treatment effect), we need to use them in combination with other methods to estimate ITE. One common strategy is to use matching on the propensity scores (there are many algorithms in use for doing so; see [9]). For simplicity, we find the closest propensity scores within a designated radius (user selected, default 0.05) in order to estimate individual treatment effect.
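One way such radius matching could look is sketched below. The details are assumptions rather than the package's actual behavior: this version uses every training point whose propensity score lies within the radius of the query score, takes the difference of mean outcomes between the treated and untreated matches, and returns NaN when either group has no match. The function name is hypothetical.

```python
import numpy as np


def radius_matched_ite(p_new, p, t, y, radius=0.05):
    """ITE estimate from points whose propensity score is within `radius` of p_new."""
    p, t, y = np.asarray(p, dtype=float), np.asarray(t), np.asarray(y, dtype=float)
    close = np.abs(p - p_new) <= radius
    treated = close & (t == 1)
    untreated = close & (t == 0)
    if not treated.any() or not untreated.any():
        return float("nan")  # no comparable points in one of the groups
    return float(y[treated].mean() - y[untreated].mean())


# Toy propensity scores, treatments, and outcomes.
p_toy = [0.30, 0.32, 0.34, 0.80]
t_toy = [1, 0, 1, 0]
y_toy = [10.0, 4.0, 12.0, 0.0]
matched_ite = radius_matched_ite(0.31, p_toy, t_toy, y_toy)    # uses the first three points
unmatched_ite = radius_matched_ite(0.80, p_toy, t_toy, y_toy)  # no treated point nearby
```

In the toy data the query score 0.31 matches two treated points (mean outcome 11) and one untreated point (outcome 4), giving an estimate of 7. A smaller radius gives closer matches but makes empty groups more likely.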

Comparison of matching propensity scores with matching in Nearest Neighbor Model

Compared to the Nearest Neighbor Model (described above in 3.3), matching on propensity scores has advantages and disadvantages. On one hand, propensity scores take into account the expected probability that a given data point will receive the treatment, which will give less weight to covariates that might be less important (as opposed to simple nearest neighbor matching, which does not take into account the relative importance of different covariates). On the other hand, representing each data point by a single numerical value involves a loss of information that does not occur in simple nearest neighbor matching, possibly decreasing the accuracy of the modeling.

Example

Using the same data, covariates, treatment, and outcome as in the previous sec-tion, we train a Simple Propensity Model. We call the ATE estimation method and get −1.2846, which is very different the result calculated above using the Nearest Neighbor Model. So according to the Simple Propensity model, having nitric oxide concentrations above the 75th percentile causes the per capita crime rate to decrease by −1.2846. One reason for this result might be that unlike the nearest neighbor model, this model takes into account the likelihood (based on other covariate val-ues) that a given district will have high nitric oxide concentrations. This model seems to have concluded, in general, other factors that high nitric oxide districts tend to have (which increase their likelihood of having high nitric oxide) are the driving force behind high crime rate, not the nitric oxide levels themselves.

We can similarly estimate the ITE by inputting covariate values for a new data point. Using the mean value of each covariate column as our input data point, as in the previous section, we get an estimated ITE of 2.9253. This means that for a district with these covariate values, we would expect having a nitric oxide concentration above the 75th percentile to increase the per capita crime rate by 2.9253. We note that this treatment effect is positive, which means that the model believes that for a data point with these covariate values, a high nitric oxide concentration will cause the crime rate to increase (as the nearest neighbor model also predicted). This estimate probably results from having few high nitric oxide districts with a similar propensity score, which means that the model assumed that districts that did have a similar propensity score were affected by the nitric oxide itself rather than by other covariates.

3.5

Rubin-Rosenbaum Model: Balancing on propensity scores

Our third type of model, an implementation of the algorithm described in Rubin and Rosenbaum [1], also uses both propensity scores and matching. However, rather than simply considering all covariates equally as the above two models do, we attempt to model the propensity scores by selecting only certain covariates to be included in our model. Additionally, we may also include second-order terms (squares of covariates and/or products between two different covariates). Including each of these in our model is an attempt to produce a dataset in which data points within the treated and untreated groups have similar propensity score distributions.
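As an illustration of what the second-order terms look like, the sketch below builds the squares and pairwise products for a single data point. The function name and the naming scheme for the new terms are hypothetical and are not taken from CEModels.

```python
from itertools import combinations


def second_order_terms(row, names):
    """Return squares and pairwise products of one data point's covariates.

    row:   covariate values for one data point
    names: covariate names, in the same order as `row`
    """
    terms = {}
    # Squares of each covariate.
    for name, value in zip(names, row):
        terms[f"{name}^2"] = value * value
    # Products between every pair of two different covariates.
    for (name_a, value_a), (name_b, value_b) in combinations(zip(names, row), 2):
        terms[f"{name_a}*{name_b}"] = value_a * value_b
    return terms
```

For p covariates this produces p squares and p(p−1)/2 products, so the candidate set grows quickly, which is one reason to add such terms selectively rather than all at once.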

The procedure is as follows. Suppose we have a dataset with a designated outcome, treatment, and possible covariates. The model is built in four steps:

1. An initial step-wise discriminant analysis, which performs forward selection of covariates to include in the model from among the possible covariates

2. Additional step-wise discriminant analysis to add cross-products or interactions of covariates selected in the previous step

3. Estimate the propensity score using maximum likelihood logistic regression (Rubin and Rosenbaum used the SAS model, but we use the sklearn LogisticRegression module.)

4. Sub-classify on the quantiles of the propensity score distribution of the population (Rubin and Rosenbaum used quantiles with n=5, or quintiles.)

We then evaluate the balance that this first model achieves among the subpopulations by comparing the F-ratios (see explanation below) of the main effect of the treatment for all the covariates.
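The sub-classification in step 4 can be sketched as follows. This is an illustrative sketch, not the CEModels code: it assumes the propensity scores have already been estimated (for example, by a fitted logistic regression), it assigns strata by rank so that each stratum holds roughly the same number of points, and all function names are hypothetical. Because assignment is by rank, tied scores may land in adjacent strata.

```python
def subclassify_by_quantiles(scores, n_strata=5):
    """Assign each propensity score to a stratum index in 0..n_strata-1.

    Points are ranked by score, and the ranks are cut into n_strata
    groups of (nearly) equal size; n_strata=5 gives quintiles.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    strata = [0] * len(scores)
    for rank, index in enumerate(order):
        strata[index] = rank * n_strata // len(scores)
    return strata


def stratum_counts(strata, treated, n_strata=5):
    """Count [untreated, treated] data points in each stratum."""
    counts = [[0, 0] for _ in range(n_strata)]
    for stratum, is_treated in zip(strata, treated):
        counts[stratum][1 if is_treated else 0] += 1
    return counts
```

Inspecting the per-stratum counts is a quick first check: a stratum with no treated or no untreated points cannot contribute a within-stratum comparison.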

Then we create subsequent models by balancing the propensity score distribution. We do this by adding previously excluded covariates with large F-ratios to the new model, or, if such a covariate is already in the model, by adding the square of the term and/or its products with other covariates.[1]

3.5.1

Estimating Treatment Effects with the Rubin-Rosenbaum Model

Because the Rubin-Rosenbaum model is a propensity score model, the process for estimating the ATE and ITE is identical to the one described for the Simple Propensity Model in the section above. As detailed in Chapter 4, there is a PropensityModel class, of which both SimplePropensityModel and RubinModel are subclasses.

3.5.2

F-ratios

This subsection will give a further explanation of the statistical ideas behind how the Rubin-Rosenbaum model works.

In refinement of subsequent models after the initial forward selection, we use a statistical measure known as the "F-ratio," which is a measure of similarity between two distributions. A simple definition is that it is the ratio of the "variance between groups over the variance within groups."[42] Conceptually, this means that the variance calculated over all the groups (relative to the overall mean) is compared to the variance calculated within each group. If an F-ratio is close to 1, this means that the two distributions are similar, with a great deal of overlap.[42] If an F-ratio is much greater than 1, this means that the two distributions overlap very little, as the between-group variance (calculated relative to the data set’s overall mean) is large relative to the within-group variance.
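As a concrete illustration of the ratio described above, the following sketch computes a one-way F-ratio for a single covariate split into groups (for example, treated and untreated). It uses the standard mean-square definitions, with k − 1 and n − k degrees of freedom; the function name is hypothetical and the sketch is not taken from CEModels.

```python
def f_ratio(groups):
    """One-way F-ratio: between-group variance over within-group variance.

    groups: list of lists, each holding one group's values of a covariate.
    Assumes at least two groups and some variation within the groups.
    """
    k = len(groups)
    n = sum(len(group) for group in groups)
    grand_mean = sum(sum(group) for group in groups) / n
    means = [sum(group) / len(group) for group in groups]
    # Variation of the group means around the overall mean.
    ss_between = sum(
        len(group) * (mean - grand_mean) ** 2
        for group, mean in zip(groups, means)
    )
    # Variation of each value around its own group's mean.
    ss_within = sum(
        (value - mean) ** 2
        for group, mean in zip(groups, means)
        for value in group
    )
    return (ss_between / (k - 1)) / (ss_within / (n - k))
```

For the heavily overlapping groups [1, 2, 3] and [2, 3, 4] the ratio is 1.5, while for the well-separated groups [1, 2, 3] and [11, 12, 13] it is 150, matching the interpretation given above.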

First, we will explain how to calculate the one-way analysis of variance (ANOVA), which is equivalent to the initial F-ratio for each covariate that is calculated before creating the Rubin-Rosenbaum model. Then we will explain the similar, yet slightly more complex, method for calculating the two-way ANOVA, which is the statistic the Rubin-Rosenbaum model uses in the refinement process.