Context-based Visual Feedback Recognition

by

Louis-Philippe Morency

Submitted to the Department of Electrical Engineering and Computer Science

in partial fulfillment of the requirements for the degree of

Doctor of Philosophy in Computer Science and Engineering

at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

October 2006

© Massachusetts Institute of Technology 2006. All rights reserved.

Author

Department of Electrical Engineering and Computer Science

October 30th, 2006

Certified by

Trevor Darrell

Associate Professor

Thesis Supervisor

Accepted by

Arthur C. Smith

Chairman, Department Committee on Graduate Students


Context-based Visual Feedback Recognition

by

Louis-Philippe Morency

Submitted to the Department of Electrical Engineering and Computer Science on October 30th, 2006, in partial fulfillment of the requirements for the degree of

Doctor of Philosophy in Computer Science and Engineering

Abstract

During face-to-face conversation, people use visual feedback (e.g., head and eye gestures) to communicate relevant information and to synchronize rhythm between participants. When recognizing visual feedback, people often rely on more than their visual perception. For instance, knowledge about the current topic and from previous utterances helps guide the recognition of nonverbal cues. The goal of this thesis is to augment computer interfaces with the ability to perceive visual feedback gestures and to enable the exploitation of contextual information from the current interaction state to improve visual feedback recognition.

We introduce the concept of visual feedback anticipation, where contextual knowledge from an interactive system (e.g., the last spoken utterance from the robot or system events from the GUI) is analyzed online to anticipate visual feedback from a human participant and improve visual feedback recognition. Our multi-modal framework for context-based visual feedback recognition was successfully tested on conversational and non-embodied interfaces for head and eye gesture recognition.

We also introduce the Frame-based Hidden-state Conditional Random Field (FHCRF) model, a new discriminative model for visual gesture recognition which can model the sub-structure of a gesture sequence, learn the dynamics between gesture labels, and be directly applied to label unsegmented sequences. The FHCRF model outperforms previous approaches (i.e. HMM, SVM and CRF) for visual gesture recognition and can efficiently learn relevant contextual information necessary for visual feedback anticipation.

A real-time visual feedback recognition library for interactive interfaces (called Watson) was developed to recognize head gaze, head gestures, and eye gaze using the images from a monocular or stereo camera and the context information from the interactive system. Watson was downloaded by more than 70 researchers around the world and was successfully used by MERL, USC, NTT, MIT Media Lab and many other research groups.

Thesis Supervisor: Trevor Darrell

Title: Associate Professor

Acknowledgments

First and foremost, I would like to thank my research advisor, Trevor Darrell, who with his infinite wisdom has always been there to guide me through my research. You instilled in me the motivation to accomplish great research and I thank you for that. I would like to thank the members of my thesis committee, Candy Sidner, Michael Collins and Stephanie Seneff, for their constructive comments and helpful discussions. As I learned over the years, great research often starts with great collaboration. One of my most successful collaborations was with two of the greatest researchers: Candy Sidner and Christopher Lee. I have learned so much while collaborating with you and I am looking forward to continuing our collaboration after my PhD.

Over the past 6 years at MIT I have worked in three different offices with more than 12 different officemates and I would like to thank all of them for countless interesting conversations. I would like to especially thank my two long-time "officemates" and friends: C. Mario Christoudias and Neal Checka. You have always been there for me and I appreciate it greatly. I'm really glad to be your friend. I would like to thank my accomplice Ali Rahimi. I will remember the many nights we spent together writing papers and listening to Joe Dassin and Goldorak.

I would like to thank Victor Dura Vila, who was my roommate during my first three years at MIT and became one of my best friends and confidants. Thank you for your support and great advice. I will always remember that one night in Tang Hall where we used your white board to solve this multiple-choice problem.

My last three years in Boston would not have been the same without my current roommate Rodney Daughtrey. I want to thank you for all the late night discussions after Tuesday night hockey and your constant support during the past three years. Dude!

I would like to thank Marc Parizeau, my undergraduate teacher from Laval University, Quebec City, who first introduced me to research and shared with me his passion for computer vision.

programming and computer science in general. I will always remember all the summer days spent at Orleans Island in your company. Thank you for sharing your wisdom and always pushing me to reach my dreams.

Thank you, dear Mom, for everything you have done for me. For more than 20 years (and still today), you have given me your love and your wisdom. You taught me to share and to respect others. I can never say it often enough: thank you, Mom. I love you.

Thank you, dear Dad, for encouraging me to always go further. It is thanks to you that I applied to MIT. During my last year in Quebec, I discovered what the bond between father and son means. Thank you for everything you have done for me. I love you, Dad.

Contents

1 Introduction

1.1 Challenges

1.2 Contributions

1.2.1 Visual Feedback Anticipation

1.2.2 FHCRF: Visual Gesture Recognition

1.2.3 User Studies with Interactive Interfaces

1.2.4 AVAM: Robust Online Head Gaze Tracking

1.2.5 Watson: Real-time Library

1.3 Road map

2 Visual Feedback for Interactive Interfaces

2.1 Related Work

2.1.1 MACK: Face-to-face grounding

2.1.2 Mel: Human-Robot Engagement

2.2 Virtual Embodied Interfaces

2.2.1 Look-to-Talk

2.2.2 Conversational Tooltips

2.2.3 Gaze Aversion

2.3 Physical Embodied Interfaces

2.3.1 Mel: Effects of Head Nod Recognition

2.4 Non-embodied Interfaces

2.4.1 Gesture-based Interactions

3 Visual Feedback Recognition

3.1 Head Gaze Tracking

3.1.1 Related Work

3.1.2 Adaptive View-Based Appearance Model

3.1.3 Tracking and View-based Model Adjustments

3.1.4 Acquisition of View-Based Model

3.1.5 Pair-wise View Registration

3.1.6 Experiments

3.2 Eye Gaze Estimation

3.2.1 Eye Detection

3.2.2 Eye Tracking

3.2.3 Gaze Estimation

3.2.4 Experiments

3.3 Visual Gesture Recognition

3.3.1 Related Work

3.3.2 Frame-based Hidden-state Conditional Random Fields

3.3.3 Experiments

3.3.4 Results and Discussion

3.4 Summary

4 Visual Feedback Anticipation: The Role of Context

4.1 Context in Conversational Interaction

4.2 Context in Window System Interaction

4.3 Context-based Gesture Recognition

4.4 Contextual Features

4.4.1 Conversational Interfaces

4.4.2 Window-based Interface

4.5 Experiments

4.5.1 Datasets

4.5.2 Methodology

4.5.3 Results and Discussion

4.6 Summary

5 Conclusion

5.1 Future Work

List of Figures

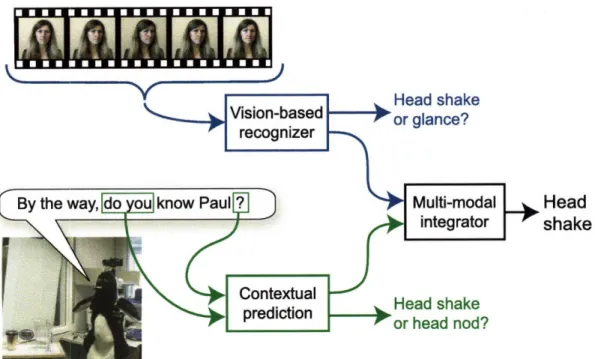

1-1 Two examples of modern interactive systems. MACK (left) was designed to study face-to-face grounding [69]. Mel (right), an interactive robot, can present the iGlassware demo (table and copper cup on its right) or talk about its own dialogue and sensorimotor abilities.

1-2 Contextual recognition of head gestures during face-to-face interaction with a conversational robot. In this scenario, contextual information from the robot's spoken utterance helps to disambiguate the listener's visual gesture.

2-1 MACK was designed to study face-to-face grounding [69]. Directions are given by the avatar using a common map placed on the table which is highlighted using an over-head projector. The head pose tracker is used to determine if the subject is looking at the common map. . . . 34 2-2 Mel, an interactive robot, can present the iGlassware demo (table and

copper cup on its right) or talk about its own dialogue and sensorimotor abilities. ... 35 2-3 Look-To-Talk in non-listening (left) and listening (right) mode... .. 37 2-4 Multimodal kiosk built to experiment with Conversational tooltip. A

stereo camera is mounted on top of the avatar to track the head position and recognize head gestures. When the subject looks at a picture, the avatar offers to give more information about the picture. The subject can accept, decline or ignore the offer for extra information. ... 40

2-5 Comparison of a typical gaze aversion gesture (top) with a "deictic" eye movement (bottom). Each eye gesture is indistinguishable from a single image (see left images). However, the eye motion patterns of each gesture are clearly different (see right plots). . ... . . 44 2-6 Multimodal interactive kiosk used during our user study. ... 45 2-7 Overall Nod Rates by Feedback Group. Subjects nodded significantly

more in the MelNodsBack feedback group than in the NoMelNods group. The mean Overall Nod Rates are depicted in this figure with the wide lines .. . . . . 50 2-8 Nod with Speech Rates by Feedback Group. Again, subjects nodded

with speech significantly more frequently in the MelNodsBack feedback group than in the NoMelNods group. ... 51 2-9 Nod Only Rates by Feedback Group. There were no significant

differ-ences among the three feedback groups in terms of Nod Only Rates. . 52 2-10 Experimental setup. A stereo camera is placed on top of the screen to

track the head position and orientation. . ... . . 55 2-11 Preferred choices for input technique during third phase of the

exper-iment ... .. 58 2-12 Survey results for dialog box task. All 19 participants graded the

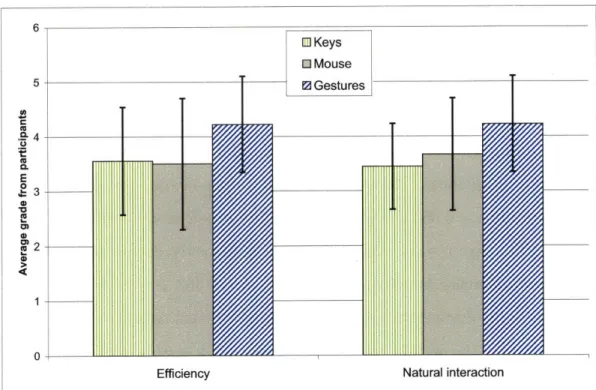

naturalness and efficiency of interaction on a scale of 1 to 5, 5 meaning best. ... .. 60 2-13 Survey results for document browsing task. All 19 participants graded

the naturalness and efficiency of interaction on a scale of 1 to 5, 5 meaning best ... ... 61

3-1 The view-based model represents the subject under varying poses. It also implicitly captures non-Lambertian reflectance as a function of pose. Observe the reflection in the glasses and the lighting difference when facing up and down.

3-2 Comparison of face tracking results using a 6 DOF registration algorithm. Rows represent results at 31.4s, 52.2s, 65s, 72.6s, 80s, 88.4s, 113s and 127s. The thickness of the box around the face is inversely proportional to the uncertainty in the pose estimate (the determinant of xt). The number of indicator squares below the box indicates the number of base frames used during tracking.

3-3 Head pose estimation using an adaptive view-based appearance model.

3-4 Comparison of the head pose estimation from our adaptive view-based approach with the measurements from the Inertia Cube2 sensor.

3-5 6-DOF puppet tracking using the adaptive view-based appearance model.

3-6 Example image resolution used by our eye gaze estimator. The size of the eye samples is 16x32 pixels.

3-7 Experimental setup used to acquire eye images from 16 subjects with ground truth eye gaze. This dataset was used to train our eye detector and our gaze estimator.

3-8 Samples from the dataset used to train the eye detector and gaze estimator. The dataset had 3 different lighting conditions.

3-9 Average error of our eye gaze estimator when varying the noise added to the initial region of interest.

3-10 Eye gaze estimation for a sample image sequence from our user study. The cartoon eyes depict the estimated eye gaze. The cube represents the head pose computed by the head tracker.

3-11 Comparison of our model (frame-based HCRF) with two previously published models (CRF [56] and sequence-based HCRF [38, 112]). In these graphical models, x_j represents the jth observation (corresponding to the jth frame of the video sequence), h_j is a hidden state assigned to x_j, and y_j the class label of x_j (i.e. head-nod or other-gesture). Gray circles are observed variables. The frame-based HCRF model combines the strengths of CRFs and sequence-based HCRFs in that it captures both extrinsic dynamics and intrinsic structure and can be naturally applied to predict labels over unsegmented sequences. Note that only the link with the current observation x_j is shown, but for all three models, long range dependencies are possible.

3-12 This figure shows the MAP assignment of hidden states given by the frame-based HCRF model for a sample sequence from the synthetic data set. As we can see, the model has used the hidden states to learn the internal sub-structure of the gesture class.

3-13 ROC curves for the MelHead dataset. Each graph represents a different window size: (a) W=0, (b) W=2, (c) W=5 and (d) W=10. The last graph (e) shows the ROC curves when FFT is applied on a window size of 32 (W=16). In bold is the largest EER value.

3-14 Accuracy at equal error rates as a function of the window size, for dataset MelHead.

3-15 ROC curves for the WidgetsHead dataset. Each graph represents a different window size: (a) W=0, (b) W=2, (c) W=5 and (d) W=10.

3-16 Accuracy at equal error rates as a function of the window size, for dataset WidgetsHead.

3-17 ROC curves for the AvatarEye dataset. The ROC curves are shown for a window size of 0 (a), 2 (b) and 5 (c).

3-18 Accuracy at equal error rates as a function of the window size, for

4-1 Contextual recognition of head gestures during face-to-face interaction with a conversational robot. In this scenario, contextual information from the robot's spoken utterance helps disambiguate the listener's visual gesture.

4-2 Simplified architecture for embodied conversational agent. Our method integrates contextual information from the dialogue manager inside the visual analysis module.

4-3 Framework for context-based gesture recognition. The contextual predictor translates contextual features into a likelihood measure, similar to the visual recognizer output. The multi-modal integrator fuses these visual and contextual likelihood measures. The system manager is a generalization of the dialogue manager (conversational interactions) and the window manager (window system interactions).

4-4 Prediction of head nods and head shakes based on 3 contextual features: (1) distance to end-of-utterance when ECA is speaking, (2) type of utterance and (3) lexical word pair feature. We can see that the contextual predictor learned that head nods should happen near or at the end of an utterance or during a pause while head shakes are most likely at the end of a question.

4-5 Context-based head nod recognition results for a sample dialogue. The last graph displays the ground truth. We can observe at around 101 seconds (circled and crossed in the top graph) that the contextual information attenuates the effect of the false positive detection from the visual recognizer.

4-6 Dataset MelHead. Head nod recognition curves when varying the detection threshold.

4-7 Dataset MelHead. Head shake recognition curves when varying the

4-8 Dataset WidgetsHead. Average ROC curves for head nods recognition. For a fixed false positive rate of 0.058 (operational point), the context-based approach improves head nod recognition from 85.3% (vision only) to 91.0%.

4-9 Dataset AvatarEye. Average ROC curves for gaze aversion recognition. For a fixed false positive rate of 0.1, the context-based approach improves head nod recognition from 75.4% (vision only) to 84.7%.

List of Tables

2.1 How to activate and deactivate the speech interface using three modes: push-to-talk (PTT), look-to-talk (LTT), and talk-to-talk (TTT).

2.2 Breakdown of participants in groups and demos.

3.1 RMS error for each sequence. Pitch, yaw and roll represent rotation around X, Y and Z axis, respectively.

3.2 Accuracy at equal error rate for different window sizes on the MelHead dataset. The best model is FHCRF with window size of 0. Based on paired t-tests between FHCRF and each other model, p-values that are statistically significant are printed in italic.

3.3 Accuracy at equal error rate for different window sizes on the WidgetsHead dataset. The best model is FHCRF with window size of 2. Based on paired t-tests between FHCRF and each other model, p-values that are statistically significant are printed in italic.

3.4 Accuracy at equal error rates for different window sizes on the AvatarEye dataset. The best model is FHCRF with window size of 0. Based on paired t-tests between FHCRF and each other model, p-values that are statistically significant are printed in italic.

4.1 True detection rates for a fixed false positive rate of 0.1.

4.2 Dataset AvatarEye. Three analysis measurements to compare the performance of the multi-modal integrator with the vision-based recognizer.

Chapter 1

Introduction

In recent years, research in computer science has brought about new technologies to produce multimedia animations and to sense multiple input modalities, from recognizing spoken words to detecting faces in images. These technologies open the way to new types of human-computer interfaces, enabling modern interactive systems to communicate more naturally with human participants. Figure 1-1 shows two examples of modern interactive interfaces: Mel, a robotic penguin which hosts a participant during a demo of a new product [84] and MACK, an on-screen character which offers directions to visitors of a new building [69]. By incorporating human-like display capabilities into these interactive systems, we can make them more efficient and attractive, but doing so increases the expectations from human participants who interact with these systems. A key component missing from most of these modern systems is the recognition of visual feedback.

During face-to-face conversation, people use visual feedback to communicate relevant information and to synchronize rhythm between participants. When people interact naturally with each other, it is common to see indications of acknowledgment, agreement, or disinterest given with a simple head gesture. For example, when finished with a turn and willing to give up the floor, users tend to look at their conversational partner. Conversely, when they wish to hold the floor, even as they pause their speech, they often look away. This often appears as a "non-deictic" or gaze-averting eye gesture while they pause their speech to momentarily consider a response [35, 86].

Figure 1-1: Two examples of modern interactive systems. MACK (left) was designed to study face-to-face grounding [69]. Mel (right), an interactive robot, can present the iGlassware demo (table and copper cup on its right) or talk about its own dialogue and sensorimotor abilities.

Recognizing visual feedback such as this from the user can dramatically improve the interaction with the system. When a conversational system is speaking, head nods can be detected and used by the system to know that the listener is engaged in the conversation. This may be a more natural interface compared to the original version where the robot had to ask a question to get the same feedback. By recognizing eye gaze aversion gestures, the system can understand delays resulting from the user thinking about their answer when asked a question. It is disruptive for a user when a system fails to realize that the user is still thinking about a response and interrupts prematurely, or when it waits inappropriately, expecting the user to keep speaking after the user has in fact finished their turn. The main focus of this thesis is visual feedback recognition for interactive systems.

1.1 Challenges

Recognition of visual feedback for interactive systems brings many challenges:

Ambiguous Gestures. Different types of visual feedback can often look alike. For example, a person's head motion during a quick glance to the left looks similar to a short head shake. A person's head motion when looking at their keyboard before typing looks similar to a head nod. We need a recognition framework which is able to differentiate these ambiguous gestures.

Subtle Motion. Natural gestures are subtle and the range of motion involved is often very small. For example, when someone nods to ground a sentence or part of a sentence (that is, to acknowledge it), the head motion is usually very small and often consists of a single nod.

Natural Visual Feedback. Which visual gestures should we be looking for? A human participant may potentially offer a large range of visual feedback while interacting with the system. Which visual feedback, if correctly recognized by the interactive system, will improve the system performance, and which visual gestures are naturally performed by the user?

User Independent and Automatic Initialization. Recognition of the visual feedback from a new user should start automatically even though this new user never interacted with the system before and was not in the system's training data. Also, our algorithms should be able to handle different facial appearances, including people with glasses and beards, and different ways to gesture (short head nod versus slower head nod).

Diversity of System Architectures. Each interactive system has its own architecture with a different representation of internal knowledge and its own protocol for exchange of information. An approach for visual feedback recognition should be easy to integrate with pre-existing systems.

1.2 Contributions

The contributions of this thesis intersect the fields of human-computer interaction (HCI), computer vision, machine learning and multi-modal interfaces.

Visual Feedback Anticipation is a new concept to improve visual feedback recognition where contextual knowledge from the interactive system is analyzed online to anticipate visual feedback from the human participant. Our multi-modal framework for context-based visual recognition was successfully tested on conversational and non-embodied interfaces for head gesture and eye gesture recognition and showed a statistically significant improvement in recognition performance.

Frame-based Hidden-state Conditional Random Fields is a new discriminative model for visual gesture recognition which can model the sub-structure of a gesture sequence, can learn the dynamics between gesture labels and be directly applied to label unsegmented sequences. Our FHCRF model outperforms previous approaches (i.e. HMM and CRF) for visual gesture recognition and can efficiently learn relevant contextual information necessary for visual feedback anticipation.

Visual Feedback User Study. In the context of our user studies with virtual and physically embodied agents, we investigate four kinds of visual feedback that are naturally performed by human participants when interacting with interactive interfaces and which, if automatically recognized, can improve the user experience: head gaze, head gestures (head nods and head shakes), eye gaze and eye gestures (gaze aversions).

Adaptive View-based Appearance Model is a new user-independent approach for head pose estimation which merges differential tracking with view-based tracking. AVAMs can track large head motion for long periods of time with bounded drift. We experimentally observed an RMS error within the accuracy limit of an attached inertial sensor.

Watson is a real-time visual feedback recognition library for interactive interfaces that can recognize head gaze, head gestures, eye gaze and eye gestures using the images of a monocular or stereo camera. Watson has been downloaded by more than 70 researchers around the world and was successfully used by MERL, USC, NTT, MIT Media Lab and many other research groups.

The following five subsections discuss each contribution in more detail.

1.2.1 Visual Feedback Anticipation

To recognize visual feedback efficiently, humans often use contextual knowledge from previous and current events to anticipate when feedback is most likely to occur. For example, at the end of a sentence during an explanation, the speaker will often look at the listener and anticipate some visual feedback like a head nod gesture from the listener. Even if the listener does not perform a perfect head nod, the speaker will most likely accept the gesture as a head nod and continue with the explanation. Similarly, if a speaker refers to an object during his/her explanation and looks at the listener, even a short glance will be accepted as a glance-to-the-object gesture (see Figure 1-2).

In this thesis we introduce the concept of visual feedback anticipation for human-computer interfaces and present a context-based recognition framework that analyzes contextual knowledge from the interactive system online to anticipate visual feedback from the human participant. As shown in Figure 1-2, the contextual information from the interactive system (i.e. the robot) is analyzed to predict visual feedback and then combined with the results of the vision-only recognizer to make the final decision. This new approach makes it possible for interactive systems to simulate the natural anticipation that humans use when recognizing feedback.

Figure 1-2: Contextual recognition of head gestures during face-to-face interaction with a conversational robot. In this scenario, contextual information from the robot's spoken utterance helps to disambiguate the listener's visual gesture.

Our experimental results show that incorporating contextual information inside the recognition process improves performance. For example, ambiguous gestures like a head glance and head shake can be differentiated using the contextual information (see Figure 1-2). With a conversational robot or virtual agent, lexical, prosodic, timing, and gesture features are used to predict a user's visual feedback during conversational dialogue. In non-conversational interfaces, context features based on user-interface system events (e.g., mouse motion or a key pressed on the keyboard) can improve detection of head gestures for dialog box confirmation or document browsing.
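As an illustration only, the idea of combining a contextual prediction with a vision-only score can be sketched as a late fusion of two probabilities. The log-linear rule, the function name `fuse_likelihoods` and the parameter `context_weight` below are assumptions made for this sketch; they are not the integrator used in this thesis.

```python
import math


def fuse_likelihoods(vision: float, context: float, context_weight: float = 0.5) -> float:
    """Fuse a vision-only score and a contextual score for one gesture.

    Hypothetical late-fusion rule (an assumption, not the thesis's integrator):
    a weighted sum of log-odds mapped back to a probability.
    """
    eps = 1e-6  # keep the log-odds finite for scores of exactly 0 or 1
    v = min(max(vision, eps), 1.0 - eps)
    c = min(max(context, eps), 1.0 - eps)
    log_odds = (1.0 - context_weight) * math.log(v / (1.0 - v)) \
        + context_weight * math.log(c / (1.0 - c))
    return 1.0 / (1.0 + math.exp(-log_odds))


if __name__ == "__main__":
    ambiguous_vision_score = 0.55  # the visual recognizer alone is unsure
    # Context says feedback is unlikely (e.g. the robot is mid-sentence).
    print(fuse_likelihoods(ambiguous_vision_score, context=0.1))
    # Context says feedback is likely (e.g. the robot just asked a question).
    print(fuse_likelihoods(ambiguous_vision_score, context=0.9))
```

With the same ambiguous visual score, the fused value falls when the context makes feedback unlikely and rises when the context makes it likely, which is the behaviour the anticipation concept relies on.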

1.2.2 FHCRF: Visual Gesture Recognition

Visual feedback tends to have a distinctive internal sub-structure as well as regular dynamics between individual gestures. When we say that a gesture has internal sub-structure we mean that it is composed of a set of basic motions that are combined in an orderly fashion. For example, consider a head-nod gesture which consists of moving the head up, moving the head down and moving the head back to its starting position. Further, the transitions between gestures are not uniformly likely: it is clear that a head-nod to head-shake transition is less likely than a transition between a head-nod and another gesture (unless we have a very indecisive participant).

In this thesis we introduce a new visual gesture recognition algorithm, which can capture both sub-gesture patterns and dynamics between gestures. Our Frame-based Hidden-state Conditional Random Field (FHCRF) model is a discriminative, latent variable, on-line approach for gesture recognition. Instead of trying to model each gesture independently as previous approaches would (e.g., Hidden Markov Models), our FHCRF model focuses on what best differentiates all the visual gestures. As we show in our results, our approach can more accurately recognize subtle gestures such as head nods or eye gaze aversion.

Our approach also offers several advantages over previous discriminative models: in contrast to Conditional Random Fields (CRFs) [56] it incorporates hidden state variables which model the sub-structure of a gesture sequence, and in contrast to previous hidden-state conditional models [79] it can learn the dynamics between gesture labels and be directly applied to label unsegmented sequences. We show that, by assuming a deterministic relationship between class labels and hidden states, our model can be efficiently trained.
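One possible reading of a deterministic relationship between class labels and hidden states is that each label owns its own disjoint set of hidden states, so that a per-frame label score is the sum of the marginals of the hidden states belonging to that label. The sketch below illustrates only this reading. The state partition, the names `HIDDEN_STATES_OF_LABEL`, `label_marginals` and `label_sequence`, and the marginal values are hypothetical, and the inference step that would produce the hidden-state marginals is assumed to have run already.

```python
from typing import Dict, List

# Hypothetical partition: each class label owns a disjoint set of hidden states.
HIDDEN_STATES_OF_LABEL: Dict[str, List[int]] = {
    "head-nod": [0, 1, 2],     # e.g. head up, head down, back to start
    "other-gesture": [3, 4],
}


def label_marginals(hidden_marginals: List[float]) -> Dict[str, float]:
    """Sum the per-frame hidden-state marginals over each label's own states."""
    return {
        label: sum(hidden_marginals[h] for h in states)
        for label, states in HIDDEN_STATES_OF_LABEL.items()
    }


def label_sequence(frames: List[List[float]]) -> List[str]:
    """Label every frame of an unsegmented sequence with its most probable class."""
    labels = []
    for hidden_marginals in frames:
        scores = label_marginals(hidden_marginals)
        labels.append(max(scores, key=scores.get))
    return labels


if __name__ == "__main__":
    # Two frames of made-up hidden-state marginals (each row sums to 1).
    frames = [
        [0.5, 0.2, 0.1, 0.1, 0.1],
        [0.1, 0.1, 0.1, 0.4, 0.3],
    ]
    print(label_sequence(frames))
```

Because every frame receives its own label, a sketch of this kind applies directly to unsegmented sequences, and the hidden states inside one label are free to represent sub-gesture phases.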

1.2.3 User Studies with Interactive Interfaces

While a large range of visual feedback is performed by humans when interacting face-to-face with each other, it is still an open question as to what visual feedback is performed naturally by human participants when interacting with an interactive system. Another important question is which visual feedback has the potential to improve current interactive systems when recognized.

With this in mind, we performed a total of six user studies with three different types of interactive systems: virtual embodied interfaces, physical embodied interfaces and non-embodied interfaces. Virtual embodied interfaces, also referred to as Embodied Conversational Agents (ECA) [16] or Intelligent Virtual Agents (IVA) [3], are autonomous, graphically embodied agents in an interactive, 2D or 3D virtual environment which are able to interact intelligently with the environment, other embodied agents, and especially with human users. Physical embodied interfaces, or robots, are physically embodied agents able to interact with human users in the real world. Non-embodied interfaces are interactive systems without any type of embodied character, which include traditional graphical user interfaces (GUIs) and information kiosks (without an on-screen character).

In our user studies, we investigate four kinds of visual feedback that have the potential to improve the experience of human participants when interacting one-on-one with an interactive interface: head gaze, head gestures, eye gaze and eye gestures. Head gaze can be used to determine to whom the human participant is speaking and can complement eye gaze information when estimating the user's focus of attention. Head gestures such as head nods and head shakes offer key conversational cues for information grounding and agreement/disagreement communication. Eye gaze is an important cue during turn-taking and can help to determine the focus of attention. Recognizing eye gestures like eye gaze aversions can help the interactive system to know when a user is thinking about his/her answer versus waiting for more information.

1.2.4 AVAM: Robust Online Head Gaze Tracking

One of our goals is to develop a user-independent head gaze tracker that can be initialized automatically, can track over a long period of time and is robust to different environments (lighting, moving background, etc.). Since head motion is used as an input by the FHCRF model for head gesture recognition, our head pose tracker has to be sensitive enough to distinguish natural and subtle gestures.

In this thesis, we introduce a new model for online head gaze tracking: Adaptive View-based Appearance Model (AVAM). When the head pose trajectory crosses itself, our tracker has bounded drift and can track an object undergoing large motion for long periods of time. Our tracker registers each incoming frame against the views of the appearance model using a two-frame registration algorithm. Using a linear Gaussian filter, we simultaneously estimate the pose of the head and adjust the view-based model as pose-changes are recovered from the registration algorithm. The adaptive view-based model is populated online with views of the head as it undergoes different orientations in pose space, allowing us to capture non-Lambertian effects. We tested our approach on a real-time rigid object tracking task and observed an RMS error within the accuracy limit of an attached inertial sensor.

1.2.5

Watson: Real-time Library

We developed a real-time library for context-based recognition of head gaze, head gestures, eye gaze and eye gestures based on the algorithms described in this thesis. This library offers a simplified interface for other researchers who want to include visual feedback recognition in their interactive system. This visual module was successfully used in many research projects including:

1. Leonardo, a robot developed at the Media lab, can quickly learn new skills and tasks from natural human instruction and a few demonstrations [102];

2. Mel, a robotic penguin developed at MERL, hosting a participant during a demo of a new product [89, 92];

3. MACK was originally developed to give direction around the Media Lab and now its cousin NU-MACK can help with direction around the Northwestern University campus [69];

4. Rapport was a user study performed at the USC Institute for Creative Technologies to improve the engagement between a human speaker and virtual human listener [36].

1.3

Road map

In chapter 2, we present our user studies with virtual embodied agents, robots and non-embodied interfaces designed to observe natural and useful visual feedback. We also present some related user studies where part of our approach for visual feedback recognition was used.

In chapter 3, we first present our novel model, AVAM, for online head gaze estimation with a comparative evaluation with an inertial sensor. We then present our approach for eye gaze estimation based on view-based eigenspaces. We conclude chapter 3 with our new algorithm, FHCRF, for gesture recognition, and show its performance on vision-based head gesture and eye gesture recognition.

In chapter 4, we present our new concept for context-based visual feedback recognition and describe our multi-modal framework for analyzing online contextual information and anticipating head gestures and eye gestures. We present experimental results with conversational interfaces and non-embodied interfaces.

In chapter 5, we summarize our contributions and discuss future work, and in Appendix A, we provide the user guide of Watson, our context-based visual feedback recognition library.

Chapter 2

Visual Feedback for Interactive Interfaces

During face-to-face conversation, people use visual feedback to communicate relevant information and to synchronize communicative rhythm between participants. While a large literature exists in psychology describing and analyzing visual feedback during human-to-human interactions, there are still a lot of unanswered questions about natural visual feedback for interactive interfaces.

We are interested in natural visual feedback, meaning visual feedback that human participants perform automatically (without being instructed to perform these gestures) when interacting with an interactive system. We also want to find out what kind of visual feedback, if recognized properly by the system, would make the interactive interface more useful and efficient.

In this chapter, we present five user studies designed to (1) analyze the visual feedback naturally performed by human participants when interacting with an interactive system and (2) find out how recognizing this visual feedback can improve the performance of interactive interfaces. While visual feedback can be expressed by almost any part of the human body, this thesis focuses on facial feedback, since our main interest is face-to-face interaction with embodied and non-embodied interfaces. In the context of our user studies, we discuss forms of visual feedback (head gaze, eye gaze, head gestures and eye gestures) that were naturally performed by our participants. We then show how interactive interfaces can be improved by recognizing and utilizing these visual gestures.

Since interactive systems come in many different forms, we designed our user studies to explore two different axes: embodiment and conversational capabilities. For embodiment, we group the interactive interfaces into three categories: virtual embodied interfaces, physical embodied interfaces and non-embodied interfaces. Virtual embodied interfaces are autonomous, graphically embodied agents in an interactive, 2D or 3D virtual environment. Physical embodied interfaces are best represented as robots or robotic characters that interact with a human user in the real world. Non-embodied interfaces are interactive systems without any type of embodied character such as a conventional operating system or an information kiosk.

The conversational capabilities of interactive systems can range from simple trigger-based interfaces to elaborate conversational interfaces. Trigger-based interfaces can be seen as simple responsive interfaces where the system performs a pre-determined set of actions when triggered by the input modality (or modalities). Conversational interfaces usually incorporate a dialogue manager that will decide the next set of actions based on the current inputs, a history of previous actions performed by the participants (including the interface itself) and the goals of the participants. Some interfaces, like the conversational tooltips described later in this chapter, are intermediate between a trigger-based approach and a conversational interface, since they use a trigger to start the interaction (i.e. you looking at a picture) and then start a short conversation if you accept the help from the agent.

The following section reviews work related to the use of visual feedback with interactive interfaces. We focus on two interactive systems that are particularly relevant to our research: MACK, an interactive on-screen character and Mel, an interactive robot. In Section 2.2, we present three user studies that we performed with virtual embodied agents: Look-to-talk, Conversational Tooltips and Gaze Aversion. In Section 2.3, we present a collaborative user study made with researchers at MERL about head nodding for interaction with a robot. In Section 2.4, we present a user study with two non-embodied gesture-based interfaces: dialog box answering/acknowledgement and document browsing. Finally, in Section 2.5, we summarize the results of the user studies and present four kinds of natural visual feedback useful for interactive interfaces.

2.1

Related Work

Several systems have exploited head-pose cues or eye gaze cues in interactive and conversational systems. Stiefelhagen has developed several successful systems for tracking face pose in meeting rooms and has shown that face pose is very useful for predicting turn-taking [98]. Takemae et al. also examined face pose in conversation and showed that, if tracked accurately, face pose is useful for creating a video summary of a meeting [101]. Siracusa et al. developed a kiosk front end that uses head pose tracking to interpret who was talking to whom in a conversational setting [93]. The position and orientation of the head can be used to estimate head gaze, which is a good estimate of a person's attention. When compared with eye gaze, head gaze can be more accurate when dealing with low resolution images and can be estimated over a larger range than eye gaze [65].

Several authors have proposed face tracking for pointer or scrolling control and have reported successful user studies [105, 52]. In contrast to eye gaze [118], users seem to be able to maintain fine motor control of head gaze at or below the level needed to make fine pointing gestures. However, many systems required users to manually initialize or reset tracking. These systems supported a direct manipulation style of interaction and did not recognize distinct gestures.

A considerable body of work has been carried out regarding eye gaze and eye motion patterns for human-computer interaction. Velichkovsky suggested the use of eye motion to replace the mouse as a pointing device [108]. Qvarfordt and Zhai used eye-gaze patterns to sense user interest with a map-based interactive system [80]. Li and Selker developed the InVision system which responded to a user's eye fixation patterns in a kitchen environment [60].

In a study of eye gaze patterns in multi-party (more than two people) conversations, Vertegaal et al. [109] showed that people are much more likely to look at the people they are talking to than any other people in the room. Also, in another study, Maglio et al. [61] found that users in a room with multiple devices almost always look at the devices before talking to them. Stiefelhagen et al. [99] showed that the focus of attention can be predicted from the head position 74% of the time during a meeting scenario.

Breazeal's work [14] on infantoid robots explored how the robot gazed at a person and responded to the person's gaze and prosodic contours in what might be called pre-conversational interactions. Davis and Vaks modeled head nods and head shakes using a timed finite state machine and suggested an application with an on-screen embodied agent [28].

There has been substantial research on hand/body gestures for human-computer interaction. Lenman et al. explored the use of pie and marking menus in hand gesture-based interaction [59]. Cohen et al. studied the issues involved in controlling computer applications via hand gestures composed of both static and dynamic symbols [24].

There has been considerable work on gestures with virtual embodied interfaces, also known as embodied conversational agents (ECAs). Bickmore and Cassell developed an ECA that exhibited many gestural capabilities to accompany its spoken conversation and could interpret spoken utterances from human users [8]. Sidner et al. have investigated how people interact with a humanoid robot [91]. They found that more than half their participants naturally nodded at the robot's conversational contributions even though the robot could not interpret head nods. Nakano et al. analyzed eye gaze and head nods in computer-human conversation and found that their subjects were aware of the lack of conversational feedback from the ECA [69]. They incorporated their results in an ECA that updated its dialogue state. Numerous other ECAs (e.g. [106, 15]) are exploring aspects of gestural behavior in human-ECA interactions. Physically embodied ECAs, for example ARMAR II [31, 32] and Leo [13], have also begun to incorporate the ability to perform articulated body tracking and recognize human gestures.

2.1.1

MACK: Face-to-face grounding

MACK (Media lab Autonomous Conversational Kiosk) is an embodied conversational agent (ECA) that relies on both verbal and nonverbal signals to establish common ground in computer-human interactions [69]. Using a map placed in front of the kiosk and an overhead projector, MACK can give directions to different research projects of the MIT Media Lab. Figure 2-1 shows a user interacting with MACK.

The MACK system tokenizes input signals into utterance units (UU) [78] corresponding to single intonational phrases. After each UU, the dialogue manager decides the next action based on the log of verbal and nonverbal events. The dialogue manager's main challenge is to determine if the agent's last UU is grounded (the information was understood by the listener) or is still ungrounded (a sign of miscommunication).

As described in [69], a grounding model has been developed based on the verbal and nonverbal signals happening during human-human interactions. The two main nonverbal patterns observed in the grounding model are gaze and head nods. Nonverbal patterns are used by MACK to decide whether to proceed to the next UU or elaborate on the current one. Positive evidence of grounding is recognized by MACK if the user looks at the map or nods his or her head. In this case, the agent goes ahead with the next UU 70% of the time. Negative evidence of grounding is recognized if the user looks continuously at the agent. In this case, MACK will elaborate on the current UU 73% of the time. These percentages are based on the analysis of human-human interactions. In the final version of MACK, Watson, our real-time visual feedback recognition library, was used to estimate the gaze of the user and detect head nods.
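The decision rule described above can be sketched as a small stochastic policy. This is an illustrative sketch only, not MACK's actual implementation: the names `classify_evidence` and `next_action` and the evidence labels are hypothetical, and the choice of the complementary action in the remaining 30% and 27% of cases is an assumption, since the text does not say what the agent does in those cases.

```python
import random

# Probabilities quoted in the text (derived from human-human interaction analysis).
P_PROCEED_GIVEN_POSITIVE = 0.70
P_ELABORATE_GIVEN_NEGATIVE = 0.73


def classify_evidence(looks_at_map: bool, nods: bool, looks_at_agent: bool) -> str:
    """Map observed nonverbal cues to a grounding-evidence label (hypothetical labels)."""
    if looks_at_map or nods:
        return "positive"
    if looks_at_agent:
        return "negative"
    return "none"


def next_action(evidence: str, rng: random.Random) -> str:
    """Choose between proceeding to the next UU and elaborating on the current one.

    Assumption: when the quoted action is not selected, the complementary
    action is taken. The text does not specify this.
    """
    draw = rng.random()
    if evidence == "positive":
        return "proceed" if draw < P_PROCEED_GIVEN_POSITIVE else "elaborate"
    if evidence == "negative":
        return "elaborate" if draw < P_ELABORATE_GIVEN_NEGATIVE else "proceed"
    raise ValueError("no policy is described for evidence label: " + evidence)


if __name__ == "__main__":
    rng = random.Random(0)
    label = classify_evidence(looks_at_map=False, nods=True, looks_at_agent=False)
    print(label, next_action(label, rng))
```

Passing the random generator explicitly keeps the policy reproducible in tests; a deployed dialogue manager would combine this decision with the verbal events in its log.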

Figure 2-1: MACK was designed to study face-to-face grounding [69]. Directions are given by the avatar using a common map placed on the table which is highlighted using an overhead projector. The head pose tracker is used to determine if the subject is looking at the common map.

2.1.2

Mel: Human-Robot Engagement

Mel is a robot developed at Mitsubishi Electric Research Labs (MERL) that mimics human conversational gaze behavior in collaborative conversation [89]. One important goal of this project is to study engagement during conversation. The robot performs a demonstration of an invention created at MERL in collaboration with the user (see Figure 2-2).

Mel's conversation model, based on COLLAGEN [84], determines the next move on the agenda using a predefined set of engagement rules, originally based on human-human interaction [90]. The conversation model also assesses engagement information about the human conversational partner from a Sensor Fusion Module, which keeps track of verbal (speech recognition) and nonverbal cues (multiview face detection [110]). A recent experiment using the Mel system suggested that users respond to changes in head direction and gaze by changing their own gaze or head direction [89]. Another interesting observation is that people tend to nod their heads at the robot during explanation. These kinds of positive responses from the listener could be used to improve the engagement between a human and robot.

Figure 2-2: Mel, an interactive robot, can present the iGlassware demo (table and copper cup on its right) or talk about its own dialogue and sensorimotor abilities.

2.2

Virtual Embodied Interfaces

In this section, we explore how visual feedback can be used when interacting with an on-screen character. For this purpose we present three user studies that range from a trigger-based interface to simple conversational interfaces. The "look-to-talk" experiment, described in Section 2.2.1, explores the use of head gaze as a trigger for activating an automatic speech recognizer inside a meeting environment. The "conversational tooltips" experiment, described in Section 2.2.2, explores an intermediate interface between trigger-based and conversational using head gaze and head gestures. The "gaze aversion" experiment, described in Section 2.2.3, looks at recognition of eye gestures for a scripted conversational interface.

2.2.1

Look-to-Talk

The goal of this user study was to observe how people prefer to interact with a simple on-screen agent when meeting in an intelligent environment where the virtual agent can be addressed at any point in the meeting to help the participants.¹ In this setting where multiple users interact with one another and, possibly, with a multitude of virtual agents, knowing who is speaking to whom is an important and difficult question that cannot always be answered with speech alone. Gaze tracking has been identified as an effective cue to help disambiguate the addressee of a spoken utterance [99].

To test our hypothesis, we implemented look-to-talk (LTT), a gaze-driven interface, and talk-to-talk (TTT), a spoken keyword-driven interface. We have also implemented push-to-talk (PTT), where the user pushes a button to activate the speech recognizer. We present and discuss a user evaluation of our prototype system as well as a Wizard-of-Oz setup.

Experimental Study

We set up the experiment to simulate a collaboration activity among two subjects and a software agent in the Intelligent Room [23] (from here on referred to as the I-Room). The first subject (subject A) sits facing the front wall displays, and a second "helper" subject (subject B) sits across from subject A. The task is displayed on the wall facing subject A. The camera is on the table in front of subject A, and Sam, an animated character representing the software agent, is displayed on the side wall (see Figure 2-3). Subject A wears a wireless microphone and communicates with Sam via IBM ViaVoice. Subject B discusses the task with subject A and acts as a collaborator. The I-Room does not detect subject B's words or head pose.

Sam is the I-Room's "emotive" user interface agent. Sam consists of simple shapes forming a face, which animate to continually reflect the I-Room's state (see Figure 2-3). During this experiment, Sam reads quiz questions through a text-to-speech synthesizer and was constrained to two facial expressions: non-listening and listening.

Figure 2-3: Look-To-Talk in non-listening (left) and listening (right) mode.

To compare the usability of look-to-talk with the other modes, we ran two experiments in the I-Room. We ran the first experiment with a real vision- and speech-based system, and the second experiment with a Wizard-of-Oz setup where gaze tracking and automatic speech recognition were simulated by an experimenter behind the scenes. Each subject was asked to use all three modes to activate the speech recognizer and then to evaluate each mode.

¹The results in Section 2.2.1 were obtained in collaboration with Alice Oh, Harold Fox, Max Van Kleek, Aaron Adler and Krzysztof Gajos. This work was originally published at CHI 2002 [72].

For a natural look-to-talk interface, we needed a fast and reliable computer vision system to accurately track the user's gaze. This need has kept gaze-based interfaces from being widely implemented in intelligent environments. However, fast and reliable gaze trackers using state-of-the-art vision technologies are now becoming available and are being used to estimate the focus of attention. In our prototype system, we estimate gaze with a 3-D gradient-based head-pose tracker [83] (an early version of our head gaze tracker described in Section 3.1) that uses shape and intensity of the moving object. The tracker provides a good non-intrusive approximation of the user's gaze.

There were 13 subjects, 6 for the first experiment and 7 for the Wizard-of-Oz experiment. All of them were students in computer science, some of whom had prior experience with talk-to-talk in the I-Room. After the experiment, the subjects rated each of the three modes on a scale of one to five on three dimensions: ease of use, naturalness, and future use. We also asked the subjects to tell us which mode they liked best and why.

| Mode | Activate | Feedback | Deactivate | Feedback |
|------|----------|----------|------------|----------|
| PTT | Switch the microphone to "on" | Physical status of the switch | Switch the microphone to "mute" | Physical status of the switch |
| LTT | Turn head toward Sam | Sam shows listening expression | Turn head away from Sam | Sam shows normal expression |
| TTT | Say computer | Special beep | Automatic (after 5 sec) | None |

Table 2.1: How to activate and deactivate the speech interface using three modes: push-to-talk (PTT), look-to-talk (LTT), and talk-to-talk (TTT).

mode of interaction in counterbalanced order. In the Wizard-of-Oz experiment, we ran a fourth set in which all three modes were available, and the subjects were told to use any one of them for each question. Table 2.1 illustrates how users activate and deactivate the speech recognizer using the three modes and what feedback the system provides for each mode.
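The activation rules summarized in Table 2.1 can be sketched as a small gate that decides when the speech recognizer is listening. This is a hedged illustration rather than the I-Room code: the class `SpeechGate`, its `update` method and the boolean inputs are hypothetical names, and the head-pose test and keyword spotting are assumed to be computed elsewhere.

```python
from dataclasses import dataclass
from typing import Optional

TTT_TIMEOUT_S = 5.0  # "Automatic (after 5 sec)" deactivation from Table 2.1


@dataclass
class SpeechGate:
    """Tracks whether the speech recognizer should be listening (hypothetical API)."""

    mode: str  # "PTT", "LTT" or "TTT"
    listening: bool = False
    _activated_at: Optional[float] = None

    def update(self, now: float, *, switch_on: bool = False,
               facing_agent: bool = False, heard_keyword: bool = False) -> bool:
        if self.mode == "PTT":
            # Push-to-talk: listening mirrors the physical microphone switch.
            self.listening = switch_on
        elif self.mode == "LTT":
            # Look-to-talk: listening while the head is turned toward the agent.
            self.listening = facing_agent
        elif self.mode == "TTT":
            # Talk-to-talk: the keyword activates; a timeout deactivates.
            if heard_keyword:
                self.listening = True
                self._activated_at = now
            elif self.listening and now - self._activated_at >= TTT_TIMEOUT_S:
                self.listening = False
        else:
            raise ValueError("unknown mode: " + self.mode)
        return self.listening


if __name__ == "__main__":
    gate = SpeechGate("LTT")
    print(gate.update(0.0, facing_agent=True))   # user turns toward the agent
    print(gate.update(1.0, facing_agent=False))  # user turns away
```

Keeping the three modes behind one interface mirrors the fourth experimental set, in which subjects were free to use any mode for each question.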

Results and Discussion

As we first reported in [72], for the first experiment, there was no significant difference (using analysis of variance at α = 0.05) between the three modes for any of the surveyed dimensions. However, most users preferred talk-to-talk to the other two. They reported that talk-to-talk seemed more accurate than look-to-talk and more convenient than push-to-talk.

For the Wizard-of-Oz experiment, there was a significant difference in the naturalness rating between push-to-talk and the other two (p=0.01). This suggests that, with better perception technologies, both look-to-talk and talk-to-talk will be better choices for natural human-computer interaction. Between look-to-talk and talk-to-talk, there was no significant difference on any of the dimensions. However, five out of the seven subjects reported that they liked talk-to-talk best compared to two subjects who preferred look-to-talk. One reason for preferring talk-to-talk to look-to-talk was that there seemed to be a shorter latency in talk-to-talk than look-to-talk. Also, a few subjects remarked that Sam seemed disconnected from the task, and thus it felt awkward to look at Sam.

Despite the subjects' survey answers, for the fourth set, 19 out of 30 questions were answered using look-to-talk, compared with 9 using talk-to-talk (we have this data for five out of the seven subjects; the other two chose a mode before beginning the fourth set to use for the entire set, picking look-to-talk and talk-to-talk, respectively). When asked why he chose to use look-to-talk even though he liked talk-to-talk better, one subject answered "I just turned my head to answer and noticed that the Room was already in listening mode." This confirms the findings in [61] that users naturally look at agents before talking to them.

This user study about the look-to-talk concept shows that gaze is an important cue in multi-party conversation since human participants naturally turn their head toward the person, avatar or device they want to talk to. For an interactive system, recognizing this kind of behavior can help determine whether the speaker is addressing the system.

2.2.2

Conversational Tooltips

A tooltip is a graphical user interface element that is used in conjunction with a mouse cursor. As a user hovers the cursor over an item without clicking it, a small box appears with a short description or name of the item being hovered over. In current user interfaces, tooltips are used to give some extra information to the user without interrupting the application. In this case, the cursor position typically represents an approximation of the user's attention.

Visual tooltips are an extension of the concept of mouse-based tooltips where the user's attention is estimated from the head-gaze estimate. There are many applications for visual tooltips. Most museum exhibitions now have an audio guide to help visitors understand the different parts of the exhibition. These audio guides use proxy sensors to determine the location of the visitor or need input on a keypad to start the prerecorded information. Visual tooltips are a more intuitive alternative.

We define visual tooltips as a three-step process: deictic gesture, tooltip, and answer. During the first step, the system analyzes the user's gaze to determine if a specific object or region is under observation. Then the system informs the user about this object or region and offers to give more information. During the final step, if the user answers positively, the system gives more information about the object.

Figure 2-4: Multimodal kiosk built to experiment with conversational tooltips. A stereo camera is mounted on top of the avatar to track the head position and recognize head gestures. When the subject looks at a picture, the avatar offers to give more information about the picture. The subject can accept, decline or ignore the offer for extra information.

To work properly, the system that offers visual tooltips needs to know where the user's attention is and whether the user wants more information. A natural way to estimate the user's focus is to look at the user's head orientation. If a user is interested in a specific object, he or she will usually move his or her head in the direction of that object [98]. Another interesting observation is that people often nod or shake their head when answering a question. To test this hypothesis, we designed a multimodal experiment that accepts speech as well as vision input from the user. The following section describes the experimental setup and our analysis of the results.

Experimental Study

We designed this experiment with three tasks in mind: exploring the idea of visual tooltips, observing the relationship between head gestures and speech, and testing our head-tracking system. We built a multimodal kiosk that could provide information about some graduate students in our research group (see Figure 2-4). The kiosk consisted of a Tablet PC surrounded by pictures of the group members. A stereo camera [30] and a microphone array were attached to the Tablet PC.

The central software part of our kiosk consists of a simple event-based dialogue manager. The dialogue manager receives input from the Watson tracking library (described in Appendix A) and the speech recognition tools [71]. Based on these inputs, the dialogue manager decides the next action to perform and produces output via the text-to-speech routines [2] and the avatar [41].

When the user approaches the kiosk, the head tracker starts sending pose information and head nod detection results to the dialogue manager. The avatar then recites a short greeting message that informs the user of the pictures surrounding the kiosk and asks the user to say a name or look at a specific picture for more information. After the welcome message, the kiosk switches to listening mode (the passive interface) and waits for one of two events: the user saying the name of one of the members or the user looking at one of the pictures for more than n milliseconds. When the vocal command is used, the kiosk automatically gives more information about the targeted member. If the user looks at a picture, the kiosk provides a short description and offers to give more information. In this case, the user can answer using voice (yes, no) or a gesture (head nods and head shakes). If the answer is positive, the kiosk describes the picture, otherwise the kiosk returns to listening mode.
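The gaze trigger in listening mode (looking at one picture for more than n milliseconds) can be sketched as a dwell-time detector. This is a minimal illustration under stated assumptions, not the kiosk's dialogue manager: the class `DwellTrigger`, the picture identifiers and the 500 ms value in the usage example are hypothetical, since the text leaves n unspecified, and the mapping from head pose to a picture is assumed to be done upstream.

```python
from typing import Optional


class DwellTrigger:
    """Fires once when the gaze target stays the same for more than dwell_ms."""

    def __init__(self, dwell_ms: float) -> None:
        self.dwell_ms = dwell_ms          # the threshold "n" from the text
        self._target: Optional[str] = None
        self._since_ms: float = 0.0
        self._fired = False

    def update(self, t_ms: float, target: Optional[str]) -> Optional[str]:
        """Feed one gaze sample; return the picture id when a tooltip should be offered."""
        if target != self._target:
            # Gaze moved to a new picture (or away): restart the dwell timer.
            self._target, self._since_ms, self._fired = target, t_ms, False
            return None
        if (target is not None and not self._fired
                and t_ms - self._since_ms > self.dwell_ms):
            self._fired = True  # offer the tooltip only once per continuous look
            return target
        return None


if __name__ == "__main__":
    trigger = DwellTrigger(dwell_ms=500)  # placeholder value for n
    for t, looked_at in [(0, "picture_a"), (400, "picture_a"), (600, "picture_a")]:
        print(t, trigger.update(t, looked_at))
```

Firing only once per continuous look avoids repeating the same offer while the user keeps looking at the picture; whether the kiosk behaved this way is not stated in the text.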

For our user study, we asked 10 people (between 24 and 30 years old) to interact with the kiosk. Their goal was to collect information about each member. They were informed about both ways to interact: voice (name tags and yes/no) and gesture (head gaze and head nods). There were no constraints on the way the user should interact with the kiosk.

Results and Discussion

Ten people participated in our user study. The average duration of each interaction was approximately 3 minutes. At the end of each interaction, the participant was asked some subjective questions about the kiosk and the different types of interaction (voice and gesture).

A log of the events from each interaction allowed us to perform a quantitative evaluation of the type of interaction preferred. The avatar gave a total of 48 explanations during the 10 interactions. Of these 48 explanations, 16 were initiated with voice commands and 32 were initiated with conversational tooltips (the user looked at a picture). During the interactions, the avatar offered 61 tooltips, of which 32 were accepted, 6 refused and 23 ignored. Of the 32 accepted tooltips, 16 were accepted with a head nod and 16 with a verbal response. Our results suggest that head gesture and pose can be useful cues when interacting with a kiosk.
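As a quick check of the arithmetic, the counts reported above can be turned into proportions. The short script below only restates the numbers from the text; the variable names and the `shares` helper are illustrative.

```python
# Counts reported in the text for the 10 kiosk interactions.
explanations = {"voice command": 16, "conversational tooltip": 32}
tooltip_outcomes = {"accepted": 32, "refused": 6, "ignored": 23}
accept_modality = {"head nod": 16, "verbal response": 16}


def shares(counts: dict) -> dict:
    """Convert raw counts to fractions of their total."""
    total = sum(counts.values())
    return {key: value / total for key, value in counts.items()}


# Consistency checks against the totals quoted in the text.
assert sum(explanations.values()) == 48
assert sum(tooltip_outcomes.values()) == 61
assert sum(accept_modality.values()) == tooltip_outcomes["accepted"]

if __name__ == "__main__":
    for name, counts in [("explanations", explanations),
                         ("tooltip outcomes", tooltip_outcomes),
                         ("accepted tooltips", accept_modality)]:
        print(name, {k: f"{v:.1%}" for k, v in shares(counts).items()})
```

In other words, two thirds of the explanations (32 of 48) started from a tooltip, slightly more than half of the offered tooltips (32 of 61) were accepted, and accepted tooltips were split evenly between head nods and verbal responses.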

The comments recorded after each interaction show a general appreciation of the conversational tooltips. Eight of the ten participants said they preferred the tooltips to the voice commands. One of the participants who preferred the voice commands suggested an on-demand tooltip version where the user asks for more information and the head gaze is used to determine the current object observed. Two participants suggested that the kiosk should merge the information coming from the audio (the yes/no answer) with the video (the head nods and head shakes).

This user study about "conversational tooltips" shows how head gaze can be used to estimate the user's focus of attention and presents an application where a conversation is initiated by the avatar when it perceives that the user is focusing on a specific target and may want more information.

2.2.3

Gaze Aversion

Eye gaze plays an important role in face-to-face interactions. Kendon proposed that eye gaze in two-person conversation offers different functions: monitor visual feedback, express emotion and information, regulate the flow of the conversation (turn-taking), and improve concentration by restricting visual input [51]. Many of these functions have been studied for creating more realistic ECAs [107, 109, 34], but they have tended to explore only gaze directed towards individual conversational partners or objects.

contact, deictic gestures, and non-deictic gestures. Eye contact implies one participant looking at the other participant. During typical interactions, the listener usually maintains fairly long gazes at the speaker while the speaker tends to look at the listener as he or she is about to finish the utterance [51, 70]. Deictic gestures are eye gestures with a specific reference which can be a person not currently involved in the discussion, or an object. Griffin and Bock showed in their user studies that speakers look at an object approximately 900ms before referencing it vocally [37]. Non-deictic gestures are eye movements to empty or uninformative regions of space. This gesture is also referred to as a gaze-averting gesture [35] and the eye movement of a thinker [86]. Researchers have shown that people will make gaze-averting gestures to retrieve information from memory [85] or while listening to a story [95]. Gaze aversion during conversation has been shown to be a function of cognitive load [35].

These studies of human-to-human interaction give us insight regarding the kind of gestures that could be useful for ECAs. Humans do seem to make similar gestures when interacting with an animated agent. Colburn et al. looked at eye contact with ECAs and found a correlation between the time people spend looking at an avatar versus the time they spend looking at another human during conversation [25].

We have observed that eye motions that attend to a specific person or object tend to involve direct saccades, while gaze aversion gestures tend to include more of a "wandering" eye motion. Looking at still images may be inconclusive in terms of deciding whether it is a gaze aversion gesture or a deictic eye movement, while looking at the dynamics of motion tends to be more discriminative (Figure 2-5). We therefore investigate the use of eye motion trajectory features to estimate gaze aversion gestures.

To our knowledge, no work has been done to study gaze aversion by human participants when interacting with ECAs. Recognizing such eye gestures would be useful for an ECA. A gaze aversion gesture while a person is thinking may indicate the person is not finished with their conversational turn. If the ECA senses the aversion gesture, it can correctly wait for mutual gaze to be re-established before taking its turn.

![Figure 1-1: Two examples of modern interactive systems. MACK (left) was designed to study face-to-face grounding [69]](https://thumb-eu.123doks.com/thumbv2/123doknet/14469113.521972/20.918.137.782.116.361/figure-examples-modern-interactive-systems-mack-designed-grounding.webp)