Action recognition using bag of features extracted from a beam of trajectories


Related documents

Wang, “Hierarchical recurrent neural network for skeleton based action recognition,” in IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2015, pp. Schmid,

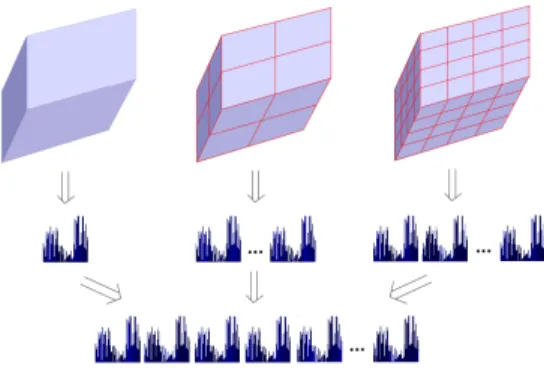

The encoding we propose follows a soft-assignment approach that takes into account the radius of the covering region corresponding to each visual word obtained during the generation of the
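A minimal sketch of radius-aware soft assignment, assuming a Gaussian kernel whose bandwidth is each visual word's covering radius. The snippet does not state the actual weighting function, so the kernel, the per-descriptor normalisation and the name `soft_assign_histogram` are all hypothetical.

```python
import math
from typing import List, Sequence


def soft_assign_histogram(
    descriptors: Sequence[Sequence[float]],
    codewords: Sequence[Sequence[float]],
    radii: Sequence[float],
) -> List[float]:
    """Soft bag-of-features histogram (hypothetical kernel choice).

    Each descriptor votes for every codeword with weight
    exp(-d^2 / (2 r^2)), where d is the Euclidean distance to the codeword
    and r is that codeword's covering radius. Votes are normalised per
    descriptor, and the final histogram is normalised to sum to 1.
    """
    hist = [0.0] * len(codewords)
    for x in descriptors:
        weights = []
        for c, r in zip(codewords, radii):
            d2 = sum((xi - ci) ** 2 for xi, ci in zip(x, c))
            weights.append(math.exp(-d2 / (2.0 * r * r)))
        total = sum(weights)
        if total == 0.0:
            continue  # descriptor far from every codeword: weights underflow, no vote
        for k, w in enumerate(weights):
            hist[k] += w / total
    n = sum(hist)
    return [h / n for h in hist] if n > 0 else hist
```

With equal radii, a descriptor midway between two words splits its vote evenly; enlarging one word's radius shifts the vote toward that word, which is the intuition behind taking the covering region into account.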


The Modelo de Saneamiento Básico Integral – SABA (Integrated Basic Sanitation Model) is a successful example of coordination between public and private actors for the sustainable management of water services

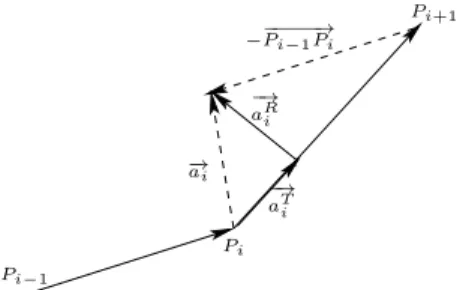

Our representation captures not only the global distribution of features but also the geometric and appearance (both visual and motion) relations among the features. Calculating
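To illustrate the difference between the global distribution of features and the relations among them, here is a hedged sketch that pairs a hard-assigned bag-of-features histogram with a co-occurrence matrix counting pairs of visual words whose features lie close together in (x, y, t). The distance threshold, the co-occurrence formulation and the function name `bof_with_pairwise` are assumptions; appearance relations are not modelled here.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, t) position of a local feature


def bof_with_pairwise(
    labels: Sequence[int],
    positions: Sequence[Point],
    vocab_size: int,
    max_dist: float,
) -> Tuple[List[int], List[List[int]]]:
    """Return (histogram, co-occurrence matrix); hypothetical formulation.

    histogram[k] counts features assigned to visual word k (global distribution).
    cooc[a][b] counts unordered feature pairs labelled a and b whose (x, y, t)
    positions are within max_dist of each other (a simple geometric relation).
    The matrix is symmetric; same-word pairs are counted once on the diagonal.
    """
    hist = [0] * vocab_size
    for k in labels:
        hist[k] += 1
    cooc = [[0] * vocab_size for _ in range(vocab_size)]
    n = len(labels)
    for i in range(n):
        for j in range(i + 1, n):
            if math.dist(positions[i], positions[j]) <= max_dist:
                a, b = labels[i], labels[j]
                cooc[a][b] += 1
                if a != b:
                    cooc[b][a] += 1
    return hist, cooc
```

Two videos with identical histograms can still differ in their co-occurrence matrices, which is the kind of extra discriminative information that relations among features are meant to add.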

For each of the aforementioned datasets, we report the recognition accuracy obtained with the proposed Localized Trajectories and compare it with that of the classical Dense Trajectories

Using two benchmark datasets for human action recognition, we demonstrate that our representation enhances the discriminative power of features and improves action

• Spatio-temporal SURF (ST-SURF): After video temporal segmentation and human action detection, we propose a new algorithm based on the extension of the SURF descriptors to the
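SURF evaluates its box filters in constant time using integral images. A natural spatio-temporal counterpart, and one common building block for extending SURF-style filters to video, is an integral video (a 3D summed-volume table). The sketch below is not the authors' ST-SURF algorithm. It only shows that building block; `integral_video`, `box_sum` and `temporal_haar` are hypothetical names.

```python
from typing import List

# Video volume indexed as vol[t][y][x] (grey-level values, for example).
Volume = List[List[List[float]]]


def integral_video(vol: Volume) -> Volume:
    """Summed-volume table with a zero border.

    iv[t + 1][y + 1][x + 1] holds the sum of vol[t'][y'][x'] for all
    t' <= t, y' <= y, x' <= x (3D inclusion-exclusion, one pass).
    """
    T, H, W = len(vol), len(vol[0]), len(vol[0][0])
    iv = [[[0.0] * (W + 1) for _ in range(H + 1)] for _ in range(T + 1)]
    for t in range(T):
        for y in range(H):
            for x in range(W):
                iv[t + 1][y + 1][x + 1] = (
                    vol[t][y][x]
                    + iv[t][y + 1][x + 1] + iv[t + 1][y][x + 1] + iv[t + 1][y + 1][x]
                    - iv[t][y][x + 1] - iv[t][y + 1][x] - iv[t + 1][y][x]
                    + iv[t][y][x]
                )
    return iv


def box_sum(iv: Volume, t0: int, t1: int, y0: int, y1: int, x0: int, x1: int) -> float:
    """Sum of vol over t0 <= t < t1, y0 <= y < y1, x0 <= x < x1 using 8 lookups."""
    return (
        iv[t1][y1][x1]
        - iv[t0][y1][x1] - iv[t1][y0][x1] - iv[t1][y1][x0]
        + iv[t0][y0][x1] + iv[t0][y1][x0] + iv[t1][y0][x0]
        - iv[t0][y0][x0]
    )


def temporal_haar(iv: Volume, t0: int, tm: int, t1: int,
                  y0: int, y1: int, x0: int, x1: int) -> float:
    """Haar-like temporal response: box over [tm, t1) minus box over [t0, tm)."""
    return box_sum(iv, tm, t1, y0, y1, x0, x1) - box_sum(iv, t0, tm, y0, y1, x0, x1)
```

Because every box sum costs the same eight lookups regardless of its size, filters can be evaluated at many spatial and temporal scales without rescaling the video, which is the property that makes box filters attractive in SURF.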