
Center for Information Systems Research
Massachusetts Institute of Technology
Alfred P. Sloan School of Management
50 Memorial Drive
Cambridge, Massachusetts 02139

DESIGN OF A GENERAL HIERARCHICAL STORAGE SYSTEM

Stuart E. Madnick

REPORT CISR-6
SLOAN WP-769-75
March 3, 1975


ABSTRACT

By extending the concepts used in contemporary two-level hierarchical storage systems, such as cache or paging systems, it is possible to develop an orderly strategy for the design of large-scale automated hierarchical storage systems. In this paper systems encompassing up to six levels of storage technology are studied. Specific techniques, such as page splitting, shadow storage, direct transfer, read through, store behind, and distributed control, are discussed and are shown to provide considerable advantages for increased system performance and reliability.


INTRODUCTION

The evolution of computer systems has been marked by a continually increasing demand for faster, larger, and more economical storage facilities. Clearly, if we had the technology to produce ultra-fast limitless-capacity storage devices for minuscule cost, the demand could be easily satisfied. Due to the current unavailability of such a wondrous device, the requirements of high performance yet low cost are best satisfied by a mixture of technologies combining expensive high-performance devices with inexpensive lower-performance devices. Such a strategy is often called a hierarchical storage system or multilevel storage system.

CONTEMPORARY HIERARCHICAL STORAGE SYSTEMS

Range of Storage Technologies

Table I indicates the range of performance and cost characteristics for typical current-day storage technologies divided into 6 cost-performance levels. Although this table is only a simplified summary, it does illustrate the fact that there exists a spectrum of devices that span over 6 orders of magnitude (100,000,000%) in both cost and performance.

Locality of Reference

If all references to online storage were equally likely, the use of hierarchical storage systems would be of marginal value at best. For example, consider a hierarchical system, M', consisting of two types of devices, M(1) and M(2), with access times A(1) = .05 x A(2) and costs C(1) = 20 x C(2), respectively. If the total storage capacity S' were equally divided between M(1) and M(2), i.e., S(1) = S(2), then we can determine an overall cost per byte, C', and effective access time, A':

$$C' = 0.5 \times C(1) + 0.5 \times C(2) = 10.5 \times C(2) = 0.525 \times C(1)$$

$$A' = 0.5 \times A(1) + 0.5 \times A(2) = 0.525 \times A(2) = 10.5 \times A(1)$$

From these figures, we see that the effective system, M', costs about half as much as M(1) but is more than 10 times as slow. On the other hand, M' is almost twice as fast as M(2), but it costs more than 10 times as much per byte.
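The arithmetic of this example can be checked with a short sketch. The function name and the normalization to C(2) = A(2) = 1 are assumptions made here for illustration; only the ratios C(1) = 20 x C(2) and A(1) = .05 x A(2) come from the text.

```python
def blended_hierarchy(c1, c2, a1, a2, share1=0.5):
    """Return (cost per byte, mean access time) for a two-device store.

    Assumes references are uniformly distributed, so the fraction of
    references served by M(1) equals M(1)'s share of the capacity.
    """
    share2 = 1.0 - share1
    cost = share1 * c1 + share2 * c2
    access = share1 * a1 + share2 * a2
    return cost, access


# Normalize to M(2): C(2) = 1 and A(2) = 1, so C(1) = 20 and A(1) = 0.05.
C1, C2, A1, A2 = 20.0, 1.0, 0.05, 1.0
cost, access = blended_hierarchy(C1, C2, A1, A2)

print(f"C' = {cost / C2:.3f} x C(2) = {cost / C1:.3f} x C(1)")
print(f"A' = {access / A2:.3f} x A(2) = {access / A1:.3f} x A(1)")
```

With an equal split and no locality, neither the cost nor the access time of the combined system approaches that of the better device, which is the point the example makes.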

Fortunately, most actual programs and applications cluster their references so that, during any interval of time, only a small subset of the information is actually used. This phenomenon is known as locality of reference. If we could keep the "current" information in a small amount of M(1), we could produce hierarchical storage systems with an effective access time close to M(1) but an overall cost per byte close to M(2).

The actual performance attainable by such hierarchical storage systems is addressed by the other papers in this session [12, 14, 20, 23] and the general literature [2, 3, 4, 6, 7, 8, 10, 11, 13, 15, 18, 19, 21, 22]. In many actual systems it has been possible to find over 90% of all references in M(1) even though M(1) was much smaller than M(2) [13, 15].
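To see what a 90% hit ratio implies, the earlier two-device example can be reworked with locality taken into account. This is a minimal sketch under a simplifying assumption that a miss costs exactly one M(2) access; the 1% capacity share for M(1) is a hypothetical figure, not one reported in the paper.

```python
def effective_access_time(hit_ratio, a1, a2):
    """Mean access time when a fraction hit_ratio of references is found in M(1).

    Simplifying assumption: a miss costs exactly one M(2) access.
    """
    return hit_ratio * a1 + (1.0 - hit_ratio) * a2


def cost_per_byte(fraction_in_m1, c1, c2):
    """Overall cost per byte when M(1) holds fraction_in_m1 of the capacity."""
    return fraction_in_m1 * c1 + (1.0 - fraction_in_m1) * c2


# Same ratios as the two-device example: A(1) = 0.05 x A(2), C(1) = 20 x C(2).
A1, A2, C1, C2 = 0.05, 1.0, 20.0, 1.0

access = effective_access_time(hit_ratio=0.90, a1=A1, a2=A2)  # 90% from the text
cost = cost_per_byte(fraction_in_m1=0.01, c1=C1, c2=C2)       # 1% is hypothetical

print(f"A' = {access:.3f} x A(2), C' = {cost:.2f} x C(2)")
```

Under these assumptions the access time drops to roughly one seventh of A(2) while the cost per byte stays within about 20% of C(2).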

Automated Hierarchical Storage Systems

There are at least three ways in which the locality of reference can be exploited in a hierarchical storage system: static, manual, or automatic.

If the pattern of reference is constant and predictable over a long period of time (e.g., days, weeks, or months), the information can be statically allocated to specific storage devices so that the most frequently referenced information is assigned to the highest performance devices.

If the pattern of reference is not constant but is predictable, the programmer can manually (i.e., by explicit instructions in the program) cause frequently referenced information to be moved onto high performance devices while being used. Such manual control places a heavy burden on the programmer to understand the detailed behavior of the application and to determine the optimum way to utilize the storage hierarchy. This can be very difficult if the application is complex (e.g., multiple access data base system) or is operating in a multiprogramming environment with other applications.

In an automated storage hierarchy, all aspects of the physical information organization and distribution and movement of information are removed from the programmer's responsibility. The algorithms that manage the storage hierarchy may be implemented in hardware, firmware, system software, or some combination.

Contemporary Automated Hierarchical Storage Systems

To date, most implementations of automated hierarchical storage systems have been large-scale cache systems or paging systems. Typically, cache systems, using a combination of level 1 and level 2 storage devices managed by specialized hardware, have been used in large-scale high-performance computer systems [4, 10, 23]. Paging systems have typically used storage devices managed mostly by system software with limited support from specialized hardware and/or firmware [11, 12].

Impact of New Technology

As a result of new storage technologies, especially for levels 3 and 6, as well as substantially reduced costs for implementing the necessary hardware control functions, many new types of hierarchical storage systems have evolved. Several of these advances are discussed in this session.

Bill Strecker [23] explains how the cache system concept can be extended for use in high-performance minicomputer systems.

Bernard Greenberg and Steven Webber [12] discuss the design and performance of a three-level paging system used in the Multics Operating System (levels 2, 4, and 5 in the earlier Honeywell 645 implementation; levels 2, 3, and 5 in the more recent Honeywell 68/80 implementation).

Suran Ohnigian [20] analyzes the handling of data base applications in a storage hierarchy.

Clay Johnson [14] explains how a new level 6 technology is being used in conjunction with level 5 devices to form a very large capacity hierarchical storage system, the IBM 3850 Mass Storage System.

The various automated hierarchical storage systems discussed in this session are depicted in Figure 1.

[Diagram: the six storage levels (Cache, Main, Block, Backing, Secondary, Mass) and the levels spanned by each system, including the Multics 645 and 68/80 configurations]

Figure 1  Various Automated Hierarchical Storage Systems

GENERAL HIERARCHICAL STORAGE SYSTEM

All of the automated hierarchical storage systems described thus far have only dealt with two or three levels of storage devices (the Honeywell 68/80 Multics system actually encompasses four levels since it contains a cache memory which is partially controlled by the system software). Furthermore, many of these systems were initially conceived and designed several years ago without the benefit of our current knowledge about memory and processor capabilities and costs. In this section the structure of a general automated hierarchical storage system is outlined (see Figure 2).

Continuous and Complete Hierarchy

An automated storage hierarchy must consist of a continuous and complete hierarchy that encompasses the full range of storage levels. Otherwise, the user would be forced to rely on manual or semi-automatic storage management techniques to deal with the storage levels that are not automatically managed.

Page Splitting and Shadow Storage

The average time, Tm, required to move a page, a unit of information, between two levels of the hierarchy consists of the sum of (1) the average access time, T, and (2) the transfer time, which is the product of the transfer rate, R, and the size of a page, N.

Figure 2  General Automated Hierarchical Storage System

Choice of Page Size. By examining the representative devices indicated in Table I, we see that access times vary much more than transfer rates. As a result, Tm is very sensitive to the transfer unit size, N, for devices with small values of T (e.g., levels 1 and 2). Thus, for these devices small page sizes such as N=16 or 32 bytes are typically used. Conversely, for devices with large values of T, the marginal cost of increasing the page size is quite small, especially in view of the benefits possible due to locality of reference. Thus, page sizes such as N=4096 bytes are typical for slower devices such as those at level 5. Much larger units of information transfer are used for level 6 devices.
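The sensitivity of Tm to page size can be illustrated with a small sketch of the relation Tm = T + R x N. The device figures below are hypothetical round numbers chosen only to contrast a small-T device with a large-T device; they are not taken from Table I. R is treated here as transfer time per byte so that the product R x N is a time.

```python
def move_time(access_time, time_per_byte, page_size):
    """Tm = T + R * N, with R expressed as transfer time per byte."""
    return access_time + time_per_byte * page_size


# Hypothetical devices (times in microseconds); these are not the Table I figures.
devices = {
    "fast (small T)": {"T": 1.0, "R": 0.1},
    "slow (large T)": {"T": 30_000.0, "R": 1.0},
}

growth = {}
for name, d in devices.items():
    small = move_time(d["T"], d["R"], 16)
    large = move_time(d["T"], d["R"], 4096)
    growth[name] = large / small
    print(f"{name}: Tm(16) = {small:.1f}, Tm(4096) = {large:.1f}, "
          f"ratio = {growth[name]:.2f}")
```

For the small-T device the move time grows by more than two orders of magnitude when the page grows from 16 to 4096 bytes, while for the large-T device it grows by under 20%, which is why large pages are cheap at the slower levels.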

Since themarginal increase in Ta decreases none—

tonically asa function of storage level, ther.-ier cf

bytestransferred between levels should ^-crease

correspondingly. Inorder to simplify the

implementa-tion ofthe systea and tobeconsistentwith the

normal mappingsfroraprocessor address references to

page addresses, itisdesirable thatall:a;esices be

apower cftwo. 7-.echoice foreach pagesize depends

upon the characteristics of theprogramstoberunand

the effectiveness of the overall stcrace system.

Pre-liminary measurements indicate thata ratio ofu.:1 cr

8:1between levels is reasonable. Meade:?has reported

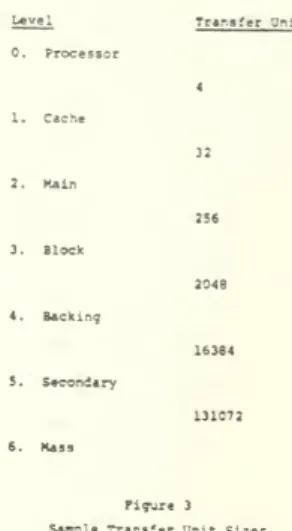

inilarfindings. Figure 3 indicates possible transfer

unit sizes between the6 levels presented inTable I.

The particular choice of size will, of course, depend upon the particulars of the devices.

Figure 3  Sample Transfer Unit Sizes
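The power-of-two constraint can be sketched as follows. The starting size of 16 bytes and the 4:1 ratio are illustrative assumptions, not the values of Figure 3, and the function names are hypothetical. With power-of-two sizes, the page containing an address is found by masking off low-order address bits, and pages at adjacent levels nest cleanly.

```python
def transfer_unit_sizes(smallest, ratio, interfaces):
    """Page sizes N(1,2), N(2,3), ... growing by a fixed power-of-two ratio."""
    for value in (smallest, ratio):
        if value <= 0 or value & (value - 1):
            raise ValueError("sizes and ratios must be powers of two")
    return [smallest * ratio ** k for k in range(interfaces)]


def page_base(address, page_size):
    """Start address of the page containing address (page_size a power of two)."""
    return address & ~(page_size - 1)


sizes = transfer_unit_sizes(smallest=16, ratio=4, interfaces=5)
print(sizes)

# Every smaller page lies entirely inside the next larger page.
address = 0x12345
bases = [page_base(address, n) for n in sizes]
for n, base in zip(sizes, bases):
    print(f"N = {n:5d}: page starts at {base:#x}")
```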

Page Splitting. Now let us consider the actual movement of information in the storage hierarchy. At time t the processor generates a reference to logical address a. Assume that the corresponding information is not currently stored in M(1) or M(2) but is found in M(3). For simplicity, assume that page sizes are doubled as we go down the hierarchy (i.e., N(2,3) = 2 x N(1,2), N(3,4) = 2 x N(2,3) = 4 x N(1,2), etc., see Figure 4). The page of size N(2,3) containing a is copied from M(3) to M(2). M(2) now contains the needed information, so we repeat the process. The page of size N(1,2) containing a is copied from M(2) to M(1). Now, finally, the page of size N(0,1) containing a is copied from M(1) and forwarded to the processor. In the process the page of information is split repeatedly as it moves up the hierarchy.

As a result of the splitting process, the page that is received by the processor leaves behind a "shadow" consisting of itself and its adjacent pages at each level. Because programs exhibit locality of reference, many of these pages will be referenced afterward and be moved farther up in the hierarchy also.

Shadow Storage. In the strategy presented, pages are actually copied as they move up the hierarchy; a page at level i has one copy of itself in each of the lower levels j > i. For most applications "read" requests are more common than "write" requests; thus, if a page has not been changed and is selected to be removed from one level to a lower level, it need not be actually transferred since a valid copy already exists in the lower level. The contents of any level of the hierarchy is always a subset of the information in the next lower level.

Figure 4  Page Splitting and Shadow Storage
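The splitting process can be traced with a minimal sketch. The page sizes (8, 16, and 32 bytes) and the address are hypothetical values chosen so that sizes double going down the hierarchy, as assumed in the text; level 0 stands for the processor.

```python
def page_containing(address, size):
    """Addresses of the size-aligned page that contains address."""
    base = address - address % size
    return range(base, base + size)


def split_upward(address, found_level, page_size):
    """Pages copied into each higher level when address is found at found_level.

    page_size[k] stands for N(k, k+1), the unit moved from level k+1 to level k.
    Returns {level: range of addresses copied into that level}.
    """
    return {
        level: page_containing(address, page_size[level])
        for level in range(found_level - 1, -1, -1)
    }


# Hypothetical sizes that double going down: N(0,1)=8, N(1,2)=16, N(2,3)=32.
page_size = {0: 8, 1: 16, 2: 32}
copies = split_upward(address=100, found_level=3, page_size=page_size)

for level in sorted(copies, reverse=True):
    page = copies[level]
    print(f"level {level}: addresses {page.start}..{page.stop - 1}")

# The "shadow" left in M(1): neighbors copied along but not sent to the processor.
shadow_in_m1 = sorted(set(copies[1]) - set(copies[0]))
print("shadow in M(1):", shadow_in_m1)
```

Each level ends up holding a page that contains the page held by the level above it, so an unchanged page can later be dropped from a level without being written back.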

Direct Transfer

In the descriptions above it is implied that information is directly transferred between adjacent levels. By comparison, most present multiple-level storage systems are based upon an indirect transfer (e.g., the Multics Page Multilevel system [12]). In an indirect transfer system, all information is routed through level 1 or level 2. For example, to move a page from level n-1 to level n, the page is moved from level n-1 to level 1 and then from level 1 to level n.

Clearly, an indirect approach is undesirable since it requires extra page movement and its associated overhead as well as consuming a portion of the limited M(1) capacity in the process. In the past such indirect schemes were necessary since all interconnections were radial from the main memory or processor to the storage devices. Thus, all transfers had to be routed through the central element. Furthermore, due to the differences in transfer rate between devices, especially electromechanical devices that must operate at a fixed transfer rate, direct transfer was not possible.

Based upon current technology, these problems can be solved. Many of the newer storage devices are non-electromechanical (i.e., strictly electrical). In these cases the transfer rates can be synchronized to allow direct transfer. For electromechanical devices, direct transfer is possible if transfer rates are similar or a small scratchpad buffer, such as low-cost semiconductor shift registers, is used to enable synchronization between the devices. Direct transfer is provided between the level 6 and level 5 storage devices in the IBM 3850 Mass Storage System [14].

Read Through

A possible implementation of the page splitting strategy could be accomplished by transferring information from level i to the processor (level 0) in a series of sequential steps: (1) transfer the page of size N(i-1,i) from level i to level i-1, and then (2) extract the appropriate page subset of size N(i-2,i-1) and transfer it from level i-1 to level i-2, etc. Under such a scheme, a transfer from level i to the processor would consist of a series of i steps. This would result in a considerable delay, especially for certain devices that require a large delay for reading recently written information (e.g., rotational delay to reposition electromechanical devices).

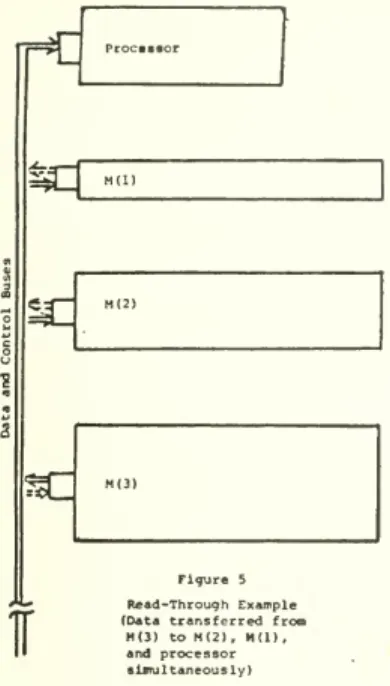

The inefficiencies of the sequential transfers can be avoided by having the information read through to all the upper levels simultaneously. For example, assume the processor references logical address a which is currently stored at level i (and all lower levels, of course). The controller for M(i) outputs the N(i-1,i) bytes that contain a onto the data buses along with their corresponding addresses. The controller for M(i-1) will accept all of these N(i-1,i) bytes and store them in M(i-1). At the same time, the controller for M(i-2) will extract the particular N(i-2,i-1) bytes that it wants and store them in M(i-2). In a like manner, all of the controllers can simultaneously extract the portion that is needed. Using this mechanism, the processor (level 0) extracts the N(0,1) bytes that it needs without any further delays; thus the information from level i is read through to the processor without any delays. This process is illustrated in Figure 5.
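The read through mechanism can be modeled as a single bus broadcast from which every higher level captures its own aligned subset. The sketch below is a software simulation under assumed page sizes and memory contents; the function name and data layout are hypothetical, and the loop only stands in for controllers that would act in parallel in hardware.

```python
def read_through(address, source_level, page_size, source_memory):
    """Simulate one broadcast from M(source_level) to every higher level.

    page_size[k] stands for N(k, k+1). The source controller places
    N(source_level-1, source_level) bytes on the bus once; each higher
    level keeps only the aligned subset it wants.
    """
    n = page_size[source_level - 1]
    base = address - address % n
    bus = [(addr, source_memory[addr]) for addr in range(base, base + n)]

    captured = {}
    for level in range(source_level - 1, -1, -1):  # conceptually in parallel
        size = page_size[level]
        low = address - address % size
        captured[level] = {a: v for a, v in bus if low <= a < low + size}
    return bus, captured


# Hypothetical sizes and contents for illustration only.
page_size = {0: 4, 1: 8, 2: 16}
m3 = {addr: addr * 10 for addr in range(64)}

bus, captured = read_through(address=21, source_level=3,
                             page_size=page_size, source_memory=m3)
for level in sorted(captured, reverse=True):
    print(f"level {level} keeps addresses {sorted(captured[level])}")
```

The data crosses the bus once regardless of how many levels sit between the source and the processor, in contrast to the i sequential steps of the scheme described earlier.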

The read through mechanism also offers some important reliability, availability, and serviceability advantages. Since all storage levels communicate anonymously over the data buses, if a storage level must be removed from the system, there are no changes needed. In this case, the information is "read through" this level as if it didn't exist. No changes are needed to any of the other storage levels or the storage management algorithms, although we would expect the performance to decrease as a result of the missing storage level. This reliability strategy is employed in most current-day cache memory systems.

Store Behind

Under steady-state operation, we would expect all the levels of the storage hierarchy to be full (with the exception of the lowest level, L). Thus, whenever a page is to be moved into a level, it is necessary to remove a current page. If the page selected for removal has not been changed by means of a processor write, the new page can be immediately stored into the level since a copy of the page to be removed already exists in the next lower level of the hierarchy. But, if the processor performs a write operation, all levels that contain a copy of the information being modified must be updated. This can be accomplished in at least three basic ways: (1) store through, (2) store replacement, or (3) store behind.

Figure 5  Read-Through Example (Data transferred from M(3) to M(2), M(1), and processor simultaneously)

Store Through. Under a store through strategy, all levels are updated simultaneously. This is the logical inverse of the read through strategy but it has a crucial distinction. The store through is limited by the speed of the lowest level, L, of the hierarchy, whereas the read through is limited by the speed of the highest level containing the desired information. Store through is efficient only if the access time of level L is comparable to the access time of level 1, such as in a two-level cache system (e.g., it is used in the IBM System/370 Models 158 and 168).

Store Replacement. Under a store replacement strategy, the processor only updates M(1) and marks the page as "changed." If such a changed page is later selected for removal from M(1), it is then moved to the next lower level, M(2), immediately prior to being replaced. This process occurs at every level and, eventually, level L will be updated but only after the page has been selected for removal from all the higher levels. Due to the extra delays caused by updating changed pages before replacement, the effective access time for reads is increased. Various versions of store replacement are used in most two-level paging systems since it offers substantially better performance than store through for slow storage devices (e.g., disks and drums).

Store Behind. In both strategies above, the storage system was required to perform the update operation at some specific time, either at the time of write or replacement. Once the information has been stored into M(1), the processor doesn't really care how or when the other copies are updated. Store behind takes advantage of this degree of freedom.

The maximum transfer capability between levels is rarely maintained since, at any instant of time, a storage level may not have any outstanding requests for service or it may be waiting for proper positioning to service a pending request. During these "idle" periods, data can be transferred down to the next lower level of the storage hierarchy without adding any significant delays to the read or store operations. Since these "idle" periods are usually quite frequent under normal operation, there can be a continual flow of changed information down through the storage hierarchy.
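The idea of deferring updates to idle periods can be sketched for a single level as follows. The class and method names are hypothetical, the next lower level is represented by a plain dictionary, and the choice to copy the least recently changed page first is an assumption of this sketch rather than something the paper specifies.

```python
from collections import OrderedDict


class StoreBehindLevel:
    """One storage level that defers copying changed pages to the next lower level."""

    def __init__(self, lower):
        self.pages = {}               # contents of this level
        self.pending = OrderedDict()  # changed pages not yet copied down
        self.lower = lower            # dict standing in for the next lower level

    def write(self, page, value):
        """Processor write: update this level now, remember the change for later."""
        self.pages[page] = value
        self.pending[page] = value
        self.pending.move_to_end(page)

    def idle_cycle(self):
        """Called when the level has no request to service; copy one change down."""
        if not self.pending:
            return None
        page, value = self.pending.popitem(last=False)  # least recently changed
        self.lower[page] = value
        return page

    def replaceable_now(self, page):
        """A page can be dropped at once if the lower level already holds it."""
        return page not in self.pending


m2 = {}
m1 = StoreBehindLevel(lower=m2)
m1.write("p1", "v1")
m1.write("p2", "v2")
m1.write("p1", "v1b")  # a second write to p1 replaces the pending value
```

Repeated writes to the same page are merged while they wait, so only the latest value is ever copied down, and a page whose change has already been copied can be replaced without delay.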

A variation of this store behind strategy is used in the Multics Page Multilevel system [12] whereby a certain number of pages at each level are kept available for immediate replacement. If the number of replaceable pages drops below the threshold, "changed" pages are selected to be updated to the next lower level. The actual writing of these pages to the lower level is scheduled to take place when there is no real request to be done.

The store behind strategy can also be used to provide high reliability in the storage system. Ordinarily, a changed page is not purged from a level until the next lower level acknowledges that the corresponding page has been updated. We can extend this approach to require two levels of acknowledgement. For example, a changed page is not removed from M(1) until the corresponding pages in both M(2) and M(3) have been updated. In this way, there will be at least two copies of each changed piece of information at levels M(i) and M(i+1) in the hierarchy. If any level malfunctions or must be serviced, it can be removed from the hierarchy without causing any information to be lost. There are two exceptions to this process, levels M(1) and M(L). To completely safeguard the reliability of the system, it may be necessary to store duplicate copies of information at these levels. Figure 6 illustrates this process.
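The two-level acknowledgement rule can be expressed as a small bookkeeping sketch. The class name, its methods, and the `required_acks` parameter are hypothetical; the sketch only tracks which lower levels have confirmed an update and does not model the data movement itself.

```python
class ChangedPageTracker:
    """Tracks which lower levels have acknowledged an update of each changed page."""

    def __init__(self, level, required_acks=2):
        self.level = level
        self.required_acks = required_acks
        self.acks = {}  # page -> set of acknowledging levels

    def mark_changed(self, page):
        self.acks[page] = set()  # a new change invalidates older acknowledgements

    def acknowledge(self, page, from_level):
        if page in self.acks and from_level > self.level:
            self.acks[page].add(from_level)

    def may_purge(self, page):
        """Unchanged pages may always go; changed ones need enough acknowledgements."""
        if page not in self.acks:
            return True
        return len(self.acks[page]) >= self.required_acks


m1 = ChangedPageTracker(level=1)
m1.mark_changed("p")
m1.acknowledge("p", from_level=2)
after_one = m1.may_purge("p")   # only M(2) has the update
m1.acknowledge("p", from_level=3)
after_two = m1.may_purge("p")   # both M(2) and M(3) have it
print(after_one, after_two)
```

Setting `required_acks=1` in this sketch gives the ordinary single-acknowledgement behavior described at the start of the paragraph.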

Distributed Control

There is a substantial amount of work required to manage the storage hierarchy. It is desirable to remove as much as possible of the storage management from the concern of the processor. In the hierarchical storage system described in this paper, all of the management algorithms can be operated based upon information that is local to a level or, at most, in conjunction with information from neighboring levels. Thus, it is possible to distribute control of the hierarchy into the levels. This also facilitates parallel and asynchronous operation in the hierarchy (e.g., the store behind algorithm).

The independent control facilities for each level can be accomplished by extending the functionality of conventional device controllers. Most current-day sophisticated device controllers are actually microprogrammed processors capable of performing the storage management function. The IBM

REFERENCES

1. Ahearn, G. R., Y. Dishon, and R. N. Snively, "Design Innovations of the IBM 3830 and 2835 Storage Control Units," IBM Journal of Research and Development, Vol. 16, No. 1, pp. 11-18, January 1972.

2. Anacker, W. and C. P. Wong, "Performance Evaluation of Computer Systems with Memory Hierarchies," IEEE-TC, Vol. EC-16, No. 6, pp. 765-773, December 1967.

3. Arora, S. R. and A. Gallo, "Optimal Sizing, Loading and Re-loading in a Multi-level Memory Hierarchy System," SJCC, Vol. 38, pp. 337-344, 1971.

4. Bell, Gordon C. and David Casasent, "Implementation of a Buffer Memory in Minicomputers," Computer Design, pp. 83-89, November 1971.

5. Bensoussan, A., C. T. Clingen, and R. C. Daley, "The Multics Virtual Memory," Second ACM Symposium on Operating Systems Principles, Princeton University, pp. 30-42, October 1969.

6. Brawn, B. S., and F. G. Gustavson, "Program Behavior in a Paging Environment," FJCC, Vol. 33, Pt. II, pp. 1019-1032, 1968.

7. Chu, W. W., and H. Opderbeck, "Performance of Replacement Algorithms with Different Page Sizes," Computer, Vol. 7, No. 11, pp. 14-21, November 1974.

17. Madnick, S. E., and J. J. Donovan, Operating Systems, McGraw-Hill, New York, 1974.

18. Mattson, R., et al., "Evaluation Techniques for Storage Hierarchies," IBM Systems Journal, Vol. 9, No. 2, pp. 78-117, 1970.

19. Meade, R. M., "On Memory System Design," FJCC, Vol. 37, pp. 33-44, 1970.

20. Ohnigian, Suran, "Random File Processing in a Storage Hierarchy," IEEE INTERCON, 1975.

21. Ramamoorthy, C. V. and K. M. Chandy, "Optimization of Memory Hierarchies in Multiprogrammed Systems," JACM, Vol. 17, No. 3, pp. 426-445, July 1970.

22. Spirn, J. R., and P. J. Denning, "Experiments with Program Locality," FJCC, Vol. 41, Pt. 1, pp. 611-621, 1972.

23. Strecker, William, "Cache Memories for Minicomputer Systems," IEEE INTERCON, 1975.

24. Wilkes, M. V., "Slave Memories and Dynamic Storage Allocation," IEEE-TC, Vol. EC-14, No. 2, pp. 270-271, April 1965.

25. Withington, F. G., "Beyond 1984: A Technology Forecast," Datamation, Vol. 21, No. 1, pp. 54-73, January 1975.

8. Coffman, E. G., Jr., and L. C. Varian, "Further Experimental Data on the Behavior of Programs in a Paging Environment," CACM, Vol. 11, No. 7, pp. 471-474, July 1968.

9. Considine, J. P., and A. H. Weis, "Establishment and Maintenance of a Storage Hierarchy for an On-line Data Base under TSS/360," FJCC, Vol. 35, pp. 433-440, 1969.

10. Conti, C. J., "Concepts for Buffer Storage," IEEE Computer Group News, pp. 6-13, March 1969.

11. Denning, P. J., "Virtual Memory," ACM Computing Surveys, Vol. 2, No. 3, pp. 153-190, September 1970.

12. Greenberg, Bernard S., and Steven H. Webber, "Multics Multilevel Paging Hierarchy," IEEE INTERCON, 1975.

13. Hatfield, D. J., "Experiments on Page Size, Program Access Patterns, and Virtual Memory Performance," IBM Journal of Research and Development, Vol. 16, No. 1, pp. 58-66, January 1972.

14. Johnson, Clay, "IBM 3850 - Mass Storage System," IEEE INTERCON, 1975.

15. Madnick, S. E., "Storage Hierarchy Systems," MIT Project MAC Report TR-105, Cambridge, Massachusetts, 1973.

16. Madnick, S. E., "INFOPLEX - Hierarchical Decomposition of a Large Information Management System