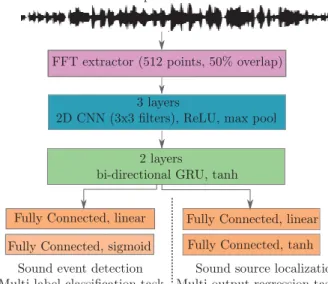

Cross-modal interaction in deep neural networks for audio-visual event classification and localization

![Figure 8.1. Fusion architectures for event recognition. Visual and audio features are obtained with DenseNet [194] and a CNN [240], respectively.](https://thumb-eu.123doks.com/thumbv2/123doknet/2894715.74090/115.892.195.731.268.452/figure-fusion-architectures-recognition-features-obtained-densenet-respectively.webp)