To link to this article :

URL :

http://www.iariajournals.org/software/tocv7n34.html

To cite this version :

Bergé, Louis-Pierre and Perelman, Gary and Hamelin,

Adrien and Raynal, Mathieu and Sanza, Cédric and Houry-Panchetti, Minica

and Cabanac, Rémi and Dubois, Emmanuel Smartphone Based 3D Navigation

Techniques in an Astronomical Observatory Context: Implementation and

Evaluation in a Software Platform. (2014) International Journal on Advances

in Software, vol. 7 (n° 3 & 4). pp. 551-566. ISSN 1942-2628

O

pen

A

rchive

T

OULOUSE

A

rchive

O

uverte (

OATAO

)

OATAO is an open access repository that collects the work of Toulouse researchers and

makes it freely available over the web where possible.

This is an author-deposited version published in :

http://oatao.univ-toulouse.fr/

Eprints ID : 15228

Any correspondence concerning this service should be sent to the repository

administrator:

staff-oatao@listes-diff.inp-toulouse.fr

Smartphone Based 3D Navigation Techniques in an Astronomical Observatory

Context: Implementation and Evaluation in a Software Platform

Louis-Pierre Bergé

1, Gary Perelman

1, Adrien Hamelin

1, Mathieu Raynal

1, Cédric Sanza

1, Minica

Houry-Panchetti

1, Rémi Cabanac

2, and Emmanuel Dubois

11 University of Toulouse

IRIT Toulouse, France

{Louis-Pierre.Berge, Emmanuel.Dubois}@irit.fr

2 Télescope Bernard Lyot, Observatoire Midi-Pyrénées

University of Toulouse Tarbes, France remi.cabanac@obs-mip.fr

Abstract—3D Virtual Environments (3DVE) come up as a good solution to transmit knowledge in a museum exhibit. In such contexts, providing easy to learn and to use interaction techniques which facilitate the handling inside a 3DVE is crucial to maximize the knowledge transfer. We took the opportunity to design and implement a software platform for explaining the behavior of the Telescope Bernard-Lyot to museum visitors on top of the Pic du Midi. Beyond the popularization of a complex scientific equipment, this platform constitutes an open software environment to easily plug different 3D interaction techniques. Recently, popular use of a smartphones as personal handled computer lets us envision the use of a mobile device as an interaction support with these 3DVE. Accordingly, we design and propose how to use the smartphone as a tangible object to navigate inside a 3DVE. In order to prove the interest in the use of smartphones, we compare our solution with available solutions: keyboard-mouse and 3D keyboard-mouse. User experiments confirmed our hypothesis and particularly emphasizes that visitors find our solution more attractive and stimulating. Finally, we illustrate the benefits of our software framework by plugging alternative interaction techniques for supporting selection and manipulation task in 3D.

Keywords-museum exhibit, 3D environment, software platform, interactive visualization, interaction with smartphone, 3D navigation, experiment.

I. INTRODUCTION

In comparison to our initial work [1], this paper explored the context of astronomical observatory in museum exhibit, integrating our previous experiment and creating a larger software platform for public demonstrations. Nowadays, 3D Virtual Environments (3DVE) are no longer restricted to industrial use and they are now available to the mass-market in various situations: for leisure in video games, to explore a city on Google Earth or in public displays [2], to design a kitchen on a store website or to observe 3D reconstructed historical sites on online virtual museum [3]. The use of a 3DVE in a museum, to engage people and facilitate the transmission of knowledge by adding fun and modern aspect [4], is the core of this work. As part of collaboration with the Telescope Bernard-Lyot (TBL), we intended to provide a support for helping visitors to better understand how this telescope operates. Indeed, access to the real telescope is restricted to keep the best possible values of temperature and

hygrometry, crucial parameters for collecting good observation results. The telescope only works at night and thus cannot be observed in a running context during the day. Therefore, an interactive application support was required to help visitors discover the TBL. It has been shown that 3D virtual environments can help understand a complex system by improving the spatial representation users have of the objects or by allowing cooperation between different users [5]. Therefore, we developed an interactive 3D environment representing the TBL and its behavior.

However, in a museum context, the visitor’s attention must be focused on the content of the message and not distracted by any difficulties caused by the use of a complex interaction technique. This is especially true in a museum where the maximization of the knowledge transfer is the primary goal of an interactive 3D experience. Common devices, such as keyboard and mouse [6] or joystick [7] are therefore widely used in museums. To increase the immersion of the user, solutions combining multiple screens or cave-like devices [8] also exist. However, these solutions are cumbersome, expensive and not widespread.

Alternatively, the use of a smartphone, as a personal handheld computer, is commonly and largely accepted. Smartphones provide a rich set of features and sensors that can be useful to interact, especially with 3DVE and with remote, shared and large displays. Smartphones also create the opportunity for the simultaneous presence of a private space of interaction and a private space of viewing coupled with a public viewing on another screen. Furthermore, many researches have already been performed with smartphones to study their use for interacting with a computer. They explore multiple aspects such as technological capabilities [9], tactile interaction techniques [10], near or around interaction techniques [11]. Given the potential in terms of interaction support and the availability of smartphones in anyone's pocket, we explore the benefits and limitations of the use of a smartphone for interacting with a 3DVE displayed in a museum context in this article.

Beyond the development of an interactive 3D environment for science popularization with only one set of interaction techniques, we took the opportunity to design and implement a platform on which it is easy to plug interaction techniques of different forms. The contribution presented in this paper is thus threefold.

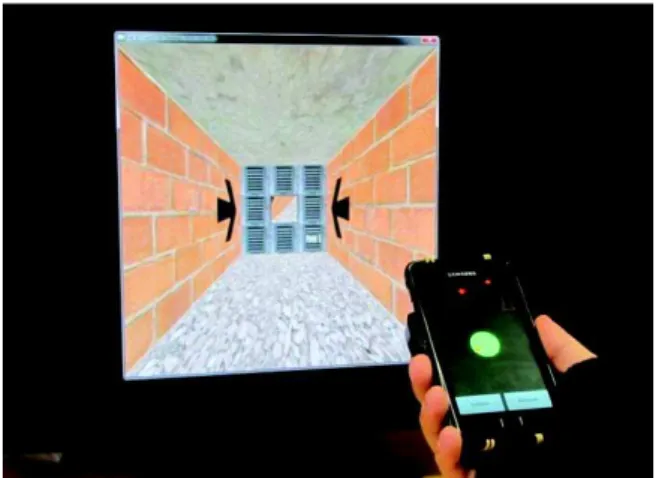

Figure 1. Smartphone-Based interaction techniques to navigate into a 3D museum exhibit: a) principle of use, b) controlled experiment, c) integration in an in-situ exhibit.

First, we adopt a user-centered approach to address the concrete need of the Telescope Bernard-Lyot: providing a 3D interactive platform for museum exhibit, which facilitates the understanding of the telescope behavior.

Second, we describe the design and implementation of the interactive platform. The platform is based on an open architecture in order to be able to plug different interaction modalities easily. Fundamental features integrated in the platform are then presented, such as 3D rendering, data visualization and interaction based on widows, mouse and 3D mouse device. As a result, this platform provides a pedagogical environment in which different interaction techniques can easily be plugged into one specific 3D environment: the objects, the size of the elements and the available actions are the same for every technique.

Third, we design and evaluate a new smartphone-based technique for navigating inside the 3D interactive platform: indeed navigation is the most predominant task a user will have to perform in order to discover and understand the virtual space. Concretely, our technique translates the motions of the smartphone into motions of the point of view in the 3DVE. We thus propose 1) to consider a smartphone as a tangible object, in order to integrate it smoothly into a museum environment and because it has been proven to be easier to apprehend by newcomers [12], 2) to display feedback and/or personalized information on the smartphone display, 3) to deport the display of the 3DVE on a large screen, in order to provide a display space visible by multiple users as required in museum contexts. As a result, our technique combines the use of a popular and personal portable device, the physical space surrounding the device and the user’s gestures and input for navigating inside a 3DVE. We compared our designed solution to the use of more common and available technologies: the keyboard-mouse device and a 3D keyboard-mouse device. We proposed a controlled evaluation focused on the interaction techniques: out of a specific museum context, the user will not be distracted by pedagogical content. We measure its usability and attractiveness in conjunction with performance considerations. The results confirm the interest of considering the use of personal mobile devices for navigating inside a 3DVE: the results are particularly significant in terms of a user’s attractivity.

The paper is structured as follow. We first position our work with regards to the context of 3D interactive

application in museums (Section II). Then we introduce our design approach of the platform and the relevant software considerations related to the platform implementation in Section III. After, we detail our smartphone-based interaction technique (Section IV) with the settings of the user experiment (Section V) and the results (Section VI). We finally discuss (Section VII) the validation of our 3D interactive platform, the originality and limitations of our smartphone-based solution and other interaction techniques integrated in our platform (Section VIII). We conclude with perspectives and future works.

II. BUILDING A3DINTERACTIVE PLATFORM FOR

MUSEUM EXHIBIT:RELATED WORKS

As already mentioned, interactive 3D virtual environments are emerging in public space applications and especially in museum contexts where they contribute to the immersion and engagement of visitors.

In this field, many works have already been done and we propose in this section a review of already well known and explored challenges. First, we focus on the different forms a 3D interactive visiting application can take in a museum. Then we synthesize technological considerations of importance and finally look at the design approaches for building a 3D interactive virtual exhibit.

A. Visiting assistance

In order to enhance the visit in museums, tour guides or audio-guide are available. These guides provide information about the different sections of a museum and guide the user through they visit by defining a specific path to follow. In the context of 3D, this raises specific challenges. First, the application must help the visitor so that he does not get lost in the 3D environment. Offering landmarks, maps, etc. are thus required [13]. The realism of the scene also plays a role [14]. To complement this immersion, avatars, conversational agents, realistic humanoids are also inserted in such app to guide or provide explanations to the visitors [15].

Alternatively, a smooth mix of real and virtual enables the visitor to proceed through a real museum, to collect elements of interests, and after the visit to virtually re-explore in 3D those collected elements [16]. This is close to the attempts of using 3D virtual environments to support post-visit of a museum [17].

Beyond those individual visits, 3D virtual visits also raise the possibility to develop true collaborative visits so that remote visitors can visit it together [18]. To some extent, it is also a possible solution to explore in 3D some of the objects exposed in the museum. The concept of collaborative exhibit is especially relevant for blind visitors [19].

B. Technological considerations

To settle these different forms of 3D interactive museum visits, several technical issues are raised and addressed in the scientific literature. In the museum context, two main considerations emerge from the literature: the 3D environment realism and the remote access.

1) 3D use and constraints

Although not specific to museum contexts, many ways of modeling museum objects into a 3D scene have been explored. It ranges from the modeling of the objects using dedicated approaches [20] to really precise modeling with a 3D laser scanner [21] through image based reconstruction [22]. In addition, the rendering of the resulting 3D meshes can be crucial in order to give the user a good experience during a virtual visit: it involves a large graphical management as the handling of light effects on the objects or realistic shadows [23]. More complex treatments may also be required to obtain high resolution, accurate and complete 3D models of parts of an exhibit. This is for example possible through the combination of different 3D model types [24].

2) Collaborative access, distant access

Once the virtual exhibit has been built, it remains necessary to allow its use in the context of a museum. Specific software design aspect must be considered to provide a support to an effective distant and collaborative access [25]. Indeed, as many visitors may come at the same time to the museum section, they have to be able to interact with the environment at the same time without having to wait the other visitors to leave. Many software architectures have been defined in the scientific literature to deal with such problems [26]. For example, if the 3D virtual environment allows a visitor to manipulate an element of the virtual museum, control over the environment must be handled by the platform. Apart from the collaborative or simultaneous access, visitors may have access to the virtual exhibit from everywhere: to this end, web access has been explored [14].

C. Building support to virtual exhibits

Behind the form, rendering and software considerations, developing a virtual exhibit also requires to think of the structure of the application. This must be done in two ways.

1) Management systems

The first way consists in structuring the development of the application. Tool chains to create, store, manage and visualize all the data needed in virtual exhibits have been defined [27]. Other approaches have also been proposed where non-familiarized-in-computer-science users can edit their own application [27]. Such systems help the museum staff to manage their virtual museum easily and allow dynamic modifications (adding or removing objects, adding museum sections that does not exists in the real museum, etc.) [27].

2) Learning perspectives

The second way consists in structuring the knowledge transfer approach. Indeed, once the 3D environment has been built, there is still the need to consider the ultimate goal of this virtual environment: its knowledge transfer capabilities. Some researchers have been led to determine if the use of a 3D environment helps the user in the learning process [4]. After experiments with several museums with or without a 3D virtual environment, it has been shown that these virtual accesses allow a better understanding of the museum information. This finding is based on the fact that the users are not forced to follow a specific path during their visit and thus are more concentrated on the museum section they are visiting.

3D virtual spaces also offer tools and support for a pedagogical transfer. The impact of different tools is studied to reveal how it may enhance the learning experience [28].

D. Interaction techniques for 3D museum applications

Finally, the fourth major areas of research related to interactive 3D virtual environment in museum is focusing on the user’s interaction with and within the 3D environment. Many interaction techniques dedicated to a 3D virtual museum environment have been studied in the past few years. Indeed, interacting with a 3D virtual exhibit application can be achieved using a simple GUI interface [29], where each object is represented by a tab that can be activated. It is possible to use tactile devices [30] to allow zooming on particular objects or turning them around to see them on a different point of view. Haptic devices can also be used [8] to enhance the spatial knowledge of the users when they manipulate the 3D objects of the application. Finally, augmented reality can be used to upgrade the immersive capabilities of the virtual exhibit [31].

E. Outcome of the state of the art

According to the scientific literature, the use of 3D in a museum context has been widely addressed over the last years. Many different preoccupations have been raised and considered such as the integration of such application in a museum, 3D rendering issues and interaction techniques.

Technological advances now largely allow the use of various and advanced forms of interactions. It is therefore required to provide a way to change interaction techniques easily in order to support the opportunity offered by new or emerging forms of interaction.

Furthermore, little attention has been paid so far to the use of personal devices for navigating 3D in a museum context. Exploring the potential support personal smartphone can offer to perform complex interaction such as tasks in 3D environment are thus also required.

In our work, the visiting assistance we are interested in is limited to a virtual interactive application, used in a museum with museum mediators available to explain the information provided by the application or using the application to catch an audience. From a technological point of view, we are seeking to provide a support to easily test different interaction techniques. Therefore, we need a software platform allowing easy plug and play of multiple interaction

techniques. Regarding the application supporting the visit, we are focusing on a way to disseminate information about the Telescope Bernard Lyot (TBL) to none experts of astronomy and telescopes. We are more specifically focusing on the understanding of the operators’ activity on the TBL. Finally, in terms of interaction techniques we are mainly interested in providing a support for individual visitor to use a smartphone device to perceive a detailed area of one part of the 3D scene rendered on the remote and large screen.

From these considerations, we designed, implemented and evaluated a set of interaction techniques based on the use of a smartphone for 3D virtual museum exhibit. The different interaction techniques have been plugged into a new platform that handles a 3D virtual museum exhibit based on a user-centered design approach. The aim of the platform is also to contribute to better take into account the user when developing a 3D interactive museum exhibit by offering a support to easily plug new interaction techniques: our goal behind the platform is thus to plug and compare different interaction techniques in a scenario and a 3D environment that serve as references. As a consequence, the platform architecture, which has been designed according to the state of art, also contains particular features that allow a better handling of the multiple interaction techniques that can be plugged on it.

III. DESIGNING THE 3DINTERACTIVE PLATFORM

As already mentioned, the platform is simultaneously intended to be a support for explaining how a telescope works and easy to plug interaction techniques for museum 3D virtual environments.

The user-centered design approach we followed to reach these two goals includes two steps. It firstly includes an observation step in which the goal is to identify the requirements for the interactive exhibit of the TBL. The design software architecture and the interaction techniques have been led in a second place. In the following sections we report the design tools used along the different steps and the design decisions taken to develop the platform.

Figure 2. The Telescope Bernard-Lyot outside (left) and inside (right).

A. Context

The Telescope Bernard-Lyot (TBL) is located on top of the Pic-du-Midi (Figure 2). It is the largest telescope in Europe (2m.) and it takes advantage of a very good position: high altitude, far from city lights, etc. The operators of the TBL are in charge of planning and executing observations of “stars”. These observations are requested by European researchers.

To perform these observations, a Windows, Icons, Menus and Pointing device (WIMP) application allows the monitoring of relevant data and the emission of “command-lines” to position the telescope and its dome. Several screens are displaying all the required information in a very basic form as illustrated on the Figure 3.

Figure 3. Current operators’ desktop (top) and their WIMP applications (bottom).

B. Domain analysis

In this context, we performed two different forms of analysis: an in-situ observation to identify the tasks and data used by the current operators, and semi-guided interviews to define the potential end-users and the scenario describing the use of the TBL.

During the in-situ observation, we watched the operators of the TBL during their work in the supervision room. We also visited the dome to have a better representation of the telescope and its components. We discussed with them to understand the tasks they performed more finely and we observed the current WIMP application they used. We formalized this task analysis through a task tree expressed with K-MADe [32]. The modeling of the overall activity leads to the identification of approximately 60 tasks. This provides a representation of the operator’s activity and the required data can easily be linked to the appropriate subtask. It constitutes a good support to organize the data retrieved in this first step of the analysis.

After that, we organized semi-guided interviews with the operators and the personnel in charge of the visits (at the existing museum and at the dome) to define their additional or more specific needs that did not appear in the first observations. These multidisciplinary and participative meetings were especially important to identify the audience and the most important steps of the activity of an operator that must be explained to the different audiences.

From this domain analysis step, we established a list of data that are the most relevant when performing an observation with the telescope:

· The two angles of the TBL

· The position of the seal of the dome (two angles).

· Inside and outside temperatures and hygrometry are also required to ensure a good quality observation.

We also ended with two potential targets, sharing a common scenario:

· A museum visitor

· An astronomy student who learns how such telescopes work.

Out of the task analysis we built with animators of the museum a defined pedagogical scenario allowing a random person to understand the set of operations performed to operate the telescope. It is split in five different steps, which globally represent how the telescope works and gathers data from a selected star:

· Activation of the hydraulic system · Selection of a star

· Alignment of the telescope and the dome in the star direction

· Activation of the earth rotation and movement of the telescope to follow the star movement · View of the gathered data

These steps are further detailed and illustrated in Section III.C.3. Knowing all these informations, we were able to develop a platform that not only answers the required features, but also provides an easy way to plug the interaction techniques of different modalities and connect them to the 3D environment.

C. Design

Obviously, the basis of the 3D interactive platform is the 3D scene. We thus started by building this environment on the basis of 3D meshes provided by the Telescope Bernard-Lyot. These meshes had been built in SketchUp [33] on the basis of the 2D blueprints of the TBL. Our 3D environment has been built using the open source 3D renderer: Irrlicht [34].

Beyond the 3D environment, the design of the platform was decomposed into three subparts: the software and architecture of the system, a first set of interaction techniques and the instantiation of the pedagogical scenario.

1) Software design

The software design must allow plugging different interaction techniques to be used and evaluated. The software perspective must also take into account the context of use of the platform: several museum visitors may interact simultaneously from different devices or even places, time constraints for the visit exists, etc. This resulted into three design considerations: the architecture, the multi-users management and the components communication.

To ensure a clear decomposition of the interaction and system parts, and allow for a modular implementation, an adaptation of the MVC architecture model is used to structure our platform.

The concept of Model is used in our case to handle the data related to the operated telescope: position of the different elements, temperature, humidity, etc. The TBL being only operated at night, a simulation is required at day time to demonstrate its behavior to visitors. Therefore, the concept of Model applied to our platform allows a connection to the real TBL and/or a simulation of it. The first

Model, called “real telescope” (Figure 4), gathers the data of

the TBL and transfers it to the platform. The second one, called “simulated telescope” (Figure 4), emulates the behavior of the TBL. Actually, the 3D software platform allows visitors to manipulate only the simulated telescope and visualize the real one.

The need to visualize the telescope data with a WIMP interface for example can directly be mapped to the concepts of View of the MVC pattern (Figure 4). Moreover, the need to manipulate the telescope or to navigate inside the 3D view can directly be mapped to the concepts of Controller of the MVC pattern (Figure 4). The 3D view is a mix of View and

Controller concepts. In fact, the 3D view allows a

visualization of the telescope data and also permits to manipulation element that control the scenario of the software platform (see Section III.C.3).

In the middle of these components, a "Dialog Controller" orchestrates these components and their synchronization (Figure 4). It also includes a waiting queue to dispatch the control over the multi-user setting. This software implementation supports an easy reconfiguration of the platform to fit with different types of tasks, settings, features and interaction techniques.

Figure 4. MVC architecture with different plugged components.

Finally, to ensure an easy-to-plug, multi-platform compliant architecture and multi-programming language, we based the software communication protocol on the Ivy network API [35]. We created a set of textual predefined messages to support the communication over the different components types. These messages are generic for plugging

different interaction technique into our software platform. As an example, controller components supporting manipulation of the 3D virtual environment has specific network messages to take control over the 3D scene and to control the telescope; view components have messages to update information regarding the telescope data modification. Those communications are encapsulated into DLLs in order to help future interaction technique developer to worry only about the interaction technique and not about their link to the platform.

2) Interaction techniques design

The second perspective of the design focuses on the way the users will concretely act on the 3D environments and its features. Users’ interaction design is more specifically dedicated to the definition of a concrete interactive application to support the explanation and popularization of the scientific equipment. It aims at offering flexibility to the user in terms of access to the interactive system, i.e., to provide multiple interaction types to perform the three tasks we defined previously in the 3D environment.

We first lead a participatory design session, involving telescope operators, visitors, HCI specialists, 3D specialists and museum facilitators to produce mockups describing the organization and representation of the data required when operating the telescope. Results permits to design two different forms of data visualization and two sets of interaction technique.

a) Data visualization

Two forms of visualization are available on the current platform: a textual view and a 3D view of the data. The textual view is made of a set of labels with information such as the angles of the telescope and the position of the seal on the dome (Figure 5-left). It also contains hydrometric and temperature data, inside and outside the dome (Figure 5-right).

Figure 5. WIMP based view of the telescope data.

The 3D view provides a graphical representation of the current position and orientation of the elements of the telescope in 3D (Figure 6). Relevant data are also labeled where appropriate: angle values are displayed on the rotation axis, temperature is a flying label in the always visible dome, etc. The 3D view thus aggregates information in a single graphical and a realistic fairly representation.

Further, on-going iterations will optimize the presentation of the data. For example, recent participatory design sessions produced mock-ups for iconic representations of the labels: once developed they will be used to replace the textual label in the 2D view.

Figure 6. 3D view of the telescope.

b) Keyboard-mouse interaction technique

To cover all these manipulations, the simplest solution consists in offering the user traditional windows and buttons interface. This also constitutes the most realistic interaction with regard to the actual settings used by the real operators of the TBL (Figure 3). Figure 7-left show our WIMP interface to manipulate the elements of the telescope and to select a star from a list. In comparison to the initial WIMP interface, our domain analysis permits to limit the number of buttons and therefore simplify the interface for non-expert users. A similar interface allows controlling the earth rotation and thus observing the stars movement in the 3D view.

Figure 7. WIMP based telescope manipulator.

To complement this widget set and allow navigation in the 3D environment we also developed two alternatives. The first one involves the use of the mouse and keyboard, which are very common in video games. The keyboards arrow keys are used to perform translations in the environment and the mouse motions allow the user to orient its point of view in the scene. The second is a WIMP interface for different buttons for controlling the position and orientation in the 3D environment (Figure 8).

c) 3D mouse interaction technique

We have also integrated a commercial device dedicated to navigate inside the 3D environment: a 3D mouse, the Space Navigator [36] (Figure 9-left corner). This 3D mouse can be used to control the three rotation axis and three translation axes. A WIMP interface permits to calibrate and select the sensibility of the translation and rotation movement (Figure 9).

Figure 9. WIMP interface to configure the 3D mouse named Space Navigator [36] (left corner).

3) Instantiating the scenario on the platform

The third and last perspective clearly addresses the need to develop a concrete application to explain the behavior of the Telescope Bernard-Lyot through a scenario.

Given the 3D environment and the interaction techniques we developed, the five steps of the pedagogical scenario defined in close collaboration with the TBL and museum facilitators (see Section III.B) has been instantiated as follow.

The first step, “activation of the hydraulic system” consists in finding a red button placed in the 3D environment. This navigation task can be achieved using a WIMP interface or a 3D mouse navigator. Coming close to the button triggers a pressure on it. This button, although it does not match any button on the real telescope, represents the first step that the operators do when they use the telescope: initiating the equipment.

The second step, “selection of a star”, consists in selecting the star to observe. To do this, the dome of the 3D environment fades and the user can see all the stars currently added in the 3D scene. Selecting a star can be done either by clicking on it thanks to the mouse in the 3D scene or by choosing a star in the WIMP interface (Figure 7-left).

The third step, “alignment of the telescope and the dome in the star direction”, consists in manipulating all the elements of the 3D scene in order to observe the star. To do that, the user can move the 3D objects by using the WIMP interface (Figure 7-left). Alternatively, an automatic resolution can be executed by the platform to perform the appropriate translations and rotations of the different elements of the TBL.

The fourth step, “activation of the earth rotation and movement of the telescope to follow the star movement”, emulates the earth rotation around its axis. From a human point of view, it seems that the stars are rotating in the sky around the polar star. As a real observation can last for hours, it is important to perceive the impact of the earth rotation on the work of the operator. The sky rotation in the

3D environment is of course a lot faster than in reality. The stars start rotating and the user has to constantly align the telescope in the star direction. Another interest of this step is to underline that the telescope has been built in such a way that there is only one axis that changes over time.

The fifth and last step, “view of the gathered data”, shows to the user the data collected from the star observation performed through the previous steps (Figure 10). These data were gathered on real stars by the Telescope Bernard-Lyot and are shown to the user with an explanatory text. The data gathered are the spectrum of the star light obtained with a spectropolarimeter, one scientific originality of the equipment offered at the TBL.

Figure 10. Spectrum of a star light (left) and its associated magnetic field (right).

As illustrated here, the set of interaction techniques, based on windows, label, keyboard-mouse and 3D mouse is well suited for professional contexts and for users who are familiar with the use of 3D environments. But targeted audience is also made of museum visitors who may be very occasional users of 3D. To better engage the visitor and to explore the adequacy of other forms of interactive techniques, we have therefore designed a second type of interaction techniques to navigate inside the 3D environment based on the use of a smartphone. In the next section, we will detail our interaction technique.

IV. OUR SMARTPHONE-BASED INTERACTION TECHNIQUE

As described in the introduction, our interaction technique is based on the handling, manipulation and use of a smartphone, a familiar and personal object for most users. Three major characteristics define our interaction technique: tangible manipulation of the smartphone, personalized data displayed on the smartphone and 3DVE displayed on a remote screen.

We restricted the degrees of freedom of the navigation task in order to be close to human behavior and existing solutions in video games with standard device: two degrees of freedom (DOF) are used for translations (front/back and left/right) and two for rotations (up/down and left/right). We did not include the y-axis translation and the z-axis rotation since they are not commonly used for the navigation task. To identify how to map the tangible use of the smartphone to these DOF, we first performed a guessability study as performed by other work [37].

A. Guessability study

14 participants have been involved and they were all handling their own smartphone in the right hand. To facilitate the understanding, the guessability study dealt with only one translation and one rotation. A picture of a 3DVE was presented to the participants on a vertical support (Figure 11-left). It included a door on the left of the 3D scene and the participants were asked to perform any actions they wished on their smartphones in order to be able to look through the door. A second picture (Figure 11-right) was then displayed: now facing the door, the participants were instructed to pass through the door.

Figure 11. Pictures presented during the guessability study.

In this second question, 11 participants performed hand translations to translate the point of view. Interestingly none suggested using the tactile modality. Results are more contrasted with the first question, requiring a rotation: 5 par-ticipants used a heading rotation of the handled smartphone; only one used the roll technique; three proposed to hit the target with their smartphone; five participants placed the smartphone vertically (either in landscape or portrait orientation) and then rotated the smartphone according to the vertical axis (roll) thus preventing the view on the smartphone screen.

B. Design solution

From the guessability study, we retained that physical translations of the smartphone seem to be the most direct way to perform translations of the point of view in the 3DVE. It has been implemented in our technique as follows. Bringing the smartphone to the left / right / front or back from its initial position triggers a corresponding shifting movement of the point of view in the 3DVE (Figure 12-a). The position of the point of view is thus controlled through a rate control approach; the applied rate is always the same and constant. It is of course possible to combine front / back translation with right / left translation.

Feedback is displayed on the smartphone while moving it to perform these translations (Figure 12-b). A large circle displayed on the smartphone represents the initial position of the smartphone and the physical area in which no action will be triggered: the neutral zone. A small circle represents the current position of the smartphone and arrows express the action triggered in the 3DVE. As long as the small circle is inside the large circle, the navigation in the 3DVE is not activated. The feedback provided during each of four

possible motions is illustrated in Figure 12-b. Finally, the smartphone vibrates every time that the navigation action is changed.

Figure 12. Our smartphone based interaction. (a) Physical action for translation. (b) Feedback of the translation: front, left, front and right, back

translation.

Regarding rotations, we retain from the guessability study the most usable solution: rotations of the hand-wrist handling the smartphone are mapped to orientations of the point of view in the 3DVE. In our implementation, horizontal wrist rotations to the left/right of the arm are mapped to left/right rotations of the viewpoint (heading axis, rY) and wrist rotations above/below the arm are mapped to up/down rotations of the viewpoint (pitch axis, rX) (Figure 13-a). A position control approach has been adopted here that establishes a direct coupling of the wrist angle with the point of view orientation. A constant gain has been set for the wrist rotations: the limited range of 10° left and right [38] can be used to cover the range of the rotation angle inside the 3DVE (180°). This solution does not bear a U-turn: this was not required in the experiment but could be solved by transforming the position control into a rate control when the wrist reaches a certain angle.

As for translations, feedback is displayed on the smartphone while moving it to perform these rotations. It is rendered through two "spirit levels": they provide an estimation of the current orientations of the smartphone (Figure 13-b) with respect to the initial orientations used as a reference.

Figure 13. Our smartphone based interaction. (a) Physical action for rotation. (b) Feedback of the rotation.

To avoid unintended motions of the virtual camera in the 3DVE, the translations and rotations of the smartphone are applied to the 3DVE only when the user is pressing the button “navigate” displayed on the smartphone.

The smartphone also displays a “calibrate” button. This allows the user to recalibrate the smartphone at will, i.e., to reset the center of the neutral zone to the current position of the smartphone and the reference orientations.

Figure 14 illustrates the use of this smartphone-based interaction technique. Circles in the middle of the smart-phone screen indicate that the user is in the neutral zone. Spirit levels on top of the smartphone screen indicate he is looking a little bit upwards (right spirit level) and slightly to the right (left spirit level). As the user's thumb is not pressing the "navigate" button displayed on the smartphone screen (left corner), the motions of the smartphone do not currently affect the point of view on the scene.

Figure 14. User navigating with the smartphone-based technique.

V. EXPERIMENT

We conducted an experiment to compare our smartphone-based interaction technique with two other techniques using devices available in museums: a keyboard-mouse combination and a 3D keyboard-mouse. In the museum context, the temporal performances are not predominant. In fact our goal was to assess and compare the usability and attractiveness of these three techniques. Our protocol does not include museum information in order to keep the participant focused on the interaction task.

A. Task

The task consisted in navigating inside a 3D tunnel com-posed by linear segments ending in a door (Figure 15-b). The task is similar to the one presented in [39] and sufficiently generic to evaluate the interaction techniques correctly. The participants had to go through the segments and go across the doors but could not get out of the tunnel. Black arrows on the wall allowed finding the direction of the next door easily. The segments between the doors formed the tunnel, its orientation was randomly generated: the center of each door is placed -40, -20, 0, 20 or 40 pixels to the left/ right axis and

to the top/bottom axis of the center of the previous door (Figure 15-a). One trial of the task consist in navigate inside a tunnel including all 25 possible directions of the next door. The movement of the user is not liable to gravity. When the user looks up and starts a front translation movement, the resulting motion is a translation in the direction of the targeted point.

Figure 15. (a) Representation of one segment of the 3D tunnel. (b) Screenshots of the 3D environment of the experiment.

B. Interaction techniques

We compared three techniques: keyboard-mouse, 3D mouse and our technique based on a smartphone. In keyboard-mouse, the movements of the mouse control the 2 DOF point of view of the virtual camera (orientation). The four directional arrows of the keyboard control the 2 DOF of the translation of the virtual camera. In 3D mouse, the participant applies lateral forces onto the device to control translations (right/left, front/back), and rotational forces to control orientations of the virtual camera. The use of our technique, the smartphone, has been described in Section IV. For the three techniques, it appears that left/right translations are particularly useful when collision with doors occurs.

For each technique we determined the speed gain of the translation and rotation tasks through a pre-experiment with six subjects. We asked the participants to navigate inside our 3D virtual environment with each technique and to adjust the gains freely to feel comfortable when performing the task. We stopped the experiment and recorded the settings when the participant successfully went through 5 consecutive doors. Finally, for each technique, we averaged the values of gain between the participants. We noticed that the gain of the translation of the keyboard-mouse was higher than the 3D mouse or smartphone. This is probably due to the people habit in handling this technique.

C. Apparatus

The experiment was done in full-screen mode on a 24″ monitor with a resolution of 1920 by 1080 pixels. We developed the environment with a 3D open source engine, Irrlicht, in C++. For the keyboard and mouse device, we used a conventional optical mouse and a standard keyboard with 108 keys (Figure 16-a). For the 3D mouse we used the Space-Navigator [36] (Figure 16-b), a commercial device with 6 DOF. For the smartphone, we implemented the technique on a Samsung Galaxy S2 running Android 4.1.2 (Figure 16-c). To avoid an overload of the smartphone computing capacities with the processing of the internal sensors (accelerometers, gyroscope) we used an external 6D

tracker: the Polhemus Patriot Wireless [40] (Figure 16-d). We fixed a sensor to the rear face of the smartphone. Via a driver written in C++, the marker returns the position and the orientation of the smart-phone. We filtered the data noise with the 1€ filter [41].

Figure 16. (a) The keyboard-mouse, (b) The 3D mouse and (c) the smartphone configuration. (d) The Polhemus sensor.

D. Participants and procedure

We recruited a group of 24 subjects (6 female) aged 29.3 (SD=9) on average. All subjects were used to the keyboard and mouse, 17 of them had a smartphone and only 1 had already used the 3D mouse.

Every participant performed the 3 techniques (smartphone, keyboard-mouse and 3D mouse). They started with the keyboard-mouse technique in order to be used as a reference. The order of smartphone and 3D mouse techniques was counterbalanced to limit the effect of learning, fatigue and concentration. For each technique, the subject navigated inside 6 different itineraries. We counterbalanced the itineraries associated with each technique across participants so that each technique was used repeatedly with each group of users.

The participants were siting during the experiment and were instructed to optimize the path, i.e., the distance travelled. They could train on each technique through one itinerary. When the user passed through a door, a positive beep was played. When the user collided with an edge of the tunnel, a negative beep was played.

After having completed the six trials for one technique, the subject filled the System Usability Scale (SUS) [42] and AttrakDiff [43] questionnaires and indicated three positive and negative aspects of the technique. The procedure is repeated for the two remaining technique. The experiment ended with a short interview to collect oral feedback. The overall duration of the experiment was about 1 hour and 30 minutes per participant.

E. Collected data

In addition to the SUS and AttrakDiff questionnaires filled after each technique to measure usability and attractiveness, we also asked for a ranking of the three interaction techniques in terms of preferences. From a quantitative point of view we measured the traveled distance and the number of collisions.

VI. RESULTS

In the following section here are the quantitative and qualitative results we obtained.

A. Quantitative results

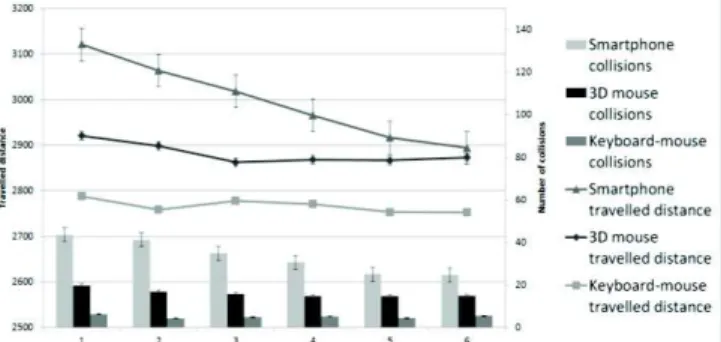

First, a Kruskal-Wallis test confirmed that none of the 18 randomly chosen itineraries had an influence on the collected results. On average, we observed (Figure 17) that the travelled distance is the smallest with the keyboard-mouse (2766px, SD = 79), followed by the 3D mouse (2881px, SD = 125) and the smartphone (2996px, SD = 225). According to a Wilcoxon test these differences are significant. The same conclusions can be drawn with regard to the amount of collisions (keyboard-mouse: 5.08, SD = 5.68; 3D mouse: 16.11, SD = 15.86; smartphone: 33.35, SD = 24.64).

Figure 17. Evolution of the travelled distance and the amount of collisions according to 6 trials of the subjects.

Given the high dispersion of the distance and collision measures, we refined this analysis in distinguishing the results obtained for each of the six trials performed by the 24 participants (Figure 17). This refined analysis reveals a significant learning effect with the smartphone technique: between the first and sixth trial, the distance is 7.3% shorter (Wilcoxon test, = 6 × 10!") and collision are reduced of

43.3% (Wilcoxon test, = 2 × 10!#). A significant learning

effect is also observed with the 3D mouse, but only in terms of distance and with a smaller improvement (1.6% shorter, Wilcoxon test, = 0.049).

The learning effect with the smartphone is so important that, at the last trial, the travelled distance for the smartphone (2893px) and the 3D mouse (2873px) is comparable (no significant difference, Wilcoxon test, = 0.49).

B. Qualitative results

Three aspects have been considered in the qualitative evaluation: usability, attractiveness and the user’s preference.

Usability evaluation: the SUS questionnaire [42] gives

an average score of 82.60 (SD=12.90) for the keyboard-mouse, 54.79 (SD=22.47) for the smartphone based interaction and 53.54 (SD=27.97) for the 3D mouse. A Wilcoxon test shows that the SUS difference is statistically significant between the keyboard-mouse and each of the two other techniques (3D mouse, smartphone). However, the SUS difference is not statically significant between the 3D mouse and the smartphone. Research conducted to the interpretation of the SUS score [44] permits to classify the usability of the keyboard-mouse as “excellent”. According to this interpretation scale, the usability of the smartphone and the 3D mouse is identified as “ok”.

We also note a wide dispersion of the SUS score. We thus performed a more detailed analysis of the SUS score. First, according to [44] a system with a “good” usability must obtain a score above 70. In our experiment, 33% of the participants scored the 3D mouse above 70 while 37% of the participants scored the smartphone above 70. Second, 3D mouse and smartphone were two techniques unfamiliar to the participants. The results of the SUS questionnaire show that when the smartphone is used after the 3D mouse, the average score for the smartphone is 65.62 whereas in the other order the average score is 43.96. The perceived usability of the two unfamiliar techniques is therefore lower than the perceived usability of the keyboard-mouse. However, once the participants have manipulated these two unfamiliar techniques, the perceived usability of the smartphone increases drastically.

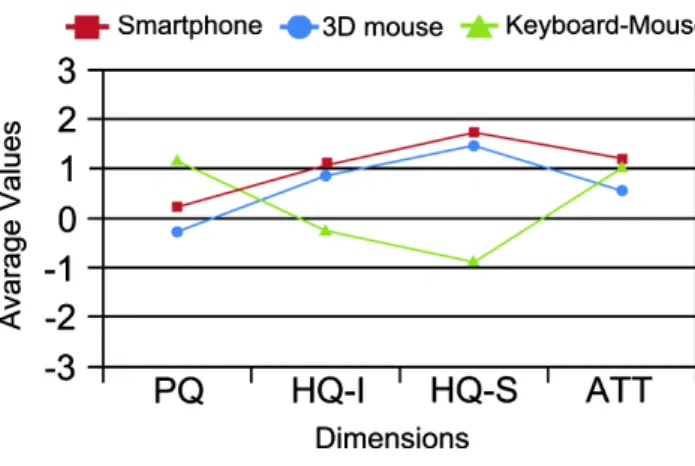

Attractiveness: Data collected using AttrakDiff [45]

give an idea of the attractiveness of the technique and how it is experienced. Attrakdiff supports the evaluation of a system according to four distinct dimensions: the pragmatic quality (PQ: product usability, indicates if the users could achieve their goals using it); the hedonic quality – stimulation (HQ-S: determine to which extent the product can support the need in terms of new, interesting and stimulating functions, contents and interaction); the hedonic quality – identity (HQ-I: indicates to what extend the product allows the user to identity with it); the attractiveness (ATT: global values of the product based on the quality perception).

Figure 18. Portfolio generated on the AttrakDiff website.

Figure 18 shows a portfolio of the average value of the PQ and the HQ (HQ-S+HQ-I) for the three interaction techniques assessed in our user experiment.

The keyboard-mouse was rated as “fairly practice-oriented”, i.e., one of the first levels in the “task-oriented” category. According to the website report [45], the average value of PQ (above 1) indicates that there is definite room of improvement in terms of usability. The average value of HQ

obtained (approx. -1) expresses that there is clearly room for improvement in terms of user’s stimulation. The 3D mouse was rated as “fairly self-oriented”, i.e., one of the first levels in the category “self- oriented”. The average value of PQ (approx. 0) expresses that there is room for improvement in terms of usability. The average value of HQ obtained (approx. 1) expresses that room for improvement also exists in terms of user’s stimulation. The smartphone was rated as “self-oriented”. The average value of PQ (approx. 0) expresses that there is room for improvement in terms of usability. The average value of HQ obtained (above 1) expresses that the user identifies with the product and is motivated and stimulated by it.

Figure 19 summarizes the average values for the four AttrakDiff dimensions of the three interaction techniques. With regards to the four dimensions the smartphone is rated higher than the 3D mouse and the differences are statistically significant (T-test, p<0.05). For the PQ value the keyboard-mouse is better than the smartphone (statistically significant, p<0.05). For HQ-I and HQ-S values the smartphone is better than the keyboard-mouse (statistically significant, p<0.05). In terms of ATT, the smartphone is again rated higher than keyboard-mouse but the difference is however not statistically significant (p>0.05). Compared to the keyboard-mouse, the smartphone is considered as novel, innovative, inventive, stylish and creative. Improvements in terms of simplicity, straightforwardness or predictability could increase the average value of PQ and probably increase even more the ATT value of the smartphone.

Figure 19. Average values for the four dimensions of the AttrakDiff questionnaire.

User preference: at the end of the experiment a short

semi-guided interview was performed. The participants were first asked to rank the three techniques from 1 (best) to 3 (worst). The results are in line with the SUS scores: the keyboard-mouse technique is largely preferred and the 3D mouse is by far the least appreciated technique: only 2 participants out of 24 ranked it as the best, and 14 ranked it as the worst. The smartphone based-interaction is ranked uniformly in the three places (7, 9, 8).

Finally, three positive points and three negative points were asked for each technique. The most frequently

mentioned positive points are “quick”, “easy” and “accurate” for the keyboard-mouse technique, “intuitive”, “novel” and “usable with on hand” for the 3D mouse and “immersive”, “funny”, and “accessible to everybody” for the smartphone. The participants thus appreciate the conditions of use created by the smartphone while they particularly pinpoint the effectiveness of the mouse and provide general comments about the 3D mouse.

The most frequently mentioned negative aspect is related to a practical aspect of the keyboard-mouse (“requires the use of both hands”). They are related to the effectiveness of use of the 3D mouse (“difficulty to combine translation and rotation at the same time”, “lack of precision” and “high need for concentration”) and for the smartphone it focuses on one specific feature (“difficulty to translate to the left or right”) and the overall context of use (“the apparent time of learning” and “the tiredness caused in the arm”).

Technical issues for the 3D mouse and effectiveness of the keyboard-mouse are thus highlighted while the benefits and limits related to the interactive experience are mentioned for the smartphone. This clear shift of interest between the three techniques reveals that the disappointing performances of the smartphone highlighted in the previous section are not totally overruling the interest of the participants for the smartphone-based technique. It is therefore a very interesting proof of interest for further exploring the use of a smartphone in 3DVE.

VII. DISCUSSION

A. Smartphone-based interaction: comparing our solution to existing ones

Among the existing attempts for exploring the navigation of 3DVE with a smartphone, two different settings exist. A first set of solutions, as opposed to our setting, proposes to display the 3DVE directly on the smartphone. Different techniques are explored to change the point of view inside the 3D scene: tactile screen like Navidget [46], integrated sensor [47], smartphone motions in the space around a reference, as Chameleon technique [48] and T(ether) [49] and manipulation of physical objects around the smartphone [50]. The second set of solutions avoids issues related to the occlusion of the 3DVE with fingers by displaying the 3DVE on a distant screen. These involved solutions integrated sensor [51] to detect user’s motions, additional tactile screen [52] or a combination of both [53]. Although our technique is clearly in line with this second set of solutions, our use of the smartphone presents three major originalities. Firstly, the smartphone is not limited to a remote controller: it is also used to provide the user with feedback or personalized information. Secondly, using tactile interaction to support the navigation would occlude part of the screen and prevent its use to visualize data, selecting objects or clicking on additional features. Instead, physical gesture are applied to the smartphone to control rotations like in [54] or [53] but also to control translations of the point of view in the 3DVE. Thirdly, the choice of the gestures to apply has been guided by the results of a guessability study that highlights the most probable gesture users would perform with a smartphone.

We used this approach rather than a pre-experiment or results of existing experiment [53] because when getting familiar with the manipulation of a smartphone, universal gestures will be adopted, and not necessarily the ones known as the most efficient. The users’ prime intuition of use looked more important to us.

B. User’s experiment results: analysis

Beyond the designed interaction technique, the contribu-tion includes a set of evaluacontribu-tion results. The user experiment revealed a significant learning effect with the smartphone. This is a very encouraging result because no learning effect was observed with keyboard-mouse and 3D mouse although the participants were unfamiliar with 3D mouse and smartphone: the use of a smartphone thus significantly improves over the time.

Results also revealed that the use of our smartphone based technique to navigate inside a 3DVE is more attractive and stimulating than a more usual technique such as the keyboard-mouse and the 3D mouse.

In terms of usability, users’ preferences (interaction technique ranking) and quantitatively (travelled distance and amount of collisions) our smartphone technique appears to be weaker than the keyboard-mouse technique but similar to the 3D mouse.

This tradeoff between attractivity and usability /performance emphasizes that compared to two manufac-tured devices; our technique is better accepted but weaker in performance and usability. This is particularly encouraging because technological improvements of our technique, such as mixing the use of integrated sensor with image processing to compute more robust and accurate smartphone position and orientation, will also increase the user's performance. In addition, the use of smartphones is already widely spread and we believe that their use as an interaction support with remote application will develop as well and become a usual interaction form.

Altogether this user experiment establishes that the use of a smartphone to interact with 3DVE is very promising and needs to be explored later.

VIII. INTEGRATING THE SMARTPHONE BASED TECHNIQUE AND OTHER ADVANCED INTERACTION TECHNIQUES IN THE

PLATFORM

A. Assessing the easy to plug feature of the platform

Following these very positive results, we have successfully and easily connected the presented smartphone interaction technique to the interactive platform presented in Section III. Concretely, our technique is used to control the navigation step in the pedagogical step of the scenario. It thus consists in moving through the dome and around the telescope to collect the inside temperature, displayed only when the visitor is close to the floor, and the outside temperature, displayed only when the visitor is close to the aperture of the dome. We just add one class implementing the Controller concept of the DLL to plug our interaction technique into the software platform. Approximately 10

minutes are sufficient to create the link between a new interaction technique and our software platform.

To further assess the ability of the platform to plug new interaction technique easily, we have developed two other advanced interaction techniques for controlling navigation. The first one is based on a physical doll (Figure 20 – left). An ARToolKit marker [55] and a push button are attached to the doll, which is handled by the user. When the user presses the button, the marker is tracked by a camera to trigger, in the interactive platform, a navigation command that corresponds to the doll motion. The user can thus move the doll to perform a translation in the 3D environment or a rotation of the point of view.

The second one is based on a physical cube (Figure 20 – right). Users’ movements to navigate in the 3D environment are identical to the doll technique. We used the Polhemus Patriot Wireless [40] for tracking the position and the orientation of the cube over timer. We had a Phidget SBC board [56] for wirelessly transmitting the state of a push button. When the cube face presenting the rotation and translation instructions is facing upward, and if the button is pressed, any motion applied by the user to the physical cube will be directly mapped to a navigation command in the 3D environment. This design reveals the possibility to map other features to the five remaining cube faces. Again, inserting this different type of interaction technique, based on a new type of sensors, in the platform did not raise any problem of software connections.

Figure 20. Tangible navigation technique based one a physical doll (left) and one a physical cube (right).

However, all the steps of the pedagogical scenario are not covered with these techniques. Indeed, the second and third steps that require selection and manipulation are not supported by these techniques.

Regarding the selection phase, as described in [57], we explored touchscreen input, mid-air movement of the mobile device (Figure 21-right), and mid-air movement of the hand around the device (Figure 21-left) for exploring and selecting element in 3D detail view of the virtual environment. Results shows that gesture with the smartphone or around the smartphone perform better than traditional tactile interaction modality. Interestingly, users preferred mid-air movement around the smartphone. Therefore, we developed an additional technique around the smartphone to support the selection step of the pedagogical scenario.

We also enriched the physical cube based interaction technique so that it covers the selection step by simply adding an RF-ID reader inside the cube and close to a second cube face. It thus supports the selection of a star to observe and thanks to the Phidget SBC board [56], the communication between the application and the RF-ID reader remains wireless.

Figure 21. Smartphone-based technique for exploring and selecting element in 3D detail view: mid-air movement of the mobile device (left), and mid-air movement of the hand around the device (right).

Now regarding the manipulation step of the scenario, the only interaction techniques initially available were limited to the WIMP based controller described in Section III.C.2. We developed a smartphone application, which allows the user to manipulate and visualize the telescope information through a simple and easy to pick up interface (Figure 22). Sliders are displayed to allow the modification of the different parameters while a list of labels can be visualized to monitor the state of the relevant data.

Figure 22. Smartphone interface for manipulation (left) and data visualization (right).

The cube based interaction techniques has also be enriched to support the manipulation task: two additional cube faces present the instructions to manipulate the different elements of the 3D environment. When facing upward, they respectively allow manipulating the dome elements and the telescope axes.

B. 3D Interactive Platform deployment

We deployed our interactive platform in two major public situations: in October 2013, during two days in our university hall for a scientific festival named Novela, and in June 2014, during two days in the museum of the Pic Du Midi (Figure 23). Large and varied audiences, ranging from

![Figure 9. WIMP interface to configure the 3D mouse named Space Navigator [36] (left corner)](https://thumb-eu.123doks.com/thumbv2/123doknet/3289736.94343/8.892.82.431.302.447/figure-wimp-interface-configure-mouse-space-navigator-corner.webp)