HAL Id: tel-01225776

https://pastel.archives-ouvertes.fr/tel-01225776

Submitted on 6 Nov 2015

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.


Amélioration de la sécurité par la conception des logiciels web

Theodoor Scholte

To cite this version:

Theodoor Scholte. Amélioration de la sécurité par la conception des logiciels web. Web. Télécom ParisTech, 2012. French. NNT: 2012ENST0024. tel-01225776.

2012-ENST-024

EDITE - ED 130

Doctorat ParisTech

THESIS

to obtain the degree of Doctor awarded by

TELECOM ParisTech

Specialty: "Networks and Security"

presented and publicly defended by

Theodoor SCHOLTE

on 11 May 2012

Securing Web Applications by Design

Thesis advisor: Prof. Engin KIRDA

Jury

Thorsten HOLZ, Professor, Ruhr-Universität Bochum, Germany, Reviewer

Martin JOHNS, Senior Researcher, SAP AG, Germany, Reviewer

Davide BALZAROTTI, Professor, Institut EURECOM, France, Examiner

Angelos KEROMYTIS, Professor, Columbia University, USA, Examiner

Thorsten STRUFE, Professor, Technische Universität Darmstadt, Germany, Examiner


Acknowledgements

This dissertation would not have been possible without the support of many people. First, I would like to thank my parents. They have taught me a great deal and continue to teach me every day. They raised me with a good mixture of strictness and love. I believe that they play an important role in all the good things in my life.

I am very grateful to Prof. Engin Kirda. It is through his lectures that I became interested in security. He has been an extraordinary advisor, always available to discuss. After he moved to the United States, he continued to be the person ‘next door’, always available to help me out. Furthermore, I would like to thank Prof. Davide Balzarotti and Prof. William Robertson. Completing this dissertation would not have been possible without their continuous support.

Thanks to my good friends Jaap, Gerben, Roel, Inge, Luit, Ellen and others whom I may have forgotten to mention. Over the years, we have shared and discussed our experiences of professional working life. More importantly, we had a lot of fun. Although we lived in different parts of Europe, we managed to keep in touch as good friends do. Thanks to my ‘local’ friends: Claude, Luc, Alessandro, Marco, Leyla, Simone, Gerald and Julia. You brightened the years of hard work with activities such as drinking or brewing beer, barbecuing, climbing and skiing. In particular, I would like to thank my friends for their moral support, as my personal life has not always been easy.

I would like to thank my colleagues at SAP, in particular Anderson, Gabriel, Henrik, Sylvine, Jean-Christophe, Volkmar and Agnès. Thank you all for your support and creating a good working environment.

Thanks to the staff at EURECOM, in particular to Gwenaëlle for helping me and always being there when needed.

Finally, thanks to Prof. Davide Balzarotti, Prof. Thorsten Holz, Prof. Angelos Keromytis, Prof. Thorsten Strufe and Martin Johns for agreeing to serve as reviewers and examiners.

Contents

1 Introduction
1.1 Motivation
1.2 Research Problems
1.3 Thesis Structure
2 Related Work
2.1 Security Studies
2.1.1 Large Scale Vulnerability Analysis
2.1.2 Evolution of Software Vulnerabilities
2.2 Web Application Security Studies
2.3 Mitigating Web Application Vulnerabilities
2.3.1 Attack Prevention
2.3.2 Program Analysis
2.3.3 Black-Box Testing
2.3.4 Security by Construction
3 Overview of Web Applications and Vulnerabilities
3.1 Web Applications
3.1.1 Web Browser
3.1.2 Web Server
3.1.3 Communication
3.1.4 Session Management
3.2 Web Vulnerabilities
3.2.1 Input Validation Vulnerabilities
3.2.2 Broken Authentication and Session Management
3.2.3 Broken Access Control and Insecure Direct Object References
3.2.4 Cross-Site Request Forgery
4 The Evolution of Input Validation Vulnerabilities in Web Applications
4.1 Methodology
4.1.1 Data Gathering
4.1.2 Vulnerability Classification
4.1.3 The Exploit Data Set
4.2 Analysis of the Vulnerabilities Trends
4.2.1 Attack Sophistication
4.2.2 Application Popularity
4.2.3 Application and Vulnerability Lifetime
4.3 Summary
5 Input Validation Mechanisms in Web Applications and Languages
5.1 Data Collection and Methodology
5.1.1 Vulnerability Reports
5.1.2 Attack Vectors
5.2 Analysis
5.2.1 Language Popularity and Reported Vulnerabilities
5.2.2 Language Choice and Input Validation
5.2.3 Typecasting as an Implicit Defense
5.2.4 Input Validation as an Explicit Defense
5.3 Discussion
5.4 Summary
6 Automated Prevention of Input Validation Vulnerabilities in Web Applications
6.1 Preventing input validation vulnerabilities
6.1.1 Output sanitization
6.1.2 Input validation
6.1.3 Discussion
6.2 IPAAS
6.2.1 Parameter Extraction
6.2.2 Parameter Analysis
6.2.3 Runtime Enforcement
6.2.4 Prototype Implementation
6.2.5 Discussion
6.3 Evaluation
6.3.1 Vulnerabilities
6.3.2 Automated Parameter Analysis
6.3.3 Static Analyzer
6.3.4 Impact
6.4 Summary
7 Conclusion and Future Work
7.1 Summary of Contributions
7.1.1 Evolution of Web Vulnerabilities

7.1.3 Input Parameter Analysis System
7.2 Critical Assessment
7.3 Future Work
8 French Summary
8.1 Abstract
8.2 Introduction
8.2.1 Research Problems
8.3 The Evolution of Input Validation Vulnerabilities in Web Applications
8.3.1 Methodology
8.3.2 Analysis of Vulnerability Trends
8.3.3 Discussion
8.4 Input Validation Mechanisms in Web Applications and Languages
8.4.1 Methodology
8.4.2 Analysis
8.4.3 Discussion
8.5 Automated Prevention of Input Validation Vulnerabilities in Web Applications
8.5.1 Parameter Extraction
8.5.2 Parameter Analysis
8.5.3 Runtime Environment
8.6 Conclusion
A Web Application Frameworks

List of Figures

1.1 Number of web vulnerabilities over time. . . 2

3.1 Example URL. . . 29

3.2 Example of a HTTP request message. . . 30

3.3 Example of an HTTP response message. . . 31

3.4 HTTP response message with cookie. . . 32

3.5 HTTP request message with cookie. . . 32

3.6 Example SQL statement. . . 34

3.7 Cross-site scripting example: search.php . . . 35

3.8 Directory traversal vulnerability. . . 37

3.9 HTTP Response vulnerability. . . 37

3.10 HTTP Parameter Pollution vulnerability. . . 38

3.11 Cross-site request forgery example. . . 43

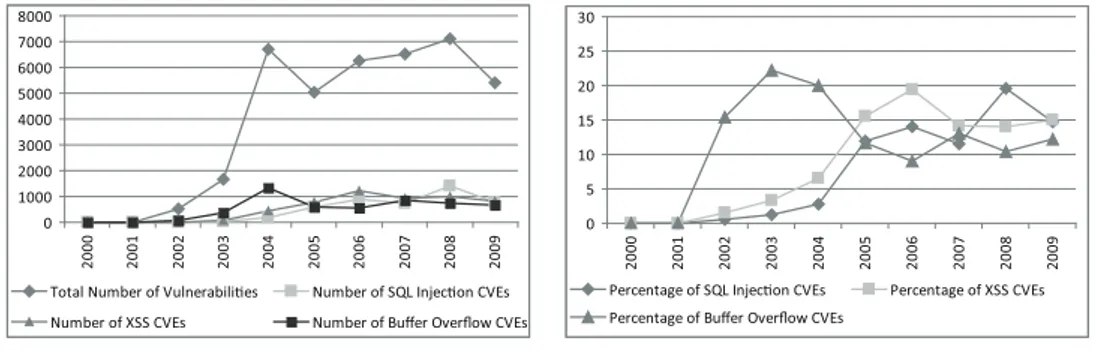

4.1 Buffer overflow, cross-site scripting and SQL injection vulner-abilities over time. . . 48

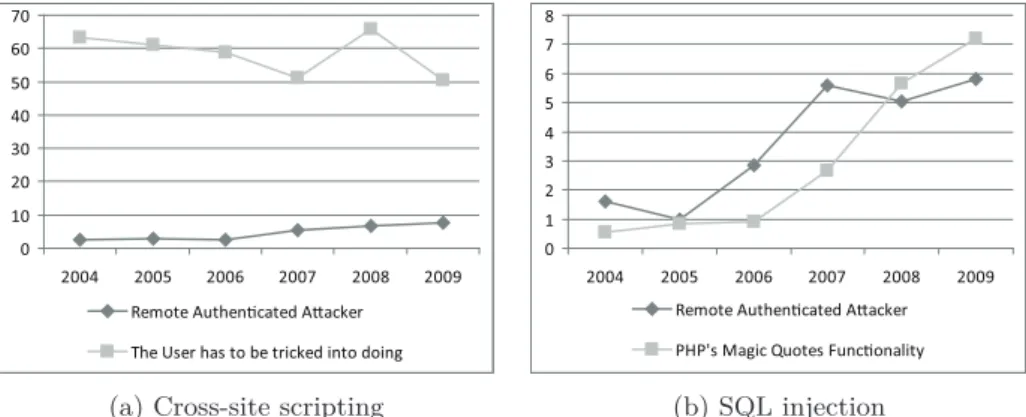

4.2 Prerequisites for successful attacks (in percentages). . . 50

4.3 Exploit complexity over time. . . 50

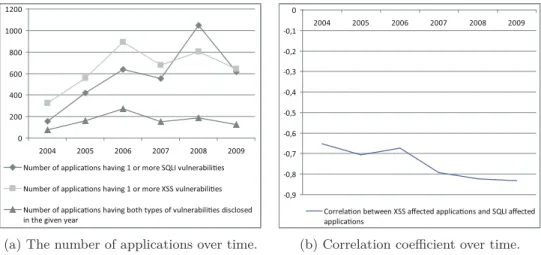

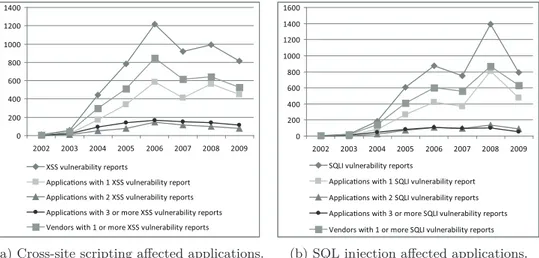

4.4 Applications having XSS and SQLI Vulnerabilities over time. 53 4.5 The number of affected applications over time. . . 54

4.6 Vulnerable applications and their popularity over time. . . 55

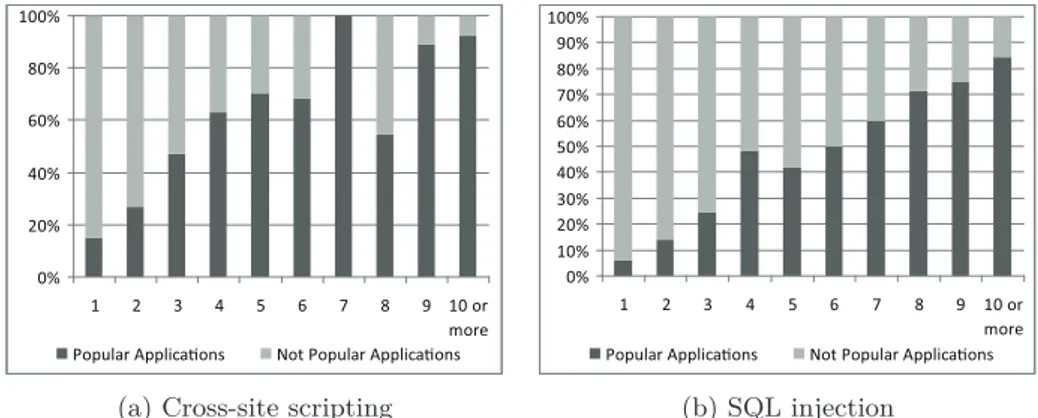

4.7 Popularity of applications across the distribution of the num-ber of vulnerability reports. . . 56

4.8 Reporting rate of Vulnerabilities . . . 57

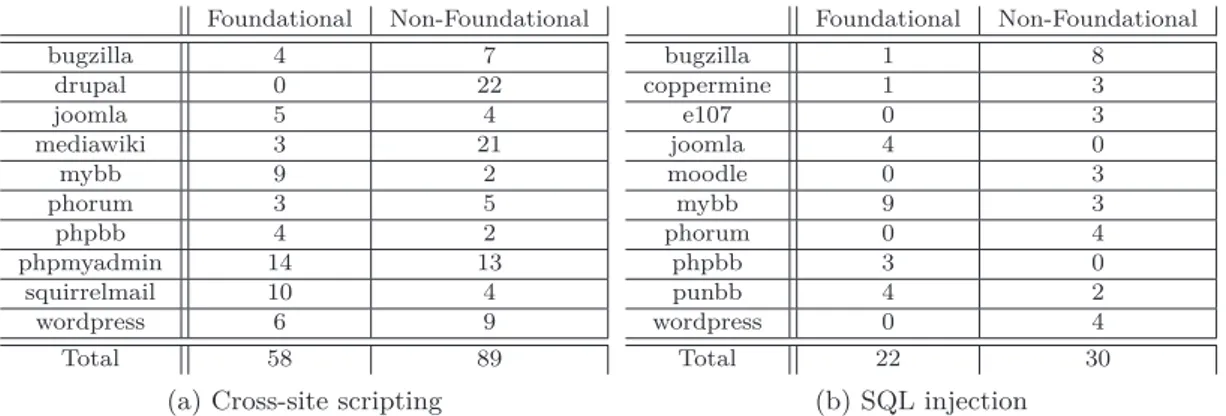

4.9 Time elapsed between software release and vulnerability dis-closure in years. . . 59

4.10 Average duration of vulnerability disclosure in years over time. 59 5.1 Distributions of popularity, reported XSS vulnerabilities, and reported SQL injection vulnerabilities for several web pro-gramming languages. . . 66

5.2 Example HTTP request. . . 69

5.3 Data types corresponding to vulnerable input parameters. . . 74 5.4 Structured string corresponding to vulnerable input parameters. 75

6.1 HTML fragment output sanitization example.
6.2 The IPAAS architecture.
8.1 Number of web vulnerabilities over time.
8.2 Buffer overflow, cross-site scripting and SQL injection vulnerabilities over time.
8.3 Exploit complexity over time.
8.4 Time elapsed between software release and vulnerability disclosure over the years.
8.5 Average duration of vulnerability disclosure over the years.
8.6 Example HTTP request.
8.7 Data types corresponding to vulnerable input parameters.
8.8 The IPAAS architecture.

List of Tables

1.1 Largest data breaches.
4.1 Foundational and non-foundational vulnerabilities in the ten most affected open source web applications.
4.2 The attack surface.
5.1 Framework support for various complex input validation types across different languages.
6.1 IPAAS types and their validators.
6.2 PHP applications used in our experiments.
6.3 Manually identified data types of vulnerable parameters in five large web applications.
6.4 Typing of vulnerable parameters in five large web applications before static analysis.
6.5 Results of analyzing the code.
6.6 Typing of vulnerable parameters in five large web applications after static analysis.
6.7 The number of prevented vulnerabilities in various large web applications.
8.1 Largest data breaches.
8.2 Foundational and non-foundational vulnerabilities in the ten most affected open source web applications.
8.3 IPAAS types and their validators.
A.1 Web frameworks analyzed.

Chapter 1

Introduction

Global Internet penetration started in the late 1980s and early 1990s, when an increasing number of research institutions from all over the world started to interconnect and the first commercial Internet Service Providers (ISPs) began to emerge. At that time, the Internet was primarily used to exchange messages and news between hosts. By 1990, the number of interconnected hosts had grown to more than 300,000. In the same year, Tim Berners-Lee and Robert Cailliau from CERN started the World Wide Web (WWW) project to allow scientists to share research data, news and documentation in a simple manner.

Berners-Lee developed all the tools necessary for a working WWW, including an application protocol (HTTP), a language to create web pages (HTML), a Web browser to render and display web pages and a Web server to serve them. Because the WWW provided the infrastructure for publishing and obtaining information via the Internet, it simplified the use of the Internet. As a result, the Internet became tremendously popular among non-technical users, and the number of connected hosts kept increasing.

Over the past decade, affordable, high-speed and ‘always-on’ Internet connections have become the standard. Due to ongoing investments in cellular infrastructure, the Internet can now be accessed from almost anywhere on almost any device. People access the Internet using desktops, notebooks, tablets and cell phones from home, the office, bars, restaurants, airports and other places.

Along with investments in Internet infrastructure, companies started to offer new types of services that helped to make the Web a more compelling experience. These services would not have been possible without a technological evolution of the Web. The Web has evolved from simple static pages to very sophisticated web applications, whose content is dynamically generated depending on the user's input. Like static web pages, web applications can be accessed over a network such as the Internet or an intranet using a Web browser. Several factors have contributed to the popularity of web applications: the ubiquity of Web browsers, the ability to update and maintain web applications without distributing and installing software on potentially thousands of computers, and their cross-platform compatibility.

Figure 1.1: Number of web vulnerabilities over time, data obtained from NVD CVE [78]. (Plotted series: number of all vulnerabilities and number of web-related vulnerabilities.)

The technological evolution of the Web has dramatically changed the type of services offered on the Web. New services such as social networking have been introduced, and traditional services such as e-mail and online banking have been replaced by offerings based on Web technology. Notable in this context is the emerging strategy of software vendors to replace their traditional server or desktop application offerings with sophisticated web applications. An example is SAP's Business ByDesign, an ERP solution offered by SAP as a web application.

The technological evolution has also changed the way people use the Web today. People critically depend on the Web to perform transactions, to obtain information, to interact, to have fun and to socialize via social networking sites such as Facebook and Myspace. Search engines such as Google and Bing allow people to search for and obtain all kinds of information. The Web is also used for many different commercial purposes, including purchasing airline tickets, do-it-yourself auctions via eBay and electronic marketplaces such as Amazon.com.

1.1 Motivation

Over the years, the World Wide Web has attracted many malicious users, and attacks against web applications have become prevalent. Recent data from the SANS Institute estimates that up to 60% of Internet attacks target web applications [23]. The insecure situation on the Web can be attributed to several factors.

Table 1.1: Largest data breaches in terms of disclosed records according to [31].

Records       Date        Organizations
130,000,000   2009-01-20  Heartland Payment Systems, Tower Federal Credit Union, Beverly National Bank
94,000,000    2007-01-17  TJX Companies Inc.
90,000,000    1984-06-01  TRW, Sears Roebuck
77,000,000    2011-04-26  Sony Corporation
40,000,000    2005-06-19  CardSystems, Visa, MasterCard, American Express
40,000,000    2011-12-26  Tianya
35,000,000    2011-07-28  SK Communications, Nate, Cyworld
35,000,000    2011-11-10  Steam (Valve, Inc.)
32,000,000    2009-12-14  RockYou Inc.
26,500,000    2006-05-22  U.S. Department of Veterans Affairs

First, the number of vulnerabilities in web applications has increased over the years. Figure 1.1 shows the number of all vulnerabilities compared to the number of web-related vulnerabilities published between 2000 and 2009 in the Common Vulnerabilities and Exposures (CVE) List [78]. We observe that, as of 2006, more than half of the reported vulnerabilities are web-related. The situation has not improved in recent years: according to a report from WhiteHat Security [105] based on an analysis of 3000 web sites in 2010, a web site contained 230 vulnerabilities on average. Although not all web vulnerabilities pose a security risk, attackers exploit many of them to compromise the integrity, availability or confidentiality of a web application.

Second, attackers have a wide range of tools at their disposal to find web vulnerabilities and launch attacks against web applications. The advanced search functionality of Google allows attackers to find security holes in the configuration and code of websites, a technique known as Google Hacking [12, 72]. Furthermore, a wide range of tools and frameworks is available for launching attacks against web applications. Most notable in this context is the Metasploit framework [85]. This modular framework leverages the world's largest database of quality-assured exploits, including hundreds of remote exploits, auxiliary modules, and payloads.

Finally, attackers have clear incentives to attack web applications. These attacks can result in, among other things, data leakage, impersonation of innocent users and large-scale malware infections.

An increasing number of web applications store and process sensitive data such as user credentials, account records and credit card numbers. Vulnerabilities in web applications may lead to data breaches that allow attackers to collect this sensitive information. The attacker may use this information for identity theft or sell it on the underground market. As several studies have shown [124, 13, 39, 104], stealing large amounts of credentials and selling them on the underground market can be profitable for an attacker. Security researchers estimate that stolen credit card numbers sell for between $2 and $20 each [13, 39]. For bank accounts, the price per item ranges between $10 and $1000, while for e-mail passwords it ranges between $4 and $30 per item [39].

Attackers can also abuse vulnerable web applications to perform malicious actions on the victim's behalf as part of a phishing attack. In such attacks, the attacker masquerades as a trustworthy entity and uses social engineering techniques to acquire sensitive information, such as user credentials or credit card details, and/or to make the user perform unwanted actions. Certain flaws in web applications, such as cross-site scripting, make it easier for an attacker to perform a successful phishing attack, because the user is directed to the bank's or service's own web page, where everything from the web address to the security certificates appears to be correct. The costs of phishing attacks are significant: RSA estimates that worldwide losses from phishing attacks in the first half of 2011 amounted to more than 520 million dollars [94].

Attackers can also compromise legitimate but vulnerable web applications to install malware on the victim's host as part of a drive-by download [84]. The installed malware can take full control of the victim's machine, and the attacker uses it to make a financial profit. Typically, malware is used for purposes such as acting as a botnet node, harvesting sensitive information from the victim's machine, or performing other malicious actions that can be monetized. Web-based malware is actively traded on the underground market [124]. While no reliable estimates exist of the total amount of money attackers earn by trading virtual assets such as malware on the underground market, some activities have been analyzed. A study performed by McAfee [64] shows that compromised machines are sold as anonymous proxy servers on the underground market for between $35 and $550 a month, depending on the features of the proxy.

Attacks against web applications affect the availability, integrity and confidentiality of web applications and the data they process. Because our society heavily depends on web applications, such attacks form a serious threat. The increasing number of web applications that process more and more sensitive data makes the situation even worse. Table 1.1 reports the largest incidents in terms of exposed data records in the past years. Although the Web is not the primary source of data breaches, it still accounts for 11% of them, making it the second largest source. While no overall figures exist on the annual losses caused by data breaches on the Web, the costs of some breaches have been estimated. In 2011, approximately 77 million user accounts on the Sony PlayStation Network were compromised through a SQL injection attack on two of Sony's properties. Sony estimated that it would spend 171.1 million dollars dealing with the data breach [102].

To summarize, the current insecure state of the Web can be attributed to the prevalence of web vulnerabilities, the readily available tools for exploiting them and the (financial) motivations of attackers. Unfortunately, the growing popularity of the Web is likely to make the situation worse: it gives attackers more incentive, as attacks can potentially affect a larger number of innocent users and thus yield more profit. The situation needs to be improved because the consequences of attacks are dramatic in terms of financial losses and the effort required to repair the damage.

To improve the security on the Web, much effort has been spent in the past decade on making web applications more secure. Organizations such as MITRE [62], SANS Institute [23] and OWASP [79] have emphasized the importance of improving the security education and awareness among programmers, software customers, software managers and chief information officers. Also, the security research community has worked on tools and techniques to improve the security of web applications. These tools and techniques mainly focus on either reducing the number of vulnerabilities in applications or on preventing the exploitation of vulnerabilities.

Although many different stakeholders have spent a considerable amount of effort on making web applications more secure, we lack quantitative evidence of whether this attention has improved the security of web applications. In this thesis, we study how web vulnerabilities have evolved in the past decade. We focus on SQL injection and cross-site scripting vulnerabilities because these classes of web application vulnerabilities share the same root cause: improper sanitization of user-supplied input, which results from invalid assumptions the developer makes about the application's input. Moreover, these classes of vulnerabilities are prevalent, well known and have been well studied in the past decade.

We observe that, despite security awareness programs and tools, web developers consistently fail to implement existing countermeasures, which results in vulnerable web applications. Furthermore, the traditional approach of writing code and then testing it for security does not seem to work well. Hence, we believe that there is a need for techniques that secure web applications by design, that is, techniques that make web applications automatically secure without relying on the web developer. Applying these techniques on a large scale should significantly improve the security situation on the Web.
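To illustrate the shared root cause of SQL injection and cross-site scripting, the following minimal Python sketch uses only the standard sqlite3 and html modules. The users table and all function names are hypothetical. In the unsafe variants, the developer assumes the input is a harmless number or name and pastes it directly into SQL or HTML; the safe variants keep the input as data, using a parameterized query and HTML escaping respectively.

```python
import html
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER, name TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)",
                     [(1, "alice"), (2, "bob")])
    return conn


def find_user_unsafe(conn: sqlite3.Connection, user_id: str):
    # Vulnerable: the developer assumes user_id is a number and
    # concatenates it into the SQL statement.
    query = "SELECT name FROM users WHERE id = " + user_id
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, user_id: str):
    # Parameterized query: the input is bound as a value and is never
    # parsed as SQL.
    return conn.execute("SELECT name FROM users WHERE id = ?",
                        (user_id,)).fetchall()


def greet_unsafe(name: str) -> str:
    # Vulnerable to cross-site scripting: input copied into HTML verbatim.
    return "<p>Hello, " + name + "</p>"


def greet_safe(name: str) -> str:
    # Output sanitization: HTML metacharacters are escaped before output.
    return "<p>Hello, " + html.escape(name) + "</p>"
```

For example, passing the string `1 OR 1=1` to `find_user_unsafe` returns every row of the table, whereas `find_user_safe` treats the same string as a single, non-matching value.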

1.2 Research Problems

The previous section illustrates that web applications are frequently targeted by attackers and that solutions are therefore needed to improve the security situation on the Web. Understanding how common web vulnerabilities can be automatically prevented is the main research challenge in this work.

In this thesis, we tackle the following research problems with regard to the security of web applications:

• Do developers create more secure web applications today than they used to do in the past?

In the past decade, many different stakeholders have spent much effort on making web applications more secure. To date, there is no empirical evidence of whether this attention has improved the security of web applications. To gain deeper insights, we perform an automated analysis of a large number of cross-site scripting and SQL injection vulnerability reports. In particular, we are interested in finding out whether developers are more aware of web security problems today than they used to be.

• Does the programming language used to develop a web application influence its exposure to vulnerabilities?

Programming languages often contain features that help programmers prevent bugs or security-related vulnerabilities. These features include, among others, static type systems, restricted name spaces and modular programming. No evidence exists today of whether certain features of web programming languages help in mitigating input validation vulnerabilities. In our work, we perform a quantitative analysis with the aim of understanding whether certain programming languages are intrinsically more robust against the exploitation of input validation vulnerabilities than others.

• Is input validation an effective defense mechanism against common web vulnerabilities?

To design and implement secure web applications, a good understanding of vulnerabilities and attacks is a prerequisite. We study a large number of vulnerability reports and the source code repositories of a significant number of vulnerable web applications with the aim of gaining deeper insights into how common web vulnerabilities can be prevented. We analyze whether typing mechanisms in a language and input validation functions in a web application framework can potentially prevent many web vulnerabilities. No empirical evidence is available today that shows to what extent these mechanisms are able to prevent web vulnerabilities.

• How can we help application developers who are unaware of web application security issues to write more secure web applications?

The results of our empirical studies suggest that many web application developers are unaware of security issues and that a significant number of web vulnerabilities can be prevented using simple, straightforward validation mechanisms. We present a system that learns the data types of input parameters while developers write web applications. This system is able to prevent many common web vulnerabilities by automatically augmenting otherwise insecure web development environments with robust input validators.
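The idea behind the last two questions, that learned parameter types can act as input validators, can be illustrated with a minimal sketch, assuming a small hand-picked set of types (boolean, integer, email, word) defined by regular expressions. The class `ParameterLearner`, its methods and the type names are hypothetical and are not the IPAAS implementation described in Chapter 6. During a training phase, the sketch records benign parameter values per page and assigns each parameter the most restrictive type that accepts all of them; at runtime, it rejects requests whose values violate the learned type.

```python
import re
from collections import defaultdict

# Hypothetical types, ordered from most to least restrictive.
# The names and regular expressions are assumptions for this sketch.
VALIDATORS = [
    ("boolean", re.compile(r"(true|false|0|1)")),
    ("integer", re.compile(r"-?[0-9]+")),
    ("email", re.compile(r"[^@\s]+@[^@\s]+\.[A-Za-z]{2,}")),
    ("word", re.compile(r"[A-Za-z0-9_-]+")),
]
PATTERNS = dict(VALIDATORS)


def infer_type(values):
    """Return the most restrictive type whose validator accepts every
    observed value, or None (free text, not validated) if none does."""
    for name, pattern in VALIDATORS:
        if all(pattern.fullmatch(v) for v in values):
            return name
    return None


class ParameterLearner:
    """Learns a type per (page, parameter) pair, then enforces it."""

    def __init__(self):
        self.samples = defaultdict(list)  # (page, param) -> observed values
        self.types = {}                   # (page, param) -> learned type

    def observe(self, page, params):
        """Training phase: record parameter values from benign requests."""
        for name, value in params.items():
            self.samples[(page, name)].append(value)

    def finalize(self):
        """End of training: assign each parameter its learned type."""
        self.types = {key: infer_type(vals)
                      for key, vals in self.samples.items()}

    def check(self, page, params):
        """Runtime phase: accept the request only if every typed parameter
        passes its validator. Untyped or unseen parameters pass unchecked."""
        for name, value in params.items():
            learned = self.types.get((page, name))
            if learned is not None and not PATTERNS[learned].fullmatch(value):
                return False
        return True
```

In this sketch, a parameter such as `id` that only ever carried digits during training is typed as an integer, so a payload like `1 OR 1=1` is rejected. Parameters never seen during training pass through unchecked, and a single unusual training value widens a parameter's type to free text; a real system would need to handle both cases deliberately.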

1.3 Thesis Structure

In Chapter 2, we give an overview of related work on vulnerability studies, program analysis techniques to find security vulnerabilities, client-side and server-side defense mechanisms, and techniques to make web applications secure by construction.

Chapter 3 gives an overview of web vulnerabilities. First, we give an overview of several web technologies to support our discussion of web application security issues. Then, we present different classes of input validation vulnerabilities. We explain how they are introduced and how to prevent them using existing countermeasures.

In Chapter 4, we study the evolution of input validation vulnerabilities in web applications. First, we describe how we automatically collect and process vulnerability and exploit information. Then, we analyze vulnerability trends. We measure the complexity of attacks, the popularity of vulnerable web applications and the lifetime of vulnerabilities, and we build timelines of our measurements. The results of this study have been published at the International Conference on Financial Cryptography and Data Security 2011 [99] and in the Elsevier journal Computers & Security [100].

In Chapter 5, we study the relationship between the programming language used to develop a web application and the vulnerabilities commonly reported. First, we describe how we link a vulnerability report to a programming language. Then, we describe how we measure the popularity of a programming language. Furthermore, we examine the source code of vulnerable web applications to determine the data types of vulnerable input. The results of this study have been published at the ACM Symposium on Applied Computing 2012 (ACM SAC 2012) [101].

Chapter 6 presents IPAAS, a completely automated system for preventing web application attacks. The system automatically and transparently augments web application development environments with input validators that result in significant and tangible security improvements for real web applications. We describe the implementation of the IPAAS approach of transparently learning types for web application parameters and automatically applying robust validators for these parameters at runtime. The results have been published at the IEEE Signature Conference on Computers, Software, and Applications 2012 (COMPSAC 2012).

In Chapter 7, we summarize and conclude this thesis. We also outline directions for future research and provide some initial thoughts on them.

Chapter 2

Related Work

In the past years, we have observed a growing interest in understanding the overall state of (web) application security. Furthermore, much effort has been spent on techniques to improve the security of web applications. In this chapter, we first discuss studies that analyzed general security trends and the life cycle of vulnerabilities in software. Then, we describe studies that analyzed the relationship between the security of web applications and the features provided by web programming languages or frameworks. Finally, we give an overview of techniques that can detect or prevent vulnerabilities in web applications, or mitigate their impact by detecting or preventing attacks that target web applications.

Where applicable, we compare the related work with our work presented in this thesis.

2.1 Security Studies

In the past years, several studies have been conducted with the aim of getting a better understanding of the state of software security. In this section, we give an overview of these studies.

2.1.1 Large Scale Vulnerability Analysis

Security is often considered an arms race between crackers, who try to find and exploit flaws in applications, and security professionals, who try to prevent that. To better understand the security ecosystem, researchers study vulnerability, exploit and attack trends. These studies give us insights into the risks that vulnerabilities pose to our economy and society. Furthermore, they improve security education and awareness among programmers, managers and CIOs. Security researchers can use such studies to focus their research on the subset of security issues that are most prevalent.

Several security trend analysis studies have been conducted based on CVE data [20, 75]. In [20], Christey et al. present an analysis of CVE data covering the period 2001 to 2006. The work is based on a manual classification of CVE entries using the CWE classification system. In contrast, Neuhaus et al. [75] apply an unsupervised learning technique to CVE text descriptions and introduce a classification based on topic models. While the works of Christey et al. and Neuhaus et al. focus on analyzing general trends in vulnerability databases, the work presented in this thesis specifically focuses on web application vulnerabilities, in particular cross-site scripting and SQL injection. In contrast to the works of Neuhaus et al. and Christey et al., we also investigate the reasons behind the trends.

Commercial organizations such as HP, IBM, Microsoft and WhiteHat collect and analyze security-relevant data and regularly publish security risk and threat reports [17, 22, 27, 105]. These reports give an overview of the prevalence of vulnerabilities and their exploitation. The underlying data is often collected from a combination of public and private sources, including vulnerability databases and honeypots. The study presented in [17] analyzes vulnerability disclosure trends using the X-Force Database. The authors find that web application vulnerabilities account for almost 50% of all vulnerabilities disclosed in 2011 and that cross-site scripting and SQL injection are still dominant. Although the percentage of disclosed SQL injection vulnerabilities is decreasing, a signature-based analysis of attack data suggests that SQL injection is a very popular attack vector. The security risk report of Hewlett-Packard [22] finds that the number of web application vulnerabilities submitted to vulnerability databases such as OSVDB [55] is decreasing. However, the actual number of vulnerabilities discovered by static analysis tools on web applications and by black-box testing tools on public websites is increasing. A threat analysis performed by Microsoft [27] shows that the number of vulnerabilities disclosed through CVE is decreasing. Its vulnerability complexity analysis, based on data from the Common Vulnerability Scoring System (CVSS) [65], an industry standard for assessing the severity of vulnerabilities, shows that the number of easily exploitable vulnerabilities is decreasing, while the number of complex vulnerabilities remains constant.
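For readers unfamiliar with CVSS, the following minimal sketch computes a CVSS version 2 base score from a vector string, following the base equations and metric weights of the CVSS v2 specification. One of its inputs, the access complexity metric (AC), directly encodes how difficult a vulnerability is to exploit. The function names and the half-up rounding helper are our own choices, and only base metrics are covered (no temporal or environmental metrics).

```python
import math

# Metric weights from the CVSS version 2 specification (base metrics only).
ACCESS_VECTOR = {"L": 0.395, "A": 0.646, "N": 1.0}
ACCESS_COMPLEXITY = {"H": 0.35, "M": 0.61, "L": 0.71}
AUTHENTICATION = {"M": 0.45, "S": 0.56, "N": 0.704}
IMPACT_WEIGHT = {"N": 0.0, "P": 0.275, "C": 0.660}


def round1(x: float) -> float:
    """Round half up to one decimal place."""
    return math.floor(x * 10 + 0.5) / 10


def cvss2_base_score(vector: str) -> float:
    """Base score for a vector such as 'AV:N/AC:L/Au:N/C:P/I:P/A:P'."""
    m = dict(part.split(":") for part in vector.split("/"))
    impact = 10.41 * (1 - (1 - IMPACT_WEIGHT[m["C"]])
                        * (1 - IMPACT_WEIGHT[m["I"]])
                        * (1 - IMPACT_WEIGHT[m["A"]]))
    exploitability = (20 * ACCESS_VECTOR[m["AV"]]
                      * ACCESS_COMPLEXITY[m["AC"]]
                      * AUTHENTICATION[m["Au"]])
    # f(impact) is 0 when there is no impact at all, 1.176 otherwise.
    f_impact = 0.0 if impact == 0 else 1.176
    return round1(((0.6 * impact) + (0.4 * exploitability) - 1.5) * f_impact)
```

For example, a network-exploitable vulnerability with low access complexity, no authentication and partial impact on confidentiality, integrity and availability (AV:N/AC:L/Au:N/C:P/I:P/A:P) scores 7.5; raising the access complexity to high (AC:H) lowers the score to 5.1.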

2.1.2 Evolution of Software Vulnerabilities

Our economy and society increasingly depend on large and complex software systems. Unfortunately, detecting and completely eliminating vulnerabilities before these systems go into production is an unrealistic goal. Although tools and techniques exist that minimize the number of vulnerabilities in software systems, it is inevitable that some defects slip through the testing and debugging processes and escape into production runs [2, 73]. Economic incentives for software vendors also contribute to the release of insecure software: to gain market dominance, software should be released quickly, and building in security protection mechanisms could delay this. These factors affect the reliability and security of software and, in turn, impact our economy and society.

Life-cycle of vulnerabilities

To assess the risk that vulnerabilities pose to the economy and society, one has to understand their life cycle. Arbaugh et al. [5] proposed a life-cycle model for vulnerabilities and identified the following phases:

• Birth. This stage denotes the creation of a vulnerability, which typically happens during the implementation and deployment phases of the software. A vulnerability that is detected and repaired before the public release of the software is not considered a vulnerability.

• Discovery. At this stage, the existence of a vulnerability becomes known to a restricted group of stakeholders.

• Disclosure. A vulnerability may be disclosed by its discoverer through mailing lists such as Bugtraq [106] and through CERTs [19]. As we study publicly disclosed vulnerabilities in this thesis, vulnerability disclosure processes are of particular interest; we outline them in the next section.

• Patch. The software vendor needs to respond to the vulnerability by developing a patch that fixes the flaw in the software. If the software is installed on customers' systems, the vendor needs to release the patch so that customers can install it on their systems.

• Death. Once all customers have installed the patch, the software is no longer vulnerable, and the vulnerability has disappeared.

Arbaugh et al. applied this model to three case studies, which revealed that systems remain vulnerable for a very long time after security fixes have been made available. In Chapter 4, we study the evolution of a particular class of vulnerabilities: input validation vulnerabilities in web applications. Whereas Arbaugh et al. examined the number of intrusions after a vulnerability has been disclosed, our study examines how long input validation vulnerabilities remain in software before they are disclosed.
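The life-cycle model can be captured in a small data structure. The Python sketch below is our own illustration, not part of the work of Arbaugh et al.: it records hypothetical dates for the phases of a single vulnerability and derives two intervals, namely the time a flaw remains in the software before it is disclosed (the quantity studied in Chapter 4) and the window between disclosure and death during which vulnerable systems remain exposed. The class name, method names and dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class VulnerabilityTimeline:
    """Dates of the life-cycle phases of one vulnerability (hypothetical field names)."""
    birth: date                       # release that introduced the flaw
    discovery: Optional[date] = None
    disclosure: Optional[date] = None
    patch: Optional[date] = None
    death: Optional[date] = None      # all customers have installed the patch

    def days_until_disclosure(self) -> Optional[int]:
        # How long the flaw stayed in the software before becoming public.
        if self.disclosure is None:
            return None
        return (self.disclosure - self.birth).days

    def days_exposed_after_disclosure(self) -> Optional[int]:
        # Window between public disclosure and the moment no vulnerable system remains.
        if self.disclosure is None or self.death is None:
            return None
        return (self.death - self.disclosure).days


v = VulnerabilityTimeline(birth=date(2005, 3, 1), disclosure=date(2007, 3, 1),
                          patch=date(2007, 3, 15), death=date(2008, 1, 1))
print(v.days_until_disclosure(), v.days_exposed_after_disclosure())  # 730 306
```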

Vulnerability Disclosure

Because defects in software are inherently difficult to eliminate, software is shipped with vulnerabilities. After the release of a software system, vulnerabilities are discovered by individuals or organizations (e.g. independent researchers, vendors, cyber-criminals or governmental organizations).

Discoverers have different motivations to find vulnerabilities, including altruism, self-marketing to highlight technical skills, recognition or fame, and the malicious intent to make a profit. These motivations suggest that it is more rewarding for white hat and black hat hackers (i.e. hackers with benign and malicious intents, respectively) to find vulnerabilities in software that is more popular.

The relationship between the popularity of an application and its vulnerability disclosure rate has been studied in several works. In [2], the authors examined the vulnerability discovery process for successive versions of the Microsoft Windows and Red Hat Linux operating systems. The observed increase in the cumulative number of vulnerabilities suggests that vulnerabilities are discovered once software starts gaining momentum. An empirical study conducted by Woo et al. compares the market share of web browsers with the number of vulnerabilities disclosed in them [120]. The results also suggest that the popularity of web browsers leads to a higher discovery rate. While the study of Woo et al. focuses on web browsers, we focus on web applications and found that popular web applications have a higher incidence of reported vulnerabilities.

After a vulnerability is discovered, information about it eventually becomes public. Vulnerability disclosure refers to the process of reporting a vulnerability to the public. The potential harms and benefits of publishing information that can be used for malicious purposes are the subject of an ongoing discussion among security researchers and software vendors [32]. We observe that software vendors take different standpoints on how to handle information about security vulnerabilities and adopt different disclosure models. We discuss them below.

• Security through Obscurity. This principle attempts to use the secrecy of the design and implementation of a system to provide security. A system that relies on this principle may have vulnerabilities, but its owners believe that if the flaws are not publicly known, attackers are unlikely to find them.

Proponents of this standpoint argue that publishing vulnerability information causes more harm than good, as it gives attackers the information they need to exploit a vulnerability in a system. As there is no way to guarantee that cybercriminals do not obtain this information by other means, this is not a very realistic standpoint.

• Full Disclosure. In contrast to the Security through Obscurity stance, Full Disclosure attempts to disclose all the details of a security problem to the public, including how to detect and exploit the vulnerability. This stance is also known as Security through Transparency and is in line with the principle of Kerckhoffs [49], who states that ‘the design of a cryptographic system should not require secrecy and should not cause "inconvenience" if it falls into the hands of the enemy’.

Proponents of this disclosure model argue that everyone gets the information at the same time and can act upon it. Public disclosure also motivates software vendors to react quickly by releasing patches. However, full disclosure is controversial. With immediate full disclosure, software users are exposed to an increased risk, because the vendor no longer has the opportunity to create and release a patch before the details become public.

• Responsible Disclosure. In this model, all stakeholders agree on a period of time before all the details of the vulnerability go public. This period allows the software vendor to create and release a patch based on the information provided by the discoverer of the vulnerability. The discoverer provides the vulnerability information to the software vendor only, expecting the vendor to start producing a patch. The vendor has an incentive to produce the patch as soon as possible, because the discoverer can revert to full disclosure at any time. Once the patch is ready, the discoverer coordinates the publication of the advisory with the vendor's publication of the vulnerability and the patch.

To study the relationship between the disclosure model and the security of applications, Arora et al. conducted an empirical analysis of 308 vulnerabilities selected from the CVE dataset [6]. The authors compared this vulnerability data with attack data to investigate whether vulnerability disclosure and patch availability influence attackers to exploit these vulnerabilities, on the one hand, and software vendors to release patches, on the other. The results of this study suggest that software vendors react more quickly in the case of instant disclosure and that vulnerability disclosure increases the frequency of attacks, even if patches are available. Rescorla shows in [87] that even when patches are made available by software vendors, administrators generally fail to install them, and that this situation does not change even if the vulnerability is exploited on a large scale. Cavusoglu et al. found that the vulnerability disclosure policy influences the time it takes for a software vendor to release a patch [18]. In our study, we did not measure the impact of vulnerability disclosure. Instead, we used information about disclosed vulnerabilities to measure their prevalence, complexity and lifetime.

Zero-day Vulnerabilities

To conduct malicious activities, attackers may exploit vulnerabilities that are still unknown to the software vendor. Such vulnerabilities are called zero-day vulnerabilities. This class of vulnerabilities is alarming because users and administrators cannot effectively defend against them. Many techniques exist to reduce the probability that (zero-day) vulnerabilities in software can be reliably exploited. These techniques include intrusion detection, application-level firewalls, Data Execution Prevention (DEP) and Address Space Layout Randomization (ASLR). To reduce the number of vulnerabilities and bugs in their software, software vendors have adopted initiatives such as secure software development life cycle processes and integrated them into their development practices.

Frei et al. performed a large-scale vulnerability study to better understand the state and the evolution of the security ecosystem at large [33]. The work focuses on zero-day vulnerabilities and shows that there has been a dramatic increase in such vulnerabilities. The work also shows that exploits tend to become available faster than patches.

Improving Software Security

In order to make investment decisions on software security solutions, it is desirable to have quantitative evidence on whether increased attention to security also improves the security of the resulting systems. One of the first empirical studies in this area investigated whether finding security vulnerabilities is a useful security activity [88]. The author analyzed the defect rate and found no measurable effect of vulnerability finding on it.

To explore the relationship between code changes and security issues, Ozment et al. [80] studied how vulnerabilities evolve across different versions of the OpenBSD operating system. The study shows that 62 percent of the vulnerabilities are foundational: they were introduced prior to the release of the initial version and have not been altered since. The rate at which foundational vulnerabilities are reported is decreasing, which suggests that the security of the same code is increasing. In contrast to our study, Ozment et al.'s study does not consider the security of web applications.

In [21], Clark et al. present a vulnerability study that focuses on the early life of a software product. The work demonstrates that the reuse of legacy code is a major contributor to the rate of vulnerability discovery and to the number of vulnerabilities found. In contrast to our work, the paper does not focus on web applications, and it does not distinguish between particular types of vulnerabilities.

To better determine whether it is safe to release software, several works have focused on predicting the number of remaining vulnerabilities in the code base of software. To this end, Neuhaus et al. proposed Vulture [76], a system that predicts new vulnerabilities based on the insight that software components that share imports and function calls with components that were vulnerable in the past are likely to be vulnerable as well. An evaluation on the Mozilla code base reveals that the system can predict half of the future vulnerabilities. Yamaguchi et al. [122] propose a technique called vulnerability extrapolation to find similar, unknown vulnerabilities. It is based on analyzing API usage patterns, which is more fine-grained than the technique on which Vulture is based.
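The intuition behind Vulture can be illustrated with a much simpler predictor than the classifier actually used by Neuhaus et al. The Python sketch below is our own simplification: it scores a new component by the fraction of its k most similar known components (measured by the Jaccard similarity of their import sets) that had past vulnerabilities. The component names, import sets and the nearest-neighbour scoring are all hypothetical.

```python
from typing import Dict, FrozenSet, Tuple


def jaccard(a: FrozenSet[str], b: FrozenSet[str]) -> float:
    """Similarity of two import sets: |A & B| / |A | B|."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def vulnerability_score(imports: FrozenSet[str],
                        history: Dict[str, Tuple[FrozenSet[str], bool]],
                        k: int = 3) -> float:
    """Fraction of the k most similar known components that were vulnerable."""
    ranked = sorted(history.values(), key=lambda item: jaccard(imports, item[0]), reverse=True)
    nearest = ranked[:k]
    return sum(1 for _, vulnerable in nearest if vulnerable) / len(nearest)


# Hypothetical component history: name -> (imported headers, had past vulnerabilities?)
history = {
    "html_parser": (frozenset({"dom.h", "string.h", "js_engine.h"}), True),
    "script_loader": (frozenset({"js_engine.h", "script_context.h", "string.h"}), True),
    "prefs": (frozenset({"prefs.h", "string.h"}), False),
    "file_io": (frozenset({"io.h", "string.h"}), False),
}
print(vulnerability_score(frozenset({"js_engine.h", "dom.h", "string.h"}), history, k=2))  # 1.0
print(vulnerability_score(frozenset({"prefs.h", "io.h", "string.h"}), history, k=2))       # 0.0
```

A component that imports the same script-engine and DOM headers as previously vulnerable components receives a high score, even though nothing is yet known about its own vulnerabilities.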

2.2 Web Application Security Studies

Web applications have become a popular way to provide access to services and information. Millions of people critically depend on web applications in their daily lives. Over the years, web applications have evolved into complex software systems that exhibit critical vulnerabilities. Due to their popularity, their ease of access and the sensitivity of the information they process, web applications have become an attractive target for attackers, and these critical vulnerabilities are actively being exploited. Such attacks cause financial losses, damage to reputation, and increased technical maintenance and support costs. To improve the security of web applications, tools and techniques that reduce the number of vulnerabilities in web applications are necessary. To drive research on web application security, several studies have analyzed the security of web applications, frameworks and programming languages.

To measure the overall state of web application security, Walden et al. measured the vulnerability density of a selection of open source PHP web applications over the period 2006 to 2008 [25, 115]. For this period, the source code repositories of these applications were mined, and the code was analyzed with a static analysis tool to find vulnerabilities. While the vulnerability density of the aggregate source code decreased over the time period, the vulnerability density of eight out of fourteen applications increased. However, the vulnerability density remains above the average vulnerability density of a large number of desktop and server C/C++ applications, which suggests that web application security is less mature. An analysis of the distribution of vulnerability types shows that the share of SQL injection vulnerabilities decreased dramatically while the share of cross-site scripting vulnerabilities increased in this period. The observed decrease of SQL injection vulnerabilities in the applications' code bases is consistent with our study on the evolution of input validation vulnerabilities presented in Chapter 4. Compared to the study of Walden et al., our analysis is performed on a larger scale, as it uses NVD CVE data as its data source.
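A decreasing aggregate density and increasing per-application densities are not contradictory, because the aggregate is dominated by the largest code bases. The following Python sketch illustrates this with made-up numbers for three hypothetical applications, assuming that density is measured as vulnerabilities per thousand lines of code (KLOC); the figures are not taken from Walden et al.

```python
# Hypothetical (vulnerabilities, KLOC) per application; not data from the cited study.
snapshots = {
    2006: {"app_a": (100, 100), "app_b": (1, 10), "app_c": (1, 10)},
    2008: {"app_a": (80, 200), "app_b": (3, 10), "app_c": (2, 10)},
}


def density(vulns: int, kloc: float) -> float:
    """Vulnerability density: vulnerabilities per thousand lines of code."""
    return vulns / kloc


def aggregate_density(apps: dict) -> float:
    # Pool all code first, so large code bases dominate the result.
    total_vulns = sum(v for v, _ in apps.values())
    total_kloc = sum(k for _, k in apps.values())
    return density(total_vulns, total_kloc)


increased = [name for name in snapshots[2006]
             if density(*snapshots[2008][name]) > density(*snapshots[2006][name])]
print(round(aggregate_density(snapshots[2006]), 3), round(aggregate_density(snapshots[2008]), 3))
print(increased)  # ['app_b', 'app_c']
```

Here the aggregate density falls from 0.85 to about 0.386 because the large application doubled in size with fewer vulnerabilities, while two of the three applications became denser.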

Although design flaws and configuration issues also cause vulnerabilities, most web application vulnerabilities are the result of programming errors. To better understand which tools and techniques can improve the development of secure web applications, several studies have explored the relationship between the security of web applications and the programming languages, frameworks and tools used to build them.

Fonseca et al. studied how software faults relate to web application security [29, 107]. Their results show that only a small set of software fault types is responsible for most of the XSS and SQL injection vulnerabilities in web applications. Moreover, they empirically demonstrated that the most frequently occurring fault type is a missing call to a sanitization or input validation function. Our work on input validation mechanisms in web programming languages and frameworks, presented in Chapter 5 of this thesis, partially corroborates this finding, but also focuses on the potential of automatic input validation as a means of improving the effectiveness of existing input validation methods.
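The following Python sketch illustrates the "missing function call" fault type with a hypothetical rendering function. The faulty and the corrected version differ only in a single call to a sanitization function, here Python's standard `html.escape`; the function names are illustrative assumptions.

```python
import html


def render_comment_faulty(comment: str) -> str:
    # Fault: user input reaches the HTML output without any sanitization call.
    return "<p>" + comment + "</p>"


def render_comment_fixed(comment: str) -> str:
    # The only change is the added call to a sanitization function.
    return "<p>" + html.escape(comment) + "</p>"


payload = "<script>alert(1)</script>"
print(render_comment_faulty(payload))  # script tag survives
print(render_comment_fixed(payload))   # <p>&lt;script&gt;alert(1)&lt;/script&gt;</p>
```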

In [118], Weinberger et al. explored in detail how effective web application frameworks are in sanitizing user-supplied input to defend applications against XSS attacks. In their work, they compare the sanitization functionality provided by web application frameworks with the features that popular web applications require. In contrast to our work presented in Chapter 5, their focus is on output sanitization as a defense mechanism against XSS, while we investigate the potential of input validation as an additional layer of defense against both XSS and SQL injection.

Finifter et al. also studied the relationship between the choice of development tools and the security of the resulting web applications [28]. Their study focused in depth on nine applications written to an identical specification and implemented using several languages and frameworks, while our study is based on a large vulnerability data set and examines a broader selection of applications, languages and frameworks. In contrast to our study in Chapter 5, their study did not find a relationship between the choice of development tools and application security. However, their work shows that automatic, framework-provided mechanisms are preferable to manual mechanisms for mitigating vulnerabilities related to Cross-Site Request Forgery, broken session management and insecure password storage. Walden et al. studied a selection of Java and PHP web applications to determine whether the choice of language influences vulnerability density [114]. As in the study of Finifter et al., the result was not statistically significant.

2.3 Mitigating Web Application Vulnerabilities

In the past decade, security researchers have worked on a number of techniques to improve the security of web applications. Related work has generally focused on techniques that prevent the exploitation of vulnerabilities, techniques based on program analysis to detect vulnerabilities, and the secure construction of web applications. We discuss each of these classes in a separate section.

2.3.1 Attack Prevention

Due to legacy or operational constraints, it is not always possible to create, deploy and use secure web applications. Developers of web applications do not always have the necessary security skills, sometimes insecure legacy code has to be reused, and in other cases the source code is not available. For these reasons, various techniques have been proposed to detect the exploitation of web vulnerabilities with the goal of preventing attacks against web applications. In this section, we discuss web application firewalls and intrusion detection systems. These techniques have in common that they analyze the payload submitted to the web application.

Input Validation

To mitigate the impact of malicious input data on web applications, techniques have been proposed for validating input. Scott and Sharp [103] proposed an application-level firewall to prevent malicious input from reaching the web server. Their approach required a specification of constraints on different inputs and compiled those constraints into a policy validation program. Today, several open source and commercial offerings are available, including Barracuda Web Application Firewall [9], F5 Application Security Manager (ASM) [26] and ModSecurity [111]. In contrast to these approaches, our approach presented in Chapter 6 is integrated into the web application development environment and learns the input constraints automatically.
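The idea of compiling per-input constraints into a validation step can be sketched as follows. This Python example is our own illustration and does not reproduce the specification language of Scott and Sharp or the rule format of any product mentioned above: a hypothetical policy maps each parameter name to a regular expression that the whole value must match, and unknown parameters are rejected.

```python
import re

# Hypothetical per-parameter constraints, in the spirit of a declarative input policy.
POLICY = {
    "id": re.compile(r"[0-9]{1,9}"),
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "sort": re.compile(r"asc|desc"),
}


def validate(params: dict) -> list:
    """Return the names of parameters that violate the policy (empty list means accept)."""
    violations = []
    for name, value in params.items():
        pattern = POLICY.get(name)
        # Unknown parameters are rejected; known ones must match the whole pattern.
        if pattern is None or pattern.fullmatch(value) is None:
            violations.append(name)
    return violations


print(validate({"id": "42", "sort": "asc"}))               # []
print(validate({"id": "42 OR 1=1", "email": "<script>"}))  # ['id', 'email']
```

Using `fullmatch` rather than `search` matters here: a value such as `42 OR 1=1` contains a valid prefix but is rejected as a whole.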

Automating the generation of test vectors for exercising input validation mechanisms is also a topic explored in the literature. Sania [54] is a system intended for the development and debugging phases. It automatically generates SQL injection attacks based on the syntactic structure of queries found in the source code and tests a web application using the generated attacks. Saxena et al. proposed Kudzu [97], which combines symbolic execution with constraint solving techniques to generate test cases with the goal of finding client-side code injection vulnerabilities in JavaScript code. Halfond et al. [36] use symbolic execution to infer web application interfaces in order to improve the test coverage of web applications. Several papers propose techniques based on symbolic execution and string constraint solving to automatically generate cross-site scripting and SQL injection attacks, and to generate inputs for the systematic testing of applications implemented in C [16, 51, 50]. We consider these mechanisms to be complementary to the approach presented in Chapter 6, in that they could be used to automatically generate input to test our input validation solution.

Intrusion Detection

An intrusion detection system (IDS) is a system that identifies malicious behavior against networks and resources by monitoring network traffic. IDSs can be classified as either signature-based or anomaly-based:

• Signature-based detection. Signature-based intrusion detection systems monitor network traffic and compare it with patterns that are associated with known attacks. These patterns are also called signatures. Snort [92] is an open source intrusion detection system that is configured by default with a number of signatures that support the detection of web-based attacks. One of the main limitations of signature-based intrusion detection is that it is very difficult to keep the set of signatures up to date, as new signatures must be developed whenever new attacks or modifications of previously known attacks are discovered. Almgren et al. proposed in [3] a technique to automatically deduce new signatures by tracking hosts exhibiting malicious behavior, combining signatures and generalizing signatures. Noisy data resulting from software bugs and corrupted data can cause a significant number of false alarms, reducing the effectiveness of intrusion detection systems. Julisch [48] studied the root causes of alarms and found that a few dozen root causes trigger 90 % of the false alarms. In [112], Vigna et al. present WebSTAT, a stateful intrusion detection system that can detect more complex attacks, such as cookie stealing, and malicious behavior, such as web crawlers that ignore the robots.txt file.

• Anomaly-based detection. Anomaly-based intrusion detection systems first build a statistical model describing the normal behavior of the network traffic. The system then flags traffic that significantly deviates from the model as anomalous. Kruegel et al. [56, 57] proposed an anomaly-based system to detect web-based attacks, in which different statistical models are used to characterize the parameters of HTTP requests. Unfortunately, anomaly-based detection systems are prone to producing a large number of false positives and/or false negatives. Because web attacks are relatively rare events, even a small false positive rate can produce more false alarms than true detections, which makes false positives a significant problem. In addition, anomaly-based intrusion detection systems are often not able to identify the type of web-based attack they have detected. To improve existing anomaly-based detection systems, Robertson et al. [91] proposed a technique that generalizes anomalies into a signature such that similar anomalies can be classified as false positives and dismissed. Heuristics are used to infer the type of attack that caused the anomaly.
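The two detection strategies can be contrasted in a single Python sketch. The first part matches decoded request lines against hypothetical regular-expression signatures; these are not Snort rules, which use their own rule language. The second part is our reading of one of the models of Kruegel et al., a model of parameter-value length: training estimates the mean μ and variance σ² of observed lengths, and detection bounds how likely a value of length l is with the Chebyshev inequality P(|X - μ| ≥ |l - μ|) ≤ σ²/(l - μ)². The training data is made up, and the alarm threshold that a deployed system would need is not shown.

```python
import re
from statistics import mean, pvariance
from urllib.parse import unquote_plus

# Signature-based detection: hypothetical signatures, not real Snort rule syntax.
SIGNATURES = {
    "sql-injection-tautology": re.compile(r"'\s*or\s+'?\d+'?\s*=\s*'?\d+", re.IGNORECASE),
    "xss-script-tag": re.compile(r"<\s*script\b", re.IGNORECASE),
    "path-traversal": re.compile(r"\.\./"),
}


def match_signatures(request_line: str) -> list:
    """Return the names of all signatures that match the URL-decoded request."""
    decoded = unquote_plus(request_line)   # payloads are often URL-encoded
    return [name for name, sig in SIGNATURES.items() if sig.search(decoded)]


# Anomaly-based detection: length model for a single HTTP parameter.
class AttributeLengthModel:
    def __init__(self, training_values):
        lengths = [len(v) for v in training_values]
        self.mu = mean(lengths)
        self.var = pvariance(lengths, self.mu)

    def probability(self, value: str) -> float:
        """Chebyshev bound on P(|X - mu| >= |l - mu|); small values indicate anomalies."""
        distance = abs(len(value) - self.mu)
        if distance == 0:
            return 1.0
        return min(1.0, self.var / distance ** 2)


print(match_signatures("GET /item.php?id=1'%20OR%20'1'='1 HTTP/1.1"))
print(match_signatures("GET /item.php?id=1'%20OR%202>1 HTTP/1.1"))  # evades the signature
model = AttributeLengthModel(["alice", "bob", "charlie", "dave", "eve", "mallory"])
print(model.probability("frank"))
print(model.probability("x' UNION SELECT password FROM users WHERE '1'='1"))
```

The second request shows the maintenance problem of signatures: a trivially modified tautology is not matched. The length model flags the long injected value without knowing any attack pattern, but by the same token it cannot tell what kind of attack it has seen.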

While intrusion detection systems focus on detecting attacks against web applications that have already been deployed, our focus in this thesis is on improving the secure development of web applications. To that end, in Chapter 6 we propose a system that prevents the exploitation of input validation vulnerabilities in web applications as part of the application development environment.

Similar to our approach, an intrusion detection and prevention system prevents attacks. To stop an attack, such a system may modify the payload, terminate the connection or reconfigure the network topology (e.g. by reconfiguring a firewall). When used to protect web applications, an intrusion detection and prevention system can be considered a special form of web application firewall.

Client-Side XSS Prevention

Client-side or browser-based mechanisms such as Noncespaces [34], Noxes [52], BEEP [44], DSI [74] and XSS auditor [10] rely on the browser infrastructure to prevent the execution of injected scripts. Noncespaces [34] prevents XSS attacks by adding randomized prefixes to trusted HTML content. A client-side policy checker parses the response of the server and checks for injected content that does not carry the correct prefix. Unfortunately, this policy checker has a significant impact on the performance of rendering web pages.

BEEP [44] is a policy-based system that prevents XSS attacks on the client side by whitelisting legitimate scripts and disabling scripts in certain regions of the web page. The browser implements a security hook that enforces the policy, which has to be embedded in each web page. Although the system can prevent script injection attacks, it cannot prevent other forms of unsafe data usage (e.g. injecting malicious iframes). BEEP also requires changes to the source code of a web application, a process that can be complicated and error-prone. The goal of DSI [74] is to preserve the integrity of the document structure. On the server side, dynamic content is separated from static content, and these two components are assembled on the client side, thereby preserving the document structure intended by the web developer.

Kirda et al. proposed Noxes [52], a client-side firewall that prevents sensitive data from leaking to attackers' servers by disallowing the browser from contacting URLs that do not belong to the web application's domain. Several browser-based XSS filters have been proposed to detect injected scripts, including the XSS filter of Microsoft Internet Explorer 8 [93], noXSS [86] and NoScript [61]. These approaches aim to block reflected XSS attacks by searching for content that is present both in HTTP requests and in HTTP responses. They suffer either from a large number of false positives or from poor performance. XSS auditor [10] attempts to overcome these issues by detecting XSS attacks after HTML parsing but before script execution.
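The request/response comparison performed by such filters can be sketched in a few lines. The Python example below is our own simplification and not the algorithm of any of the cited filters: it extracts script blocks from a response with a regular expression and reports those that also occur verbatim in a decoded request parameter. Exact substring matching is an assumption made for brevity; if the server transforms the input even slightly, such a filter misses the reflection.

```python
import re
from urllib.parse import parse_qsl, urlsplit

SCRIPT_RE = re.compile(r"<script\b[^>]*>.*?</script>", re.IGNORECASE | re.DOTALL)


def reflected_scripts(request_url: str, response_html: str) -> list:
    """Return script blocks in the response that also occur verbatim in a request parameter."""
    values = [value for _, value in parse_qsl(urlsplit(request_url).query)]
    return [script for script in SCRIPT_RE.findall(response_html)
            if any(script in value for value in values)]


url = "http://example.com/search?q=%3Cscript%3Ealert(1)%3C%2Fscript%3E"
page = "<html><script src='/app.js'></script><p>Results for <script>alert(1)</script></p></html>"
print(reflected_scripts(url, page))  # ['<script>alert(1)</script>']
```

The legitimate `/app.js` script is left alone because it does not appear in the request, which is what lets this class of filters target reflected XSS specifically.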

Each of the aforementioned approaches requires end-users to upgrade their browsers or install additional software; unfortunately, many users do not regularly upgrade their systems [113].

Server-Side XSS or SQL Injection Prevention

Research effort has also been spent on server-side mechanisms for detecting and preventing XSS and SQL injection attacks. Many techniques focus on preventing injection attacks through runtime monitoring. For example, Wassermann and Su [110] propose a system that checks the syntactic structure of SQL queries at runtime for tautologies. AMNESIA [37] checks the syntactic structure of SQL queries at runtime against a model that is obtained through static analysis. Boyd et al. proposed SQLrand [14], a system that prevents SQL injection attacks by applying the concept of instruction-set randomization to SQL. The system appends a random integer to each keyword in a SQL statement; a proxy server intercepts the randomized query and de-randomizes it before submitting it to the database management system. XSSDS [46] is a system that aims to detect cross-site scripting attacks by comparing HTTP requests and responses. While these systems focus on preventing injection attacks by checking the integrity of queries or documents, this thesis focuses on the secure development of web applications using input validation. Recent work has focused on automatically discovering parameter injection [7] and parameter tampering vulnerabilities [83].
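The randomization step of SQLrand can be illustrated with the following simplified Python sketch. It is our own approximation, not the authors' implementation. Keywords in trusted query templates receive a secret numeric suffix, and a proxy-side function strips the suffix and rejects any keyword that lacks it, because such a keyword can only have been injected through user input. The keyword list, the key format and the function names are assumptions. Because the sketch operates on words rather than on a real SQL parse, it would also reject a legitimate keyword inside a string literal (e.g. a user named 'Tom or Jerry').

```python
import re
import secrets

KEYWORDS = {"SELECT", "FROM", "WHERE", "AND", "OR", "UNION", "INSERT", "UPDATE", "DELETE", "DROP"}
KEY = str(secrets.randbelow(10**6) + 10**6)  # hypothetical per-deployment secret suffix


def randomize(template: str) -> str:
    """Developer side: append the secret key to every keyword of a trusted query template."""
    return re.sub(r"[A-Za-z_]+",
                  lambda m: m.group(0) + KEY if m.group(0).upper() in KEYWORDS else m.group(0),
                  template)


def derandomize(query: str) -> str:
    """Proxy side: strip the key; a keyword without the key must come from user input."""
    def check(m):
        word = m.group(0)
        if word.endswith(KEY) and word[:-len(KEY)].upper() in KEYWORDS:
            return word[:-len(KEY)]
        if word.upper() in KEYWORDS:
            raise ValueError("injected SQL keyword: " + word)
        return word
    return re.sub(r"[A-Za-z_0-9]+", check, query)


template = "SELECT * FROM users WHERE name = '{}'"
print(derandomize(randomize(template).format("alice")))
try:
    derandomize(randomize(template).format("x' OR '1'='1"))
except ValueError as err:
    print("rejected:", err)  # rejected: injected SQL keyword: OR
```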

Ter Louw et al. proposed BLUEPRINT [59], a system that enables the safe construction of parse trees in a browser-agnostic way. BLUEPRINT requires changes to the source code of a web application, a process that can be complicated and error-prone. In contrast, the IPAAS approach presented in Chapter 6 does not require any modifications to the application’s source code. Furthermore, it is platform- and language-agnostic.

2.3.2 Program Analysis

Program analysis refers to the process of automatically analyzing the behavior of a computer program. Computer security researchers use program analysis tools for a number of security-related activities, including vulnerability discovery and malware analysis. Depending on whether the program is executed during the analysis, program analysis is classified as static analysis or dynamic analysis. In this section, we discuss related work that has applied program analysis techniques to find input validation vulnerabilities in web applications.

Static Analysis

Analyzing software without executing it is called static analysis. Static analysis tools perform an automated analysis on an abstraction or a model of the program under consideration, which is extracted from the source code or from the binary representation of the program. It has been proven that finding all possible runtime errors in an application is an undecidable problem; i.e. there is no mechanical method that can correctly decide, for every application, whether it exhibits runtime errors. However, it is still useful to compute approximate answers.

Static analysis as a means of finding security-critical bugs in software has received a great deal of attention. WebSSARI [41] was one of the first efforts to apply classical information flow techniques to web application security vulnerabilities, where the goal of the analysis is to check whether a sanitization routine is applied before data reaches a sensitive sink. Several static analysis approaches have been proposed for various languages, including Java [58] and PHP [47]. Statically analyzing web applications has a number of limitations. Many web applications rely on values that cannot be statically determined (e.g. the current execution path, the current system date, user input), which hinders the application of static analysis techniques. In addition, web applications are often implemented in dynamic, weakly-typed languages (e.g. PHP). This class of languages makes it difficult for static analysis tools to infer the possible values of variables. In practice, these limitations result in imprecision [121].
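The source/sanitizer/sink check described above can be illustrated with a toy taint analysis. The Python sketch below is our own illustration, not WebSSARI or any cited tool. It analyzes a hypothetical straight-line program given as tuples, with the PHP statements they stand for shown in comments, and reports sinks reached by tainted variables. Real analyzers must additionally handle control flow, aliasing, dynamic features and the semantics of the sanitizers themselves.

```python
# Each statement of a hypothetical straight-line program is a tuple:
#   ("input", var)           var receives user input (a taint source)
#   ("assign", var, [srcs])  var is computed from the listed variables
#   ("sanitize", var, src)   var is a sanitized copy of src
#   ("sink", src)            src reaches a sensitive sink (e.g. echo or a query)
def find_unsanitized_sinks(program):
    tainted = set()
    findings = []
    for lineno, stmt in enumerate(program, start=1):
        kind = stmt[0]
        if kind == "input":
            tainted.add(stmt[1])
        elif kind == "assign":
            var, sources = stmt[1], stmt[2]
            # Taint propagates if any operand is tainted; otherwise var becomes clean.
            if any(s in tainted for s in sources):
                tainted.add(var)
            else:
                tainted.discard(var)
        elif kind == "sanitize":
            tainted.discard(stmt[1])
        elif kind == "sink" and stmt[1] in tainted:
            findings.append((lineno, stmt[1]))
    return findings


program = [
    ("input", "name"),                 # $name = $_GET['name'];
    ("assign", "greeting", ["name"]),  # $greeting = "Hello " . $name;
    ("sink", "greeting"),              # echo $greeting;              -> flagged
    ("sanitize", "safe", "name"),      # $safe = htmlspecialchars($name);
    ("sink", "safe"),                  # echo $safe;                  -> accepted
]
print(find_unsanitized_sinks(program))  # [(3, 'greeting')]
```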

The IPAAS approach presented in Chapter 6 incorporates a static analysis component as well as a dynamic component to learn parameter types. Although our prototype static analyzer is simple and imprecise, our evaluation results are nevertheless encouraging.

Dynamic Analysis

In contrast to static analysis, dynamic analysis is performed by executing the program under analysis on a real or virtual processor. Dynamic analysis can only verify properties over the paths that have been explored. Therefore, the target program must be executed with a sufficient number of test inputs to achieve adequate path coverage of the program under analysis. The use of techniques from software testing such as code coverage can assist in ensuring that an adequate set of behaviors has been observed.

Approaches based on dynamic analysis to automatically harden web applications have been proposed for PHP [82] and Java [35]. Both approaches hardcode the assertions to be checked, thereby limiting the types of vulnerabilities that can be detected. In contrast, RESIN [123] is a system that allows application developers to annotate data objects with policies describing the assertions to be checked by the runtime environment. This approach allows the prevention of directory traversal, cross-site scripting, SQL injection and server-side script injection. Similar to RESIN, GuardRails [15] also requires the developer to specify policies. However, the policies are assigned to classes instead of objects. Hence, developers do not have to manually assign a policy to every instance of a class, as is the case with RESIN. Although these approaches can work at a finer-grained level than static analysis tools, they incur runtime overhead. All these approaches aim to detect missing sanitization functionality, while the focus of this thesis is the validation of untrusted user input.
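The idea of attaching policies to data objects and checking them at runtime can be sketched in Python. The example below is loosely inspired by RESIN but is our own construction, not its API. A hypothetical `PolicyStr` string subclass carries a set of policy labels that survive concatenation, a sanitizer removes the `untrusted-html` label, and an HTML output function refuses data that still carries it. Only concatenation propagates labels in this sketch; other string operations would return plain strings and silently drop them.

```python
import html


class PolicyStr(str):
    """A string carrying a set of policy labels (hypothetical, RESIN-inspired)."""

    def __new__(cls, value, policies=frozenset()):
        obj = super().__new__(cls, value)
        obj.policies = frozenset(policies)
        return obj

    def __add__(self, other):
        # Concatenation propagates the union of both operands' policies.
        extra = getattr(other, "policies", frozenset())
        return PolicyStr(str(self) + str(other), self.policies | extra)

    def __radd__(self, other):
        extra = getattr(other, "policies", frozenset())
        return PolicyStr(str(other) + str(self), self.policies | extra)


def sanitize_html(data: PolicyStr) -> PolicyStr:
    """Escape the content and drop the 'untrusted-html' label."""
    return PolicyStr(html.escape(str(data)), data.policies - {"untrusted-html"})


def html_sink(data) -> str:
    """Runtime check at the output boundary, before data leaves the application."""
    if "untrusted-html" in getattr(data, "policies", frozenset()):
        raise PermissionError("untrusted data reached the HTML sink unsanitized")
    return str(data)


user_input = PolicyStr("<b>hi</b>", {"untrusted-html"})
print(html_sink("<p>" + sanitize_html(user_input) + "</p>"))  # <p>&lt;b&gt;hi&lt;/b&gt;</p>
try:
    html_sink("<p>" + user_input + "</p>")
except PermissionError as err:
    print("blocked:", err)
```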

Sanitization Correctness

While much research effort has been spent on applying taint-tracking techniques [47, 58, 77, 82, 117, 121] to ensure that untrusted data is sanitized before it is output, less effort has been spent on the correctness of input validation and sanitization. Because taint-tracking techniques do not model the semantics of input validation and sanitization routines, they lack precision. Wassermann proposed a technique based on static string-taint analysis that determines the set of strings an application may generate for a given variable, in order to detect SQL injection vulnerabilities [116] and cross-site scripting vulnerabilities [117] in PHP applications.

Balzarotti et al. [8] used a combination of static and dynamic analysis techniques to analyze sanitization routines in real web applications implemented in PHP. The results show that developers do not always implement correct sanitization routines. The BEK project [40] does not focus on taint-tracking, but proposes a language to model, and a system to check, the correctness of sanitization functions.
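The kind of flaw that Balzarotti et al. report can be illustrated with a hypothetical blacklist sanitizer. A taint tracker that marks `strip_script_tags` as a sanitizer would treat its output as safe, even though a nested payload survives it. The encoding-based alternative relies on Python's standard `html.escape`; both function names are illustrative.

```python
import html


def strip_script_tags(value):
    """Flawed blacklist sanitizer (hypothetical): deletes script tags in a single pass."""
    return value.replace("<script>", "").replace("</script>", "")


def escape_html(value):
    """Encoding-based alternative: neutralize every HTML metacharacter."""
    return html.escape(value, quote=True)


payload = "<scr<script>ipt>alert(1)</scr</script>ipt>"
naive = strip_script_tags(payload)  # -> "<script>alert(1)</script>": deleting the inner tags reassembles the outer ones
escaped = escape_html(payload)      # contains no raw "<" or ">"
```

A checker that runs such attack strings through a sanitizer and inspects the result, rather than trusting the sanitizer's label, catches this bug immediately.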

Recent work has also focused on the correct use of sanitization routines to prevent cross-site scripting attacks. Scriptgard [98] can automatically detect and repair mismatches between sanitization routines and the context in which their output is used. In addition, it ensures that sanitization routines are applied in the correct order.
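A context mismatch of the kind Scriptgard targets can be shown with a hypothetical pair of sanitizers. HTML entity encoding is the right routine for text between tags, but it leaves a `javascript:` URL untouched, so it is the wrong routine for an `href` attribute. The scheme allow-list below is an illustrative assumption, not Scriptgard's repair strategy.

```python
import html
from urllib.parse import urlsplit


def sanitize_html_text(value):
    """Correct for text between tags, insufficient for URL attributes."""
    return html.escape(value, quote=True)


def sanitize_url_attribute(value):
    """Sketch of a URL-context sanitizer: allow only relative or http(s) URLs, then HTML-escape."""
    scheme = urlsplit(value.strip()).scheme.lower()
    if scheme not in ("", "http", "https"):
        return "#"  # replace the dangerous URL with a harmless placeholder
    return html.escape(value, quote=True)


user_url = "javascript:alert(document.cookie)"
mismatched = '<a href="%s">profile</a>' % sanitize_html_text(user_url)   # still executes script on click
matched = '<a href="%s">profile</a>' % sanitize_url_attribute(user_url)  # href neutralized
```

Both routines are "sanitizers", so a taint tracker would accept either; only knowledge of the output context distinguishes the safe choice.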

2.3.3 Black-Box Testing

Black-box web vulnerability scanners are automated tools used by computer security professionals to probe web applications for security vulnerabilities without requiring access to the source code. These tools mimic real attackers by generating specially crafted input values, submitting them to the reachable input vectors of the web application, and observing the behavior of the application to determine whether a vulnerability has been exploited. Web vulnerability scanners have become popular because they are agnostic to web application technologies, easy to use, and highly automated. Examples of web vulnerability scanners include Acunetix WVS [1], HP WebInspect [38], IBM Rational AppScan [42], Burp [60] and w3af [89]. Unfortunately, these tools also have limitations. In particular, they do not provide any guarantee of soundness and, in fact, several studies have shown that web vulnerability scanners miss vulnerabilities [4, 81, 119].
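The inject-and-observe loop described above can be sketched as follows. The callable `send` is a hypothetical stand-in for the HTTP layer of a real scanner, which would also have to crawl the application to discover the parameters in the first place. Detection here is a simple substring check for an unencoded reflection of a unique marker.

```python
import html
import secrets


def probe_reflected_xss(send, params):
    """Inject a unique marker tag into each parameter; report parameters reflected unencoded.

    `send` takes a dict of parameters and returns the response body (hypothetical HTTP stand-in).
    """
    vulnerable = []
    for name in params:
        payload = "<xss%s>" % secrets.token_hex(4)  # unique, so old page content cannot match by accident
        mutated = dict(params)
        mutated[name] = payload
        if payload in send(mutated):
            vulnerable.append(name)
    return vulnerable


def vulnerable_search(params):  # hypothetical application under test
    return "<h1>Results for %s</h1>" % params.get("q", "")


def safe_search(params):  # same page, with output encoding
    return "<h1>Results for %s</h1>" % html.escape(params.get("q", ""))
```

For example, `probe_reflected_xss(vulnerable_search, {"q": "test", "page": "1"})` flags only `q`. The same loop also shows why such tools are unsound: a payload that is stored and shown elsewhere, or that needs a different syntax to break out of its context, is simply not observed.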

Independently of each other, Bau et al. [11] and Doupe et al. [24] studied the root causes behind the errors that web vulnerability scanners make. Both found that web vulnerability scanners have difficulties in finding more complex vulnerabilities such as stored XSS and second-order SQL injection, where the malicious input is stored by one request and only takes effect in a later, different request. To discover these more complex vulnerabilities, web vulnerability scanners should implement improved web crawling functionality and improved reverse engineering capabilities to keep better track of the state of the application.
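The state-tracking requirement can be made concrete with a toy example. A scanner that inspects only the response to its own request never sees a stored payload; one that revisits pages it has already crawled does. `GuestbookApp` and `find_stored_xss` are hypothetical and deliberately minimal.

```python
import secrets


class GuestbookApp:
    """Hypothetical stateful application: /sign stores a comment, /book shows all comments unescaped."""

    def __init__(self):
        self.comments = []

    def request(self, path, params=None):
        if path == "/sign":
            self.comments.append(params["comment"])
            return "<p>Thank you!</p>"
        if path == "/book":
            return "<ul>%s</ul>" % "".join("<li>%s</li>" % c for c in self.comments)
        return "<p>Not found</p>"


def find_stored_xss(app, form_path, field, known_pages):
    """Submit a marker, then revisit previously crawled pages to see where it surfaces."""
    marker = "<xss%s>" % secrets.token_hex(4)
    if marker in app.request(form_path, {field: marker}):
        return form_path  # reflected in the immediate response
    for page in known_pages:  # a stored payload only appears in a later, different response
        if marker in app.request(page):
            return page
    return None
```

With an empty `known_pages` list the scanner reports nothing; given the crawled pages `["/", "/book"]` it locates the payload on `/book`, which is why better crawling and state tracking are the improvements proposed above.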

2.3.4 Security by Construction

Until now, the discussion of related techniques for securing web applications has concentrated on techniques to prevent web attacks and on the analysis of web applications to find vulnerabilities. These techniques can be used to protect web applications when developers use insecure programming languages to develop a web application. With insecure programming languages, vulnerabilities occur in source code because the default behavior of these languages is unsafe: the developer has to manually apply an input validation or output sanitization routine to data before it can be used to construct a web document or SQL query. Security by construction refers to a set of techniques that aim to automatically eliminate issues such as cross-site scripting and SQL injection by providing safe behavior as the default. The use of these techniques can result in more secure web applications without much effort from the developer. In this section, we give an overview of these techniques.
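Parameterized SQL queries are a familiar, if narrower, instance of the same idea. The placeholder API makes the safe behavior the default, because user input is passed as data and can never alter the structure of the query. The sketch below uses Python's standard `sqlite3` module; the table, the function names and the attack string are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 0), ("root", 1)])


def find_user_unsafe(name):
    # Unsafe default: the input is spliced into the query text and can change its structure.
    return conn.execute("SELECT name FROM users WHERE name = '%s'" % name).fetchall()


def find_user_safe(name):
    # Safe default: the "?" placeholder binds the input as a value; it is never parsed as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


attack = "nobody' OR '1'='1"
```

`find_user_unsafe(attack)` returns every row because the injected `OR '1'='1'` becomes part of the query, while `find_user_safe(attack)` returns nothing. The developer did not have to remember any sanitization routine to get the safe result.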

Several works have leveraged the language’s type system to provide automated protection against cross-site scripting and SQL injection vulnerabilities. In the approach of Robertson et al. [90], cross-site scripting attacks are prevented by generating HTTP responses from statically-typed data structures that represent web documents. During document rendering, context-aware sanitization routines are automatically applied to untrusted values. The approach requires that the web application constructs HTML content using special algebraic data types. This programmatic way of writing client-side code hinders developer acceptance. In addition, the approach is not easily extensible to client-side languages other than HTML, e.g. JavaScript and Flash. In contrast, Johns et al. [45] proposed a special data type which integrates embedded languages such as SQL, HTML and XML in the implementation language of the web application. The application developer can continue to write traditional SQL or HTML/JavaScript code using the special data type. A source-to-source code translator translates the data assigned to the special data type to enforce a strict separation between data and code, with the goal of mitigating cross-site scripting and SQL injection vulnerabilities. While these approaches focus on automated output sanitization, our focus is on automated input validation. In contrast to output sanitization, input validation does not prevent all cross-site scripting and SQL injection vulnerabilities. However, the IPAAS approach presented in Chapter 6 can secure legacy applications written using insecure languages, and it has been shown to be remarkably effective in preventing cross-site scripting and SQL injection vulnerabilities in real web applications.
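The idea behind the approach of Robertson et al., building responses from typed values so that escaping happens during rendering rather than by hand, can be sketched in Python. The `Text` and `Element` types and the `render` function are hypothetical; the original work relies on a statically typed language, and this sketch treats tag and attribute names as trusted developer input while escaping only text and attribute values.

```python
import html
from dataclasses import dataclass, field
from typing import Dict, List, Union


@dataclass
class Text:
    value: str  # untrusted content; escaped automatically by render()


@dataclass
class Element:
    tag: str  # trusted: chosen by the developer, never by the user
    attrs: Dict[str, str] = field(default_factory=dict)
    children: List[Union["Element", Text]] = field(default_factory=list)


def render(node):
    """Serialize the document tree, applying the escaping each position requires."""
    if isinstance(node, Text):
        return html.escape(node.value, quote=False)
    attrs = "".join(' %s="%s"' % (name, html.escape(value, quote=True))
                    for name, value in node.attrs.items())
    inner = "".join(render(child) for child in node.children)
    return "<%s%s>%s</%s>" % (node.tag, attrs, inner, node.tag)


doc = Element("p", {"title": 'say "hi"'}, [Text("<script>alert(1)</script>")])
```

Because untrusted data can only enter the document as `Text` or an attribute value, there is no code path on which the developer can forget to escape it; the cost, as noted above, is that pages must be written as data structures instead of HTML.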

Recent work has focused on context-sensitive output sanitization as a countermeasure against cross-site scripting vulnerabilities. To accurately defend against cross-site scripting vulnerabilities, sanitizers need to be placed in the right context and in the correct order. Scriptgard [98] employs dynamic analysis to automatically detect and repair sanitization errors in legacy .NET applications at runtime. Since the analysis is performed per path, the approach relies on dynamic testing to achieve coverage. Samuel et al. [96] propose a type-qualifier based mechanism that can be used with existing templating languages to achieve context-sensitive auto-sanitization. Both approaches only focus on preventing cross-site scripting vulnerabilities. As we focus on automatically identifying parameter data types for input validation, our approach presented in Chapter 6 can help prevent other classes of vulnerabilities such as SQL injection or, in principle, HTTP Parameter Pollution [7].
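To illustrate what identifying parameter data types for input validation involves, here is a simplified sketch that learns the most restrictive type matching a set of benign sample values and then rejects values that do not fit it. The type names and regular expressions are hypothetical and far coarser than a real type system; this is not the implementation presented in Chapter 6.

```python
import re

# Hypothetical types, ordered from most to least restrictive.
TYPES = [
    ("boolean", re.compile(r"true|false|0|1", re.IGNORECASE)),
    ("integer", re.compile(r"[+-]?\d+")),
    ("word", re.compile(r"\w+")),
    ("free_text", re.compile(r".*", re.DOTALL)),
]


def infer_type(samples):
    """Return the most restrictive type whose pattern fully matches every benign sample."""
    for name, pattern in TYPES:
        if all(pattern.fullmatch(sample) for sample in samples):
            return name
    return "free_text"


def validate(value, type_name):
    """Accept a value only if it fully matches the type learned for the parameter."""
    return dict(TYPES)[type_name].fullmatch(value) is not None


id_type = infer_type(["12", "7", "1024"])  # learned from benign traffic for an "id" parameter
```

Once `id` is known to be an integer, `validate("1 OR 1=1", id_type)` rejects the injection attempt regardless of where the value is later used. A parameter learned as `free_text`, however, gains no protection, which is why input validation alone cannot prevent every injection vulnerability.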

Figure 1.1: Number of web vulnerabilities over time, data obtained from NVD CVE [78].