Feature selection and term weighting beyond word frequency for calls for tenders documents

Full text

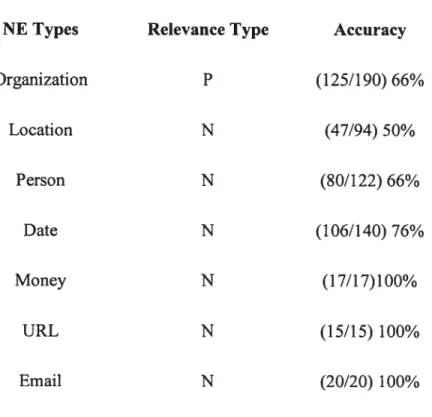


Related documents

In a conformal predictor, we can also set a significance level ϵ to find the prediction region, which contains all labels y ∈ Y whose corresponding p-value is larger than ϵ,

Also, as methods for automating this process have been developed, the feature set size increases dramatically (leading to what is commonly referred to as

Vapnik, Gene selection for cancer classification using support vector machines, Machine Learning, 46 (2002), pp. Lemaréchal, Convex Analysis and Minimization Algorithms

We start with the single model aggregation strategies and evaluate them, over a panel of datasets and feature selection methods, both in terms of the stability of the aggre-

The Poisson likelihood (PL) and the weighted Poisson likelihood (WPL) are combined with two feature selection procedures: the adaptive lasso (AL) and the adaptive linearized

We report the performance of (i) a FastText model trained on the training subset of the data set of 1,000 headlines, (ii) an EC classification pipeline based on Word2Vec and

The features (byte image or n-gram frequency) were used as inputs to the classifier and the output was a set of float values assigned to the nine class nodes. The node with the

According to the AP task literature, combinations of character n-grams with word n-gram features have proved to be highly discriminative for both gender and language